Spark、Shark集群安装部署及遇到的问题解决

1.部署环境

- OS:Red Hat Enterprise Linux Server release 6.4 (Santiago)

- Hadoop:Hadoop 2.4.1

- Hive:0.11.0

- JDK:1.7.0_60

- Python:2.6.6(spark集群需要python2.6以上,否则无法在spark集群上运行py)

- Spark:0.9.1(最新版是1.1.0)

- Shark:0.9.1(目前最新的版本,但是只能够兼容到spark-0.9.1,见shark 0.9.1 release)

- Zookeeper:2.3.5(配置HA时使用,Spark HA配置参见我的博文:Spark:Master High Availability(HA)高可用配置的2种实现)

- Scala:2.11.2

2.Spark集群规划

- 账户:ebupt

- master:eb174

- slaves:eb174、eb175、eb176

3.建立ssh

cd ~

#生成公钥和私钥

ssh-keygen -q -t rsa -N "" -f /home/ebupt/.ssh/id_rsa

cd .ssh

cat id_rsa.pub > authorized_keys

chmod go-wx authorized_keys

#把文件authorized_keys复制到所有子节点的/home/ebupt/.ssh目录下

scp ~/.ssh/authorized_keys ebupt@eb175:~/.ssh/

scp ~/.ssh/authorized_keys ebupt@eb176:~/.ssh/

另一个简单的方法:

由于实验室集群eb170可以ssh到所有的机器,因此直接拷贝eb170的~/.ssh/所有文件到eb174的~/.ssh/中。这样做的好处是不破坏原有的eb170的ssh免登陆。

[ebupt@eb174 ~]$rm ~/.ssh/*

[ebupt@eb170 ~]$scp -r ~/.ssh/ ebupt@eb174:~/.ssh/

4.部署scala,完全拷贝到所有节点

tar zxvf scala-2.11.2.tgz

ln -s /home/ebupt/eb/scala-2.11.2 ~/scala

vi ~/.bash_profile

#添加环境变量

export SCALA_HOME=$HOME/scala

export PATH=$PATH:$SCALA_HOME/bin

通过scala –version便可以查看到当前的scala版本,说明scala安装成功。

[ebupt@eb174 ~]$ scala -version

Scala code runner version 2.11.2 -- Copyright 2002-2013, LAMP/EPFL

5.安装spark,完全拷贝到所有节点

解压建立软连接,配置环境变量,略。

[ebupt@eb174 ~]$ vi spark/conf/slaves

#add the slaves

eb174

eb175

eb176

[ebupt@eb174 ~]$ vi spark/conf/spark-env.sh

export SCALA_HOME=/home/ebupt/scala

export JAVA_HOME=/home/ebupt/eb/jdk1..0_60

export SPARK_MASTER_IP=eb174

export SPARK_WORKER_MEMORY=4000m

6.安装shark,完全拷贝到所有节点

解压建立软连接,配置环境变量,略。

[ebupt@eb174 ~]$ vi shark/conf/shark-env.sh

export SPARK_MEM=1g # (Required) Set the master program's memory

export SHARK_MASTER_MEM=1g # (Optional) Specify the location of Hive's configuration directory. By default,

# Shark run scripts will point it to $SHARK_HOME/conf

export HIVE_HOME=/home/ebupt/hive

export HIVE_CONF_DIR="$HIVE_HOME/conf" # For running Shark in distributed mode, set the following:

export HADOOP_HOME=/home/ebupt/hadoop

export SPARK_HOME=/home/ebupt/spark

export MASTER=spark://eb174:7077

# Only required if using Mesos:

#export MESOS_NATIVE_LIBRARY=/usr/local/lib/libmesos.so

source $SPARK_HOME/conf/spark-env.sh #LZO compression native lib

export LD_LIBRARY_PATH=/home/ebupt/hadoop/share/hadoop/common # (Optional) Extra classpath export SPARK_LIBRARY_PATH=/home/ebupt/hadoop/lib/native # Java options

# On EC2, change the local.dir to /mnt/tmp

SPARK_JAVA_OPTS=" -Dspark.local.dir=/tmp "

SPARK_JAVA_OPTS+="-Dspark.kryoserializer.buffer.mb=10 "

SPARK_JAVA_OPTS+="-verbose:gc -XX:-PrintGCDetails -XX:+PrintGCTimeStamps "

SPARK_JAVA_OPTS+="-XX:MaxPermSize=256m "

SPARK_JAVA_OPTS+="-Dspark.cores.max=12 "

export SPARK_JAVA_OPTS # (Optional) Tachyon Related Configuration

#export TACHYON_MASTER="" # e.g. "localhost:19998"

#export TACHYON_WAREHOUSE_PATH=/sharktables # Could be any valid path name

export SCALA_HOME=/home/ebupt/scala

export JAVA_HOME=/home/ebupt/eb/jdk1..0_60

7.同步到slaves的脚本

7.1 master(eb174)的~/.bash_profile

# .bash_profile

# Get the aliases and functions

if [ -f ~/.bashrc ]; then

. ~/.bashrc

fi

# User specific environment and startup programs

PATH=$PATH:$HOME/bin

export PATH export JAVA_HOME=/home/ebupt/eb/jdk1..0_60

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar export HADOOP_HOME=$HOME/hadoop

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin export ZOOKEEPER_HOME=$HOME/zookeeper

export PATH=$ZOOKEEPER_HOME/bin:$PATH export HIVE_HOME=$HOME/hive

export PATH=$HIVE_HOME/bin:$PATH export HBASE_HOME=$HOME/hbase

export PATH=$PATH:$HBASE_HOME/bin export MAVEN_HOME=$HOME/eb/apache-maven-3.0.

export PATH=$PATH:$MAVEN_HOME/bin export STORM_HOME=$HOME/storm

export PATH=$PATH:$STORM_HOME/storm-yarn-master/bin:$STORM_HOME/storm-0.9.-wip21/bin export SCALA_HOME=$HOME/scala

export PATH=$PATH:$SCALA_HOME/bin export SPARK_HOME=$HOME/spark

export PATH=$PATH:$SPARK_HOME/bin export SHARK_HOME=$HOME/shark

export PATH=$PATH:$SHARK_HOME/bin

7.2 同步脚本:syncInstall.sh

scp -r /home/ebupt/eb/scala-2.11. ebupt@eb175:/home/ebupt/eb/

scp -r /home/ebupt/eb/scala-2.11. ebupt@eb176:/home/ebupt/eb/

scp -r /home/ebupt/eb/spark-1.0.-bin-hadoop2 ebupt@eb175:/home/ebupt/eb/

scp -r /home/ebupt/eb/spark-1.0.-bin-hadoop2 ebupt@eb176:/home/ebupt/eb/

scp -r /home/ebupt/eb/spark-0.9.-bin-hadoop2 ebupt@eb175:/home/ebupt/eb/

scp -r /home/ebupt/eb/spark-0.9.-bin-hadoop2 ebupt@eb176:/home/ebupt/eb/

scp ~/.bash_profile ebupt@eb175:~/

scp ~/.bash_profile ebupt@eb176:~/

7.3 配置脚本:build.sh

#!/bin/bash

source ~/.bash_profile

ssh eb175 > /dev/null >& << eeooff

ln -s /home/ebupt/eb/scala-2.11./ /home/ebupt/scala

ln -s /home/ebupt/eb/spark-0.9.-bin-hadoop2/ /home/ebupt/spark

ln -s /home/ebupt/eb/shark-0.9.-bin-hadoop2/ /home/ebupt/shark

source ~/.bash_profile

exit

eeooff

echo eb175 done!

ssh eb176 > /dev/null >& << eeooffxx

ln -s /home/ebupt/eb/scala-2.11./ /home/ebupt/scala

ln -s /home/ebupt/eb/spark-0.9.-bin-hadoop2/ /home/ebupt/spark

ln -s /home/ebupt/eb/shark-0.9.-bin-hadoop2/ /home/ebupt/shark

source ~/.bash_profile

exit

eeooffxx

echo eb176 done!

8 遇到的问题及其解决办法

8.1 安装shark-0.9.1和spark-1.0.2时,运行shark shell,执行sql报错。

shark> select * from test;

17.096: [Full GC 71198K->24382K(506816K), 0.3150970 secs]

Exception in thread "main" java.lang.VerifyError: class org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$SetOwnerRequestProto overrides final method getUnknownFields.()Lcom/google/protobuf/UnknownFieldSet;

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at java.lang.Class.getDeclaredMethods0(Native Method)

at java.lang.Class.privateGetDeclaredMethods(Class.java:2531)

at java.lang.Class.privateGetPublicMethods(Class.java:2651)

at java.lang.Class.privateGetPublicMethods(Class.java:2661)

at java.lang.Class.getMethods(Class.java:1467)

at sun.misc.ProxyGenerator.generateClassFile(ProxyGenerator.java:426)

at sun.misc.ProxyGenerator.generateProxyClass(ProxyGenerator.java:323)

at java.lang.reflect.Proxy.getProxyClass0(Proxy.java:636)

at java.lang.reflect.Proxy.newProxyInstance(Proxy.java:722)

at org.apache.hadoop.ipc.ProtobufRpcEngine.getProxy(ProtobufRpcEngine.java:92)

at org.apache.hadoop.ipc.RPC.getProtocolProxy(RPC.java:537)

at org.apache.hadoop.hdfs.NameNodeProxies.createNNProxyWithClientProtocol(NameNodeProxies.java:334)

at org.apache.hadoop.hdfs.NameNodeProxies.createNonHAProxy(NameNodeProxies.java:241)

at org.apache.hadoop.hdfs.NameNodeProxies.createProxy(NameNodeProxies.java:141)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:576)

at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:521)

at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:146)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2397)

at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:89)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2431)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2413)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:368)

at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)

at org.apache.hadoop.hive.ql.Context.getScratchDir(Context.java:180)

at org.apache.hadoop.hive.ql.Context.getMRScratchDir(Context.java:231)

at org.apache.hadoop.hive.ql.Context.getMRTmpFileURI(Context.java:288)

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.getMetaData(SemanticAnalyzer.java:1274)

at org.apache.hadoop.hive.ql.parse.SemanticAnalyzer.getMetaData(SemanticAnalyzer.java:1059)

at shark.parse.SharkSemanticAnalyzer.analyzeInternal(SharkSemanticAnalyzer.scala:137)

at org.apache.hadoop.hive.ql.parse.BaseSemanticAnalyzer.analyze(BaseSemanticAnalyzer.java:279)

at shark.SharkDriver.compile(SharkDriver.scala:215)

at org.apache.hadoop.hive.ql.Driver.compile(Driver.java:337)

at org.apache.hadoop.hive.ql.Driver.run(Driver.java:909)

at shark.SharkCliDriver.processCmd(SharkCliDriver.scala:338)

at org.apache.hadoop.hive.cli.CliDriver.processLine(CliDriver.java:413)

at shark.SharkCliDriver$.main(SharkCliDriver.scala:235)

at shark.SharkCliDriver.main(SharkCliDriver.scala)

原因:不知道它在说什么,大概是说“protobuf”版本有问题.

解决:找到 jar 包 “hive-exec-0.11.0-shark-0.9.1.jar” 在$SHARK_HOME/lib_managed/jars/edu.berkeley.cs.shark/hive-exec, 删掉有关protobuf,重新打包,该报错不再有,脚本如下所示。

cd $SHARK_HOME/lib_managed/jars/edu.berkeley.cs.shark/hive-exec

unzip hive-exec-0.11.-shark-0.9..jar

rm -f com/google/protobuf/*

rm hive-exec-0.11.0-shark-0.9.1.jar

zip -r hive-exec-0.11.0-shark-0.9.1.jar *

rm -rf com hive-exec-log4j.properties javaewah/ javax/ javolution/ META-INF/ org/

8.2 安装shark-0.9.1和spark-1.0.2时,spark集群正常运行,跑一下简单的job也是可以的,但是shark的job始终出现Spark cluster looks dead, giving up. 在运行shark-shell(shark-withinfo )时,都会看到连接不上spark的master。报错类似如下:

shark> select * from t1;

16.452: [GC 282770K->32068K(1005568K), 0.0388780 secs]

org.apache.spark.SparkException: Job aborted: Spark cluster looks down

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$abortStage$1.apply(DAGScheduler.scala:1028)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$org$apache$spark$scheduler$DAGScheduler$$abortStage$1.apply(DAGScheduler.scala:1026)

at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)

at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:47)

at org.apache.spark.scheduler.DAGScheduler.org$apache$spark$scheduler$DAGScheduler$$abortStage(DAGScheduler.scala:1026)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$processEvent$10.apply(DAGScheduler.scala:619)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$processEvent$10.apply(DAGScheduler.scala:619)

at scala.Option.foreach(Option.scala:236)

at org.apache.spark.scheduler.DAGScheduler.processEvent(DAGScheduler.scala:619)

at org.apache.spark.scheduler.DAGScheduler$$anonfun$start$1$$anon$2$$anonfun$receive$1.applyOrElse(DAGScheduler.scala:207)

at akka.actor.ActorCell.receiveMessage(ActorCell.scala:498)

at akka.actor.ActorCell.invoke(ActorCell.scala:456)

at akka.dispatch.Mailbox.processMailbox(Mailbox.scala:237)

at akka.dispatch.Mailbox.run(Mailbox.scala:219)

at akka.dispatch.ForkJoinExecutorConfigurator$AkkaForkJoinTask.exec(AbstractDispatcher.scala:386)

at scala.concurrent.forkjoin.ForkJoinTask.doExec(ForkJoinTask.java:260)

at scala.concurrent.forkjoin.ForkJoinPool$WorkQueue.runTask(ForkJoinPool.java:1339)

at scala.concurrent.forkjoin.ForkJoinPool.runWorker(ForkJoinPool.java:1979)

at scala.concurrent.forkjoin.ForkJoinWorkerThread.run(ForkJoinWorkerThread.java:107)

FAILED: Execution Error, return code -101 from shark.execution.SparkTask

原因:网上有很多人遇到同样的问题,spark集群是好的,但是shark就是不能够很好的运行。查看shark-0.9.1的release发现

Release date: April 10, 2014

Shark 0.9.1 is a maintenance release that stabilizes 0.9.0, which bumps up Scala compatibility to 2.10.3 and Hive compliance to 0.11. The core dependencies for this version are:

Scala 2.10.3

Spark 0.9.1

AMPLab’s Hive 0.9.0

(Optional) Tachyon 0.4.1

这是因为shark版本只兼容到spark-0.9.1,版本不兼容导致无法找到spark集群的master服务。

解决:回退spark版本到spark-0.9.1,scala版本不用回退。回退后运行正常。

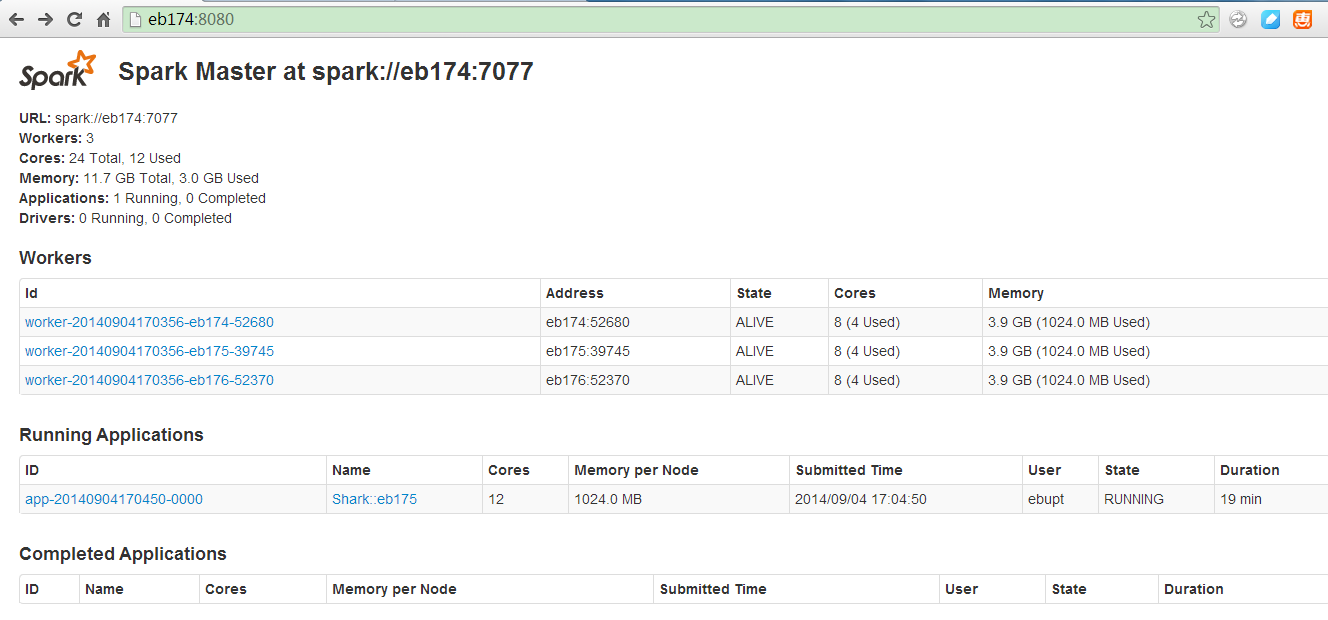

9.集群成功运行

9.1启动spark集群standalone模式

[ebupt@eb174 ~]$ ./spark/sbin/start-all.sh

9.2测试spark集群

[ebupt@eb174 ~]$ ./spark/bin/run-example org.apache.spark.examples.SparkPi 10 spark://eb174:7077

9.3 Spark Master UI:http://eb174:8080/

10 参考资料

- Apache Spark

- Apache Shark

- Shark安装部署与应用

- Spark github

- Shark github

- Spark 0.9.1和Shark 0.9.1分布式安装指南

- google group-shark users

- ERIC'S BLOG

Spark、Shark集群安装部署及遇到的问题解决的更多相关文章

- HBase集群安装部署

0x01 软件环境 OS: CentOS6.5 x64 java: jdk1.8.0_111 hadoop: hadoop-2.5.2 hbase: hbase-0.98.24 0x02 集群概况 I ...

- flink部署操作-flink standalone集群安装部署

flink集群安装部署 standalone集群模式 必须依赖 必须的软件 JAVA_HOME配置 flink安装 配置flink 启动flink 添加Jobmanager/taskmanager 实 ...

- HBase 1.2.6 完全分布式集群安装部署详细过程

Apache HBase 是一个高可靠性.高性能.面向列.可伸缩的分布式存储系统,是NoSQL数据库,基于Google Bigtable思想的开源实现,可在廉价的PC Server上搭建大规模结构化存 ...

- 1.Hadoop集群安装部署

Hadoop集群安装部署 1.介绍 (1)架构模型 (2)使用工具 VMWARE cenos7 Xshell Xftp jdk-8u91-linux-x64.rpm hadoop-2.7.3.tar. ...

- 2 Hadoop集群安装部署准备

2 Hadoop集群安装部署准备 集群安装前需要考虑的几点硬件选型--CPU.内存.磁盘.网卡等--什么配置?需要多少? 网络规划--1 GB? 10 GB?--网络拓扑? 操作系统选型及基础环境-- ...

- K8S集群安装部署

K8S集群安装部署 参考地址:https://www.cnblogs.com/xkops/p/6169034.html 1. 确保系统已经安装epel-release源 # yum -y inst ...

- 【分布式】Zookeeper伪集群安装部署

zookeeper:伪集群安装部署 只有一台linux主机,但却想要模拟搭建一套zookeeper集群的环境.可以使用伪集群模式来搭建.伪集群模式本质上就是在一个linux操作系统里面启动多个zook ...

- 第06讲:Flink 集群安装部署和 HA 配置

Flink系列文章 第01讲:Flink 的应用场景和架构模型 第02讲:Flink 入门程序 WordCount 和 SQL 实现 第03讲:Flink 的编程模型与其他框架比较 第04讲:Flin ...

- Hadoop2.2集群安装配置-Spark集群安装部署

配置安装Hadoop2.2.0 部署spark 1.0的流程 一.环境描写叙述 本实验在一台Windows7-64下安装Vmware.在Vmware里安装两虚拟机分别例如以下 主机名spark1(19 ...

随机推荐

- 功能模块图、业务流程图、处理流程图、ER图,数据库表图(概念模型和物理模型)画法

如果你能使用计算机规范画出以下几种图,那么恭喜你,你在我这里被封为学霸了,我膜拜ing-- 我作为前端开发与产品经理打交道已有5-6年时间,产品经理画的业务流程图我看过很多.于是百度搜+凭以往经验脑补 ...

- The hacker's sanbox游戏

第一关:使用/usr/hashcat程序,对passwd中root的密码进行解密,得到gravity98 执行su,输入密码gravity98. 第二关:获取提供的工具,wget http://are ...

- [访问系统] C#计算机信息类ComputerInfo (转载)

下载整个包,只下载现有类是不起作用的 http://www.sufeinet.com/thread-303-1-1.html 点击此处下载 using System; using System.Man ...

- 关于打开ILDASM的方法

1.通过VisualStudio在开始菜单下的Microsoft Visual Studio 2008/Visual Studio Tools/中的命令提示符中输入ildasm即可 2.将其添加至 ...

- SQL Server数据类型

转载:http://www.ezloo.com/2008/10/sql_server_data_type.html 数据类型是数据的一种属性,是数据所表示信息的类型.任何一种语言都有它自己所固有 ...

- SQL性能优化没有那么神秘

经常听说SQL Server最难的部分是性能优化,不禁让人感到优化这个工作很神秘,这种事情只有高手才能做.很早的时候我在网上看到一位高手写的博客,介绍了SQL优化的问题,从这些内容来看,优化并不都是一 ...

- oracle模糊查询效率提高

1.使用两边加‘%’号的查询,oracle是不通过索引的,所以查询效率很低. 例如:select count(*) from lui_user_base t where t.user_name lik ...

- ios专题 - 多线程非GCD(1)

iOS多线程初体验是本文要介绍的内容,iPhone中的线程应用并不是无节制的,官方给出的资料显示iPhone OS下的主线程的堆栈大小是1M,第二个线程开始都是512KB.并且该值不能通过编译器开关或 ...

- 代码世界中的Lambda

“ λ ”像一个双手插兜儿,独自行走的人,有“失意.无奈.孤独”的感觉.λ 读作Lambda,是物理上的波长符号,放射学的衰变常数,线性代数中的特征值……在程序和代码的世界里,它代表了函数表达式,系统 ...

- 帝国cms在任意位置调用指定id的栏目名称和链接

注意,这个代码无须放在灵动标签中,直接写入模板相应的位置就行了.[1]调用栏目名称: <?=$class_r[栏目ID]['classname']?> 示例:<?=$class_ ...