OpenACC Julia 图形

▶ 书上的代码,逐步优化绘制 Julia 图形的代码

● 无并行优化(手动优化了变量等)

#include <stdio.h>

#include <stdlib.h>

#include <openacc.h> #define N (1024 * 8) int julia(const float cre, const float cim, float zre, float zim, const int maxIter)// 计算单点迭代次数

{

float zre2 = 0.0f, zim2 = 0.0f;

for (int iter = ; iter < maxIter; iter += ) // 一个迭代里计算两次

{

zre2 = zre * zre - zim * zim + cre, zim2 = * zre * zim + cim;

if (zre2 * zre2 + zim2 * zim2 > 4.0f)

return iter; zre = zre2 * zre2 - zim2 * zim2 + cre, zim = * zre2 * zim2 + cim;

if (zre * zre + zim * zim > 4.0f)

return iter;

}

return maxIter + + (maxIter % );

} int main()

{

const int maxIter = ; // 最大迭代次数

const float cre = -0.8350, cim = -0.2321, h = 4.0f / N; // 迭代常数和画幅步长

int *image = (int *)malloc(sizeof(int) * N * N);

FILE *pf = fopen("R:/output.txt", "w"); for (int i = ; i < N; i++)

{

for (int j = ; j < N; j++)

fprintf(pf, "%d ", julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter));

fprintf(pf, "\n");

} fclose(pf);

free(image);

//getchar();

return ;

}

● 输出结果(后面所有代码的输出都相同,不再写了)

● 改进 1,计算并行化

#include <stdio.h>

#include <stdlib.h>

#include <openacc.h> #define N (1024 * 8) #pragma acc routine seq

int julia(const float cre, const float cim, float zre, float zim, const int maxIter)

{

float zre2 = 0.0f, zim2 = 0.0f;

for (int iter = ; iter < maxIter; iter += )

{

zre2 = zre * zre - zim * zim + cre, zim2 = * zre * zim + cim;

if (zre2 * zre2 + zim2 * zim2 > 4.0f)

return iter; zre = zre2 * zre2 - zim2 * zim2 + cre, zim = * zre2 * zim2 + cim;

if (zre * zre + zim * zim > 4.0f)

return iter;

}

return maxIter + + (maxIter % );

} int main()

{

const int maxIter = ;

const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;

int *image = (int *)malloc(sizeof(int) * N * N); #pragma acc data copyout(image[0:N * N]) // 数据域

{

#pragma acc kernels loop independent // loop 并行化,强制独立

for (int i = ; i < N; i++)

{

for (int j = ; j < N; j++)

image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);

}

}

/*// 注释掉写入文件的部分,防止 Nvvp 加入分析

FILE *pf = fopen("R:/output.txt", "w");

for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

fprintf(pf, "%d ", image[i * N + j]);

fprintf(pf, "\n");

}

fclose(pf);

*/

free(image);

//getchar();

return ;

}

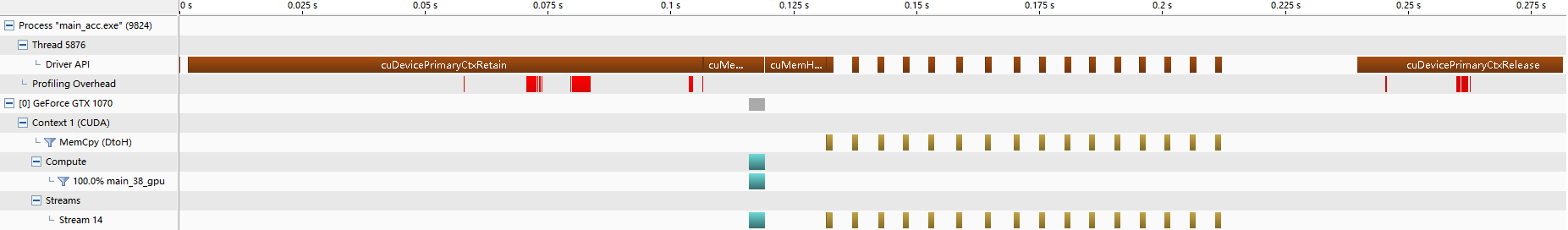

● 输出结果

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc -acc -Minfo main.c -o main_acc.exe

julia:

, Generating acc routine seq

Generating Tesla code

, FMA (fused multiply-add) instruction(s) generated

, FMA (fused multiply-add) instruction(s) generated

main:

, Generating copyout(image[:])

, Loop is parallelizable

FMA (fused multiply-add) instruction(s) generated

, Loop is parallelizable

Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

, FMA (fused multiply-add) instruction(s) generated D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached times

: data copyout transfers:

device time(us): total=, max=, min= avg=,

: compute region reached time

: kernel launched time

grid: [256x128] block: [32x4]

device time(us): total= max= min= avg=

● 改进 2,分块计算,没有明显性能提升,为异步做准备

#include <stdio.h>

#include <stdlib.h>

#include <openacc.h> #define N (1024 * 8) #pragma acc routine seq

int julia(const float cre, const float cim, float zre, float zim, const int maxIter)

{

float zre2 = 0.0f, zim2 = 0.0f;

for (int iter = ; iter < maxIter; iter += )

{

zre2 = zre * zre - zim * zim + cre, zim2 = * zre * zim + cim;

if (zre2 * zre2 + zim2 * zim2 > 4.0f)

return iter; zre = zre2 * zre2 - zim2 * zim2 + cre, zim = * zre2 * zim2 + cim;

if (zre * zre + zim * zim > 4.0f)

return iter;

}

return maxIter + + (maxIter % );

} int main()

{

const int maxIter = ;

const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;

int *image = (int *)malloc(sizeof(int) * N * N); #pragma acc data copyout(image[0:N * N])

{

const int numblock = ; // 指定分块数量

for (int block = ; block < numblock; block++) // 每次计算一块

{

const int start = block * (N / numblock), end = start + N / numblock; // 每块的始末下标

#pragma acc kernels loop independent

for (int i = start; i < end; i++)

{

for (int j = ; j < N; j++)

image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);

}

}

}

/*

FILE *pf = fopen("R:/output.txt", "w");

for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

fprintf(pf, "%d ", image[i * N + j]);

fprintf(pf, "\n");

}

fclose(pf);

*/

free(image);

//getchar();

return ;

}

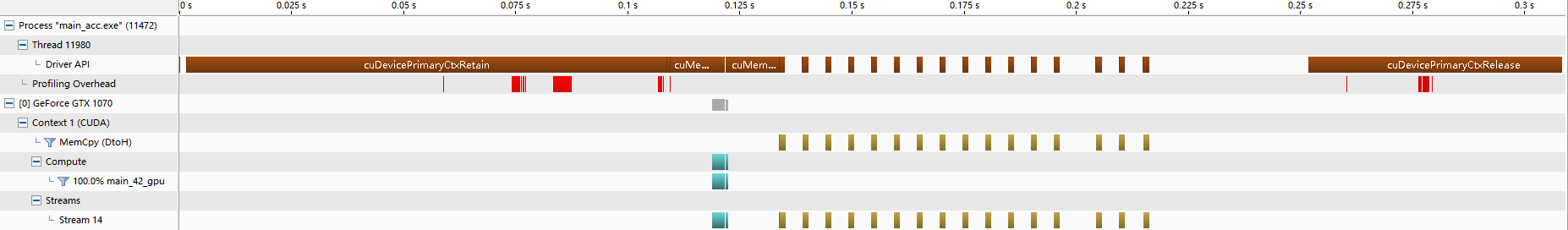

● 输出结果

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc -acc -Minfo main.c -o main_acc.exe

julia:

, Generating acc routine seq

Generating Tesla code

, FMA (fused multiply-add) instruction(s) generated

, FMA (fused multiply-add) instruction(s) generated

main:

, Generating copyout(image[:])

, Loop is parallelizable

FMA (fused multiply-add) instruction(s) generated

, Loop is parallelizable

Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

, FMA (fused multiply-add) instruction(s) generated D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached times

: data copyout transfers:

device time(us): total=, max=, min= avg=,

: compute region reached times

: kernel launched times

grid: [256x128] block: [32x4]

device time(us): total= max= min= avg=

● 改进 3,分块传输,没有明显性能提升,为异步做准备

#include <stdio.h>

#include <stdlib.h>

#include <openacc.h> #define N (1024 * 8) #pragma acc routine seq

int julia(const float cre, const float cim, float zre, float zim, const int maxIter)

{

float zre2 = 0.0f, zim2 = 0.0f;

for (int iter = ; iter < maxIter; iter += )

{

zre2 = zre * zre - zim * zim + cre, zim2 = * zre * zim + cim;

if (zre2 * zre2 + zim2 * zim2 > 4.0f)

return iter; zre = zre2 * zre2 - zim2 * zim2 + cre, zim = * zre2 * zim2 + cim;

if (zre * zre + zim * zim > 4.0f)

return iter;

}

return maxIter + + (maxIter % );

} int main()

{

const int maxIter = ;

const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;

int *image = (int *)malloc(sizeof(int) * N * N); #pragma acc data create(image[0:N * N]) // 改成 create,不需要从主机拷贝初始数据

{

const int numBlock = , blockSize = N * N / numBlock; // 仍然分块计算

for (int block = ; block < numBlock; block++)

{

const int start = block * (N / numBlock), end = start + N / numBlock;

#pragma acc kernels loop independent

for (int i = start; i < end; i++)

{

for (int j = ; j < N; j++)

image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);

}

#pragma acc update host(image[block * blockSize : blockSize]) // 每计算完一块就向主机回传数据

}

}

/*

FILE *pf = fopen("R:/output.txt", "w");

for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

fprintf(pf, "%d ", image[i * N + j]);

fprintf(pf, "\n");

}

fclose(pf);

*/

free(image);

//getchar();

return ;

}

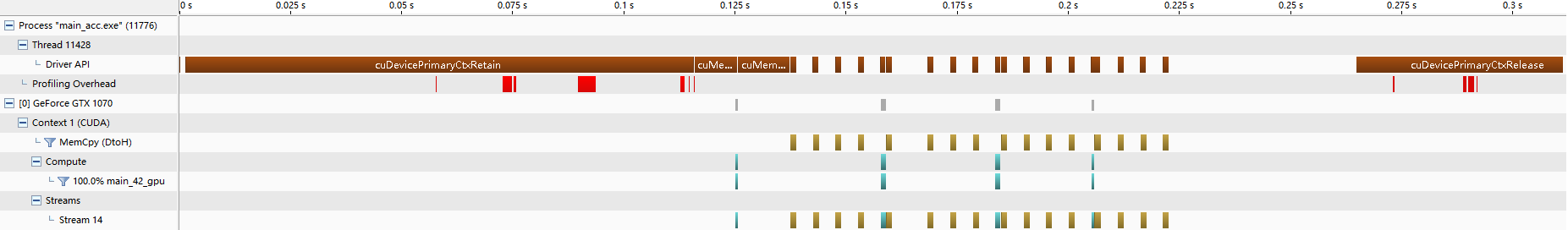

● 输出结果

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc -acc -Minfo main.c -o main_acc.exe

julia:

, Generating acc routine seq

Generating Tesla code

, FMA (fused multiply-add) instruction(s) generated

, FMA (fused multiply-add) instruction(s) generated

main:

, Generating create(image[:])

, Loop is parallelizable

FMA (fused multiply-add) instruction(s) generated

, Loop is parallelizable

Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

, FMA (fused multiply-add) instruction(s) generated

, Generating update self(image[block*blockSize:blockSize]) D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached times

: compute region reached times

: kernel launched times

grid: [256x128] block: [32x4]

elapsed time(us): total=, max=, min= avg=,

: update directive reached times

: data copyout transfers:

device time(us): total=, max=, min= avg=,

● 改进 4,异步计算 - 双向传输

#include <stdio.h>

#include <stdlib.h>

#include <openacc.h> #define N (1024 * 8) #pragma acc routine seq

int julia(const float cre, const float cim, float zre, float zim, const int maxIter)

{

float zre2 = 0.0f, zim2 = 0.0f;

for (int iter = ; iter < maxIter; iter += )

{

zre2 = zre * zre - zim * zim + cre, zim2 = * zre * zim + cim;

if (zre2 * zre2 + zim2 * zim2 > 4.0f)

return iter; zre = zre2 * zre2 - zim2 * zim2 + cre, zim = * zre2 * zim2 + cim;

if (zre * zre + zim * zim > 4.0f)

return iter;

}

return maxIter + + (maxIter % );

} int main()

{

const int maxIter = ;

const float cre = -0.8350, cim = -0.2321, h = 4.0f / N;

int *image = (int *)malloc(sizeof(int) * N * N); #pragma acc data create(image[0:N * N])

{

const int numBlock = , blockSize = N / numBlock * N;

for (int block = ; block < numBlock; block++)

{

const int start = block * (N / numBlock), end = start + N / numBlock;

#pragma acc kernels loop independent async(block + 1) // 异步计算,用块编号作标记

for (int i = start; i < end; i++)

{

for (int j = ; j < N; j++)

image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);

}

#pragma acc update host(image[block * blockSize : blockSize]) async(block + 1) // 计算完一块就异步传输

}

#pragma acc wait

}

/*

FILE *pf = fopen("R:/output.txt", "w");

for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

fprintf(pf, "%d ", image[i * N + j]);

fprintf(pf, "\n");

}

fclose(pf);

*/

free(image);

//getchar();

return ;

}

● 输出结果

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc -acc -Minfo main.c -o main_acc.exe

julia:

, Generating acc routine seq

Generating Tesla code

, FMA (fused multiply-add) instruction(s) generated

, FMA (fused multiply-add) instruction(s) generated

main:

, Generating create(image[:])

, Loop is parallelizable

FMA (fused multiply-add) instruction(s) generated

, Loop is parallelizable

Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

, FMA (fused multiply-add) instruction(s) generated

, Generating update self(image[block*blockSize:blockSize]) D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= queue= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

Timing may be affected by asynchronous behavior

set PGI_ACC_SYNCHRONOUS to to disable async() clauses

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached times

: compute region reached times

: kernel launched times

grid: [256x128] block: [32x4]

device time(us): total= max= min= avg=

: update directive reached times

: data copyout transfers:

device time(us): total=, max=, min= avg=,

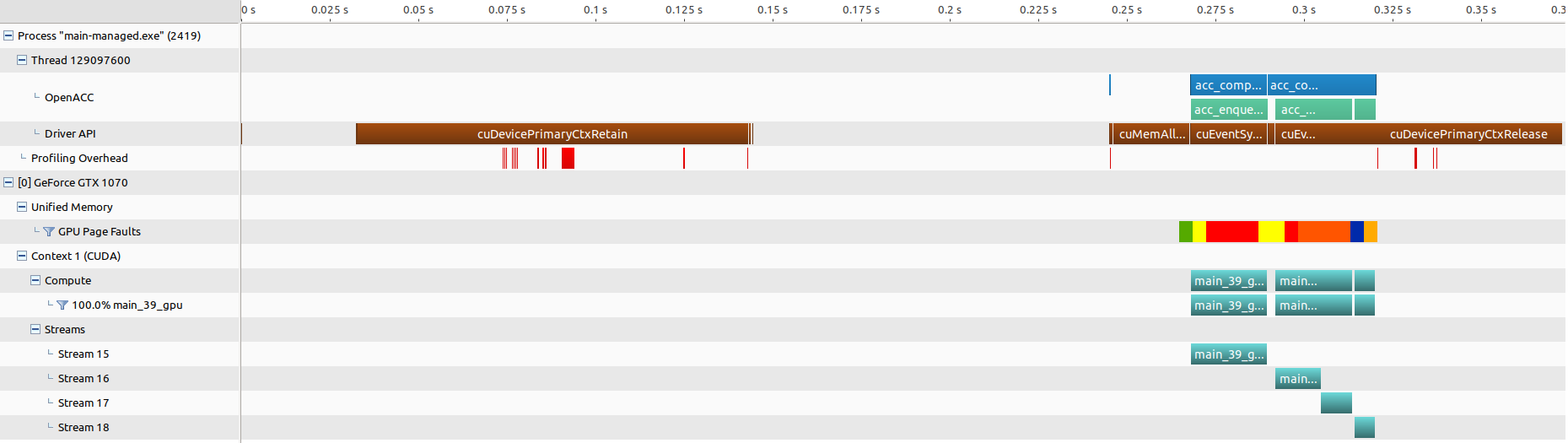

● 使用统一内存访址(Ubuntu,win64 不支持)

● 改进 4,多设备版本 1,使用 OpenMP

#include <stdio.h>

#include <stdlib.h>

#include <omp.h>

#include <openacc.h> #define N (1024 * 8) #pragma acc routine seq

int julia(const float cre, const float cim, float zre, float zim, const int maxIter)

{

float zre2 = 0.0f, zim2 = 0.0f;

for (int iter = ; iter < maxIter; iter += )

{

zre2 = zre * zre - zim * zim + cre, zim2 = * zre * zim + cim;

if (zre2 * zre2 + zim2 * zim2 > 4.0f)

return iter; zre = zre2 * zre2 - zim2 * zim2 + cre, zim = * zre2 * zim2 + cim;

if (zre * zre + zim * zim > 4.0f)

return iter;

}

return maxIter + + (maxIter % );

} int main()

{

const int maxIter = ;

const int numBlock = acc_get_num_devices(acc_device_nvidia), blockSize = N / numBlock * N; // 使用 OpenMP 检测目标设备数量,以此作为分块数

const float cre = -0.8350, cim = -0.2321, h = 4.0f / N; int *image = (int *)malloc(sizeof(int) * N * N);

acc_init(acc_device_nvidia); // 一次性初始化全部目标设备 #pragma omp parallel num_threads(numBlock) // 使用多个线程,分别向目标设备发送任务

{

acc_set_device_num(omp_get_thread_num(), acc_device_nvidia);// 标记目标设备

#pragma omp for

for (int block = ; block < numBlock; block++)

{

const int start = block * (N / numBlock), end = start + N / numBlock;

#pragma acc data copyout(image[block * blockSize : blockSize])

{

#pragma acc kernels loop independent

for (int i = start; i < end; i++)

{

for (int j = ; j < N; j++)

image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);

}

}

}

}

/*

FILE *pf = fopen("R:/output.txt", "w");

for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

fprintf(pf, "%d ", image[i * N + j]);

fprintf(pf, "\n");

}

fclose(pf);

*/

free(image);

//getchar();

return ;

}

● 输出结果

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc -acc -mp -Minfo main.c -o main_acc.exe

julia:

, Generating acc routine seq

Generating Tesla code

, FMA (fused multiply-add) instruction(s) generated

, FMA (fused multiply-add) instruction(s) generated

main:

, Parallel region activated

, Parallel loop activated with static block schedule

, Generating copyout(image[block*blockSize:blockSize])

, Loop is parallelizable

FMA (fused multiply-add) instruction(s) generated

, Loop is parallelizable

Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

, FMA (fused multiply-add) instruction(s) generated

, Barrier

, Parallel region terminated D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached times

: data copyout transfers:

device time(us): total=, max=, min= avg=,

: compute region reached time

: kernel launched time

grid: [256x128] block: [32x4]

device time(us): total= max= min= avg=

● 改进 5,多设备版本 2,调整 OpenMP

#include <stdio.h>

#include <stdlib.h>

#include <omp.h>

#include <openacc.h> #define N (1024 * 8) #pragma acc routine seq

int julia(const float cre, const float cim, float zre, float zim, const int maxIter)

{

float zre2 = 0.0f, zim2 = 0.0f;

for (int iter = ; iter < maxIter; iter += )

{

zre2 = zre * zre - zim * zim + cre, zim2 = * zre * zim + cim;

if (zre2 * zre2 + zim2 * zim2 > 4.0f)

return iter; zre = zre2 * zre2 - zim2 * zim2 + cre, zim = * zre2 * zim2 + cim;

if (zre * zre + zim * zim > 4.0f)

return iter;

}

return maxIter + + (maxIter % );

} int main()

{

const int maxIter = ;

const int numBlock = acc_get_num_devices(acc_device_nvidia), blockSize = N / numBlock * N;

const float cre = -0.8350, cim = -0.2321, h = 4.0f / N; int *image = (int *)malloc(sizeof(int) * N * N);

acc_init(acc_device_nvidia); #pragma omp parallel for num_threads(numBlock) // 把函数 acc_set_device_num 单独放在一起

for(int block = ;block<numBlock;block++)

acc_set_device_num(block, acc_device_nvidia); #pragma omp for num_threads(numBlock)

for (int block = ; block < numBlock; block++)

{

const int start = block * (N / numBlock), end = start + N / numBlock;

#pragma acc data copyout(image[block * blockSize : blockSize])

{

#pragma acc kernels loop independent

for (int i = start; i < end; i++)

{

for (int j = ; j < N; j++)

image[i * N + j] = julia(cre, cim, i * h - 2.0f, j * h - 2.0f, maxIter);

}

}

}

/*

FILE *pf = fopen("R:/output.txt", "w");

for (int i = 0; i < N; i++)

{

for (int j = 0; j < N; j++)

fprintf(pf, "%d ", image[i * N + j]);

fprintf(pf, "\n");

}

fclose(pf);

*/

free(image);

//getchar();

return ;

}

● 输出结果

D:\Code\OpenACC\OpenACCProject\OpenACCProject>pgcc main.c -acc -Minfo -o main_acc.exe

julia:

, Generating acc routine seq

Generating Tesla code

, FMA (fused multiply-add) instruction(s) generated

, FMA (fused multiply-add) instruction(s) generated

main:

, Generating copyout(image[block*blockSize:blockSize])

, Loop is parallelizable

FMA (fused multiply-add) instruction(s) generated

, Loop is parallelizable

Accelerator kernel generated

Generating Tesla code

, #pragma acc loop gang, vector(4) /* blockIdx.y threadIdx.y */

, #pragma acc loop gang, vector(32) /* blockIdx.x threadIdx.x */

, FMA (fused multiply-add) instruction(s) generated D:\Code\OpenACC\OpenACCProject\OpenACCProject>main_acc.exe

launch CUDA kernel file=D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c function=main

line= device= threadid= num_gangs= num_workers= vector_length= grid=256x128 block=32x4

PGI: "acc_shutdown" not detected, performance results might be incomplete.

Please add the call "acc_shutdown(acc_device_nvidia)" to the end of your application to ensure that the performance results are complete. Accelerator Kernel Timing data

D:\Code\OpenACC\OpenACCProject\OpenACCProject\main.c

main NVIDIA devicenum=

time(us): ,

: data region reached times

: data copyout transfers:

device time(us): total=, max=, min= avg=,

: compute region reached time

: kernel launched time

grid: [256x128] block: [32x4]

elapsed time(us): total=, max=, min=, avg=,

OpenACC Julia 图形的更多相关文章

- OpenCV绘制朱利亚(Julia)集合图形

朱利亚集合是一个在复平面上形成分形的点的集合.以法国数学家加斯顿·朱利亚(Gaston Julia)的名字命名. 朱利亚集合可以由下式进行反复迭代得到: 对于固定的复数c,取某一z值(如z = z0) ...

- CUDA+OpenCV 绘制朱利亚(Julia)集合图形

Julia集中的元素都是经过简单的迭代计算得到的,很适合用CUDA进行加速.对一个600*600的图像,需要进行360000次迭代计算,所以在CUDA中创建了600*600个线程块(block),每个 ...

- OpenACC 云水参数化方案

▶ 书上第十三章,用一系列步骤优化一个云水参数化方案.用于熟悉 Fortran 以及 OpenACC 在旗下的表现 ● 代码,文件较多,放在一起了 ! main.f90 PROGRAM main US ...

- 详解 CUDA By Example 中的 Julia Set 绘制GPU优化

笔者测试环境VS2019. 基本介绍 原书作者引入Julia Sets意在使用GPU加速图形的绘制.Julia Set 是指满足下式迭代收敛的复数集合 \[ Z_{n+1}=Z_{n}^2+C \] ...

- 现代3D图形编程学习-基础简介(3)-什么是opengl (译)

本书系列 现代3D图形编程学习 OpenGL是什么 在我们编写openGL程序之前,我们首先需要知道什么是OpenGL. 将OpenGL作为一个API OpenGL 通常被认为是应用程序接口(API) ...

- 超全面的.NET GDI+图形图像编程教程

本篇主题内容是.NET GDI+图形图像编程系列的教程,不要被这个滚动条吓到,为了查找方便,我没有分开写,上面加了目录了,而且很多都是源码和图片~ (*^_^*) 本人也为了学习深刻,另一方面也是为了 ...

- Ubuntu设置root用户登录图形界面

Ubuntu默认的是root用户不能登录图形界面的,只能以其他用户登录图形界面.这样就很麻烦,因为权限的问题,不能随意复制删除文件,用gedit编辑文件时经常不能保存,只能用vim去编辑. 解决的办法 ...

- 第六代智能英特尔® 酷睿™ 处理器图形 API 开发人员指南

欢迎查看第六代智能英特尔® 酷睿™ 处理器图形 API 开发人员指南,该处理器可为开发人员和最终用户提供领先的 CPU 和图形性能增强.各种新特性和功能以及显著提高的性能. 本指南旨在帮助软件开发人员 ...

- 通过Matrix进行二维图形仿射变换

Affine Transformation是一种二维坐标到二维坐标之间的线性变换,保持二维图形的"平直性"和"平行性".仿射变换可以通过一系列的原子变换的复合来 ...

随机推荐

- hive中实现类似MySQL中的group_concat功能

hive> desc t; OK id string str string Time taken: 0.249 seconds hive> select * from t ...

- 自动AC机

可以在lemon和cena环境下使用. #include<iostream> #include<cstdio> #include<cstring> #include ...

- 使用slot编写弹窗组件

具体slot用法详见http://www.cnblogs.com/keepfool/p/5637834.html html: <!--测试弹窗--> <dialog-test v-i ...

- citus real-time 分析demo( 来自官方文档)

citus 对于多租户以及实时应用的开发都是比较好的,官方也提供了demo 参考项目 https://github.com/rongfengliang/citus-hasuar-graphql 环 ...

- JQuery 在网页中查询

最近遇到客户的一个需求,要在网页中添加一个Search 功能,其实对于网页的搜索,Ctrl+F,真的是非常足够了,但是客户的需求,不得不做,这里就做了个关于Jquery Search function ...

- 【转】每天一个linux命令(59):rcp命令

原文网址:http://www.cnblogs.com/peida/archive/2013/03/14/2958685.html rcp代表“remote file copy”(远程文件拷贝).该命 ...

- Oracle根据表的大小排序SQL语句

--按照数据行数排序select table_name,blocks,num_rows from dba_tables where owner not like '%SYS%' and table_n ...

- winform自定义控件 (转帖)

定义控件 本文以按钮为例,制作一个imagebutton,继承系统button, 分四种状态 1,正常状态 2,获得焦点 3,按下按钮 4,禁用 当然你得准备一张图片,包含四种状态的样式,同样你也可以 ...

- 访问php网站报500错误时显示错误显示

调试时可在访问的php文件开头输入 ini_set("display_errors", "On"); error_reporting(E_ALL | E_STR ...

- C++ 获取类成员函数地址方法 浅析

C语言中可以用函数地址直接调用函数: void print () { printf ("function print"); } typdef void (*fu ...