VI ORB-SLAM初始化与VINS初始化对比(将vi orb-slam初始化方法移植到vins中)

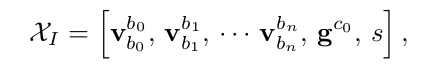

初始化时需要求出的变量:相机和imu外参r t、重力g、尺度s、陀螺仪和加速度计偏置ba bg。

下面对两种算法初始化的详细步骤进行对比:

求陀螺仪偏置bg

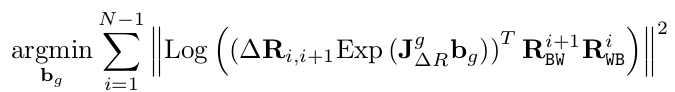

- 求解公式相同,求解方法不同。公式如下,VI ORB-SLAM使用图优化的方式。

Vector3d Optimizer::OptimizeInitialGyroBias(const vector<cv::Mat>& vTwc, const vector<IMUPreintegrator>& vImuPreInt)

{

int N = vTwc.size(); if(vTwc.size()!=vImuPreInt.size()) cerr<<"vTwc.size()!=vImuPreInt.size()"<<endl;

Matrix4d Tbc = ConfigParam::GetEigTbc();

Matrix3d Rcb = Tbc.topLeftCorner(,).transpose(); // Setup optimizer

g2o::SparseOptimizer optimizer;

g2o::BlockSolverX::LinearSolverType * linearSolver; linearSolver = new g2o::LinearSolverEigen<g2o::BlockSolverX::PoseMatrixType>(); g2o::BlockSolverX * solver_ptr = new g2o::BlockSolverX(linearSolver); g2o::OptimizationAlgorithmGaussNewton* solver = new g2o::OptimizationAlgorithmGaussNewton(solver_ptr);

//g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr);

optimizer.setAlgorithm(solver); // Add vertex of gyro bias, to optimizer graph

g2o::VertexGyrBias * vBiasg = new g2o::VertexGyrBias();

vBiasg->setEstimate(Eigen::Vector3d::Zero());

vBiasg->setId();

optimizer.addVertex(vBiasg); // Add unary edges for gyro bias vertex

//for(std::vector<KeyFrame*>::const_iterator lit=vpKFs.begin(), lend=vpKFs.end(); lit!=lend; lit++)

for(int i=; i<N; i++)

{

// Ignore the first KF

if(i==)

continue; const cv::Mat& Twi = vTwc[i-]; // pose of previous KF

Matrix3d Rwci = Converter::toMatrix3d(Twi.rowRange(,).colRange(,));

//Matrix3d Rwci = Twi.rotation_matrix();

const cv::Mat& Twj = vTwc[i]; // pose of this KF

Matrix3d Rwcj = Converter::toMatrix3d(Twj.rowRange(,).colRange(,));

//Matrix3d Rwcj =Twj.rotation_matrix(); const IMUPreintegrator& imupreint = vImuPreInt[i]; g2o::EdgeGyrBias * eBiasg = new g2o::EdgeGyrBias();

eBiasg->setVertex(, dynamic_cast<g2o::OptimizableGraph::Vertex*>(optimizer.vertex()));

// measurement is not used in EdgeGyrBias

eBiasg->dRbij = imupreint.getDeltaR();

eBiasg->J_dR_bg = imupreint.getJRBiasg();

eBiasg->Rwbi = Rwci*Rcb;

eBiasg->Rwbj = Rwcj*Rcb;

//eBiasg->setInformation(Eigen::Matrix3d::Identity());

eBiasg->setInformation(imupreint.getCovPVPhi().bottomRightCorner(,).inverse());

optimizer.addEdge(eBiasg);

} // It's actualy a linear estimator, so 1 iteration is enough.

//optimizer.setVerbose(true);

optimizer.initializeOptimization();

optimizer.optimize(); g2o::VertexGyrBias * vBgEst = static_cast<g2o::VertexGyrBias*>(optimizer.vertex()); return vBgEst->estimate();

}

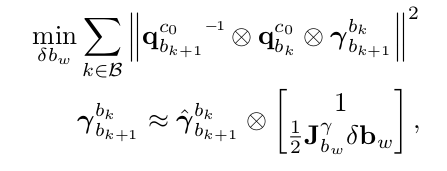

- VINS中公式如下。使用LDLT分解,解方程组。

void solveGyroscopeBias(map<double, ImageFrame> &all_image_frame, Vector3d* Bgs)

{

Matrix3d A;

Vector3d b;

Vector3d delta_bg;

A.setZero();

b.setZero();

map<double, ImageFrame>::iterator frame_i;

map<double, ImageFrame>::iterator frame_j;

for (frame_i = all_image_frame.begin(); next(frame_i) != all_image_frame.end(); frame_i++)

{

frame_j = next(frame_i);

MatrixXd tmp_A(, );

tmp_A.setZero();

VectorXd tmp_b();

tmp_b.setZero();

Eigen::Quaterniond q_ij(frame_i->second.R.transpose() * frame_j->second.R);

tmp_A = frame_j->second.pre_integration->jacobian.template block<, >(O_R, O_BG);

tmp_b = * (frame_j->second.pre_integration->delta_q.inverse() * q_ij).vec();

A += tmp_A.transpose() * tmp_A;

b += tmp_A.transpose() * tmp_b; }

delta_bg = A.ldlt().solve(b);

ROS_WARN_STREAM("gyroscope bias initial calibration " << delta_bg.transpose()); for (int i = ; i <= WINDOW_SIZE; i++)

Bgs[i] += delta_bg; for (frame_i = all_image_frame.begin(); next(frame_i) != all_image_frame.end( ); frame_i++)

{

frame_j = next(frame_i);

frame_j->second.pre_integration->repropagate(Vector3d::Zero(), Bgs[]);

}

}

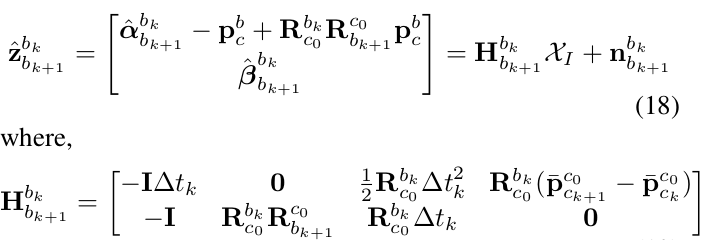

求重力加速度g、尺度s和外参平移量T

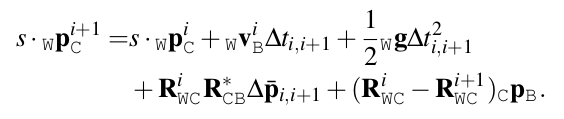

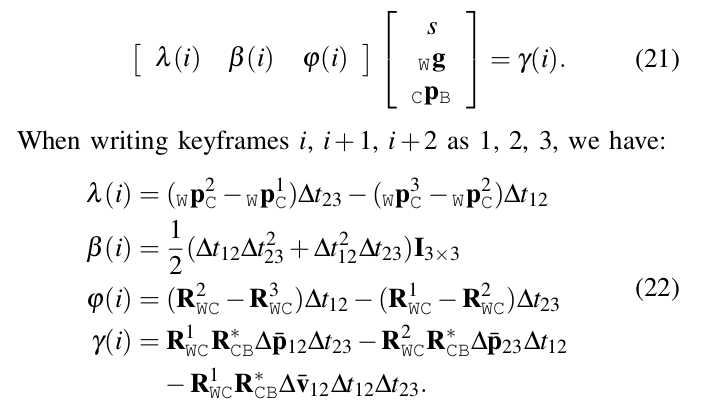

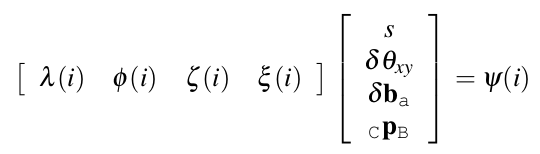

- VINS中并没有求外参T,而是在紧耦合优化时以初值为0直接进行优化。两算法求取公式略有不同。VI ORB-SLAM公式如下,使用SVD分解求解方程的解:

// Solve A*x=B for x=[s,gw] 4x1 vector

cv::Mat A = cv::Mat::zeros(*(N-),,CV_32F);

cv::Mat B = cv::Mat::zeros(*(N-),,CV_32F);

cv::Mat I3 = cv::Mat::eye(,,CV_32F); // Step 2.

// Approx Scale and Gravity vector in 'world' frame (first KF's camera frame)

for(int i=; i<N-; i++)

{

//KeyFrameInit* pKF1 = vKFInit[i];//vScaleGravityKF[i];

KeyFrameInit* pKF2 = vKFInit[i+];

KeyFrameInit* pKF3 = vKFInit[i+];

// Delta time between frames

double dt12 = pKF2->mIMUPreInt.getDeltaTime();

double dt23 = pKF3->mIMUPreInt.getDeltaTime();

// Pre-integrated measurements

cv::Mat dp12 = Converter::toCvMat(pKF2->mIMUPreInt.getDeltaP());

cv::Mat dv12 = Converter::toCvMat(pKF2->mIMUPreInt.getDeltaV());

cv::Mat dp23 = Converter::toCvMat(pKF3->mIMUPreInt.getDeltaP()); // Pose of camera in world frame

cv::Mat Twc1 = vTwc[i].clone();//pKF1->GetPoseInverse();

cv::Mat Twc2 = vTwc[i+].clone();//pKF2->GetPoseInverse();

cv::Mat Twc3 = vTwc[i+].clone();//pKF3->GetPoseInverse();

// Position of camera center

cv::Mat pc1 = Twc1.rowRange(,).col();

cv::Mat pc2 = Twc2.rowRange(,).col();

cv::Mat pc3 = Twc3.rowRange(,).col();

// Rotation of camera, Rwc

cv::Mat Rc1 = Twc1.rowRange(,).colRange(,);

cv::Mat Rc2 = Twc2.rowRange(,).colRange(,);

cv::Mat Rc3 = Twc3.rowRange(,).colRange(,); // Stack to A/B matrix

// lambda*s + beta*g = gamma

cv::Mat lambda = (pc2-pc1)*dt23 + (pc2-pc3)*dt12;

cv::Mat beta = 0.5*I3*(dt12*dt12*dt23 + dt12*dt23*dt23);

cv::Mat gamma = (Rc3-Rc2)*pcb*dt12 + (Rc1-Rc2)*pcb*dt23 + Rc1*Rcb*dp12*dt23 - Rc2*Rcb*dp23*dt12 - Rc1*Rcb*dv12*dt12*dt23;

lambda.copyTo(A.rowRange(*i+,*i+).col());

beta.copyTo(A.rowRange(*i+,*i+).colRange(,));

gamma.copyTo(B.rowRange(*i+,*i+));

// Tested the formulation in paper, -gamma. Then the scale and gravity vector is -xx // Debug log

//cout<<"iter "<<i<<endl;

}

// Use svd to compute A*x=B, x=[s,gw] 4x1 vector

// A = u*w*vt, u*w*vt*x=B

// Then x = vt'*winv*u'*B

cv::Mat w,u,vt;

// Note w is 4x1 vector by SVDecomp()

// A is changed in SVDecomp() with cv::SVD::MODIFY_A for speed

cv::SVDecomp(A,w,u,vt,cv::SVD::MODIFY_A);

// Debug log

//cout<<"u:"<<endl<<u<<endl;

//cout<<"vt:"<<endl<<vt<<endl;

//cout<<"w:"<<endl<<w<<endl; // Compute winv

cv::Mat winv=cv::Mat::eye(,,CV_32F);

for(int i=;i<;i++)

{

if(fabs(w.at<float>(i))<1e-)

{

w.at<float>(i) += 1e-;

// Test log

cerr<<"w(i) < 1e-10, w="<<endl<<w<<endl;

} winv.at<float>(i,i) = ./w.at<float>(i);

}

// Then x = vt'*winv*u'*B

cv::Mat x = vt.t()*winv*u.t()*B; // x=[s,gw] 4x1 vector

double sstar = x.at<float>(); // scale should be positive

cv::Mat gwstar = x.rowRange(,); // gravity should be about ~9.8

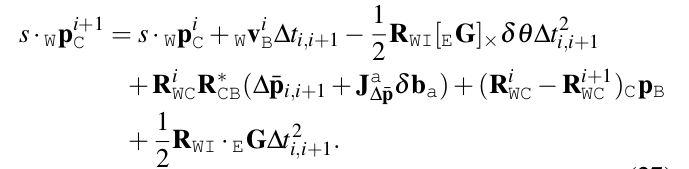

- VINS求出尺度s、重力加速度g和速度v(这里求出滑窗内每帧的速度v意义在哪里?),并没有求外参的平移,方法为LDLT分解。公式如下:

bool LinearAlignment(map<double, ImageFrame> &all_image_frame, Vector3d &g, VectorXd &x)

{

int all_frame_count = all_image_frame.size();

int n_state = all_frame_count * + + ; MatrixXd A{n_state, n_state};

A.setZero();

VectorXd b{n_state};

b.setZero(); map<double, ImageFrame>::iterator frame_i;

map<double, ImageFrame>::iterator frame_j;

int i = ;

for (frame_i = all_image_frame.begin(); next(frame_i) != all_image_frame.end(); frame_i++, i++)

{

frame_j = next(frame_i); MatrixXd tmp_A(, );

tmp_A.setZero();

VectorXd tmp_b();

tmp_b.setZero(); double dt = frame_j->second.pre_integration->sum_dt; tmp_A.block<, >(, ) = -dt * Matrix3d::Identity();

tmp_A.block<, >(, ) = frame_i->second.R.transpose() * dt * dt / * Matrix3d::Identity();

tmp_A.block<, >(, ) = frame_i->second.R.transpose() * (frame_j->second.T - frame_i->second.T) / 100.0;

tmp_b.block<, >(, ) = frame_j->second.pre_integration->delta_p + frame_i->second.R.transpose() * frame_j->second.R * TIC[] - TIC[];

//cout << "delta_p " << frame_j->second.pre_integration->delta_p.transpose() << endl;

tmp_A.block<, >(, ) = -Matrix3d::Identity();

tmp_A.block<, >(, ) = frame_i->second.R.transpose() * frame_j->second.R;

tmp_A.block<, >(, ) = frame_i->second.R.transpose() * dt * Matrix3d::Identity();

tmp_b.block<, >(, ) = frame_j->second.pre_integration->delta_v;

//cout << "delta_v " << frame_j->second.pre_integration->delta_v.transpose() << endl; Matrix<double, , > cov_inv = Matrix<double, , >::Zero();

//cov.block<6, 6>(0, 0) = IMU_cov[i + 1];

//MatrixXd cov_inv = cov.inverse();

cov_inv.setIdentity(); MatrixXd r_A = tmp_A.transpose() * cov_inv * tmp_A;

VectorXd r_b = tmp_A.transpose() * cov_inv * tmp_b; A.block<, >(i * , i * ) += r_A.topLeftCorner<, >();

b.segment<>(i * ) += r_b.head<>(); A.bottomRightCorner<, >() += r_A.bottomRightCorner<, >();

b.tail<>() += r_b.tail<>(); A.block<, >(i * , n_state - ) += r_A.topRightCorner<, >();

A.block<, >(n_state - , i * ) += r_A.bottomLeftCorner<, >();

}

A = A * 1000.0;

b = b * 1000.0;

x = A.ldlt().solve(b);

double s = x(n_state - ) / 100.0;

ROS_DEBUG("estimated scale: %f", s);

g = x.segment<>(n_state - );

ROS_DEBUG_STREAM(" result g " << g.norm() << " " << g.transpose());

if(fabs(g.norm() - G.norm()) > 1.0 || s < )

{

return false;

} RefineGravity(all_image_frame, g, x);

s = (x.tail<>())() / 100.0;

(x.tail<>())() = s;

ROS_DEBUG_STREAM(" refine " << g.norm() << " " << g.transpose());

if(s < 0.0 )

return false;

else

return true;

}

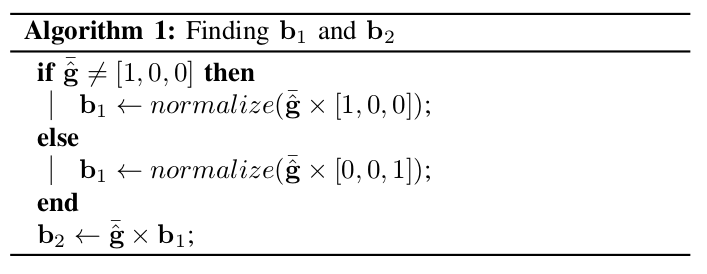

这里在求出g之后,还对其进行了优化,方法为LDLT分解,公式如下。

void RefineGravity(map<double, ImageFrame> &all_image_frame, Vector3d &g, VectorXd &x)

{

Vector3d g0 = g.normalized() * G.norm();

Vector3d lx, ly;

//VectorXd x;

int all_frame_count = all_image_frame.size();

int n_state = all_frame_count * + + ; MatrixXd A{n_state, n_state};

A.setZero();

VectorXd b{n_state};

b.setZero(); map<double, ImageFrame>::iterator frame_i;

map<double, ImageFrame>::iterator frame_j;

for(int k = ; k < ; k++)

{

MatrixXd lxly(, );

lxly = TangentBasis(g0);

int i = ;

for (frame_i = all_image_frame.begin(); next(frame_i) != all_image_frame.end(); frame_i++, i++)

{

frame_j = next(frame_i); MatrixXd tmp_A(, );

tmp_A.setZero();

VectorXd tmp_b();

tmp_b.setZero(); double dt = frame_j->second.pre_integration->sum_dt; tmp_A.block<, >(, ) = -dt * Matrix3d::Identity();

tmp_A.block<, >(, ) = frame_i->second.R.transpose() * dt * dt / * Matrix3d::Identity() * lxly;

tmp_A.block<, >(, ) = frame_i->second.R.transpose() * (frame_j->second.T - frame_i->second.T) / 100.0;

tmp_b.block<, >(, ) = frame_j->second.pre_integration->delta_p + frame_i->second.R.transpose() * frame_j->second.R * TIC[] - TIC[] - frame_i->second.R.transpose() * dt * dt / * g0; tmp_A.block<, >(, ) = -Matrix3d::Identity();

tmp_A.block<, >(, ) = frame_i->second.R.transpose() * frame_j->second.R;

tmp_A.block<, >(, ) = frame_i->second.R.transpose() * dt * Matrix3d::Identity() * lxly;

tmp_b.block<, >(, ) = frame_j->second.pre_integration->delta_v - frame_i->second.R.transpose() * dt * Matrix3d::Identity() * g0; Matrix<double, , > cov_inv = Matrix<double, , >::Zero();

//cov.block<6, 6>(0, 0) = IMU_cov[i + 1];

//MatrixXd cov_inv = cov.inverse();

cov_inv.setIdentity(); MatrixXd r_A = tmp_A.transpose() * cov_inv * tmp_A;

VectorXd r_b = tmp_A.transpose() * cov_inv * tmp_b; A.block<, >(i * , i * ) += r_A.topLeftCorner<, >();

b.segment<>(i * ) += r_b.head<>(); A.bottomRightCorner<, >() += r_A.bottomRightCorner<, >();

b.tail<>() += r_b.tail<>(); A.block<, >(i * , n_state - ) += r_A.topRightCorner<, >();

A.block<, >(n_state - , i * ) += r_A.bottomLeftCorner<, >();

}

A = A * 1000.0;

b = b * 1000.0;

x = A.ldlt().solve(b);

VectorXd dg = x.segment<>(n_state - );

g0 = (g0 + lxly * dg).normalized() * G.norm();

//double s = x(n_state - 1);

}

g = g0;

}

求加速度计偏置

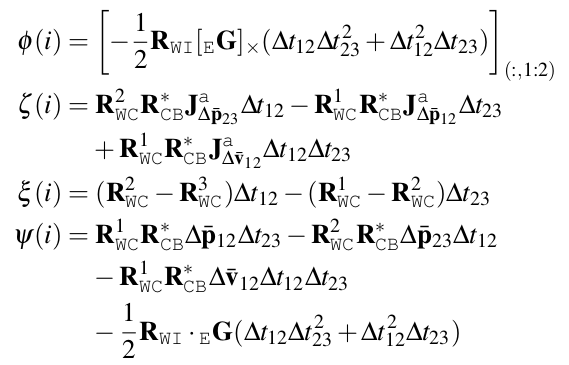

- VINS中并没有计算该值,和外参T一样,是在后面直接进行优化。VI ORB-SLAM中则单独对该值进行了求取,求取方式同样为SVD公式如下:

// Step 3.

// Use gravity magnitude 9.8 as constraint

// gI = [0;0;1], the normalized gravity vector in an inertial frame, NED type with no orientation.

cv::Mat gI = cv::Mat::zeros(,,CV_32F);

gI.at<float>() = ;

// Normalized approx. gravity vecotr in world frame

cv::Mat gwn = gwstar/cv::norm(gwstar);

// Debug log

//cout<<"gw normalized: "<<gwn<<endl; // vhat = (gI x gw) / |gI x gw|

cv::Mat gIxgwn = gI.cross(gwn);

double normgIxgwn = cv::norm(gIxgwn);

cv::Mat vhat = gIxgwn/normgIxgwn;

double theta = std::atan2(normgIxgwn,gI.dot(gwn));

// Debug log

//cout<<"vhat: "<<vhat<<", theta: "<<theta*180.0/M_PI<<endl; Eigen::Vector3d vhateig = Converter::toVector3d(vhat);

Eigen::Matrix3d RWIeig = Sophus::SO3::exp(vhateig*theta).matrix();

cv::Mat Rwi = Converter::toCvMat(RWIeig);

cv::Mat GI = gI*ConfigParam::GetG();//9.8012;

// Solve C*x=D for x=[s,dthetaxy,ba] (1+2+3)x1 vector

cv::Mat C = cv::Mat::zeros(*(N-),,CV_32F);

cv::Mat D = cv::Mat::zeros(*(N-),,CV_32F); for(int i=; i<N-; i++)

{

//KeyFrameInit* pKF1 = vKFInit[i];//vScaleGravityKF[i];

KeyFrameInit* pKF2 = vKFInit[i+];

KeyFrameInit* pKF3 = vKFInit[i+];

// Delta time between frames

double dt12 = pKF2->mIMUPreInt.getDeltaTime();

double dt23 = pKF3->mIMUPreInt.getDeltaTime();

// Pre-integrated measurements

cv::Mat dp12 = Converter::toCvMat(pKF2->mIMUPreInt.getDeltaP());

cv::Mat dv12 = Converter::toCvMat(pKF2->mIMUPreInt.getDeltaV());

cv::Mat dp23 = Converter::toCvMat(pKF3->mIMUPreInt.getDeltaP());

cv::Mat Jpba12 = Converter::toCvMat(pKF2->mIMUPreInt.getJPBiasa());

cv::Mat Jvba12 = Converter::toCvMat(pKF2->mIMUPreInt.getJVBiasa());

cv::Mat Jpba23 = Converter::toCvMat(pKF3->mIMUPreInt.getJPBiasa());

// Pose of camera in world frame

cv::Mat Twc1 = vTwc[i].clone();//pKF1->GetPoseInverse();

cv::Mat Twc2 = vTwc[i+].clone();//pKF2->GetPoseInverse();

cv::Mat Twc3 = vTwc[i+].clone();//pKF3->GetPoseInverse();

// Position of camera center

cv::Mat pc1 = Twc1.rowRange(,).col();

cv::Mat pc2 = Twc2.rowRange(,).col();

cv::Mat pc3 = Twc3.rowRange(,).col();

// Rotation of camera, Rwc

cv::Mat Rc1 = Twc1.rowRange(,).colRange(,);

cv::Mat Rc2 = Twc2.rowRange(,).colRange(,);

cv::Mat Rc3 = Twc3.rowRange(,).colRange(,);

// Stack to C/D matrix

// lambda*s + phi*dthetaxy + zeta*ba = psi

cv::Mat lambda = (pc2-pc1)*dt23 + (pc2-pc3)*dt12;

cv::Mat phi = - 0.5*(dt12*dt12*dt23 + dt12*dt23*dt23)*Rwi*SkewSymmetricMatrix(GI); // note: this has a '-', different to paper

cv::Mat zeta = Rc2*Rcb*Jpba23*dt12 + Rc1*Rcb*Jvba12*dt12*dt23 - Rc1*Rcb*Jpba12*dt23;

cv::Mat psi = (Rc1-Rc2)*pcb*dt23 + Rc1*Rcb*dp12*dt23 - (Rc2-Rc3)*pcb*dt12

- Rc2*Rcb*dp23*dt12 - Rc1*Rcb*dv12*dt23*dt12 - 0.5*Rwi*GI*(dt12*dt12*dt23 + dt12*dt23*dt23); // note: - paper

lambda.copyTo(C.rowRange(*i+,*i+).col());

phi.colRange(,).copyTo(C.rowRange(*i+,*i+).colRange(,)); //only the first 2 columns, third term in dtheta is zero, here compute dthetaxy 2x1.

zeta.copyTo(C.rowRange(*i+,*i+).colRange(,));

psi.copyTo(D.rowRange(*i+,*i+)); // Debug log

//cout<<"iter "<<i<<endl;

} // Use svd to compute C*x=D, x=[s,dthetaxy,ba] 6x1 vector

// C = u*w*vt, u*w*vt*x=D

// Then x = vt'*winv*u'*D

cv::Mat w2,u2,vt2;

// Note w2 is 6x1 vector by SVDecomp()

// C is changed in SVDecomp() with cv::SVD::MODIFY_A for speed

cv::SVDecomp(C,w2,u2,vt2,cv::SVD::MODIFY_A);

// Debug log

//cout<<"u2:"<<endl<<u2<<endl;

//cout<<"vt2:"<<endl<<vt2<<endl;

//cout<<"w2:"<<endl<<w2<<endl; // Compute winv

cv::Mat w2inv=cv::Mat::eye(,,CV_32F);

for(int i=;i<;i++)

{

if(fabs(w2.at<float>(i))<1e-)

{

w2.at<float>(i) += 1e-;

// Test log

cerr<<"w2(i) < 1e-10, w="<<endl<<w2<<endl;

} w2inv.at<float>(i,i) = ./w2.at<float>(i);

}

// Then y = vt'*winv*u'*D

cv::Mat y = vt2.t()*w2inv*u2.t()*D; double s_ = y.at<float>();

cv::Mat dthetaxy = y.rowRange(,);

cv::Mat dbiasa_ = y.rowRange(,);

Vector3d dbiasa_eig = Converter::toVector3d(dbiasa_); // dtheta = [dx;dy;0]

cv::Mat dtheta = cv::Mat::zeros(,,CV_32F);

dthetaxy.copyTo(dtheta.rowRange(,));

Eigen::Vector3d dthetaeig = Converter::toVector3d(dtheta);

// Rwi_ = Rwi*exp(dtheta)

Eigen::Matrix3d Rwieig_ = RWIeig*Sophus::SO3::exp(dthetaeig).matrix();

cv::Mat Rwi_ = Converter::toCvMat(Rwieig_);

VI ORB-SLAM初始化与VINS初始化对比(将vi orb-slam初始化方法移植到vins中)的更多相关文章

- 关于跨进程使用回调函数的研究:以跨进程获取Richedit中RTF流为例(在Delphi 初始化每一个TWinControl 对象时,将会在窗体 的属性(PropData)中加入一些标志,DLL的HInstance的值与HOST 进程的HInstance并不一致)

建议先参考我上次写的博文跨进程获取Richedit中Text: 获得QQ聊天输入框中的内容 拿到这个问题,我习惯性地会从VCL内核开始分析.找到TRichEdit声明的单元,分析TRichEdit保存 ...

- SLAM+语音机器人DIY系列:(六)SLAM建图与自主避障导航——2.google-cartographer机器人SLAM建图

摘要 通过前面的基础学习,本章进入最为激动的机器人自主导航的学习.在前面的学习铺垫后,终于迎来了最大乐趣的时刻,就是赋予我们的miiboo机器人能自由行走的生命.本章将围绕机器人SLAM建图.导航避障 ...

- Linux下对比两个文件夹的方法

最近拿到一份源代码,要命的是这份源代码是浅克隆模式的git包,所以无法完整显示里面的修改的内容. 今天花了一点点时间,找了一个在Linux对比两个文件夹的方法. 其实方法很简单,用meld 去对比两个 ...

- (转) SLAM系统的研究点介绍 与 Kinect视觉SLAM技术介绍

首页 视界智尚 算法技术 每日技术 来打我呀 注册 SLAM系统的研究点介绍 本文主要谈谈SLAM中的各个研究点,为研究生们(应该是博客的多数读者吧)作一个提纲挈领的摘要.然后,我 ...

- 为了让vi命令也可以使用vim的配置,需要修改 vi /etc/bashrc 增加一行 alias vi='vim'此时,经过上面配置已经可以显示语法高亮了

为了让vi命令也可以使用vim的配置,需要修改 vi /etc/bashrc 增加一行 aliasvi='vim'此时,经过上面配置已经可以显示语法高亮了

- easyui的combobox下拉框初始化默认值以及保持该值一直显示的方法

easyui的combobox下拉框默认初始值是空,下面是实现从远程加载数据之后初始化默认值,以及让该值一直排在下拉框的最顶部的方式. 目前的需求是需要在初始化的时候添加"全部数据库&quo ...

- 一天搞定CSS: 标签样式初始化(CSS reset)及淘宝样式初始化代码--09

样式初始化:是指对HTML中某些标签的默认样式进行清除 样式初始化目的: 不同浏览器的默认样式不一样,若不清理,会导致相同的代码在浏览器中解析结果不一样,为了避免这种情况,所以需要进行样式初始化. 代 ...

- k8s中初始化容器(init container)的作用及其使用方法

概述 在容器的部署过程中,有的时候需要在容器运行之前进行一些预配置的工作,比如下载配置,判断某些服务是否启动,修改配置等一些准备的工作,想要实现这些功能,在k8s中可以使用初始化容器,在应用容器运行之 ...

- Delphi会自动初始化全局变量和类成员变量,但不初始化局部变量

If you don't explicitly initialize a global variable, the compiler initializes it to 0. Object insta ...

随机推荐

- Entity Framework 6.X实现记录执行的SQL功能

Entity Framework在使用时,很多时间操纵的是Model,并没有写sql语句,有时候为了调试或优化等,又需要追踪Entity framework自动生成的sql(最好还能记录起来,方便出错 ...

- 执行SQL的DbHelperSQL

using System; using System.Collections; using System.Collections.Specialized; using System.Data; usi ...

- [翻译] NMBottomTabBarController

NMBottomTabBarController A customisable tab bar controller for iOS written in Objective C. It uses a ...

- 统计过程控制与评价 Cpk、SPC、PPM

Cpk(Process capability index)--工序能力指数 SPC(Statisical Process Control)--工艺过程统计受控状态分析 PPM(Parts Per Mi ...

- QT 登录记住密码方法之一:Qt QSettings读写配置文件

不过本文写的是明文保存,最好还是加密一下,以防文件被非法读取 /**登录初始化的时候处理这部分操作*/ Settings cfg("user.ini",QSettings::Ini ...

- JavaScript创建对象的6种方式

JavaScript创建对象简单的说,无非就是使用内置对象(Object)或各种自定义对象,当然还可以用JSON,但写法有很多种,也能混合使用. 1.对象字面量的方式 person = {name : ...

- VMWare虚拟机下为Ubuntu 12.04.1配置静态IP(NAT方式)

背景 在虚拟机下运行操作系统,尤其是Linux系统已经是非常常见的做法.有时你想在虚拟机下搭建一个(模拟)服务器来供主机访问,比如搭建一个telnet/ssh.此时你会发现,每次启动虚拟机,VMWar ...

- centos下mysqlreport安装和使用

首先查看你的机器是否安装了perl: #perl -v 显示版本号即表示已安装 然后: #yum install perl-DBD-mysql perl-DBI #yum install mysqlr ...

- Hadoop学习之路(十四)MapReduce的核心运行机制

概述 一个完整的 MapReduce 程序在分布式运行时有两类实例进程: 1.MRAppMaster:负责整个程序的过程调度及状态协调 2.Yarnchild:负责 map 阶段的整个数据处理流程 3 ...

- 最长公共子序列&最长公共子串

首先区别最长公共子串和最长公共子序列 LCS(计算机科学算法:最长公共子序列)_百度百科 最长公共子串,这个子串要求在原字符串中是连续的.而最长公共子序列则并不要求连续. 最长公共子序列: http ...