TensorFlow从入门到理解(五):你的第一个循环神经网络RNN(回归例子)

运行代码:

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt BATCH_START = 0

TIME_STEPS = 20

BATCH_SIZE = 50

INPUT_SIZE = 1

OUTPUT_SIZE = 1

CELL_SIZE = 10

LR = 0.006 def get_batch():

global BATCH_START, TIME_STEPS

# xs shape (50batch, 20steps)

xs = np.arange(BATCH_START, BATCH_START+TIME_STEPS*BATCH_SIZE).reshape((BATCH_SIZE, TIME_STEPS)) / (10*np.pi)

seq = np.sin(xs)

res = np.cos(xs)

BATCH_START += TIME_STEPS

# plt.plot(xs[0, :], res[0, :], 'r', xs[0, :], seq[0, :], 'b--')

# plt.show()

# returned seq, res and xs: shape (batch, step, input)

return [seq[:, :, np.newaxis], res[:, :, np.newaxis], xs] class LSTMRNN(object):

def __init__(self, n_steps, input_size, output_size, cell_size, batch_size):

self.n_steps = n_steps

self.input_size = input_size

self.output_size = output_size

self.cell_size = cell_size

self.batch_size = batch_size

with tf.name_scope('inputs'):

self.xs = tf.placeholder(tf.float32, [None, n_steps, input_size], name='xs')

self.ys = tf.placeholder(tf.float32, [None, n_steps, output_size], name='ys')

with tf.variable_scope('in_hidden'):

self.add_input_layer()

with tf.variable_scope('LSTM_cell'):

self.add_cell()

with tf.variable_scope('out_hidden'):

self.add_output_layer()

with tf.name_scope('cost'):

self.compute_cost()

with tf.name_scope('train'):

self.train_op = tf.train.AdamOptimizer(LR).minimize(self.cost) def add_input_layer(self,):

l_in_x = tf.reshape(self.xs, [-1, self.input_size], name='2_2D') # (batch*n_step, in_size)

# Ws (in_size, cell_size)

Ws_in = self._weight_variable([self.input_size, self.cell_size])

# bs (cell_size, )

bs_in = self._bias_variable([self.cell_size,])

# l_in_y = (batch * n_steps, cell_size)

with tf.name_scope('Wx_plus_b'):

l_in_y = tf.matmul(l_in_x, Ws_in) + bs_in

# reshape l_in_y ==> (batch, n_steps, cell_size)

self.l_in_y = tf.reshape(l_in_y, [-1, self.n_steps, self.cell_size], name='2_3D') def add_cell(self):

lstm_cell = tf.contrib.rnn.BasicLSTMCell(self.cell_size, forget_bias=1.0, state_is_tuple=True)

with tf.name_scope('initial_state'):

self.cell_init_state = lstm_cell.zero_state(self.batch_size, dtype=tf.float32)

self.cell_outputs, self.cell_final_state = tf.nn.dynamic_rnn(

lstm_cell, self.l_in_y, initial_state=self.cell_init_state, time_major=False) def add_output_layer(self):

# shape = (batch * steps, cell_size)

l_out_x = tf.reshape(self.cell_outputs, [-1, self.cell_size], name='2_2D')

Ws_out = self._weight_variable([self.cell_size, self.output_size])

bs_out = self._bias_variable([self.output_size, ])

# shape = (batch * steps, output_size)

with tf.name_scope('Wx_plus_b'):

self.pred = tf.matmul(l_out_x, Ws_out) + bs_out def compute_cost(self):

losses = tf.contrib.legacy_seq2seq.sequence_loss_by_example(

[tf.reshape(self.pred, [-1], name='reshape_pred')],

[tf.reshape(self.ys, [-1], name='reshape_target')],

[tf.ones([self.batch_size * self.n_steps], dtype=tf.float32)],

average_across_timesteps=True,

softmax_loss_function=self.ms_error,

name='losses'

)

with tf.name_scope('average_cost'):

self.cost = tf.div(

tf.reduce_sum(losses, name='losses_sum'),

self.batch_size,

name='average_cost')

tf.summary.scalar('cost', self.cost) @staticmethod

def ms_error(labels, logits):

return tf.square(tf.subtract(labels, logits)) def _weight_variable(self, shape, name='weights'):

initializer = tf.random_normal_initializer(mean=0., stddev=1.,)

return tf.get_variable(shape=shape, initializer=initializer, name=name) def _bias_variable(self, shape, name='biases'):

initializer = tf.constant_initializer(0.1)

return tf.get_variable(name=name, shape=shape, initializer=initializer) if __name__ == '__main__':

model = LSTMRNN(TIME_STEPS, INPUT_SIZE, OUTPUT_SIZE, CELL_SIZE, BATCH_SIZE)

sess = tf.Session()

merged = tf.summary.merge_all()

writer = tf.summary.FileWriter("logs", sess.graph)

init = tf.global_variables_initializer()

sess.run(init)

# relocate to the local dir and run this line to view it on Chrome (http://0.0.0.0:6006/):

# $ tensorboard --logdir='logs' plt.ion()

plt.show()

for i in range(200):

seq, res, xs = get_batch()

if i == 0:

feed_dict = {

model.xs: seq,

model.ys: res,

# create initial state

}

else:

feed_dict = {

model.xs: seq,

model.ys: res,

model.cell_init_state: state # use last state as the initial state for this run

} _, cost, state, pred = sess.run(

[model.train_op, model.cost, model.cell_final_state, model.pred],

feed_dict=feed_dict) # plotting

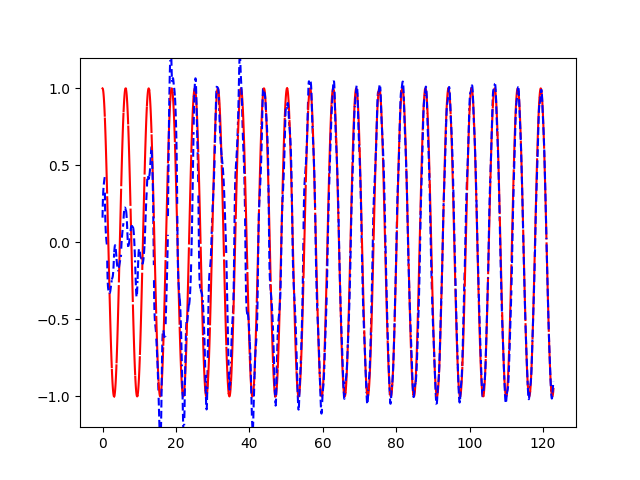

plt.plot(xs[0, :], res[0].flatten(), 'r', xs[0, :], pred.flatten()[:TIME_STEPS], 'b--')

plt.ylim((-1.2, 1.2))

plt.draw()

plt.pause(0.3) if i % 20 == 0:

print('cost: ', round(cost, 4))

result = sess.run(merged, feed_dict)

writer.add_summary(result, i)

运行结果:

TensorFlow从入门到理解(五):你的第一个循环神经网络RNN(回归例子)的更多相关文章

- TensorFlow从入门到理解(四):你的第一个循环神经网络RNN(分类例子)

运行代码: import tensorflow as tf from tensorflow.examples.tutorials.mnist import input_data # set rando ...

- TensorFlow从入门到理解

一.<莫烦Python>学习笔记: TensorFlow从入门到理解(一):搭建开发环境[基于Ubuntu18.04] TensorFlow从入门到理解(二):你的第一个神经网络 Tens ...

- 通过keras例子理解LSTM 循环神经网络(RNN)

博文的翻译和实践: Understanding Stateful LSTM Recurrent Neural Networks in Python with Keras 正文 一个强大而流行的循环神经 ...

- 基于TensorFlow的循环神经网络(RNN)

RNN适用场景 循环神经网络(Recurrent Neural Network)适合处理和预测时序数据 RNN的特点 RNN的隐藏层之间的节点是有连接的,他的输入是输入层的输出向量.extend(上一 ...

- TensorFlow从入门到理解(六):可视化梯度下降

运行代码: import tensorflow as tf import numpy as np import matplotlib.pyplot as plt from mpl_toolkits.m ...

- TensorFlow从入门到理解(三):你的第一个卷积神经网络(CNN)

运行代码: from __future__ import print_function import tensorflow as tf from tensorflow.examples.tutoria ...

- TensorFlow从入门到理解(二):你的第一个神经网络

运行代码: from __future__ import print_function import tensorflow as tf import numpy as np import matplo ...

- TensorFlow从入门到理解(一):搭建开发环境【基于Ubuntu18.04】

*注:教程及本文章皆使用Python3+语言,执行.py文件都是用终端(如果使用Python2+和IDE都会和本文描述有点不符) 一.安装,测试,卸载 TensorFlow官网介绍得很全面,很完美了, ...

- 循环神经网络-RNN入门

首先学习RNN需要一定的基础,即熟悉普通的前馈神经网络,特别是BP神经网络,最好能够手推. 所谓前馈,并不是说信号不能反向传递,而是网络在拓扑结构上不存在回路和环路. 而RNN最大的不同就是存在环路. ...

随机推荐

- linux server 产生大量 Too many open files CLOSE_WAIT激增

情景描述:系统产生大量“Too many open files” 原因分析:在服务器与客户端通信过程中,因服务器发生了socket未关导致的closed_wait发生,致使监听port打开的句柄数到了 ...

- Gnome添加Open with Code菜单

解决方法 测试环境: Manjaro Linux Rolling at 2018-08-31 19:04:49 Microsoft Visual Stdio Code 1.26.1 Bulid: 20 ...

- JavaScript ES6 核心功能一览

JavaScript 在过去几年里发生了很大的变化.这里介绍 12 个你马上就能用的新功能. JavaScript 历史 新的语言规范被称作 ECMAScript 6.也称为 ES6 或 ES2015 ...

- ECharts使用心得总结

https://blog.csdn.net/whiteosk/article/details/52684053 项目中的图表形式很多,基本可以在ECharts中找到相应实例,但UI设计图中的图表跟百度 ...

- 多文件协作,extern、static、头文件

多个cpp文件协同工作.使用外部函数.变量时,必须先声明再使用.声明外部函数(一般在main.cpp中),extern可省略(主函数中默认可访问外部函数)extern void RectArea(); ...

- jQuery中mouseleave和mouseout的区别详解

很多人在使用jQuery实现鼠标悬停效果时,一般都会用到mouseover和mouseout这对事件.而在实现过程中,可能会出现一些不理想的状况. 先看下使用mouseout的效果: <p> ...

- RPC与REST的区别

https://blog.csdn.net/douliw/article/details/52592188 RPC是以动词为中心的, REST是以名词为中心的, 此处的 动词指的是一些方法, 名词是指 ...

- 基于RBAC模型的权限系统设计(Github开源项目)

RBAC(基于角色的访问控制):英文名称Rose base Access Controller.本博客介绍这种模型的权限系统设计.取消了用户和权限的直接关联,改为通过用户关联角色.角色关联权限的方法来 ...

- Nginx+Keeplived双机热备(主从模式)

Nginx+Keeplived双机热备(主从模式) 参考资料: http://www.cnblogs.com/kevingrace/p/6138185.html 双机高可用一般是通过虚拟IP(漂移IP ...

- 剑指Offer_编程题_2

题目描述 请实现一个函数,将一个字符串中的空格替换成“%20”.例如,当字符串为We Are Happy.则经过替换之后的字符串为We%20Are%20Happy. class Solution ...