Off-heap Memory in Apache Flink and the curious JIT compiler

https://flink.apache.org/news/2015/09/16/off-heap-memory.html

Running data-intensive code in the JVM and making it well-behaved is tricky. Systems that put billions of data objects naively onto the JVM heap face unpredictable OutOfMemoryErrors and Garbage Collection stalls. Of course, you still want to to keep your data in memory as much as possible, for speed and responsiveness of the processing applications. In that context, “off-heap” has become almost something like a magic word to solve these problems.

In this blog post, we will look at how Flink exploits off-heap memory.

The feature is part of the upcoming release, but you can try it out with the latest nightly builds. We will also give a few interesting insights into the behavior for Java’s JIT compiler for highly optimized methods and loops.

Why actually bother with off-heap memory?

Given that Flink has a sophisticated level of managing on-heap memory, why do we even bother with off-heap memory? It is true that “out of memory” has been much less of a problem for Flink because of its heap memory management techniques. Nonetheless, there are a few good reasons to offer the possibility to move Flink’s managed memory out of the JVM heap:

Very large JVMs (100s of GBytes heap memory) tend to be tricky. It takes long to start them (allocate and initialize heap) and garbage collection stalls can be huge (minutes). While newer incremental garbage collectors (like G1) mitigate this problem to some extend, an even better solution is to just make the heap much smaller and allocate Flink’s managed memory chunks outside the heap.

I/O and network efficiency: In many cases, we write MemorySegments to disk (spilling) or to the network (data transfer). Off-heap memory can be written/transferred with zero copies, while heap memory always incurs an additional memory copy.

Off-heap memory can actually be owned by other processes. That way, cached data survives process crashes (due to user code exceptions) and can be used for recovery. Flink does not exploit that, yet, but it is interesting future work.

Flink传统的基于‘on-heap’ 内存管理机制,已经可以解决很多的java关于‘out of memory’或gc的问题,那我们为何还要用 ‘off-heap’的技术,

1. very large的JVM会要很长的启动时间,并且gc的代价也会很大

2. heap在写磁盘或network时,至少要一次copy,而off-heap可以实现zero copy

3. off-heap内存是进程共享的,JVM进程crash不会丢失数据

The opposite question is also valid. Why should Flink ever not use off-heap memory?

On-heap is easier and interplays better with tools. Some container environments and monitoring tools get confused when the monitored heap size does not remotely reflect the amount of memory used by the process.

Short lived memory segments are cheaper on the heap. Flink sometimes needs to allocate some short lived buffers, which works cheaper on the heap than off-heap.

Some operations are actually a bit faster on heap memory (or the JIT compiler understands them better).

为何Flink不直接用off-heap memory?

越强大的东西,一般都越麻烦,

所以一般case下,用on-heap就够了

The off-heap Memory Implementation

Given that all memory intensive internal algorithms are already implemented against the MemorySegment, our implementation to switch to off-heap memory is actually trivial.

You can compare it to replacing allByteBuffer.allocate(numBytes) calls with ByteBuffer.allocateDirect(numBytes).

In Flink’s case it meant that we made the MemorySegment abstract and added the HeapMemorySegment and OffHeapMemorySegment subclasses.

TheOffHeapMemorySegment takes the off-heap memory pointer from a java.nio.DirectByteBuffer and implements its specialized access methods using sun.misc.Unsafe.

We also made a few adjustments to the startup scripts and the deployment code to make sure that the JVM is permitted enough off-heap memory (direct memory, -XX:MaxDirectMemorySize).

使用off-heap在内存管理机制上和使用on-heap并没有太大的区别,

相比于NIO,使用ByteBuffer.allocate(numBytes)来分配heap内存,而用ByteBuffer.allocateDirect(numBytes)来分配off-heap内存

Flink,对MemorySegment,生成两个子类,HeapMemorySegment and OffHeapMemorySegment

其中OffHeapMemorySegment,以java.nio.DirectByteBuffer的形式使用off-heap memory, 通过sun.misc.Unsafe接口来操作这些memory

Understanding the JIT and tuning the implementation

The MemorySegment was (before our change) a standalone class, it was final (had no subclasses). Via Class Hierarchy Analysis (CHA), the JIT compiler was able to determine that all of the accessor method calls go to one specific implementation. That way, all method calls can be perfectly de-virtualized and inlined, which is essential to performance, and the basis for all further optimizations (like vectorization of the calling loop).

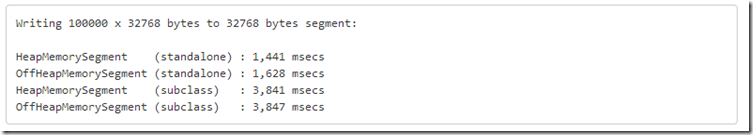

With two different memory segments loaded at the same time, the JIT compiler cannot perform the same level of optimization any more, which results in a noticeable difference in performance: A slowdown of about 2.7 x in the following example:

这里是考虑性能优化问题,

这里提出的一个问题就是,如果MemorySegment是standalone class类,没有之类,那么会比较高效,因为编译的时候,他所调研的method都是确定的,可以提前做优化;

如果具有两个子类,那么只有到真正运行到时候才知道是哪个子类,这样就不能提前做优化;

实际测,性能的差距在2.7倍左右

解决方法:

Approach 1: Make sure that only one memory segment implementation is ever loaded.

We re-structured the code a bit to make sure that all places that produce long-lived and short-lived memory segments instantiate the same MemorySegment subclass (Heap- or Off-Heap segment). Using factories rather than directly instantiating the memory segment classes, this was straightforward.

如果在代码里面只可能实例化其中的一个子类,另一个子类根本就没有被实例化过,那么JIT会意识到,并做优化;我们可以用factories来实例化对象,这样更方便;

Approach 2: Write one segment that handles both heap and off-heap memory

We created a class HybridMemorySegment which handles transparently both heap- and off-heap memory. It can be initialized either with a byte array (heap memory), or with a pointer to a memory region outside the heap (off-heap memory).

第二种方法就是用HybridMemorySegment,同时处理heap和off-heap,这样就不需要子类

并且有tricky的方式,可以做到透明的处理两种memory

细节看原文

Off-heap Memory in Apache Flink and the curious JIT compiler的更多相关文章

- Peeking into Apache Flink's Engine Room

http://flink.apache.org/news/2015/03/13/peeking-into-Apache-Flinks-Engine-Room.html Join Processin ...

- Flink监控:Monitoring Apache Flink Applications

This post originally appeared on the Apache Flink blog. It was reproduced here under the Apache Lice ...

- 深入理解Apache Flink

Apache Flink(下简称Flink)项目是大数据处理领域最近冉冉升起的一颗新星,其不同于其他大数据项目的诸多特性吸引了越来越多人的关注.本文将深入分析Flink的一些关键技术与特性,希望能够帮 ...

- 深入理解Apache Flink核心技术

深入理解Apache Flink核心技术 2016年02月18日 17:04:03 阅读数:1936 标签: Apache-Flink数据流程序员JVM 版权声明:本文为博主原创文章,未经博主允许 ...

- Apache Flink 开发环境搭建和应用的配置、部署及运行

https://mp.weixin.qq.com/s/noD2Jv6m-somEMtjWTJh3w 本文是根据 Apache Flink 系列直播课程整理而成,由阿里巴巴高级开发工程师沙晟阳分享,主要 ...

- Apache Flink 进阶(八):详解 Metrics 原理与实战

本文由 Apache Flink Contributor 刘彪分享,本文对两大问题进行了详细的介绍,即什么是 Metrics.如何使用 Metrics,并对 Metrics 监控实战进行解释说明. 什 ...

- Apache Flink

Flink 剖析 1.概述 在如今数据爆炸的时代,企业的数据量与日俱增,大数据产品层出不穷.今天给大家分享一款产品—— Apache Flink,目前,已是 Apache 顶级项目之一.那么,接下来, ...

- 新一代大数据处理引擎 Apache Flink

https://www.ibm.com/developerworks/cn/opensource/os-cn-apache-flink/index.html 大数据计算引擎的发展 这几年大数据的飞速发 ...

- Managing Large State in Apache Flink®: An Intro to Incremental Checkpointing

January 23, 2018- Apache Flink, Flink Features Stefan Richter and Chris Ward Apache Flink was purpos ...

随机推荐

- 013_VM+WinDbg安装

预计平均三天一课,录制过程中,大纲会实时更新(更改) 主讲:郁金香灬老师 QQ150330575 开发环境:VC6,VS2003,VS2008 www.yjxsoft.net www.yjxsoft ...

- Tomcat安装及配置

用来进行web开发的工具有很多,Tomcat是其中一个开源的且免费的java Web服务器,是Apache软件基金会的项目.电脑上安装配置Tomcat的方法和java有些相同,不过首先需要配置好jav ...

- Android 在资源文件(res/strings.xml)定义一维数组,间接定义二维数组

经常我们会在资源文件(res/strings.xml)定义字符串,一维数组,那定义二维数组?直接定义二维数组没找到,可以间接定义. 其实很简单,看过用过一次就可以记住了,一维数组估计大家经常用到,但是 ...

- 水题 HDOJ 4727 The Number Off of FFF

题目传送门 /* 水题:判断前后的差值是否为1,b[i]记录差值,若没有找到,则是第一个出错 */ #include <cstdio> #include <iostream> ...

- BZOJ3821 : 玄学

对操作建立线段树,每个节点维护一个有序的操作表,表示用$[l,r]$的操作在每段区间上的作用效果. 对于一个线段树节点,合并左右儿子信息只在该区间刚刚被填满时进行,利用归并排序,时间复杂度为$O(n\ ...

- BZOJ4134 : ljw和lzr的hack比赛

设$f[x]$为$x$子树里的子游戏的sg值,$h[x]$为$x$所有儿子节点$f[x]$的异或和,则: $f[x]=mex(y到x路径上所有点的h的异或和\ xor\ y到x路径上所有点的f的异或和 ...

- robotium 新建 android 测试项目:

注意:新建项目后再运行前一定要修改Manifest文件中的instrumentation 中的target package, 这个是测试的入口 1. 程序开始要通知系统我要测的app是什么 如何知道a ...

- cocos2d 中判断CGPoint或者CGSize是否相等

cocos2d 中判断CGPoint是否相等 调用CGPointEqualToPoint(point1, point2) 判断CGSize是否相等 调用CGSizeEqualToSize(size1, ...

- winform学习之----图片控件应用(上一张,下一张)

示例1: int i = 0; string[] path = Directory.GetFiles(@"C:\Users\Administrator\Desktop\图片&q ...

- Ubuntu SSH root user cannot login

Open /etc/ssh/sshd_config and check if PermitRootLogin is set to yes. If not, then set it to yes and ...