Capturing a Camera Video Stream with HTML5

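Recently, to support a project in my department, I used HTML5 to capture the video stream from a camera and forward it to a video recognition server for further processing.

Capturing the video data was a bit of a shot in the dark at first, because I had never looked into controlling a camera and reading its video stream from HTML5.

I went through the so-called experience posts online. Most of them say the solution is based on WebRTC. That is not wrong, but it is not quite right either: strictly speaking, the technique used here relies on the HTML5 navigator object (getUserMedia) and the MediaRecorder API, with FileReader and Blob as supporting tools.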
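As a minimal sketch of the entry point: newer browsers expose a promise-based `navigator.mediaDevices.getUserMedia`, while the callback-style `navigator.getUserMedia` used later in this post is the legacy form. The helper below tries the modern API first and falls back to the prefixed legacy functions. The name `getCameraStream` and the injected `nav` parameter are hypothetical, chosen only so the logic can be exercised outside a browser.

```javascript
// Returns a Promise that resolves with a MediaStream.
// `nav` is normally window.navigator; it is a parameter here (an assumption
// of this sketch) so the function can be tested with a stand-in object.
function getCameraStream(constraints, nav) {
  // Modern, promise-based API
  if (nav && nav.mediaDevices && typeof nav.mediaDevices.getUserMedia === 'function') {
    return nav.mediaDevices.getUserMedia(constraints);
  }
  // Legacy, callback-based API with vendor prefixes
  var legacy = nav && (nav.getUserMedia || nav.webkitGetUserMedia ||
                       nav.mozGetUserMedia || nav.msGetUserMedia);
  if (!legacy) {
    return Promise.reject(new Error('getUserMedia is not supported'));
  }
  return new Promise(function (resolve, reject) {
    legacy.call(nav, constraints, resolve, reject);
  });
}
```

In a page this would be called as `getCameraStream({ audio: false, video: true }, navigator).then(startRecording)`, where `startRecording` is the function from main.js further down.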

References (their content is not repeated here):

navigator.getUserMedia: https://developer.mozilla.org/en-US/docs/Web/API/Navigator/getUserMedia

MediaRecorder: https://developer.mozilla.org/en-US/docs/Web/API/MediaStream_Recording_API

FileReader: https://developer.mozilla.org/en-US/docs/Web/API/FileReader

Blob: https://developer.mozilla.org/en-US/docs/Web/API/Blob

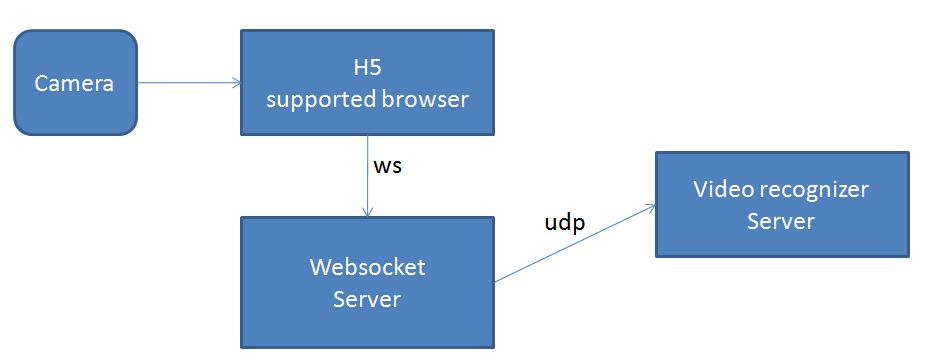

这个任务的实现逻辑,前端搭建一个Java的小Web应用,H5视频采集之后,通过WebSocket的方式,将视频流数据传递到Java的web小应用后台,然后从后台向视频识别服务器通过UDP传递视频数据。基本的架构如下图:
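The last hop of this pipeline uses UDP, and a single UDP datagram over IPv4 carries at most 65,507 bytes of payload, while a recorded chunk may be larger. As a rough sketch that is not part of the original project, a chunk could be cut into datagram-sized pieces before sending. The code is JavaScript only to match the front-end examples in this post; in this architecture the split would happen in the Java backend. `splitIntoDatagrams` and `MAX_UDP_PAYLOAD` are hypothetical names.

```javascript
// 65535 (max IP packet) - 20 (IPv4 header) - 8 (UDP header) = 65507
var MAX_UDP_PAYLOAD = 65507;

// Splits a Uint8Array into views of at most `maxPayload` bytes each.
// The views share memory with `bytes`; nothing is copied.
function splitIntoDatagrams(bytes, maxPayload) {
  var size = maxPayload || MAX_UDP_PAYLOAD;
  var parts = [];
  for (var offset = 0; offset < bytes.length; offset += size) {
    parts.push(bytes.subarray(offset, Math.min(offset + size, bytes.length)));
  }
  return parts;
}
```

UDP guarantees neither delivery nor ordering, so a receiver that needs the complete stream would have to add its own sequence numbers on top of this.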

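A note on sources: the video capture part of this post is adapted from an English-language post, which I modified to get the behavior our project needs. The original post: https://addpipe.com/blog/mediarecorder-api/

Now for the front-end page and the code. In broad terms, my software stack is jersey2 + freemarker + spring.

The front-end page: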

<!DOCTYPE html>

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8" />

<meta name="viewport" content="width=device-width, minimum-scale=1.0, initial-scale=1.0, user-scalable=yes">

<meta name="mobile-web-app-capable" content="yes">

<meta id="theme-color" name="theme-color" content="#fff">

<base target="_blank">

<title>Media Recorder API Demo</title>

<link rel="stylesheet" href="${basePath}/css/video/main.css" /> <!-- basePath is the root URL of the web project, for example http://10.90.9.20:9080/RDConsumer -->

<style>

a#downloadLink {

display: block;

margin: 1em ;

min-height: .2em;

}

p#data {

min-height: 6em;

}

</style>

</head>

<body>

<div id="container">

<div style = "text-align:center;">

<h1>Media Recorder API Demo </h1>

<h2>Record a 640x480 video using the media recorder API implemented in Firefox and Chrome</h2>

<video controls autoplay></video><br>

<button id="rec" onclick="onBtnRecordClicked()">Record</button>

<button id="pauseRes" onclick="onPauseResumeClicked()" disabled>Pause</button>

<button id="stop" onclick="onBtnStopClicked()" disabled>Stop</button>

</div>

<a id="downloadLink" download="mediarecorder.webm" name="mediarecorder.webm" href></a>

<p id="data"></p>

<script src="${basePath}/js/jquery-1.11.1.min.js"></script>

<script src="${basePath}/js/video/main.js"></script>

<h2>Works on:</h2>

<p><ul><li>Firefox 30 and up</li><li>Chrome , (video only, enable <em>experimental Web Platform features</em> at <a href="chrome://flags/#enable-experimental-web-platform-features">chrome://flags</a>)</li><li>Chrome 49+</li></ul></p>

<h2><span style="color:red">Issues:</span></h2>

<p><ul><li>Pause does not stop audio recording on Chrome ,</li></ul></p>

<h2>Containers & codecs:</h2>

<p><table style="width:100%">

<thead>

<tr>

<th> </th><th>Chrome </th><th>Chrome </th><th>Chrome +</th><th>Chrome +</th><th>Firefox +</th>

</tr>

</thead>

<tbody>

<tr>

<td><strong>Container</strong></td><td>webm</td><td>webm</td><td>webm</td><td>webm</td><td>webm</td>

</tr>

<tr>

<td><strong>Video</strong></td><td>VP8</td><td>VP8</td><td>VP8/VP9</td><td>VP8/VP9/H264</td><td>VP8</td>

</tr>

<tr>

<td><strong>Audio</strong></td><td>none</td><td>none</td><td>Opus @ 48kHz</td><td>Opus @ 48kHz</td><td>Vorbis @ 44.1 kHz</td>

</tr>

</tbody>

</table>

</p>

<h2>Links:</h2>

<p>

<ul>

<li>Article: <a target="_blank" href="https://addpipe.com/blog/mediarecorder-api/">https://addpipe.com/blog/mediarecorder-api/</a></li>

<li>GitHub: <a target="_blank" href="https://github.com/addpipe/Media-Recorder-API-Demo">https://github.com/addpipe/Media-Recorder-API-Demo</a></li>

<li>W3C Draft: <a target="_blank" href="http://w3c.github.io/mediacapture-record/MediaRecorder.html">http://w3c.github.io/mediacapture-record/MediaRecorder.html</a></li>

<li>Media Recorder API at 65% penetration thanks to Chrome: <a target="_blank" href="https://addpipe.com/blog/media-recorder-api-is-now-supported-by-65-of-all-desktop-internet-users/">https://addpipe.com/blog/media-recorder-api-is-now-supported-by-65-of-all-desktop-internet-users/</a></li>

</ul>

</p>

</div>

</body>

</html>

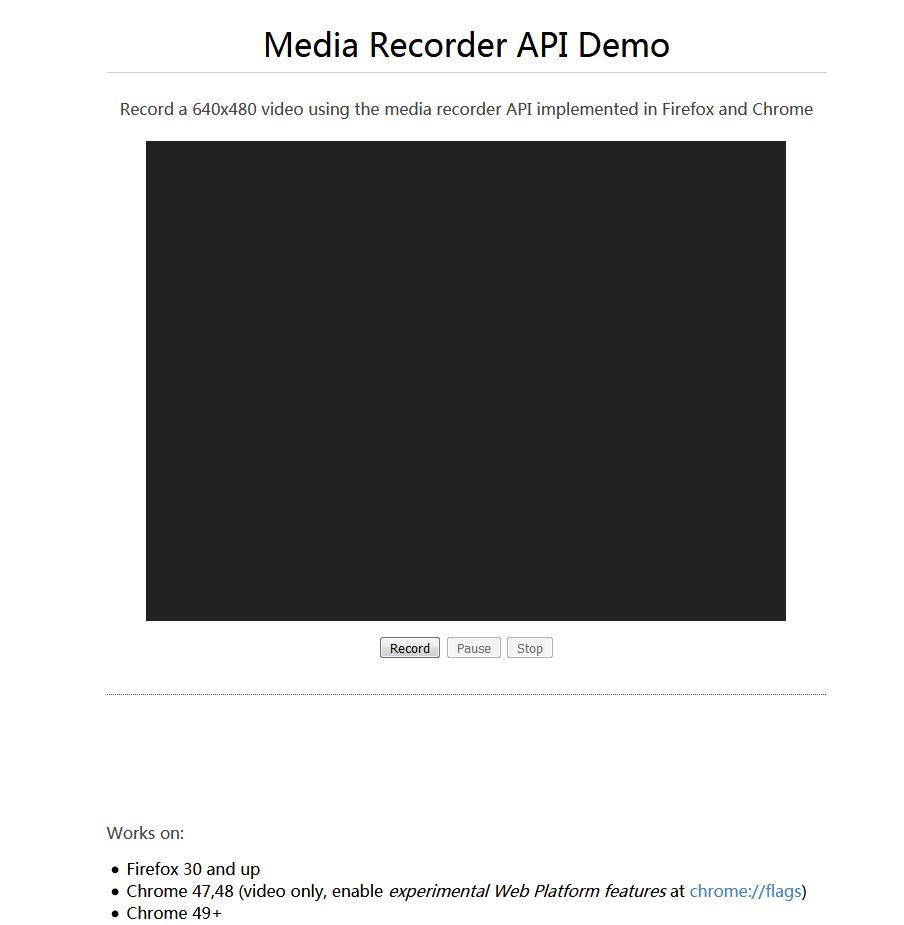

前端界面的效果图:

JS的代码(重点之一在这个JS里面的红色部分,下面代码是main.js的正文内容):

'use strict';

/* globals MediaRecorder */

// Spec is at http://dvcs.w3.org/hg/dap/raw-file/tip/media-stream-capture/RecordingProposal.html

navigator.getUserMedia = navigator.getUserMedia || navigator.webkitGetUserMedia || navigator.mozGetUserMedia || navigator.msGetUserMedia;

if(getBrowser() == "Chrome"){

var constraints = {"audio": true, "video": { "mandatory": { "minWidth": 640, "maxWidth": 640, "minHeight": 480,"maxHeight": 480 }, "optional": [] } };//Chrome

}else if(getBrowser() == "Firefox"){

var constraints = {audio: false, video: { width: { min: 640, ideal: 640, max: 640 }, height: { min: 480, ideal: 480, max: 480 }}}; //Firefox

}

var recBtn = document.querySelector('button#rec');

var pauseResBtn = document.querySelector('button#pauseRes');

var stopBtn = document.querySelector('button#stop');

var videoElement = document.querySelector('video');

var dataElement = document.querySelector('#data');

var downloadLink = document.querySelector('a#downloadLink');

videoElement.controls = false;

function errorCallback(error){

console.log('navigator.getUserMedia error: ', error);

}

/*

var mediaSource = new MediaSource();

mediaSource.addEventListener('sourceopen', handleSourceOpen, false);

var sourceBuffer;

*/

var mediaRecorder;

var chunks = [];

var count = 0;

var wsurl = "ws://10.90.9.20:9080/RDConsumer/websocket";

var ws = null;

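function createWs(){
    var url = wsurl;
    if ('WebSocket' in window) {
        ws = new WebSocket(url);
    } else if ('MozWebSocket' in window) {
        ws = new MozWebSocket(url);
    } else {
        console.log("Your browser does not support WebSocket.");
        return ;
    }
}

function init() {
    if (ws != null) {
        console.log("Already connected");
        return ;
    }
    createWs();
    if (ws == null) {
        return ; // WebSocket is not available in this browser
    }
    // Send and receive binary data as ArrayBuffer
    ws.binaryType = "arraybuffer";
    ws.onopen = function() {
        console.log("onopen: connected");
    }
    ws.onmessage = function(e) {
        console.log(e.data.toString());
    }
    ws.onclose = function(e) {
        console.log("onclose: closed");
        ws = null;
        // Reconnect through init() so that the new socket also gets its handlers.
        // This covers connections that drop unexpectedly in the middle of a video transfer.
        setTimeout(init, 1000);
    }
    ws.onerror = function(e) {
        // A close event follows a connection error, so reconnecting is left to onclose.
        console.log("onerror: error");
    }
}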

$(document).ready(function(){

init();

})

function startRecording(stream) {
    log('Start recording...');
    var options;
    if (typeof MediaRecorder.isTypeSupported == 'function'){
        /*
            MediaRecorder.isTypeSupported is a function announced in https://developers.google.com/web/updates/2016/01/mediarecorder and later introduced in the MediaRecorder API spec http://www.w3.org/TR/mediastream-recording/
        */
        // The container and codec parameters are chosen here; what is available depends heavily on the browser.
        if (MediaRecorder.isTypeSupported('video/webm;codecs=vp9')) {
            options = {mimeType: 'video/webm;codecs=vp9'};
        } else if (MediaRecorder.isTypeSupported('video/webm;codecs=h264')) {
            options = {mimeType: 'video/webm;codecs=h264'};
        } else if (MediaRecorder.isTypeSupported('video/webm;codecs=vp8')) {
            options = {mimeType: 'video/webm;codecs=vp8'};
        }
    }
    if (options) {
        log('Using '+options.mimeType);
        mediaRecorder = new MediaRecorder(stream, options);
    } else {
        log('isTypeSupported is not supported or no listed codec matched, using default codecs for browser');
        mediaRecorder = new MediaRecorder(stream);
    }

    pauseResBtn.textContent = "Pause";

    // start() also accepts a timeslice in milliseconds; with a timeslice, dataavailable fires
    // periodically instead of only when recording stops.
    mediaRecorder.start();

    if ('srcObject' in videoElement) {
        videoElement.srcObject = stream;
    } else {
        // Older browsers only: current browsers no longer accept a MediaStream in createObjectURL
        var url = window.URL || window.webkitURL;
        videoElement.src = url ? url.createObjectURL(stream) : stream;
    }
    videoElement.play();

    // Once a piece of video data has been captured, MediaRecorder fires a dataavailable event,
    // and this is where the data is collected. The data arrives as a Blob and is read with a
    // FileReader. The FileReader must have a loadend listener registered (or an onload handler).
    // Inside the listener the data is converted for WebSocket transfer: WebSocket can send both
    // Blob and ArrayBuffer, and ArrayBuffer is used here, so the Blob is read into an ArrayBuffer
    // and wrapped in a Uint8Array, which the backend WebSocket service can receive as a ByteBuffer.
    mediaRecorder.ondataavailable = function(e) {
        //log('Data available...');
        //console.log(e.data);
        //console.log(e.data.type);
        //console.log(e);
        chunks.push(e.data);
        var reader = new FileReader();
        reader.addEventListener("loadend", function() {
            // reader.result is an ArrayBuffer holding the bytes of the video Blob (null if the read failed)
            if (!reader.result) {
                return;
            }
            var buf = new Uint8Array(reader.result);
            console.log(reader.result);
            // Many chunks are empty; skipping them avoids needless traffic to the backend server.
            // The socket is also checked, because it may be reconnecting at this moment.
            if(reader.result.byteLength > 0 && ws != null && ws.readyState === WebSocket.OPEN){
                ws.send(buf);
            }
        });
        reader.readAsArrayBuffer(e.data);
    };

    mediaRecorder.onerror = function(e){
        log('Error: ' + e);
    };

    mediaRecorder.onstart = function(){
        log('Started & state = ' + mediaRecorder.state);
    };

    mediaRecorder.onstop = function(){
        log('Stopped & state = ' + mediaRecorder.state);
        var blob = new Blob(chunks, {type: "video/webm"});
        chunks = [];
        var videoURL = window.URL.createObjectURL(blob);
        downloadLink.href = videoURL;
        if ('srcObject' in videoElement) {
            videoElement.srcObject = null; // srcObject takes priority over src, so clear it first
        }
        videoElement.src = videoURL;
        downloadLink.innerHTML = 'Download video file';
        // The multiplier 10000000 is an assumed value; it only makes file name collisions unlikely.
        var rand = Math.floor((Math.random() * 10000000));
        var name = "video_"+rand+".webm" ;
        downloadLink.setAttribute( "download", name);
        downloadLink.setAttribute( "name", name);
    };

    mediaRecorder.onpause = function(){
        log('Paused & state = ' + mediaRecorder.state);
    }

    mediaRecorder.onresume = function(){
        log('Resumed & state = ' + mediaRecorder.state);
    }

    mediaRecorder.onwarning = function(e){
        log('Warning: ' + e);
    };
}

//function handleSourceOpen(event) {

// console.log('MediaSource opened');

// sourceBuffer = mediaSource.addSourceBuffer('video/webm; codecs="vp9"');

// console.log('Source buffer: ', sourceBuffer);

//}

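// Clicking the button starts capturing the video stream. The key point is the use of getUserMedia. In this example the entry point of the capture is the Record button on the page, that is, the function below.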

function onBtnRecordClicked (){

if (typeof MediaRecorder === 'undefined' || !navigator.getUserMedia) {

alert('MediaRecorder not supported on your browser, use Firefox 30 or Chrome 49 instead.');

}else {

navigator.getUserMedia(constraints, startRecording, errorCallback);

recBtn.disabled = true;

pauseResBtn.disabled = false;

stopBtn.disabled = false;

}

}

function onBtnStopClicked(){

mediaRecorder.stop();

videoElement.controls = true;

recBtn.disabled = false;

pauseResBtn.disabled = true;

stopBtn.disabled = true;

}

function onPauseResumeClicked(){

if(pauseResBtn.textContent === "Pause"){

console.log("pause");

pauseResBtn.textContent = "Resume";

mediaRecorder.pause();

stopBtn.disabled = true;

}else{

console.log("resume");

pauseResBtn.textContent = "Pause";

mediaRecorder.resume();

stopBtn.disabled = false;

}

recBtn.disabled = true;

pauseResBtn.disabled = false;

}

function log(message){

dataElement.innerHTML = dataElement.innerHTML+'<br>'+message ;

}

//browser ID

function getBrowser(){
    var nVer = navigator.appVersion;
    var nAgt = navigator.userAgent;
    var browserName = navigator.appName;
    var fullVersion = ''+parseFloat(navigator.appVersion);
    var majorVersion = parseInt(navigator.appVersion,10);
    var nameOffset,verOffset,ix;

    // In Opera, the true version is after "Opera" or after "Version"
    if ((verOffset=nAgt.indexOf("Opera"))!=-1) {
        browserName = "Opera";
        fullVersion = nAgt.substring(verOffset+6);
        if ((verOffset=nAgt.indexOf("Version"))!=-1)
            fullVersion = nAgt.substring(verOffset+8);
    }
    // In MSIE, the true version is after "MSIE" in userAgent
    else if ((verOffset=nAgt.indexOf("MSIE"))!=-1) {
        browserName = "Microsoft Internet Explorer";
        fullVersion = nAgt.substring(verOffset+5);
    }
    // In Chrome, the true version is after "Chrome"
    else if ((verOffset=nAgt.indexOf("Chrome"))!=-1) {
        browserName = "Chrome";
        fullVersion = nAgt.substring(verOffset+7);
    }
    // In Safari, the true version is after "Safari" or after "Version"
    else if ((verOffset=nAgt.indexOf("Safari"))!=-1) {
        browserName = "Safari";
        fullVersion = nAgt.substring(verOffset+7);
        if ((verOffset=nAgt.indexOf("Version"))!=-1)
            fullVersion = nAgt.substring(verOffset+8);
    }
    // In Firefox, the true version is after "Firefox"
    else if ((verOffset=nAgt.indexOf("Firefox"))!=-1) {
        browserName = "Firefox";
        fullVersion = nAgt.substring(verOffset+8);
    }
    // In most other browsers, "name/version" is at the end of userAgent
    else if ( (nameOffset=nAgt.lastIndexOf(' ')+1) <
              (verOffset=nAgt.lastIndexOf('/')) )
    {
        browserName = nAgt.substring(nameOffset,verOffset);
        fullVersion = nAgt.substring(verOffset+1);
        if (browserName.toLowerCase()==browserName.toUpperCase()) {
            browserName = navigator.appName;
        }
    }

    // trim the fullVersion string at semicolon/space if present
    if ((ix=fullVersion.indexOf(";"))!=-1)
        fullVersion=fullVersion.substring(0,ix);
    if ((ix=fullVersion.indexOf(" "))!=-1)
        fullVersion=fullVersion.substring(0,ix);

    majorVersion = parseInt(''+fullVersion,10);
    if (isNaN(majorVersion)) {
        fullVersion = ''+parseFloat(navigator.appVersion);
        majorVersion = parseInt(navigator.appVersion,10);
    }

    return browserName;
}
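The send step inside the dataavailable handler can be isolated into a small guard, shown here as a sketch. It skips empty buffers (the reason for the byteLength check in main.js) and also refuses to send while the socket is not open, which can happen during a reconnect. `sendChunk` and `WS_OPEN` are hypothetical names, and the socket is passed in as a parameter so the logic does not depend on the global `ws`.

```javascript
var WS_OPEN = 1; // numeric value of WebSocket.OPEN

// Sends one recorded chunk over the socket.
// Returns true if the chunk was sent, false if it was skipped.
function sendChunk(socket, arrayBuffer) {
  if (!socket || socket.readyState !== WS_OPEN) {
    return false; // not connected (yet, or any more)
  }
  if (!arrayBuffer || arrayBuffer.byteLength === 0) {
    return false; // empty chunk, nothing worth sending
  }
  socket.send(new Uint8Array(arrayBuffer));
  return true;
}
```

Inside the loadend listener this would be called as `sendChunk(ws, reader.result)`.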

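The byteLength check is there for a reason: the log printed in the browser console shows that many chunks are empty. I ran this example in Firefox, because my local Chrome, a fairly recent version, reported an error as soon as the application started.

I was short on time and did not dig into this error, so all verification was done on Firefox.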
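Independently of what caused that error, the per-browser branches in main.js can be replaced by feature detection. The sketch below builds constraints in the standard syntax (the form already used for Firefox above, which current Chrome also accepts through `navigator.mediaDevices.getUserMedia`) and picks the first recording format the browser reports as supported. `buildConstraints` and `pickMimeType` are hypothetical helpers, not part of the original project.

```javascript
// Standard constraint syntax; "ideal" lets the browser pick the closest
// resolution instead of failing when the exact size is unavailable.
function buildConstraints(width, height, withAudio) {
  return {
    audio: Boolean(withAudio),
    video: { width: { ideal: width }, height: { ideal: height } }
  };
}

// Returns the first candidate accepted by `isSupported`, or null if none is.
// With null, the caller should construct MediaRecorder without options so
// the browser falls back to its default format.
function pickMimeType(candidates, isSupported) {
  for (var i = 0; i < candidates.length; i++) {
    if (isSupported(candidates[i])) {
      return candidates[i];
    }
  }
  return null;
}
```

In the browser the second helper would be called as `pickMimeType(['video/webm;codecs=vp9', 'video/webm;codecs=h264', 'video/webm;codecs=vp8'], function (t) { return MediaRecorder.isTypeSupported(t); })`.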

What remains is the WebSocket service in the Java backend. Here is the code:

/*
 * Copyright © reserved by roomdis.com, service for tgn company whose important business is rural e-commerce.
 */
package com.roomdis.mqr.infra.core;

import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.net.SocketException;
import java.net.UnknownHostException;
import java.nio.ByteBuffer;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

import javax.websocket.OnClose;
import javax.websocket.OnError;
import javax.websocket.OnMessage;
import javax.websocket.OnOpen;
import javax.websocket.Session;
import javax.websocket.server.ServerEndpoint;

import org.apache.log4j.Logger;
import org.springframework.web.context.ContextLoader;

import com.google.gson.Gson;
import com.roomdis.mqr.infra.msg.KefuMessage;

/**
 * @author shihuc
 * @date 2017-08-22 14:20:18
 */
@ServerEndpoint("/websocket")
public class WebsocketService {

    private static Logger logger = Logger.getLogger(WebsocketService.class);

    private HttpSendService httpSendService;

    private String videoRecServerHost = "10.90.7.10";
    private int videoRecServerPort = ;

    /*
     * When several clients connect, cache the connections so that each session can be kept alive.
     */
    private static Map<String, WebsocketService> webSocketMap = new ConcurrentHashMap<String, WebsocketService>();

    private Session session;

    private static final WebsocketService instance = new WebsocketService();

    public static final WebsocketService getInstance() {
        return instance;
    }

    @OnMessage
    public void onTextMessage(String message, Session session) throws IOException, InterruptedException {
        // Print the client message for testing purposes
        logger.info("Received: " + message);
        //TODO: call the interface that forwards the message to the client backend service
        Gson gson = new Gson();
        KefuMessage kfMsg = gson.fromJson(message, KefuMessage.class);
        httpSendService = ContextLoader.getCurrentWebApplicationContext().getBean(HttpSendService.class);
    }

    /**
     * Mainly used to receive binary data.
     *
     * @author shihuc
     * @param message
     * @param session
     * @throws IOException
     * @throws InterruptedException
     */
    @OnMessage
    public void onBinaryMessage(ByteBuffer message, Session session, boolean last) throws IOException, InterruptedException {
        // Copy only the readable bytes; message.array() fails on buffers without an accessible backing array
        byte[] sentBuf = new byte[message.remaining()];
        message.get(sentBuf);
        logger.info("Binary Received: " + sentBuf.length + ", last: " + last);
        // The call below sends the video data over UDP to the video processing server for further processing
        //sendToVideoRecognizer(sentBuf);
    }

    /**
     * @author shihuc
     * @param sentBuf
     * @throws SocketException
     * @throws UnknownHostException
     * @throws IOException
     */
    private void sendToVideoRecognizer(byte[] sentBuf) throws SocketException, UnknownHostException, IOException {
        DatagramSocket client = new DatagramSocket();
        InetAddress addr = InetAddress.getByName(videoRecServerHost);
        DatagramPacket sendPacket = new DatagramPacket(sentBuf, sentBuf.length, addr, videoRecServerPort);
        client.send(sendPacket);
        client.close();
    }

    // @OnOpen
    // public void onOpen(Session session){
    //     this.session = session;
    //     String staffId = session.getQueryString();
    //     webSocketMap.put(staffId, this);
    //     logger.info(staffId + " client opened");
    // }

    @OnOpen
    public void onOpen(Session session){
        logger.info("client opened: " + session.toString());
    }

    @OnClose
    public void onClose() {
        logger.info("client onclose");
    }

    @OnError
    public void onError(Session session, Throwable error){
        // Log the throwable itself; error.getCause() may be null
        logger.error("connection onError", error);
    }

    public boolean sendMessage(String message, String staffId) throws IOException{
        WebsocketService client = webSocketMap.get(staffId);
        if (client == null) {
            return false;
        }
        boolean result=false;
        try {
            client.session.getBasicRemote().sendText(message);
            result=true;
        } catch (IOException e) {
            try {
                client.session.close();
            } catch (IOException e1) {
                e1.printStackTrace();
            }
        }
        return result;
    }
}

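One thing needs special attention here: the parameters of the method annotated with @OnMessage matter a lot. For binary data sent as an ArrayBuffer, I had to declare three parameters, and the final boolean had to be present; without it, the browser reported an error when the front end sent data. With the boolean, the container may hand a large message to the method in several parts and sets the flag to true on the last part. Without it, the method only receives whole messages, which have to fit into the container's binary message buffer, so a likely cause of the error is that the video chunks were larger than that buffer.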
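How the parts and the `last` flag fit together can be sketched as a small assembler that collects fragments until the final one arrives. This is an illustration only: it is written in JavaScript to match the other examples in this post, whereas in this project the logic would live in the Java `onBinaryMessage` method. `FragmentAssembler` is a hypothetical name.

```javascript
// Collects message fragments and returns the whole message once the
// fragment flagged as last has been pushed.
function FragmentAssembler() {
  this.parts = [];
  this.total = 0;
}

// fragment: Uint8Array with one part of the message
// last:     true if this is the final part
// Returns a Uint8Array with the complete message when last is true,
// otherwise null.
FragmentAssembler.prototype.push = function (fragment, last) {
  this.parts.push(fragment);
  this.total += fragment.length;
  if (!last) {
    return null;
  }
  var whole = new Uint8Array(this.total);
  var offset = 0;
  for (var i = 0; i < this.parts.length; i++) {
    whole.set(this.parts[i], offset);
    offset += this.parts[i].length;
  }
  // Reset so the assembler can be reused for the next message
  this.parts = [];
  this.total = 0;
  return whole;
};
```

The service above does not reassemble anything; it logs each part as it arrives, which is enough when every part is forwarded immediately.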

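Finally, a look at the backend log. Each binary message arrives as a series of parts logged with last: false, followed by a final part with last: true: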

[-- ::] [ INFO] [http-nio--exec-] [com.roomdis.mqr.infra.core.WebsocketService.onBinaryMessage(WebsocketService.java:)] - Binary Received: , last: false


[-- ::] [ INFO] [http-nio--exec-] [com.roomdis.mqr.infra.core.WebsocketService.onBinaryMessage(WebsocketService.java:)] - Binary Received: , last: true

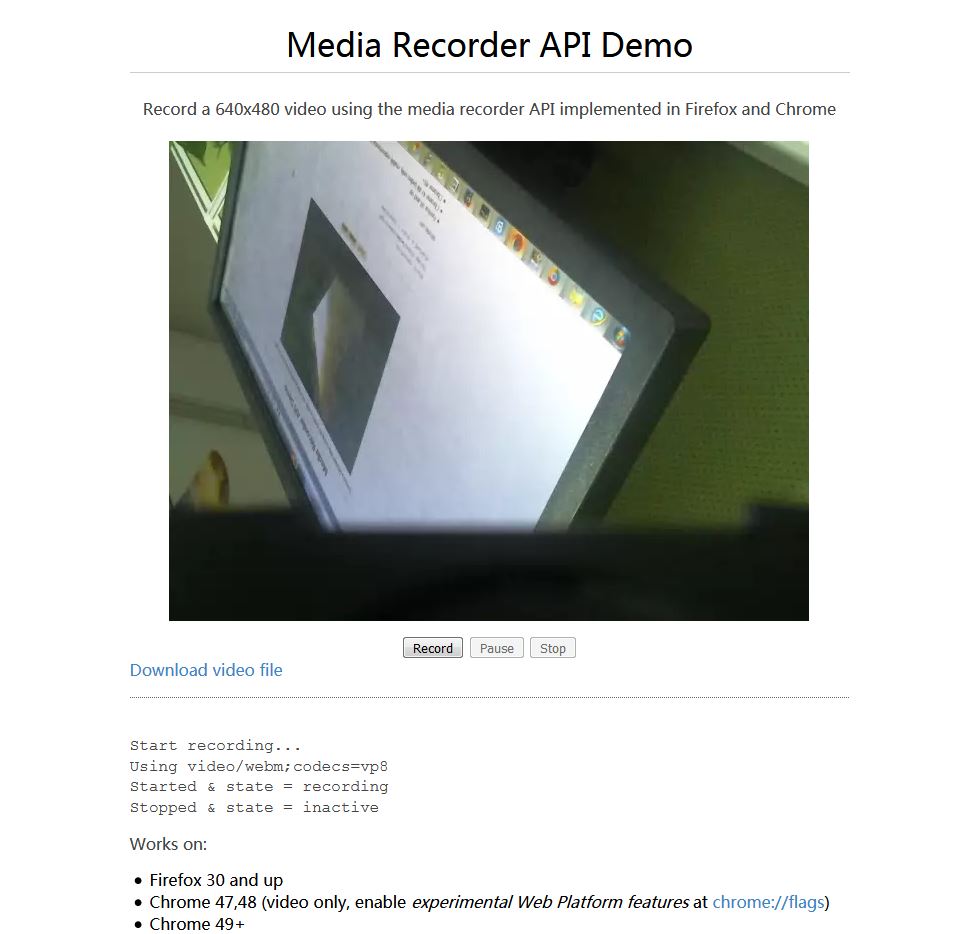

并附上一副前端运行的效果截图:

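Summary:

1. Study getUserMedia carefully.

2. Study MediaRecorder carefully.

3. Study Blob and FileReader carefully.

4. Study the parameters of the WebSocket method annotated with @OnMessage, and how to handle a connection that may drop while data is being transferred.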
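For the dropped-connection case in point 4, a common refinement is to wait a little longer before each reconnect attempt instead of retrying immediately. The function below is a minimal sketch of such an exponential backoff; `reconnectDelay` and its parameters are hypothetical and not part of the original project.

```javascript
// Delay in milliseconds before reconnect attempt number `attempt`
// (0 for the first retry). The delay doubles with each attempt and is
// capped at maxMs.
function reconnectDelay(attempt, baseMs, maxMs) {
  var delay = baseMs * Math.pow(2, attempt);
  return Math.min(delay, maxMs);
}
```

In the onclose handler of main.js this could be used as `setTimeout(init, reconnectDelay(attempts++, 500, 8000))`, with the hypothetical counter `attempts` reset to 0 in onopen.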

2018-05-03

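PS: Since some readers are interested in this work, I am sharing the source code to help anyone who needs it. It is on GitHub: https://github.com/shihuc/VideoConverter

I hope interested readers will follow my blog so that we can exchange ideas and explore some unusual applications together!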
