①CM+CDH 6.2.0 Installation (the most complete guide online)

CM+CDH 6.2.0 Environment Preparation

1 Virtual Machine and CentOS 7 Configuration

CentOS download: http://isoredirect.centos.org/centos/7/isos/x86_64/

CentOS on Baidu Netdisk: https://pan.baidu.com/s/196ji62wTpIAhkTw9u4P6pw (extraction code: seqd)

VMware Workstation download: https://www.vmware.com/cn/products/workstation-pro.html

VMware Workstation on Baidu Netdisk: https://pan.baidu.com/s/1gaJMqZJXSHGUEw4tHS4fdA (extraction code: jrv8)

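Cluster layout: one master (16 GB RAM, 80 GB disk, 2 processors with 2 cores each) and two slaves (8 GB RAM, 60 GB disk, 2 processors with 2 cores each)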

1.1 Open "VMware Workstation" and choose "Create a New Virtual Machine"

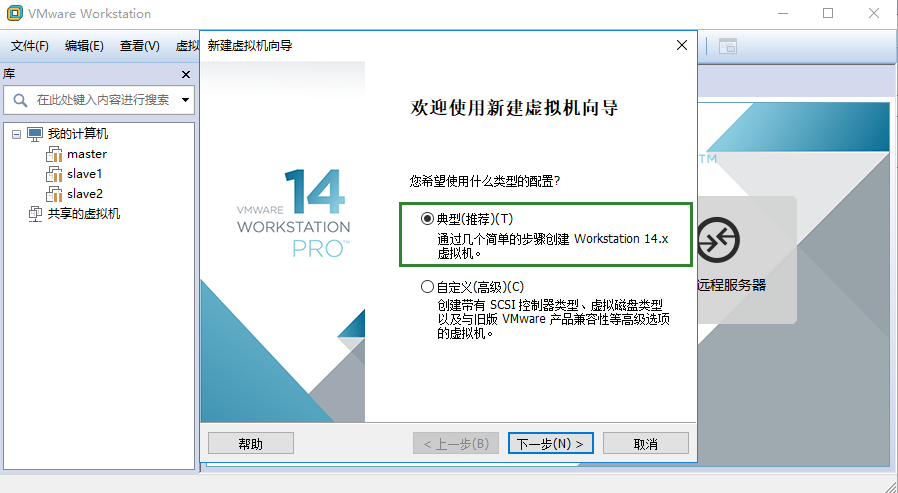

1.2 Select "Typical" and click "Next"

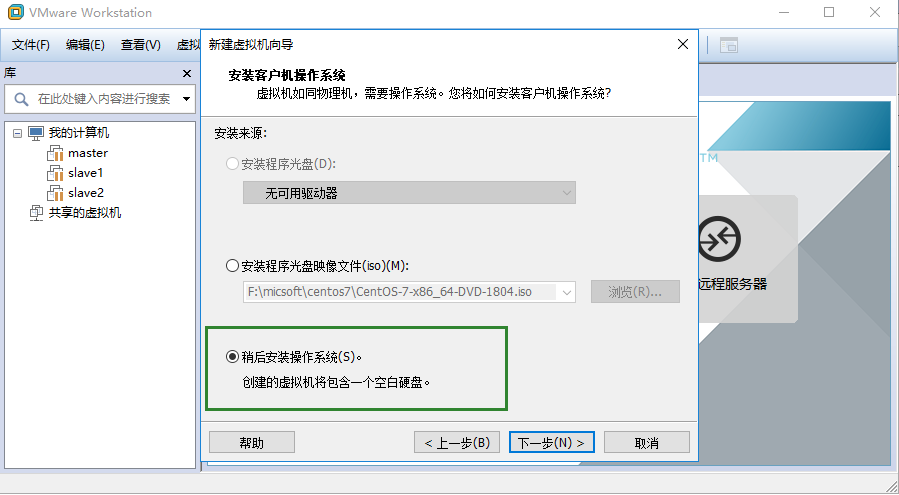

1.3 Select "I will install the operating system later" and click "Next"

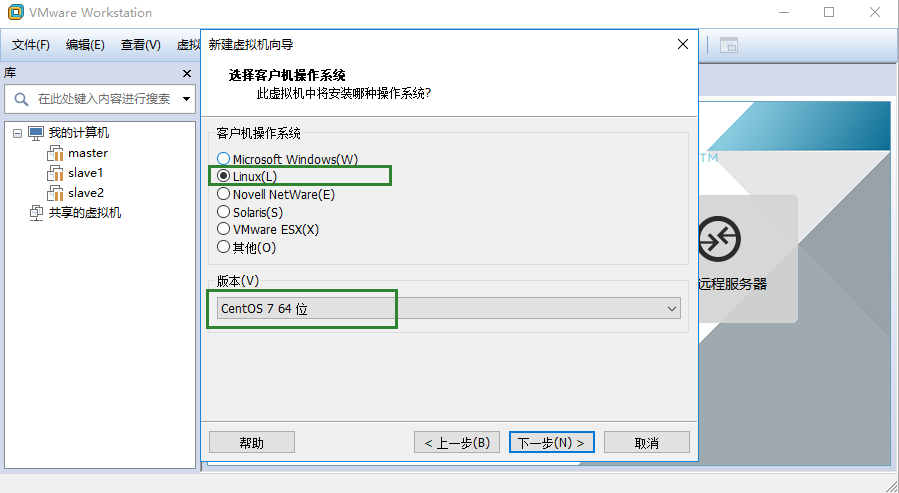

1.4 Select "Linux" and "CentOS 7 64-bit", then click "Next"

1.5 Set the virtual machine name and location, then click "Next"

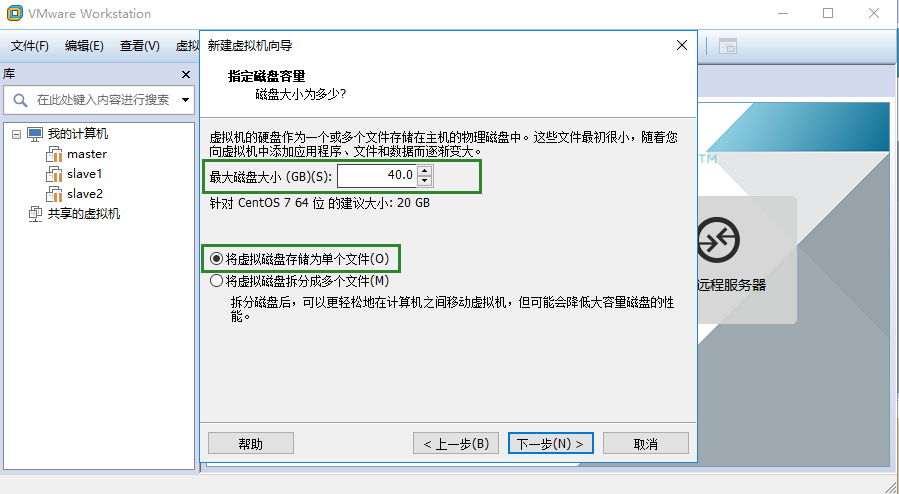

1.6 Set the disk size, choose to store the virtual disk as a single file, then click "Next"

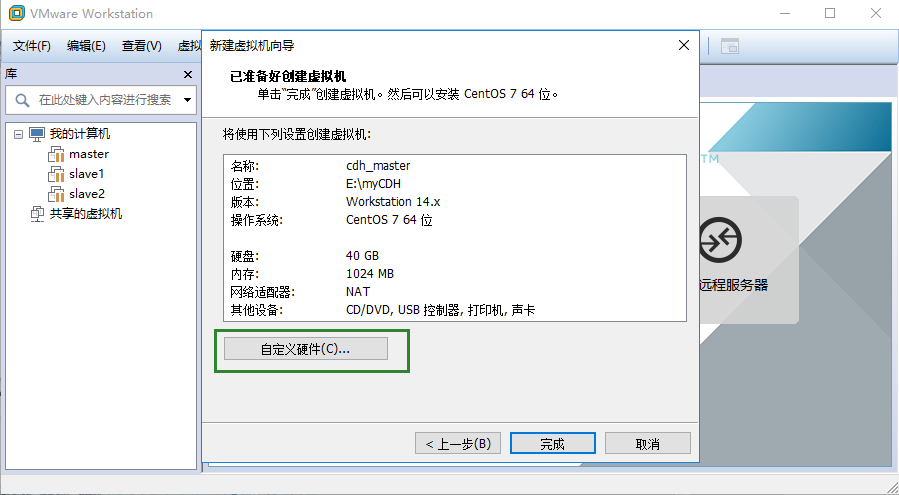

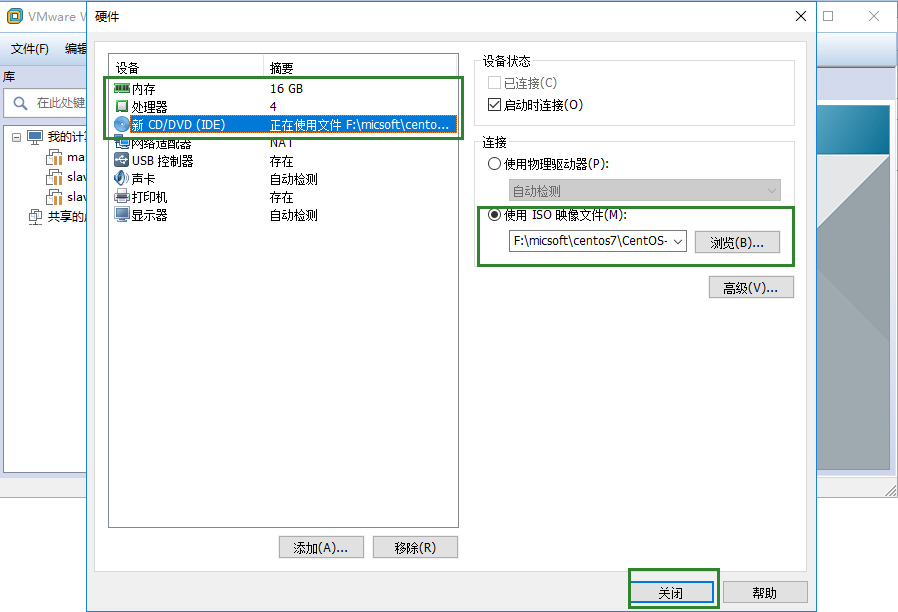

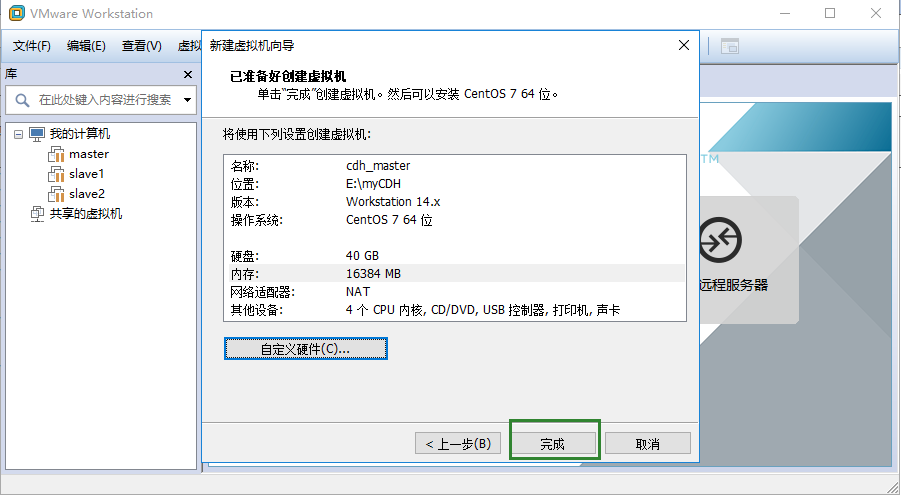

1.7 Choose "Customize Hardware", adjust the memory and processors, point the CD/DVD drive at the CentOS ISO, then click "Finish"

2 CentOS 7 Installation

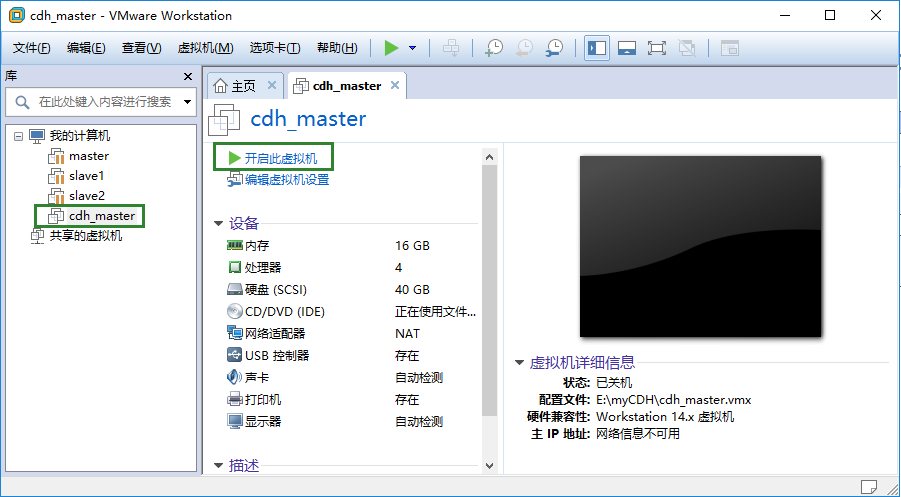

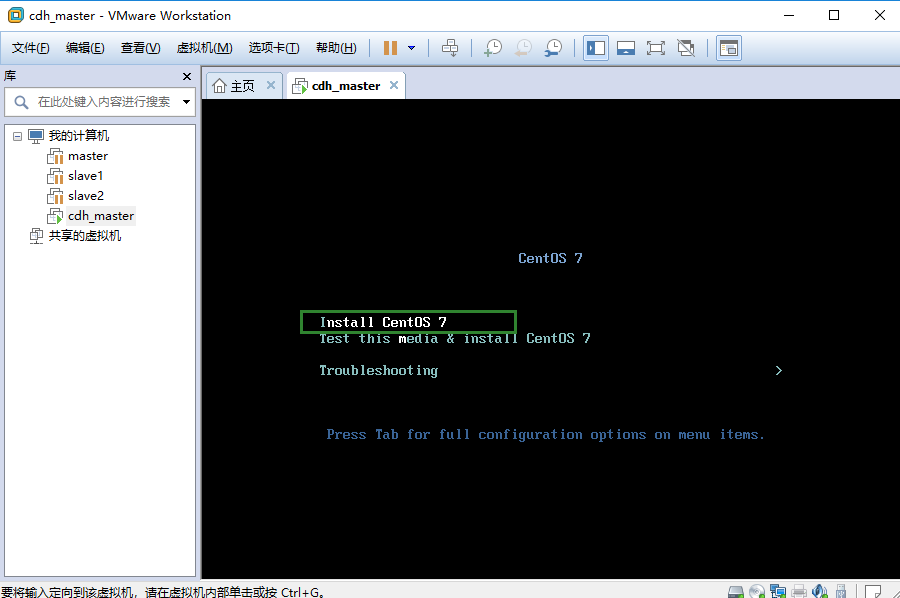

2.1 Select the new virtual machine and click "Power on this virtual machine"

2.2 Choose "Install CentOS 7"

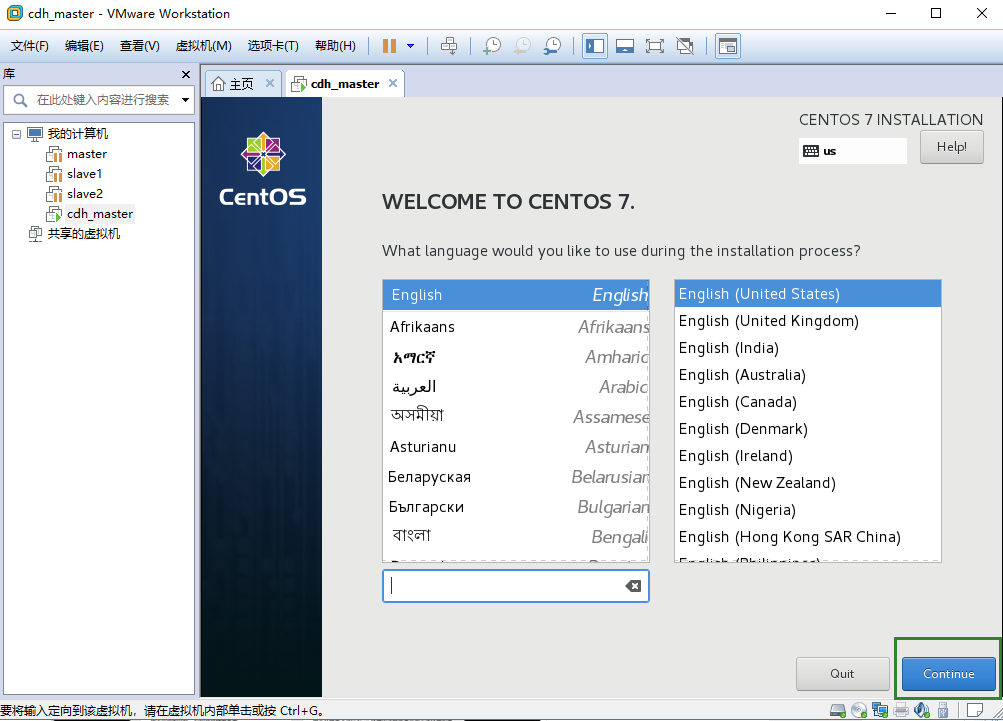

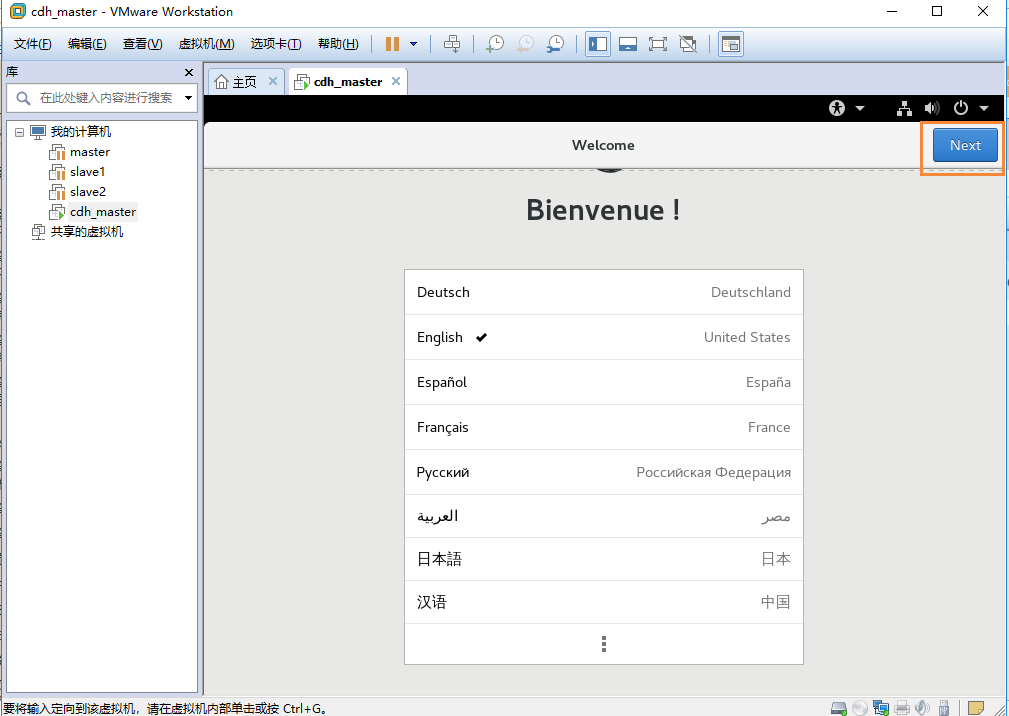

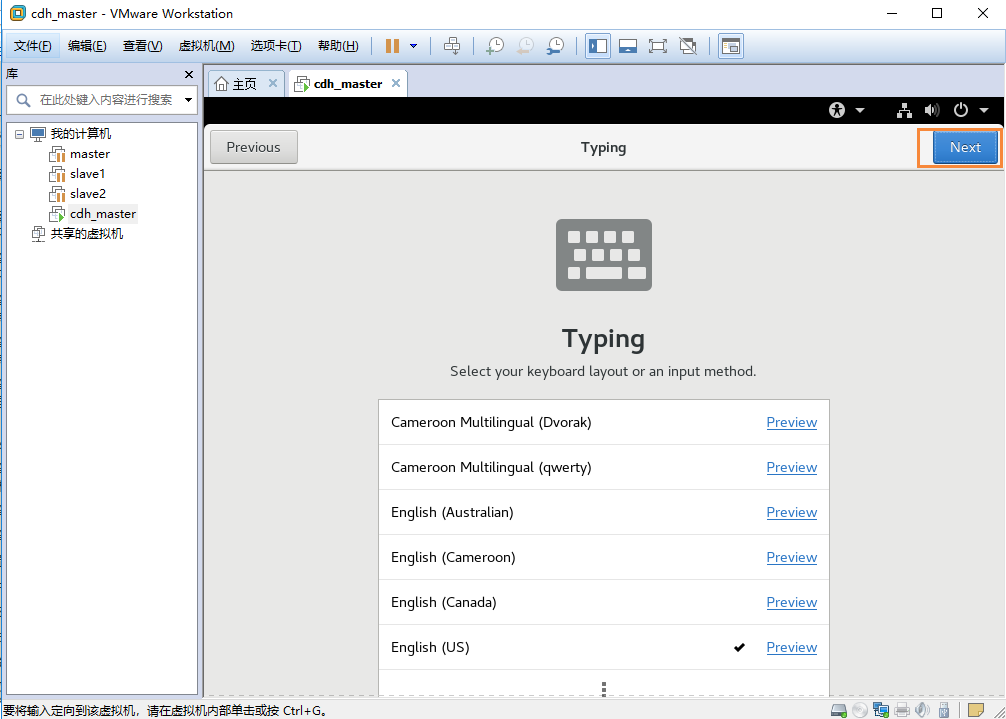

2.3 Choose English as the installation language

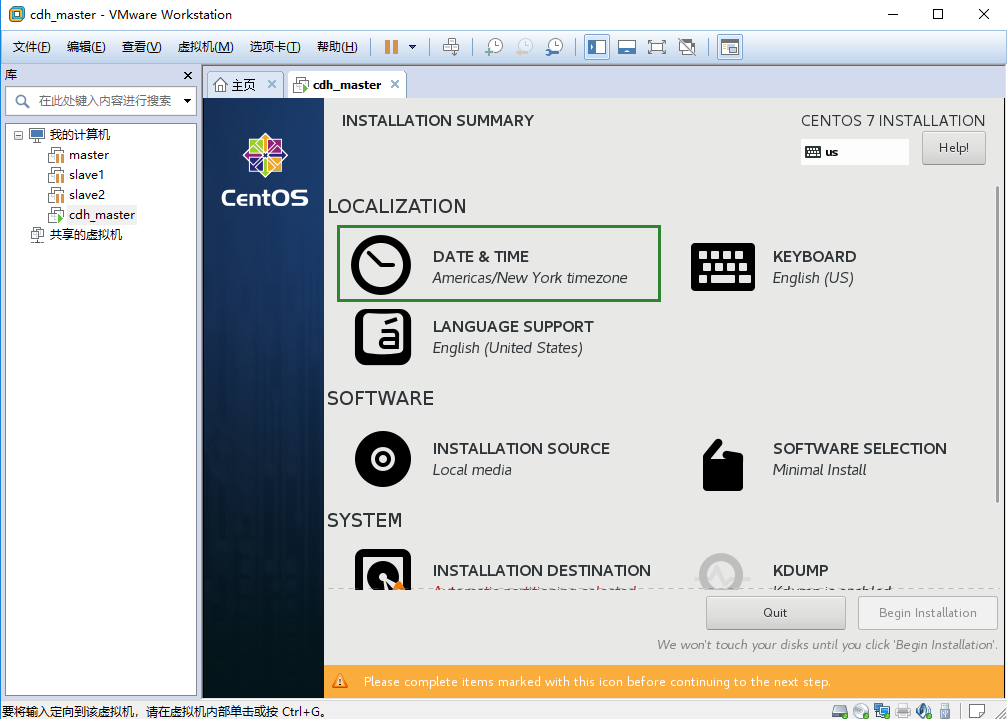

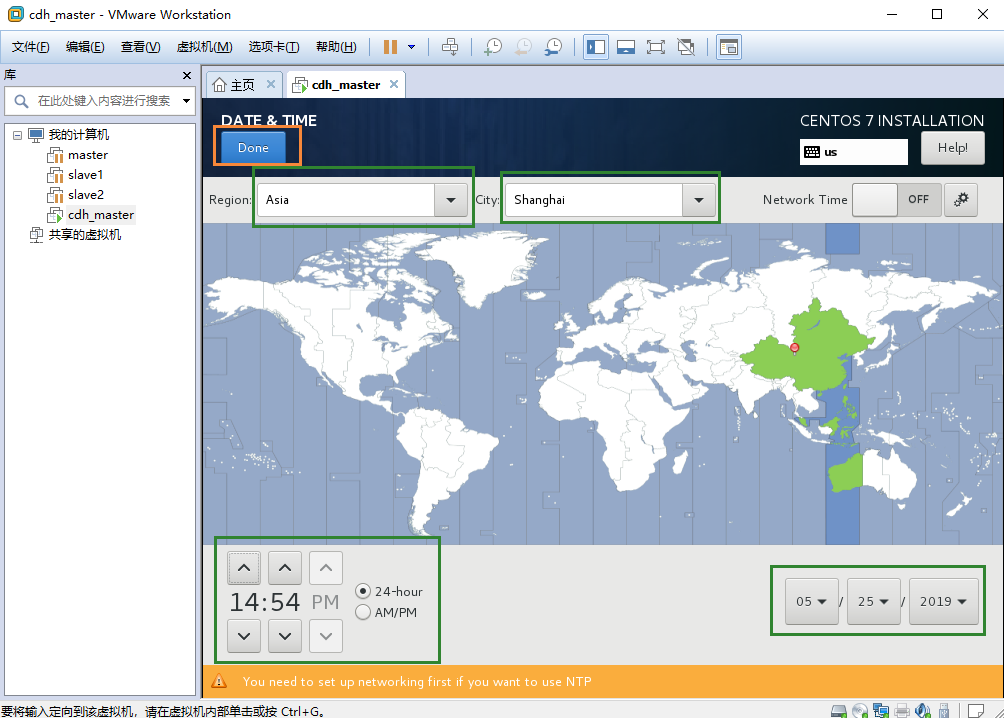

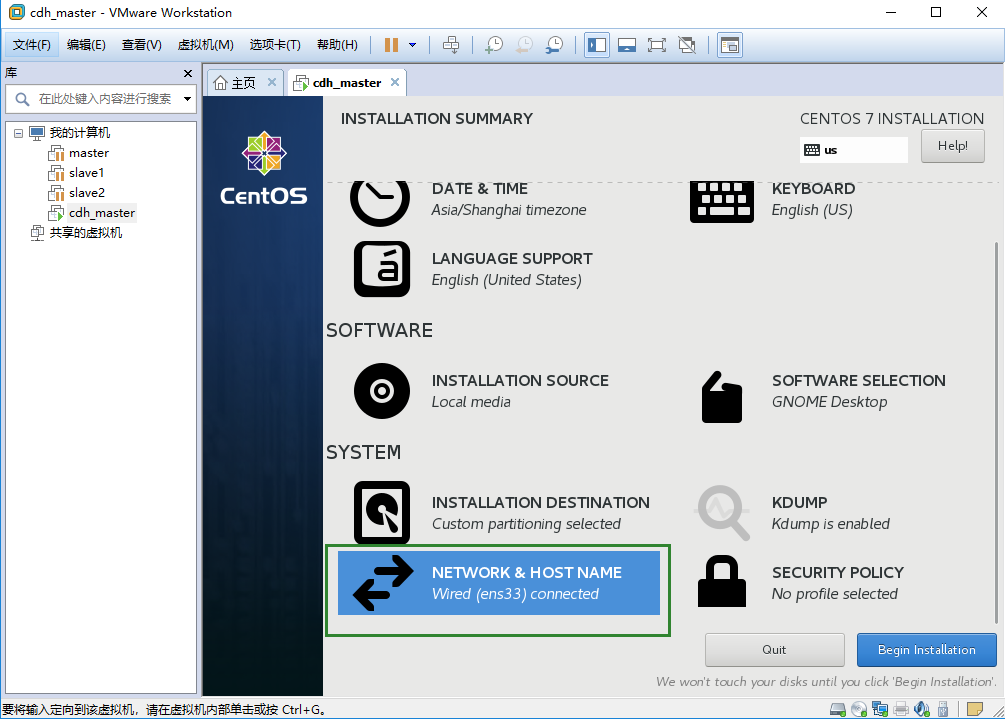

2.4 Configure the date and time

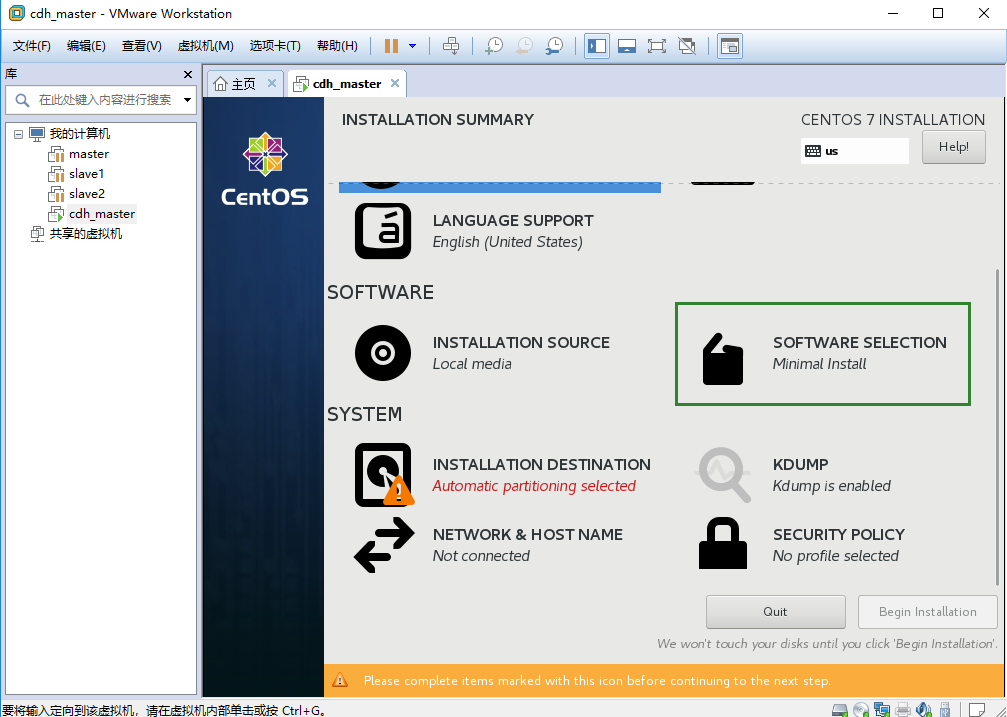

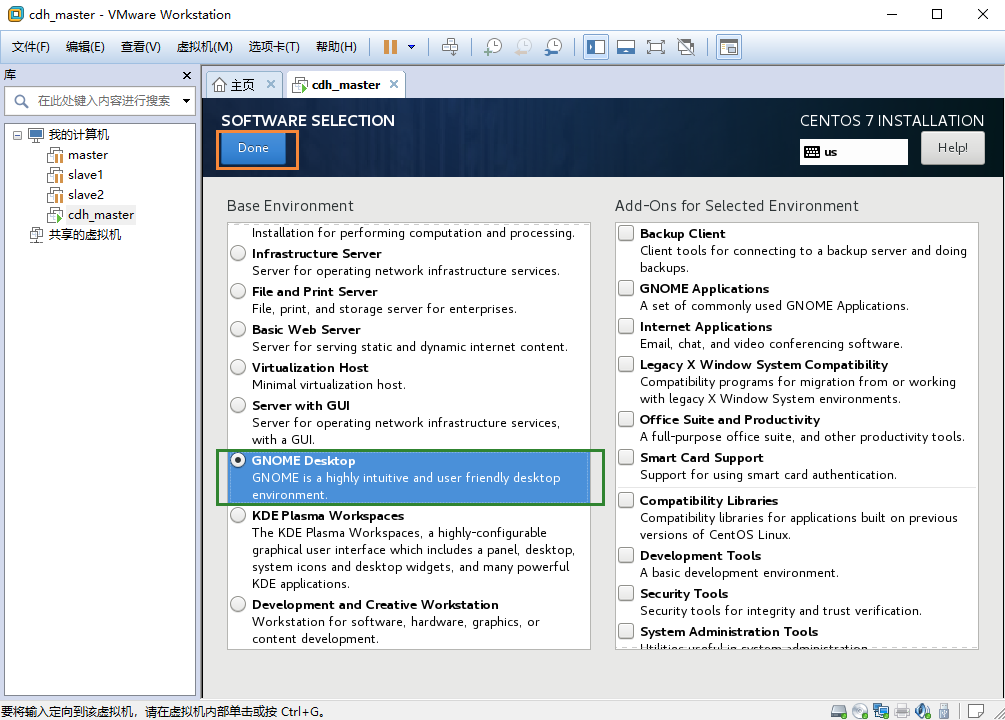

2.5 Software selection

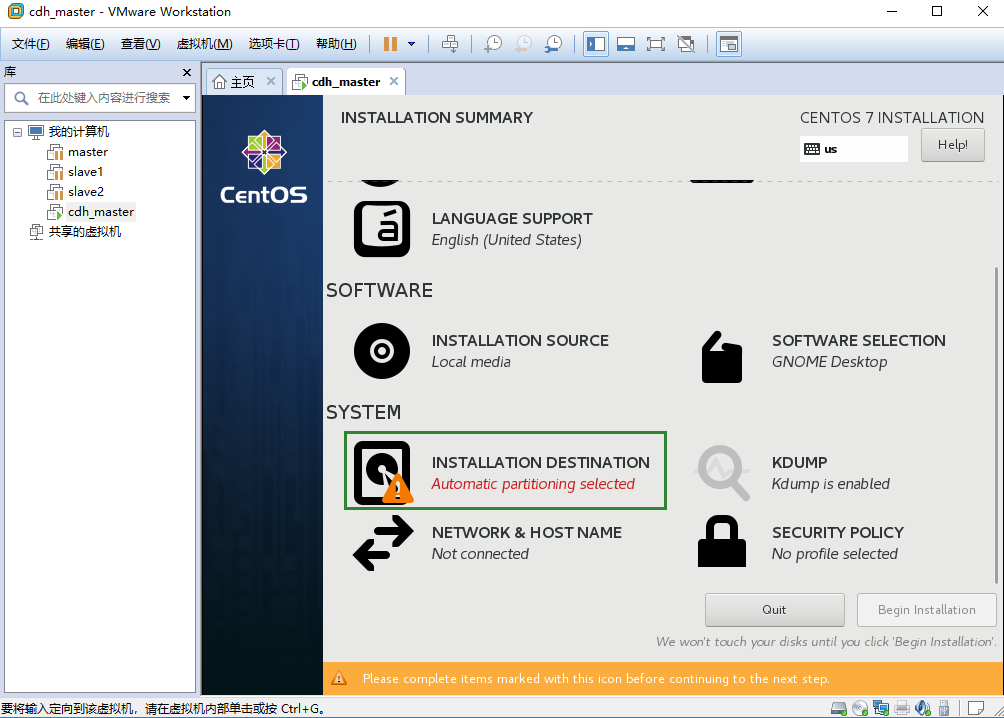

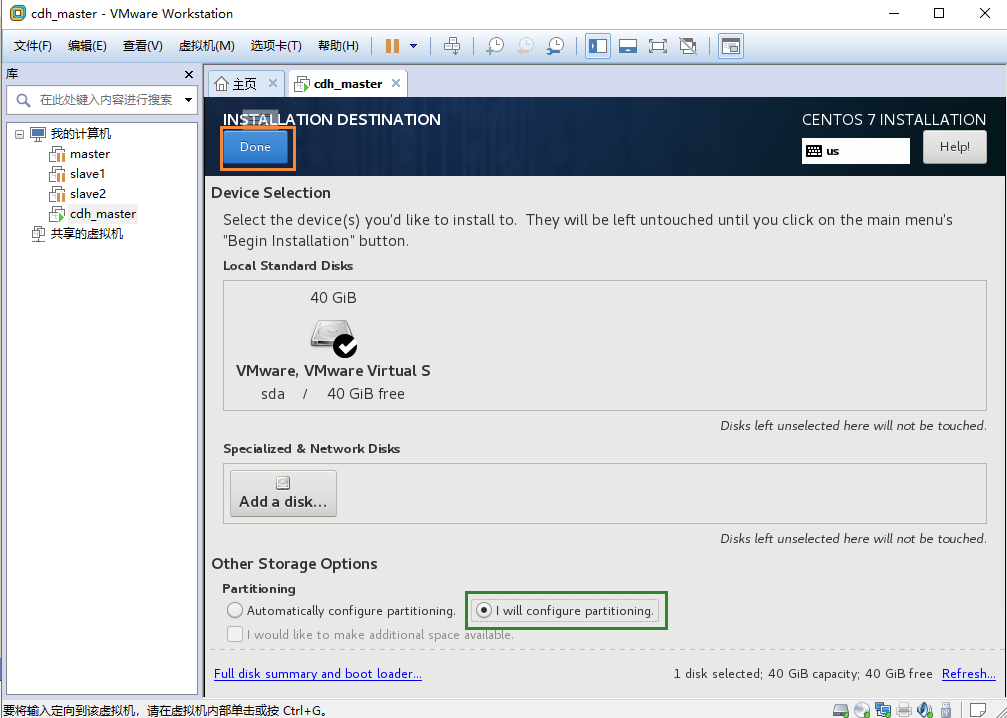

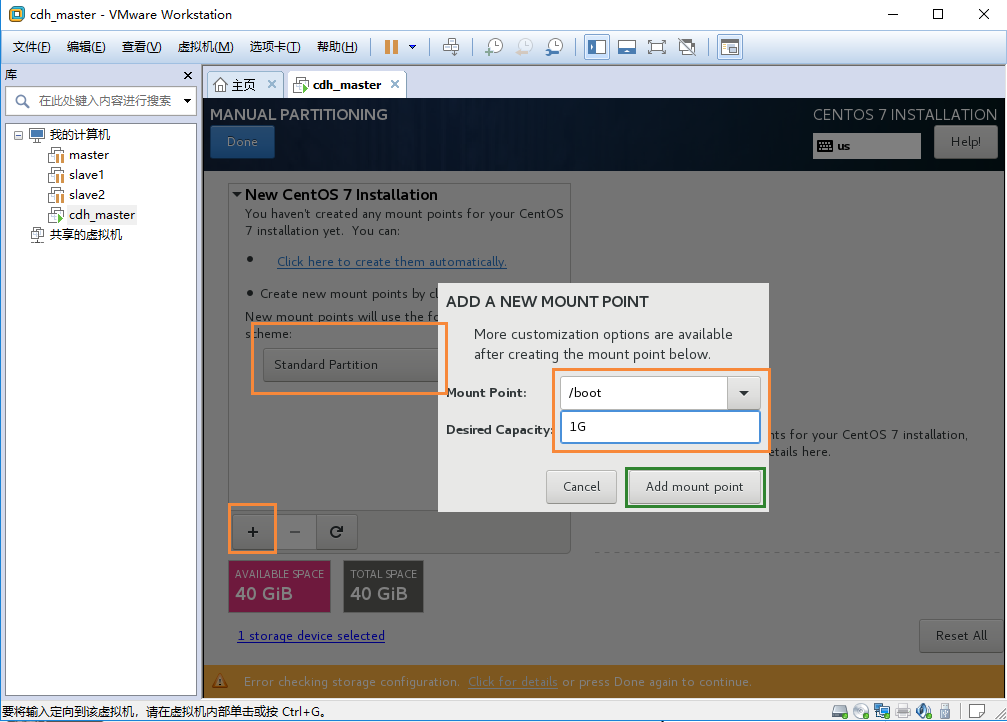

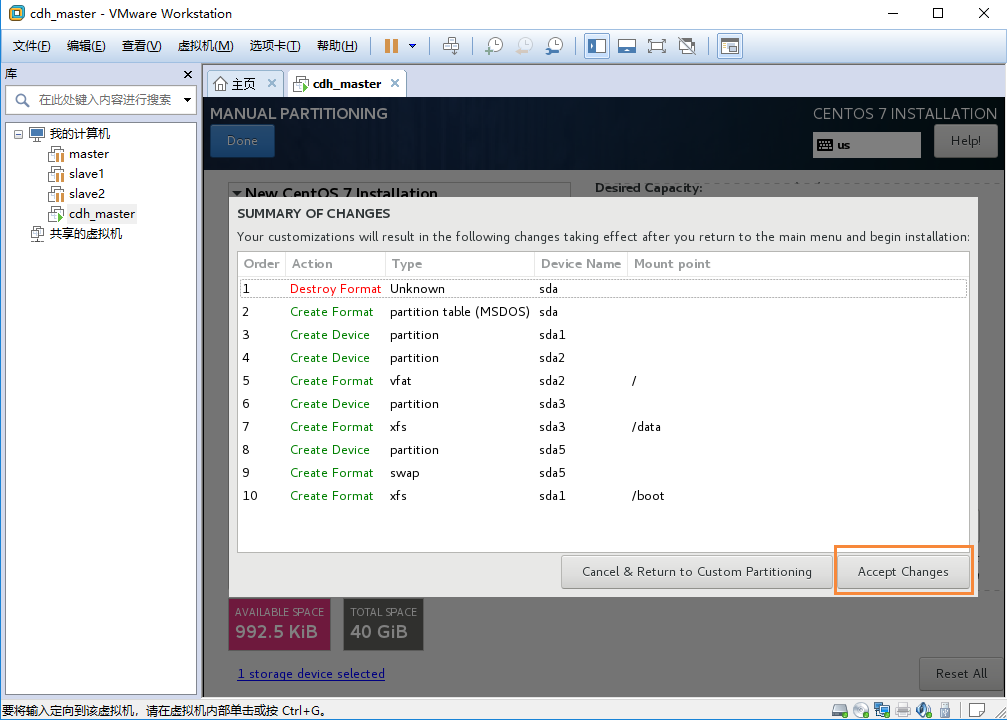

2.6 System partition settings

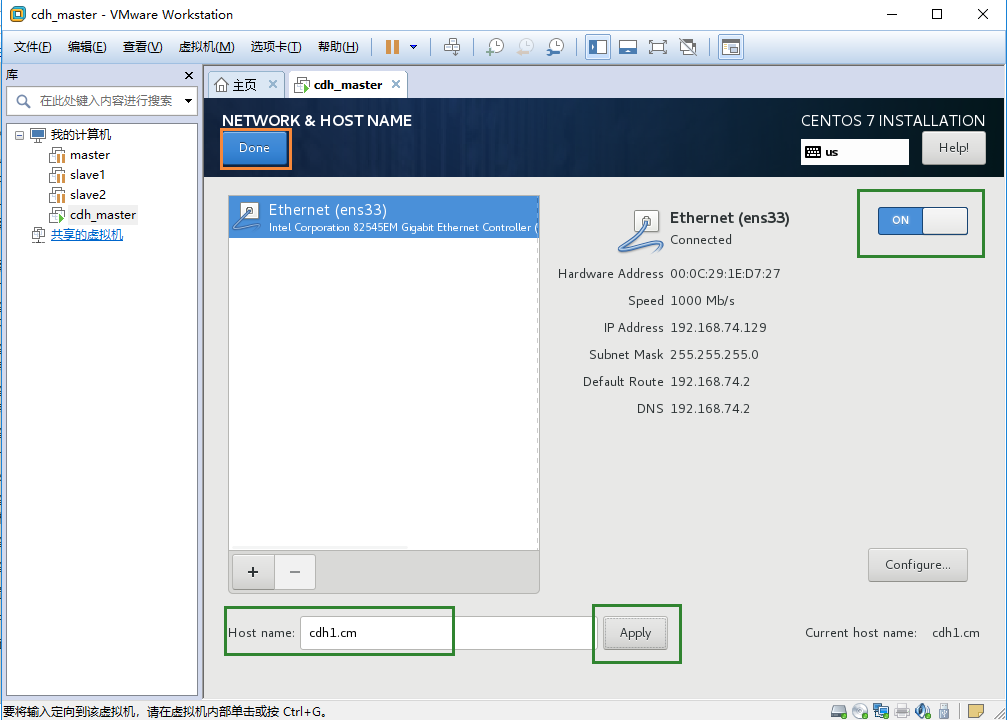

2.7 Network and gateway configuration

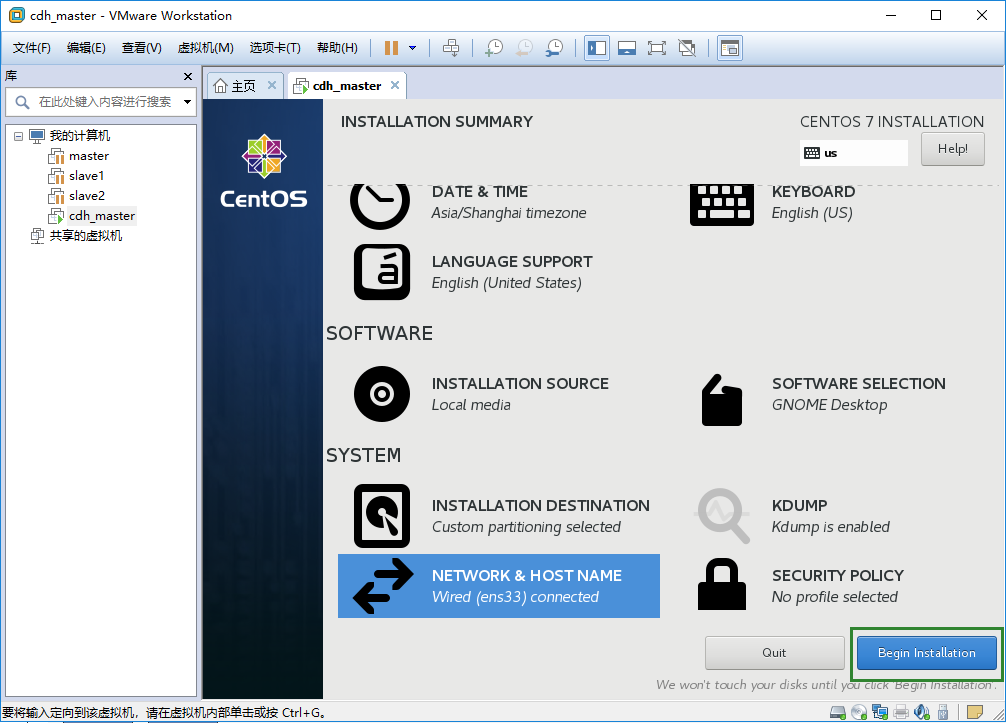

2.8 Begin the installation

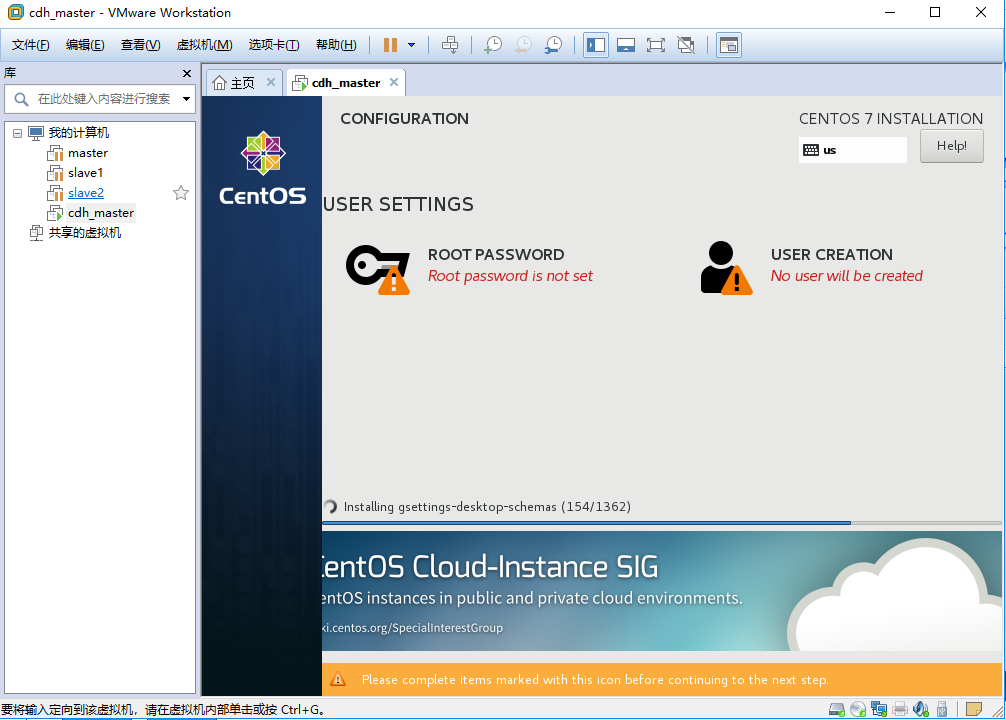

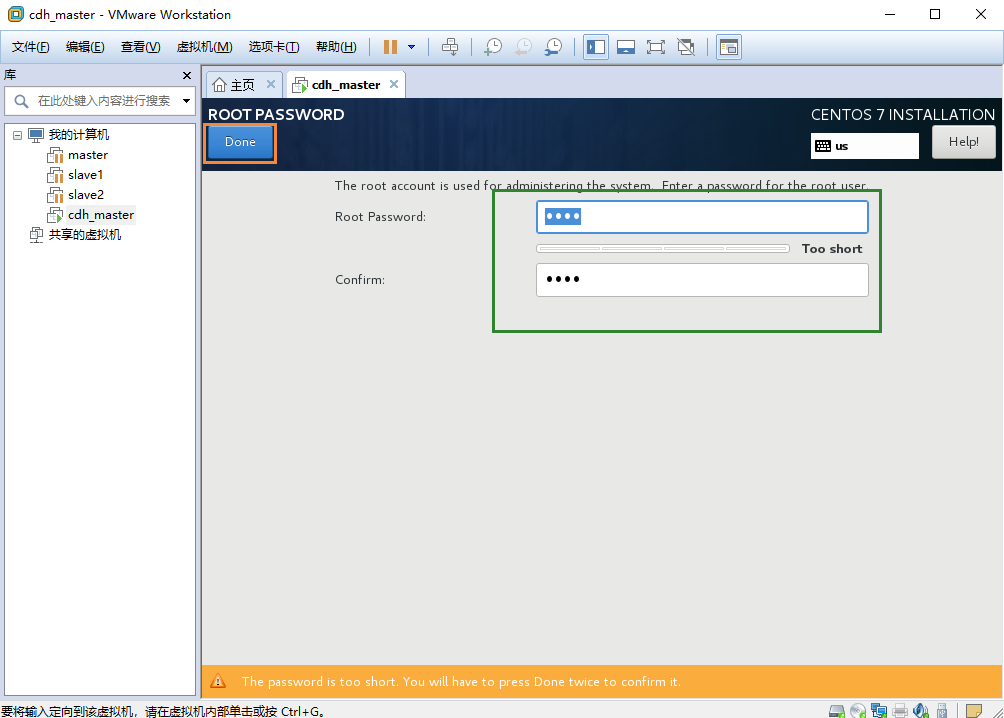

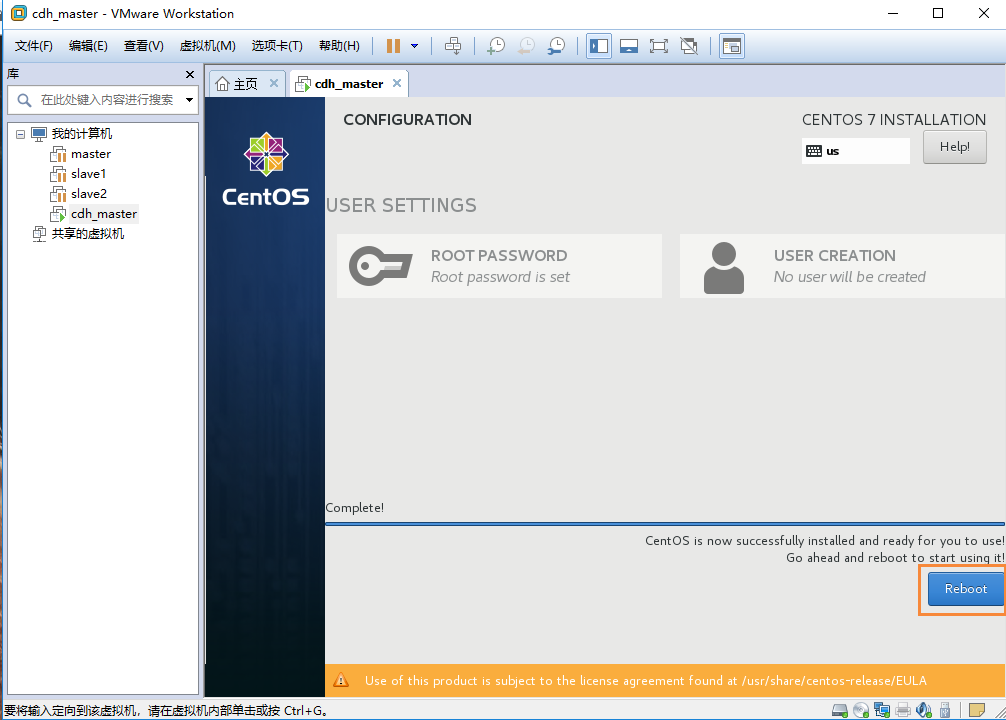

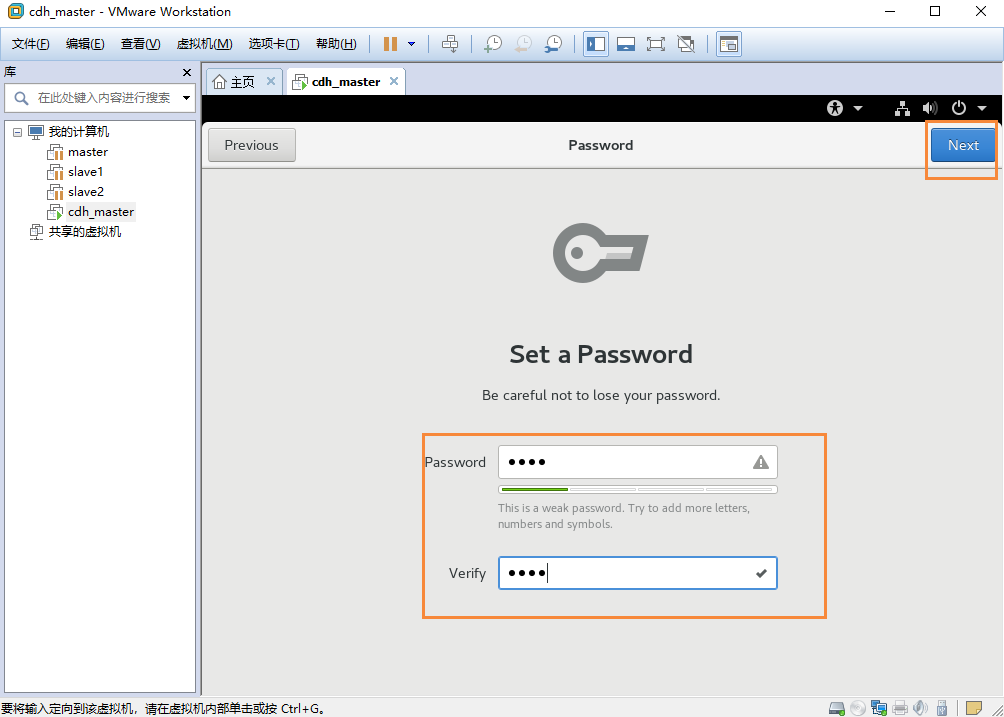

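2.9 Set the root password (be sure to remember it), decide whether to create a user (none is created here), and wait for the installation to finish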

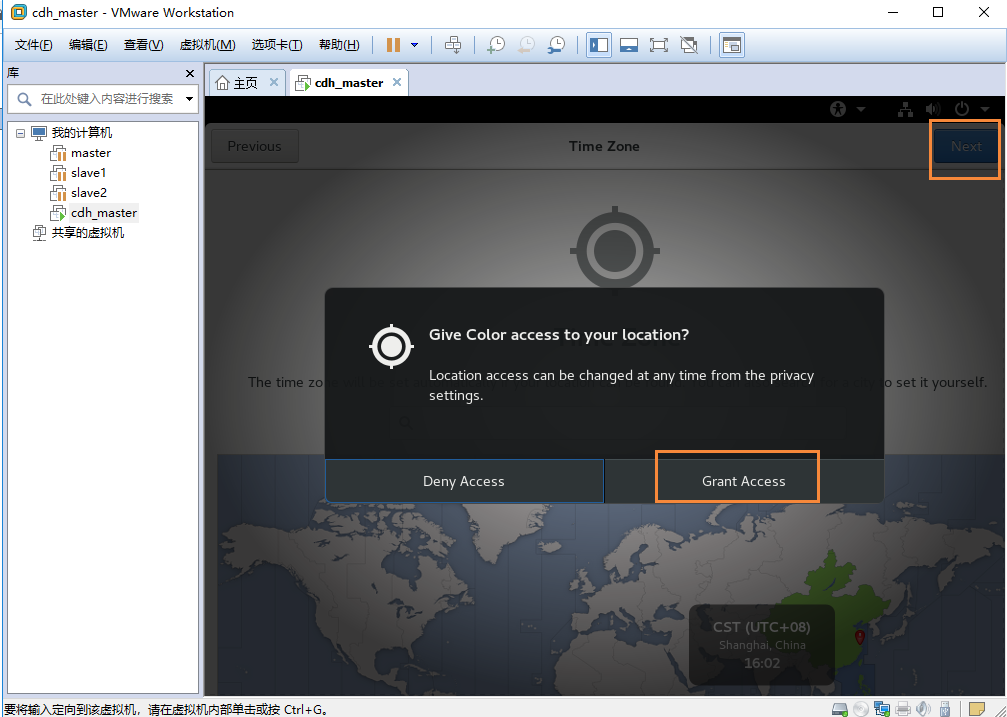

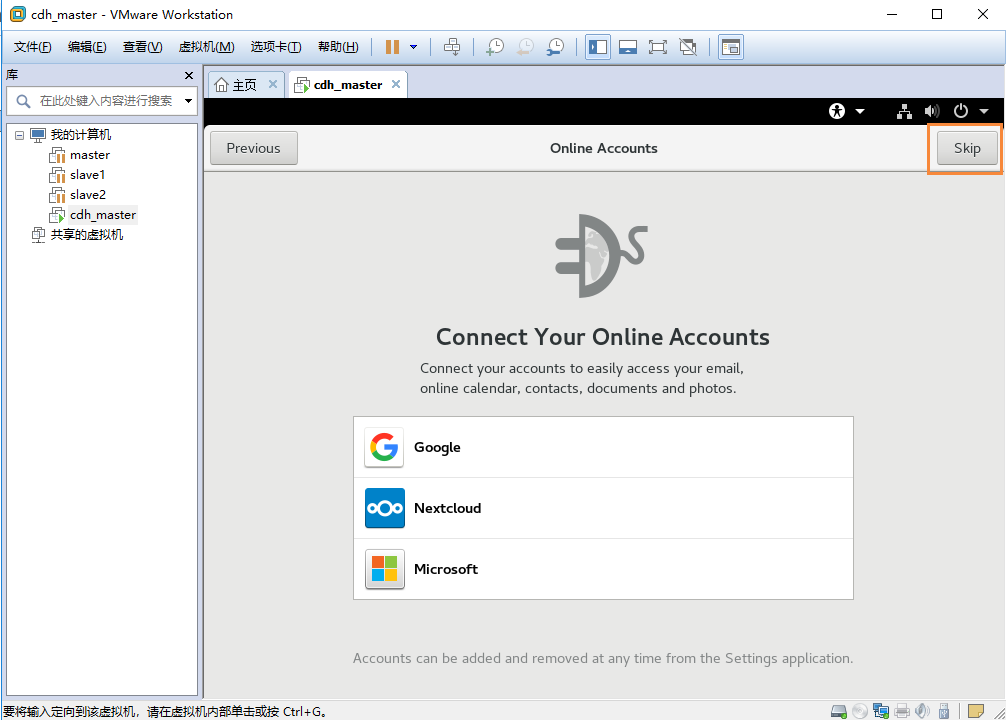

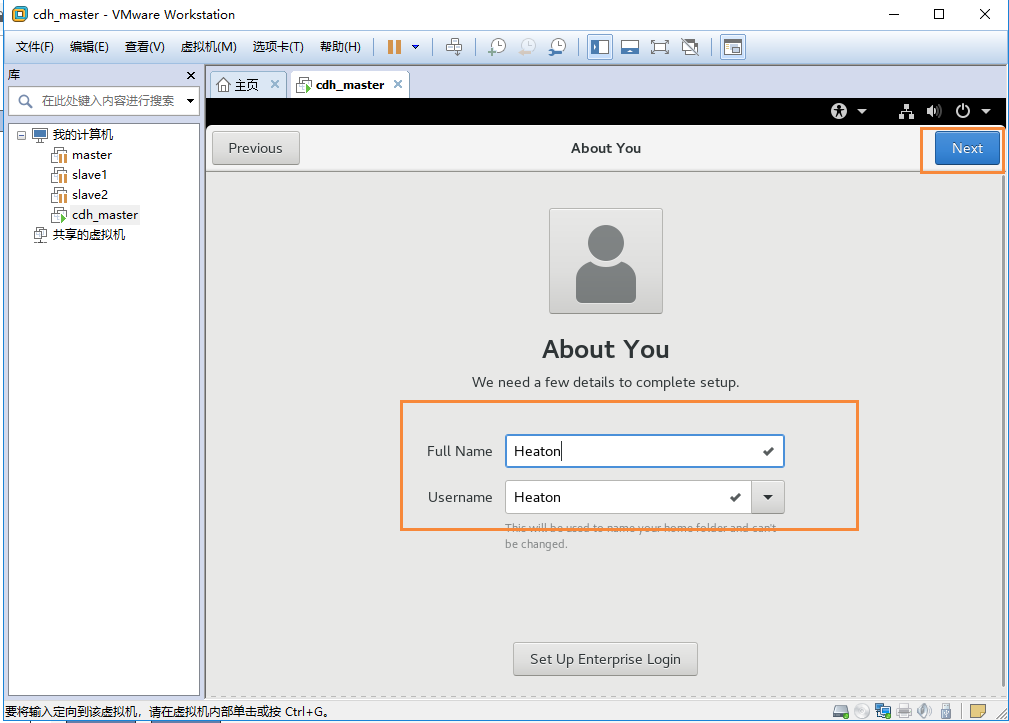

2.10 Accept the license agreement and complete the remaining settings

3 Configure the IP Address

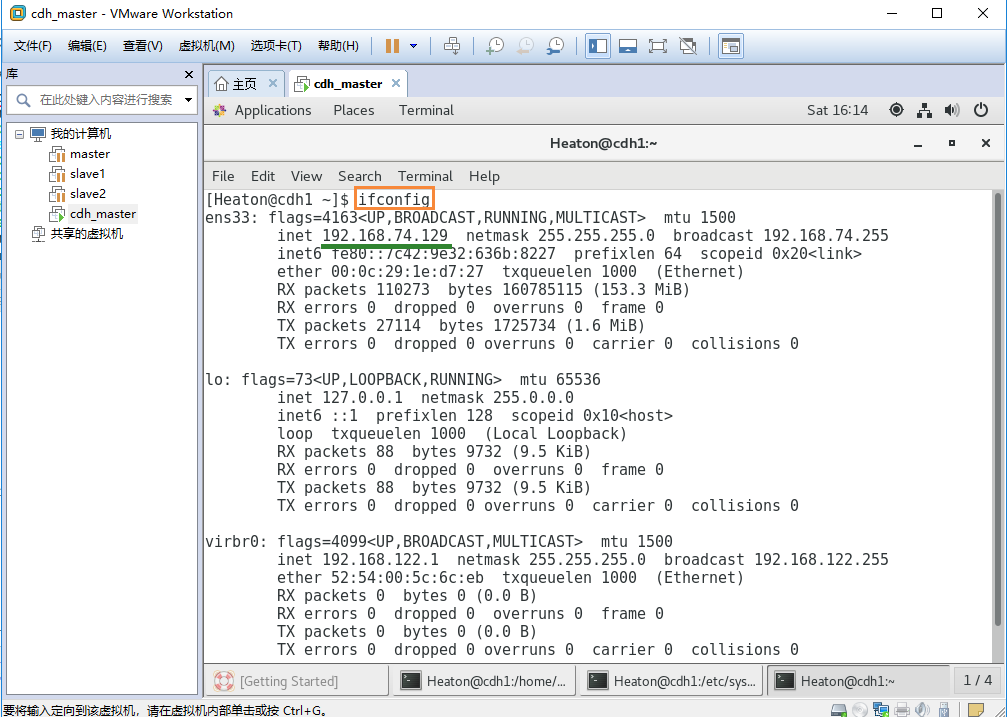

3.1 Check the current IP address

ifconfig

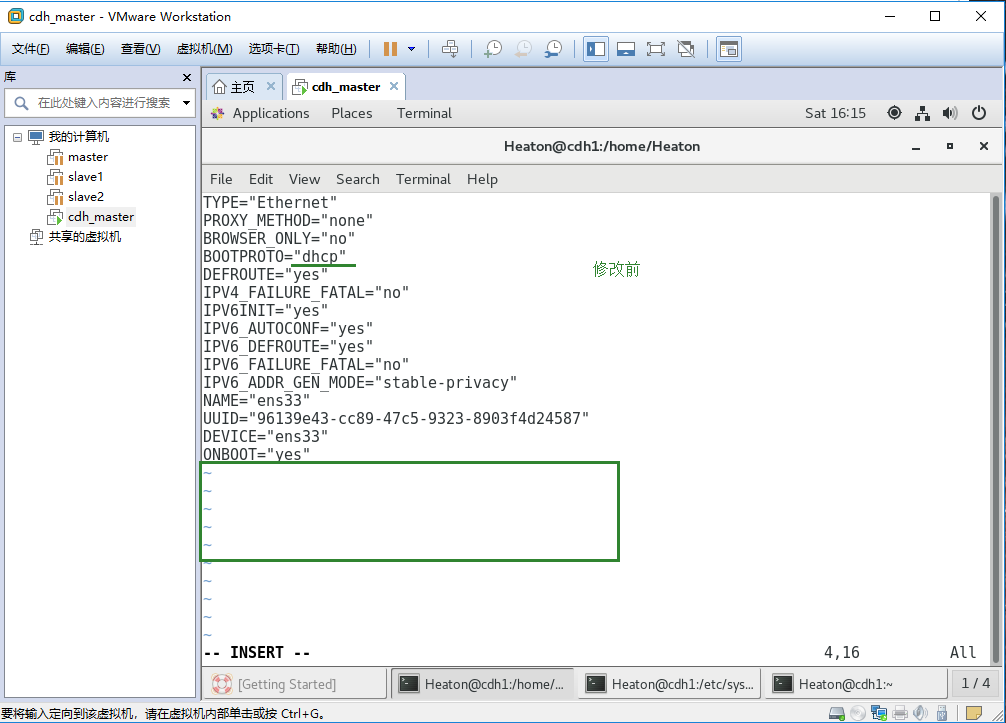

3.2 Edit the network configuration file

#Switch to root (you will be asked for the password)

su root

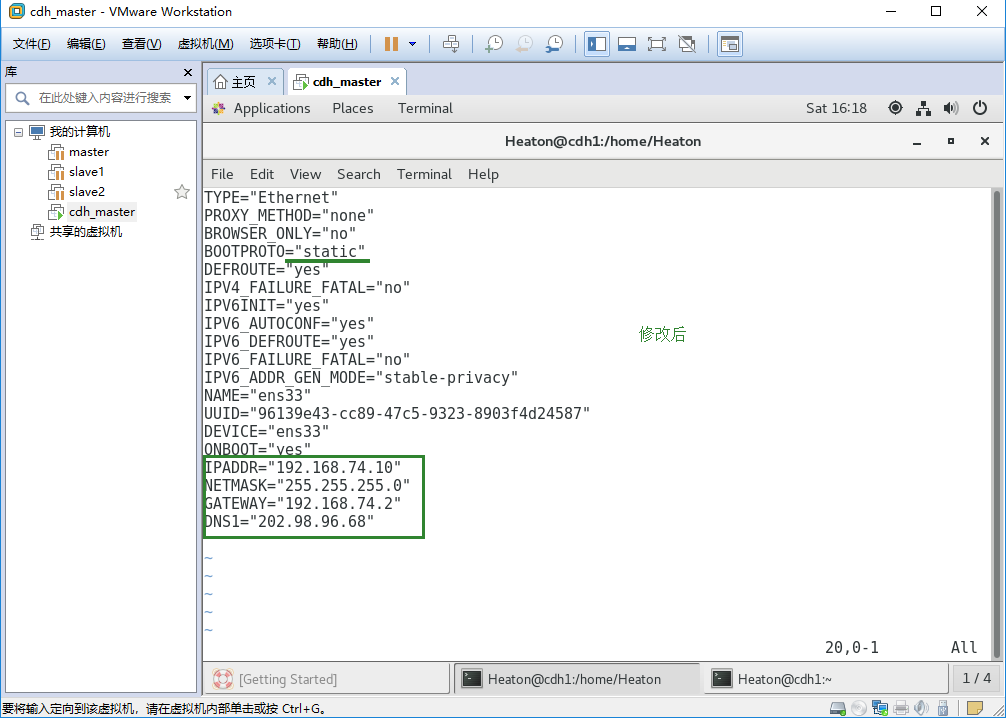

#Edit the configuration file as shown below

vim /etc/sysconfig/network-scripts/ifcfg-ens33

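#Add the following lines (IPADDR is 192.168.74.10, .11 and .12 on the three machines; the file must also contain BOOTPROTO="static" and ONBOOT="yes")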

IPADDR="192.168.74.10"

NETMASK="255.255.255.0"

GATEWAY="192.168.74.2"

DNS1="202.98.96.68"

#End of added lines

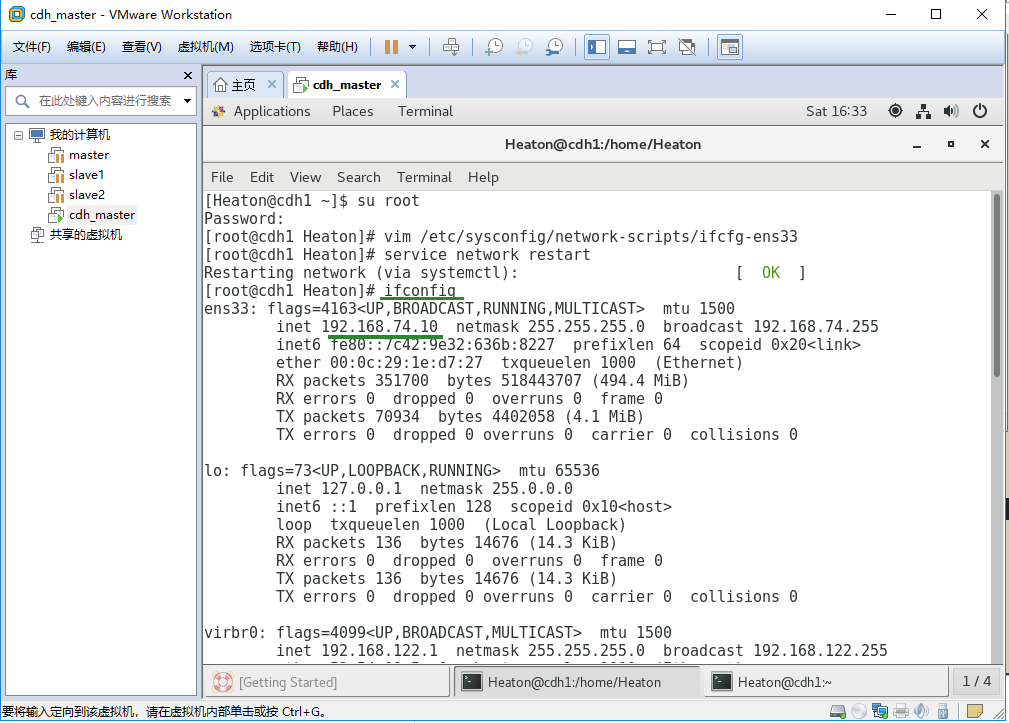

#After editing the file, restart the network service

service network restart
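Editing ifcfg-ens33 by hand on three machines makes small mistakes easy. The two helpers below, `set_kv` and `configure_static_ip`, are a sketch only. Both names are hypothetical and not part of CentOS. They set each key from this section, either replacing an existing line (including a commented-out one) or appending a new one. Re-running them therefore does not create duplicates. They assume GNU sed, as shipped with CentOS 7, and the 192.168.74.x subnet, gateway and DNS used above.

```shell
# Hypothetical helper: set KEY="VALUE" in a shell-style config file.
# An existing (possibly commented-out) KEY= line is replaced in place;
# otherwise the assignment is appended. Requires GNU sed (-i, -E).
set_kv() {
  local file="$1" key="$2" value="$3"
  if grep -Eq "^#?[[:space:]]*${key}=" "$file"; then
    sed -i -E "s|^#?[[:space:]]*${key}=.*|${key}=\"${value}\"|" "$file"
  else
    printf '%s="%s"\n' "$key" "$value" >> "$file"
  fi
}

# Hypothetical wrapper: apply this guide's static-IP settings.
# $1: ifcfg file, $2: last octet of this node's address (10, 11 or 12).
configure_static_ip() {
  local file="$1" octet="$2"
  set_kv "$file" BOOTPROTO static
  set_kv "$file" ONBOOT yes
  set_kv "$file" IPADDR "192.168.74.${octet}"
  set_kv "$file" NETMASK 255.255.255.0
  set_kv "$file" GATEWAY 192.168.74.2
  set_kv "$file" DNS1 202.98.96.68
}
```

For example, on the second node, run these commands as root:

- `cp /etc/sysconfig/network-scripts/ifcfg-ens33 /root/ifcfg-ens33.bak`
- `configure_static_ip /etc/sysconfig/network-scripts/ifcfg-ens33 11`
- `service network restart`

Keys such as DEVICE and UUID are left untouched.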

3.3 Check the IP address again

ifconfig

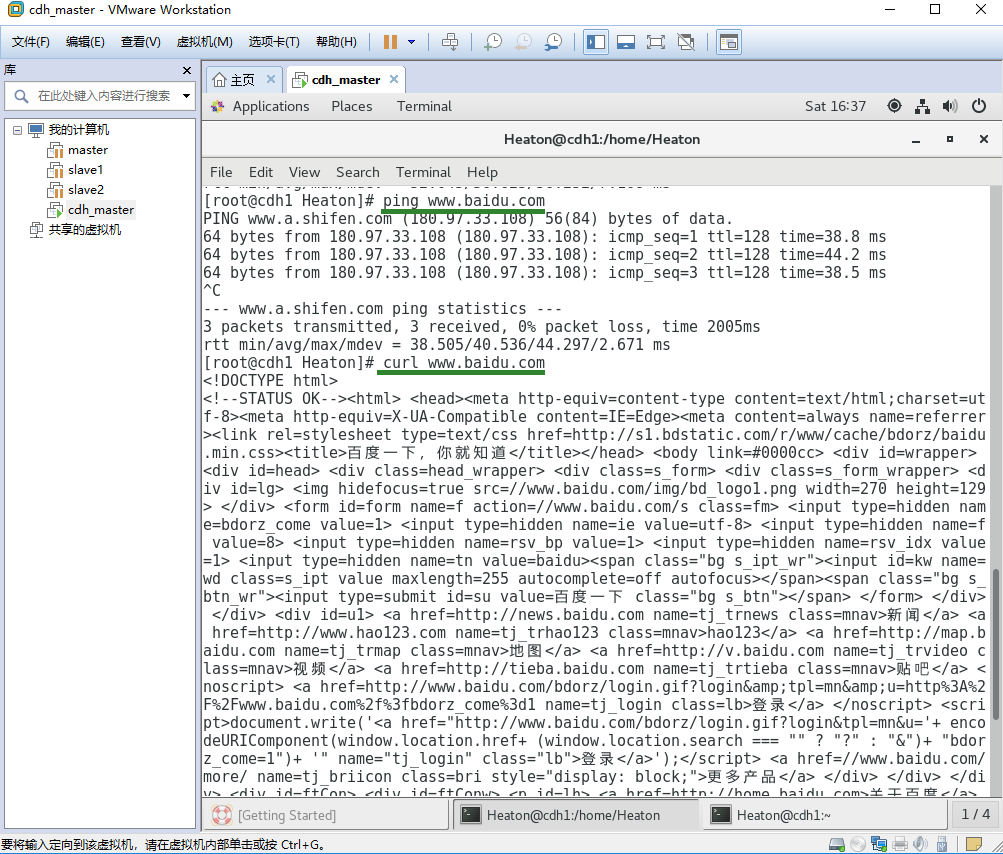

3.4 Verify network connectivity (for example, ping -c 3 192.168.74.2 to reach the gateway)

3.5 Permanently disable NetworkManager so that the static configuration is enforced

#Stop NetworkManager

systemctl stop NetworkManager

#Disable NetworkManager at boot

systemctl disable NetworkManager

#Restart the network

systemctl restart network.service

4 Disable the Firewall

4.1 Disable firewalld

#Check the firewall status

systemctl status firewalld.service

#Stop the firewall for the current session

systemctl stop firewalld.service

#Prevent firewalld from starting at boot

systemctl disable firewalld.service

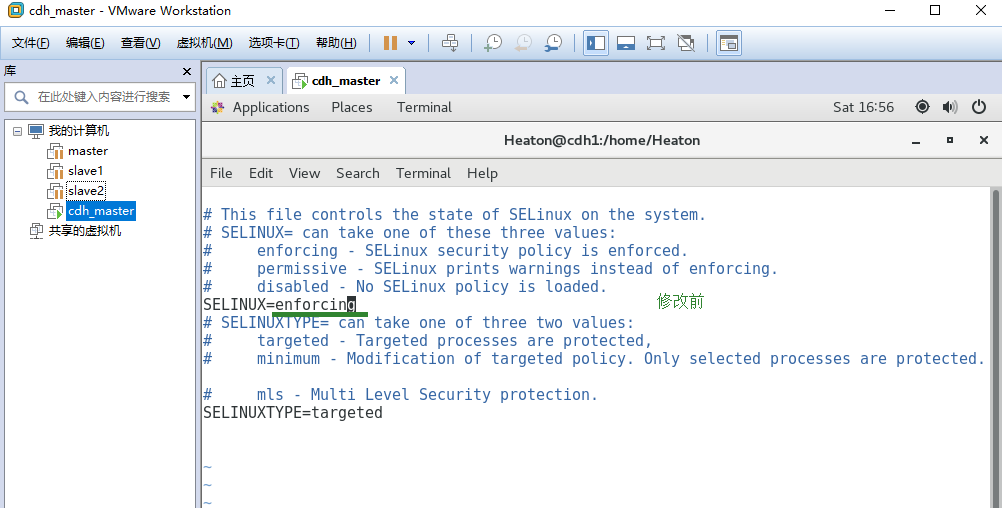

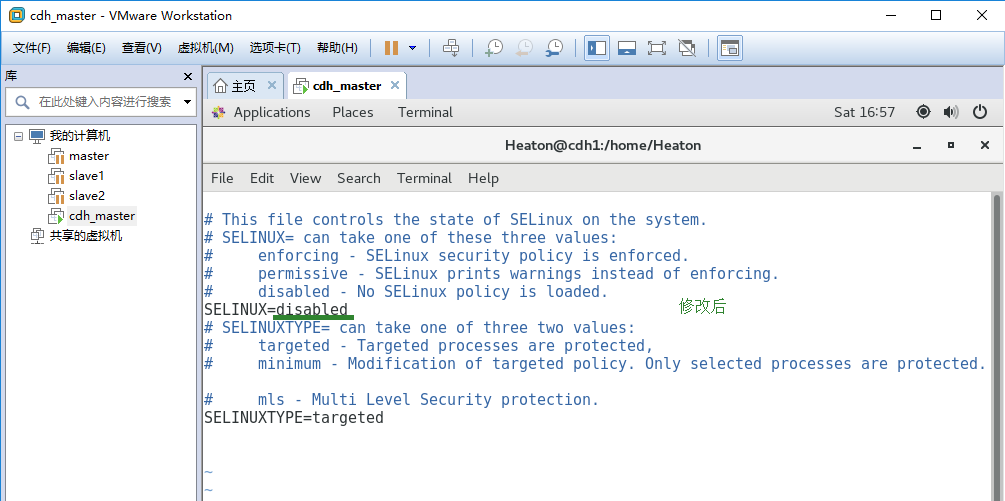

4.2 Disable SELinux

#Disable SELinux for the current session (switches to permissive mode)

setenforce 0

getenforce

#Disable SELinux permanently (set SELINUX=disabled in this file; takes effect after a reboot)

vim /etc/selinux/config

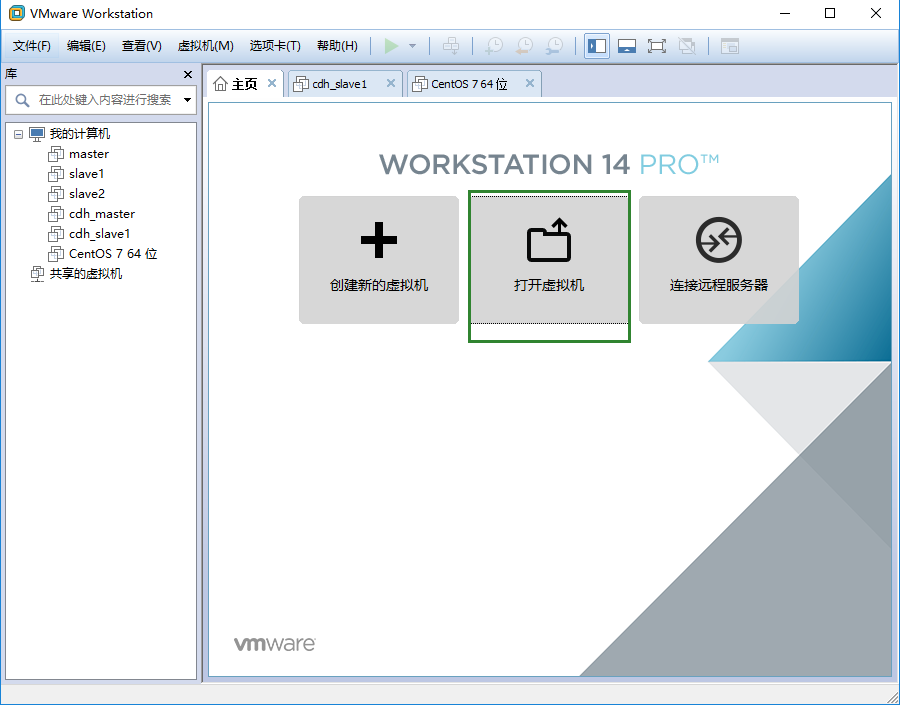
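Here is a minimal sketch of the permanent change, assuming the stock /etc/selinux/config layout. `disable_selinux` is a hypothetical helper name. It rewrites only the active `SELINUX=` line; the `SELINUXTYPE=` line does not match the pattern. It then prints the result for checking. The new value takes effect after a reboot; `setenforce 0` above covers the current session.

```shell
# Hypothetical helper: set SELINUX=disabled in the given config file
# (default /etc/selinux/config) and print the resulting line.
disable_selinux() {
  local cfg="${1:-/etc/selinux/config}"
  # ^SELINUX= cannot match SELINUXTYPE=, so that line is left alone.
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
  grep '^SELINUX=' "$cfg"
}
```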

5 Prepare the Other Nodes

5.1 Repeat the four steps above to build the other nodes, keeping their IPs in the same subnet

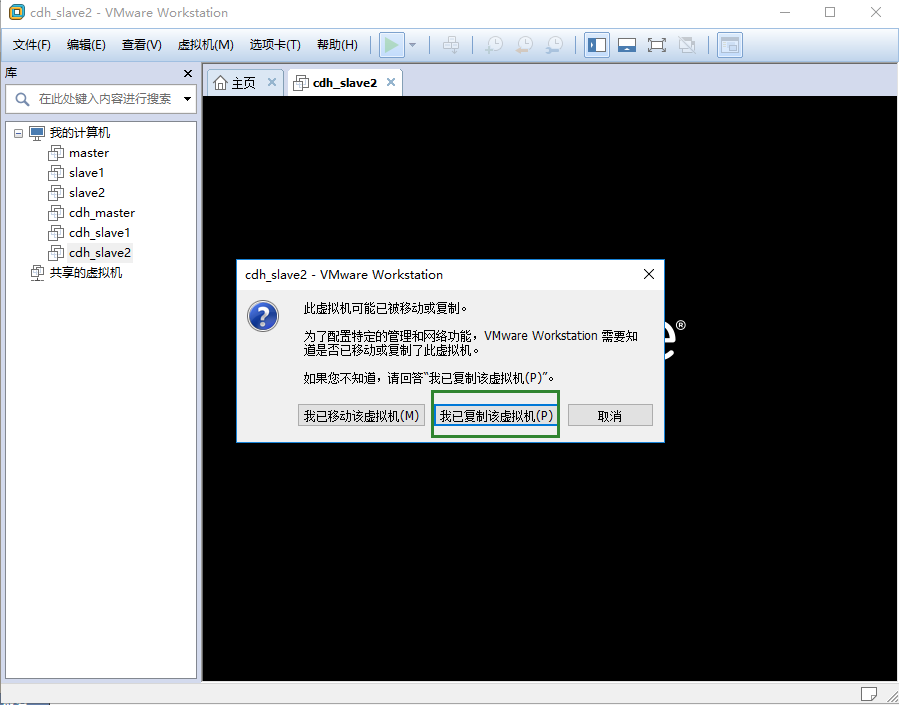

5.2 Several identically configured VMs can be created by cloning: copy the source VM's directory and use the copy as the target VM

5.3 Change the IP address as described in section 3 (Configure the IP Address); no two VMs may share an address

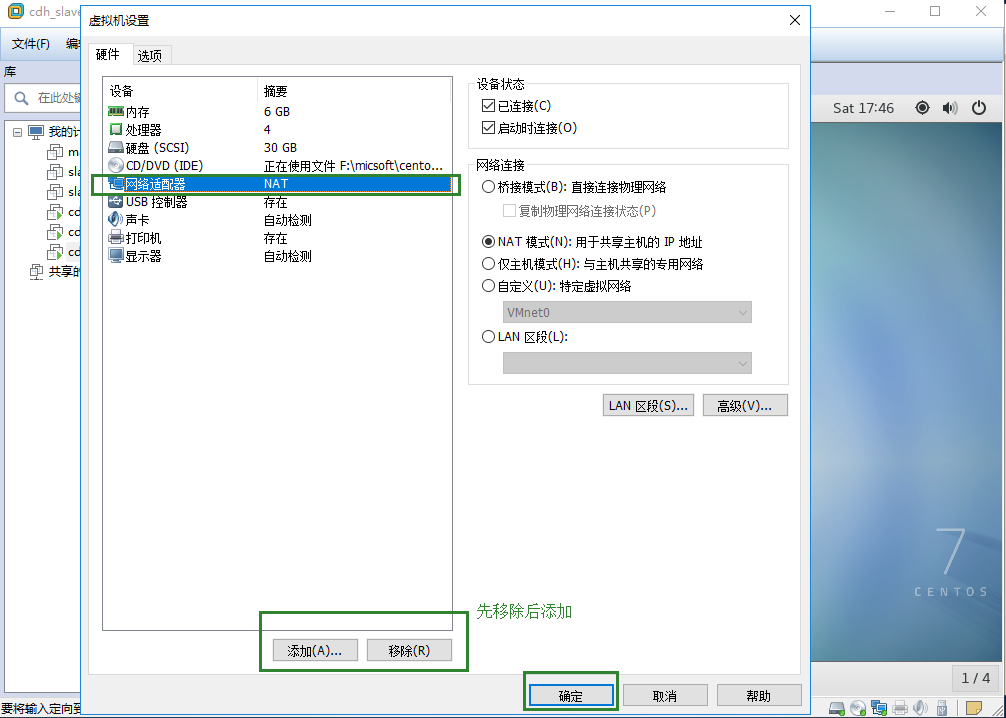

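5.4 The cloned network adapters conflict. Remove the network adapter from both the source and target VMs and add it again; it will be configured automatically.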

5.5 Check that each machine can reach the Internet; configuration is then complete

6 Hostnames and IP Mapping

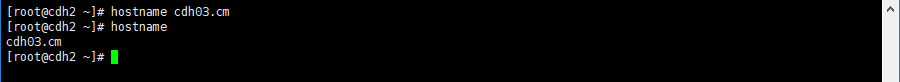

6.1 Change the hostname temporarily (takes effect immediately)

#Show the current hostname

hostname

#Change the hostname (on each of the 3 machines)

#On cdh01

hostname cdh01.cm

#On cdh02

hostname cdh02.cm

#On cdh03

hostname cdh03.cm

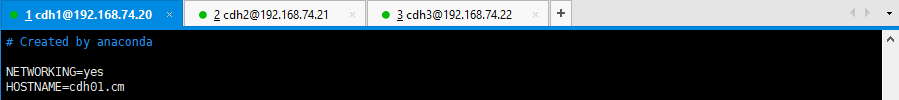

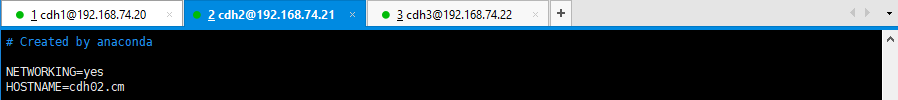

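6.2 Change the hostname permanently (takes effect after reboot). Note: on CentOS 7 the persistent hostname is stored in /etc/hostname, which hostnamectl set-hostname cdh01.cm updates; the HOSTNAME entry below is the older CentOS 6 style.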

#On cdh01

vim /etc/sysconfig/network

#Add the following:

NETWORKING=yes

HOSTNAME=cdh01.cm

#On cdh02

vim /etc/sysconfig/network

#Add the following:

NETWORKING=yes

HOSTNAME=cdh02.cm

#On cdh03

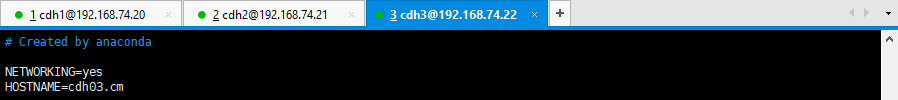

vim /etc/sysconfig/network

#Add the following:

NETWORKING=yes

HOSTNAME=cdh03.cm

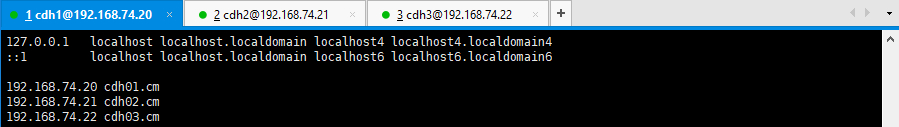

6.3 Map IPs to hostnames (check with ping afterwards)

#On cdh01 through cdh03

vim /etc/hosts

#Add the following:

192.168.74.10 cdh01.cm

192.168.74.11 cdh02.cm

192.168.74.12 cdh03.cm
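Appending the same lines twice to /etc/hosts is harmless but messy. The sketch below uses the hypothetical name `add_cluster_hosts` and adds each pair only if the hostname is not already present. It assumes the addresses set as IPADDR in section 3.2 (192.168.74.10, .11 and .12); edit the list if yours differ.

```shell
# Hypothetical helper: append the cluster's IP/hostname pairs to a hosts
# file (default /etc/hosts), skipping hostnames that are already listed.
add_cluster_hosts() {
  local hosts_file="${1:-/etc/hosts}"
  local entry ip name
  for entry in "192.168.74.10 cdh01.cm" "192.168.74.11 cdh02.cm" "192.168.74.12 cdh03.cm"; do
    ip=${entry% *}      # text before the space
    name=${entry#* }    # text after the space
    # -F: treat the dots literally; -w: match the hostname as a whole word.
    if ! grep -qwF "$name" "$hosts_file"; then
      echo "$ip $name" >> "$hosts_file"
    fi
  done
}
```

Run `add_cluster_hosts` as root on each of the three nodes, then check with `ping -c 1 cdh02.cm`.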

7 Passwordless SSH

All nodes

#Generate a key pair (public and private key); press Enter three times to accept the defaults

ssh-keygen -t rsa

#View the public key

cat /root/.ssh/id_rsa.pub

Master node

#Write the master's public key to /root/.ssh/authorized_keys

cat /root/.ssh/id_rsa.pub > /root/.ssh/authorized_keys

chmod 600 /root/.ssh/authorized_keys

#Append the other nodes' keys on the master (you will be prompted to confirm and enter passwords; check the file afterwards)

ssh cdh02.cm cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

ssh cdh03.cm cat /root/.ssh/id_rsa.pub >> /root/.ssh/authorized_keys

cat /root/.ssh/authorized_keys

#Copy the combined file to the worker nodes

scp /root/.ssh/authorized_keys root@cdh02.cm:/root/.ssh/authorized_keys

scp /root/.ssh/authorized_keys root@cdh03.cm:/root/.ssh/authorized_keys

On every node, test SSH to all nodes (no password should be requested)

ssh cdh01.cm

ssh cdh02.cm

ssh cdh03.cm
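The master-side steps above can be sketched as one small function. It collects every id_rsa.pub, writes a single authorized_keys and sets mode 600. `merge_authorized_keys` is a hypothetical name. It assumes the workers' public keys were first copied to the master, for example with `scp root@cdh02.cm:/root/.ssh/id_rsa.pub /tmp/cdh02.pub`. `sort -u` drops repeated keys, so the step can be re-run. Running OpenSSH's `ssh-copy-id root@cdh02.cm` from each node is a common alternative.

```shell
# Hypothetical helper: merge public key files into one authorized_keys
# file with the 600 permissions sshd expects.
# $1: output file, remaining args: public key files to merge.
merge_authorized_keys() {
  local out="$1"; shift
  # sort -u removes duplicate keys; -o writes the result to the output file.
  sort -u -o "$out" "$@"
  chmod 600 "$out"
}
```

For example, run `merge_authorized_keys /root/.ssh/authorized_keys /root/.ssh/id_rsa.pub /tmp/cdh02.pub /tmp/cdh03.pub`, then distribute the file with scp as above.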

8 Time Synchronization (requires ntp)

Master node

#Install ntp with yum

yum -y install ntp

#Check the current time

date

#Synchronize the clock once

ntpdate pool.ntp.org

#Check the ntpd service

service ntpd status

#Start ntpd now

service ntpd start

#Enable ntpd at boot

chkconfig ntpd on

Worker nodes (install the client first: yum -y install ntpdate)

Use a crontab job

crontab -e

Add the following job (synchronizes with the master every minute):

0-59/1 * * * * /usr/sbin/ntpdate cdh01.cm

9 Configure a Local yum Repository (requires httpd; read the installation part first and download the files it needs)

Master node

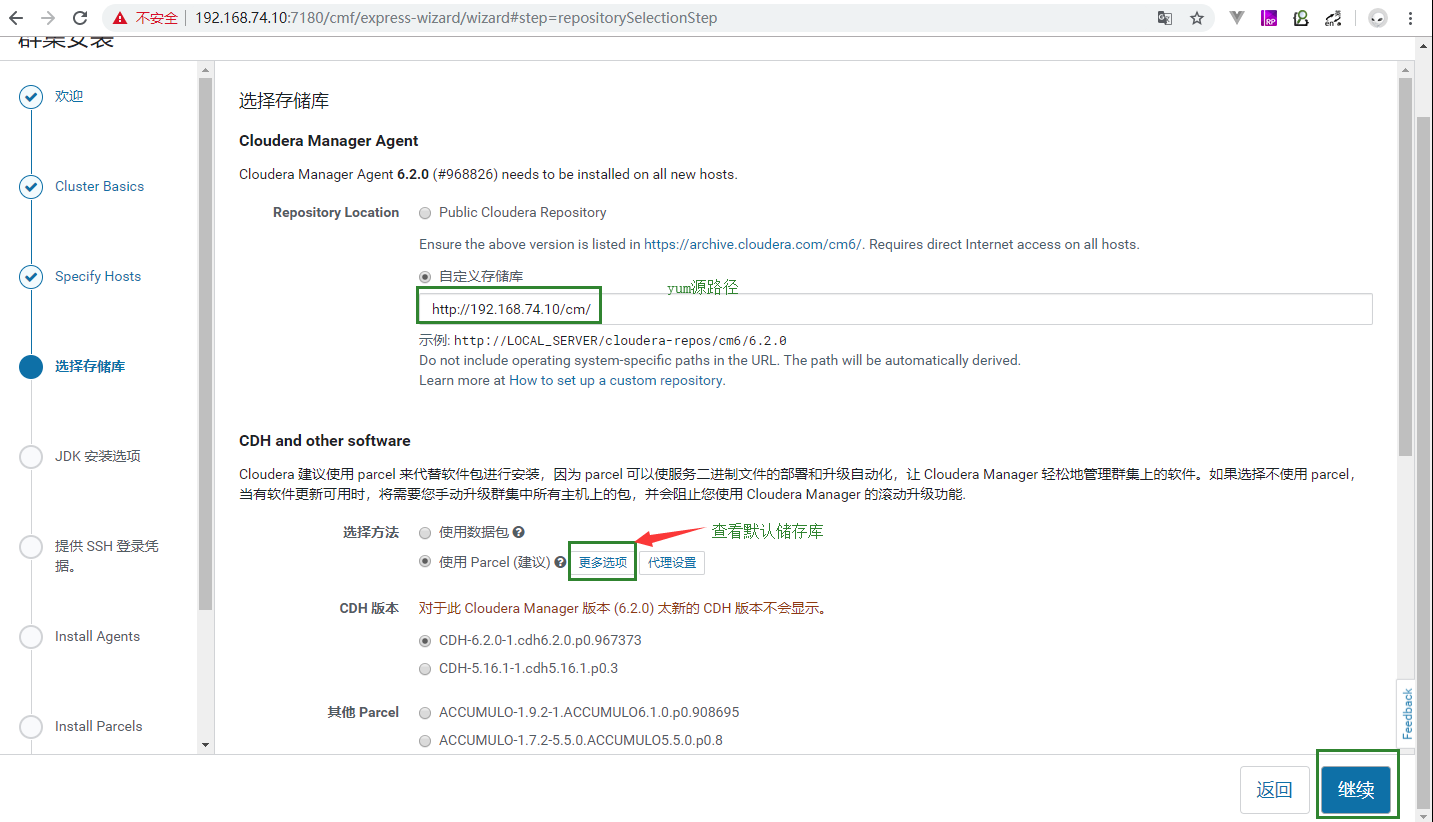

9.1 Install httpd, create a cm directory under its web root to hold the packages, and check it in a browser

#Install httpd

yum -y install httpd

#Check the httpd status

systemctl status httpd.service

#Start httpd

service httpd start

#Enable httpd at boot

chkconfig httpd on

9.2 Install yum-utils and createrepo

#Install yum-utils and createrepo

yum -y install yum-utils createrepo

#Go to the web root that will serve the repository

cd /var/www/html/

#Create the cm directory

mkdir cm

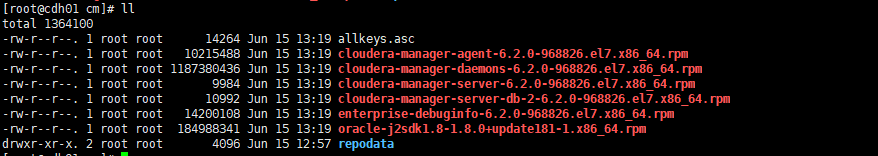

9.3 Copy the downloaded packages into /var/www/html/cm/ (see the CM+CDH installation part)

#Once the packages are in place, generate the repository metadata

createrepo /var/www/html/cm/

9.4 Create the local .repo file

vim /etc/yum.repos.d/cloudera-manager.repo

#Add the following content

[cloudera-manager]

name=Cloudera Manager, Version yum

baseurl=http://192.168.74.10/cm

gpgcheck=0

enabled=1

- Refresh the yum metadata

yum clean all

yum makecache

Verify

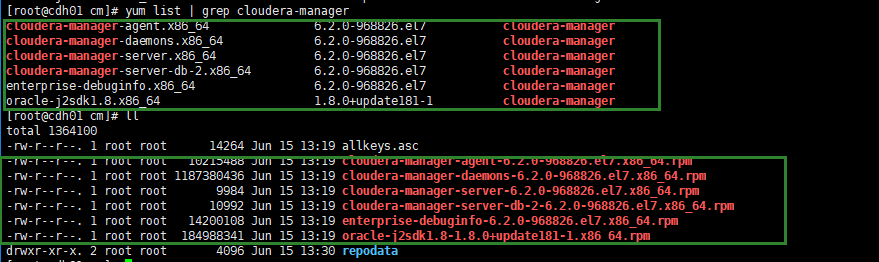

yum list | grep cloudera-manager
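The same .repo file is written on the master and then copied to the workers, so it can also be generated from a heredoc. This sketch assumes the httpd host 192.168.74.10 from the baseurl above. `write_cm_repo` is a hypothetical name. The target directory is a parameter, so you can inspect the output before it goes into /etc/yum.repos.d.

```shell
# Hypothetical helper: write cloudera-manager.repo into a directory.
# $1: target directory (default /etc/yum.repos.d)
# $2: host serving /var/www/html/cm over HTTP (default 192.168.74.10)
write_cm_repo() {
  local repo_dir="${1:-/etc/yum.repos.d}"
  local repo_host="${2:-192.168.74.10}"
  cat > "$repo_dir/cloudera-manager.repo" <<EOF
[cloudera-manager]
name=Cloudera Manager, Version yum
baseurl=http://${repo_host}/cm
gpgcheck=0
enabled=1
EOF
}
```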

9.5 Distribute the repo file to the worker nodes

scp /etc/yum.repos.d/cloudera-manager.repo root@cdh02.cm:/etc/yum.repos.d/

scp /etc/yum.repos.d/cloudera-manager.repo root@cdh03.cm:/etc/yum.repos.d/

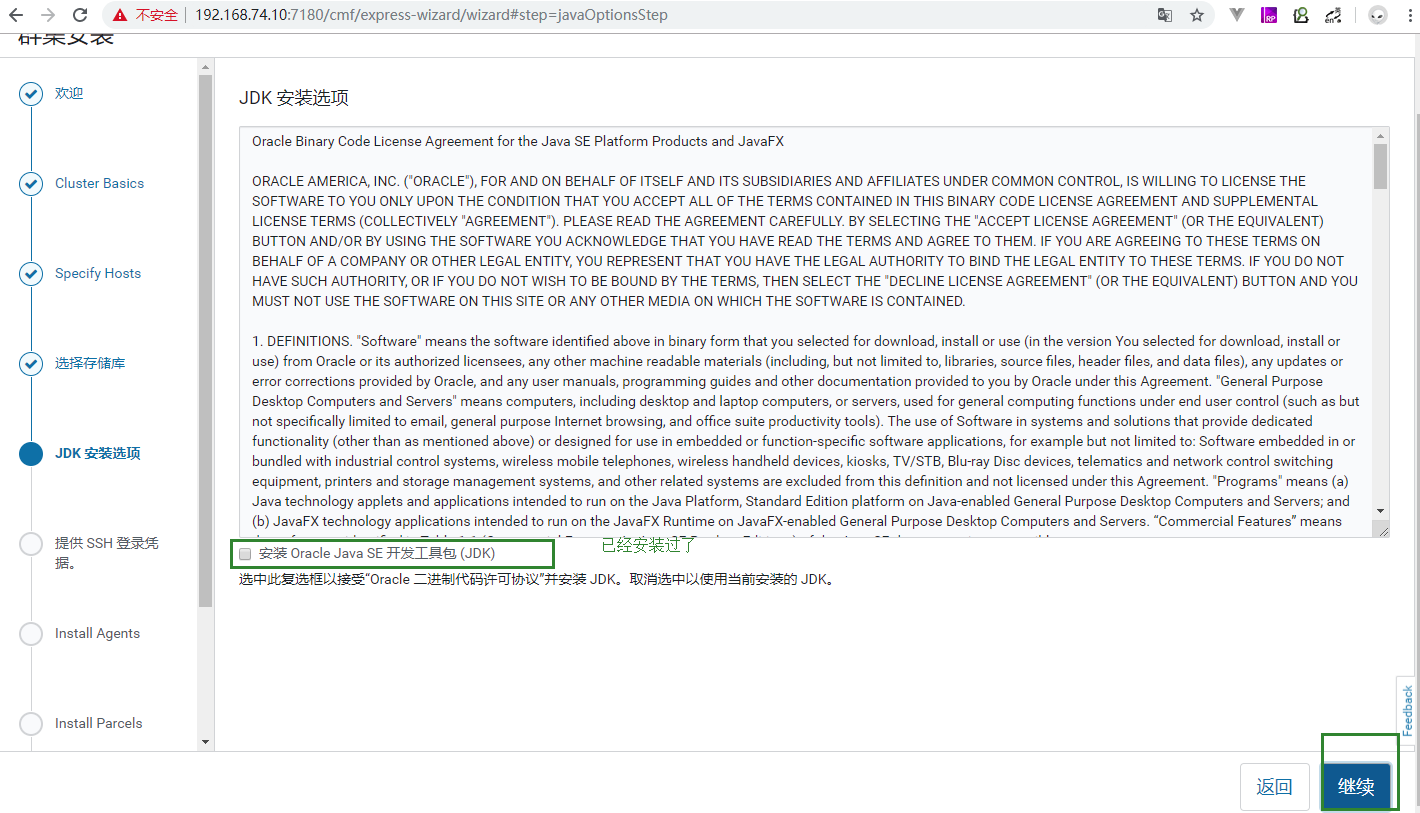

10 Install the JDK (all nodes)

#List installed Java packages

rpm -qa | grep java

#Remove a package

rpm -e --nodeps xxx

Upload oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm to every node and install it

rpm -ivh oracle-j2sdk1.8-1.8.0+update181-1.x86_64.rpm

Edit the profile

vim /etc/profile

#Add

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

Reload the profile

source /etc/profile

Verify

java

javac
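Instead of appending to /etc/profile, you can put the same three exports in their own file under /etc/profile.d. On CentOS 7, /etc/profile sources that directory for login shells. This keeps the change easy to find and to remove. The sketch uses the hypothetical name `write_java_env`. The quoted heredoc delimiter stops `$JAVA_HOME` and `$PATH` from being expanded while the file is written.

```shell
# Hypothetical helper: write the JDK environment to its own profile file.
# $1: target file (default /etc/profile.d/java.sh)
write_java_env() {
  local target="${1:-/etc/profile.d/java.sh}"
  # 'EOF' is quoted, so the variables are written literally.
  cat > "$target" <<'EOF'
export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera
export PATH=$JAVA_HOME/bin:$PATH
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
EOF
}
```

Run `write_java_env` as root on each node, then run `source /etc/profile.d/java.sh` and `java -version`. Use either this file or the /etc/profile edit, not both.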

11 Install MySQL

MySQL download: https://pan.baidu.com/s/1oaWnAi9J2swKnViAnF0cKQ (extraction code: 164h)

First, upload mysql-connector-java-5.1.47.jar to /usr/share/java on every server (create the directory if it does not exist) and rename it to mysql-connector-java.jar. If you skip the rename, later steps will fail.

Master node

1. Extract mysql-5.7.11-linux-glibc2.5-x86_64.tar.gz into /usr/local and rename the extracted directory to mysql

2. Create the binlog archive, data, and temp directories, create the mysql user, and set ownership

cd /usr/local

mkdir mysql/arch mysql/data mysql/tmp

useradd mysql

chown -R mysql:mysql /usr/local/mysql

yum -y install perl perl-devel
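The my.cnf in the next step points `relay-log` at /usr/local/mysql/relay_log, but the mkdir above does not create that directory. The sketch below uses the hypothetical name `prepare_mysql_dirs`. It creates all four directories and then hands the tree to the mysql user. Creating relay_log up front is a precaution so that mysqld never has to write into a missing directory. The `id` check skips the chown when the user does not exist yet.

```shell
# Hypothetical helper: create every directory the my.cnf refers to.
# $1: MySQL base directory (default /usr/local/mysql)
# $2: owning user (default mysql)
prepare_mysql_dirs() {
  local base="${1:-/usr/local/mysql}" owner="${2:-mysql}" sub
  for sub in arch data tmp relay_log; do
    mkdir -p "$base/$sub"
  done
  if id "$owner" >/dev/null 2>&1; then
    # "user:" (trailing colon) also sets the group to the user's login group.
    chown -R "$owner:" "$base"
  fi
}
```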

3. Edit the configuration file

vi /etc/my.cnf

(Delete the existing contents and use the following configuration)

[client]

port=3306

socket=/usr/local/mysql/data/mysql.sock

default-character-set=utf8mb4

[mysqld]

port=3306

socket=/usr/local/mysql/data/mysql.sock

skip-slave-start

skip-external-locking

key_buffer_size=256M

sort_buffer_size=2M

read_buffer_size=2M

read_rnd_buffer_size=4M

query_cache_size=32M

max_allowed_packet=16M

myisam_sort_buffer_size=128M

tmp_table_size=32M

table_open_cache=512

thread_cache_size=8

wait_timeout=86400

interactive_timeout=86400

max_connections=600

# Try number of CPU's*2 for thread_concurrency

#thread_concurrency=32

#isolation level and default engine

default-storage-engine=INNODB

transaction-isolation=READ-COMMITTED

server-id=1739

basedir=/usr/local/mysql

datadir=/usr/local/mysql/data

pid-file=/usr/local/mysql/data/hostname.pid

#open performance schema

log-warnings

sysdate-is-now

binlog_format=ROW

log_bin_trust_function_creators=1

log-error=/usr/local/mysql/data/hostname.err

log-bin=/usr/local/mysql/arch/mysql-bin

expire_logs_days=7

innodb_write_io_threads=16

relay-log=/usr/local/mysql/relay_log/relay-log

relay-log-index=/usr/local/mysql/relay_log/relay-log.index

relay_log_info_file=/usr/local/mysql/relay_log/relay-log.info

log_slave_updates=1

gtid_mode=OFF

enforce_gtid_consistency=OFF

# slave

slave-parallel-type=LOGICAL_CLOCK

slave-parallel-workers=4

master_info_repository=TABLE

relay_log_info_repository=TABLE

relay_log_recovery=ON

#other logs

#general_log=1

#general_log_file=/usr/local/mysql/data/general_log.err

#slow_query_log=1

#slow_query_log_file=/usr/local/mysql/data/slow_log.err

#for replication slave

sync_binlog=500

#for innodb options

innodb_data_home_dir=/usr/local/mysql/data/

innodb_data_file_path=ibdata1:1G;ibdata2:1G:autoextend

innodb_log_group_home_dir=/usr/local/mysql/arch

innodb_log_files_in_group=4

innodb_log_file_size=1G

innodb_log_buffer_size=200M

#Adjust the buffer pool size to production needs

innodb_buffer_pool_size=2G

#innodb_additional_mem_pool_size=50M #deprecated in 5.6

tmpdir=/usr/local/mysql/tmp

innodb_lock_wait_timeout=1000

#innodb_thread_concurrency=0

innodb_flush_log_at_trx_commit=2

innodb_locks_unsafe_for_binlog=1

#innodb io features: add for mysql5.5.8

performance_schema

innodb_read_io_threads=4

innodb-write-io-threads=4

innodb-io-capacity=200

#purge threads change default(0) to 1 for purge

innodb_purge_threads=1

innodb_use_native_aio=on

#case-sensitive file names and separate tablespace

innodb_file_per_table=1

lower_case_table_names=1

[mysqldump]

quick

max_allowed_packet=128M

[mysql]

no-auto-rehash

default-character-set=utf8mb4

[mysqlhotcopy]

interactive-timeout

[myisamchk]

key_buffer_size=256M

sort_buffer_size=256M

read_buffer=2M

write_buffer=2M

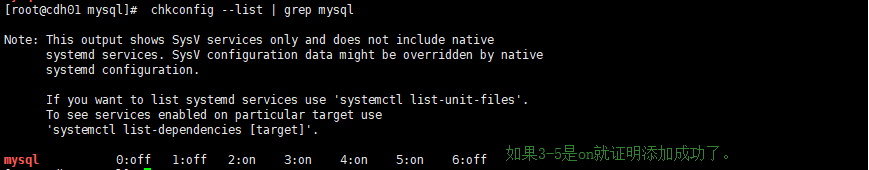

4. Register the service and enable it at boot

cd /usr/local/mysql

#Copy the init script to init.d under the name mysql

cp support-files/mysql.server /etc/rc.d/init.d/mysql

#Make it executable

chmod +x /etc/rc.d/init.d/mysql

#Remove any existing registration of the service

chkconfig --del mysql

#Register the service

chkconfig --add mysql

chkconfig --level 345 mysql on

#Create a symlink for the mysql client

ln -s /usr/local/mysql/bin/mysql /usr/bin/

5. Initialize the MySQL data directory

bin/mysqld \

--defaults-file=/etc/my.cnf \

--user=mysql \

--basedir=/usr/local/mysql/ \

--datadir=/usr/local/mysql/data/ \

--initialize

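If you initialize with --initialize-insecure, a root@localhost account with an empty password is created. Otherwise root@localhost gets a random password, which is written to the log-error file.

(In 5.6 the password was stored in ~/.mysql_secret instead, which is easy to miss if you are not familiar with it.)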

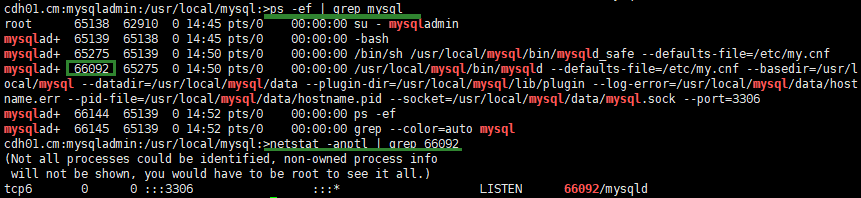

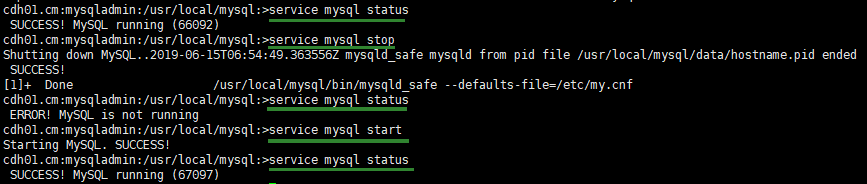

6. Start MySQL

#Press Enter after running the command below

/usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my.cnf &

#Start MySQL (if the command above did not start it)

service mysql start

Other service commands: service mysql status, service mysql stop, service mysql restart

7. Find the temporary root password

cat data/hostname.err |grep password
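When `--initialize` generates the password, MySQL 5.7 writes a line ending in `A temporary password is generated for root@localhost: <password>` to the error log. The sketch below prints only the password, assuming the log-error path from the my.cnf above. `mysql_temp_password` is a hypothetical helper name.

```shell
# Hypothetical helper: print the temporary root password from the error log.
# $1: error log (default: log-error from this guide's my.cnf)
mysql_temp_password() {
  local log="${1:-/usr/local/mysql/data/hostname.err}"
  # Use the most recent matching line; the password is its last field.
  grep 'temporary password' "$log" | tail -n 1 | awk '{print $NF}'
}
```

For example, run `mysql -u root -p"$(mysql_temp_password)"`.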

8. Log in and change the root password

mysql -u root -p

#Enter the password found in the log

#Set a new password

set password for 'root'@'localhost'=password('root');

#Allow remote access

grant all privileges on *.* to 'root'@'%' identified by 'root' with grant option;

use mysql

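#Remove the remaining root@localhost entry so only root@'%' is left (restricting to user='root' keeps system accounts such as mysql.sys@localhost)

delete from user where user='root' and host!='%';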

#Reload privileges

flush privileges;

#Exit

quit

9. Log in and create the databases and users that CM needs

| Service | Database | User |
|---|---|---|
| Cloudera Manager Server | scm | scm |
| Activity Monitor | amon | amon |
| Reports Manager | rman | rman |
| Hue | hue | hue |
| Hive Metastore Server | metastore | hive |
| Sentry Server | sentry | sentry |
| Cloudera Navigator Audit Server | nav | nav |
| Cloudera Navigator Metadata Server | navms | navms |
| Oozie | oozie | oozie |

mysql -u root -p

#Enter the new password

#Skip the scm database and its grant for now; they are created automatically when the database is specified later

#CREATE DATABASE scm DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE amon DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE rman DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE metastore DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE hue DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

CREATE DATABASE oozie DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

#GRANT ALL ON scm.* TO 'scm'@'%' IDENTIFIED BY 'scm';

GRANT ALL ON amon.* TO 'amon'@'%' IDENTIFIED BY 'amon';

GRANT ALL ON rman.* TO 'rman'@'%' IDENTIFIED BY 'rman';

GRANT ALL ON metastore.* TO 'hive'@'%' IDENTIFIED BY 'hive';

GRANT ALL ON hue.* TO 'hue'@'%' IDENTIFIED BY 'hue';

GRANT ALL ON oozie.* TO 'oozie'@'%' IDENTIFIED BY 'oozie';

#####Also grant access for the MySQL host's own hostname (cdh01.cm in this guide); otherwise the web configuration easily fails

GRANT ALL ON amon.* TO 'amon'@'cdh01.cm' IDENTIFIED BY 'amon';

#Reload privileges

FLUSH PRIVILEGES;

#Check that the grants are correct

show grants for 'amon'@'%';

show grants for 'rman'@'%';

show grants for 'hive'@'%';

show grants for 'hue'@'%';

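show grants for 'oozie'@'%';

#Fix garbled Chinese comments in Hive: run these in the metastore database after Hive has been installed and has created its metastore tables

use metastore;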

alter table COLUMNS_V2 modify column COMMENT varchar(256) character set utf8;

alter table TABLE_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

alter table PARTITION_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

alter table PARTITION_KEYS modify column PKEY_COMMENT varchar(4000) character set utf8;

alter table INDEX_PARAMS modify column PARAM_VALUE varchar(4000) character set utf8;

#Exit

quit

#Restart MySQL

service mysql restart
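The CREATE DATABASE / GRANT pairs in step 9 follow the same pattern for each row of the service table. You can therefore generate them and review them before running. The sketch below makes the same assumptions as the text: each user's password equals the user name, and scm is left for scm_prepare_database.sh. `cm_db_sql` is a hypothetical name. `IF NOT EXISTS` is added so that a re-run does not fail on existing databases.

```shell
# Hypothetical helper: print the SQL for the CM service databases.
# Each pair is database:user, taken from the service table above.
cm_db_sql() {
  local pair db user
  for pair in amon:amon rman:rman metastore:hive hue:hue oozie:oozie; do
    db=${pair%%:*}
    user=${pair##*:}
    echo "CREATE DATABASE IF NOT EXISTS ${db} DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;"
    echo "GRANT ALL ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${user}';"
  done
  echo "FLUSH PRIVILEGES;"
}
```

Inspect the output with `cm_db_sql`, then apply it with `cm_db_sql | mysql -u root -p`. MySQL 5.7 accepts GRANT ... IDENTIFIED BY, but MySQL 8.0 removed it.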

12 Common Packages: gcc, g++ and Other Build Dependencies (deferred)

yum -y install chkconfig python bind-utils psmisc libxslt zlib sqlite cyrus-sasl-plain cyrus-sasl-gssapi fuse fuse-libs redhat-lsb postgresql* portmap mod_ssl openssl openssl-devel python-psycopg2 MySQL-python python-devel telnet pcre-devel gcc gcc-c++

CM+CDH Installation

13 CM+CDH Installation

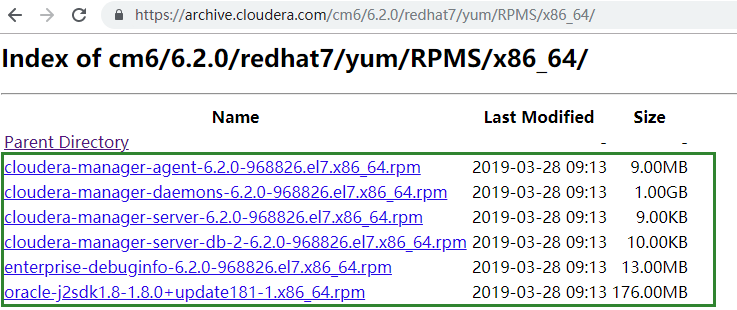

13.1 CM download: https://archive.cloudera.com/cm6/

- CM on Baidu Netdisk: https://pan.baidu.com/s/1W2urUqeOPE4_cJP-IVXxGw (extraction code: r1h8)

13.2 Install the daemons, agent, and server packages with yum

Master node

yum list | grep cloudera-manager

yum -y install cloudera-manager-daemons cloudera-manager-agent cloudera-manager-server

Worker nodes

yum list | grep cloudera-manager

yum -y install cloudera-manager-daemons cloudera-manager-agent

13.3 Upload cloudera-manager-installer.bin to /usr/software on the master node and make it executable

mkdir /usr/software

cd /usr/software

chmod +x cloudera-manager-installer.bin

13.4 CDH download: https://archive.cloudera.com/cdh6/6.2.0/parcels/

- CDH on Baidu Netdisk: https://pan.baidu.com/s/13T9cHx8VVm2Xf8KxvweJWg (extraction code: hnki)

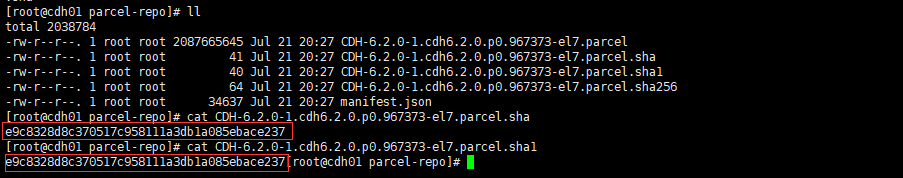

13.5 Configure the local parcel repository

Upload the downloaded CDH parcel files (1.3) to the parcel repository folder

cd /opt/cloudera/parcel-repo/

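Write the parcel's SHA-1 into the .sha file that Cloudera Manager expects (compare the value with the published checksum to confirm that the download is complete)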

sha1sum CDH-6.2.0-1.cdh6.2.0.p0.967373-el7.parcel | awk '{ print $1 }' > CDH-6.2.0-1.cdh6.2.0.p0.967373-el7.parcel.sha
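The command above only records the local SHA-1 in the .sha file that Cloudera Manager reads; it does not check anything. The sketch below compares against the published value first and writes the .sha file only on a match. `verify_parcel` is a hypothetical name. It assumes you copied the expected hash from the checksum file published next to the parcel on archive.cloudera.com.

```shell
# Hypothetical helper: verify a parcel's SHA-1, then write <parcel>.sha.
# $1: parcel file, $2: expected SHA-1 (40 hex characters)
verify_parcel() {
  local parcel="$1" expected="$2" actual
  actual=$(sha1sum "$parcel" | awk '{print $1}')
  if [ "$actual" != "$expected" ]; then
    echo "checksum mismatch: $actual != $expected" >&2
    return 1
  fi
  echo "$actual" > "${parcel}.sha"
}
```

For example, assuming the published checksum was saved as CDH-6.2.0-1.cdh6.2.0.p0.967373-el7.parcel.sha1, run `verify_parcel CDH-6.2.0-1.cdh6.2.0.p0.967373-el7.parcel "$(awk '{print $1}' CDH-6.2.0-1.cdh6.2.0.p0.967373-el7.parcel.sha1)"`.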

13.6 Run the installer

./cloudera-manager-installer.bin

rm -f /etc/cloudera-scm-server/db.properties

#Run the installer again (answer yes to every prompt)

./cloudera-manager-installer.bin

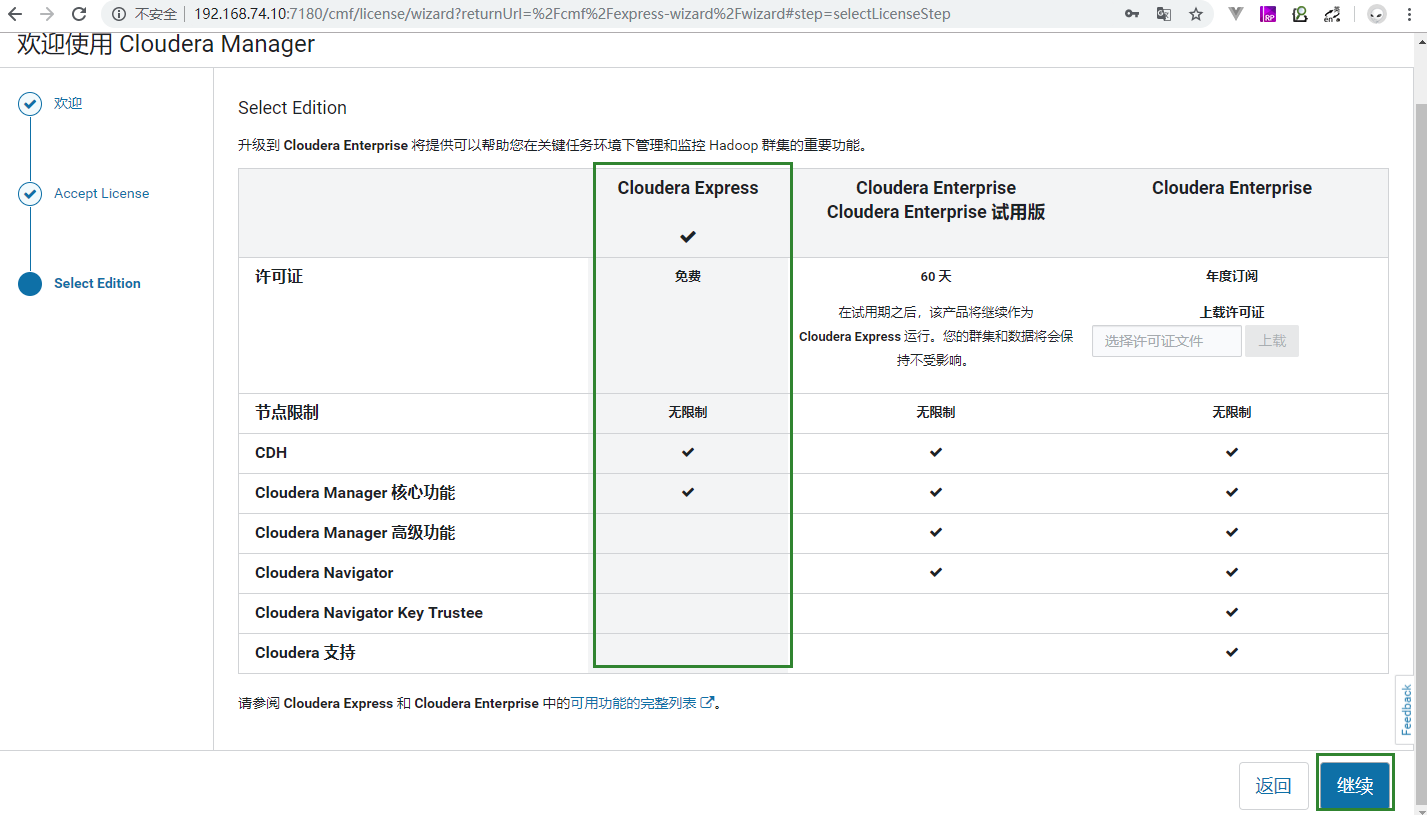

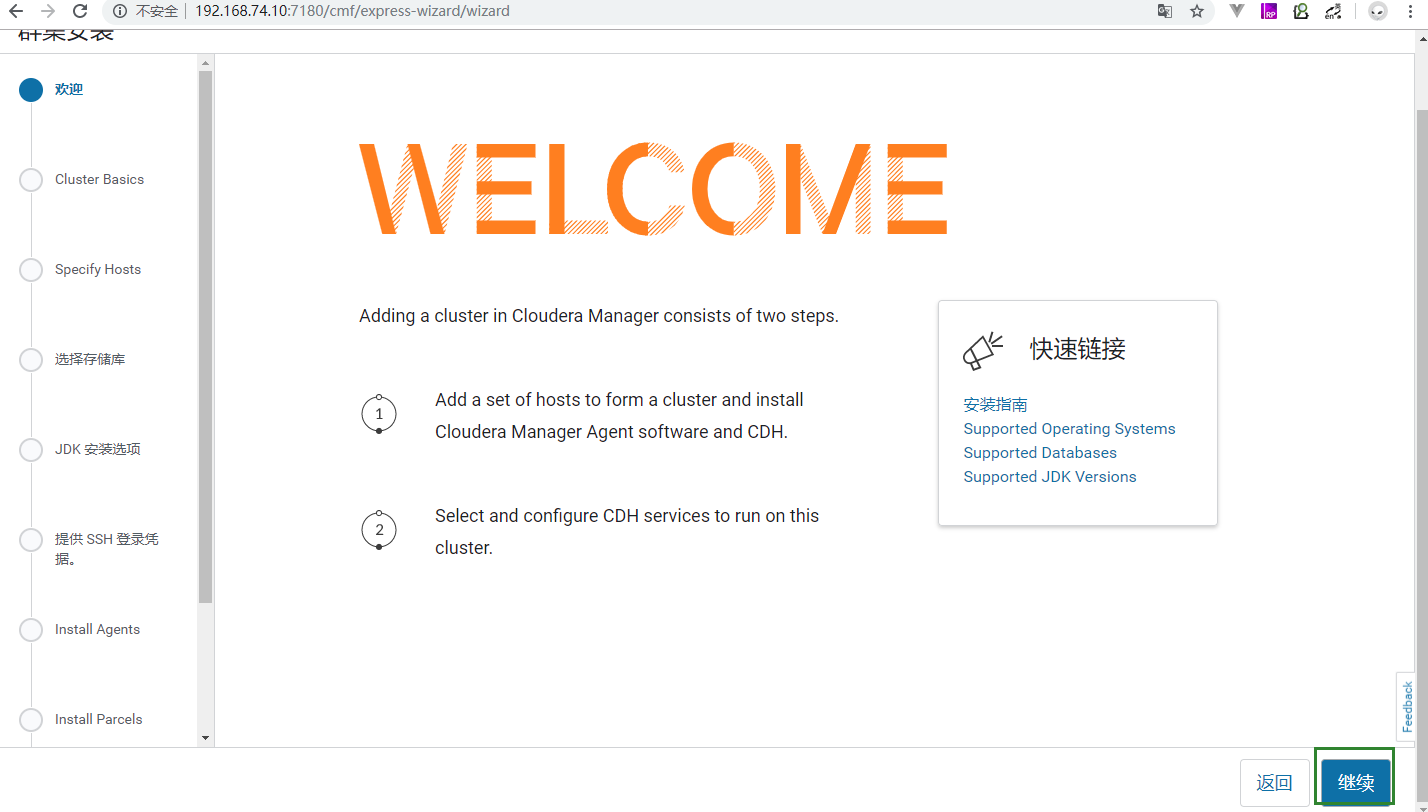

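Installation is now complete. You can open the web UI at port 7180 on the master's IP and log in with admin/admin, but do not log in and configure anything yet. First switch the database connection to MySQL and restart cloudera-scm-server, as follows: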

- 1) Run scm_prepare_database.sh

#Set up the Cloudera Manager database

/opt/cloudera/cm/schema/scm_prepare_database.sh mysql -uroot -p'root' scm scm scm

#Log in to MySQL

mysql -uroot -proot

GRANT ALL ON scm.* TO 'scm'@'%' IDENTIFIED BY 'scm';

FLUSH PRIVILEGES;

show grants for 'scm'@'%';

quit

- 2) Stop the Cloudera Manager services

service cloudera-scm-server stop

service cloudera-scm-server-db stop

- 3) Remove the configuration for the embedded default PostgreSQL database

rm -f /etc/cloudera-scm-server/db.mgmt.properties

- 4) Start the Cloudera Manager service

service cloudera-scm-server start

- If something goes wrong, check the log

vim /var/log/cloudera-scm-server/cloudera-scm-server.log

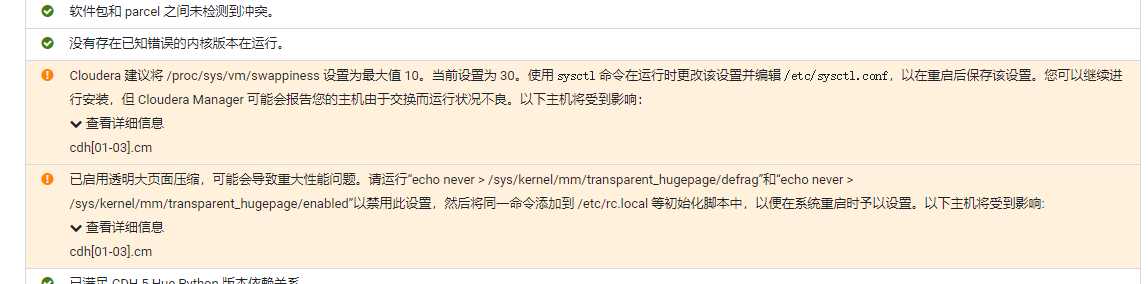

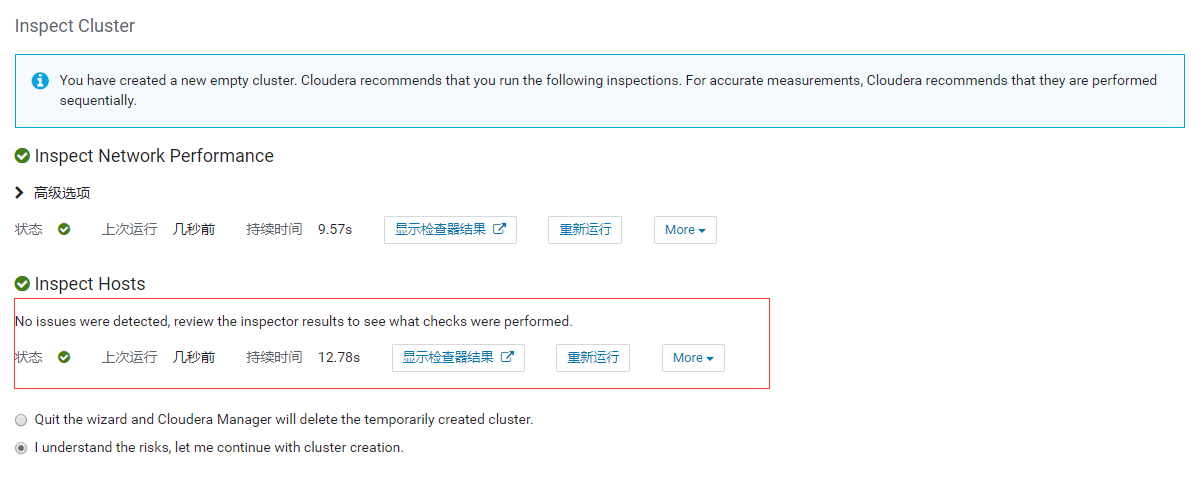

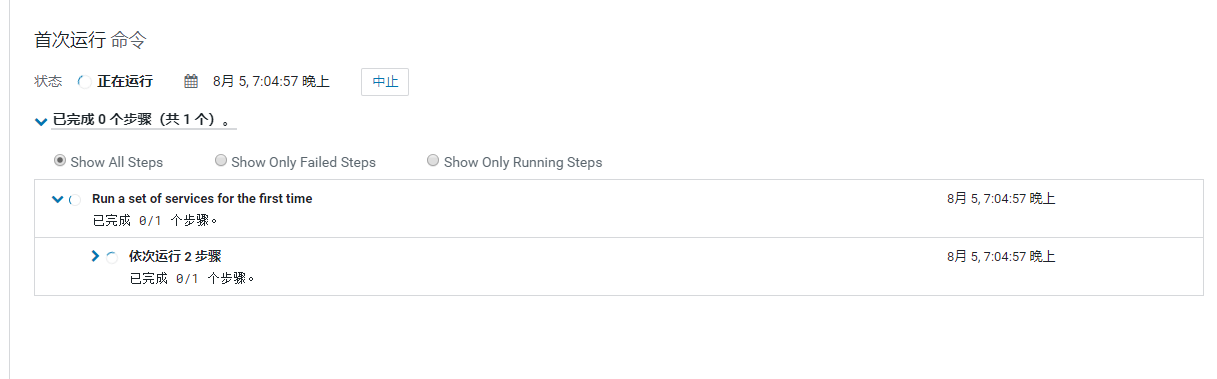

13.7 Install CDH through CM (account: admin, password: admin)

- Apply the following fixes on every machine

#Issue 1: vm.swappiness

#Temporary

sysctl vm.swappiness=10

#Permanent

echo 'vm.swappiness=10'>> /etc/sysctl.conf

#Issue 2: transparent huge pages

echo never > /sys/kernel/mm/transparent_hugepage/defrag

echo never > /sys/kernel/mm/transparent_hugepage/enabled

#Add the Issue 2 commands to rc.local and copy it to the other machines

vim /etc/rc.local

scp /etc/rc.local root@xxxx:/etc/
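You can wrap the sysctl.conf and rc.local edits for each machine in one idempotent sketch. `persist_host_tuning` is a hypothetical name. It does three things:

- sets `vm.swappiness=10`, replacing any existing value;
- appends the two transparent-huge-page lines to rc.local only if they are missing;
- makes rc.local executable, because CentOS 7 runs /etc/rc.d/rc.local at boot only when it has the execute bit.

The file paths are parameters, so you can try it on copies first.

```shell
# Hypothetical helper: persist the swappiness and THP settings.
# $1: sysctl config (default /etc/sysctl.conf)
# $2: rc.local (default /etc/rc.d/rc.local)
persist_host_tuning() {
  local sysctl_conf="${1:-/etc/sysctl.conf}" rc_local="${2:-/etc/rc.d/rc.local}"
  local f line
  # Issue 1: replace an existing vm.swappiness line, or append one.
  if grep -q '^vm\.swappiness' "$sysctl_conf"; then
    sed -i 's/^vm\.swappiness.*/vm.swappiness=10/' "$sysctl_conf"
  else
    echo 'vm.swappiness=10' >> "$sysctl_conf"
  fi
  # Issue 2: disable transparent huge pages at every boot.
  for f in defrag enabled; do
    line="echo never > /sys/kernel/mm/transparent_hugepage/$f"
    grep -qxF "$line" "$rc_local" || echo "$line" >> "$rc_local"
  done
  chmod +x "$rc_local"
}
```

Run `persist_host_tuning` as root on every node, or copy rc.local as above. Then run `sysctl -p` to load the swappiness value without rebooting.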

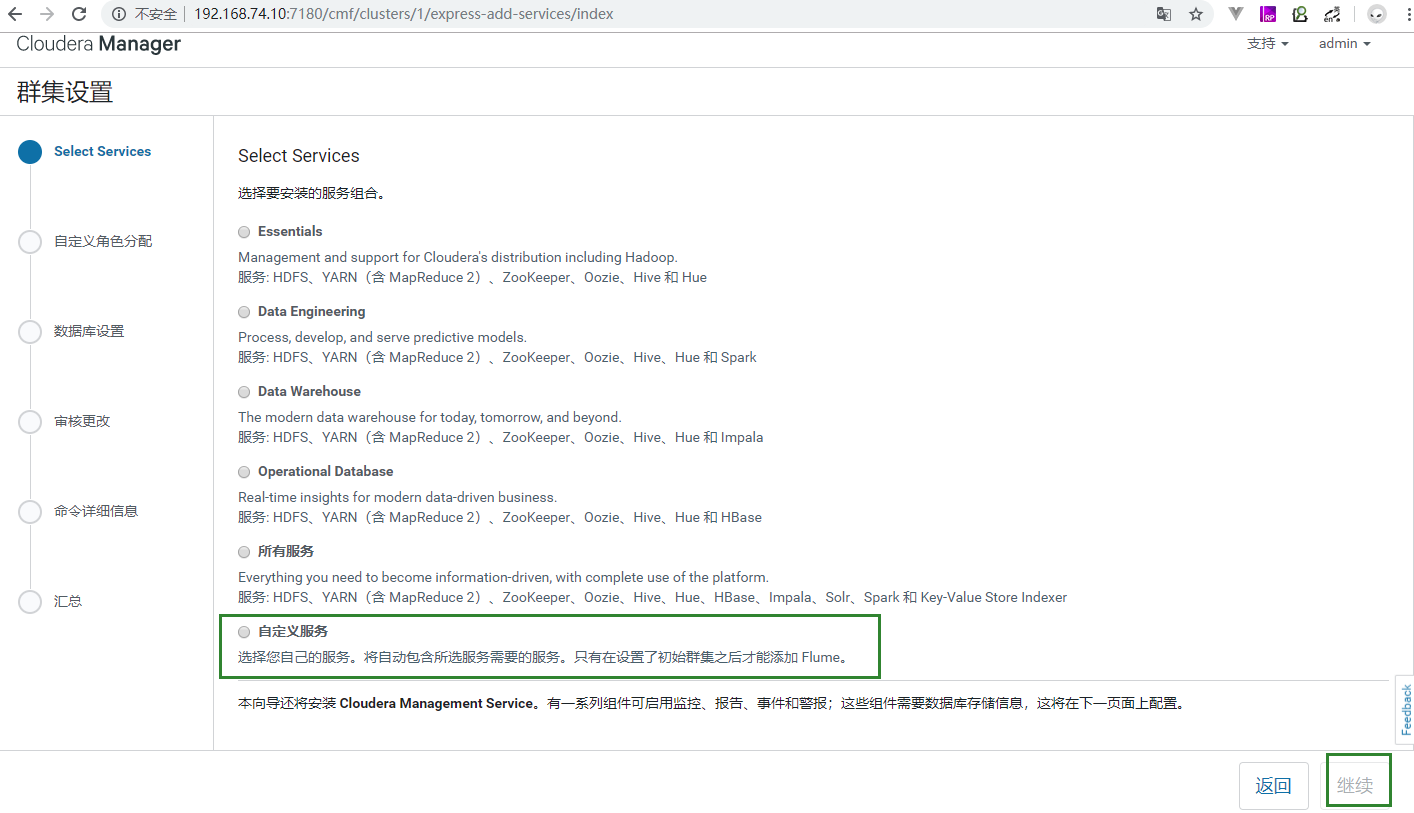

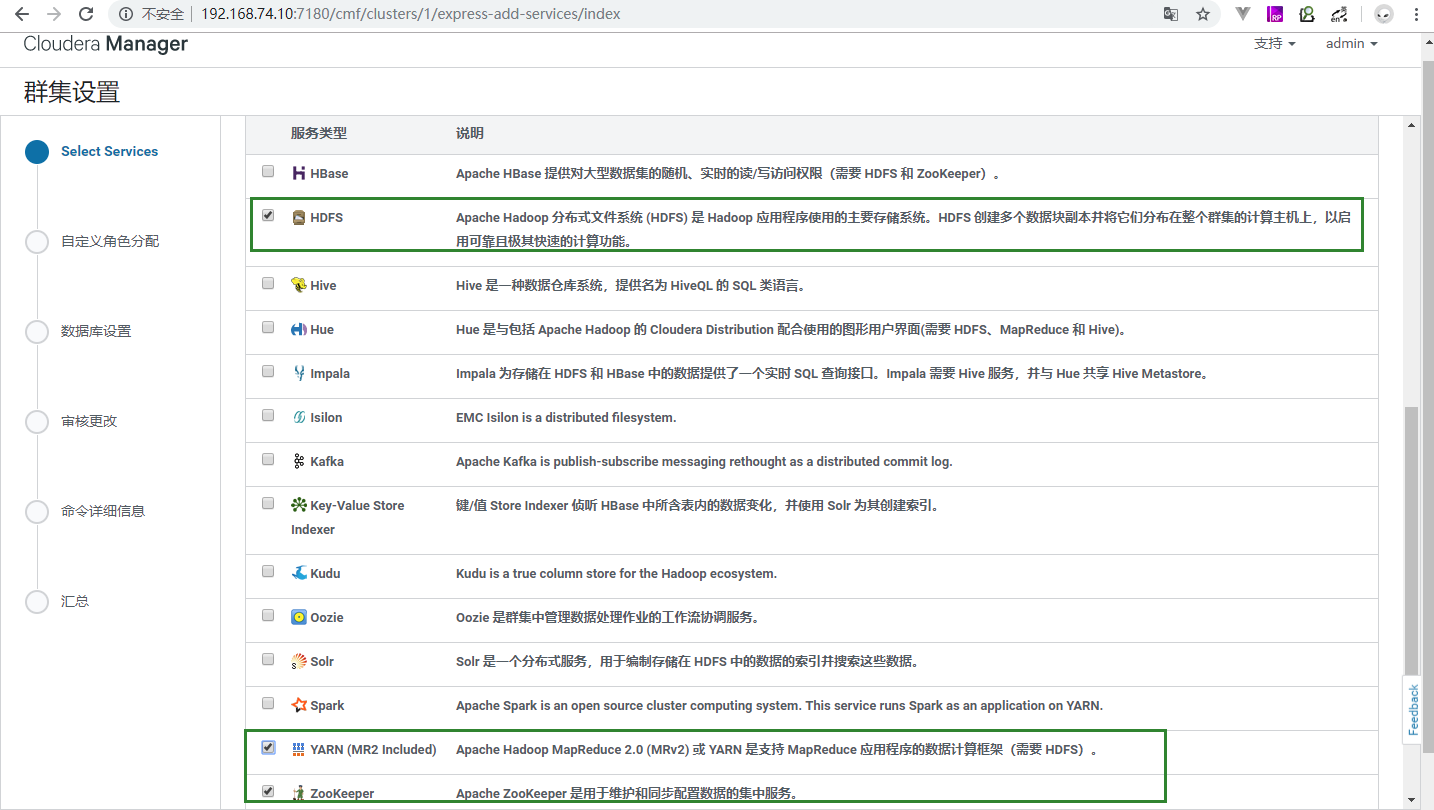

- Select services; for simplicity and reliability, choose HDFS, YARN, and ZooKeeper

- Leave the basic settings at their defaults and click Next

- Installation is complete

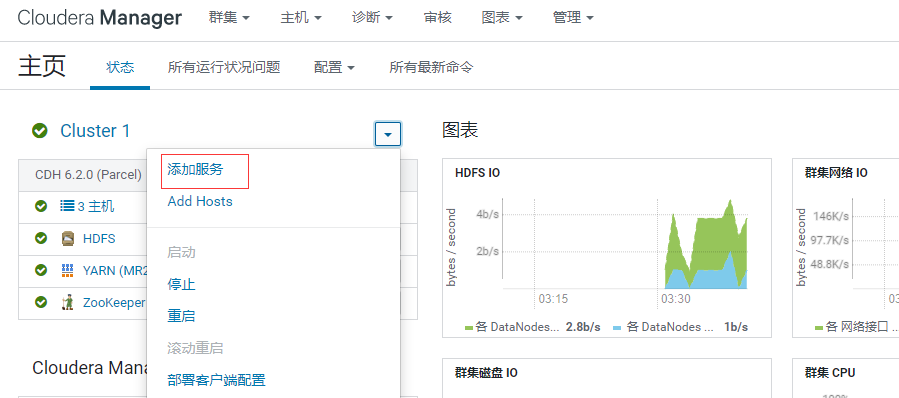

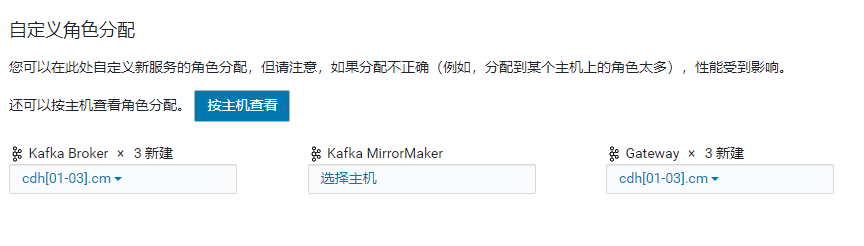

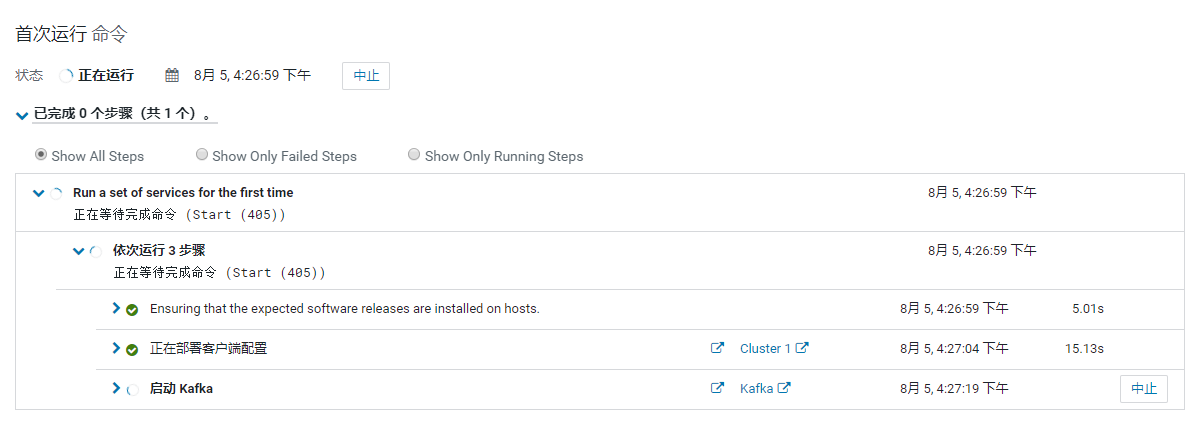

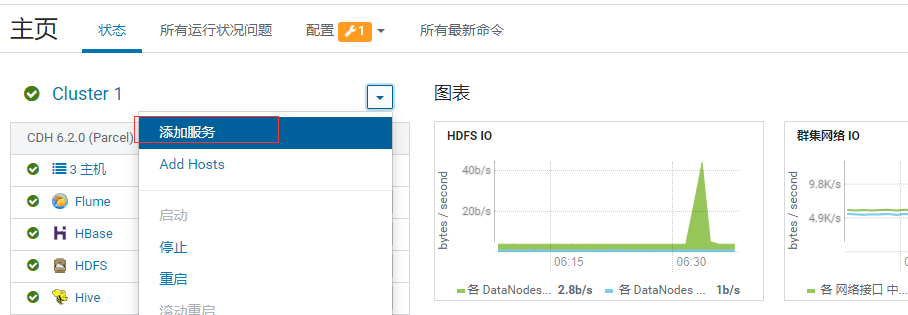

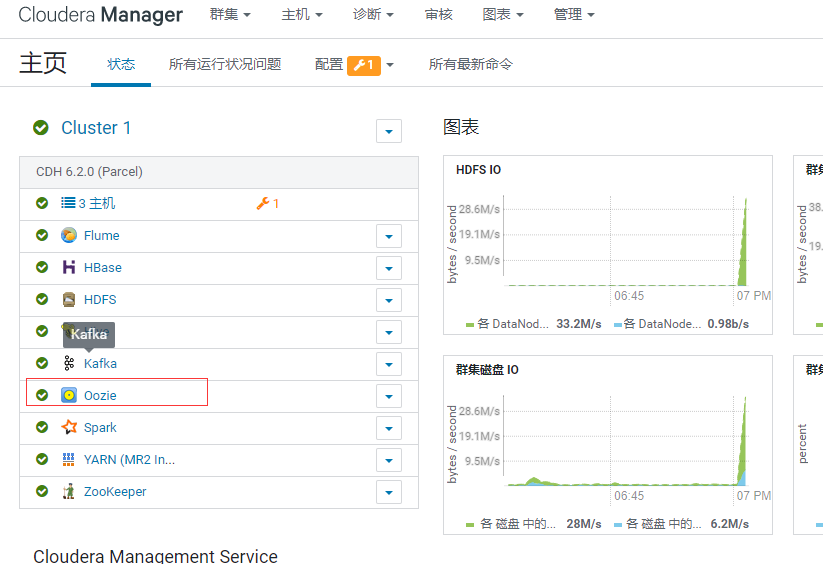

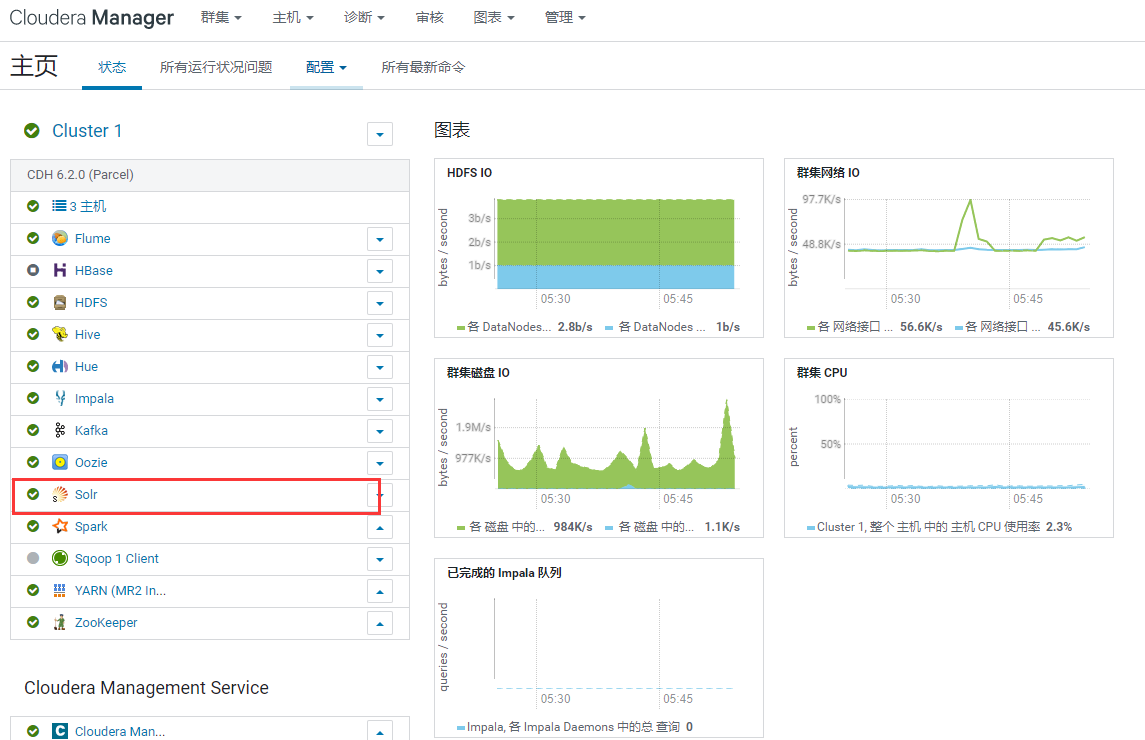

14 Install Kafka

15 Install Flume

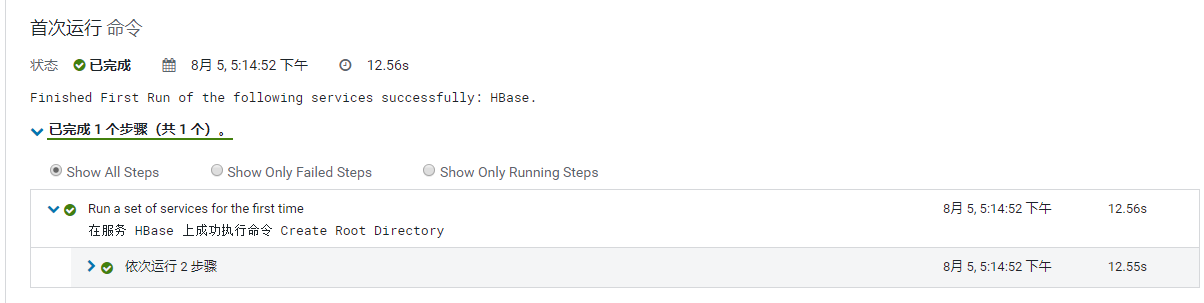

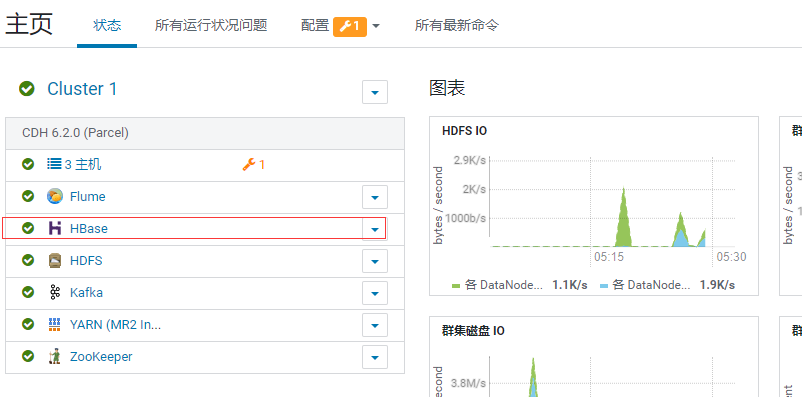

16 Install HBase

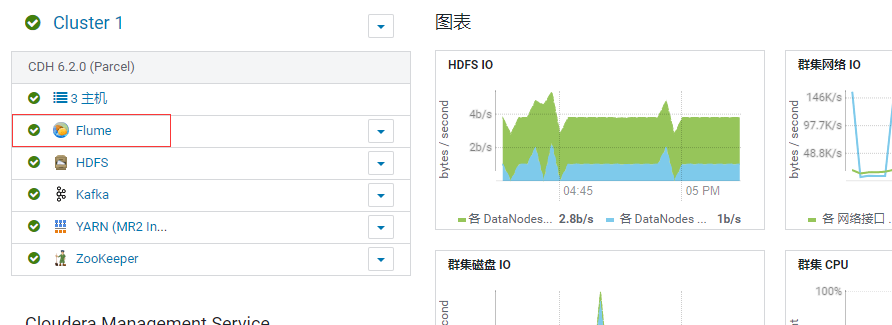

Many services are affected, so restart all services

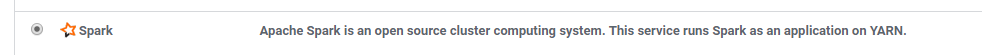

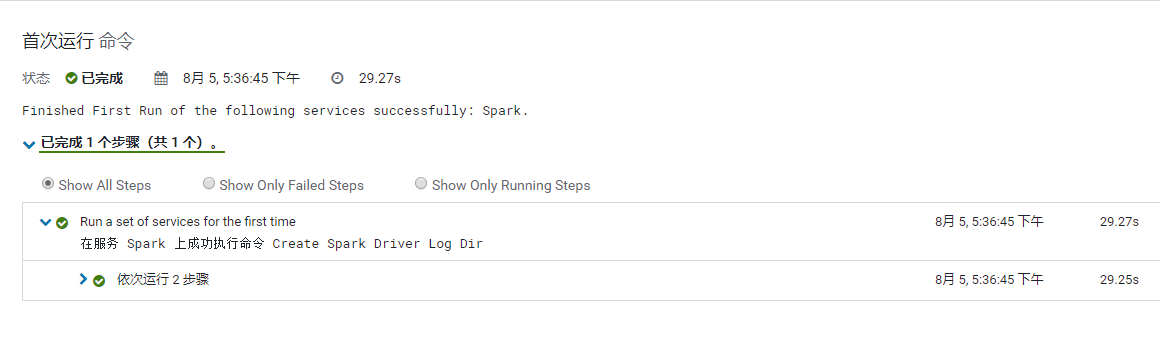

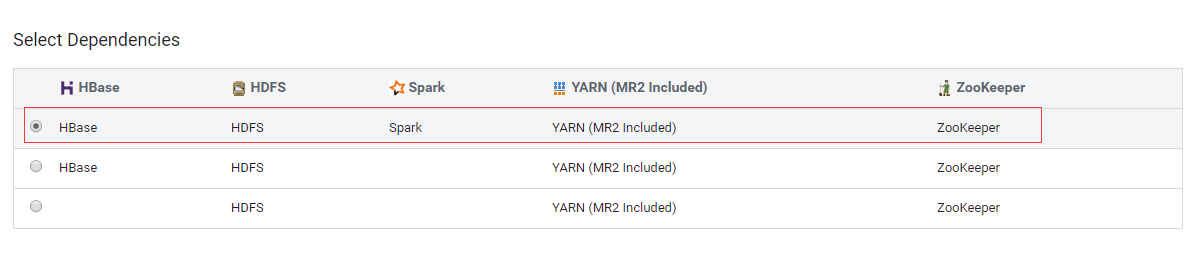

17 Install Spark

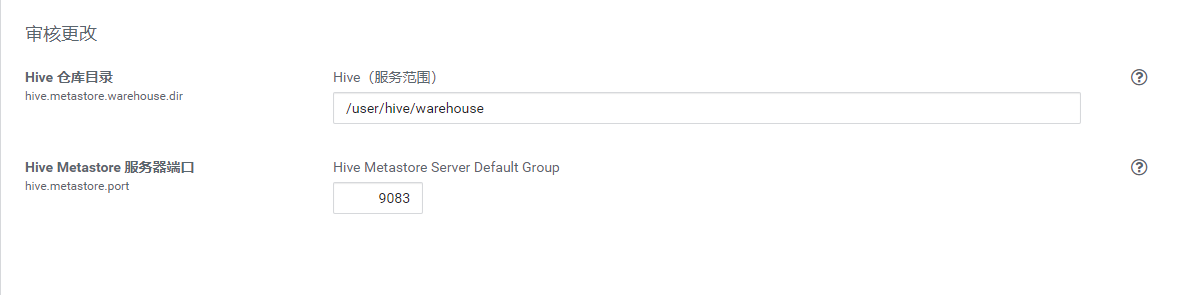

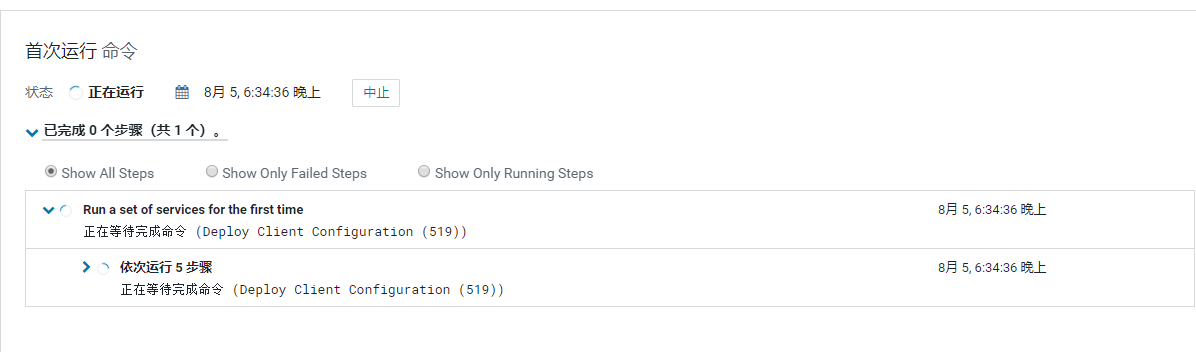

18 Install Hive

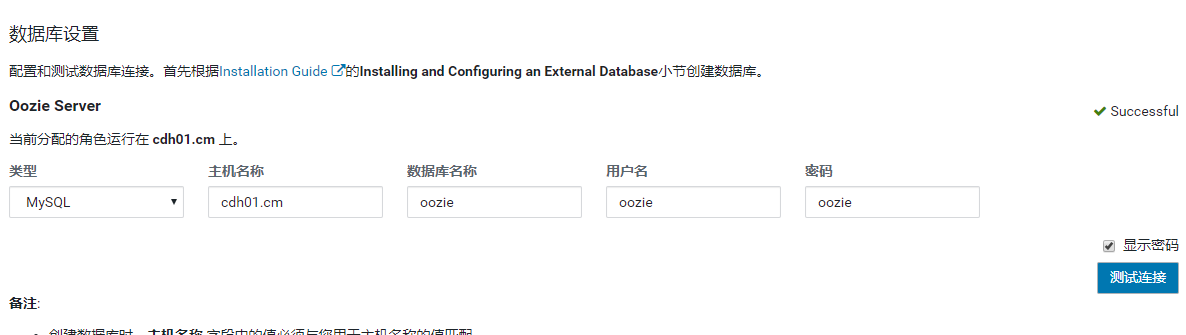

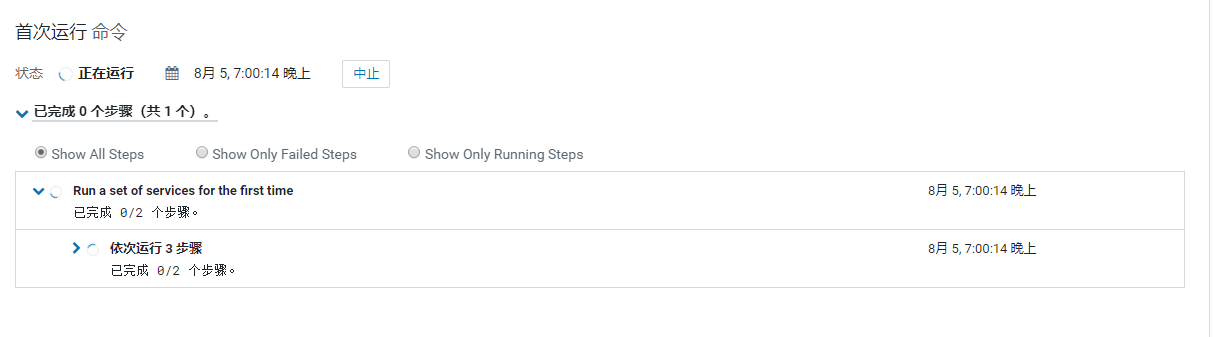

19 Install Oozie

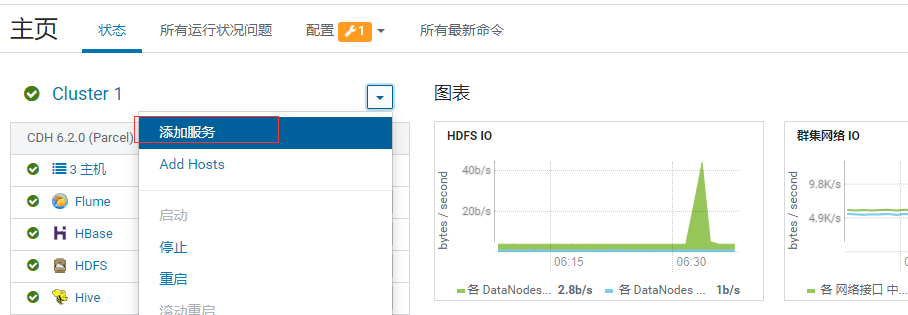

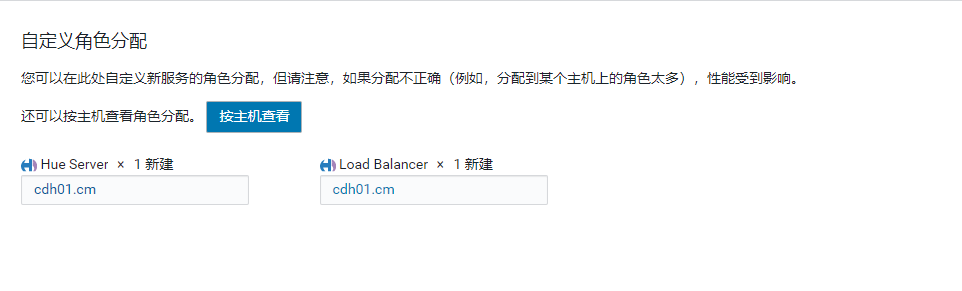

20 Install Hue

The following configuration issue appears:

Thrift Server role must be configured in HBase service to use the Hue HBase Browser application.

Solution

- Add a Thrift Server role to the HBase service; for convenience, place it on the same host as Hue

After HBase is restarted, the following configuration issue is reported:

- In the HBase Thrift Server property, select a server to use the Hue HBase Browser application

21 Install Sqoop

Select all three machines

22 Install Impala

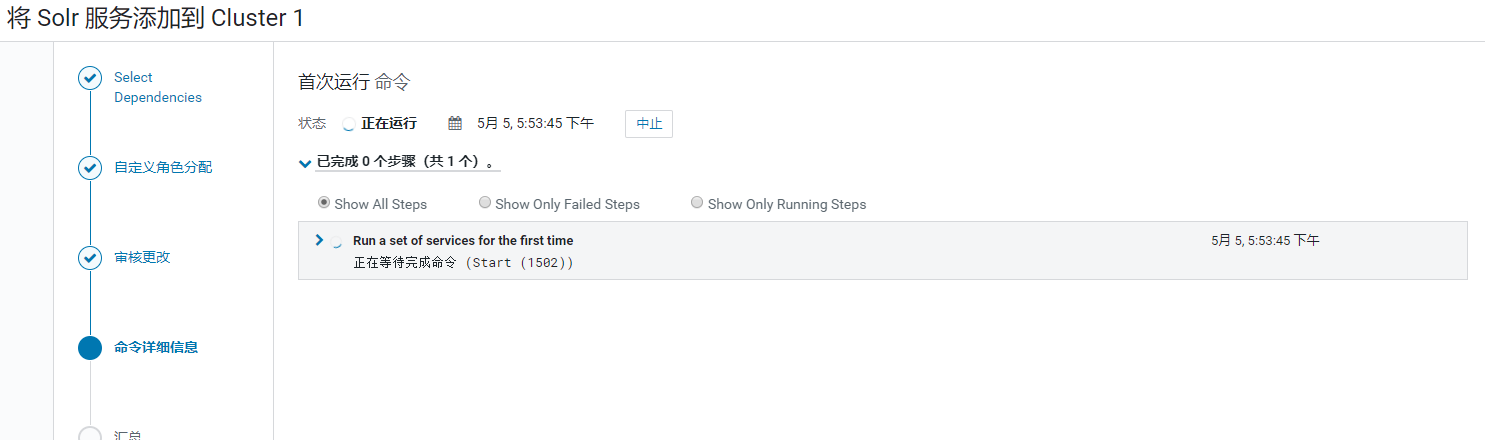

23 Install Solr

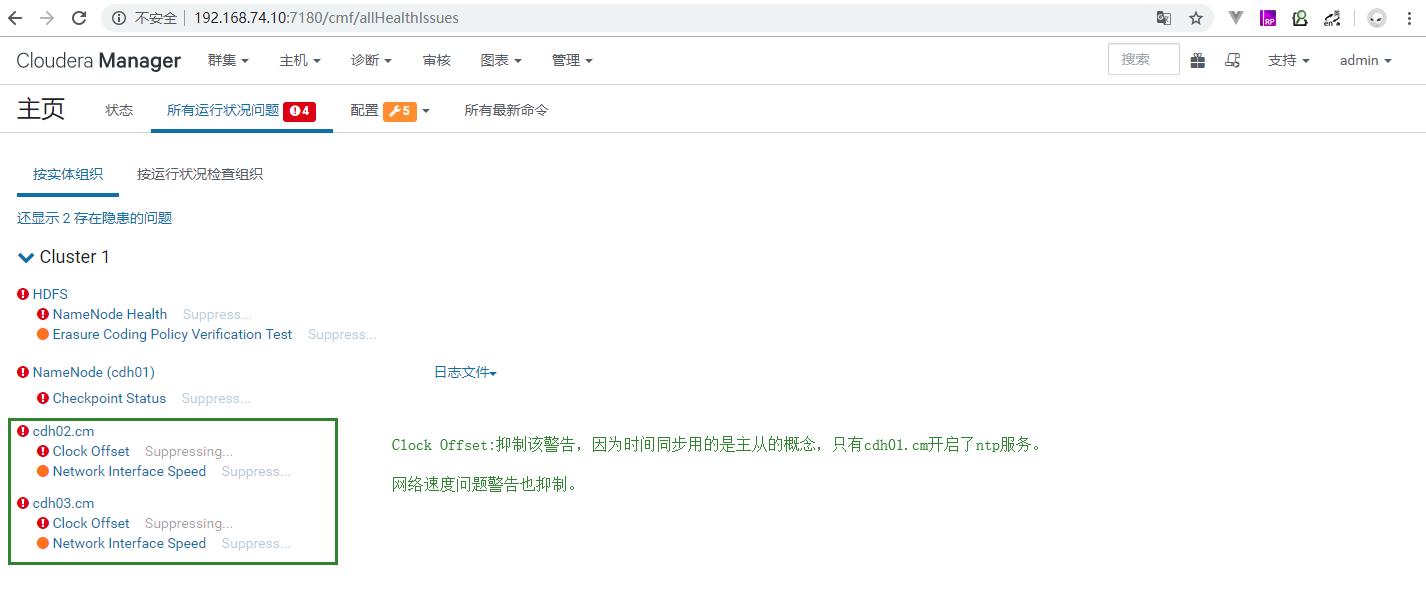

24 Troubleshooting

24.1 Clock Offset

24.2 HDFS Issues

24.2.1 HDFS Canary

Suppress it

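24.2.2 Filesystem Checkpoint

Bad: The filesystem checkpoint is 20 hour(s), 40 minute(s) old. This is 2,068.25% of the configured checkpoint period of 1 hour(s). Critical threshold: 400.00%. 255 transactions have occurred since the last filesystem checkpoint. This is 0.03% of the configured checkpoint transaction target of 1,000,000.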

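Solution:

1. The Cluster ID of the NameNode and the SecondaryNameNode may differ. Compare the clusterID in /dfs/nn/current/VERSION and /dfs/snn/current/VERSION. If they differ, make them identical and restart the roles; that should fix it.

2. If the problem persists after that change, check the SecondaryNameNode log. If it reports

ERROR: Exception in doCheckpoint java.io.IOException: Inconsistent checkpoint field

then delete everything under /dfs/snn/current/ and restart the SecondaryNameNode.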
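Step 1 can be checked with a short sketch. `compare_cluster_ids` is a hypothetical name. It reads the `clusterID=` line from both VERSION files, prints both values, and returns non-zero when they differ. The default paths are this guide's /dfs/nn and /dfs/snn. Adjust them if `dfs.namenode.name.dir` or `dfs.namenode.checkpoint.dir` point elsewhere.

```shell
# Hypothetical helper: compare NameNode and SecondaryNameNode cluster IDs.
# $1: NameNode VERSION file, $2: SecondaryNameNode VERSION file
compare_cluster_ids() {
  local nn="${1:-/dfs/nn/current/VERSION}" snn="${2:-/dfs/snn/current/VERSION}"
  local a b
  a=$(grep '^clusterID=' "$nn" | cut -d= -f2)
  b=$(grep '^clusterID=' "$snn" | cut -d= -f2)
  echo "NameNode:          $a"
  echo "SecondaryNameNode: $b"
  # Success only when both IDs are present and identical.
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Run it on a host that holds both directories. If the two roles run on different hosts, copy one VERSION file over first.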

24.2.3 Erasure Coding Policy Verification Test (suppressed for now)

24.2.4 Heap Size (4 GB was assigned here)

24.2.5 Memory Overcommitted (Memory Overcommit Validation Threshold)

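After all services were installed, 27.9 GB of memory was allocated out of 31.4 GB in total. The overcommit ratio was 27.9/31.4 = 0.89, above the default threshold of 0.8; raising the threshold to 0.9 cleared the warning.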
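The check in 24.2.5 is a single ratio: memory allocated to roles divided by physical memory, compared with the threshold. The sketch below does this with awk, using hypothetical function names. With the figures above it prints 0.89. `over_threshold` succeeds for the default 0.8 but not for 0.9.

```shell
# Hypothetical helper: overcommit ratio = allocated memory / physical memory.
overcommit_ratio() {
  awk -v used="$1" -v total="$2" 'BEGIN { printf "%.2f\n", used / total }'
}

# Hypothetical helper: exit status 0 (true) when used/total exceeds the limit.
over_threshold() {
  awk -v used="$1" -v total="$2" -v limit="$3" 'BEGIN { exit !(used / total > limit) }'
}

overcommit_ratio 27.9 31.4   # 27.9 GB allocated of 31.4 GB; prints 0.89
```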

25 Maven Artifacts for CDH 6.2.0

| Project | groupId | artifactId | version |
|---|---|---|---|
| Apache Avro | org.apache.avro | avro | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-compiler | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-guava-dependencies | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-ipc | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-mapred | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-maven-plugin | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-protobuf | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-service-archetype | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-thrift | 1.8.2-cdh6.2.0 |
| | org.apache.avro | avro-tools | 1.8.2-cdh6.2.0 |
| | org.apache.avro | trevni-avro | 1.8.2-cdh6.2.0 |
| | org.apache.avro | trevni-core | 1.8.2-cdh6.2.0 |
| Apache Crunch | org.apache.crunch | crunch-archetype | 0.11.0-cdh6.2.0 |
| | org.apache.crunch | crunch-contrib | 0.11.0-cdh6.2.0 |
| | org.apache.crunch | crunch-core | 0.11.0-cdh6.2.0 |
| | org.apache.crunch | crunch-examples | 0.11.0-cdh6.2.0 |
| | org.apache.crunch | crunch-hbase | 0.11.0-cdh6.2.0 |
| | org.apache.crunch | crunch-hive | 0.11.0-cdh6.2.0 |
| | org.apache.crunch | crunch-scrunch | 0.11.0-cdh6.2.0 |
| | org.apache.crunch | crunch-spark | 0.11.0-cdh6.2.0 |
| | org.apache.crunch | crunch-test | 0.11.0-cdh6.2.0 |
| Apache Flume 1.x | org.apache.flume | flume-ng-auth | 1.9.0-cdh6.2.0 |
| | org.apache.flume | flume-ng-configuration | 1.9.0-cdh6.2.0 |
| | org.apache.flume | flume-ng-core | 1.9.0-cdh6.2.0 |
| | org.apache.flume | flume-ng-embedded-agent | 1.9.0-cdh6.2.0 |
| | org.apache.flume | flume-ng-node | 1.9.0-cdh6.2.0 |
| | org.apache.flume | flume-ng-sdk | 1.9.0-cdh6.2.0 |
| | org.apache.flume | flume-ng-tests | 1.9.0-cdh6.2.0 |
| | org.apache.flume | flume-tools | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-channels | flume-file-channel | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-channels | flume-jdbc-channel | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-channels | flume-kafka-channel | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-channels | flume-spillable-memory-channel | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-clients | flume-ng-log4jappender | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-configfilters | flume-ng-config-filter-api | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-configfilters | flume-ng-environment-variable-config-filter | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-configfilters | flume-ng-external-process-config-filter | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-configfilters | flume-ng-hadoop-credential-store-config-filter | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-legacy-sources | flume-avro-source | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-legacy-sources | flume-thrift-source | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sinks | flume-dataset-sink | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sinks | flume-hdfs-sink | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sinks | flume-hive-sink | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sinks | flume-http-sink | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sinks | flume-irc-sink | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sinks | flume-ng-hbase2-sink | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sinks | flume-ng-kafka-sink | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sinks | flume-ng-morphline-solr-sink | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sources | flume-jms-source | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sources | flume-kafka-source | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sources | flume-scribe-source | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sources | flume-taildir-source | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-ng-sources | flume-twitter-source | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-shared | flume-shared-kafka | 1.9.0-cdh6.2.0 |
| | org.apache.flume.flume-shared | flume-shared-kafka-test | 1.9.0-cdh6.2.0 |
| GCS Connector | com.google.cloud.bigdataoss | gcs-connector | hadoop3-1.9.10-cdh6.2.0 |
| | com.google.cloud.bigdataoss | gcsio | 1.9.10-cdh6.2.0 |
| | com.google.cloud.bigdataoss | util | 1.9.10-cdh6.2.0 |
| | com.google.cloud.bigdataoss | util-hadoop | hadoop3-1.9.10-cdh6.2.0 |
| Apache Hadoop | org.apache.hadoop | hadoop-aliyun | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-annotations | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-archive-logs | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-archives | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-assemblies | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-auth | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-aws | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-azure | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-azure-datalake | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-build-tools | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-client | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-client-api | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-client-integration-tests | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-client-minicluster | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-client-runtime | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-cloud-storage | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-common | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-datajoin | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-distcp | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-extras | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-gridmix | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-hdfs | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-hdfs-client | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-hdfs-httpfs | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-hdfs-native-client | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-hdfs-nfs | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-kafka | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-kms | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-app | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-common | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-core | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-hs | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-hs-plugins | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-jobclient | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-nativetask | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-shuffle | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-client-uploader | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-mapreduce-examples | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-maven-plugins | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-minicluster | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-minikdc | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-nfs | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-openstack | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-resourceestimator | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-rumen | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-sls | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-streaming | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-api | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-applications-distributedshell | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-applications-unmanaged-am-launcher | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-client | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-common | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-registry | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-applicationhistoryservice | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-common | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-nodemanager | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-resourcemanager | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-router | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-sharedcachemanager | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-tests | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-timeline-pluginstorage | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-timelineservice | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-timelineservice-hbase | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-timelineservice-hbase-tests | 3.0.0-cdh6.2.0 |
| | org.apache.hadoop | hadoop-yarn-server-web-proxy | 3.0.0-cdh6.2.0 |
| Apache HBase | org.apache.hbase | hbase-annotations | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-checkstyle | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-client | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-client-project | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-common | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-endpoint | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-error-prone | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-examples | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-external-blockcache | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-hadoop-compat | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-hadoop2-compat | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-http | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-it | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-mapreduce | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-metrics | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-metrics-api | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-procedure | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-protocol | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-protocol-shaded | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-replication | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-resource-bundle | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-rest | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-rsgroup | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-server | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-shaded-client | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-shaded-client-byo-hadoop | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-shaded-client-project | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-shaded-mapreduce | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-shell | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-spark | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-spark-it | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-testing-util | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-thrift | 2.1.0-cdh6.2.0 |
| | org.apache.hbase | hbase-zookeeper | 2.1.0-cdh6.2.0 |
| HBase Indexer | com.ngdata | hbase-indexer-all | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-cli | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-common | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-demo | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-dist | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-engine | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-model | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-morphlines | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-mr | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-indexer-server | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-sep-api | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-sep-demo | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-sep-impl | 1.5-cdh6.2.0 |
| | com.ngdata | hbase-sep-tools | 1.5-cdh6.2.0 |
| Apache Hive | org.apache.hive | hive-accumulo-handler | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-ant | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-beeline | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-classification | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-cli | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-common | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-contrib | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-exec | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-hbase-handler | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-hplsql | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-jdbc | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-kryo-registrator | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-llap-client | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-llap-common | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-llap-ext-client | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-llap-server | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-llap-tez | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-metastore | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-orc | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-serde | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-service | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-service-rpc | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-shims | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-spark-client | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-storage-api | 2.1.1-cdh6.2.0 |
| | org.apache.hive | hive-testutils | 2.1.1-cdh6.2.0 |
| | org.apache.hive.hcatalog | hive-hcatalog-core | 2.1.1-cdh6.2.0 |
| | org.apache.hive.hcatalog | hive-hcatalog-pig-adapter | 2.1.1-cdh6.2.0 |
| | org.apache.hive.hcatalog | hive-hcatalog-server-extensions | 2.1.1-cdh6.2.0 |
| | org.apache.hive.hcatalog | hive-hcatalog-streaming | 2.1.1-cdh6.2.0 |
| | org.apache.hive.hcatalog | hive-webhcat | 2.1.1-cdh6.2.0 |
| | org.apache.hive.hcatalog | hive-webhcat-java-client | 2.1.1-cdh6.2.0 |
| | org.apache.hive.shims | hive-shims-0.23 | 2.1.1-cdh6.2.0 |
| | org.apache.hive.shims | hive-shims-common | 2.1.1-cdh6.2.0 |
| | org.apache.hive.shims | hive-shims-scheduler | 2.1.1-cdh6.2.0 |
| Apache Kafka | org.apache.kafka | connect-api | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | connect-basic-auth-extension | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | connect-file | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | connect-json | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | connect-runtime | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | connect-transforms | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-clients | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-examples | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-log4j-appender | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-streams | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-streams-examples | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-streams-scala_2.11 | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-streams-scala_2.12 | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-streams-test-utils | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka-tools | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka_2.11 | 2.1.0-cdh6.2.0 |
| | org.apache.kafka | kafka_2.12 | 2.1.0-cdh6.2.0 |
| Kite SDK | org.kitesdk | kite-data-core | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-data-crunch | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-data-hbase | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-data-hive | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-data-mapreduce | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-data-oozie | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-data-s3 | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-data-spark | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-hadoop-compatibility | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-maven-plugin | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-minicluster | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-avro | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-core | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-hadoop-core | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-hadoop-parquet-avro | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-hadoop-rcfile | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-hadoop-sequencefile | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-json | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-maxmind | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-metrics-scalable | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-metrics-servlets | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-protobuf | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-saxon | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-solr-cell | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-solr-core | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-tika-core | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-tika-decompress | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-twitter | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-morphlines-useragent | 1.0.0-cdh6.2.0 |
| | org.kitesdk | kite-tools | 1.0.0-cdh6.2.0 |
| Apache Kudu | org.apache.kudu | kudu-client | 1.9.0-cdh6.2.0 |
| | org.apache.kudu | kudu-client-tools | 1.9.0-cdh6.2.0 |
| | org.apache.kudu | kudu-flume-sink | 1.9.0-cdh6.2.0 |
| | org.apache.kudu | kudu-mapreduce | 1.9.0-cdh6.2.0 |
| | org.apache.kudu | kudu-spark2-tools_2.11 | 1.9.0-cdh6.2.0 |
| | org.apache.kudu | kudu-spark2_2.11 | 1.9.0-cdh6.2.0 |
| | org.apache.kudu | kudu-test-utils | 1.9.0-cdh6.2.0 |
| Apache Oozie | org.apache.oozie | oozie-client | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-core | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-examples | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-fluent-job-api | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-fluent-job-client | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-server | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-sharelib-distcp | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-sharelib-git | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-sharelib-hcatalog | 5.1.0-cdh6.2.0 |
| | org.apache.oozie | oozie-sharelib-hive | 5.1.0-cdh6.2.0 |

| org.apache.oozie | oozie-sharelib-hive2 | 5.1.0-cdh6.2.0 | |

| org.apache.oozie | oozie-sharelib-oozie | 5.1.0-cdh6.2.0 | |

| org.apache.oozie | oozie-sharelib-pig | 5.1.0-cdh6.2.0 | |

| org.apache.oozie | oozie-sharelib-spark | 5.1.0-cdh6.2.0 | |

| org.apache.oozie | oozie-sharelib-sqoop | 5.1.0-cdh6.2.0 | |

| org.apache.oozie | oozie-sharelib-streaming | 5.1.0-cdh6.2.0 | |

| org.apache.oozie | oozie-tools | 5.1.0-cdh6.2.0 | |

| org.apache.oozie.test | oozie-mini | 5.1.0-cdh6.2.0 | |

| Apache Pig | org.apache.pig | pig | 0.17.0-cdh6.2.0 |

| org.apache.pig | piggybank | 0.17.0-cdh6.2.0 | |

| org.apache.pig | pigsmoke | 0.17.0-cdh6.2.0 | |

| org.apache.pig | pigunit | 0.17.0-cdh6.2.0 | |

| Cloudera Search | com.cloudera.search | search-crunch | 1.0.0-cdh6.2.0 |

| com.cloudera.search | search-mr | 1.0.0-cdh6.2.0 | |

| Apache Sentry | com.cloudera.cdh | solr-upgrade | 1.0.0-cdh6.2.0 |

| org.apache.sentry | sentry-binding-hbase-indexer | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-binding-hive | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-binding-hive-common | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-binding-hive-conf | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-binding-hive-follower | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-binding-kafka | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-binding-solr | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-core-common | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-core-model-db | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-core-model-indexer | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-core-model-kafka | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-core-model-solr | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-dist | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-hdfs-common | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-hdfs-dist | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-hdfs-namenode-plugin | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-hdfs-service | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-policy-common | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-policy-engine | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-provider-cache | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-provider-common | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-provider-db | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-provider-file | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-service-api | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-service-client | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-service-providers | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-service-server | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-service-web | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-shaded | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-shaded-miscellaneous | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-spi | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-tests-hive | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-tests-kafka | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-tests-solr | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | sentry-tools | 2.1.0-cdh6.2.0 | |

| org.apache.sentry | solr-sentry-handlers | 2.1.0-cdh6.2.0 | |

| Apache Solr | org.apache.lucene | lucene-analyzers-common | 7.4.0-cdh6.2.0 |

| org.apache.lucene | lucene-analyzers-icu | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-analyzers-kuromoji | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-analyzers-morfologik | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-analyzers-nori | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-analyzers-opennlp | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-analyzers-phonetic | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-analyzers-smartcn | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-analyzers-stempel | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-analyzers-uima | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-backward-codecs | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-benchmark | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-classification | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-codecs | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-core | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-demo | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-expressions | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-facet | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-grouping | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-highlighter | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-join | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-memory | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-misc | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-queries | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-queryparser | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-replicator | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-sandbox | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-spatial | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-spatial-extras | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-spatial3d | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-suggest | 7.4.0-cdh6.2.0 | |

| org.apache.lucene | lucene-test-framework | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-analysis-extras | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-analytics | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-cell | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-clustering | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-core | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-dataimporthandler | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-dataimporthandler-extras | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-jetty-customizations | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-langid | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-ltr | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-prometheus-exporter | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-security-util | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-sentry-audit-logging | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-solrj | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-test-framework | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-uima | 7.4.0-cdh6.2.0 | |

| org.apache.solr | solr-velocity | 7.4.0-cdh6.2.0 | |

| Apache Spark | org.apache.spark | spark-avro_2.11 | 2.4.0-cdh6.2.0 |

| org.apache.spark | spark-catalyst_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-core_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-graphx_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-hadoop-cloud_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-hive_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-kubernetes_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-kvstore_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-launcher_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-mllib-local_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-mllib_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-network-common_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-network-shuffle_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-network-yarn_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-repl_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-sketch_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-sql-kafka-0-10_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-sql_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-streaming-flume-assembly_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-streaming-flume-sink_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-streaming-flume_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-streaming-kafka-0-10-assembly_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-streaming-kafka-0-10_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-streaming_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-tags_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-unsafe_2.11 | 2.4.0-cdh6.2.0 | |

| org.apache.spark | spark-yarn_2.11 | 2.4.0-cdh6.2.0 | |

| Apache Sqoop | org.apache.sqoop | sqoop | 1.4.7-cdh6.2.0 |

| Apache ZooKeeper | org.apache.zookeeper | zookeeper | 3.4.5-cdh6.2.0 |

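Each row of the artifact table above identifies a Maven artifact by groupId, artifactId and version. The first column, when it is filled in, only names the project. The following is a minimal Bash sketch, not part of the original guide, that turns a row copied from the table into the `groupId:artifactId:version` form Maven expects. The function name `row_to_coordinate` is hypothetical. The sketch assumes that the last three non-empty cells of a row are always groupId, artifactId and version, which holds for every row listed here.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper, not from the original guide):
# convert one row of the CDH 6.2.0 artifact table into a Maven coordinate.
# Rows with or without the project name in the first cell both work, e.g.
#   "| Apache Kafka | org.apache.kafka | kafka-clients | 2.1.0-cdh6.2.0 |"
#   "| | org.apache.kafka | kafka-clients | 2.1.0-cdh6.2.0 |"
set -euo pipefail

row_to_coordinate() {
  local row="$1"
  printf '%s\n' "$row" |
    tr '|' '\n' |                                     # one cell per line
    sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' |  # trim spaces around each cell
    grep -v '^$' |                                    # drop empty cells (outer pipes, empty project cell)
    tail -n 3 |                                       # keep groupId, artifactId, version
    paste -sd ':' -                                   # join them as groupId:artifactId:version
}

row_to_coordinate "| | org.apache.spark | spark-core_2.11 | 2.4.0-cdh6.2.0 |"
# prints org.apache.spark:spark-core_2.11:2.4.0-cdh6.2.0
```

The printed coordinate can then be passed to Maven, for example `mvn dependency:get -Dartifact=org.apache.spark:spark-core_2.11:2.4.0-cdh6.2.0 -DremoteRepositories=https://repository.cloudera.com/artifactory/cloudera-repos/`. This assumes Maven is installed and that Cloudera's Maven repository is still reachable from your network. The `-cdh6.2.0` versions are generally not published to Maven Central.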