Getting Started with Keras (1): Fully Connected Neural Network (FCN)

Installing Keras with Anaconda:

conda install keras

Installation complete:

In Jupyter Notebook, create a new notebook and run the following code:

import keras
from keras.datasets import mnist      # import the MNIST dataset from Keras
from keras.models import Sequential   # import the Sequential model
from keras.layers import Dense        # import the fully connected (Dense) layer
from keras.optimizers import SGD      # import the SGD optimizer
(x_train, y_train), (x_test, y_test) = mnist.load_data()  # load the MNIST dataset

For well-known reasons, downloading files hosted outside the firewall times out with an error. Follow https://www.cnblogs.com/shinny/p/9283372.html to apply the workaround:

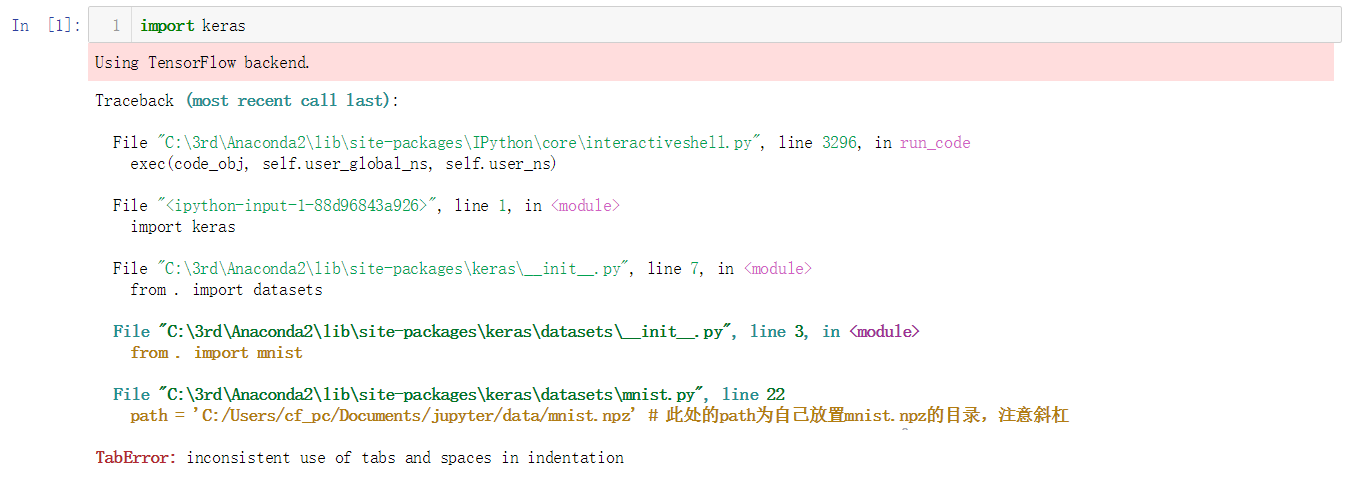

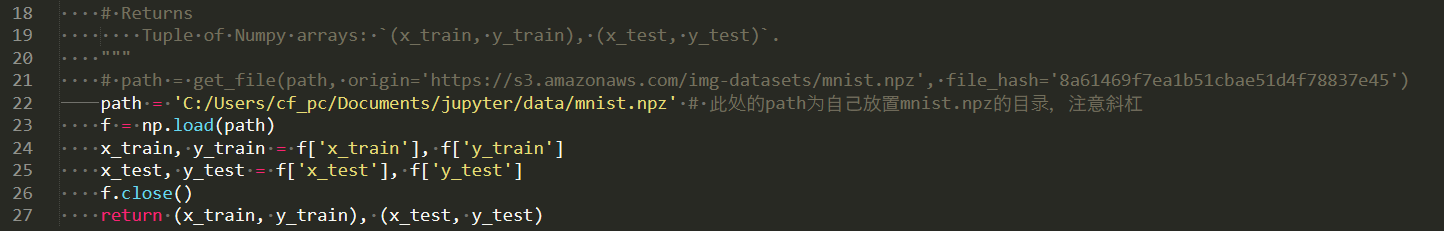

Running the cell again fails with the error: "TabError: inconsistent use of tabs and spaces in indentation"

Fix it by following https://blog.csdn.net/qq_41096996/article/details/85947560:

Now it runs successfully!
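If the automatic download keeps timing out, one alternative is to obtain mnist.npz by some other route and read it directly with NumPy. The sketch below assumes the archive stores its arrays under the keys x_train, y_train, x_test and y_test (the layout of the file that Keras caches). The function name load_mnist_from_npz and the path in the usage comment are illustrative and not part of Keras.

```python
import numpy as np


def load_mnist_from_npz(path):
    """Load MNIST arrays from a local .npz archive instead of downloading."""
    # Assumption: the archive contains the keys x_train, y_train,
    # x_test and y_test, as in the mnist.npz file cached by Keras.
    with np.load(path) as data:
        x_train, y_train = data["x_train"], data["y_train"]
        x_test, y_test = data["x_test"], data["y_test"]
    return (x_train, y_train), (x_test, y_test)


# Hypothetical usage; "mnist.npz" stands for wherever the file was saved:
# (x_train, y_train), (x_test, y_test) = load_mnist_from_npz("mnist.npz")
```

The return value has the same nested-tuple shape as mnist.load_data(), so the rest of the notebook can stay unchanged.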

Continue with the following code:

print(x_train.shape, y_train.shape)  # (60000, 28, 28) (60000,)
print(x_test.shape, y_test.shape)    # (10000, 28, 28) (10000,)

Next, run:

import matplotlib.pyplot as plt  # import the plotting library
im = plt.imshow(x_train[0], cmap='gray')

Next, run:

plt.show()
y_train[0]

Next, run:

x_train = x_train.reshape(60000, 784)  # flatten each image into a vector
x_test = x_test.reshape(10000, 784)    # apply the same processing to the test set
print(x_train.shape)  # (60000, 784)
print(x_test.shape)   # (10000, 784)
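The flattening step can be tried on a small synthetic array to see where each pixel ends up. This is only a sketch: the array below is made-up data rather than MNIST, and passing -1 lets NumPy infer the 784 instead of hard-coding it.

```python
import numpy as np

# Synthetic stand-in for three 28x28 grayscale images (not real MNIST data).
images = np.zeros((3, 28, 28), dtype=np.uint8)
images[1, 2, 5] = 255  # mark one pixel: image 1, row 2, column 5

# Keep the sample axis and collapse the two spatial axes into one.
flat = images.reshape(len(images), -1)

print(flat.shape)           # (3, 784)
print(flat[1, 2 * 28 + 5])  # 255
```

Because NumPy arrays are row-major by default, the pixel at (row, column) lands at index row * 28 + column in the flattened vector.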

Next, run:

x_train[0]

#array([ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 3, 18, 18, 18,

126, 136, 175, 26, 166, 255, 247, 127, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 30, 36, 94, 154, 170, 253,

253, 253, 253, 253, 225, 172, 253, 242, 195, 64, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 49, 238, 253, 253, 253,

253, 253, 253, 253, 253, 251, 93, 82, 82, 56, 39, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 18, 219, 253,

253, 253, 253, 253, 198, 182, 247, 241, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

80, 156, 107, 253, 253, 205, 11, 0, 43, 154, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 14, 1, 154, 253, 90, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 139, 253, 190, 2, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 11, 190, 253, 70,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 35,

241, 225, 160, 108, 1, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 81, 240, 253, 253, 119, 25, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 45, 186, 253, 253, 150, 27, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 16, 93, 252, 253, 187,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 249,

253, 249, 64, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 46, 130,

183, 253, 253, 207, 2, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 39, 148,

229, 253, 253, 253, 250, 182, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 24, 114,

221, 253, 253, 253, 253, 201, 78, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 23, 66,

213, 253, 253, 253, 253, 198, 81, 2, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 18, 171,

219, 253, 253, 253, 253, 195, 80, 9, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 55, 172,

226, 253, 253, 253, 253, 244, 133, 11, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

136, 253, 253, 253, 212, 135, 132, 16, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0], dtype=uint8)

Next, run (scale the pixel values from 0-255 into the range 0-1):

x_train = x_train / 255

x_test = x_test / 255

x_train[0]

#array([0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.01176471, 0.07058824, 0.07058824,

0.07058824, 0.49411765, 0.53333333, 0.68627451, 0.10196078,

0.65098039, 1. , 0.96862745, 0.49803922, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.11764706, 0.14117647, 0.36862745, 0.60392157,

0.66666667, 0.99215686, 0.99215686, 0.99215686, 0.99215686,

0.99215686, 0.88235294, 0.6745098 , 0.99215686, 0.94901961,

0.76470588, 0.25098039, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.19215686, 0.93333333,

0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.99215686,

0.99215686, 0.99215686, 0.99215686, 0.98431373, 0.36470588,

0.32156863, 0.32156863, 0.21960784, 0.15294118, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.07058824, 0.85882353, 0.99215686, 0.99215686,

0.99215686, 0.99215686, 0.99215686, 0.77647059, 0.71372549,

0.96862745, 0.94509804, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.31372549, 0.61176471, 0.41960784, 0.99215686, 0.99215686,

0.80392157, 0.04313725, 0. , 0.16862745, 0.60392157,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.05490196,

0.00392157, 0.60392157, 0.99215686, 0.35294118, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.54509804,

0.99215686, 0.74509804, 0.00784314, 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.04313725, 0.74509804, 0.99215686,

0.2745098 , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.1372549 , 0.94509804, 0.88235294, 0.62745098,

0.42352941, 0.00392157, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.31764706, 0.94117647, 0.99215686, 0.99215686, 0.46666667,

0.09803922, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.17647059,

0.72941176, 0.99215686, 0.99215686, 0.58823529, 0.10588235,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.0627451 , 0.36470588,

0.98823529, 0.99215686, 0.73333333, 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.97647059, 0.99215686,

0.97647059, 0.25098039, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.18039216, 0.50980392,

0.71764706, 0.99215686, 0.99215686, 0.81176471, 0.00784314,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0.15294118,

0.58039216, 0.89803922, 0.99215686, 0.99215686, 0.99215686,

0.98039216, 0.71372549, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0.09411765, 0.44705882, 0.86666667, 0.99215686, 0.99215686,

0.99215686, 0.99215686, 0.78823529, 0.30588235, 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.09019608, 0.25882353, 0.83529412, 0.99215686,

0.99215686, 0.99215686, 0.99215686, 0.77647059, 0.31764706,

0.00784314, 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0.07058824, 0.67058824, 0.85882353,

0.99215686, 0.99215686, 0.99215686, 0.99215686, 0.76470588,

0.31372549, 0.03529412, 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0.21568627, 0.6745098 ,

0.88627451, 0.99215686, 0.99215686, 0.99215686, 0.99215686,

0.95686275, 0.52156863, 0.04313725, 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0.53333333, 0.99215686, 0.99215686, 0.99215686,

0.83137255, 0.52941176, 0.51764706, 0.0627451 , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. , 0. ,

0. , 0. , 0. , 0. ])
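The scaling step can be checked on a handful of the pixel values that appear in the output above. The sketch below also shows a side effect worth knowing about: dividing a uint8 array by 255 produces float64, so casting to float32 first is an optional variation (not something the original code does) that halves the memory used.

```python
import numpy as np

# A few raw pixel values taken from the printed x_train[0] above.
pixels = np.array([0, 3, 18, 126, 255], dtype=np.uint8)

scaled = pixels / 255                        # true division promotes to float64
scaled32 = pixels.astype("float32") / 255    # optional: stay in float32

print(scaled.dtype, scaled32.dtype)
print(scaled)
```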

Next, run:

y_train = keras.utils.to_categorical(y_train, 10)  # one-hot encode the labels into 10 classes
y_test = keras.utils.to_categorical(y_test, 10)
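To make the effect of the one-hot encoding concrete, here is a minimal NumPy sketch of the same idea. The helper one_hot is a hypothetical stand-in written for illustration, not the Keras implementation, and the labels are example values.

```python
import numpy as np


def one_hot(labels, num_classes):
    """Turn integer class labels into one-hot rows (illustrative helper)."""
    labels = np.asarray(labels)
    encoded = np.zeros((labels.size, num_classes), dtype="float32")
    # For each row i, set the column given by labels[i] to 1.
    encoded[np.arange(labels.size), labels] = 1.0
    return encoded


labels = np.array([5, 0, 4])  # example digit labels
encoded = one_hot(labels, 10)
print(encoded.shape)  # (3, 10)
print(encoded[0])
```

Each row contains a single 1 at the index of its class, which is the target format that the categorical_crossentropy loss expects.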

Next, run:

model = Sequential()  # build an empty Sequential model
# add the neural network layers
model.add(Dense(512, activation='relu', input_shape=(784,)))
model.add(Dense(256, activation='relu'))
model.add(Dense(10, activation='softmax'))
model.summary()

Output:

WARNING:tensorflow:From C:\3rd\Anaconda2\lib\site-packages\tensorflow\python\framework\op_def_library.py:263: colocate_with (from tensorflow.python.framework.ops) is deprecated and will be removed in a future version.
Instructions for updating:
Colocations handled automatically by placer.
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
dense_1 (Dense)              (None, 512)               401920
_________________________________________________________________
dense_2 (Dense)              (None, 256)               131328
_________________________________________________________________
dense_3 (Dense)              (None, 10)                2570
=================================================================
Total params: 535,818
Trainable params: 535,818
Non-trainable params: 0
_________________________________________________________________
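The parameter counts in the summary can be reproduced by hand: a Dense layer with n inputs and m units has n * m weights plus m biases. The helper name dense_params below is illustrative.

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units


# Input size followed by the width of each Dense layer in the model.
layer_sizes = [784, 512, 256, 10]
counts = [dense_params(a, b) for a, b in zip(layer_sizes, layer_sizes[1:])]

print(counts)       # [401920, 131328, 2570]
print(sum(counts))  # 535818
```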

Next, run:

model.compile(optimizer=SGD(), loss='categorical_crossentropy', metrics=['accuracy'])
model.fit(x_train, y_train, batch_size=64, epochs=5, validation_data=(x_test, y_test))  # here the test set is used directly as the validation set

Output while training is in progress:

Output after training finishes:

WARNING:tensorflow:From C:\3rd\Anaconda2\lib\site-packages\tensorflow\python\ops\math_ops.py:3066: to_int32 (from tensorflow.python.ops.math_ops) is deprecated and will be removed in a future version.
Instructions for updating:
Use tf.cast instead.
Train on 60000 samples, validate on 10000 samples
Epoch 1/5
60000/60000 [==============================] - 7s 123us/step - loss: 0.7558 - acc: 0.8162 - val_loss: 0.3672 - val_acc: 0.8991
Epoch 2/5
60000/60000 [==============================] - 7s 112us/step - loss: 0.3356 - acc: 0.9068 - val_loss: 0.2871 - val_acc: 0.9204
Epoch 3/5
60000/60000 [==============================] - 7s 112us/step - loss: 0.2798 - acc: 0.9211 - val_loss: 0.2537 - val_acc: 0.9296
Epoch 4/5
60000/60000 [==============================] - 7s 117us/step - loss: 0.2468 - acc: 0.9302 - val_loss: 0.2313 - val_acc: 0.9332
Epoch 5/5
60000/60000 [==============================] - 7s 122us/step - loss: 0.2228 - acc: 0.9378 - val_loss: 0.2084 - val_acc: 0.9404
<keras.callbacks.History at 0x1dcaea054a8>
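The loss values in this log are categorical cross-entropy computed on softmax outputs. As a rough NumPy sketch of that calculation (the helper names are illustrative and this is not the Keras implementation), a model that spreads its probability evenly over 10 classes scores ln(10), about 2.30, which gives a useful baseline for reading the numbers above.

```python
import numpy as np


def softmax(logits):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def categorical_crossentropy(y_true, y_prob, eps=1e-7):
    """Mean over samples of -sum(y_true * log(y_prob))."""
    y_prob = np.clip(y_prob, eps, 1.0)  # avoid log(0)
    return float(-(y_true * np.log(y_prob)).sum(axis=1).mean())


# Four samples with all-zero logits, i.e. a uniform guess over 10 classes.
logits = np.zeros((4, 10))
y_true = np.eye(10)[[5, 0, 4, 1]]  # example one-hot targets
loss = categorical_crossentropy(y_true, softmax(logits))
print(round(loss, 4))  # 2.3026
```

Seen against that baseline, the first-epoch training loss of 0.7558 already reflects a model that has learned a good deal within one pass over the data.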

Next, run:

score = model.evaluate(x_test, y_test)
#10000/10000 [==============================] - 1s 53us/step

Next, run:

print("loss:",score[0])

#loss: 0.2084256855905056

Next, run:

print("accu:",score[1])

#accu: 0.9404
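The accuracy figure is the fraction of samples whose highest-probability class matches the true label. A minimal NumPy sketch of that comparison follows; the accuracy helper and the toy arrays are made up for illustration and use 3 classes instead of 10.

```python
import numpy as np


def accuracy(y_true_one_hot, y_prob):
    """Fraction of rows where the predicted class equals the true class."""
    true_classes = np.argmax(y_true_one_hot, axis=1)
    predicted_classes = np.argmax(y_prob, axis=1)
    return float(np.mean(true_classes == predicted_classes))


# Toy example: true classes 0, 1, 2, 1.
y_true = np.eye(3)[[0, 1, 2, 1]]
y_prob = np.array([
    [0.8, 0.1, 0.1],  # predicts 0 (correct)
    [0.2, 0.7, 0.1],  # predicts 1 (correct)
    [0.3, 0.6, 0.1],  # predicts 1 (wrong, true class is 2)
    [0.1, 0.5, 0.4],  # predicts 1 (correct)
])
print(accuracy(y_true, y_prob))  # 0.75
```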

References:

https://www.cnblogs.com/ncuhwxiong/p/9836648.html

https://www.cnblogs.com/shinny/p/9283372.html

https://blog.csdn.net/qq_41096996/article/details/85947560
