Simulating a Web Cluster on Windows PCs (Part 1)

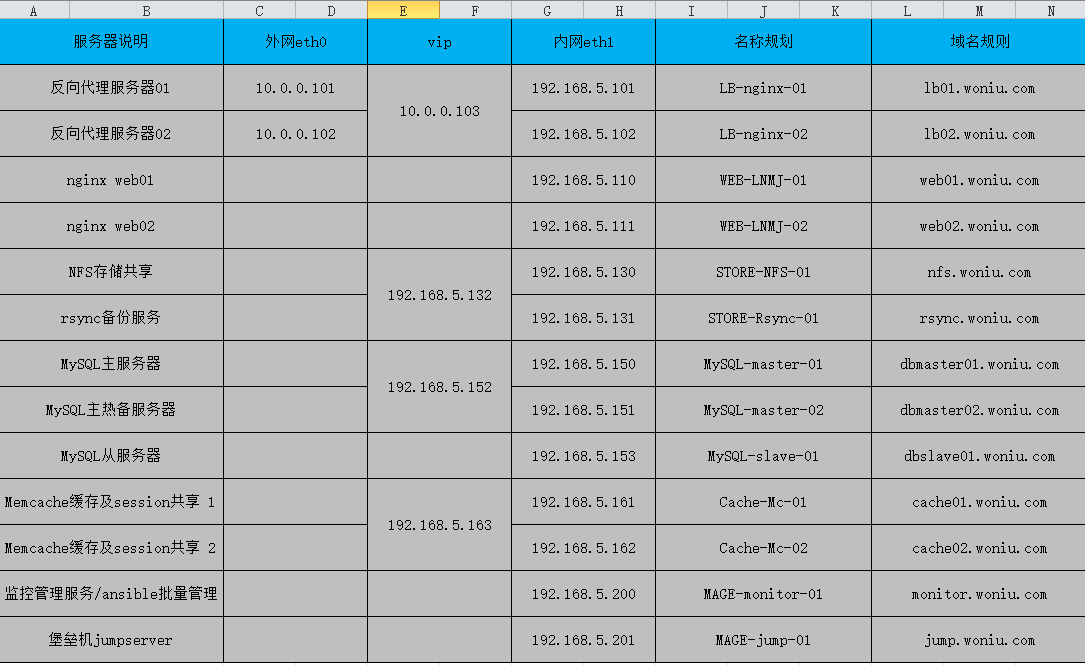

Resource planning

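1. Environment preparation

13 CentOS 7.2 virtual machines. Configure one VM first and apply the basic optimizations, then clone it to get the 13 VMs, spread across the Windows host machines.

Two Windows laptops, each with 8 GB of RAM; one of them is a bit tight on memory. I have no physical servers to play with, so this is the shoestring approach. Those of you with real hardware: I can only envy you.

VMware runs the 13 virtual machines.

eth0 uses NAT mode and eth1 uses bridged mode.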

2. Install Ansible on the monitoring host

Install it directly with yum:

```
yum install -y ansible
```

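If you don't want to set up SSH key pairs for Ansible (that is, you log in with passwords),

edit /etc/ansible/ansible.cfg and uncomment the following line by removing its leading #. This stops SSH from prompting you to confirm each new host's key, a prompt that would otherwise block the password logins:

host_key_checking = False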
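If you would rather script this edit than open an editor, the Python sketch below uncomments the line and then reads the result back with `configparser` to confirm the value. It assumes the stock ansible.cfg, where the line ships as `#host_key_checking = False` under `[defaults]`; the function name is made up for illustration.

```python
import configparser
import re


def disable_host_key_checking(cfg_text):
    """Uncomment a '#host_key_checking = False' (or ';...') line; leave everything else untouched."""
    return re.sub(
        r"^[#;]\s*(host_key_checking\s*=\s*False)\s*$",
        r"\1",
        cfg_text,
        flags=re.MULTILINE,
    )


# Assumed excerpt of a stock /etc/ansible/ansible.cfg
sample_cfg = """[defaults]
#inventory      = /etc/ansible/hosts
#host_key_checking = False
"""

updated = disable_host_key_checking(sample_cfg)

# Parse the result to make sure the setting is now active and reads as False
parser = configparser.ConfigParser()
parser.read_string(updated)
print(parser.getboolean("defaults", "host_key_checking"))  # False
```

On the real monitor host you would read /etc/ansible/ansible.cfg, pass its text through the function and write it back. Note that password logins via `ansible_ssh_pass` also need the `sshpass` program installed on the control host.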

3. Configure the Ansible inventory according to the resource allocation table

```
[root@mage-monitor- ~]# tail - /etc/ansible/hosts
192.168.5.103 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.110 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.111 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.130 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.131 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.132 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.150 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.151 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.152 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.153 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.161 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.162 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.163 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.200 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
192.168.5.201 ansible_ssh_port= ansible_ssh_user=root ansible_ssh_pass=
```
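Typing a dozen-plus nearly identical lines by hand is error-prone. A small Python sketch can render them from the resource table instead, and can also put hosts into INI groups such as `[web]`, so later commands can target a role (for example `ansible web -m ping`). The group membership follows the role names in the /etc/hosts file below; the group names, SSH port 22 and the `CHANGE_ME` password are my placeholders, not values from the original setup.

```python
def render_inventory(groups, port=22, user="root", password="CHANGE_ME"):
    """Render an INI inventory in the 'IP ansible_ssh_port=... ansible_ssh_user=... ansible_ssh_pass=...' style."""
    lines = []
    for group, ips in groups.items():
        lines.append(f"[{group}]")  # INI group header
        for ip in ips:
            lines.append(
                f"{ip} ansible_ssh_port={port} ansible_ssh_user={user} ansible_ssh_pass={password}"
            )
        lines.append("")  # blank line between groups
    return "\n".join(lines)


# Hypothetical grouping based on the WEB-LNMJ and MySQL entries in /etc/hosts
groups = {
    "web": ["192.168.5.110", "192.168.5.111"],
    "db": ["192.168.5.150", "192.168.5.151", "192.168.5.153"],
}
print(render_inventory(groups))
```

Writing the output to /etc/ansible/hosts (after replacing the placeholder password) gives the same per-host variables as the hand-written file, plus groups.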

Test the configuration:

```
[root@mage-monitor- ~]# ansible all -m ping
192.168.5.130 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.102 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.110 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.101 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.111 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.131 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.161 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.150 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.153 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.151 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.162 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.201 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.200 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
```
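With this many hosts it is easy to overlook one that did not answer. A minimal Python sketch, assuming the default ad-hoc output format shown above (`host | STATUS => {`, with a trailing `!` on statuses such as `UNREACHABLE!`), can cross-check the replies against the inventory. The sample text in it is a trimmed, made-up example, not output from this cluster.

```python
import re

# Header line Ansible prints for each host in ad-hoc output, e.g.
# "192.168.5.130 | SUCCESS => {" or "192.168.5.132 | UNREACHABLE! => {"
HEADER = re.compile(r"^(\S+) \| ([A-Z]+)!? =>")


def summarize(output, inventory):
    """Split inventory hosts into replied-OK, replied-with-error and never-mentioned."""
    status = {}
    for line in output.splitlines():
        match = HEADER.match(line)
        if match:
            status[match.group(1)] = match.group(2)
    return {
        "ok": sorted(h for h, s in status.items() if s == "SUCCESS"),
        "failed": sorted(h for h, s in status.items() if s != "SUCCESS"),
        "missing": sorted(set(inventory) - set(status)),
    }


# Made-up, trimmed sample in the same shape as the real output above
sample = """192.168.5.130 | SUCCESS => {
    "changed": false,
    "ping": "pong"
}
192.168.5.132 | UNREACHABLE! => {
    "changed": false,
    "unreachable": true
}"""

inventory = ["192.168.5.130", "192.168.5.131", "192.168.5.132"]
print(summarize(sample, inventory))
```

`sorted()` orders the addresses as strings, which is good enough for a checklist of hosts to look at.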

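4. Configure hostname resolution in /etc/hosts (all hosts)

First write the file once on the monitor host, then push it to every host in bulk with Ansible's copy module.

Pasting the rows straight from the Excel resource sheet works too.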

```
[root@mage-monitor- ~]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
:: localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.5.101 LB-nginx- lb01.woniu.com
192.168.5.102 LB-nginx- lb02.woniu.com
192.168.5.110 WEB-LNMJ- web01.woniu.com
192.168.5.111 WEB-LNMJ- web02.woniu.com
192.168.5.130 STORE-NFS- nfs.woniu.com
192.168.5.131 STORE-Rsync- rsync.woniu.com
192.168.5.150 MySQL-master- dbmaster01.woniu.com
192.168.5.151 MySQL-master- dbmaster02.woniu.com
192.168.5.153 MySQL-slave- dbslave01.woniu.com
192.168.5.161 Cache-Mc- cache01.woniu.com
192.168.5.162 Cache-Mc- cache02.woniu.com
192.168.5.200 MAGE-monitor- monitor.woniu.com
192.168.5.201 MAGE-jump- jump.woniu.com
```
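Pasting from Excel works because cells copied from a spreadsheet arrive tab-separated, and /etc/hosts accepts any run of spaces or tabs between the IP address and its names. The Python sketch below (the function name is my own) parses hosts-file text the same way, which is handy for checking a pasted table before distributing it.

```python
def parse_hosts(text):
    """Map each hostname/alias to its IP address, reading /etc/hosts-style text."""
    mapping = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments, skip blank lines
        if not line:
            continue
        ip, *names = line.split()  # split() with no argument treats tabs and spaces alike
        for name in names:
            mapping.setdefault(name, ip)  # if a name appears twice, keep the first entry
    return mapping


# One row typed with spaces, and the same row as pasted from a spreadsheet (tab-separated)
spaced = "192.168.5.110 WEB-LNMJ- web01.woniu.com"
tabbed = "192.168.5.110\tWEB-LNMJ-\tweb01.woniu.com"

print(parse_hosts(spaced) == parse_hosts(tabbed))  # True
print(parse_hosts(tabbed)["web01.woniu.com"])      # 192.168.5.110
```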

Spot-check a random entry to see whether it really works.

Result: it works.
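The spot check can also be scripted. A minimal Python sketch, meant to run on one of the cluster hosts once the new /etc/hosts is in place: `socket.gethostbyname` goes through the system resolver, and with CentOS's default /etc/nsswitch.conf the `files` source (/etc/hosts) is consulted before DNS. The helper names are hypothetical.

```python
import socket


def resolve(name):
    """IPv4 address the system resolver returns for name, or None if the lookup fails."""
    try:
        return socket.gethostbyname(name)
    except OSError:  # socket.gaierror and socket.herror are OSError subclasses
        return None


def check(expected):
    """For each hostname, report whether it resolves to the IP listed in the hosts table."""
    return {name: resolve(name) == ip for name, ip in expected.items()}


# Safe to run anywhere: localhost comes from the local /etc/hosts
print(resolve("localhost"))
```

On a cluster host, `check({"web01.woniu.com": "192.168.5.110", "dbslave01.woniu.com": "192.168.5.153"})` should report `True` for every name if the distributed file is in effect.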

5. Distribute the file with the Ansible copy module

```
[root@mage-monitor- ~]# ansible all -m copy -a "src=/etc/hosts dest=/etc/ backup=yes"
192.168.5.111 | SUCCESS => {
    "backup_file": "/etc/hosts.4654.2018-07-04@05:43:06~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697385.22-47924055524411/source",
    "state": "file",
    "uid":
}
192.168.5.130 | SUCCESS => {
    "backup_file": "/etc/hosts.4615.2018-07-04@05:43:07~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697385.3-197755389326565/source",
    "state": "file",
    "uid":
}
192.168.5.110 | SUCCESS => {
    "backup_file": "/etc/hosts.4147.2018-07-04@05:43:07~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697385.16-251244921102860/source",
    "state": "file",
    "uid":
}
192.168.5.101 | SUCCESS => {
    "backup_file": "/etc/hosts.9355.2018-07-04@17:43:07~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697385.08-106777289042025/source",
    "state": "file",
    "uid":
}
192.168.5.102 | SUCCESS => {
    "backup_file": "/etc/hosts.9438.2018-07-04@17:43:07~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697385.09-259223683192222/source",
    "state": "file",
    "uid":
}
192.168.5.131 | SUCCESS => {
    "backup_file": "/etc/hosts.4552.2018-07-04@05:43:08~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697387.2-149000912314060/source",
    "state": "file",
    "uid":
}
192.168.5.161 | SUCCESS => {
    "backup_file": "/etc/hosts.3986.2018-07-04@05:43:08~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697387.46-259570305288606/source",
    "state": "file",
    "uid":
}
192.168.5.150 | SUCCESS => {
    "backup_file": "/etc/hosts.13119.2018-07-04@17:43:08~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697387.21-147931918956879/source",
    "state": "file",
    "uid":
}
192.168.5.153 | SUCCESS => {
    "backup_file": "/etc/hosts.11253.2018-07-04@17:43:08~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697387.46-183080296309039/source",
    "state": "file",
    "uid":
}
192.168.5.151 | SUCCESS => {
    "backup_file": "/etc/hosts.10615.2018-07-04@17:43:08~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697387.34-187115266458800/source",
    "state": "file",
    "uid":
}
192.168.5.162 | SUCCESS => {
    "backup_file": "/etc/hosts.4544.2018-07-04@05:43:09~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697388.5-127274012015792/source",
    "state": "file",
    "uid":
}
192.168.5.200 | SUCCESS => {
    "changed": false,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "gid": ,
    "group": "root",
    "mode": "",
    "owner": "root",
    "path": "/etc/hosts",
    "size": ,
    "state": "file",
    "uid":
}
192.168.5.201 | SUCCESS => {
    "backup_file": "/etc/hosts.4501.2018-07-04@05:43:10~",
    "changed": true,
    "checksum": "221c515383928f333f15de0e7b68a49de47bf1d8",
    "dest": "/etc/hosts",
    "gid": ,
    "group": "root",
    "md5sum": "39a534a177bbef2d011ba5c1db448f70",
    "mode": "",
    "owner": "root",
    "size": ,
    "src": "/root/.ansible/tmp/ansible-tmp-1530697389.06-77460370855032/source",
    "state": "file",
    "uid":
}
```

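Every other host reports a yellow SUCCESS, while host 200 shows green, because its /etc/hosts is the very file I distributed.

Where the content is already identical, the file is not overwritten ("changed": false). On all the other hosts the content differed, so the file was overwritten ("changed": true), which is why they are shown in yellow.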
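The yellow/green split is Ansible's idempotence at work: copy only rewrites the destination when its content differs from the source, and the 40-hex-digit `checksum` field in the output is a SHA-1 digest. The Python sketch below imitates that decision under those assumptions; it is a simplified illustration with made-up function names, not Ansible's actual implementation.

```python
import hashlib
import os
import shutil
import tempfile


def sha1_of(path):
    """SHA-1 of a file, read in chunks (the digest type shown in the "checksum" field)."""
    digest = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_if_changed(src, dest):
    """Copy src over dest only when contents differ; return the equivalent of "changed"."""
    if os.path.exists(dest) and sha1_of(src) == sha1_of(dest):
        return False  # identical: leave the file alone (green, "changed": false)
    shutil.copyfile(src, dest)
    return True  # rewritten (yellow, "changed": true)


with tempfile.TemporaryDirectory() as tmp:
    src = os.path.join(tmp, "hosts.new")
    dest = os.path.join(tmp, "hosts")
    with open(src, "w") as f:
        f.write("192.168.5.110 web01.woniu.com\n")
    with open(dest, "w") as f:
        f.write("127.0.0.1 localhost\n")
    print(copy_if_changed(src, dest))  # True: contents differed
    print(copy_if_changed(src, dest))  # False: already identical
```

Running the same ad-hoc copy command a second time should therefore turn every host green.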

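I also added the parameter backup=yes, which keeps a copy of the original file before overwriting it (see the "backup_file" field in the output).

To verify, pick any host: its /etc/hosts has been replaced,

and the original has been backed up alongside it.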
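The backup names in the output share one shape, `/etc/hosts.<number>.<date>@<time>~`. I am assuming here that the number is the process ID of the module run on the remote host and that the timestamp is taken from that host's local clock; if so, that would explain why hosts 101, 102, 150, 151 and 153 show 17:43 while the rest show 05:43 (their time zones are most likely set differently). A Python sketch that builds a name of the same shape and keeps a copy, under those assumptions (function names are hypothetical):

```python
import os
import shutil
import time


def backup_name(path, pid=None, when=None):
    """Build '<path>.<pid>.<YYYY-MM-DD@HH:MM:SS>~', the shape of the backup_file values above."""
    pid = os.getpid() if pid is None else pid
    when = time.localtime() if when is None else when  # local time of the machine running this
    return f"{path}.{pid}.{time.strftime('%Y-%m-%d@%H:%M:%S', when)}~"


def make_backup(path):
    """Copy path to a timestamped sibling (keeping its metadata) and return the backup's name."""
    dest = backup_name(path)
    shutil.copy2(path, dest)
    return dest


# Reproduce the name reported for 192.168.5.111 (PID 4654, 2018-07-04 05:43:06 local time)
stamp = time.struct_time((2018, 7, 4, 5, 43, 6, 2, 185, 0))
print(backup_name("/etc/hosts", pid=4654, when=stamp))  # /etc/hosts.4654.2018-07-04@05:43:06~
```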

That completes the base environment. Next comes installing and configuring software on each host.
