[Implementing a Convolutional Neural Network in Python] Starting Training

Code source: https://github.com/eriklindernoren/ML-From-Scratch

Implementation of the Conv2D convolutional layer (with stride and padding): https://www.cnblogs.com/xiximayou/p/12706576.html

Activation functions (sigmoid, softmax, tanh, relu, leakyrelu, elu, selu, softplus): https://www.cnblogs.com/xiximayou/p/12713081.html

Loss functions (mean squared error, cross-entropy): https://www.cnblogs.com/xiximayou/p/12713198.html

Optimizers (SGD, Nesterov, Adagrad, Adadelta, RMSprop, Adam): https://www.cnblogs.com/xiximayou/p/12713594.html

Backpropagation through the convolutional layer: https://www.cnblogs.com/xiximayou/p/12713930.html

Fully connected layer: https://www.cnblogs.com/xiximayou/p/12720017.html

Batch normalization layer: https://www.cnblogs.com/xiximayou/p/12720211.html

Pooling layers: https://www.cnblogs.com/xiximayou/p/12720324.html

padding2D: https://www.cnblogs.com/xiximayou/p/12720454.html

Flatten layer: https://www.cnblogs.com/xiximayou/p/12720518.html

UpSampling2D layer: https://www.cnblogs.com/xiximayou/p/12720558.html

Dropout layer: https://www.cnblogs.com/xiximayou/p/12720589.html

Activation layer: https://www.cnblogs.com/xiximayou/p/12720622.html

Defining the training and testing process: https://www.cnblogs.com/xiximayou/p/12725873.html

The code is in mlfromscratch/examples/convolutional_neural_network.py:

from __future__ import print_function

from sklearn import datasets

import matplotlib.pyplot as plt

import math

import numpy as np # Import helper functions

from mlfromscratch.deep_learning import NeuralNetwork

from mlfromscratch.utils import train_test_split, to_categorical, normalize

from mlfromscratch.utils import get_random_subsets, shuffle_data, Plot

from mlfromscratch.utils.data_operation import accuracy_score

from mlfromscratch.deep_learning.optimizers import StochasticGradientDescent, Adam, RMSprop, Adagrad, Adadelta

from mlfromscratch.deep_learning.loss_functions import CrossEntropy

from mlfromscratch.utils.misc import bar_widgets

from mlfromscratch.deep_learning.layers import Dense, Dropout, Conv2D, Flatten, Activation, MaxPooling2D

from mlfromscratch.deep_learning.layers import AveragePooling2D, ZeroPadding2D, BatchNormalization, RNN def main(): #----------

# Conv Net

#---------- optimizer = Adam() data = datasets.load_digits()

X = data.data

y = data.target # Convert to one-hot encoding

y = to_categorical(y.astype("int")) X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, seed=1) # Reshape X to (n_samples, channels, height, width)

X_train = X_train.reshape((-1,1,8,8))

X_test = X_test.reshape((-1,1,8,8)) clf = NeuralNetwork(optimizer=optimizer,

loss=CrossEntropy,

validation_data=(X_test, y_test)) clf.add(Conv2D(n_filters=16, filter_shape=(3,3), stride=1, input_shape=(1,8,8), padding='same'))

clf.add(Activation('relu'))

clf.add(Dropout(0.25))

clf.add(BatchNormalization())

clf.add(Conv2D(n_filters=32, filter_shape=(3,3), stride=1, padding='same'))

clf.add(Activation('relu'))

clf.add(Dropout(0.25))

clf.add(BatchNormalization())

clf.add(Flatten())

clf.add(Dense(256))

clf.add(Activation('relu'))

clf.add(Dropout(0.4))

clf.add(BatchNormalization())

clf.add(Dense(10))

clf.add(Activation('softmax')) print ()

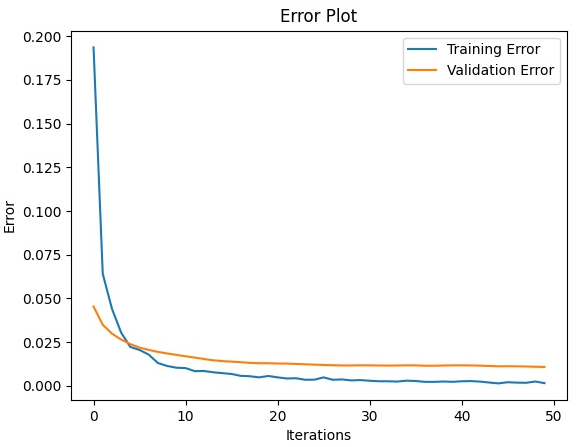

clf.summary(name="ConvNet") train_err, val_err = clf.fit(X_train, y_train, n_epochs=50, batch_size=256) # Training and validation error plot

n = len(train_err)

training, = plt.plot(range(n), train_err, label="Training Error")

validation, = plt.plot(range(n), val_err, label="Validation Error")

plt.legend(handles=[training, validation])

plt.title("Error Plot")

plt.ylabel('Error')

plt.xlabel('Iterations')

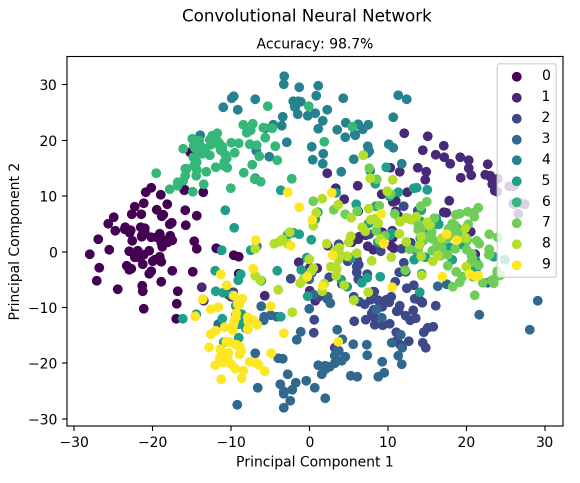

plt.show() _, accuracy = clf.test_on_batch(X_test, y_test)

print ("Accuracy:", accuracy) y_pred = np.argmax(clf.predict(X_test), axis=1)

X_test = X_test.reshape(-1, 8*8)

# Reduce dimension to 2D using PCA and plot the results

Plot().plot_in_2d(X_test, y_pred, title="Convolutional Neural Network", accuracy=accuracy, legend_labels=range(10)) if __name__ == "__main__":

main()

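As before, let's go through it step by step:

1. The optimizer is Adam().

2. The dataset is the handwritten digits set from sklearn.datasets. The shapes of the data and labels, the first ten samples, and their labels are shown below: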

    (1797, 64)
    (1797,)
    [[ 0. 0. 5. 13. 9. 1. 0. 0. 0. 0. 13. 15. 10. 15. 5. 0. 0. 3.
      15. 2. 0. 11. 8. 0. 0. 4. 12. 0. 0. 8. 8. 0. 0. 5. 8. 0.
      0. 9. 8. 0. 0. 4. 11. 0. 1. 12. 7. 0. 0. 2. 14. 5. 10. 12.
      0. 0. 0. 0. 6. 13. 10. 0. 0. 0.]
     [ 0. 0. 0. 12. 13. 5. 0. 0. 0. 0. 0. 11. 16. 9. 0. 0. 0. 0.
      3. 15. 16. 6. 0. 0. 0. 7. 15. 16. 16. 2. 0. 0. 0. 0. 1. 16.
      16. 3. 0. 0. 0. 0. 1. 16. 16. 6. 0. 0. 0. 0. 1. 16. 16. 6.
      0. 0. 0. 0. 0. 11. 16. 10. 0. 0.]
     [ 0. 0. 0. 4. 15. 12. 0. 0. 0. 0. 3. 16. 15. 14. 0. 0. 0. 0.
      8. 13. 8. 16. 0. 0. 0. 0. 1. 6. 15. 11. 0. 0. 0. 1. 8. 13.
      15. 1. 0. 0. 0. 9. 16. 16. 5. 0. 0. 0. 0. 3. 13. 16. 16. 11.
      5. 0. 0. 0. 0. 3. 11. 16. 9. 0.]
     [ 0. 0. 7. 15. 13. 1. 0. 0. 0. 8. 13. 6. 15. 4. 0. 0. 0. 2.
      1. 13. 13. 0. 0. 0. 0. 0. 2. 15. 11. 1. 0. 0. 0. 0. 0. 1.
      12. 12. 1. 0. 0. 0. 0. 0. 1. 10. 8. 0. 0. 0. 8. 4. 5. 14.
      9. 0. 0. 0. 7. 13. 13. 9. 0. 0.]
     [ 0. 0. 0. 1. 11. 0. 0. 0. 0. 0. 0. 7. 8. 0. 0. 0. 0. 0.
      1. 13. 6. 2. 2. 0. 0. 0. 7. 15. 0. 9. 8. 0. 0. 5. 16. 10.
      0. 16. 6. 0. 0. 4. 15. 16. 13. 16. 1. 0. 0. 0. 0. 3. 15. 10.
      0. 0. 0. 0. 0. 2. 16. 4. 0. 0.]
     [ 0. 0. 12. 10. 0. 0. 0. 0. 0. 0. 14. 16. 16. 14. 0. 0. 0. 0.
      13. 16. 15. 10. 1. 0. 0. 0. 11. 16. 16. 7. 0. 0. 0. 0. 0. 4.
      7. 16. 7. 0. 0. 0. 0. 0. 4. 16. 9. 0. 0. 0. 5. 4. 12. 16.
      4. 0. 0. 0. 9. 16. 16. 10. 0. 0.]
     [ 0. 0. 0. 12. 13. 0. 0. 0. 0. 0. 5. 16. 8. 0. 0. 0. 0. 0.
      13. 16. 3. 0. 0. 0. 0. 0. 14. 13. 0. 0. 0. 0. 0. 0. 15. 12.
      7. 2. 0. 0. 0. 0. 13. 16. 13. 16. 3. 0. 0. 0. 7. 16. 11. 15.
      8. 0. 0. 0. 1. 9. 15. 11. 3. 0.]
     [ 0. 0. 7. 8. 13. 16. 15. 1. 0. 0. 7. 7. 4. 11. 12. 0. 0. 0.
      0. 0. 8. 13. 1. 0. 0. 4. 8. 8. 15. 15. 6. 0. 0. 2. 11. 15.
      15. 4. 0. 0. 0. 0. 0. 16. 5. 0. 0. 0. 0. 0. 9. 15. 1. 0.
      0. 0. 0. 0. 13. 5. 0. 0. 0. 0.]
     [ 0. 0. 9. 14. 8. 1. 0. 0. 0. 0. 12. 14. 14. 12. 0. 0. 0. 0.
      9. 10. 0. 15. 4. 0. 0. 0. 3. 16. 12. 14. 2. 0. 0. 0. 4. 16.
      16. 2. 0. 0. 0. 3. 16. 8. 10. 13. 2. 0. 0. 1. 15. 1. 3. 16.
      8. 0. 0. 0. 11. 16. 15. 11. 1. 0.]
     [ 0. 0. 11. 12. 0. 0. 0. 0. 0. 2. 16. 16. 16. 13. 0. 0. 0. 3.
      16. 12. 10. 14. 0. 0. 0. 1. 16. 1. 12. 15. 0. 0. 0. 0. 13. 16.
      9. 15. 2. 0. 0. 0. 0. 3. 0. 9. 11. 0. 0. 0. 0. 0. 9. 15.
      4. 0. 0. 0. 9. 12. 13. 3. 0. 0.]]
    [0 1 2 3 4 5 6 7 8 9]

3. Next comes the to_categorical() function, defined in data_manipulation.py under mlfromscratch.utils:

    def to_categorical(x, n_col=None):
        """ One-hot encoding of nominal values """
        if not n_col:
            n_col = np.amax(x) + 1
        one_hot = np.zeros((x.shape[0], n_col))
        one_hot[np.arange(x.shape[0]), x] = 1
        return one_hot

It converts the integer labels into one-hot encoding.
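To make the effect concrete, here is a small self-contained sketch that repeats the function above and applies it to three toy labels (the labels are made up for illustration and are not taken from the digits data):

```python
import numpy as np

def to_categorical(x, n_col=None):
    """One-hot encode a 1-D array of integer class labels."""
    if not n_col:
        n_col = np.amax(x) + 1          # number of classes inferred from the largest label
    one_hot = np.zeros((x.shape[0], n_col))
    one_hot[np.arange(x.shape[0]), x] = 1   # row i gets a 1 in column x[i]
    return one_hot

labels = np.array([0, 2, 1])            # toy labels, for illustration only
encoded = to_categorical(labels)
print(encoded)

# argmax over the class axis recovers the original integer labels,
# which is how the example script later turns predictions back into digits.
recovered = np.argmax(encoded, axis=1)
print(recovered)
```

Each row contains a single 1 in the column given by its label, so the ten digit classes become vectors of length 10.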

4. Split the data into training and test sets with train_test_split(), also in data_manipulation.py under mlfromscratch.utils:

    def train_test_split(X, y, test_size=0.5, shuffle=True, seed=None):
        """ Split the data into train and test sets """
        if shuffle:
            X, y = shuffle_data(X, y, seed)
        # Split the training data from test data in the ratio specified in
        # test_size
        split_i = len(y) - int(len(y) // (1 / test_size))
        X_train, X_test = X[:split_i], X[split_i:]
        y_train, y_test = y[:split_i], y[split_i:]

        return X_train, X_test, y_train, y_test
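As a rough check of the split arithmetic, the sketch below runs the same logic on placeholder data with the digits shape (1797, 64) and test_size=0.4. The shuffle_data helper here is a stand-in written for this example; it is only assumed to behave like the library's helper (shuffling X and y with the same permutation).

```python
import numpy as np

def shuffle_data(X, y, seed=None):
    # Stand-in for the library helper (assumed behaviour):
    # apply one random permutation to both X and y.
    rng = np.random.RandomState(seed)
    idx = rng.permutation(X.shape[0])
    return X[idx], y[idx]

def train_test_split(X, y, test_size=0.5, shuffle=True, seed=None):
    if shuffle:
        X, y = shuffle_data(X, y, seed)
    # Index of the first test sample
    split_i = len(y) - int(len(y) // (1 / test_size))
    return X[:split_i], X[split_i:], y[:split_i], y[split_i:]

# Placeholder data with the same shape as the digits dataset
X = np.arange(1797 * 64, dtype=float).reshape(1797, 64)
y = np.arange(1797) % 10

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, seed=1)
print(X_train.shape, X_test.shape)   # (1079, 64) (718, 64)
```

With 1797 samples, 1797 // 2.5 gives 718 test samples, leaving 1079 for training.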

5. The convolutional network expects input of shape [batchsize, channel, height, width], so the raw data must be reshaped from (1797, 64) to (1797, 1, 8, 8). Here batchsize is simply the number of samples.
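The reshape can be tried in isolation. This is a minimal sketch on two fake flattened images (the values are just a running counter, not real digits):

```python
import numpy as np

# Two fake flattened 8x8 "images", for illustration only
X = np.arange(2 * 64, dtype=float).reshape(2, 64)

# -1 lets NumPy infer the number of samples; 1 is the single grayscale channel
X_img = X.reshape((-1, 1, 8, 8))
print(X_img.shape)   # (2, 1, 8, 8)

# Pixel (row r, column c) of image i is element r * 8 + c of the flat vector
assert X_img[1, 0, 3, 5] == X[1, 3 * 8 + 5]

# The example script later flattens the test images again before plotting
X_back = X_img.reshape(-1, 8 * 8)
print(np.array_equal(X_back, X))   # True
```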

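6. Set up the training and testing process of the network: the optimizer, the loss function, and the test data (passed as validation_data).

7. Define the model architecture.

8. Print each layer's type, number of parameters, and output shape.

9. Feed the data into the model, setting the number of epochs and the batch size.

10. Compute the training and validation errors and plot them.

11. Compute the accuracy.

12. Plot the predictions for each class in the test set. This uses the plot_in_2d() function in misc.py under mlfromscratch.utils: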

    # Plot the dataset X and the corresponding labels y in 2D using PCA.
    def plot_in_2d(self, X, y=None, title=None, accuracy=None, legend_labels=None):
        X_transformed = self._transform(X, dim=2)
        x1 = X_transformed[:, 0]
        x2 = X_transformed[:, 1]
        class_distr = []

        y = np.array(y).astype(int)

        colors = [self.cmap(i) for i in np.linspace(0, 1, len(np.unique(y)))]

        # Plot the different class distributions
        for i, l in enumerate(np.unique(y)):
            _x1 = x1[y == l]
            _x2 = x2[y == l]
            _y = y[y == l]
            class_distr.append(plt.scatter(_x1, _x2, color=colors[i]))

        # Plot legend
        if not legend_labels is None:
            plt.legend(class_distr, legend_labels, loc=1)

        # Plot title
        if title:
            if accuracy:
                perc = 100 * accuracy
                plt.suptitle(title)
                plt.title("Accuracy: %.1f%%" % perc, fontsize=10)
            else:
                plt.title(title)

        # Axis labels
        plt.xlabel('Principal Component 1')
        plt.ylabel('Principal Component 2')

        plt.show()
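The _transform call is not shown in this post. Going by the comment above the function, it reduces X to two dimensions with PCA. The sketch below is one common way to do that with NumPy (eigendecomposition of the covariance matrix); pca_project is a hypothetical name, and this is an assumption about what the library does, not a copy of its code.

```python
import numpy as np

def pca_project(X, dim=2):
    """Project X onto its first `dim` principal components (illustrative sketch)."""
    X_centered = X - X.mean(axis=0)
    cov = np.cov(X_centered, rowvar=False)        # (n_features, n_features)
    eigvals, eigvecs = np.linalg.eigh(cov)        # eigh returns eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:dim]       # indices of the largest eigenvalues
    return X_centered.dot(eigvecs[:, order])

rng = np.random.RandomState(0)
X = rng.rand(100, 64)        # random stand-in for 100 flattened 8x8 images
X_2d = pca_project(X, dim=2)
print(X_2d.shape)            # (100, 2)
```

The two columns of the result play the role of x1 and x2 in plot_in_2d, i.e. the "Principal Component 1" and "Principal Component 2" axes.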

Now we can actually run it. I used Google Colab. First, clone the code with:

!git clone https://github.com/eriklindernoren/ML-From-Scratch.git

Then install it. From the ML-From-Scratch directory, run:

!python setup.py install

Finally, run:

!python mlfromscratch/examples/convolutional_neural_network.py

The final result:

    +---------+
    | ConvNet |
    +---------+
    Input Shape: (1, 8, 8)
    +----------------------+------------+--------------+
    | Layer Type           | Parameters | Output Shape |
    +----------------------+------------+--------------+
    | Conv2D               | 160        | (16, 8, 8)   |
    | Activation (ReLU)    | 0          | (16, 8, 8)   |
    | Dropout              | 0          | (16, 8, 8)   |
    | BatchNormalization   | 2048       | (16, 8, 8)   |
    | Conv2D               | 4640       | (32, 8, 8)   |
    | Activation (ReLU)    | 0          | (32, 8, 8)   |
    | Dropout              | 0          | (32, 8, 8)   |
    | BatchNormalization   | 4096       | (32, 8, 8)   |
    | Flatten              | 0          | (2048,)      |
    | Dense                | 524544     | (256,)       |
    | Activation (ReLU)    | 0          | (256,)       |
    | Dropout              | 0          | (256,)       |
    | BatchNormalization   | 512        | (256,)       |
    | Dense                | 2570       | (10,)        |
    | Activation (Softmax) | 0          | (10,)        |
    +----------------------+------------+--------------+
    Total Parameters: 538570

    Training: 100% [------------------------------------------------] Time: 0:01:32
    <Figure size 640x480 with 1 Axes>
    Accuracy: 0.9846796657381616
    <Figure size 640x480 with 1 Axes>
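The numbers in this output can be checked by hand. The sketch below assumes the usual formulas for convolutional and dense layers (weights plus one bias per output) and, for BatchNormalization, one gamma and one beta per element of the layer's input shape, which is what the table's values imply. The helper names are made up for this example and are not part of the library.

```python
def conv2d_params(n_filters, in_channels, kh, kw):
    # One kh x kw kernel per (filter, input channel) pair, plus one bias per filter
    return n_filters * in_channels * kh * kw + n_filters

def dense_params(n_in, n_out):
    # Weight matrix plus one bias per output unit
    return n_in * n_out + n_out

def batchnorm_params(shape):
    # Assumption inferred from the table: gamma and beta each have the input's shape
    n = 1
    for d in shape:
        n *= d
    return 2 * n

counts = [
    conv2d_params(16, 1, 3, 3),      # first Conv2D
    batchnorm_params((16, 8, 8)),
    conv2d_params(32, 16, 3, 3),     # second Conv2D
    batchnorm_params((32, 8, 8)),
    dense_params(32 * 8 * 8, 256),   # Flatten gives 2048 inputs
    batchnorm_params((256,)),
    dense_params(256, 10),
]
total = sum(counts)
print(counts, total)

# The reported accuracy is consistent with 707 correct predictions
# out of the 718 test samples produced by the 0.4 split of 1797.
n_test = int(1797 // (1 / 0.4))
n_correct = int(round(0.9846796657381616 * n_test))
print(n_correct, n_test)
```

The per-layer values match the Parameters column, and their sum matches the reported total of 538570.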

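This completes the step-by-step walk through the code for the whole convolutional neural network implementation. Reading the concepts alongside the code is a good way to deepen your understanding of convolutional neural networks in deep learning.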
