Azkaban_Oozie_action

http://azkaban.github.io/azkaban/docs/2.5/

There is no reason why MySQL was chosen except that it is a widely used DB. We are looking to implement compatibility with other DB's, although the search requirement on historically running jobs benefits from a relational data store.

【solve the problem of Hadoop job dependencies】

Azkaban was implemented at LinkedIn to solve the problem of Hadoop job dependencies. We had jobs that needed to run in order, from ETL jobs to data analytics products.

Initially a single server solution, with the increased number of Hadoop users over the years, Azkaban has evolved to be a more robust solution.

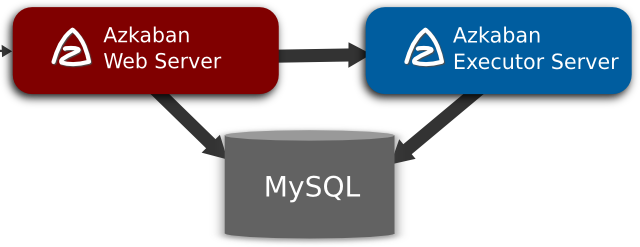

Azkaban consists of 3 key components:

- Relational Database (MySQL)

- AzkabanWebServer

- AzkabanExecutorServer

Relational Database (MySQL)

Azkaban uses MySQL to store much of its state. Both the AzkabanWebServer and the AzkabanExecutorServer access the DB.

How does AzkabanWebServer use the DB?

The web server uses the db for the following reasons:

- Project Management - The projects, the permissions on the projects as well as the uploaded files.

- Executing Flow State - Keep track of executing flows and which Executor is running them.

- Previous Flow/Jobs - Search through previous executions of jobs and flows as well as access their log files.

- Scheduler - Keeps the state of the scheduled jobs.

- SLA - Keeps all the sla rules

How does the AzkabanExecutorServer use the DB?

The executor server uses the db for the following reasons:

- Access the project - Retrieves project files from the db.

- Executing Flows/Jobs - Retrieves and updates data for flows and that are executing

- Logs - Stores the output logs for jobs and flows into the db.

- Interflow dependency - If a flow is running on a different executor, it will take state from the DB.

There is no reason why MySQL was chosen except that it is a widely used DB. We are looking to implement compatibility with other DB's, although the search requirement on historically running jobs benefits from a relational data store.

AzkabanWebServer

The AzkabanWebServer is the main manager to all of Azkaban. It handles project management, authentication, scheduler, and monitoring of executions. It also serves as the web user interface.

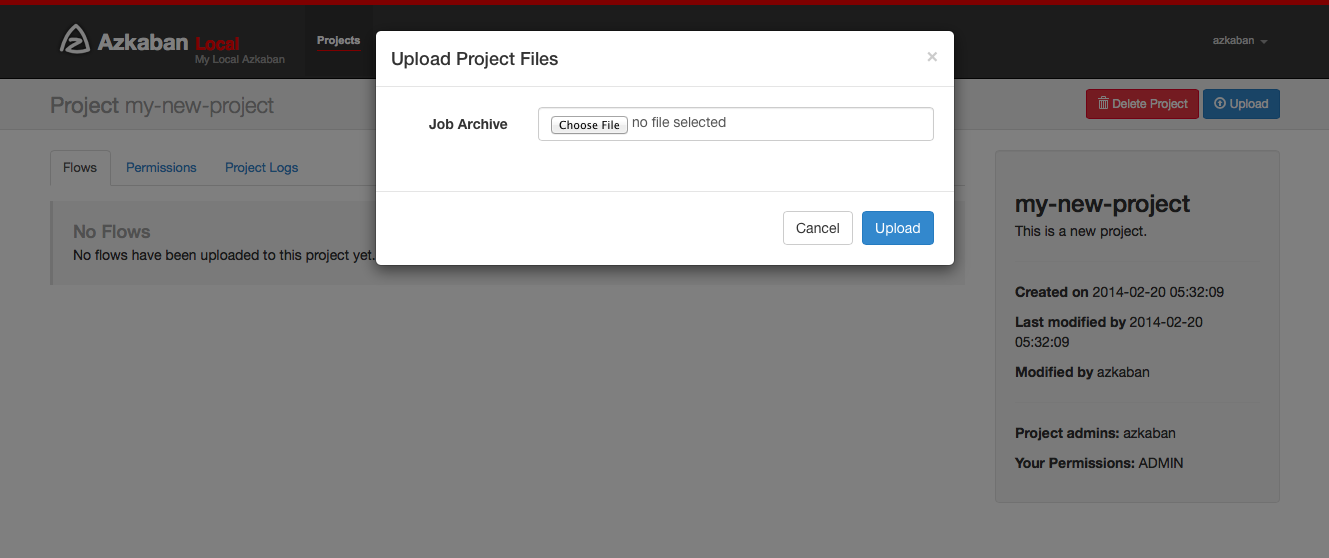

Using Azkaban is easy. Azkaban uses *.job key-value property files to define individual tasks in a work flow, and the _dependencies_ property to define the dependency chain of the jobs. These job files and associated code can be archived into a *.zip and uploaded through the web server through the Azkaban UI or through curl.

AzkabanExecutorServer

Previous versions of Azkaban had both the AzkabanWebServer and the AzkabanExecutorServer features in a single server. The Executor has since been separated into its own server. There were several reasons for splitting these services: we will soon be able to scale the number of executions and fall back on operating Executors if one fails. Also, we are able to roll our upgrades of Azkaban with minimal impact on the users. As Azkaban's usage grew, we found that upgrading Azkaban became increasingly more difficult as all times of the day became 'peak'.

【 no cyclical dependencies detected】

Select the archive file of your workflow files that you want to upload. Currently Azkaban only supports *.zip files. The zip should contain the *.job files and any files needed to run your jobs. Job names must be unique in a project.

Azkaban will validate the contents of the zip to make sure that dependencies are met and that there's no cyclical dependencies detected. If it finds any invalid flows, the upload will fail.

Uploads overwrite all files in the project. Any changes made to jobs will be wiped out after a new zip file is uploaded.

After a successful upload, you should see all of your flows listed on the screen.

http://oozie.apache.org/

Apache Oozie Workflow Scheduler for Hadoop

Overview

Oozie is a workflow scheduler system to manage Apache Hadoop jobs.

Oozie Workflow jobs are Directed Acyclical Graphs (DAGs) of actions.

Oozie Coordinator jobs are recurrent Oozie Workflow jobs triggered by time (frequency) and data availability.

Oozie is integrated with the rest of the Hadoop stack supporting several types of Hadoop jobs out of the box (such as Java map-reduce, Streaming map-reduce, Pig, Hive, Sqoop and Distcp) as well as system specific jobs (such as Java programs and shell scripts).

Oozie is a scalable, reliable and extensible system.

Azkaban_Oozie_action的更多相关文章

随机推荐

- WPS复制时删除超链接

按Ctrl+A全选,之后再按Ctrl+Shift+F9,即可一次性全部删除超链接.

- [HAOI2011]Problem b&&[POI2007]Zap

题目大意: $q(q\leq50000)$组询问,对于给定的$a,b,c,d(a,b,c,d\leq50000)$,求$\displaystyle\sum_{i=a}^b\sum_{j=c}^d[\g ...

- php中for与foreach对比

总体来说,如果数据库过几十万了,才能看出来快一点还是慢一点,如果低于10万的循环,就不用测试了.php推荐用foreach.循环数字数组时,for需要事先count($arr)计算数组长度,需要引入自 ...

- 避免在block中循环引用(Retain Cycle in Block)

让我们长话短说.请参阅如下代码: - (IBAction)didTapUploadButton:(id)sender { NSString *clientID = @"YOUR_CLIENT ...

- Blocks的申明调用与Queue当做锁的用法

Blocks的申明与调用 话说Blocks在方法内使用还是挺方便的,之前都是把相同的代码封装成外部函数,然后在一个方法里需要的时候调用,这样挺麻烦的.使用Blocks之后,我们可以把相同代码在这个方法 ...

- Python中xml、字典、json、类四种数据的转换

最近学python,觉得python很强很大很强大,写一个学习随笔,当作留念注:xml.字典.json.类四种数据的转换,从左到右依次转换,即xml要转换为类时,先将xml转换为字典,再将字典转换为j ...

- mootools客户端框架

mootools客户端框架 学习:http://www.chinamootools.com/ 官网:https://mootools.net/ 下载地址: https://github.com/moo ...

- 白盒测试中如何实现真正意义上并发测试(Java)

在这个话题开始之前,首先我们来弄清楚为什么要做并发测试? 一般并发测试,是指模拟并发访问,测试多用户并发访问同一个应用.模块.数据时是否产生隐藏的并发问题,如内存泄漏.线程锁.资源争用问题. 站在性能 ...

- ps快捷键记录

alt+delete 前景色填充 ctrl+delete 背景色填充 alt+shift+鼠标调节 变换选取,做圆环 ctrl+t 自由变换 alt+鼠标拖动 快捷复制某区域 delete ...

- vs:如何添加.dll文件

Newtonsoft.Json.dll 一个第三方的json序列化和反序列化dll,转换成json供页面使用,页面json数据转换成对象,供.net使用 Mysql.Data.dll mysql数 ...