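Crawling Jiayuan (世纪佳缘) member profiles into MySQL, downloading photos locally, then selecting accounts from the database, messaging them, and updating the send status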

With this approach you can store every member's profile, and with multithreaded sending you can message a few hundred members in about 10 seconds.

The first step is to crawl the account information from the filtered search results.

# -*- coding: utf-8 -*-
import requests, re, json, time, threadpool, os
from mydba import MySql  # local MySQL helper module (not shown in this post)
from gevent import monkey  # monkey.patch_all()

header = {

    # Login state is carried by the session cookie; paste the one from your own logged-in browser session.
    'Cookie': '<cookie string copied from a logged-in browser session>',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36',

}


def fun(i):
    # Fetch page i of the filtered search results.
    url = 'http://search.jiayuan.com/v2/search_v2.php?sex=f&key=&stc=1%3A4403%2C2%3A23.27%2C3%3A155.170%2C23%3A1&sn=default&sv=1&p=' + str(i) + '&f=select&listStyle=bigPhoto&pri_uid=xxxx&jsversion=v5'
    # Keep retrying until the request succeeds.
    while True:
        try:
            res = requests.get(url, headers=header)
            break
        except Exception as e:
            print(e)
    content = res.content.decode('utf-8')
    # The response is JSON wrapped in extra text, so extract the part between the ##jiayser## markers.
    dictx = json.loads(re.findall(r'##jiayser##([\s\S]*?)##jiayser##', content)[0])
    for dcx in dictx['userInfo']:
        uhash = dcx['helloUrl'].split('uhash=')[-1]
        crawled_time = time.strftime('%Y-%m-%d %H:%M:%S', time.localtime(time.time()))
        # Name the columns explicitly: the table has more columns (last_hello_time, hello_times) than are inserted here.
        sql = '''insert into shijijiayuan (nickname,age,sex,education,height,uhash,image,marriage,matchCondition,uid,work_location,work_sublocation,shortnote,crawled_time) values("%s","%s","%s","%s","%s","%s","%s","%s","%s","%s","%s","%s","%s","%s")''' % (
            dcx['nickname'], dcx['age'], dcx['sex'], dcx['education'], dcx['height'], uhash, dcx['image'],
            dcx['marriage'], dcx['matchCondition'], dcx['uid'], dcx['work_location'], dcx['work_sublocation'],
            dcx['shortnote'], crawled_time)
        # Download the photo only if it has not been saved already.
        image_path = 'images/%s_%s.jpg' % (dcx['nickname'], dcx['uid'])
        if not os.path.exists(image_path):
            while True:
                try:
                    res2 = requests.get(dcx['image'], headers=header, timeout=30)
                    break
                except Exception as e:
                    print(e)
            try:
                with open(image_path, 'wb') as f:
                    f.write(res2.content)
                print('%s: image saved' % dcx['uid'])
            except Exception as e:
                print('%s: failed to save image: %s' % (dcx['uid'], str(e)))
        # Check whether this uid is already in the database; uid uniquely identifies an account.
        sql2 = 'select 1 from shijijiayuan where uid=%s' % dcx['uid']
        mysqlx2 = MySql('localhost', 'root', '', 'test', 'utf8')
        mysqlx2.query(sql2)
        is_exist = mysqlx2.cursor.fetchone()
        if is_exist == (1,):
            print('already exists: %s' % dcx['uid'])
        else:
            mysqlx2.query(sql)
        del mysqlx2


pool = threadpool.ThreadPool(300)  # threadpool thread pool with 300 threads
requestsx = threadpool.makeRequests(fun, list(range(1, 500)))
for req in requestsx:
    pool.putRequest(req)
pool.wait()

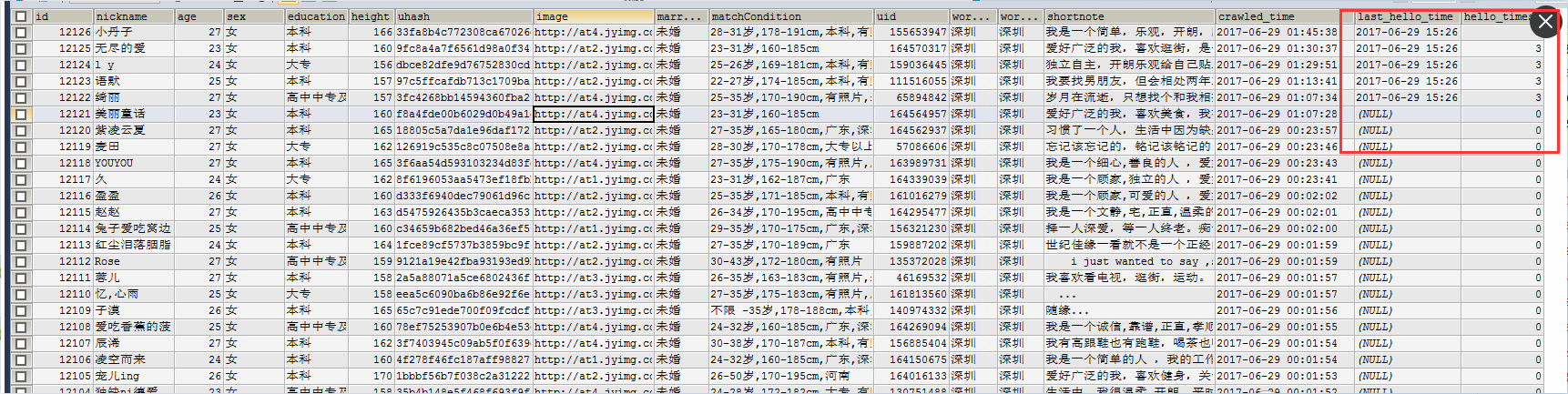

After running this, the database fills up with member records.
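The crawler wraps each `requests.get` call in a loop that retries forever, so a page that never loads would block its thread indefinitely. A minimal sketch of a bounded retry is shown here; the `retry` helper and the `flaky_fetch` stand-in are hypothetical names introduced for illustration and are not part of the original script.

```python
import time


def retry(func, attempts=3, delay=0.0):
    """Call func() until it succeeds or attempts run out; re-raise the last error.

    Assumes attempts >= 1.
    """
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except Exception as exc:  # broad on purpose, mirroring the crawler
            last_error = exc
            if attempt < attempts:
                time.sleep(delay)
    raise last_error


# Stand-in for requests.get(url, headers=header): fails twice, then succeeds.
calls = {'n': 0}


def flaky_fetch():
    calls['n'] += 1
    if calls['n'] < 3:
        raise IOError('temporary failure')
    return 'page body'


result = retry(flaky_fetch, attempts=5)
print(result, calls['n'])
```

In the crawler, the callable could be something like `lambda: requests.get(url, headers=header, timeout=30)`, with a small `delay` between attempts.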

Here is the CREATE TABLE statement:

CREATE TABLE `shijijiayuan` (
  `id` int(10) NOT NULL AUTO_INCREMENT,
  `nickname` varchar(20) DEFAULT NULL,
  `age` int(5) DEFAULT NULL,
  `sex` varchar(5) DEFAULT NULL,
  `education` varchar(30) DEFAULT NULL,
  `height` int(5) DEFAULT NULL,
  `uhash` varchar(50) DEFAULT NULL,
  `image` varchar(160) DEFAULT NULL,
  `marriage` varchar(10) DEFAULT NULL,
  `matchCondition` varchar(200) DEFAULT NULL,
  `uid` int(15) DEFAULT NULL,
  `work_location` varchar(10) DEFAULT NULL,
  `work_sublocation` varchar(10) DEFAULT NULL,
  `shortnote` varchar(1000) DEFAULT NULL,
  `crawled_time` datetime DEFAULT NULL,
  `last_hello_time` timestamp NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,
  `hello_times` int(3) DEFAULT '0',
  PRIMARY KEY (`id`)
) ENGINE=InnoDB AUTO_INCREMENT=0 DEFAULT CHARSET=utf8;
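The crawler builds its INSERT by string formatting, which breaks as soon as a nickname or note contains a double quote. A minimal sketch of the same check-then-insert logic with parameterized queries follows. It uses the standard-library `sqlite3` module and a cut-down table purely so the example is self-contained; this is an assumption for illustration, not the author's `mydba` wrapper. With a MySQL driver such as PyMySQL the placeholder would be `%s` rather than `?`.

```python
import sqlite3

# In-memory stand-in for the MySQL table, reduced to three columns.
conn = sqlite3.connect(':memory:')
conn.execute(
    'CREATE TABLE shijijiayuan ('
    ' id INTEGER PRIMARY KEY AUTOINCREMENT,'
    ' nickname TEXT, uid INTEGER UNIQUE, shortnote TEXT)'
)


def save_member(conn, nickname, uid, shortnote):
    """Insert one row unless uid is already stored; return True if inserted."""
    exists = conn.execute(
        'SELECT 1 FROM shijijiayuan WHERE uid = ?', (uid,)
    ).fetchone()
    if exists:
        return False
    # Values are passed separately from the SQL text, so quotes need no escaping.
    conn.execute(
        'INSERT INTO shijijiayuan (nickname, uid, shortnote) VALUES (?, ?, ?)',
        (nickname, uid, shortnote),
    )
    conn.commit()
    return True


first = save_member(conn, 'a "quoted" name', 1001, "it's fine")
second = save_member(conn, 'other', 1001, 'duplicate uid')
rows = conn.execute('SELECT nickname, uid FROM shijijiayuan').fetchall()
print(first, second, rows)
```

Declaring `uid` as unique, as this sketch does, also lets the database itself reject duplicates when many threads insert at once.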

These are the downloaded images.

The second part sends messages to members.

# -*- coding: utf-8 -*-
import requests, re, json, time, threadpool, os, random
from concurrent.futures import ThreadPoolExecutor
from mydba import MySql
from gevent import monkey
from greetings import greeting_list

header = {

    # Login state is carried by the session cookie; paste the one from your own logged-in browser session.
    'Cookie': '<cookie string copied from a logged-in browser session>',
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36',

}

mysqlx = MySql('localhost', 'root', '', 'test', 'utf8')
# The SELECT picks a few accounts to message. To pick only members not yet greeted, use e.g.
# SELECT * FROM shijijiayuan where hello_times=0 limit 1,1
mysqlx.query("SELECT nickname,uhash FROM shijijiayuan order by id desc limit 10,5")
fetchallx = mysqlx.cursor.fetchall()
nickname_uhash_list = [ft for ft in fetchallx]
url2 = 'http://www.jiayuan.com/msg/dosend.php?type=hello&randomfrom=4'
url3 = 'http://www.jiayuan.com/165508547'
print(nickname_uhash_list)


def hello(nickname, uhash):
    datax2 = {
        # The greetings are a list; one is picked at random for each message.
        'textfield': '嗨。%s妹子。' % (nickname) + random.choice(greeting_list),
        'list': '',
        'category': '',
        'xitong_zidingyi_wenhouyu': '',
        'new_type': '',
        'pre_url': '',
        'sendtype': '',
        'hellotype': 'hello',
        'pro_id': '',
        'new_profile': '',
        # uhash (a 32-character hex string) decides who receives the message, not uid; the endpoint has no uid parameter.
        'to_hash': uhash,
        'fxly': 'search_v2',
        'tj_wz': 'none',
        'need_fxtyp_tanchu': '',
        'self_pay': '',
        'fxbc': '',
        'cai_xin': '',
        'zhuanti': '',
        'liwu_nofree': '',
        'liwu_nofree_id': '',
    }
    try:
        resx = requests.post(url2, data=datax2, headers=header)
        contentx = resx.content.decode('utf-8')
        print(contentx)
        # On success, increment hello_times; last_hello_time updates automatically (ON UPDATE CURRENT_TIMESTAMP).
        # A later SELECT can then pick only the members who have not been messaged yet.
        if u'发送成功' in contentx:
            mysqlx2 = MySql('localhost', 'root', '', 'test', 'utf8')
            sql = '''UPDATE shijijiayuan SET hello_times=hello_times+1 WHERE uhash="%s"''' % (uhash)
            mysqlx2.query(sql)
            del mysqlx2
    except Exception as e:
        print(e)


# Sending is multithreaded as well: the Jiayuan send endpoint responds very slowly, so sequential sending wastes time.
# ThreadPoolExecutor is a very good package that I plan to use for everything in the future,
# although this post does not show its advantages over threadpool.
poolx = ThreadPoolExecutor(max_workers=10)
for nickname, uhash in nickname_uhash_list:
    poolx.submit(hello, nickname, uhash)

After running this script, the sent messages are visible on the website or in the app.

This is how the table changes after the greetings are sent.
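The script discards the futures returned by `submit`, so the main thread never learns which tasks finished or failed. One thing `ThreadPoolExecutor` offers over a plain thread pool is that each future carries its result or exception. A minimal, generic sketch follows; `work` is a hypothetical stand-in task, not the post's `hello` function.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def work(item):
    """Stand-in task: doubles the input, but fails for item 3."""
    if item == 3:
        raise ValueError('item 3 failed')
    return item * 2


results, errors = {}, {}
with ThreadPoolExecutor(max_workers=4) as pool:
    # Map each future back to its input so outcomes can be attributed.
    futures = {pool.submit(work, item): item for item in range(5)}
    for future in as_completed(futures):
        item = futures[future]
        try:
            results[item] = future.result()  # re-raises the task's exception
        except Exception as exc:
            errors[item] = str(exc)

print(sorted(results.items()), errors)
```

The `with` block also waits for all submitted tasks before exiting, which makes the end of the run explicit.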

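None of this is difficult; it just takes some analysis. First, work out where the account information comes from: it is loaded via AJAX, and the response is JSON wrapped in some extra characters. Extract the JSON and convert it to a dict, and it is easy to work with.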
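The extraction step can be sketched in isolation. The `##jiayser##` marker and the `userInfo` / `helloUrl` keys come from the crawler script; the `extract_payload` helper and the sample response body are made up for illustration.

```python
import json
import re

MARKER = '##jiayser##'


def extract_payload(body):
    """Return the JSON object found between the first pair of markers."""
    pattern = re.escape(MARKER) + r'([\s\S]*?)' + re.escape(MARKER)
    match = re.search(pattern, body)
    if match is None:
        raise ValueError('marker-delimited JSON not found')
    return json.loads(match.group(1))


# Invented sample body: JSON wrapped in markers plus surrounding junk.
sample = (
    'prefix##jiayser##'
    '{"userInfo": [{"uid": 1, "helloUrl": "http://example.invalid/hello?uhash=abc123"}]}'
    '##jiayser##//'
)
data = extract_payload(sample)
# The uhash is whatever follows "uhash=" in helloUrl.
uhash = data['userInfo'][0]['helloUrl'].split('uhash=')[-1]
print(uhash)
```

Raising an error when the markers are missing makes a changed response format fail loudly, where `re.findall(...)[0]` would raise an opaque `IndexError`.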

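Next, work out which URL is the send endpoint. Guess based on the URL patterns, then inspect the POSTed values to confirm. After that, identify the key fields in the request. There are only a few: uhash determines the recipient (a few trial requests confirm this), and textfield is the message content.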

Login in this post is handled by the Cookie field carried in the request headers.
