A Comparison of HEVC and VP9

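I came across an article on Streaming Media comparing HEVC and VP9 that is quite good. Several of the comments on the article are also worth reading.

The full text follows.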

The Great UHD Codec Debate: Google's VP9 Vs. HEVC/H.265

As of today, the great UHD codec debate involves two main participants: Google's VP9 and HEVC/H.265. Which one succeeds—and where—involves a number of factors and will likely differ in various streaming-related markets, which I discuss in the "State of Codecs" article in the 2015 Streaming Media Sourcebook. While the actual performance of the two codecs is a consideration, it's generally not the deciding factor—certainly it wasn't with VP8 and H.264. Still, codec performance matters, and in this article I'll analyze just that, looking at three criteria: quality, encoding time, and the CPU required to play back the encoded streams.

By way of background, as I reported back in November, there have been several HEVC/VP9 comparisons; some found the two codecs even, with others finding VP9 on par with H.264 and much less effective than HEVC. I reported on some quick and dirty comparisons at Streaming Media West and expanded upon those for this article. The short answer is that the quality produced by each codec is very similar.

Here's a brief overview of my testing. I selected three 4K source clips. The first, which I called the New clip, was a collection of footage I shot with a RED camera for a consulting project; this clip is designed to represent real-world footage. The second was a short section from Blender.org's movie Tears of Steel that represents traditional movie content. The third was a short section from Blender.org's movie Sintel that represents animated footage. Working in Adobe Premiere Pro, I produced very high data rate H.264 mezzanine clips in 4K, 1920x1080, and 1280x800 resolutions. These mezzanine clips were the starting points for all encodes.

I then supplied the clips and detailed encoding specifications to three recipients: to Google for encoding to VP9; to a contact at MulticoreWare, which is coordinating the x265 project; and to a supplier of HEVC IP who chose to remain nameless. All three companies encoded clips to my specifications and supplied them to me. I also contacted MainConcept, which supplied a copy of its flagship encoder, TotalCode Studio, and advised me to use the standard presets modified for the appropriate resolution, data rates, and frame rates. I quickly learned that TotalCode has two limitations. First, it can only encode to mod-16 resolutions, which meant it couldn't meet the target resolutions for either of the 4K outputs of the two Blender movies. Since the objective testing that I performed requires a precise matching of input and output resolution, I couldn't test either 4K clip.

The second limitation is that the encoder currently supports only single-pass encoding. As we'll see, this definitely impacted output quality in all encoded clips.

Encoding Quality

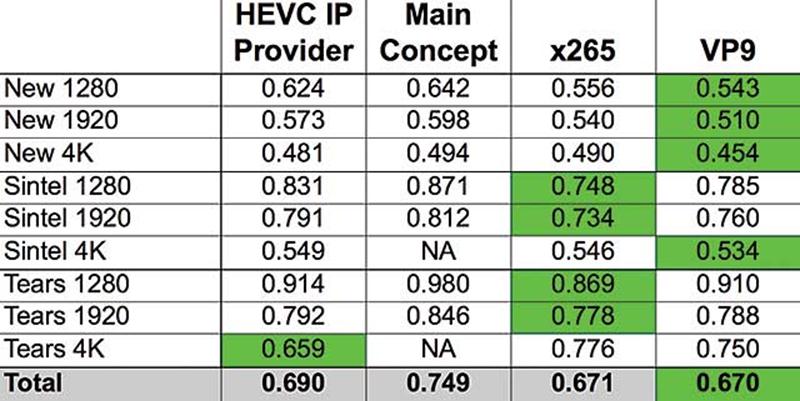

Once I received or created all the test clips, I confirmed that they met the data rate limits, then compared their respective video quality measurement (VQM) scores using the Moscow State University (MSU) Video Quality Measurement Tool. You can see the results in Table 1, with lower scores being better.

Table 1. HEVC vs. VP9 results with lower scores better

The three columns on the left show the results from the three HEVC participants, with VP9 in the last column on the right. The lowest (and best) score for each clip is highlighted in green, and the average score for each technology is shown in the total row at the bottom of the table. As you can see, VP9 scored the lowest (and best) of all tested codecs, though the difference between x265 and VP9 was negligible and commercially irrelevant, as was the difference between x265 and the IP Provider. I comment on MainConcept's performance later in this article.

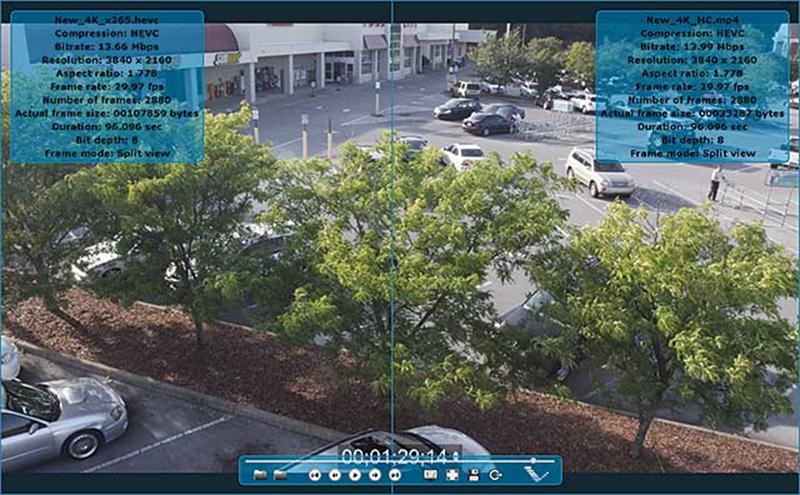

After running the objective tests, I viewed each clip in real time to observe any motion-related artifacts that might not have been picked up in the objective ratings. Vanguard Video's Visual Comparison Tool is particularly useful for this, with a split-screen view that lets you play two videos at once and drag the centerline during playback to shift from viewing one video to the other (Figure 1), or overlay one video atop another and switch between them using the Ctrl+Tab keystroke combination. I saw nothing during these trials that contradicted the objective VQM findings.

Figure 1. Comparing the quality of x265 and MainConcept via Vanguard Video's Visual Comparison Tool

At present, VP9 delivers the same level of performance as HEVC, which isn't surprising given that VP8 performed very similarly to H.264. So if you're choosing between the two, pure codec quality shouldn't be a major differentiator.

Regarding MainConcept, the ratings are not surprising, since the current version of TotalCode Studio only supports single-pass encoding. Since the clips were produced using 125 percent constrained VBR encoding, this meant that technologies with two passes could allocate more data to the hard-to-encode regions in all test clips.
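To make the 125 percent constraint concrete, here is a minimal Python sketch of how the peak rate follows from the target rate. The function name and the 4,000 kbps target are hypothetical, chosen only for illustration; they are not the data rates used in these tests.

```python
def constrained_vbr_peak(target_kbps: float, constraint_pct: float = 125.0) -> float:
    """Return the maximum bitrate allowed under constrained VBR.

    constraint_pct is the cap expressed as a percentage of the target,
    so 125 means the stream may peak at 1.25x the average target rate.
    """
    if target_kbps <= 0:
        raise ValueError("target_kbps must be positive")
    if constraint_pct < 100:
        raise ValueError("constraint_pct below 100 would cap the peak under the target")
    return target_kbps * constraint_pct / 100.0


# Hypothetical target rate; the article does not state its data rates here.
example_target_kbps = 4000
peak_kbps = constrained_vbr_peak(example_target_kbps)
print(f"target {example_target_kbps} kbps -> peak {peak_kbps:.0f} kbps")
```

A two-pass encoder can spend the headroom between target and peak on the hardest scenes because it has already seen the whole clip; a single-pass encoder has to guess.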

As an example, Figure 2 shows how MainConcept compared to x265 over the duration of the 1920x1080 New clip, with MainConcept in blue and x265 in red. Lower scores are better. As you can see, the quality of the two codecs was very similar except for the region on the extreme right: the high motion, high detail shot shown in Figure 1 that was the hardest to encode in the entire clip. With the benefit of two-pass encoding, TotalCode Studio could have allocated more data to this region, which likely would have resulted in much closer scores.

Figure 2. MainConcept proved very close to x265 in all but the highest motion regions of the clip (MainConcept is blue, x265 red, lower is better).

As a complement to the main testing, I encoded a talking head clip with consistent motion throughout with both x265 and MainConcept, testing the theory that consistent motion would negate the benefit of two-pass encoding. Despite the two-pass encoding advantage enjoyed by x265, MainConcept won this trial with a VQM score of 0.410, while x265 scored 0.488. In addition, in the single-pass encoding trials I ran for the aforementioned HEVC configuration story, MainConcept was slightly ahead in most tests. So despite the results shown in Table 1, it appears that if and when MainConcept implements dual-pass encoding, overall quality should be very close to x265. So let's move to the next factor, encoding time.

Encoding Time

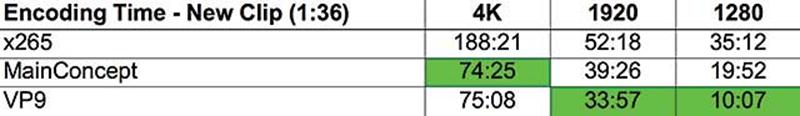

To test encoding time, I encoded all clips on my 12/24-core Z800 workstation configured with two 3.33 GHz X5680 Intel Xeon CPUs and Windows 7 running on 24GB of RAM. I encoded all three resolutions of the New test clip, which is 1 minute, 36 seconds in length, with x265, MainConcept, and VP9.

To test x265, I used the command script used by MulticoreWare, which used the Slower x265 preset. To put this in perspective, in tests performed for a how-to article on encoding HEVC in the Streaming Media Sourcebook, I ran some comparison encodes between the Slower preset and Placebo, the highest-quality preset. Though Placebo took about 73 percent longer than Slower, the quality was only 2.44 percent higher, so we didn't leave a lot of quality on the table using Slower.

With MainConcept, I used 27 for the P/Q value, which controls the quality/encoding time trade-off. According to tests performed for the aforementioned article, encoding at the highest setting, 30, would have taken 230 percent longer and only would have delivered an extra 1.39 percent improvement in quality.
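The two trade-offs quoted above can be put on a common footing by asking how much quality each extra percent of encoding time buys. The sketch below is only that arithmetic; the helper name is hypothetical, and the inputs are the percentages stated in the text.

```python
def quality_gain_per_time(extra_time_pct: float, quality_gain_pct: float) -> float:
    """Quality improvement (percent) bought per 1 percent of extra encoding time."""
    if extra_time_pct <= 0:
        raise ValueError("extra_time_pct must be positive")
    return quality_gain_pct / extra_time_pct


# (extra encoding time in percent, quality gain in percent), as quoted in the text.
tradeoffs = {
    "x265 Slower -> Placebo": (73.0, 2.44),
    "MainConcept P/Q 27 -> 30": (230.0, 1.39),
}

for name, (extra_time, gain) in tradeoffs.items():
    ratio = quality_gain_per_time(extra_time, gain)
    print(f"{name}: {ratio:.4f}% quality per 1% extra encoding time")
```

Both ratios come out well below 0.1, which is the sense in which little quality was left on the table by stopping short of the slowest settings.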

With VP9, I encoded using presets supplied by Google, which used the Good preset and a speed of 1, which is designed to supply a good balance between encoding quality and speed. I also enabled multiple columns and multiple threads to use more of the cores on my HP Z800. After encoding, I confirmed that the quality of both the x265 files and the VP9 files was consistent with what I had been provided. The results can be found in Table 2, with all times shown as minutes:seconds.

Table 2. Encoding times for the New test clip at various resolutions

Times with the green background are the fastest, and as you can see, VP9 won two of three trials, despite using two passes, while MainConcept only used one. Obviously, when it comes to encoding, time is money. It looks like VP9 will be the cheapest to encode of all three, particularly once MainConcept implements two-pass encoding.

Playback CPU

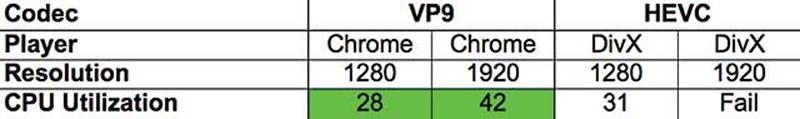

The third leg of the performance triple crown is the CPU required to play back the streams. Obviously, lower CPU requirements translate to greater reach among older and less powerful computers, along with reduced battery consumption. To measure this, I played the video on the noted computers with Performance Monitor (Windows) or Activity Monitor (Mac) open, while recording the CPU utilized during playback for the first 60 seconds of playback. Since there was no single player that played all videos on the Mac and Windows platforms, I used a variety of players.
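The measurement itself amounts to sampling CPU utilization at a fixed interval for the first 60 seconds and averaging. The Python sketch below shows that procedure under stated assumptions: `sample_cpu` is a caller-supplied placeholder for whatever Performance Monitor or Activity Monitor reports, not a real API, and the one-second interval is an assumption since the sampling rate is not given.

```python
import time
from statistics import mean
from typing import Callable, List


def average_cpu(sample_cpu: Callable[[], float],
                duration_s: float = 60.0,
                interval_s: float = 1.0,
                sleep: Callable[[float], None] = time.sleep) -> float:
    """Average CPU utilization (percent) over the first duration_s seconds.

    sample_cpu is a hypothetical callback returning the current CPU
    utilization; sleep is injectable so the loop can be tested without waiting.
    """
    if duration_s <= 0 or interval_s <= 0:
        raise ValueError("duration_s and interval_s must be positive")
    sample_count = max(1, int(duration_s / interval_s))
    samples: List[float] = []
    for _ in range(sample_count):
        samples.append(sample_cpu())
        sleep(interval_s)
    return mean(samples)


# Made-up readings, used only to exercise the function.
fake_readings = iter([40.0, 50.0, 60.0])
avg = average_cpu(lambda: next(fake_readings),
                  duration_s=3, interval_s=1, sleep=lambda seconds: None)
print(f"average CPU: {avg:.1f}%")
```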

Let's start with the Mac playback results shown in Table 3, with the best results highlighted in green. Here, I tested on a 3.06GHz Core 2 Duo MacBook Pro running OS 10.6.2 on 8GB RAM, playing back VP9 in Chrome and HEVC in the DivX player. As you can see, VP9 required slightly lower CPU horsepower than HEVC with the 1280x800 file and was able to play the 1920x1080 VP9 file, while the 1920x1080 HEVC file stopped playing after a few moments. On this relatively old MacBook Pro, VP9 is more playback-friendly.

Table 3. Playback CPU consumption on a MacBook Pro

Next stop were tests on my venerable Dell Precision 390 workstation, which runs a 2.93GHz Core 2 Extreme CPU with Windows XP and 3GB of RAM. On this computer I tested two players for both technologies, just to learn if there would be substantial differences between them. I show the results in Table 4, with the best results highlighted in green.

Table 4. Playback CPU consumption on a Dell Precision 390 workstation

Starting with VP9 on the left, Firefox was more efficient than Chrome at both resolutions. With HEVC, DivX was far and away more efficient than VLC Player. If you average playback of the two codecs over the two video files and players, HEVC was slightly more efficient; that's the 49 number in green compared to VP9's 54.

The Mac is from around 2009, while the Dell is from 2007, so both computers are long in the tooth. I ran the last series of tests on a much more recent HP Elitebook 8760W notebook with a 2.3GHz i7 CPU running Windows 7 with 16GB of RAM. (See Table 5 for results.) With VP9, the situation reversed and Chrome was slightly more efficient than Firefox. For HEVC, the DivX Player was slightly more efficient than Vanguard Video's Visual Comparison Tool (shown in Figure 1) playing both files, though to be fair, the Vanguard tool is an analysis tool, not a highly optimized player.

Table 5. Playback CPU consumption on an HP Elitebook 8760W

Overall, VP9 was slightly more efficient, though the results are very positive for both UHD codecs playing on a high-performance, but reasonable platform. On the serious Mac and Windows workstations I use for encoding, the required CPU horsepower would be negligible.

The conclusion: If you were hoping that the UHD debate would be decided by a distinct qualitative or performance advantage one way or the other, you're out of luck. From a quality perspective, the two codecs are very close, as they are in playback CPU, though VP9 does seem to have a clear advantage in encoding efficiency. Overall, as with H.264 and VP8, it appears that the winner or loser between HEVC and VP9 will be selected by the politics of the situation, not based upon pure codec quality and performance.

This article appears in the April 2015 issue of Streaming Media as "The Great UHD Codec Debate."

Thanks for including x265 in your shoot-out Jan. If I'm not mistaken, these tests were done back in December, and four months later, x265 is significantly faster (in some cases, twice as fast). There have also been significant improvements in two pass rate control since that time.

How did you define your test settings? A fixed 3 sec GOP is reasonable for adaptive streaming tests, but that would normally be coupled with CBR, not VBR. Perhaps a 1-pass CBR fixed-GOP (ala adaptive streaming) and/or a 2-pass VBR adaptive-GOP encode (ala progressive download or disc) would provide more realistic scenarios than the combination of the two.

Limiting to only 3 B-frames is pretty odd for modern codecs, particularly combined with 5 reference frames (which would get limited to 4 in some of your combinations). There are also a lot of undefined parameters, particularly VBV. Measuring rate control by just the ABR can miss important differences in how well the stream follows the spec buffer requirements.

I see the vp9 command line in your linked spreadsheet. It would be helpful to see the x265 one as well.

Lastly, IIRC the VQM metric is still a frame-by-frame analysis that doesn't track potential temporal discontinuities in video (like keyframe strobing). Their paper isn't entirely clear on this matter, so I may be incorrect. In general, I've found that metrics that don't incorporate the temporal axis of video can miss annoying visual discontinuities. The Spatio-Temporal SSIM test from the MSU tool can be a useful backup metric to pick up on some of those issues.

Jan Ozer · Top Commenter · Long Branch High School

Ben:

Thanks for your note.

- CBR vs. VBR. Many producers use 2-pass VBR for adaptive, constrained to as high as 200%. In fact, in the last round of high-end tests I produced with Elemental and Telestream, they indicated that many of their clients were doing the same, which is how we got to the 125% constrained VBR used in those (and these) tests.

Also, in tests whose results you can see here http://www.streaminglearningcenter.com/articles/handout-for-multiscreen-delivery-workshop.html (pages 10-11), VBR delivers up to about 4% better quality (as measured by VQM) and avoids occasional transient issues that I've observed in CBR files. I used 125% because that's still pretty conservative, but eliminates the transient spikes.

The work I've done on B-frames (same presentation, page 42) seems to show that there's little benefit after 3 or 4.

On reference frames (page 43), increasing from 5 to 16 reference frames seemed to reduce quality, and increased encoding time by about 50%. The quality aspects are subjective as opposed to VQM-based, so I'm not as certain about these.

If you have any objective findings that show these parameters are suboptimal, I'd love to see them. I've used most for years, and continue to do so for consistency between tests. Hey, would love to see the encoding parameters you're using at Amazon.

VQM very clearly shows strobing, as you can see frame-by-frame quality; that's the red spike in Figure 2. I'll check the Spatio-Temporal SSIM test to see if that adds any value. As I discuss in the article ("I viewed each clip in real time to observe any motion-related artifacts that might not have been picked up in the objective ratings"), I visually checked the files specifically for temporal artifacts and didn't see any that would change my impression.

I'm re-running a very limited set of VP9/x265 tests for my session at Streaming Media East next week. Google is supplying a new VP9 exe; I've asked for the same from the x265 guys but haven't heard back. If you'd like, I'd love to get your input on the command lines, but I'd need a quick turn.

Thanks again for your input. You can contact me via email if you think you can take a look at the command line parameters.

Thanks.

Reply · · May 5 at 1:07pm

Colleen Kelly Henry · Oakland, California

I am shocked — shocked...

Dan Grois · אוניברסיטת בן גוריון בנגב

Jan, I follow some of your posts and appreciate your work, but this time, in a good mood, is it a coincidence that this article is published in the (1st) April issue? Otherwise, I don't have any reasonable explanation for what you are doing here, and how you cannot be aware of the fact that in this work you compare rate control mechanisms (which is absolutely irrelevant to your saying that "…at present, VP9 delivers the same level of performance as HEVC….") instead of comparing the codecs' performance! Codecs should be compared with the rate control turned off; otherwise, it acts as very significant noise in the measurements. Upon selecting the codec based on the codec's performance, you can use any rate control that you want... Especially while allowing the QP for VP9 to vary from 0 to 56 and enabling VP9 to use as many alt-ref frames as desired, wherever desired, in the two-pass run mode (after obtaining the rate-distortion data in the 1st pass)! This obviously doesn't fit the HEVC settings! And what about the real (and not target) bit rate for the tested codecs? …. Also, I assume that the reason you use the VQM metric is that it is considered to be an objective metric with a high correlation to subjective assessments, and you don't have the resources to perform the subjective assessments yourself… I can understand that. However, you cannot ignore Touradj's article: http://infoscience.epfl.ch/record/200925/files/article-vp9-submited-v2.pdf, which contains very extensive experimental results, completely different from what you present here, especially in light of the fact that the VP9 settings for the above-mentioned article were provided directly by the VP9 team. And, if you don't know, Touradj is considered to be one of the leading experts in subjective assessments....

Nice article, Jan, many thanks for sharing these results. I think as John says, it would be good to see this work continued with comprehensive subjective testing. As you pointed out, this is a time consuming and expensive process. The codecs are clearly continuing to evolve, so this topic will run and run...

Reply · · May 6 at 2:18am

Harish Rajagopal · Architect at Wipro

Could not get a view of bit-rate savings between VP9 and HEVC. Was that something that you considered in the study? For example mapping bit-rates with quality observed in both VP9 and HEVC would have thrown some light there.

Reply · · May 4 at 8:23am

Shevach Riabtsev · אוניברסיטת חיפה - University Of Haifa

There are several missing points in the comparison:

1) HEVC is friendlier to parallelization thanks to its WPP mode, which is excellently suited to multi-core platforms.

2) HEVC supports 10-bit video, and as far as I know VP9 does not support 10-bit.

James Bankoski · Wynantskill, New York

VP9 supports 10- and 12-bit along with 4:2:2 and 4:4:4.

Reply · · May 4 at 7:13am

Nice report but I think the results need a big caveat which I'll attempt to provide. I don't think you can say anything about which encoder is better from these tests, sorry!

I am uncomfortable with the methodology. Objective results from long, heterogeneous sequences like these should be taken with a very large pinch of salt. Much depends on how the metric averages/accumulates over time. The effects of encoders' temporal rate control can have unpredictable effects on the metric. I have come across situations where the effects of temporal rate control and metric temporal averaging combined to make a bad encoder with extreme quality fluctuations actually appear better in the numbers. Even looking at Figure 2 above doesn't tell me for sure which encoder is better.

But the main problem with using metrics, however sophisticated, is that they favour encoders whose internal psychovisual noise model/assumptions are most similar to those of the metric. It seems MSU's VQM metric is based on DCT coefficients, so its usefulness will be limited (what about spatial and temporal masking?). Metrics (even PSNR) are great for comparing two very similar encoders or one encoder with slightly different tweaks, but for this sort of shootout there is still no substitute for eyeballs. IMHO - John @ http://parabolaresear.ch
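The point about temporal averaging can be illustrated with a small Python sketch. The per-frame scores below are invented for illustration (lower is better, as with VQM) and are not taken from any of the tested clips; the sketch only shows that a mean can favour a stream with one severe quality drop.

```python
from statistics import mean, pstdev
from typing import Dict, List

# Hypothetical per-frame scores, lower is better. Invented data.
steady: List[float] = [0.50, 0.50, 0.50, 0.50, 0.50, 0.50]
fluctuating: List[float] = [0.10, 0.10, 0.10, 0.10, 0.10, 2.00]


def summarize(scores: List[float]) -> Dict[str, float]:
    """Return the mean, the worst (highest) frame score, and the spread."""
    return {
        "mean": mean(scores),
        "worst": max(scores),
        "stdev": pstdev(scores),
    }


for label, scores in (("steady", steady), ("fluctuating", fluctuating)):
    stats = summarize(scores)
    print(f"{label}: mean={stats['mean']:.3f} "
          f"worst={stats['worst']:.2f} stdev={stats['stdev']:.3f}")
```

Here the fluctuating stream wins on the average even though its worst frame is four times worse than anything in the steady stream, which is why a per-frame plot or a worst-case figure is a useful companion to a single averaged score.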

Jan Ozer · Top Commenter · Long Branch High School

John:

Thanks for your comments. Always more one can do, and different tests one can apply.

As described in the article and illustrated in Figure 1, I did confirm objective scores with my own eyeballs, which admittedly is not a double blind study with 1000 participants. Many companies in many tests rely on the concept of golden eyeballs and I've been comparing codecs since 1994. So I tend to trust my eyes. I'd love to be able to test more extensively, but you do what you can within the budget of the project. Still, the fact that YouTube has streamed over 25 billion hours of VP9-encoded video over the last 12 months is pretty good subjective confirmation that the codec is, at the very least, competitive.

Given how close the results were overall, I would agree that it's tough to pick a conclusive winner. However, given that the world (myself included) thought that VP9's quality would trail HEVC's significantly, the fact that it's the equivalent is a very significant finding in itself. Again, if you thought the HEVC vs. VP9 war was going to be won based upon quality, you're mistaken.

Reply · · April 29 at 5:12am

Jan Ozer Yes, you (we) can trust your eyes (so long as local bitrate is the same).

It's fantastic to see VPx getting some love! Now, if only there was a VP9 standard document somewhere, I could get started on some VP9 products...?!? Btw, if there is a HEVC vs VP9 war, it's only just started. Both will evolve...

Reply · · April 29 at 6:19am

Gopi Jayaraman · Leading Software Design Engineer at Imagination Technologies

Compression efficiency chart between hevc & vp9 for various quality?
