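The Most Complete Guide to Correctly Downloading and Installing the Scrapy Crawling Framework in Anaconda2 / Anaconda3 on Windows (Offline and Online Methods) (Illustrated)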

Without further ado, let's get straight to the practical part!

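Reference blog post

The Most Complete Guide to Correctly Downloading and Installing OpenCV in Anaconda2 / Anaconda3 on Windows (Offline and Online Methods) (Illustrated)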

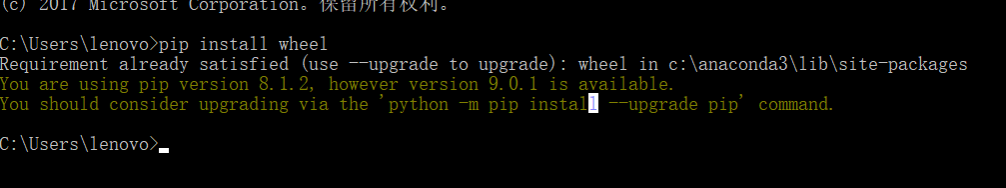

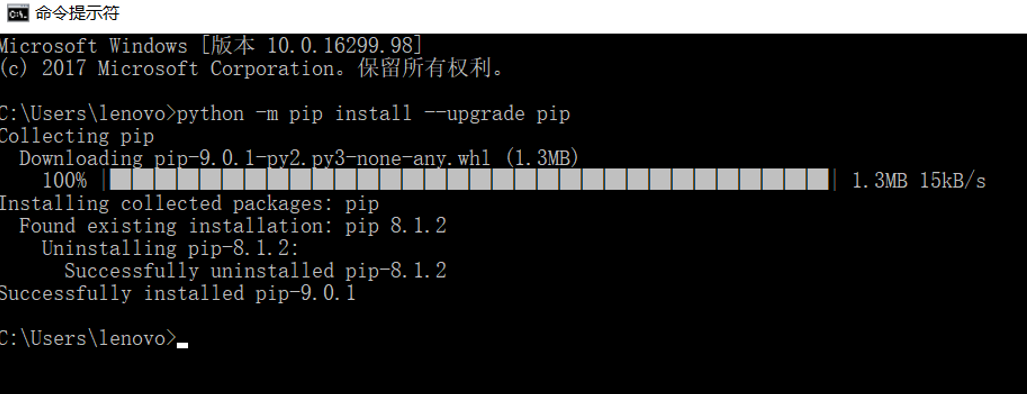

Step 1: First, upgrade pip as prompted
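When Anaconda2 and Anaconda3 are installed side by side, a bare `pip` may resolve to either one depending on `PATH`. The following is a minimal sketch, not taken from the original post, that builds the upgrade command against whichever interpreter runs the script. The helper name `pip_command` is hypothetical, and the script only prints the command rather than executing it.

```python
import sys


def pip_command(*args):
    """Build a pip command line bound to the interpreter running this script.

    Using `python -m pip` instead of a bare `pip` avoids picking up the pip
    of a different Anaconda installation that happens to come first on PATH.
    `pip_command` is a hypothetical helper name used for illustration only.
    """
    return [sys.executable, "-m", "pip"] + list(args)


# Print the command that would upgrade pip; run it manually in the console.
upgrade = pip_command("install", "--upgrade", "pip")
print(" ".join(upgrade))
```

Run the script with the Python of the Anaconda installation you want to upgrade, then paste the printed command into the same console.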

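Step 2: Download and install wheel

You can either download the package from the website first and install it offline, or install it online as shown above.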
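For offline installs, the downloaded `.whl` file has to match your interpreter and platform. As a rough sketch (not part of the original post), the tags can be read straight from the file name, which follows the wheel naming convention `name-version[-build]-python-abi-platform.whl`. The file name `example_pkg-1.0.0-cp35-cp35m-win_amd64.whl` and both helper names below are hypothetical, and `current_python_tag` assumes CPython.

```python
import sys


def parse_wheel_filename(filename):
    """Split a wheel file name into its name, version, and compatibility tags."""
    if not filename.endswith(".whl"):
        raise ValueError("not a wheel file: " + filename)
    parts = filename[:-len(".whl")].split("-")
    # Five fields normally, six when an optional build tag is present.
    if len(parts) not in (5, 6):
        raise ValueError("unexpected wheel file name: " + filename)
    return {
        "name": parts[0],
        "version": parts[1],
        "python_tag": parts[-3],
        "abi_tag": parts[-2],
        "platform_tag": parts[-1],
    }


def current_python_tag():
    """Return the CPython tag of the running interpreter, e.g. 'cp35'."""
    return "cp{}{}".format(sys.version_info[0], sys.version_info[1])


# Hypothetical file name, used only to illustrate the format.
info = parse_wheel_filename("example_pkg-1.0.0-cp35-cp35m-win_amd64.whl")
print("wheel targets:", info["python_tag"], info["platform_tag"])
print("this interpreter:", current_python_tag())
```

If the wheel's Python tag differs from the interpreter's tag, pip will normally refuse the file as unsupported on this platform, so checking the name first saves a failed install.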

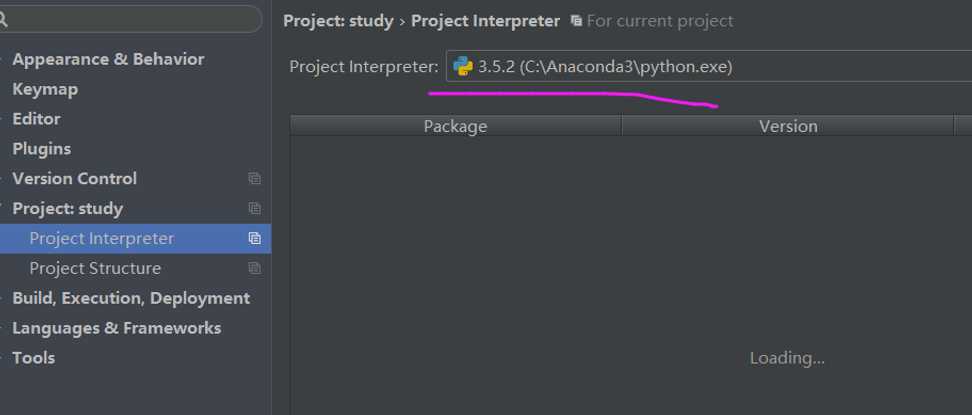

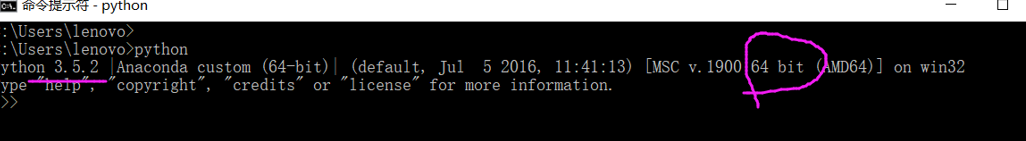

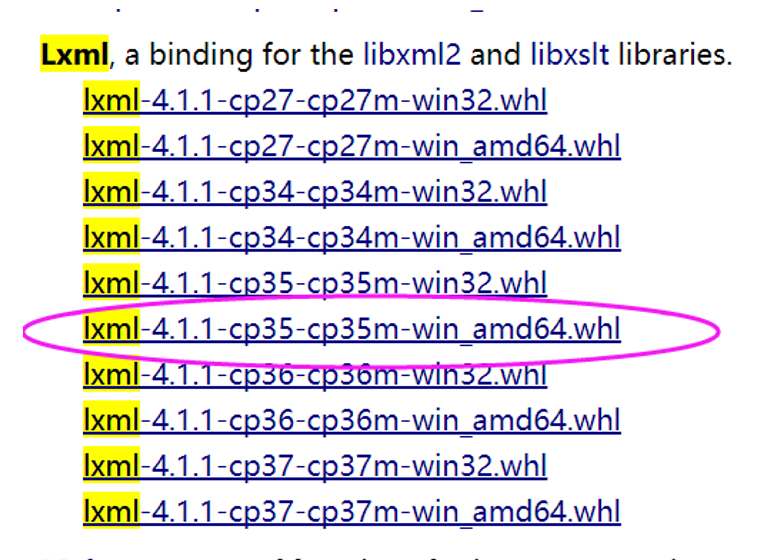

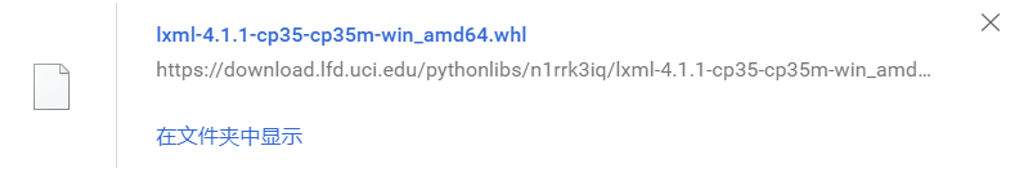

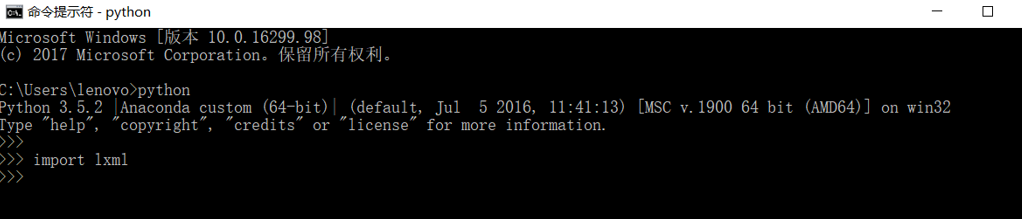

Step 3: Install lxml

Because mine is

Success!

Verify the installation:

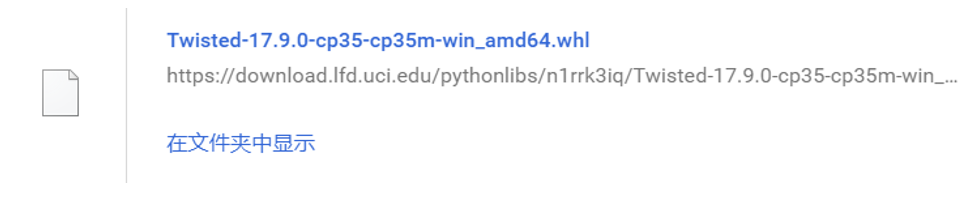

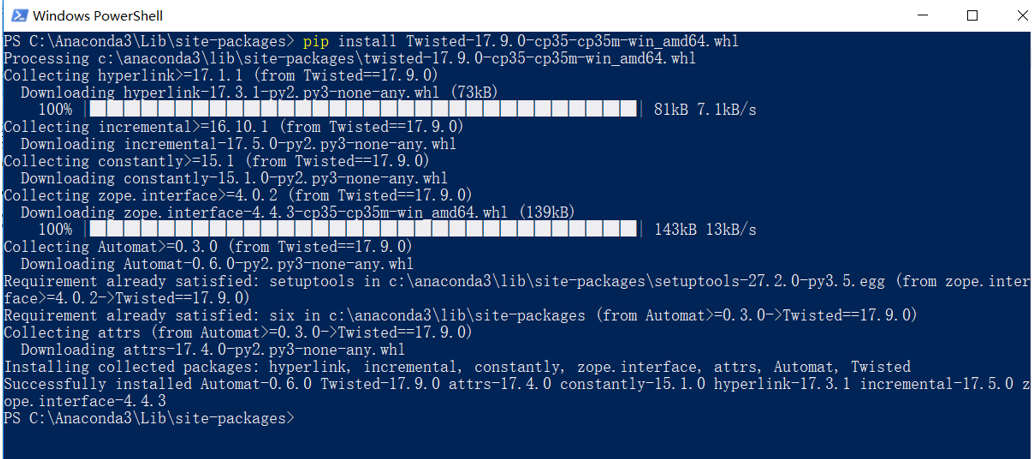

Step 4: Install Twisted

PS C:\Anaconda3\Lib\site-packages> pip install Twisted-17.9.-cp35-cp35m-win_amd64.whl

Processing c:\anaconda3\lib\site-packages\twisted-17.9.-cp35-cp35m-win_amd64.whl

Collecting hyperlink>=17.1. (from Twisted==17.9.)

Downloading hyperlink-17.3.-py2.py3-none-any.whl (73kB)

% |████████████████████████████████| 81kB .1kB/s

Collecting incremental>=16.10. (from Twisted==17.9.)

Downloading incremental-17.5.-py2.py3-none-any.whl

Collecting constantly>=15.1 (from Twisted==17.9.)

Downloading constantly-15.1.-py2.py3-none-any.whl

Collecting zope.interface>=4.0. (from Twisted==17.9.)

Downloading zope.interface-4.4.-cp35-cp35m-win_amd64.whl (139kB)

% |████████████████████████████████| 143kB 13kB/s

Collecting Automat>=0.3. (from Twisted==17.9.)

Downloading Automat-0.6.-py2.py3-none-any.whl

Requirement already satisfied: setuptools in c:\anaconda3\lib\site-packages\setuptools-27.2.-py3..egg (from zope.interface>=4.0.->Twisted==17.9.)

Requirement already satisfied: six in c:\anaconda3\lib\site-packages (from Automat>=0.3.->Twisted==17.9.)

Collecting attrs (from Automat>=0.3.->Twisted==17.9.)

Downloading attrs-17.4.-py2.py3-none-any.whl

Installing collected packages: hyperlink, incremental, constantly, zope.interface, attrs, Automat, Twisted

Successfully installed Automat-0.6. Twisted-17.9. attrs-17.4. constantly-15.1. hyperlink-17.3. incremental-17.5. zope.interface-4.4.

PS C:\Anaconda3\Lib\site-packages>

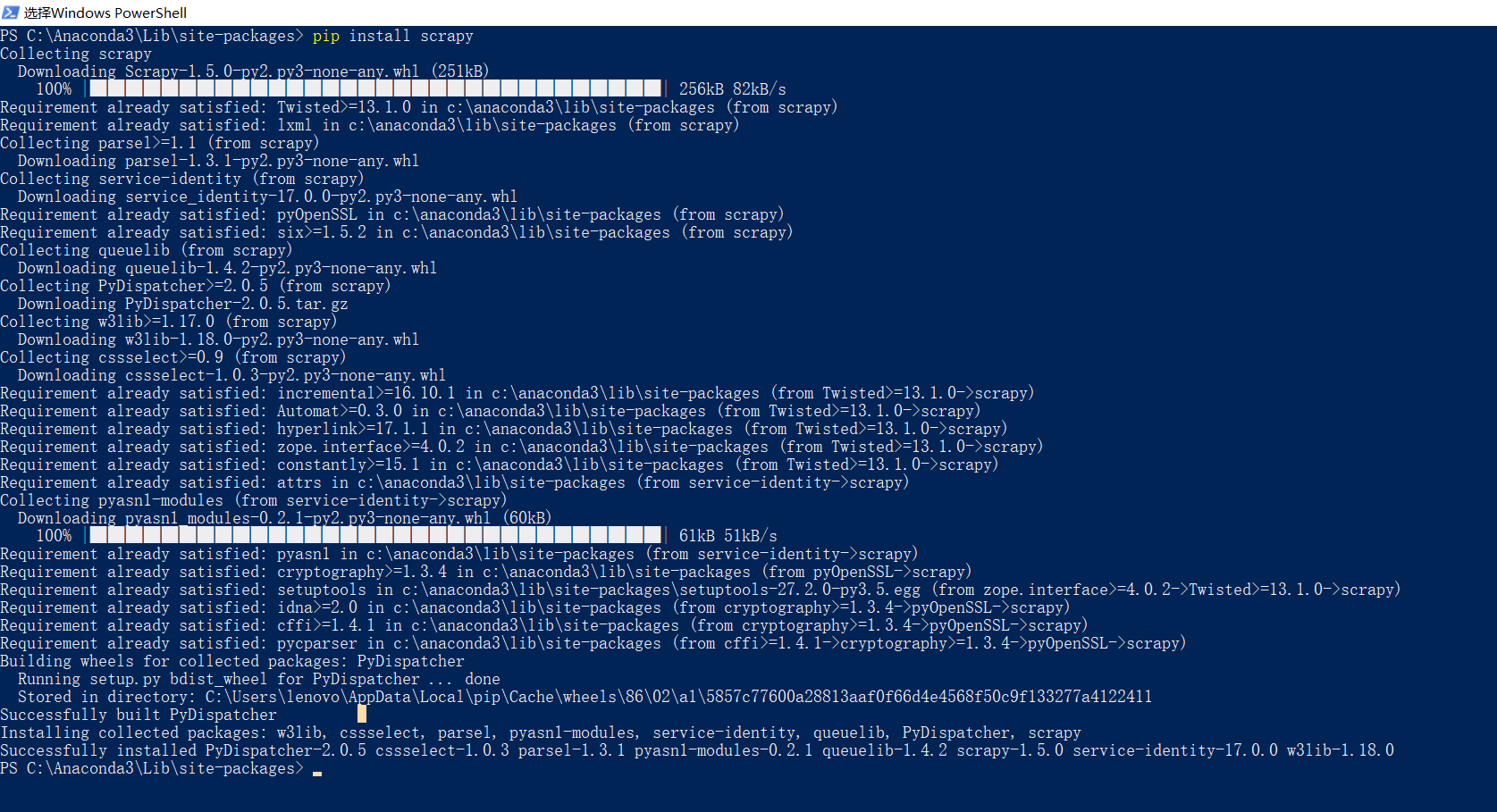

Step 5: Install Scrapy

PS C:\Anaconda3\Lib\site-packages> pip install scrapy

Collecting scrapy

Downloading Scrapy-1.5.-py2.py3-none-any.whl (251kB)

% |████████████████████████████████| 256kB 82kB/s

Requirement already satisfied: Twisted>=13.1. in c:\anaconda3\lib\site-packages (from scrapy)

Requirement already satisfied: lxml in c:\anaconda3\lib\site-packages (from scrapy)

Collecting parsel>=1.1 (from scrapy)

Downloading parsel-1.3.-py2.py3-none-any.whl

Collecting service-identity (from scrapy)

Downloading service_identity-17.0.-py2.py3-none-any.whl

Requirement already satisfied: pyOpenSSL in c:\anaconda3\lib\site-packages (from scrapy)

Requirement already satisfied: six>=1.5. in c:\anaconda3\lib\site-packages (from scrapy)

Collecting queuelib (from scrapy)

Downloading queuelib-1.4.-py2.py3-none-any.whl

Collecting PyDispatcher>=2.0. (from scrapy)

Downloading PyDispatcher-2.0..tar.gz

Collecting w3lib>=1.17. (from scrapy)

Downloading w3lib-1.18.-py2.py3-none-any.whl

Collecting cssselect>=0.9 (from scrapy)

Downloading cssselect-1.0.-py2.py3-none-any.whl

Requirement already satisfied: incremental>=16.10. in c:\anaconda3\lib\site-packages (from Twisted>=13.1.->scrapy)

Requirement already satisfied: Automat>=0.3. in c:\anaconda3\lib\site-packages (from Twisted>=13.1.->scrapy)

Requirement already satisfied: hyperlink>=17.1. in c:\anaconda3\lib\site-packages (from Twisted>=13.1.->scrapy)

Requirement already satisfied: zope.interface>=4.0. in c:\anaconda3\lib\site-packages (from Twisted>=13.1.->scrapy)

Requirement already satisfied: constantly>=15.1 in c:\anaconda3\lib\site-packages (from Twisted>=13.1.->scrapy)

Requirement already satisfied: attrs in c:\anaconda3\lib\site-packages (from service-identity->scrapy)

Collecting pyasn1-modules (from service-identity->scrapy)

Downloading pyasn1_modules-0.2.-py2.py3-none-any.whl (60kB)

% |████████████████████████████████| 61kB 51kB/s

Requirement already satisfied: pyasn1 in c:\anaconda3\lib\site-packages (from service-identity->scrapy)

Requirement already satisfied: cryptography>=1.3. in c:\anaconda3\lib\site-packages (from pyOpenSSL->scrapy)

Requirement already satisfied: setuptools in c:\anaconda3\lib\site-packages\setuptools-27.2.-py3..egg (from zope.interface>=4.0.->Twisted>=13.1.->scrapy)

Requirement already satisfied: idna>=2.0 in c:\anaconda3\lib\site-packages (from cryptography>=1.3.->pyOpenSSL->scrapy)

Requirement already satisfied: cffi>=1.4. in c:\anaconda3\lib\site-packages (from cryptography>=1.3.->pyOpenSSL->scrapy)

Requirement already satisfied: pycparser in c:\anaconda3\lib\site-packages (from cffi>=1.4.->cryptography>=1.3.->pyOpenSSL->scrapy)

Building wheels for collected packages: PyDispatcher

Running setup.py bdist_wheel for PyDispatcher ... done

Stored in directory: C:\Users\lenovo\AppData\Local\pip\Cache\wheels\\\a1\5857c77600a28813aaf0f66d4e4568f50c9f133277a4122411

Successfully built PyDispatcher

Installing collected packages: w3lib, cssselect, parsel, pyasn1-modules, service-identity, queuelib, PyDispatcher, scrapy

Successfully installed PyDispatcher-2.0. cssselect-1.0. parsel-1.3. pyasn1-modules-0.2. queuelib-1.4. scrapy-1.5. service-identity-17.0. w3lib-1.18.

PS C:\Anaconda3\Lib\site-packages>

Verify the installation:

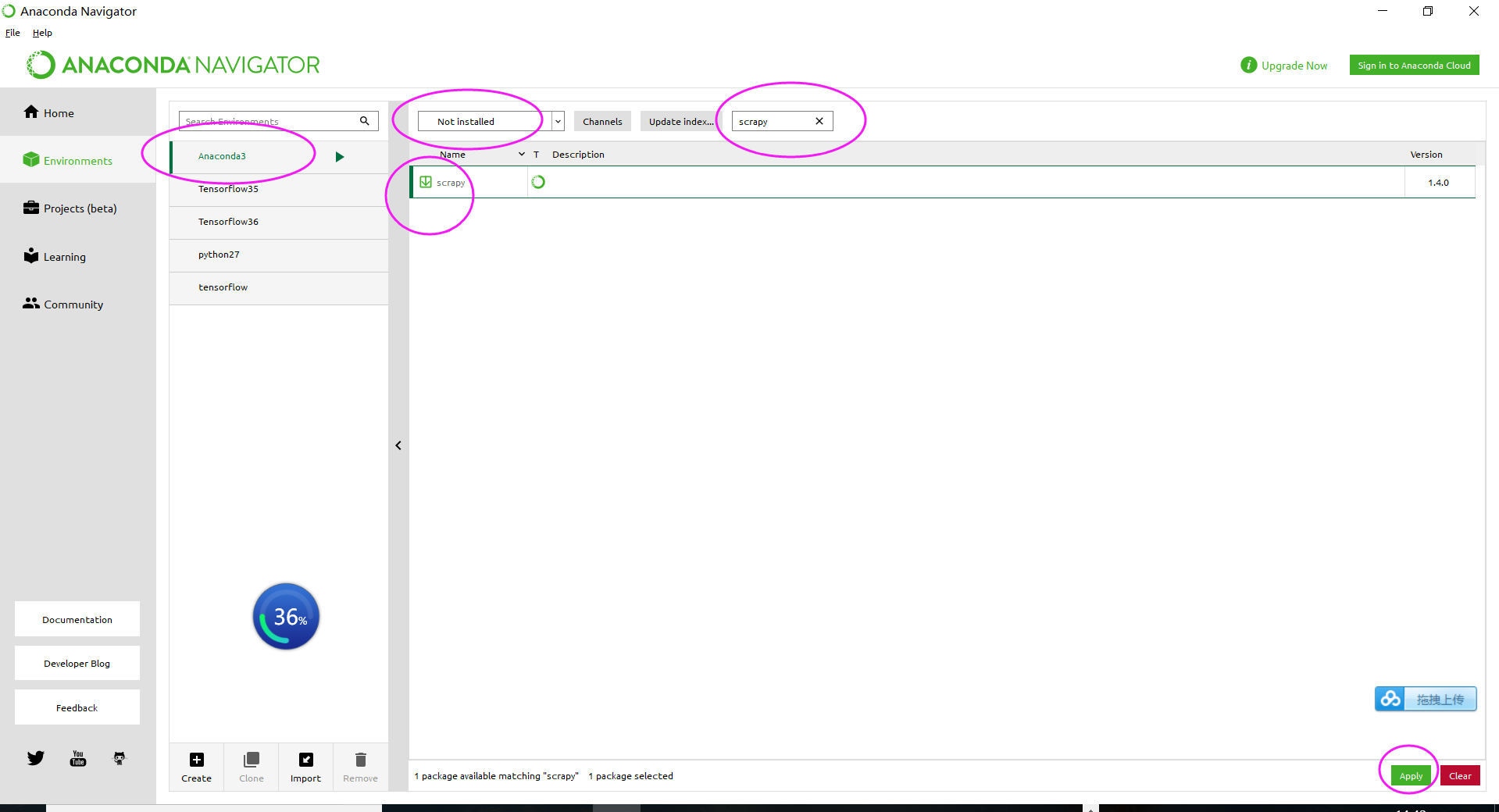
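Besides checking in the console, the installed versions can be confirmed from Python. This is a minimal sketch and not the author's method: it assumes Python 3.8 or newer (for `importlib.metadata`), which is later than the Python 3.5 shown in the logs above, and the helper name `installed_version` is hypothetical.

```python
from importlib import metadata


def installed_version(dist_name):
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


# The packages installed in the steps of this post.
for name in ("wheel", "lxml", "Twisted", "Scrapy"):
    version = installed_version(name)
    if version is None:
        print(name + ": not installed")
    else:
        print(name + ": " + version)
```

This reads the package metadata without importing the packages themselves, so it stays fast even for a large framework such as Scrapy.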

Of course, you can also install it directly here.

You can also follow my personal blogs:

http://www.cnblogs.com/zlslch/ and http://www.cnblogs.com/lchzls/ http://www.cnblogs.com/sunnyDream/

For details, see: http://www.cnblogs.com/zlslch/p/7473861.html

