[Building from Source] Building Spark from Source

This article uses the CDH release spark-1.6.0-cdh5.12.0.

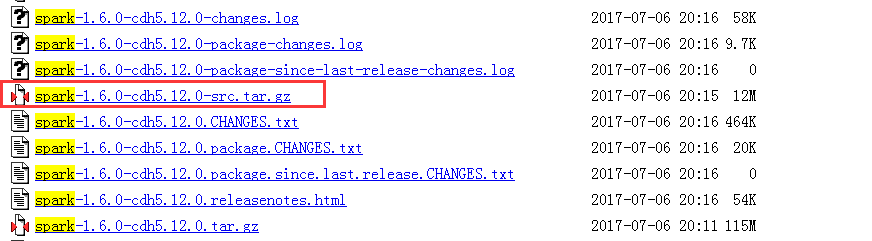

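1. Download the source package

2. Change into the source root directory and build. There are two ways to build:

Maven

mvn clean package -DskipTests -Phadoop-2.6 -Dhadoop.version=2.6.0-cdh5.12.0 -Pyarn -Phive-1.1.0 -Phive-thriftserver

make-distribution.sh

./make-distribution.sh --tgz -Phadoop-2.6 -Dhadoop.version=2.6.0-cdh5.12.0 -Pyarn -Phive-1.1.0 -Phive-thriftserver

Parameter notes

- --name: sets the name of the Spark package produced by the build
- --tgz: packages the result as a tgz archive
- -Psparkr: builds Spark with R language support
- -Phadoop-2.4: builds with the hadoop-2.4 profile; the available profiles are defined in pom.xml in the source root directory
- -Phive and -Phive-thriftserver: build Spark with support for Hive
- -Pmesos: builds Spark so that it can run on Mesos
- -Pyarn: builds Spark so that it can run on YARN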
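Since the available profiles are defined in pom.xml, a profile name can be checked before starting a long build. The following is a minimal sketch, not part of Spark: `list_profile_ids` and `profile_exists` are hypothetical helper names, and the parsing assumes a conventionally formatted pom.xml in which each profile's own `<id>` is the first `<id>` after its `<profile>` tag.

```shell
# Hypothetical helper: print the <id> of every <profile> element in a pom.xml.
# Assumes the profile's own <id> is the first <id> after the <profile> tag.
list_profile_ids() {
  awk '
    /<profile>/   { in_profile = 1; seen_id = 0 }
    /<\/profile>/ { in_profile = 0 }
    in_profile && !seen_id && /<id>/ {
      line = $0
      sub(/.*<id>/, "", line)    # drop everything up to the opening tag
      sub(/<\/id>.*/, "", line)  # drop the closing tag and what follows
      print line
      seen_id = 1                # ignore nested ids (e.g. plugin executions)
    }
  ' "$1"
}

# Hypothetical helper: succeed only if the named profile is defined in the pom.
profile_exists() {
  list_profile_ids "$1" | grep -qxF -- "$2"
}
```

For example, `profile_exists pom.xml hive-1.1.0 || echo "no such profile"` would flag a profile name that the pom does not define. Maven's help plugin offers a similar listing through `mvn help:all-profiles`.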

(1) Building with Maven

[WARNING] The requested profile "hive-1.1.0" could not be activated because it does not exist.

[ERROR] Failed to execute goal net.alchim31.maven:scala-maven-plugin:3.2.2:compile (scala-compile-first) on project spark-hive-thriftserver_2.10: Execution scala-compile-first of goal net.alchim31.maven:scala-maven-plugin:3.2.2:compile failed. CompileFailed -> [Help 1]

org.apache.maven.lifecycle.LifecycleExecutionException: Failed to execute goal net.alchim31.maven:scala-maven-plugin:3.2.2:compile (scala-compile-first) on project spark-hive-thriftserver_2.10: Execution scala-compile-first of goal net.alchim31.maven:scala-maven-plugin:3.2.2:compile failed.

at org.apache.maven.lifecycle.internal.MojoExecutor.execute(MojoExecutor.java:212)

at org.apache.maven.lifecycle.internal.MojoExecutor.execute(MojoExecutor.java:153)

at org.apache.maven.lifecycle.internal.MojoExecutor.execute(MojoExecutor.java:145)

at org.apache.maven.lifecycle.internal.LifecycleModuleBuilder.buildProject(LifecycleModuleBuilder.java:116)

at org.apache.maven.lifecycle.internal.LifecycleModuleBuilder.buildProject(LifecycleModuleBuilder.java:80)

at org.apache.maven.lifecycle.internal.builder.singlethreaded.SingleThreadedBuilder.build(SingleThreadedBuilder.java:51)

at org.apache.maven.lifecycle.internal.LifecycleStarter.execute(LifecycleStarter.java:128)

at org.apache.maven.DefaultMaven.doExecute(DefaultMaven.java:307)

at org.apache.maven.DefaultMaven.doExecute(DefaultMaven.java:193)

at org.apache.maven.DefaultMaven.execute(DefaultMaven.java:106)

at org.apache.maven.cli.MavenCli.execute(MavenCli.java:863)

at org.apache.maven.cli.MavenCli.doMain(MavenCli.java:288)

at org.apache.maven.cli.MavenCli.main(MavenCli.java:199)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.codehaus.plexus.classworlds.launcher.Launcher.launchEnhanced(Launcher.java:289)

at org.codehaus.plexus.classworlds.launcher.Launcher.launch(Launcher.java:229)

at org.codehaus.plexus.classworlds.launcher.Launcher.mainWithExitCode(Launcher.java:415)

at org.codehaus.plexus.classworlds.launcher.Launcher.main(Launcher.java:356)

Caused by: org.apache.maven.plugin.PluginExecutionException: Execution scala-compile-first of goal net.alchim31.maven:scala-maven-plugin:3.2.2:compile failed.

at org.apache.maven.plugin.DefaultBuildPluginManager.executeMojo(DefaultBuildPluginManager.java:145)

at org.apache.maven.lifecycle.internal.MojoExecutor.execute(MojoExecutor.java:207)

... 20 more

Caused by: Compilation failed

at sbt.compiler.AnalyzingCompiler.call(AnalyzingCompiler.scala:105)

at sbt.compiler.AnalyzingCompiler.compile(AnalyzingCompiler.scala:48)

at sbt.compiler.AnalyzingCompiler.compile(AnalyzingCompiler.scala:41)

at sbt.compiler.AggressiveCompile$$anonfun$3$$anonfun$compileScala$1$1.apply$mcV$sp(AggressiveCompile.scala:99)

at sbt.compiler.AggressiveCompile$$anonfun$3$$anonfun$compileScala$1$1.apply(AggressiveCompile.scala:99)

at sbt.compiler.AggressiveCompile$$anonfun$3$$anonfun$compileScala$1$1.apply(AggressiveCompile.scala:99)

at sbt.compiler.AggressiveCompile.sbt$compiler$AggressiveCompile$$timed(AggressiveCompile.scala:166)

at sbt.compiler.AggressiveCompile$$anonfun$3.compileScala$1(AggressiveCompile.scala:98)

at sbt.compiler.AggressiveCompile$$anonfun$3.apply(AggressiveCompile.scala:143)

at sbt.compiler.AggressiveCompile$$anonfun$3.apply(AggressiveCompile.scala:87)

at sbt.inc.IncrementalCompile$$anonfun$doCompile$1.apply(Compile.scala:39)

at sbt.inc.IncrementalCompile$$anonfun$doCompile$1.apply(Compile.scala:37)

at sbt.inc.IncrementalCommon.cycle(Incremental.scala:99)

at sbt.inc.Incremental$$anonfun$1.apply(Incremental.scala:38)

at sbt.inc.Incremental$$anonfun$1.apply(Incremental.scala:37)

at sbt.inc.Incremental$.manageClassfiles(Incremental.scala:65)

at sbt.inc.Incremental$.compile(Incremental.scala:37)

at sbt.inc.IncrementalCompile$.apply(Compile.scala:27)

at sbt.compiler.AggressiveCompile.compile2(AggressiveCompile.scala:157)

at sbt.compiler.AggressiveCompile.compile1(AggressiveCompile.scala:71)

at com.typesafe.zinc.Compiler.compile(Compiler.scala:184)

at com.typesafe.zinc.Compiler.compile(Compiler.scala:164)

at sbt_inc.SbtIncrementalCompiler.compile(SbtIncrementalCompiler.java:92)

at scala_maven.ScalaCompilerSupport.incrementalCompile(ScalaCompilerSupport.java:303)

at scala_maven.ScalaCompilerSupport.compile(ScalaCompilerSupport.java:119)

at scala_maven.ScalaCompilerSupport.doExecute(ScalaCompilerSupport.java:99)

at scala_maven.ScalaMojoSupport.execute(ScalaMojoSupport.java:482)

at org.apache.maven.plugin.DefaultBuildPluginManager.executeMojo(DefaultBuildPluginManager.java:134)

... 21 more

[ERROR]

[ERROR]

[ERROR] For more information about the errors and possible solutions, please read the following articles:

[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/PluginExecutionException

[ERROR]

[ERROR] After correcting the problems, you can resume the build with the command

[ERROR] mvn <goals> -rf :spark-hive-thriftserver_2.10

[root@node1 spark-src

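Summary of attempted fixes

① Change the Scala version

./dev/change-scala-version.sh 2.11

When changing the version, the script reports that only 2.10 or 2.11 is accepted; any other version is invalid.

② Add the jline dependency to the hive-thriftserver pom. Downloading the matching CDH Hive package from the official site shows that version 2.12 of the jar is required.

<dependency>
<groupId>jline</groupId>
<artifactId>jline</artifactId>
<version>2.12</version>
</dependency>

With fix ① the compile step passed; with fix ② it still failed.

A new problem was then reported: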

[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary:
[INFO]
[INFO] Spark Project Parent POM ........................... SUCCESS [ 3.273 s]
[INFO] Spark Project Test Tags ............................ SUCCESS [ 1.958 s]
[INFO] Spark Project Core ................................. SUCCESS [01:27 min]
[INFO] Spark Project Bagel ................................ SUCCESS [ 27.661 s]
[INFO] Spark Project GraphX ............................... SUCCESS [01:31 min]
[INFO] Spark Project ML Library ........................... SUCCESS [05:46 min]
[INFO] Spark Project Tools ................................ SUCCESS [ 36.041 s]
[INFO] Spark Project Networking ........................... SUCCESS [ 8.248 s]
[INFO] Spark Project Shuffle Streaming Service ............ SUCCESS [ 16.996 s]
[INFO] Spark Project Streaming ............................ SUCCESS [03:28 min]
[INFO] Spark Project Catalyst ............................. SUCCESS [04:50 min]
[INFO] Spark Project SQL .................................. SUCCESS [09:38 min]
[INFO] Spark Project Hive ................................. SUCCESS [06:20 min]
[INFO] Spark Project Docker Integration Tests ............. SUCCESS [02:17 min]
[INFO] Spark Project Unsafe ............................... SUCCESS [ 3.147 s]
[INFO] Spark Project Assembly ............................. FAILURE [02:00 min]
[INFO] Spark Project External Twitter ..................... SKIPPED
[INFO] Spark Project External Flume ....................... SKIPPED
[INFO] Spark Project External Flume Sink .................. SKIPPED
[INFO] Spark Project External MQTT ........................ SKIPPED
[INFO] Spark Project External MQTT Assembly ............... SKIPPED
[INFO] Spark Project External ZeroMQ ...................... SKIPPED
[INFO] Spark Project Examples ............................. SKIPPED
[INFO] Spark Project REPL ................................. SKIPPED
[INFO] Spark Project Launcher ............................. SKIPPED
[INFO] Spark Project External Kafka ....................... SKIPPED
[INFO] Cloudera Spark Project Lineage ..................... SKIPPED
[INFO] Spark Project YARN ................................. SKIPPED
[INFO] Spark Project YARN Shuffle Service ................. SKIPPED
[INFO] Spark Project Hive Thrift Server ................... SKIPPED
[INFO] ------------------------------------------------------------------------
[INFO] BUILD FAILURE
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 38:59 min
[INFO] Finished at: 2017-09-17T07:08:26+08:00
[INFO] Final Memory: 82M/880M
[INFO] ------------------------------------------------------------------------
[WARNING] The requested profile "hive-1.1.0" could not be activated because it does not exist.
[ERROR] Failed to execute goal on project spark-assembly_2.11: Could not resolve dependencies for project org.apache.spark:spark-assembly_2.11:jar:1.6.0-cdh5.12.0: Failed to collect dependencies at org.apache.spark:spark-hive-thriftserver_2.10:jar:1.6.0-cdh5.12.0: Failed to read artifact descriptor for org.apache.spark:spark-hive-thriftserver_2.10:jar:1.6.0-cdh5.12.0: Could not transfer artifact org.apache.spark:spark-hive-thriftserver_2.10:pom:1.6.0-cdh5.12.0 from/to CN (http://maven.oschina.net/content/groups/public/): Connect to maven.oschina.net:80 [maven.oschina.net/183.78.182.122] failed: Connection refused (Connection refused) -> [Help 1]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException
[ERROR]
[ERROR] After correcting the problems, you can resume the build with the command
[ERROR] mvn <goals> -rf :spark-assembly_2.11

The error message shows that the spark-hive-thriftserver pom file could not be downloaded from oschina, so I switched the mirror to the aliyun address:

<mirror>

<id>alimaven</id>

<name>aliyun maven</name>

<url>http://maven.aliyun.com/nexus/content/groups/public/</url>

<mirrorOf>central</mirrorOf>

</mirror>

The download still failed, so I switched again, this time to repo2:

<mirror>

<id>repo2</id>

<mirrorOf>central</mirrorOf>

<name>Human Readable Name for this Mirror.</name>

<url>http://repo2.maven.org/maven2/</url>

</mirror>

The build failed again:

[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary:
[INFO]
[INFO] Spark Project Parent POM ........................... SUCCESS [ 8.238 s]
[INFO] Spark Project Test Tags ............................ SUCCESS [ 2.852 s]
[INFO] Spark Project Core ................................. SUCCESS [ 37.382 s]
[INFO] Spark Project Bagel ................................ SUCCESS [ 3.085 s]
[INFO] Spark Project GraphX ............................... SUCCESS [ 5.634 s]
[INFO] Spark Project ML Library ........................... SUCCESS [ 14.805 s]
[INFO] Spark Project Tools ................................ SUCCESS [ 1.538 s]
[INFO] Spark Project Networking ........................... SUCCESS [ 2.839 s]
[INFO] Spark Project Shuffle Streaming Service ............ SUCCESS [ 0.901 s]
[INFO] Spark Project Streaming ............................ SUCCESS [ 11.593 s]
[INFO] Spark Project Catalyst ............................. SUCCESS [ 14.390 s]
[INFO] Spark Project SQL .................................. SUCCESS [ 20.328 s]
[INFO] Spark Project Hive ................................. SUCCESS [ 23.584 s]
[INFO] Spark Project Docker Integration Tests ............. SUCCESS [ 4.114 s]
[INFO] Spark Project Unsafe ............................... SUCCESS [ 0.986 s]
[INFO] Spark Project Assembly ............................. FAILURE [ 39.408 s]
[INFO] Spark Project External Twitter ..................... SKIPPED
[INFO] Spark Project External Flume ....................... SKIPPED
[INFO] Spark Project External Flume Sink .................. SKIPPED
[INFO] Spark Project External MQTT ........................ SKIPPED
[INFO] Spark Project External MQTT Assembly ............... SKIPPED
[INFO] Spark Project External ZeroMQ ...................... SKIPPED
[INFO] Spark Project Examples ............................. SKIPPED
[INFO] Spark Project REPL ................................. SKIPPED
[INFO] Spark Project Launcher ............................. SKIPPED
[INFO] Spark Project External Kafka ....................... SKIPPED
[INFO] Cloudera Spark Project Lineage ..................... SKIPPED
[INFO] Spark Project YARN ................................. SKIPPED
[INFO] Spark Project YARN Shuffle Service ................. SKIPPED
[INFO] Spark Project Hive Thrift Server ................... SKIPPED
[INFO] ------------------------------------------------------------------------
[INFO] BUILD FAILURE
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 03:13 min
[INFO] Finished at: 2017-09-17T08:01:29+08:00
[INFO] Final Memory: 82M/971M
[INFO] ------------------------------------------------------------------------
[WARNING] The requested profile "hive-1.1.0" could not be activated because it does not exist.
[ERROR] Failed to execute goal on project spark-assembly_2.11: Could not resolve dependencies for project org.apache.spark:spark-assembly_2.11:jar:1.6.0-cdh5.12.0: Could not find artifact org.apache.spark:spark-hive-thriftserver_2.10:jar:1.6.0-cdh5.12.0 in cdh.repo (https://repository.cloudera.com/artifactory/cloudera-repos) -> [Help 1]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException
[ERROR]
[ERROR] After correcting the problems, you can resume the build with the command
[ERROR] mvn <goals> -rf :spark-assembly_2.11

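This indicates that spark-hive-thriftserver_2.10.jar is not available through the repo2 mirror either; the log reports the failed lookup against cdh.repo (https://repository.cloudera.com/artifactory/cloudera-repos).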
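The log shows the project spark-assembly_2.11 asking for spark-hive-thriftserver_2.10, so it may be worth checking whether any pom.xml still mentions the old `_2.10` suffix after the Scala version switch. The sketch below only lists candidate files and does not claim this is the root cause; `find_poms_with_suffix` is a hypothetical helper name.

```shell
# Hypothetical helper: list pom.xml files under a directory ($1) that still
# contain a given literal string ($2), such as the artifact suffix "_2.10".
find_poms_with_suffix() {
  # -F treats the suffix as a fixed string, -l prints only matching file names.
  find "$1" -type f -name pom.xml -exec grep -lF -- "$2" {} + | sort
}
```

Run from the source root as `find_poms_with_suffix . _2.10`; an empty result would mean no pom still carries that suffix.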

The other addresses I tried either refused the connection or could not resolve the dependency.
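Before swapping in yet another mirror and rerunning the build, it can be quicker to check whether a repository serves the artifact at all. The sketch below builds the pom URL by the standard Maven repository layout (group id with dots turned into slashes, then artifact id, version, and `artifactId-version.pom`); `artifact_pom_url` is a hypothetical helper name.

```shell
# Hypothetical helper: build the URL of an artifact's pom in a Maven repository.
# Arguments: repository base URL, groupId, artifactId, version.
artifact_pom_url() {
  base=${1%/}                                   # drop one trailing slash, if any
  group_path=$(printf '%s' "$2" | tr '.' '/')   # org.apache.spark -> org/apache/spark
  printf '%s/%s/%s/%s/%s-%s.pom\n' "$base" "$group_path" "$3" "$4" "$3" "$4"
}
```

The resulting URL can then be probed with something like `curl -sfI "$(artifact_pom_url http://repo2.maven.org/maven2/ org.apache.spark spark-hive-thriftserver_2.10 1.6.0-cdh5.12.0)"`, which exits non-zero when the server refuses the connection or does not have the file.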

(2) Building with make-distribution.sh

Running the script directly reports an error:

JAVA_HOME is not set

Add the following setting to make-distribution.sh:

export JAVA_HOME=/usr/local/jdk1.8.0_144
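Instead of hard-coding the path in the script, the variable can be set only when it is missing and checked before the build starts. This is a minimal sketch, assuming the JDK lives at the path used above; `ensure_java_home` is a hypothetical helper name, not something make-distribution.sh provides.

```shell
# Hypothetical helper: use the given fallback ($1) only when JAVA_HOME is unset
# or empty, export it, and fail early if it does not contain bin/java.
ensure_java_home() {
  : "${JAVA_HOME:=$1}"
  export JAVA_HOME
  if [ ! -x "$JAVA_HOME/bin/java" ]; then
    echo "JAVA_HOME=$JAVA_HOME does not contain an executable bin/java" >&2
    return 1
  fi
}
```

Calling `ensure_java_home /usr/local/jdk1.8.0_144` in the shell before launching the build keeps an existing JAVA_HOME intact and fails fast on a wrong path.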

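Then start the build:

./make-distribution.sh --tgz -Phadoop-2.6 -Dhadoop.version=2.6.0-cdh5.12.0 -Pyarn -Phive-1.1.0 -Phive-thriftserver

After trying various Maven mirror repositories the build still failed, and each run took a very long time: the first attempt ran all night and the second ran for a whole day, so I decided to give up. The error output from the last attempt was: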

[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary:
[INFO]
[INFO] Spark Project Parent POM ........................... SUCCESS [ 9.254 s]
[INFO] Spark Project Test Tags ............................ SUCCESS [ 23.704 s]
[INFO] Spark Project Core ................................. SUCCESS [41:06 min]
[INFO] Spark Project Bagel ................................ SUCCESS [05:15 min]
[INFO] Spark Project GraphX ............................... SUCCESS [35:37 min]
[INFO] Spark Project ML Library ........................... SUCCESS [ 01:44 h]
[INFO] Spark Project Tools ................................ SUCCESS [17:16 min]
[INFO] Spark Project Networking ........................... SUCCESS [18:23 min]
[INFO] Spark Project Shuffle Streaming Service ............ SUCCESS [04:41 min]
[INFO] Spark Project Streaming ............................ SUCCESS [ 02:18 h]
[INFO] Spark Project Catalyst ............................. SUCCESS [ 02:57 h]
[INFO] Spark Project SQL .................................. SUCCESS [ 02:30 h]
[INFO] Spark Project Hive ................................. SUCCESS [ 02:14 h]
[INFO] Spark Project Docker Integration Tests ............. SUCCESS [46:29 min]
[INFO] Spark Project Unsafe ............................... SUCCESS [07:48 min]
[INFO] Spark Project Assembly ............................. FAILURE [01:26 min]
[INFO] Spark Project External Twitter ..................... SKIPPED
[INFO] Spark Project External Flume ....................... SKIPPED
[INFO] Spark Project External Flume Sink .................. SKIPPED
[INFO] Spark Project External MQTT ........................ SKIPPED
[INFO] Spark Project External MQTT Assembly ............... SKIPPED
[INFO] Spark Project External ZeroMQ ...................... SKIPPED
[INFO] Spark Project Examples ............................. SKIPPED
[INFO] Spark Project REPL ................................. SKIPPED
[INFO] Spark Project Launcher ............................. SKIPPED
[INFO] Spark Project External Kafka ....................... SKIPPED
[INFO] Cloudera Spark Project Lineage ..................... SKIPPED
[INFO] Spark Project YARN ................................. SKIPPED
[INFO] Spark Project YARN Shuffle Service ................. SKIPPED
[INFO] Spark Project Hive Thrift Server ................... SKIPPED
[INFO] ------------------------------------------------------------------------
[INFO] BUILD FAILURE
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 14:44 h
[INFO] Finished at: 2017-09-18T16:43:22+08:00
[INFO] Final Memory: 76M/959M
[INFO] ------------------------------------------------------------------------
[WARNING] The requested profile "hive-1.1.0" could not be activated because it does not exist.
[ERROR] Failed to execute goal on project spark-assembly_2.11: Could not resolve dependencies for project org.apache.spark:spark-assembly_2.11:jar:1.6.0-cdh5.12.0: Failed to collect dependencies at org.apache.spark:spark-hive-thriftserver_2.10:jar:1.6.0-cdh5.12.0: Failed to read artifact descriptor for org.apache.spark:spark-hive-thriftserver_2.10:jar:1.6.0-cdh5.12.0: Could not transfer artifact org.apache.spark:spark-hive-thriftserver_2.10:pom:1.6.0-cdh5.12.0 from/to twttr-repo (http://maven.twttr.com): Connect to maven.twttr.com:80 [maven.twttr.com/199.59.149.208] failed: Connection refused (Connection refused) -> [Help 1]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/DependencyResolutionException
[ERROR]
[ERROR] After correcting the problems, you can resume the build with the command
[ERROR] mvn <goals> -rf :spark-assembly_2.11
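Given how long a full run takes, the `-rf` hint printed at the end of the Maven output is worth using so that already-built modules are not rebuilt. The sketch below pulls the module name out of a saved build log; `failed_module` and the log file name `build.log` are hypothetical, and it assumes the Maven output was saved to a file (for example with `tee`).

```shell
# Hypothetical helper: extract the module name from the last
# "mvn <goals> -rf :module" hint found in a saved Maven log ($1).
failed_module() {
  grep -o -- '-rf :[^ ]*' "$1" | tail -n 1 | sed 's/^-rf ://'
}
```

With the log above this would print `spark-assembly_2.11`, which can be passed back to a direct Maven invocation as `-rf ":$(failed_module build.log)"` together with the original goals and profiles.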
