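Spark Notes: Accessing MySQL and Oracle Remotely with Spark SQL

1. Add the MySQL and Oracle JDBC drivers to the project.

2. Upload the MySQL and Oracle driver jars to HDFS.

3. The source code used for remote debugging is as follows: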

    import org.apache.spark.sql.SQLContext
    import org.apache.spark.{SparkConf, SparkContext}
    object jdbc {
      def main(args: Array[String]): Unit = {
        System.setProperty("hadoop.home.dir", "D:\\hadoop") // Hadoop home, used to load the local Hadoop components
        val conf = new SparkConf().setAppName("mysql").setMaster("spark://192.168.66.66:7077")
          .set("spark.executor.memory", "1g")
          .set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
          //.setJars(Seq("D:\\workspace\\scala\\out\\scala.jar")) // load the application jar onto the remote Spark cluster
          .setJars(Array("hdfs://192.168.66.66:9000/spark-jars/ojdbc14-10.2.0.1.0.jar",
            "hdfs://192.168.66.66:9000/spark-jars/mysql-connector-java-5.1.39.jar"))
        val sc = new SparkContext(conf)
        val sqlContext = new SQLContext(sc)
        // Query MySQL
        val mysql = sqlContext.read.format("jdbc").option("url", "jdbc:mysql://192.168.66.66:3306/test").
          option("dbtable", "student").option("driver", "com.mysql.jdbc.Driver").
          option("user", "root").option("password", "1").load()
        mysql.show()
        val mysql2 = sqlContext.read.format("jdbc").options(
          Map(
            "driver" -> "com.mysql.jdbc.Driver",
            "url" -> "jdbc:mysql://192.168.66.66:3306",
            "dbtable" -> "test.student",
            "user" -> "root",
            "password" -> "1",
            "fetchsize" -> "3")).load()
        mysql2.show
        mysql.registerTempTable("student")
        mysql.sqlContext.sql("select * from student").collect().foreach(println)
        // Query Oracle
        val oracle = sqlContext.read.format("jdbc").options(
          Map(
            "driver" -> "oracle.jdbc.driver.OracleDriver",
            "url" -> "jdbc:oracle:thin:@10.2.1.169:1521:BIT",
            "dbtable" -> "tab_lg",
            "user" -> "lxb",
            "password" -> "lxb123",
            "fetchsize" -> "3")).load()
        //oracle.show
        oracle.registerTempTable("tab_lg")
        oracle.sqlContext.sql("select * from tab_lg limit 10").collect().foreach(println)
      }
    }
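
The MySQL and Oracle reads in the listing above build nearly identical option maps by hand. As a minimal sketch, the helper below gathers that repetition in one place. The object name JdbcOptions and its three methods are hypothetical and not part of Spark. The option keys and the two URL shapes are copied from the listing. The helper uses no Spark classes, so it can be checked on its own.

```scala
// Hypothetical helper, not part of Spark: builds the JDBC option maps used in the listing.
object JdbcOptions {

  // MySQL URL; the database part is optional, as in the second MySQL read,
  // where the table is qualified as "test.student" instead.
  def mysqlUrl(host: String, port: Int, database: String = ""): String =
    if (database.isEmpty) s"jdbc:mysql://$host:$port"
    else s"jdbc:mysql://$host:$port/$database"

  // Oracle thin-driver URL in the host:port:SID form used in the listing.
  def oracleThinUrl(host: String, port: Int, sid: String): String =
    s"jdbc:oracle:thin:@$host:$port:$sid"

  // Option map with the same keys the listing passes to options(...).
  def build(driver: String, url: String, table: String,
            user: String, password: String, fetchSize: Int = 3): Map[String, String] =
    Map(
      "driver" -> driver,
      "url" -> url,
      "dbtable" -> table,
      "user" -> user,
      "password" -> password,
      "fetchsize" -> fetchSize.toString)
}
```

With this helper, the Oracle read could be written as sqlContext.read.format("jdbc").options(JdbcOptions.build("oracle.jdbc.driver.OracleDriver", JdbcOptions.oracleThinUrl("10.2.1.169", 1521, "BIT"), "tab_lg", "lxb", "lxb123")).load(), assuming the same sqlContext as in the listing.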

Running the program against the cluster produced the following console output:

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/C:/Users/xinfang/.m2/repository/org/slf4j/slf4j-log4j12/1.7.22/slf4j-log4j12-1.7.22.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/C:/Users/xinfang/.m2/repository/org/apache/logging/log4j/log4j-slf4j-impl/2.4.1/log4j-slf4j-impl-2.4.1.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

17/12/11 16:03:49 INFO SparkContext: Running Spark version 1.6.3

17/12/11 16:03:51 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/12/11 16:03:51 INFO SecurityManager: Changing view acls to: xinfang

17/12/11 16:03:51 INFO SecurityManager: Changing modify acls to: xinfang

17/12/11 16:03:51 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(xinfang); users with modify permissions: Set(xinfang)

17/12/11 16:03:52 INFO Utils: Successfully started service 'sparkDriver' on port 55112.

17/12/11 16:03:53 INFO Slf4jLogger: Slf4jLogger started

17/12/11 16:03:53 INFO Remoting: Starting remoting

17/12/11 16:03:53 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@172.20.107.151:55125]

17/12/11 16:03:53 INFO Utils: Successfully started service 'sparkDriverActorSystem' on port 55125.

17/12/11 16:03:53 INFO SparkEnv: Registering MapOutputTracker

17/12/11 16:03:53 INFO SparkEnv: Registering BlockManagerMaster

17/12/11 16:03:53 INFO DiskBlockManager: Created local directory at C:\Users\xinfang\AppData\Local\Temp\blockmgr-7ffc898d-c1fc-42e3-bcd6-72c47e1564cd

17/12/11 16:03:53 INFO MemoryStore: MemoryStore started with capacity 1122.0 MB

17/12/11 16:03:54 INFO SparkEnv: Registering OutputCommitCoordinator

17/12/11 16:03:54 INFO Utils: Successfully started service 'SparkUI' on port 4040.

17/12/11 16:03:54 INFO SparkUI: Started SparkUI at http://172.20.107.151:4040

17/12/11 16:03:54 INFO SparkContext: Added JAR hdfs://192.168.66.66:9000/spark-jars/ojdbc14-10.2.0.1.0.jar at hdfs://192.168.66.66:9000/spark-jars/ojdbc14-10.2.0.1.0.jar with timestamp 1512979434526

17/12/11 16:03:54 INFO SparkContext: Added JAR hdfs://192.168.66.66:9000/spark-jars/mysql-connector-java-5.1.39.jar at hdfs://192.168.66.66:9000/spark-jars/mysql-connector-java-5.1.39.jar with timestamp 1512979434527

17/12/11 16:03:54 INFO AppClient$ClientEndpoint: Connecting to master spark://192.168.66.66:7077...

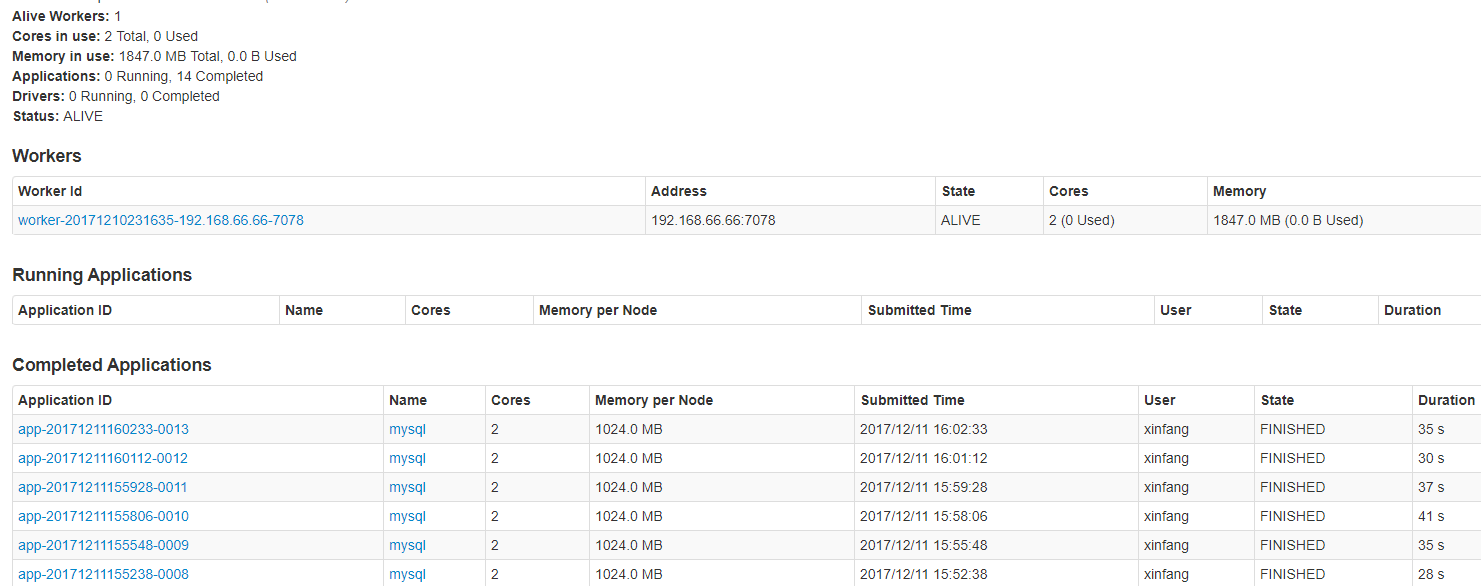

17/12/11 16:04:04 INFO SparkDeploySchedulerBackend: Connected to Spark cluster with app ID app-20171211160233-0013

17/12/11 16:04:04 INFO AppClient$ClientEndpoint: Executor added: app-20171211160233-0013/0 on worker-20171210231635-192.168.66.66-7078 (192.168.66.66:7078) with 2 cores

17/12/11 16:04:04 INFO SparkDeploySchedulerBackend: Granted executor ID app-20171211160233-0013/0 on hostPort 192.168.66.66:7078 with 2 cores, 1024.0 MB RAM

17/12/11 16:04:04 INFO AppClient$ClientEndpoint: Executor updated: app-20171211160233-0013/0 is now RUNNING

17/12/11 16:04:04 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 55147.

17/12/11 16:04:04 INFO NettyBlockTransferService: Server created on 55147

17/12/11 16:04:04 INFO BlockManagerMaster: Trying to register BlockManager

17/12/11 16:04:04 INFO BlockManagerMasterEndpoint: Registering block manager 172.20.107.151:55147 with 1122.0 MB RAM, BlockManagerId(driver, 172.20.107.151, 55147)

17/12/11 16:04:04 INFO BlockManagerMaster: Registered BlockManager

17/12/11 16:04:04 INFO SparkDeploySchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

17/12/11 16:04:08 INFO SparkDeploySchedulerBackend: Registered executor NettyRpcEndpointRef(null) (xinfang:55156) with ID 0

17/12/11 16:04:08 INFO BlockManagerMasterEndpoint: Registering block manager xinfang:18681 with 511.1 MB RAM, BlockManagerId(0, xinfang, 18681)

17/12/11 16:04:08 INFO SparkContext: Starting job: show at jdbc.scala:18

17/12/11 16:04:08 INFO DAGScheduler: Got job 0 (show at jdbc.scala:18) with 1 output partitions

17/12/11 16:04:08 INFO DAGScheduler: Final stage: ResultStage 0 (show at jdbc.scala:18)

17/12/11 16:04:08 INFO DAGScheduler: Parents of final stage: List()

17/12/11 16:04:08 INFO DAGScheduler: Missing parents: List()

17/12/11 16:04:08 INFO DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at show at jdbc.scala:18), which has no missing parents

17/12/11 16:04:09 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 4.9 KB, free 1122.0 MB)

17/12/11 16:04:09 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 2.4 KB, free 1122.0 MB)

17/12/11 16:04:09 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on 172.20.107.151:55147 (size: 2.4 KB, free: 1122.0 MB)

17/12/11 16:04:09 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1006

17/12/11 16:04:09 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at show at jdbc.scala:18)

17/12/11 16:04:09 INFO TaskSchedulerImpl: Adding task set 0.0 with 1 tasks

17/12/11 16:04:09 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, xinfang, partition 0,PROCESS_LOCAL, 2069 bytes)

17/12/11 16:04:14 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on xinfang:18681 (size: 2.4 KB, free: 511.1 MB)

17/12/11 16:04:22 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 13334 ms on xinfang (1/1)

17/12/11 16:04:22 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

17/12/11 16:04:22 INFO DAGScheduler: ResultStage 0 (show at jdbc.scala:18) finished in 13.369 s

17/12/11 16:04:23 INFO DAGScheduler: Job 0 finished: show at jdbc.scala:18, took 14.822540 s

+---+----+---+---+

| id|name|age|sex|

+---+----+---+---+

| 1| 信方| 26| 男|

| 2| 瑶瑶| 22| 女|

+---+----+---+---+

17/12/11 16:04:23 INFO SparkContext: Starting job: show at jdbc.scala:27

17/12/11 16:04:23 INFO DAGScheduler: Got job 1 (show at jdbc.scala:27) with 1 output partitions

17/12/11 16:04:23 INFO DAGScheduler: Final stage: ResultStage 1 (show at jdbc.scala:27)

17/12/11 16:04:23 INFO DAGScheduler: Parents of final stage: List()

17/12/11 16:04:23 INFO DAGScheduler: Missing parents: List()

17/12/11 16:04:23 INFO DAGScheduler: Submitting ResultStage 1 (MapPartitionsRDD[3] at show at jdbc.scala:27), which has no missing parents

17/12/11 16:04:23 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 4.9 KB, free 1122.0 MB)

17/12/11 16:04:23 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 2.4 KB, free 1122.0 MB)

17/12/11 16:04:23 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on 172.20.107.151:55147 (size: 2.4 KB, free: 1122.0 MB)

17/12/11 16:04:23 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1006

17/12/11 16:04:23 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 1 (MapPartitionsRDD[3] at show at jdbc.scala:27)

17/12/11 16:04:23 INFO TaskSchedulerImpl: Adding task set 1.0 with 1 tasks

17/12/11 16:04:23 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 1, xinfang, partition 0,PROCESS_LOCAL, 2069 bytes)

17/12/11 16:04:24 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on xinfang:18681 (size: 2.4 KB, free: 511.1 MB)

17/12/11 16:04:24 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 1) in 425 ms on xinfang (1/1)

17/12/11 16:04:24 INFO DAGScheduler: ResultStage 1 (show at jdbc.scala:27) finished in 0.426 s

17/12/11 16:04:24 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

17/12/11 16:04:24 INFO DAGScheduler: Job 1 finished: show at jdbc.scala:27, took 0.485020 s

+---+----+---+---+

| id|name|age|sex|

+---+----+---+---+

| 1| 信方| 26| 男|

| 2| 瑶瑶| 22| 女|

+---+----+---+---+

17/12/11 16:04:25 INFO SparkContext: Starting job: collect at jdbc.scala:29

17/12/11 16:04:25 INFO DAGScheduler: Got job 2 (collect at jdbc.scala:29) with 1 output partitions

17/12/11 16:04:25 INFO DAGScheduler: Final stage: ResultStage 2 (collect at jdbc.scala:29)

17/12/11 16:04:25 INFO DAGScheduler: Parents of final stage: List()

17/12/11 16:04:25 INFO DAGScheduler: Missing parents: List()

17/12/11 16:04:25 INFO DAGScheduler: Submitting ResultStage 2 (MapPartitionsRDD[5] at collect at jdbc.scala:29), which has no missing parents

17/12/11 16:04:25 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 5.0 KB, free 1122.0 MB)

17/12/11 16:04:25 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 2.4 KB, free 1122.0 MB)

17/12/11 16:04:25 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on 172.20.107.151:55147 (size: 2.4 KB, free: 1122.0 MB)

17/12/11 16:04:25 INFO SparkContext: Created broadcast 2 from broadcast at DAGScheduler.scala:1006

17/12/11 16:04:25 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 2 (MapPartitionsRDD[5] at collect at jdbc.scala:29)

17/12/11 16:04:25 INFO TaskSchedulerImpl: Adding task set 2.0 with 1 tasks

17/12/11 16:04:25 INFO TaskSetManager: Starting task 0.0 in stage 2.0 (TID 2, xinfang, partition 0,PROCESS_LOCAL, 2069 bytes)

17/12/11 16:04:25 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on xinfang:18681 (size: 2.4 KB, free: 511.1 MB)

17/12/11 16:04:25 INFO TaskSetManager: Finished task 0.0 in stage 2.0 (TID 2) in 287 ms on xinfang (1/1)

17/12/11 16:04:25 INFO TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

17/12/11 16:04:25 INFO DAGScheduler: ResultStage 2 (collect at jdbc.scala:29) finished in 0.290 s

17/12/11 16:04:25 INFO DAGScheduler: Job 2 finished: collect at jdbc.scala:29, took 0.333871 s

[1,信方,26,男]

[2,瑶瑶,22,女]

17/12/11 16:04:26 INFO SparkContext: Starting job: collect at jdbc.scala:41

17/12/11 16:04:26 INFO DAGScheduler: Got job 3 (collect at jdbc.scala:41) with 1 output partitions

17/12/11 16:04:26 INFO DAGScheduler: Final stage: ResultStage 3 (collect at jdbc.scala:41)

17/12/11 16:04:26 INFO DAGScheduler: Parents of final stage: List()

17/12/11 16:04:26 INFO DAGScheduler: Missing parents: List()

17/12/11 16:04:26 INFO DAGScheduler: Submitting ResultStage 3 (MapPartitionsRDD[7] at collect at jdbc.scala:41), which has no missing parents

17/12/11 16:04:26 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 5.9 KB, free 1122.0 MB)

17/12/11 16:04:26 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 2.8 KB, free 1122.0 MB)

17/12/11 16:04:26 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on 172.20.107.151:55147 (size: 2.8 KB, free: 1122.0 MB)

17/12/11 16:04:26 INFO SparkContext: Created broadcast 3 from broadcast at DAGScheduler.scala:1006

17/12/11 16:04:26 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 3 (MapPartitionsRDD[7] at collect at jdbc.scala:41)

17/12/11 16:04:26 INFO TaskSchedulerImpl: Adding task set 3.0 with 1 tasks

17/12/11 16:04:26 INFO TaskSetManager: Starting task 0.0 in stage 3.0 (TID 3, xinfang, partition 0,PROCESS_LOCAL, 2069 bytes)

17/12/11 16:04:26 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on xinfang:18681 (size: 2.8 KB, free: 511.1 MB)

17/12/11 16:04:29 INFO TaskSetManager: Finished task 0.0 in stage 3.0 (TID 3) in 3053 ms on xinfang (1/1)

17/12/11 16:04:29 INFO TaskSchedulerImpl: Removed TaskSet 3.0, whose tasks have all completed, from pool

17/12/11 16:04:29 INFO DAGScheduler: ResultStage 3 (collect at jdbc.scala:41) finished in 3.055 s

17/12/11 16:04:29 INFO DAGScheduler: Job 3 finished: collect at jdbc.scala:41, took 3.101476 s

[96.0000000000,2015-08-18,深圳市宝安区石岩人民医院官田社区健康服务中心,宝安区,7.0000000000,113.947973,22.683914,25.0000000000]

[97.0000000000,2016-03-03,深圳市龙岗区第三人民医院,龙岗区,76.0000000000,114.2070007,22.65066798,367.0000000000]

[98.0000000000,2016-03-15,深圳市龙岗区第三人民医院,龙岗区,120.0000000000,114.2070007,22.65066798,439.0000000000]

[99.0000000000,2014-03-17,深圳市光明新区人民医院,光明新区,117.0000000000,113.914073,22.72181,637.0000000000]

[100.0000000000,2015-06-21,深圳市龙岗区南湾人民医院,龙岗区,84.0000000000,114.235159,22.732797,339.0000000000]

[101.0000000000,2015-12-28,深圳市福田区园岭医院上林社区健康服务中心,福田区,49.0000000000,114.06297,22.529945,78.0000000000]

[102.0000000000,2014-03-08,深圳市坪山新区人民医院,坪山新区,46.0000000000,114.357942,22.693397,165.0000000000]

[103.0000000000,2016-02-27,深圳市宝安区福永医院兴围社区健康服务中心,宝安区,65.0000000000,113.852402,22.70585,95.0000000000]

[104.0000000000,2016-03-04,深圳市宝安区松岗人民医院沙埔社区健康服务中心,宝安区,45.0000000000,113.855092,22.770395,63.0000000000]

[105.0000000000,2015-03-06,深圳市儿童医院,福田区,253.0000000000,114.0507065,22.5502964,864.0000000000]

17/12/11 16:04:29 INFO SparkContext: Invoking stop() from shutdown hook

17/12/11 16:04:29 INFO SparkUI: Stopped Spark web UI at http://172.20.107.151:4040

17/12/11 16:04:29 INFO SparkDeploySchedulerBackend: Shutting down all executors

17/12/11 16:04:29 INFO SparkDeploySchedulerBackend: Asking each executor to shut down

17/12/11 16:04:29 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

17/12/11 16:04:29 INFO MemoryStore: MemoryStore cleared

17/12/11 16:04:29 INFO BlockManager: BlockManager stopped

17/12/11 16:04:29 INFO BlockManagerMaster: BlockManagerMaster stopped

17/12/11 16:04:29 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

17/12/11 16:04:29 INFO RemoteActorRefProvider$RemotingTerminator: Shutting down remote daemon.

17/12/11 16:04:29 INFO SparkContext: Successfully stopped SparkContext

17/12/11 16:04:29 INFO ShutdownHookManager: Shutdown hook called

17/12/11 16:04:30 INFO ShutdownHookManager: Deleting directory C:\Users\xinfang\AppData\Local\Temp\spark-318cb532-3e2f-44dd-bdc6-07637f0f37b6

17/12/11 16:04:30 INFO RemoteActorRefProvider$RemotingTerminator: Remote daemon shut down; proceeding with flushing remote transports.

Process finished with exit code 0
