Spark Applications: PageView, UserView, HotChannel


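I. PV

PV (page view) is the number of times a given page is visited; every refresh of the page counts as one PV.

PV {p1, (u1,u2,u3,u1,u2,u4…)}: count the views of one page. The same user may appear, and be counted, more than once.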
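Before the Spark version, the definition can be checked on a handful of records with plain Java. This is only a sketch: the class name `PvDemo`, the sample records and their placeholder fields are made up for illustration, and the tab-separated layout (userId at index 2, pageId at index 3, action at index 5) is assumed from the way the listings in this post index the split line.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class PvDemo {

    // Counts page views per pageId for records whose action matches.
    // Assumed layout: tab-separated, pageId at index 3, action at index 5.
    public static Map<String, Long> countPv(List<String> lines, String action) {
        Map<String, Long> pv = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split("\t");
            if (fields.length > 5 && action.equals(fields[5])) {
                // Every matching record counts, repeat visits by the same user included.
                pv.merge(fields[3], 1L, Long::sum);
            }
        }
        return pv;
    }

    public static void main(String[] args) {
        // Hypothetical records; the first two fields are placeholders.
        List<String> lines = Arrays.asList(
                "d\tt\tu1\tp1\tc1\tView",
                "d\tt\tu1\tp1\tc1\tView",
                "d\tt\tu2\tp1\tc1\tView",
                "d\tt\tu2\tp2\tc2\tView",
                "d\tt\tu3\tp2\tc2\tClick");
        System.out.println(countPv(lines, "View")); // prints {p1=3, p2=1}
    }
}
```

User u1 viewed p1 twice and both views are counted, which is exactly what separates PV from UV.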

    import java.util.Iterator;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaPairRDD;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;
    import org.apache.spark.api.java.function.PairFunction;
    import org.apache.spark.api.java.function.VoidFunction;
    import org.apache.spark.broadcast.Broadcast;

    import scala.Tuple2;

    public class PV_ANA {

        public static void main(String[] args) {
            SparkConf conf = new SparkConf()
                    .setAppName("PV_ANA")
                    .setMaster("local")
                    .set("spark.testing.memory", "2147480000");
            JavaSparkContext sc = new JavaSparkContext(conf);
            JavaRDD<String> logRDD = sc.textFile("f:/userLog");
            // Broadcast the action type to keep, so every task reads the same value.
            String str = "View";
            final Broadcast<String> broadcast = sc.broadcast(str);
            pvAnalyze(logRDD, broadcast);
            sc.stop();
        }

        private static void pvAnalyze(JavaRDD<String> logRDD,
                                      final Broadcast<String> broadcast) {
            // Keep only records whose action (tab-separated field 5) equals the broadcast value.
            JavaRDD<String> filteredLogRDD = logRDD.filter(
                    new Function<String, Boolean>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Boolean call(String s) throws Exception {
                            String actionParam = broadcast.value();
                            String action = s.split("\t")[5];
                            return actionParam.equals(action);
                        }
                    });

            // Map every record to (pageId, null); only the key matters for counting.
            JavaPairRDD<String, String> pairLogRDD = filteredLogRDD.mapToPair(
                    new PairFunction<String, String, String>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Tuple2<String, String> call(String s) throws Exception {
                            String pageId = s.split("\t")[3];
                            return new Tuple2<String, String>(pageId, null);
                        }
                    });

            // Group by page and count the records in each group.
            pairLogRDD.groupByKey().foreach(
                    new VoidFunction<Tuple2<String, Iterable<String>>>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public void call(Tuple2<String, Iterable<String>> tuple) throws Exception {
                            String pageId = tuple._1;
                            Iterator<String> iterator = tuple._2.iterator();
                            long count = 0L;
                            while (iterator.hasNext()) {
                                iterator.next();
                                count++;
                            }
                            System.out.println("PAGEID:" + pageId + "\t PV_COUNT:" + count);
                        }
                    });
        }
    }

II. UV

UV {p1, (u1,u2,u3,u4,u5…)}: count how many distinct users visited a page. Each user is counted at most once per page.
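The difference from PV is the de-duplication of users per page. A small plain-Java sketch of that idea, under the same assumptions as the rest of this post (tab-separated records with userId at index 2, pageId at index 3, action at index 5); the class name `UvDemo` and the sample records are hypothetical:

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

public class UvDemo {

    // Counts distinct users per pageId for records whose action matches.
    public static Map<String, Integer> countUv(List<String> lines, String action) {
        Map<String, Set<String>> usersByPage = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split("\t");
            if (fields.length > 5 && action.equals(fields[5])) {
                // A set keeps each userId once, however often the user visits.
                usersByPage.computeIfAbsent(fields[3], k -> new HashSet<>()).add(fields[2]);
            }
        }
        Map<String, Integer> uv = new TreeMap<>();
        for (Map.Entry<String, Set<String>> e : usersByPage.entrySet()) {
            uv.put(e.getKey(), e.getValue().size());
        }
        return uv;
    }

    public static void main(String[] args) {
        // Hypothetical records; the first two fields are placeholders.
        List<String> lines = Arrays.asList(
                "d\tt\tu1\tp1\tc1\tView",
                "d\tt\tu1\tp1\tc1\tView",
                "d\tt\tu2\tp1\tc1\tView",
                "d\tt\tu2\tp2\tc2\tView",
                "d\tt\tu3\tp2\tc2\tClick");
        System.out.println(countUv(lines, "View")); // prints {p1=2, p2=1}
    }
}
```

Page p1 has three matching views but only two distinct users, so its UV is 2.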

【Method 1】

【Flowchart】

    import java.util.HashSet;
    import java.util.Iterator;
    import java.util.Set;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaPairRDD;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;
    import org.apache.spark.api.java.function.PairFunction;
    import org.apache.spark.api.java.function.VoidFunction;
    import org.apache.spark.broadcast.Broadcast;

    import scala.Tuple2;

    public class UV_ANA {

        public static void main(String[] args) {
            SparkConf conf = new SparkConf()
                    .setAppName("UV_ANA")
                    .setMaster("local")
                    .set("spark.testing.memory", "2147480000");
            JavaSparkContext sc = new JavaSparkContext(conf);
            JavaRDD<String> logRDD = sc.textFile("f:/userLog");
            String str = "View";
            final Broadcast<String> broadcast = sc.broadcast(str);
            uvAnalyze(logRDD, broadcast);
            sc.stop();
        }

        private static void uvAnalyze(JavaRDD<String> logRDD,
                                      final Broadcast<String> broadcast) {
            // Keep only records whose action (field 5) equals the broadcast value.
            JavaRDD<String> filteredLogRDD = logRDD.filter(
                    new Function<String, Boolean>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Boolean call(String s) throws Exception {
                            String actionParam = broadcast.value();
                            String action = s.split("\t")[5];
                            return actionParam.equals(action);
                        }
                    });

            // Map every record to (pageId, userId).
            JavaPairRDD<String, String> pairLogRDD = filteredLogRDD.mapToPair(
                    new PairFunction<String, String, String>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Tuple2<String, String> call(String s) throws Exception {
                            String[] fields = s.split("\t");
                            String pageId = fields[3];
                            String userId = fields[2];
                            return new Tuple2<String, String>(pageId, userId);
                        }
                    });

            // Group by page and de-duplicate the users of each page in a HashSet.
            // All users of one page end up in a single task's memory here.
            pairLogRDD.groupByKey().foreach(
                    new VoidFunction<Tuple2<String, Iterable<String>>>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public void call(Tuple2<String, Iterable<String>> tuple) throws Exception {
                            String pageId = tuple._1;
                            Iterator<String> iterator = tuple._2.iterator();
                            Set<String> userSets = new HashSet<>();
                            while (iterator.hasNext()) {
                                userSets.add(iterator.next());
                            }
                            System.out.println("PAGEID:" + pageId + "\t UV_COUNT:" + userSets.size());
                        }
                    });
        }
    }

【Method 2】

【Flowchart】

    import java.util.Map;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaPairRDD;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;
    import org.apache.spark.api.java.function.PairFunction;
    import org.apache.spark.broadcast.Broadcast;

    import scala.Tuple2;

    public class UV_ANAoptz {

        public static void main(String[] args) {
            SparkConf conf = new SparkConf()
                    .setAppName("UV_ANAoptz")
                    .setMaster("local")
                    .set("spark.testing.memory", "2147480000");
            JavaSparkContext sc = new JavaSparkContext(conf);
            JavaRDD<String> logRDD = sc.textFile("f:/userLog");
            String str = "View";
            final Broadcast<String> broadcast = sc.broadcast(str);
            uvAnalyzeOptz(logRDD, broadcast);
            sc.stop();
        }

        private static void uvAnalyzeOptz(JavaRDD<String> logRDD,
                                          final Broadcast<String> broadcast) {
            // Keep only records whose action (field 5) equals the broadcast value.
            JavaRDD<String> filteredLogRDD = logRDD.filter(
                    new Function<String, Boolean>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Boolean call(String s) throws Exception {
                            String actionParam = broadcast.value();
                            String action = s.split("\t")[5];
                            return actionParam.equals(action);
                        }
                    });

            // Build a composite key "pageId_userId" so that grouping removes duplicate visits.
            // Note: this assumes pageId itself contains no underscore.
            JavaPairRDD<String, String> pairRDD = filteredLogRDD.mapToPair(
                    new PairFunction<String, String, String>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Tuple2<String, String> call(String s) throws Exception {
                            String[] fields = s.split("\t");
                            String pageId = fields[3];
                            String userId = fields[2];
                            return new Tuple2<String, String>(pageId + "_" + userId, null);
                        }
                    });

            // After grouping, each (page, user) combination appears exactly once.
            JavaPairRDD<String, Iterable<String>> groupUp2LogRDD = pairRDD.groupByKey();

            // Strip the user part and count the remaining keys per page.
            // The Map<String, Object> return type matches the Spark 1.x Java API.
            Map<String, Object> countByKey = groupUp2LogRDD.mapToPair(
                    new PairFunction<Tuple2<String, Iterable<String>>, String, String>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Tuple2<String, String> call(Tuple2<String, Iterable<String>> tuple)
                                throws Exception {
                            String pageId = tuple._1.split("_")[0];
                            return new Tuple2<String, String>(pageId, null);
                        }
                    }).countByKey();

            for (String key : countByKey.keySet()) {
                System.out.println("PAGEID:" + key + "\tUV_COUNT:" + countByKey.get(key));
            }
        }
    }

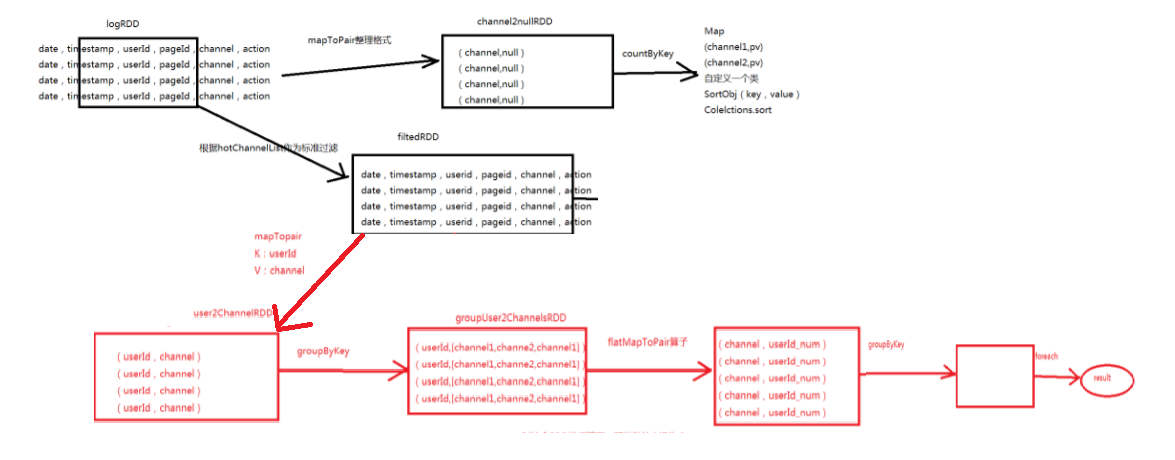
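The two-step idea behind Method 2 (first make each page/user combination unique, then count combinations per page) can be traced on a few records without Spark. This is a sketch under assumptions: the class name `UvByCompositeKeyDemo` and the sample records are invented, the field layout is the one the listings use, and a `HashSet` stands in for the de-duplication that `groupByKey()` performs on the composite key.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

public class UvByCompositeKeyDemo {

    public static Map<String, Long> countUv(List<String> lines, String action) {
        // Step 1: collect distinct "pageId_userId" keys (stands in for groupByKey on that key).
        Set<String> pageUserKeys = new HashSet<>();
        for (String line : lines) {
            String[] fields = line.split("\t");
            if (fields.length > 5 && action.equals(fields[5])) {
                pageUserKeys.add(fields[3] + "_" + fields[2]);
            }
        }
        // Step 2: drop the user part and count keys per page (stands in for countByKey).
        // Like the listing, this assumes pageId contains no underscore.
        Map<String, Long> uv = new TreeMap<>();
        for (String key : pageUserKeys) {
            String pageId = key.split("_")[0];
            uv.merge(pageId, 1L, Long::sum);
        }
        return uv;
    }

    public static void main(String[] args) {
        // Hypothetical records; the first two fields are placeholders.
        List<String> lines = Arrays.asList(
                "d\tt\tu1\tp1\tc1\tView",
                "d\tt\tu1\tp1\tc1\tView",
                "d\tt\tu2\tp1\tc1\tView",
                "d\tt\tu2\tp2\tc2\tView");
        System.out.println(countUv(lines, "View")); // prints {p1=2, p2=1}
    }
}
```

Compared with Method 1, no single group has to hold every user of a page: the data is de-duplicated on the fine-grained key first, and only a count per page is collected at the end.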

III. User visit counts in hot channels

Find the top 3 most active users in each hot channel.
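The listings in this section use a `SortObj` helper class that the post never shows. Judging only from how it is called (`new SortObj(key, count)`, `getKey()`, `getValue()`), it is probably a simple key/count pair. The version below is a guess at its shape rather than the author's actual class, and the `topN` method is an extra added here for illustration.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical reconstruction: a key (channel or userId) paired with a count.
public class SortObj {
    private final String key;
    private final Integer value;

    public SortObj(String key, Integer value) {
        this.key = key;
        this.value = value;
    }

    public String getKey() {
        return key;
    }

    public Integer getValue() {
        return value;
    }

    // Returns at most n items with the largest values, in descending order.
    // Illustrative helper, not part of the original listings.
    public static List<SortObj> topN(List<SortObj> items, int n) {
        List<SortObj> sorted = new ArrayList<>(items);
        sorted.sort((a, b) -> Integer.compare(b.getValue(), a.getValue()));
        return new ArrayList<>(sorted.subList(0, Math.min(n, sorted.size())));
    }

    public static void main(String[] args) {
        List<SortObj> users = Arrays.asList(
                new SortObj("a", 1), new SortObj("b", 5),
                new SortObj("c", 3), new SortObj("d", 4));
        for (SortObj o : topN(users, 3)) {
            System.out.println(o.getKey() + "==" + o.getValue());
        }
    }
}
```

Bounding the result with `Math.min(n, size)` matters in practice: a channel with fewer than three users would otherwise cause an `IndexOutOfBoundsException` in a fixed `for (int i = 0; i < 3; i++)` loop.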

【Method 1】

【Flowchart】

    import java.util.ArrayList;
    import java.util.Collections;
    import java.util.Comparator;
    import java.util.HashMap;
    import java.util.Iterator;
    import java.util.List;
    import java.util.Map;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaPairRDD;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;
    import org.apache.spark.api.java.function.PairFunction;
    import org.apache.spark.api.java.function.VoidFunction;
    import org.apache.spark.broadcast.Broadcast;

    import scala.Tuple2;

    // SortObj is a helper class (String key, Integer value) that this post does not show.
    public class HotChannel {

        public static void main(String[] args) {
            SparkConf conf = new SparkConf()
                    .setAppName("HotChannel")
                    .setMaster("local")
                    .set("spark.testing.memory", "2147480000");
            JavaSparkContext sc = new JavaSparkContext(conf);
            JavaRDD<String> logRDD = sc.textFile("f:/userLog");
            String str = "View";
            final Broadcast<String> broadcast = sc.broadcast(str);
            hotChannel(sc, logRDD, broadcast);
            sc.stop();
        }

        private static void hotChannel(JavaSparkContext sc, JavaRDD<String> logRDD,
                                       final Broadcast<String> broadcast) {
            // Step 1: keep only records whose action (field 5) equals the broadcast value.
            JavaRDD<String> filteredLogRDD = logRDD.filter(
                    new Function<String, Boolean>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Boolean call(String v1) throws Exception {
                            String actionParam = broadcast.value();
                            String action = v1.split("\t")[5];
                            return actionParam.equals(action);
                        }
                    });

            // Step 2: count views per channel (field 4) and collect the counts on the driver.
            JavaPairRDD<String, String> channel2nullRDD = filteredLogRDD.mapToPair(
                    new PairFunction<String, String, String>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Tuple2<String, String> call(String s) throws Exception {
                            String channel = s.split("\t")[4];
                            return new Tuple2<String, String>(channel, null);
                        }
                    });
            // The Map<String, Object> return type matches the Spark 1.x Java API.
            Map<String, Object> channelPVMap = channel2nullRDD.countByKey();

            List<SortObj> channels = new ArrayList<>();
            for (String channel : channelPVMap.keySet()) {
                channels.add(new SortObj(channel,
                        Integer.valueOf(String.valueOf(channelPVMap.get(channel)))));
            }
            // Sort channels by view count, descending (Integer.compare avoids overflow).
            Collections.sort(channels, new Comparator<SortObj>() {
                @Override
                public int compare(SortObj o1, SortObj o2) {
                    return Integer.compare(o2.getValue(), o1.getValue());
                }
            });

            // Step 3: take the top 3 channels (fewer if the log has fewer channels).
            List<String> hotChannelList = new ArrayList<>();
            for (int i = 0; i < Math.min(3, channels.size()); i++) {
                hotChannelList.add(channels.get(i).getKey());
            }
            for (String channel : hotChannelList) {
                System.out.println("channel:" + channel);
            }
            final Broadcast<List<String>> hotChannelListBroadcast = sc.broadcast(hotChannelList);

            // Step 4: keep records that belong to a hot channel and carry a real user id.
            JavaRDD<String> filterRDD = logRDD.filter(new Function<String, Boolean>() {
                private static final long serialVersionUID = 1L;

                @Override
                public Boolean call(String s) throws Exception {
                    List<String> hotChannels = hotChannelListBroadcast.value();
                    String[] fields = s.split("\t");
                    String channel = fields[4];
                    String userId = fields[2];
                    return hotChannels.contains(channel) && !"null".equals(userId);
                }
            });

            // Step 5: map to (channel, userId).
            JavaPairRDD<String, String> channel2UserRDD = filterRDD.mapToPair(
                    new PairFunction<String, String, String>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Tuple2<String, String> call(String s) throws Exception {
                            String[] splited = s.split("\t");
                            String channel = splited[4];
                            String userId = splited[2];
                            return new Tuple2<String, String>(channel, userId);
                        }
                    });

            // Step 6: per channel, count visits per user and print the 3 most active users.
            channel2UserRDD.groupByKey().foreach(
                    new VoidFunction<Tuple2<String, Iterable<String>>>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public void call(Tuple2<String, Iterable<String>> tuple) throws Exception {
                            String channel = tuple._1;
                            Iterator<String> iterator = tuple._2.iterator();
                            Map<String, Integer> userNumMap = new HashMap<>();
                            while (iterator.hasNext()) {
                                String userId = iterator.next();
                                Integer count = userNumMap.get(userId);
                                userNumMap.put(userId, count == null ? 1 : count + 1);
                            }

                            List<SortObj> lists = new ArrayList<>();
                            for (String key : userNumMap.keySet()) {
                                lists.add(new SortObj(key, userNumMap.get(key)));
                            }
                            Collections.sort(lists, new Comparator<SortObj>() {
                                @Override
                                public int compare(SortObj o1, SortObj o2) {
                                    return Integer.compare(o2.getValue(), o1.getValue());
                                }
                            });

                            System.out.println("HOT_CHANNEL:" + channel);
                            // Guard against channels with fewer than 3 users.
                            for (int i = 0; i < Math.min(3, lists.size()); i++) {
                                SortObj sortObj = lists.get(i);
                                System.out.println(sortObj.getKey() + "==" + sortObj.getValue());
                            }
                        }
                    });
        }
    }

【Method 2】

【Flowchart】

    import java.util.ArrayList;
    import java.util.Collections;
    import java.util.Comparator;
    import java.util.HashMap;
    import java.util.Iterator;
    import java.util.List;
    import java.util.Map;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaPairRDD;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;
    import org.apache.spark.api.java.function.Function;
    import org.apache.spark.api.java.function.PairFlatMapFunction;
    import org.apache.spark.api.java.function.PairFunction;
    import org.apache.spark.api.java.function.VoidFunction;
    import org.apache.spark.broadcast.Broadcast;

    import scala.Tuple2;

    // SortObj is a helper class (String key, Integer value) that this post does not show.
    public class HotChannelOpz {

        public static void main(String[] args) {
            SparkConf conf = new SparkConf()
                    .setAppName("hotChannelOpz")
                    .setMaster("local")
                    .set("spark.testing.memory", "2147480000");
            JavaSparkContext sc = new JavaSparkContext(conf);
            JavaRDD<String> logRDD = sc.textFile("f:/userLog");
            String str = "View";
            final Broadcast<String> broadcast = sc.broadcast(str);
            hotChannelOpz(sc, logRDD, broadcast);
            sc.stop();
        }

        private static void hotChannelOpz(JavaSparkContext sc, JavaRDD<String> logRDD,
                                          final Broadcast<String> broadcast) {
            // Step 1: keep only records whose action (field 5) equals the broadcast value.
            JavaRDD<String> filteredLogRDD = logRDD.filter(
                    new Function<String, Boolean>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Boolean call(String v1) throws Exception {
                            String actionParam = broadcast.value();
                            String action = v1.split("\t")[5];
                            return actionParam.equals(action);
                        }
                    });

            // Step 2: count views per channel (field 4) and collect the counts on the driver.
            JavaPairRDD<String, String> channel2nullRDD = filteredLogRDD.mapToPair(
                    new PairFunction<String, String, String>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Tuple2<String, String> call(String val) throws Exception {
                            String channel = val.split("\t")[4];
                            return new Tuple2<String, String>(channel, null);
                        }
                    });
            // The Map<String, Object> return type matches the Spark 1.x Java API.
            Map<String, Object> channelPVMap = channel2nullRDD.countByKey();

            List<SortObj> channels = new ArrayList<>();
            for (String channel : channelPVMap.keySet()) {
                channels.add(new SortObj(channel,
                        Integer.valueOf(String.valueOf(channelPVMap.get(channel)))));
            }
            Collections.sort(channels, new Comparator<SortObj>() {
                @Override
                public int compare(SortObj o1, SortObj o2) {
                    return Integer.compare(o2.getValue(), o1.getValue());
                }
            });

            // Step 3: take the top 3 channels (fewer if the log has fewer channels).
            List<String> hotChannelList = new ArrayList<>();
            for (int i = 0; i < Math.min(3, channels.size()); i++) {
                hotChannelList.add(channels.get(i).getKey());
            }
            final Broadcast<List<String>> hotChannelListBroadcast = sc.broadcast(hotChannelList);

            // Step 4: keep records that belong to a hot channel and carry a real user id.
            JavaRDD<String> filteredRDD = logRDD.filter(
                    new Function<String, Boolean>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Boolean call(String v1) throws Exception {
                            List<String> hotChannels = hotChannelListBroadcast.value();
                            String[] fields = v1.split("\t");
                            String channel = fields[4];
                            String userId = fields[2];
                            return hotChannels.contains(channel) && !"null".equals(userId);
                        }
                    });

            // Step 5: map to (userId, channel), i.e. group by user first, not by channel.
            JavaPairRDD<String, String> user2ChannelRDD = filteredRDD.mapToPair(
                    new PairFunction<String, String, String>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public Tuple2<String, String> call(String val) throws Exception {
                            String[] splited = val.split("\t");
                            String userId = splited[2];
                            String channel = splited[4];
                            return new Tuple2<String, String>(userId, channel);
                        }
                    });

            // Step 6: per user, count visits per channel and emit (channel, "userId_count").
            // call() returning Iterable is the Spark 1.x signature; Spark 2.x expects an Iterator.
            JavaPairRDD<String, String> userVisitChannelsRDD =
                    user2ChannelRDD.groupByKey().flatMapToPair(
                            new PairFlatMapFunction<Tuple2<String, Iterable<String>>, String, String>() {
                                private static final long serialVersionUID = 1L;

                                @Override
                                public Iterable<Tuple2<String, String>> call(
                                        Tuple2<String, Iterable<String>> tuple) throws Exception {
                                    String userId = tuple._1;
                                    Iterator<String> iterator = tuple._2.iterator();
                                    Map<String, Integer> channelMap = new HashMap<>();
                                    while (iterator.hasNext()) {
                                        String channel = iterator.next();
                                        Integer count = channelMap.get(channel);
                                        channelMap.put(channel, count == null ? 1 : count + 1);
                                    }

                                    List<Tuple2<String, String>> list = new ArrayList<>();
                                    for (String channel : channelMap.keySet()) {
                                        Integer channelNum = channelMap.get(channel);
                                        list.add(new Tuple2<String, String>(channel,
                                                userId + "_" + channelNum));
                                    }
                                    return list;
                                }
                            });

            // Step 7: per channel, sort the pre-aggregated user counts and print the top 3.
            userVisitChannelsRDD.groupByKey().foreach(
                    new VoidFunction<Tuple2<String, Iterable<String>>>() {
                        private static final long serialVersionUID = 1L;

                        @Override
                        public void call(Tuple2<String, Iterable<String>> tuple) throws Exception {
                            String channel = tuple._1;
                            Iterator<String> iterator = tuple._2.iterator();
                            List<SortObj> list = new ArrayList<>();
                            while (iterator.hasNext()) {
                                String ucs = iterator.next();
                                // Split on the last underscore so user ids containing "_" still parse.
                                int idx = ucs.lastIndexOf('_');
                                String userId = ucs.substring(0, idx);
                                Integer num = Integer.valueOf(ucs.substring(idx + 1));
                                list.add(new SortObj(userId, num));
                            }
                            Collections.sort(list, new Comparator<SortObj>() {
                                @Override
                                public int compare(SortObj o1, SortObj o2) {
                                    return Integer.compare(o2.getValue(), o1.getValue());
                                }
                            });

                            System.out.println("HOT_CHANNEL:" + channel);
                            // Guard against channels with fewer than 3 users.
                            for (int i = 0; i < Math.min(3, list.size()); i++) {
                                SortObj sortObj = list.get(i);
                                System.out.println(sortObj.getKey() + "===" + sortObj.getValue());
                            }
                        }
                    });
        }
    }
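To see why Method 2 groups by user first, here is the same data flow on in-memory data in plain Java. It is a rough sketch, not the Spark job itself: the class name `HotChannelTopUsersDemo`, the `addVisits` helper and the sample visits are invented, and the input is assumed to be already filtered down to (userId, channel) pairs for hot channels.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class HotChannelTopUsersDemo {

    // visits: each element is {userId, channel}. Returns channel -> top-n "userId=count".
    public static Map<String, List<String>> topUsersPerChannel(List<String[]> visits, int n) {
        // Stage 1 (group by user): userId -> (channel -> visit count).
        Map<String, Map<String, Integer>> perUser = new HashMap<>();
        for (String[] v : visits) {
            perUser.computeIfAbsent(v[0], k -> new HashMap<>()).merge(v[1], 1, Integer::sum);
        }
        // Stage 2 (regroup by channel): each user contributes one (userId, count) entry per channel.
        Map<String, List<Map.Entry<String, Integer>>> perChannel = new TreeMap<>();
        for (Map.Entry<String, Map<String, Integer>> u : perUser.entrySet()) {
            for (Map.Entry<String, Integer> c : u.getValue().entrySet()) {
                perChannel.computeIfAbsent(c.getKey(), k -> new ArrayList<>())
                        .add(new AbstractMap.SimpleEntry<>(u.getKey(), c.getValue()));
            }
        }
        // Stage 3: sort each channel's users by count, descending, and keep at most n.
        Map<String, List<String>> result = new TreeMap<>();
        for (Map.Entry<String, List<Map.Entry<String, Integer>>> e : perChannel.entrySet()) {
            List<Map.Entry<String, Integer>> users = e.getValue();
            users.sort((a, b) -> Integer.compare(b.getValue(), a.getValue()));
            List<String> top = new ArrayList<>();
            for (Map.Entry<String, Integer> u : users.subList(0, Math.min(n, users.size()))) {
                top.add(u.getKey() + "=" + u.getValue());
            }
            result.put(e.getKey(), top);
        }
        return result;
    }

    // Illustrative helper: appends the same (userId, channel) visit several times.
    public static void addVisits(List<String[]> visits, String userId, String channel, int times) {
        for (int i = 0; i < times; i++) {
            visits.add(new String[] {userId, channel});
        }
    }

    public static void main(String[] args) {
        List<String[]> visits = new ArrayList<>();
        addVisits(visits, "u1", "c1", 3);
        addVisits(visits, "u2", "c1", 1);
        addVisits(visits, "u3", "c1", 2);
        addVisits(visits, "u4", "c1", 4);
        System.out.println(topUsersPerChannel(visits, 3)); // prints {c1=[u4=4, u1=3, u3=2]}
    }
}
```

The point of the extra stage is that the per-channel group only receives one pre-aggregated entry per user instead of one entry per visit, so the final sort works on far less data than in Method 1. Users with equal counts may come out in any order.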
