Study Notes (7): Logistic Regression

Contents

1.Loss function for Logistic Regression

2.Regularization in Logistic Regression

Calculating a Probability

Many problems require a probability estimate as output. Logistic regression is an extremely efficient mechanism for calculating probabilities. Practically speaking, you can use the returned probability in either of the following two ways:

"As is"

Converted to a binary category.

Let's consider how we might use the probability "as is." Suppose we create a logistic regression model to predict the probability that a dog will bark during the middle of the night. We'll call that probability:

p(bark | night)

If the logistic regression model predicts a p(bark | night) of 0.05, then over a year, the dog's owners should be startled awake approximately 18 times:

startled = p(bark | night) * nights

18 ~= 0.05 * 365

In many cases, you'll map the logistic regression output into the solution to a binary classification problem, in which the goal is to correctly predict one of two possible labels (e.g., "spam" or "not spam").
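As a minimal sketch of the second use, the probability can be turned into a binary label by comparing it with a cutoff. The cutoff value of 0.5 and the helper name `to_label` are assumptions made for illustration; the notes do not specify either.

```python
def to_label(probability: float, threshold: float = 0.5) -> str:
    """Map a predicted probability to one of two labels.

    The default threshold of 0.5 is an assumption, not a value from the notes.
    """
    return "spam" if probability >= threshold else "not spam"


# Three hypothetical model outputs for three email messages.
labels = [to_label(p) for p in (0.05, 0.5, 0.93)]
print(labels)
```

In practice the cutoff is a tunable choice that depends on how costly each kind of mistake is.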

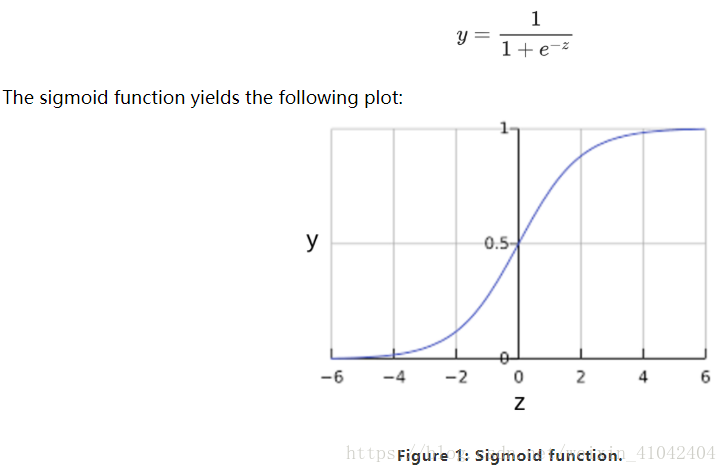

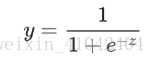

You might be wondering how a logistic regression model can ensure output that always falls between 0 and 1. As it happens, a sigmoid function produces output having those same characteristics:

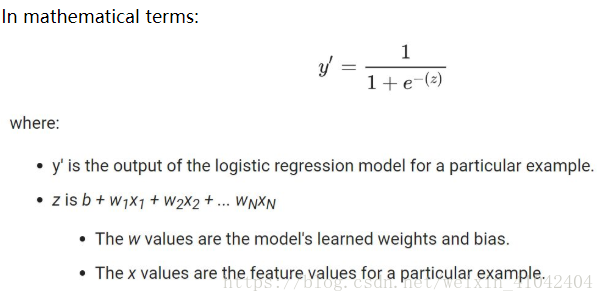

If z represents the output of the linear layer of a model trained with logistic regression, then sigmoid(z) will yield a value (a probability) between 0 and 1.
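A short sketch of the sigmoid, assuming the usual definition 1 / (1 + e^(-z)), shows how any real-valued z is squashed toward 0 or 1. The two-branch form is only there to avoid overflow for large negative z; it computes the same function.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # safe: z is negative, so exp(z) is at most 1
    return ez / (1.0 + ez)


for z in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"z = {z:6.1f}  sigmoid(z) = {sigmoid(z):.5f}")
```

Large negative inputs map close to 0, large positive inputs map close to 1, and z = 0 maps to exactly 0.5.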

Note that z is also referred to as the log-odds because the inverse of the sigmoid states that z can be defined as the log of the probability of the "1" label (e.g., "dog barks") divided by the probability of the "0" label (e.g., "dog doesn't bark"):

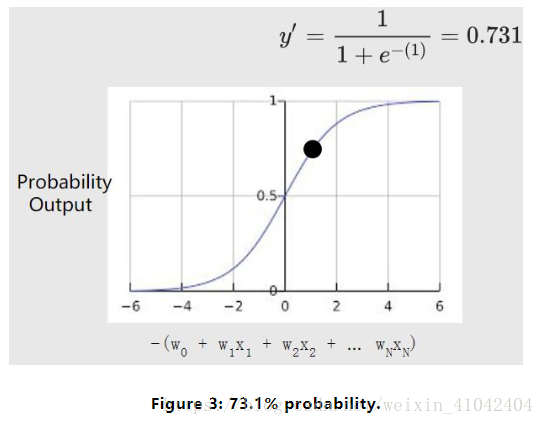

Sample logistic regression inference calculation

Suppose we had a logistic regression model with three features that learned the following bias and weights:

b = 1, w1 = 2, w2 = -1, w3 = 5

Further suppose the following feature values for a given example:

x1 = 0, x2 = 10, x3 = 2

Therefore, the log-odds:

b + w1x1 + w2x2 + w3x3

will be:

(1) + (2)(0) + (-1)(10) + (5)(2) = 1

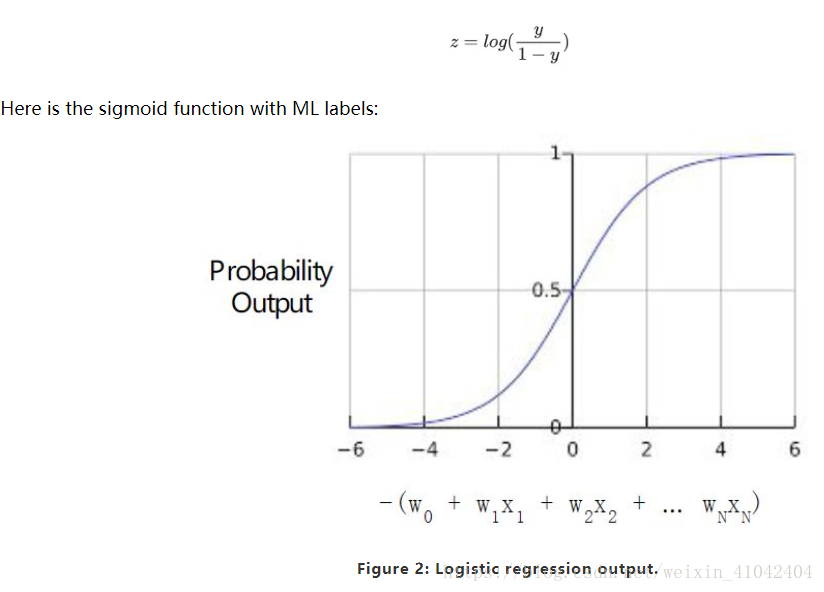

Consequently, the logistic regression prediction for this particular example will be 0.731:

sigmoid(1) = 1 / (1 + e^(-1)) ~= 0.731
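The sample calculation can be reproduced in a few lines. This is a sketch using the bias, weights, and feature values given above; the variable names are chosen here for illustration.

```python
import math

# Learned parameters and feature values from the example above.
bias = 1.0
weights = [2.0, -1.0, 5.0]
features = [0.0, 10.0, 2.0]

# Log-odds: b + w1*x1 + w2*x2 + w3*x3
z = bias + sum(w * x for w, x in zip(weights, features))

# Apply the sigmoid to turn the log-odds into a probability.
prediction = 1.0 / (1.0 + math.exp(-z))

print(f"log-odds z = {z}")
print(f"prediction = {prediction:.3f}")
```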

Model Training

1.Loss function for Logistic Regression

The loss function for linear regression is squared loss.

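The loss function for logistic regression is Log Loss, which is defined as follows:

Log Loss = sum over all (x, y) in D of [ -y log(y') - (1 - y) log(1 - y') ]

where:

(x, y) in D is the data set containing many labeled examples, which are (x, y) pairs.

y is the label in a labeled example. Since this is logistic regression, every value of y must be either 0 or 1.

y' is the predicted value (somewhere between 0 and 1), given the set of features in x.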

The equation for Log Loss is closely related to Shannon's Entropy measure from Information Theory.

It is also the negative logarithm of the likelihood function, assuming a Bernoulli distribution of y.

Indeed, minimizing the loss function yields a maximum likelihood estimate.
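A minimal sketch of Log Loss, assuming the standard summed form in which each example contributes -y log(y') - (1 - y) log(1 - y'). The clipping constant `eps` is an assumption added here to avoid log(0); the sample labels and predictions are made up for illustration. The last lines check the claim that Log Loss equals the negative log of the Bernoulli likelihood.

```python
import math


def log_loss(labels, predictions, eps=1e-15):
    """Summed Log Loss over (label, prediction) pairs."""
    total = 0.0
    for y, p in zip(labels, predictions):
        p = min(max(p, eps), 1.0 - eps)  # keep p away from exactly 0 or 1
        total += -y * math.log(p) - (1 - y) * math.log(1.0 - p)
    return total


labels = [1, 0, 1]
good = [0.9, 0.1, 0.8]  # confident and mostly right
bad = [0.1, 0.9, 0.2]   # confident and wrong

print(f"good predictions: {log_loss(labels, good):.4f}")
print(f"bad predictions:  {log_loss(labels, bad):.4f}")

# Bernoulli likelihood of the labels under the "good" predictions.
likelihood = 1.0
for y, p in zip(labels, good):
    likelihood *= p if y == 1 else (1.0 - p)
print(f"-log(likelihood): {-math.log(likelihood):.4f}")
```

Because the two quantities agree, driving Log Loss down is the same as driving the likelihood up.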

2.Regularization in Logistic Regression

Regularization is extremely important in logistic regression modeling. Without regularization, the asymptotic nature of logistic regression would keep driving loss towards 0 in high dimensions. Consequently, most logistic regression models use one of the following strategies to dampen model complexity:

L2 regularization.

Early stopping, that is, limiting the number of training steps or the learning rate.

L1 regularization.

Imagine that you assign a unique id to each example, and map each id to its own feature. If you don't specify a regularization function, the model will become completely overfit. That's because the model would try to drive loss to zero on all examples and never get there, driving the weights for each indicator feature to +infinity or -infinity.

This can happen in high dimensional data with feature crosses, when there's a huge mass of rare crosses that happen only on one example each.

Fortunately, using L2 or early stopping will prevent this problem.
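The unique-id scenario can be sketched with a toy model that has one indicator feature, and therefore one weight, per example. Everything here is an illustrative assumption: the four labels, the learning rate of 1.0, the L2 strength of 0.1, and the plain gradient-descent loop. Since only feature i is active on example i, the prediction for that example is simply sigmoid(w_i).

```python
import math


def sigmoid(z):
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train(labels, steps, l2=0.0, learning_rate=1.0):
    """Gradient descent on Log Loss with one indicator weight per example."""
    weights = [0.0] * len(labels)
    for _ in range(steps):
        for i, y in enumerate(labels):
            # Log Loss gradient for w_i plus the gradient of (l2 / 2) * w_i^2.
            grad = (sigmoid(weights[i]) - y) + l2 * weights[i]
            weights[i] -= learning_rate * grad
    return weights


labels = [1, 0, 1, 0]
results = {}
for steps in (100, 10_000):
    plain = max(abs(w) for w in train(labels, steps))
    regularized = max(abs(w) for w in train(labels, steps, l2=0.1))
    results[steps] = (plain, regularized)
    print(f"steps={steps:6d}  max|w| no reg = {plain:.3f}  with L2 = {regularized:.3f}")
```

Without a penalty the largest weight keeps growing as training continues, while the L2 run settles at a fixed value. Stopping after fewer steps also keeps the unregularized weights smaller, which is the idea behind early stopping.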

Summary

Logistic regression models generate probabilities.

Log Loss is the loss function for logistic regression.

Logistic regression is widely used by many practitioners.

Glossary

1.sigmoid function:

A function that maps logistic or multinomial regression output (log odds) to probabilities, returning a value between 0 and 1:

y' = 1 / (1 + e^(-z))

where z in logistic regression problems is simply:

z = b + w1x1 + w2x2 + … + wnxn

In other words, the sigmoid function converts z into a probability between 0 and 1.

In some neural networks, the sigmoid function acts as the activation function.

2.binary classification:

A type of classification task that outputs one of two mutually exclusive classes.

For example, a machine learning model that evaluates email messages and outputs either "spam" or "not spam" is a binary classifier.

3.logistic regression:

A model that generates a probability for each possible discrete label value in classification problems by applying a sigmoid function to a linear prediction.

Although logistic regression is often used in binary classification problems, it can also be used in multi-class classification problems (where it is called multi-class logistic regression or multinomial regression).

4.Log Loss:

The loss function used in binary logistic regression.

5.log-odds:

The logarithm of the odds of some event.

If the event refers to a binary probability, then odds refers to the ratio of the probability of success (p) to the probability of failure (1-p).

For example, suppose that a given event has a 90% probability of success and a 10% probability of failure. In this case, odds is calculated as follows:

odds=p/(1-p)=.9/.1=9

The log-odds is simply the logarithm of the odds.

By convention, "logarithm" refers to natural logarithm, but logarithm could actually be any base greater than 1.

Sticking to convention, the log-odds of our example is therefore:

log-odds = ln(9) ~= 2.2

The log-odds function is the inverse of the sigmoid function.
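The 90% example and the inverse relationship can be checked with a short sketch. The function names `logit` and `sigmoid` are chosen here for illustration.

```python
import math


def logit(p: float) -> float:
    """Log-odds of a probability p, using the natural logarithm."""
    return math.log(p / (1.0 - p))


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


p = 0.9
odds = p / (1.0 - p)
log_odds = logit(p)

print(f"odds      = {odds:.1f}")
print(f"log-odds  = {log_odds:.1f}")
# Applying the sigmoid to the log-odds recovers the original probability.
print(f"recovered = {sigmoid(log_odds):.1f}")
```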
