ElasticSearch(三):通分词器(Analyzer)进行分词(Analysis)

ElasticSearch(三):通过分词器(Analyzer)进行分词(Analysis)

## Analysis与Analyzer

* Analysis文本分析就是把全文转换成一系列单词的过程,也叫做分词。

* Analysis是通过Analyzer来实现的,它是专门处理分词的组件。可以使用ElasticSearch内置的分词器,也可以按需定制化分词器。

* 除了在数据写入时用分词器转换词条,在匹配查询语句时,也需要用相同的分词器对查询语句进行分析。

Analyzer的组成

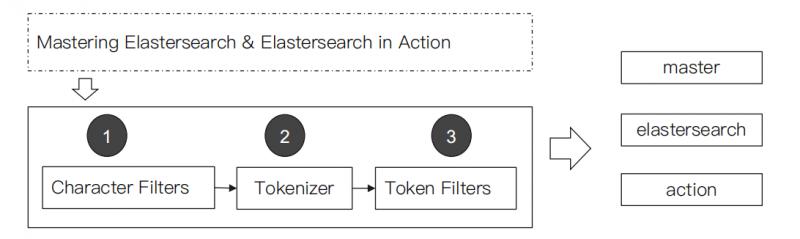

分词器是专门处理分词的组件,Analyzer由三个部分组成:

- Character Filters:主要作用是对原始文本进行处理,例如去除HTML标签。

- Tokenizer:主要作用是按照规则来切分单词。

- Token Filter:将切分好的单词进行加工,例如:小写转换、删除停用词、增加同义词。

ElasticSearch的内置分词器

- Standard Analyzer:默认分词器,按词切分,小写处理。

#standard

GET _analyze

{

"analyzer": "standard",

"text": "2 running Quick brown-foxes leap over lazy dogs in the summer evening."

}

#分词结果:Quick小写处理, brown-foxes被切分为 brown,foxes

{

"tokens" : [

{

"token" : "2",

"start_offset" : 0,

"end_offset" : 1,

"type" : "<NUM>",

"position" : 0

},

{

"token" : "running",

"start_offset" : 2,

"end_offset" : 9,

"type" : "<ALPHANUM>",

"position" : 1

},

{

"token" : "quick",#小写处理

"start_offset" : 10,

"end_offset" : 15,

"type" : "<ALPHANUM>",

"position" : 2

},

{

"token" : "brown",

"start_offset" : 16,

"end_offset" : 21,

"type" : "<ALPHANUM>",

"position" : 3

},

{

"token" : "foxes",

"start_offset" : 22,

"end_offset" : 27,

"type" : "<ALPHANUM>",

"position" : 4

},

{

"token" : "leap",

"start_offset" : 28,

"end_offset" : 32,

"type" : "<ALPHANUM>",

"position" : 5

},

{

"token" : "over",

"start_offset" : 33,

"end_offset" : 37,

"type" : "<ALPHANUM>",

"position" : 6

},

{

"token" : "lazy",

"start_offset" : 38,

"end_offset" : 42,

"type" : "<ALPHANUM>",

"position" : 7

},

{

"token" : "dogs",

"start_offset" : 43,

"end_offset" : 47,

"type" : "<ALPHANUM>",

"position" : 8

},

{

"token" : "in",

"start_offset" : 48,

"end_offset" : 50,

"type" : "<ALPHANUM>",

"position" : 9

},

{

"token" : "the",

"start_offset" : 51,

"end_offset" : 54,

"type" : "<ALPHANUM>",

"position" : 10

},

{

"token" : "summer",

"start_offset" : 55,

"end_offset" : 61,

"type" : "<ALPHANUM>",

"position" : 11

},

{

"token" : "evening",

"start_offset" : 62,

"end_offset" : 69,

"type" : "<ALPHANUM>",

"position" : 12

}

]

}

- Simple Analyzer:按照非字母切分(符号被过滤),小写处理。

#simpe

GET _analyze

{

"analyzer": "simple",

"text": "2 running Quick brown-foxes leap over lazy dogs in the summer evening."

}

#分词结果:数字2被过滤,Quick小写处理, brown-foxes被切分为 brown,foxes

{

"tokens" : [

{

"token" : "running",

"start_offset" : 2,

"end_offset" : 9,

"type" : "word",

"position" : 0

},

{

"token" : "quick",

"start_offset" : 10,

"end_offset" : 15,

"type" : "word",

"position" : 1

},

{

"token" : "brown",

"start_offset" : 16,

"end_offset" : 21,

"type" : "word",

"position" : 2

},

{

"token" : "foxes",

"start_offset" : 22,

"end_offset" : 27,

"type" : "word",

"position" : 3

},

{

"token" : "leap",

"start_offset" : 28,

"end_offset" : 32,

"type" : "word",

"position" : 4

},

{

"token" : "over",

"start_offset" : 33,

"end_offset" : 37,

"type" : "word",

"position" : 5

},

{

"token" : "lazy",

"start_offset" : 38,

"end_offset" : 42,

"type" : "word",

"position" : 6

},

{

"token" : "dogs",

"start_offset" : 43,

"end_offset" : 47,

"type" : "word",

"position" : 7

},

{

"token" : "in",

"start_offset" : 48,

"end_offset" : 50,

"type" : "word",

"position" : 8

},

{

"token" : "the",

"start_offset" : 51,

"end_offset" : 54,

"type" : "word",

"position" : 9

},

{

"token" : "summer",

"start_offset" : 55,

"end_offset" : 61,

"type" : "word",

"position" : 10

},

{

"token" : "evening",

"start_offset" : 62,

"end_offset" : 69,

"type" : "word",

"position" : 11

}

]

}

- Stop Analyzer:停用词过滤(is/a/the),小写处理。

#stop

GET _analyze

{

"analyzer": "stop",

"text": "2 running Quick brown-foxes leap over lazy dogs in the summer evening."

}

#分词结果:2,in,the被过滤,Quick小写处理, brown-foxes被切分为 brown,foxes

{

"tokens" : [

{

"token" : "running",

"start_offset" : 2,

"end_offset" : 9,

"type" : "word",

"position" : 0

},

{

"token" : "quick",

"start_offset" : 10,

"end_offset" : 15,

"type" : "word",

"position" : 1

},

{

"token" : "brown",

"start_offset" : 16,

"end_offset" : 21,

"type" : "word",

"position" : 2

},

{

"token" : "foxes",

"start_offset" : 22,

"end_offset" : 27,

"type" : "word",

"position" : 3

},

{

"token" : "leap",

"start_offset" : 28,

"end_offset" : 32,

"type" : "word",

"position" : 4

},

{

"token" : "over",

"start_offset" : 33,

"end_offset" : 37,

"type" : "word",

"position" : 5

},

{

"token" : "lazy",

"start_offset" : 38,

"end_offset" : 42,

"type" : "word",

"position" : 6

},

{

"token" : "dogs",

"start_offset" : 43,

"end_offset" : 47,

"type" : "word",

"position" : 7

},

{

"token" : "summer",

"start_offset" : 55,

"end_offset" : 61,

"type" : "word",

"position" : 10

},

{

"token" : "evening",

"start_offset" : 62,

"end_offset" : 69,

"type" : "word",

"position" : 11

}

]

}

- WhiteSpace Analyzer:按照空格切分,不转小写。

#whitespace

GET _analyze

{

"analyzer": "whitespace",

"text": "2 running Quick brown-foxes leap over lazy dogs in the summer evening."

}

#分词结果:按空格切分

{

"tokens" : [

{

"token" : "2",

"start_offset" : 0,

"end_offset" : 1,

"type" : "word",

"position" : 0

},

{

"token" : "running",

"start_offset" : 2,

"end_offset" : 9,

"type" : "word",

"position" : 1

},

{

"token" : "Quick",

"start_offset" : 10,

"end_offset" : 15,

"type" : "word",

"position" : 2

},

{

"token" : "brown-foxes",

"start_offset" : 16,

"end_offset" : 27,

"type" : "word",

"position" : 3

},

{

"token" : "leap",

"start_offset" : 28,

"end_offset" : 32,

"type" : "word",

"position" : 4

},

{

"token" : "over",

"start_offset" : 33,

"end_offset" : 37,

"type" : "word",

"position" : 5

},

{

"token" : "lazy",

"start_offset" : 38,

"end_offset" : 42,

"type" : "word",

"position" : 6

},

{

"token" : "dogs",

"start_offset" : 43,

"end_offset" : 47,

"type" : "word",

"position" : 7

},

{

"token" : "in",

"start_offset" : 48,

"end_offset" : 50,

"type" : "word",

"position" : 8

},

{

"token" : "the",

"start_offset" : 51,

"end_offset" : 54,

"type" : "word",

"position" : 9

},

{

"token" : "summer",

"start_offset" : 55,

"end_offset" : 61,

"type" : "word",

"position" : 10

},

{

"token" : "evening.",

"start_offset" : 62,

"end_offset" : 70,

"type" : "word",

"position" : 11

}

]

}

- Keyword Analyzer:不分词,直接将输入当作输出。

#keyword

GET _analyze

{

"analyzer": "keyword",

"text": "2 running Quick brown-foxes leap over lazy dogs in the summer evening."

}

#分词结果:

{

"tokens" : [

{

"token" : "2 running Quick brown-foxes leap over lazy dogs in the summer evening.",

"start_offset" : 0,

"end_offset" : 70,

"type" : "word",

"position" : 0

}

]

}

- Pattern Analyzer:正则表达式分词,默认\W+(非字符分隔)。

#pattern

GET _analyze

{

"analyzer": "pattern",

"text": "2 running Quick brown-foxes leap over lazy dogs in the summer evening."

}

#分词结果:

{

"tokens" : [

{

"token" : "2",

"start_offset" : 0,

"end_offset" : 1,

"type" : "word",

"position" : 0

},

{

"token" : "running",

"start_offset" : 2,

"end_offset" : 9,

"type" : "word",

"position" : 1

},

{

"token" : "quick",

"start_offset" : 10,

"end_offset" : 15,

"type" : "word",

"position" : 2

},

{

"token" : "brown",

"start_offset" : 16,

"end_offset" : 21,

"type" : "word",

"position" : 3

},

{

"token" : "foxes",

"start_offset" : 22,

"end_offset" : 27,

"type" : "word",

"position" : 4

},

{

"token" : "leap",

"start_offset" : 28,

"end_offset" : 32,

"type" : "word",

"position" : 5

},

{

"token" : "over",

"start_offset" : 33,

"end_offset" : 37,

"type" : "word",

"position" : 6

},

{

"token" : "lazy",

"start_offset" : 38,

"end_offset" : 42,

"type" : "word",

"position" : 7

},

{

"token" : "dogs",

"start_offset" : 43,

"end_offset" : 47,

"type" : "word",

"position" : 8

},

{

"token" : "in",

"start_offset" : 48,

"end_offset" : 50,

"type" : "word",

"position" : 9

},

{

"token" : "the",

"start_offset" : 51,

"end_offset" : 54,

"type" : "word",

"position" : 10

},

{

"token" : "summer",

"start_offset" : 55,

"end_offset" : 61,

"type" : "word",

"position" : 11

},

{

"token" : "evening",

"start_offset" : 62,

"end_offset" : 69,

"type" : "word",

"position" : 12

}

]

}

- Language:提供了30多种常见语言的分词器。

#english

GET _analyze

{

"analyzer": "english",

"text": "2 running Quick brown-foxes leap over lazy dogs in the summer evening."

}

#分词结果:running转为run,Quick转为quick,brown-foxes 转为brown、fox,in、the过滤等等

{

"tokens" : [

{

"token" : "2",

"start_offset" : 0,

"end_offset" : 1,

"type" : "<NUM>",

"position" : 0

},

{

"token" : "run",

"start_offset" : 2,

"end_offset" : 9,

"type" : "<ALPHANUM>",

"position" : 1

},

{

"token" : "quick",

"start_offset" : 10,

"end_offset" : 15,

"type" : "<ALPHANUM>",

"position" : 2

},

{

"token" : "brown",

"start_offset" : 16,

"end_offset" : 21,

"type" : "<ALPHANUM>",

"position" : 3

},

{

"token" : "fox",

"start_offset" : 22,

"end_offset" : 27,

"type" : "<ALPHANUM>",

"position" : 4

},

{

"token" : "leap",

"start_offset" : 28,

"end_offset" : 32,

"type" : "<ALPHANUM>",

"position" : 5

},

{

"token" : "over",

"start_offset" : 33,

"end_offset" : 37,

"type" : "<ALPHANUM>",

"position" : 6

},

{

"token" : "lazi",

"start_offset" : 38,

"end_offset" : 42,

"type" : "<ALPHANUM>",

"position" : 7

},

{

"token" : "dog",

"start_offset" : 43,

"end_offset" : 47,

"type" : "<ALPHANUM>",

"position" : 8

},

{

"token" : "summer",

"start_offset" : 55,

"end_offset" : 61,

"type" : "<ALPHANUM>",

"position" : 11

},

{

"token" : "even",

"start_offset" : 62,

"end_offset" : 69,

"type" : "<ALPHANUM>",

"position" : 12

}

]

}

- Custom Analyzer:自定义分词器。

#需要安装analysis-icu插件

POST _analyze

{

"analyzer": "icu_analyzer",

"text": "他说的确实在理”"

}

#返回结果

{

"tokens" : [

{

"token" : "他",

"start_offset" : 0,

"end_offset" : 1,

"type" : "<IDEOGRAPHIC>",

"position" : 0

},

{

"token" : "说的",

"start_offset" : 1,

"end_offset" : 3,

"type" : "<IDEOGRAPHIC>",

"position" : 1

},

{

"token" : "确实",

"start_offset" : 3,

"end_offset" : 5,

"type" : "<IDEOGRAPHIC>",

"position" : 2

},

{

"token" : "在",

"start_offset" : 5,

"end_offset" : 6,

"type" : "<IDEOGRAPHIC>",

"position" : 3

},

{

"token" : "理",

"start_offset" : 6,

"end_offset" : 7,

"type" : "<IDEOGRAPHIC>",

"position" : 4

}

]

}

中文分词比较:

POST _analyze

{

"analyzer": "standard",

"text": "他说的确实在理”"

}

#返回结果

{

"tokens" : [

{

"token" : "他",

"start_offset" : 0,

"end_offset" : 1,

"type" : "<IDEOGRAPHIC>",

"position" : 0

},

{

"token" : "说",

"start_offset" : 1,

"end_offset" : 2,

"type" : "<IDEOGRAPHIC>",

"position" : 1

},

{

"token" : "的",

"start_offset" : 2,

"end_offset" : 3,

"type" : "<IDEOGRAPHIC>",

"position" : 2

},

{

"token" : "确",

"start_offset" : 3,

"end_offset" : 4,

"type" : "<IDEOGRAPHIC>",

"position" : 3

},

{

"token" : "实",

"start_offset" : 4,

"end_offset" : 5,

"type" : "<IDEOGRAPHIC>",

"position" : 4

},

{

"token" : "在",

"start_offset" : 5,

"end_offset" : 6,

"type" : "<IDEOGRAPHIC>",

"position" : 5

},

{

"token" : "理",

"start_offset" : 6,

"end_offset" : 7,

"type" : "<IDEOGRAPHIC>",

"position" : 6

}

]

}

ElasticSearch(三):通分词器(Analyzer)进行分词(Analysis)的更多相关文章

- Elasticsearch(10) --- 内置分词器、中文分词器

Elasticsearch(10) --- 内置分词器.中文分词器 这篇博客主要讲:分词器概念.ES内置分词器.ES中文分词器. 一.分词器概念 1.Analysis 和 Analyzer Analy ...

- ElasticSearch7.3 学习之倒排索引揭秘及初识分词器(Analyzer)

一.倒排索引 1. 构建倒排索引 例如说有下面两个句子doc1,doc2 doc1:I really liked my small dogs, and I think my mom also like ...

- es的分词器analyzer

analyzer 分词器使用的两个情形: 1,Index time analysis. 创建或者更新文档时,会对文档进行分词2,Search time analysis. 查询时,对查询语句 ...

- Lucene.net(4.8.0)+PanGu分词器问题记录一:分词器Analyzer的构造和内部成员ReuseStategy

前言:目前自己在做使用Lucene.net和PanGu分词实现全文检索的工作,不过自己是把别人做好的项目进行迁移.因为项目整体要迁移到ASP.NET Core 2.0版本,而Lucene使用的版本是3 ...

- Lucene.net(4.8.0) 学习问题记录一:分词器Analyzer的构造和内部成员ReuseStategy

前言:目前自己在做使用Lucene.net和PanGu分词实现全文检索的工作,不过自己是把别人做好的项目进行迁移.因为项目整体要迁移到ASP.NET Core 2.0版本,而Lucene使用的版本是3 ...

- Elasticsearch修改分词器以及自定义分词器

Elasticsearch修改分词器以及自定义分词器 参考博客:https://blog.csdn.net/shuimofengyang/article/details/88973597

- 【Lucene3.6.2入门系列】第05节_自定义停用词分词器和同义词分词器

首先是用于显示分词信息的HelloCustomAnalyzer.java package com.jadyer.lucene; import java.io.IOException; import j ...

- Lucene学习-深入Lucene分词器,TokenStream获取分词详细信息

Lucene学习-深入Lucene分词器,TokenStream获取分词详细信息 在此回复牛妞的关于程序中分词器的问题,其实可以直接很简单的在词库中配置就好了,Lucene中分词的所有信息我们都可以从 ...

- 自然语言处理之中文分词器-jieba分词器详解及python实战

(转https://blog.csdn.net/gzmfxy/article/details/78994396) 中文分词是中文文本处理的一个基础步骤,也是中文人机自然语言交互的基础模块,在进行中文自 ...

- 【ELK】【docker】【elasticsearch】2.使用elasticSearch+kibana+logstash+ik分词器+pinyin分词器+繁简体转化分词器 6.5.4 启动 ELK+logstash概念描述

官网地址:https://www.elastic.co/guide/en/elasticsearch/reference/current/docker.html#docker-cli-run-prod ...

随机推荐

- springmvc引入静态资源文件

如果web.xml中配置的DispatcherServlet请求映射为“/”, springmvc将捕获web容器所有的请求,当然也包括对静态资源的请求.springmvc会将他们当成一个普通请求处理 ...

- 面试常考各类排序算法总结.(c#)

前言 面试以及考试过程中必会出现一道排序算法面试题,为了加深对排序算法的理解,在此我对各种排序算法做个总结归纳. 1.冒泡排序算法(BubbleSort) 1.1 算法描述 (1)比较相邻的元素.如果 ...

- 从零开始入门 K8s | Kubernetes 网络概念及策略控制

作者 | 阿里巴巴高级技术专家 叶磊 一.Kubernetes 基本网络模型 本文来介绍一下 Kubernetes 对网络模型的一些想法.大家知道 Kubernetes 对于网络具体实现方案,没有什 ...

- 数据结构4_java---顺序串,字符串匹配算法(BF算法,KMP算法)

1.顺序串 实现的操作有: 构造串 判断空串 返回串的长度 返回位序号为i的字符 将串的长度扩充为newCapacity 返回从begin到end-1的子串 在第i个字符之前插入字串str 删除子串 ...

- MyBatis 开发手册

这一遍看Mybatis的原因是怀念一下去年的 10月24号我写自己第一个项目时使用全配置文件版本的MyBatis,那时我们三个人刚刚大二,说实话,当时还是觉得MyBatis挺难玩的,但是今年再看最新版 ...

- go-select

select语句属于条件分支流程控制方法,不过它只能用于通道. select语句中的case关键字只能后跟用于通道的发送操作的表达式以及接收操作的表达式或语句. ch1 := make(chan ) ...

- Python3+RobotFramework+pycharm环境搭建

我的环境为 python3.6.5+pycharm 2019.1.3+robotframework3.1.2 1.安装python3.x 略 之后在cmd下执行:pip install robot ...

- 百万年薪python之路 -- 迭代器

3.1 可迭代对象 3.1.1 可迭代对象定义 **在python中,但凡内部含有 _ _ iter_ _方法的对象,都是可迭代对象**. 3.1.2 查看对象内部方法 该对象内部含有什么方法除了看源 ...

- Swagger--解决日期格式显示为Unix时间戳格式 UTC格式

在swagger UI模型架构上,字段日期显示为“日期”:“2018-10-15T09:10:47.507Z”但我需要将其作为“日期”:“2018-9-26 12:18:48”. tips:以下这两种 ...

- 包管理-rpm

rpm包管理 程序源代码---->预处理---->编译---->汇编---->链接 数据处理 转为汇编代码 进行汇编 引入库文件 静态编译:. ...