Data Deduplication Workflow Part 1

Data deduplication provides a new approach to store data and eliminate duplicate data in chunk level.

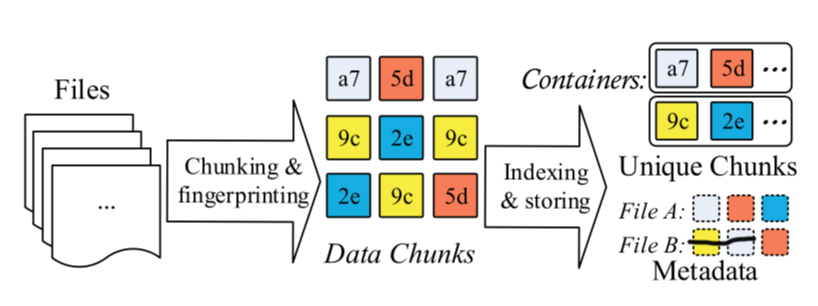

A typical data deduplication workflow can be explained like this.

File metadata describes how to restore the file use unique chunks.

Chunk level deduplication approach has five key stages.

- Chunking

- Fingerprinting

- Indexing fringerprints

- Further compression

- Storage management

Different Stage has its own challenges, which may become the bottleneck for restoring file or compressing files.

Chunking

At the chunking stage, we should split the data stream into chunks, which can be presented at the fingerprints.

The different method splitting data streaming has different result and different efficiency.

The splitting method can be divided into two categories:

Fixed Size Chunking, which just split the data stream into fixed size chunk, simply and easily .

Content Defined Chunking, which split the data into variable size chunk, depending on the content.

Although fixed size chunking is simple and quick, the biggest problem is Boundary Shift. Boundary Shift Problem is when little part of data stream is modified, all the subsequent chunks will be changed, because of the boundary is shifted.

Content defined chunking uses a sliding-window technique on the content of data stream and computes a hash value of the window. If the hash value is satisfied some predefined conditions, it will generate a chunk.

Chunk's size can also be optimized. If we use CDC (content defined chunking), the size of chunking can not be in charge. On some extremely condition, it will generate too large or too small chunking. If a chunking is too large, the compression ratio will decrease. Because the large chunk can hide duplicates from being detected. If a chunking is too small, the file metadata will increase. What's more, it can cause indexing fingerprints problem. So we can define the max and min chunk size.

Chunking still has some problems such as how to detect the deduplicate accurately, how to accelerate computing time cost.

Data Deduplication Workflow Part 1的更多相关文章

- Data De-duplication

偶尔看到data deduplication的博客,还挺有意思,记录之 http://blog.csdn.net/liuben/article/details/5829083?reload http: ...

- 大数据去重(data deduplication)方案

数据去重(data deduplication)是大数据领域司空见惯的问题了.除了统计UV等传统用法之外,去重的意义更在于消除不可靠数据源产生的脏数据--即重复上报数据或重复投递数据的影响,使计算产生 ...

- Note: Transparent data deduplication in the cloud

What Design and implement ClearBox which allows a storage service provider to transparently attest t ...

- 论文阅读 Prefetch-aware fingerprint cache management for data deduplication systems

论文链接 https://link.springer.com/article/10.1007/s11704-017-7119-0 这篇论文试图解决的问题是在cache 环节之前,prefetch-ca ...

- Data Deduplication in Windows Server 2012

https://blogs.technet.microsoft.com/filecab/2012/05/20/introduction-to-data-deduplication-in-windows ...

- Note: File Recipe Compression in Data Deduplication Systems

Zero-Chunk Suppression 检测全0数据块,将其用预先计算的自身的指纹信息代替. Detect zero chunks and replace them with a special ...

- SharePoint 2013 create workflow by SharePoint Designer 2013

这篇文章主要基于上一篇http://www.cnblogs.com/qindy/p/6242714.html的基础上,create a sample workflow by SharePoint De ...

- Seven Python Tools All Data Scientists Should Know How to Use

Seven Python Tools All Data Scientists Should Know How to Use If you’re an aspiring data scientist, ...

- 重复数据删除(De-duplication)技术研究(SourceForge上发布dedup util)

dedup util是一款开源的轻量级文件打包工具,它基于块级的重复数据删除技术,可以有效缩减数据容量,节省用户存储空间.目前已经在Sourceforge上创建项目,并且源码正在不断更新中.该工具生成 ...

随机推荐

- 多线程基础(主要内容转载于https://segmentfault.com/a/1190000014428190)

进程作为资源分配的基本单位 线程作为资源调度的基本单位,是程序的执行单元,执行路径(单线程:一条执行路径,多线程:多条执行路径).是程序使用CPU的最基本单位. 线程有3个基本状态: 执行.就绪.阻塞 ...

- .netcore 开发的 iNeuOS 物联网平台部署在 Ubuntu 操作系统,无缝跨平台。助力《2019 中国.NET 开发者峰会》。

2019 中国.NET 开发者峰会正式启动 目 录 1. 概述... 2 2. 准备运行程序包... 2 3. 安装.netcore. 3 4. 安 ...

- Switch-case语句的应用

/** switch语句有关规则 • switch(表达式)中表达式的值必须是下述几种类型之一:byte,short, char,int,枚举 (jdk 5.0),String (jdk 7.0 ...

- Java中获取刚插入数据库中的数据Id(主键,自动增长)

public int insert(String cName, String ebrand, String cGender) { String sql = "insert into Clot ...

- 阿里terway源码分析

背景 随着公司业务的发展,底层容器环境也需要在各个区域部署,实现多云架构, 使用各个云厂商提供的CNI插件是k8s多云环境下网络架构的一种高效的解法.我们在阿里云的方案中,便用到了阿里云提供的CNI插 ...

- Fiddler的安装

相关下载软件(链接:https://pan.baidu.com/s/1HFdFNph6FGHOFtZq09ldpg 提取码:3u3l ) fiddler是基于.net环境的,所以需要先安装.net 安 ...

- Vuex的简单应用

### 源码地址 https://github.com/moor-mupan/mine-summary/tree/master/前端知识库/Vuex_demo/demo 1. 什么是Vuex? Vue ...

- 第九周课程总结&实验报告(七)

实验任务详情: 完成火车站售票程序的模拟. 要求: (1)总票数1000张: (2)10个窗口同时开始卖票: (3)卖票过程延时1秒钟: (4)不能出现一票多卖或卖出负数号票的情况. 实验代码 pac ...

- 高性能封装检测浏览器支持css3属性函数

css3出来已经很久了,现在来谈判断浏览器是否支持某个css3的属性虽说有点过时了,但是还是可以谈谈的,然后,此篇主要谈的不是判断是否支持,而是怎么封装更好,为什么这么封装,欢迎吐槽. 入题,判断浏览 ...

- EXC_BAD_ACCESS的本质详解以及僵尸模式调试原理

原文:What Is EXC_BAD_ACCESS and How to Debug It 有时候,你会遇到由EXC_BAD_ACCESS造成的崩溃. 这篇文章会告诉你什么是EXC_BAD_ACCES ...