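Chapter 11: Python Network Programming Basics (Part 3)

Topics in This Lesson

- Creating and using threads

- Introduction to message queues

- Working with memcached and redis from Python

- This week's assignment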

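Introduction to Message Queues

A queue lives in memory. Once the process that created it exits, the queue is destroyed and any messages still in it are lost.

- First-in-first-out (FIFO) queue: think of an elevator with two doors, where people enter through one door and leave through the other, so whoever enters first leaves first.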

    class Queue:
        '''Create a queue object with a given maximum size.

        If maxsize is <= 0, the queue size is infinite.
        '''

        def __init__(self, maxsize=0):
            self.maxsize = maxsize
            self._init(maxsize)

            # mutex must be held whenever the queue is mutating.  All methods
            # that acquire mutex must release it before returning.  mutex
            # is shared between the three conditions, so acquiring and
            # releasing the conditions also acquires and releases mutex.
            self.mutex = threading.Lock()

            # Notify not_empty whenever an item is added to the queue; a
            # thread waiting to get is notified then.
            self.not_empty = threading.Condition(self.mutex)

            # Notify not_full whenever an item is removed from the queue;
            # a thread waiting to put is notified then.
            self.not_full = threading.Condition(self.mutex)

            # Notify all_tasks_done whenever the number of unfinished tasks
            # drops to zero; thread waiting to join() is notified to resume
            self.all_tasks_done = threading.Condition(self.mutex)
            self.unfinished_tasks = 0

        def task_done(self):
            '''Indicate that a formerly enqueued task is complete.

            Used by Queue consumer threads.  For each get() used to fetch a task,
            a subsequent call to task_done() tells the queue that the processing
            on the task is complete.

            If a join() is currently blocking, it will resume when all items
            have been processed (meaning that a task_done() call was received
            for every item that had been put() into the queue).

            Raises a ValueError if called more times than there were items
            placed in the queue.
            '''
            with self.all_tasks_done:
                unfinished = self.unfinished_tasks - 1
                if unfinished <= 0:
                    if unfinished < 0:
                        raise ValueError('task_done() called too many times')
                    self.all_tasks_done.notify_all()
                self.unfinished_tasks = unfinished

        def join(self):
            '''Blocks until all items in the Queue have been gotten and processed.

            The count of unfinished tasks goes up whenever an item is added to the
            queue. The count goes down whenever a consumer thread calls task_done()
            to indicate the item was retrieved and all work on it is complete.

            When the count of unfinished tasks drops to zero, join() unblocks.
            '''
            with self.all_tasks_done:
                while self.unfinished_tasks:
                    self.all_tasks_done.wait()

        def qsize(self):
            '''Return the approximate size of the queue (not reliable!).'''
            with self.mutex:
                return self._qsize()

        def empty(self):
            '''Return True if the queue is empty, False otherwise (not reliable!).

            This method is likely to be removed at some point.  Use qsize() == 0
            as a direct substitute, but be aware that either approach risks a race
            condition where a queue can grow before the result of empty() or
            qsize() can be used.

            To create code that needs to wait for all queued tasks to be
            completed, the preferred technique is to use the join() method.
            '''
            with self.mutex:
                return not self._qsize()

        def full(self):
            '''Return True if the queue is full, False otherwise (not reliable!).

            This method is likely to be removed at some point.  Use qsize() >= n
            as a direct substitute, but be aware that either approach risks a race
            condition where a queue can shrink before the result of full() or
            qsize() can be used.
            '''
            with self.mutex:
                return 0 < self.maxsize <= self._qsize()

        def put(self, item, block=True, timeout=None):
            '''Put an item into the queue.

            If optional args 'block' is true and 'timeout' is None (the default),
            block if necessary until a free slot is available. If 'timeout' is
            a non-negative number, it blocks at most 'timeout' seconds and raises
            the Full exception if no free slot was available within that time.
            Otherwise ('block' is false), put an item on the queue if a free slot
            is immediately available, else raise the Full exception ('timeout'
            is ignored in that case).
            '''
            with self.not_full:
                if self.maxsize > 0:
                    if not block:
                        if self._qsize() >= self.maxsize:
                            raise Full
                    elif timeout is None:
                        while self._qsize() >= self.maxsize:
                            self.not_full.wait()
                    elif timeout < 0:
                        raise ValueError("'timeout' must be a non-negative number")
                    else:
                        endtime = time() + timeout
                        while self._qsize() >= self.maxsize:
                            remaining = endtime - time()
                            if remaining <= 0.0:
                                raise Full
                            self.not_full.wait(remaining)
                self._put(item)
                self.unfinished_tasks += 1
                self.not_empty.notify()

        def get(self, block=True, timeout=None):
            '''Remove and return an item from the queue.

            If optional args 'block' is true and 'timeout' is None (the default),
            block if necessary until an item is available. If 'timeout' is
            a non-negative number, it blocks at most 'timeout' seconds and raises
            the Empty exception if no item was available within that time.
            Otherwise ('block' is false), return an item if one is immediately
            available, else raise the Empty exception ('timeout' is ignored
            in that case).
            '''
            with self.not_empty:
                if not block:
                    if not self._qsize():
                        raise Empty
                elif timeout is None:
                    while not self._qsize():
                        self.not_empty.wait()
                elif timeout < 0:
                    raise ValueError("'timeout' must be a non-negative number")
                else:
                    endtime = time() + timeout
                    while not self._qsize():
                        remaining = endtime - time()
                        if remaining <= 0.0:
                            raise Empty
                        self.not_empty.wait(remaining)
                item = self._get()
                self.not_full.notify()
                return item

        def put_nowait(self, item):
            '''Put an item into the queue without blocking.

            Only enqueue the item if a free slot is immediately available.
            Otherwise raise the Full exception.
            '''
            return self.put(item, block=False)

        def get_nowait(self):
            '''Remove and return an item from the queue without blocking.

            Only get an item if one is immediately available. Otherwise
            raise the Empty exception.
            '''
            return self.get(block=False)

        # Override these methods to implement other queue organizations
        # (e.g. stack or priority queue).
        # These will only be called with appropriate locks held

        # Initialize the queue representation
        def _init(self, maxsize):
            self.queue = deque()

        def _qsize(self):
            return len(self.queue)

        # Put a new item in the queue
        def _put(self, item):
            self.queue.append(item)

        # Get an item from the queue
        def _get(self):
            return self.queue.popleft()

Source code of class Queue

    import queue

    q = queue.Queue()
    q.put(123)
    q.put(456)
    print(q.get())  # 123: the first item put in is the first one out

Queue() example

- Last-in-first-out (LIFO) queue: think of an elevator with a single door, so whoever entered last is the first to leave.

    class LifoQueue(Queue):
        '''Variant of Queue that retrieves most recently added entries first.'''

        def _init(self, maxsize):
            self.queue = []

        def _qsize(self):
            return len(self.queue)

        def _put(self, item):
            self.queue.append(item)

        def _get(self):
            return self.queue.pop()

Source code of class LifoQueue

    import queue

    q = queue.LifoQueue()
    q.put(123)
    q.put(456)
    print(q.get())  # 456: the last item put in is the first one out

LifoQueue() example

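- Priority queue: each item carries a priority, and the item with the highest priority comes out first. In Python's PriorityQueue, the smallest priority number counts as the highest priority.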

    class PriorityQueue(Queue):
        '''Variant of Queue that retrieves open entries in priority order (lowest first).

        Entries are typically tuples of the form:  (priority number, data).
        '''

        def _init(self, maxsize):
            self.queue = []

        def _qsize(self):
            return len(self.queue)

        def _put(self, item):
            heappush(self.queue, item)

        def _get(self):
            return heappop(self.queue)

Source code of class PriorityQueue

    import queue

    q = queue.PriorityQueue()
    q.put((1, 'alex1'))
    q.put((6, 'alex2'))
    q.put((3, 'alex3'))

    print(q.get())  # the smaller the number, the higher the priority

    """
    (1, 'alex1')
    """

PriorityQueue() example

- Double-ended queue (deque): items can be added and removed at both ends.

    class deque(object):
        """
        deque([iterable[, maxlen]]) --> deque object

        A list-like sequence optimized for data accesses near its endpoints.
        """
        def append(self, *args, **kwargs): # real signature unknown
            """ Add an element to the right side of the deque. """
            pass

        def appendleft(self, *args, **kwargs): # real signature unknown
            """ Add an element to the left side of the deque. """
            pass

        def clear(self, *args, **kwargs): # real signature unknown
            """ Remove all elements from the deque. """
            pass

        def copy(self, *args, **kwargs): # real signature unknown
            """ Return a shallow copy of a deque. """
            pass

        def count(self, value): # real signature unknown; restored from __doc__
            """ D.count(value) -> integer -- return number of occurrences of value """
            return 0

        def extend(self, *args, **kwargs): # real signature unknown
            """ Extend the right side of the deque with elements from the iterable """
            pass

        def extendleft(self, *args, **kwargs): # real signature unknown
            """ Extend the left side of the deque with elements from the iterable """
            pass

        def index(self, value, start=None, stop=None): # real signature unknown; restored from __doc__
            """
            D.index(value, [start, [stop]]) -> integer -- return first index of value.
            Raises ValueError if the value is not present.
            """
            return 0

        def insert(self, index, p_object): # real signature unknown; restored from __doc__
            """ D.insert(index, object) -- insert object before index """
            pass

        def pop(self, *args, **kwargs): # real signature unknown
            """ Remove and return the rightmost element. """
            pass

        def popleft(self, *args, **kwargs): # real signature unknown
            """ Remove and return the leftmost element. """
            pass

        def remove(self, value): # real signature unknown; restored from __doc__
            """ D.remove(value) -- remove first occurrence of value. """
            pass

        def reverse(self): # real signature unknown; restored from __doc__
            """ D.reverse() -- reverse *IN PLACE* """
            pass

        def rotate(self, *args, **kwargs): # real signature unknown
            """ Rotate the deque n steps to the right (default n=1).  If n is negative, rotates left. """
            pass

        def __add__(self, *args, **kwargs): # real signature unknown
            """ Return self+value. """
            pass

        def __bool__(self, *args, **kwargs): # real signature unknown
            """ self != 0 """
            pass

        def __contains__(self, *args, **kwargs): # real signature unknown
            """ Return key in self. """
            pass

        def __copy__(self, *args, **kwargs): # real signature unknown
            """ Return a shallow copy of a deque. """
            pass

        def __delitem__(self, *args, **kwargs): # real signature unknown
            """ Delete self[key]. """
            pass

        def __eq__(self, *args, **kwargs): # real signature unknown
            """ Return self==value. """
            pass

        def __getattribute__(self, *args, **kwargs): # real signature unknown
            """ Return getattr(self, name). """
            pass

        def __getitem__(self, *args, **kwargs): # real signature unknown
            """ Return self[key]. """
            pass

        def __ge__(self, *args, **kwargs): # real signature unknown
            """ Return self>=value. """
            pass

        def __gt__(self, *args, **kwargs): # real signature unknown
            """ Return self>value. """
            pass

        def __iadd__(self, *args, **kwargs): # real signature unknown
            """ Implement self+=value. """
            pass

        def __imul__(self, *args, **kwargs): # real signature unknown
            """ Implement self*=value. """
            pass

        def __init__(self, iterable=(), maxlen=None): # known case of _collections.deque.__init__
            """
            deque([iterable[, maxlen]]) --> deque object

            A list-like sequence optimized for data accesses near its endpoints.
            # (copied from class doc)
            """
            pass

        def __iter__(self, *args, **kwargs): # real signature unknown
            """ Implement iter(self). """
            pass

        def __len__(self, *args, **kwargs): # real signature unknown
            """ Return len(self). """
            pass

        def __le__(self, *args, **kwargs): # real signature unknown
            """ Return self<=value. """
            pass

        def __lt__(self, *args, **kwargs): # real signature unknown
            """ Return self<value. """
            pass

        def __mul__(self, *args, **kwargs): # real signature unknown
            """ Return self*value. """
            pass

        @staticmethod # known case of __new__
        def __new__(*args, **kwargs): # real signature unknown
            """ Create and return a new object.  See help(type) for accurate signature. """
            pass

        def __ne__(self, *args, **kwargs): # real signature unknown
            """ Return self!=value. """
            pass

        def __reduce__(self, *args, **kwargs): # real signature unknown
            """ Return state information for pickling. """
            pass

        def __repr__(self, *args, **kwargs): # real signature unknown
            """ Return repr(self). """
            pass

        def __reversed__(self): # real signature unknown; restored from __doc__
            """ D.__reversed__() -- return a reverse iterator over the deque """
            pass

        def __rmul__(self, *args, **kwargs): # real signature unknown
            """ Return self*value. """
            pass

        def __setitem__(self, *args, **kwargs): # real signature unknown
            """ Set self[key] to value. """
            pass

        def __sizeof__(self): # real signature unknown; restored from __doc__
            """ D.__sizeof__() -- size of D in memory, in bytes """
            pass

        maxlen = property(lambda self: object(), lambda self, v: None, lambda self: None)  # default
        """maximum size of a deque or None if unbounded"""

        __hash__ = None

Source code of class deque (IDE-generated stub)

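    from collections import deque  # deque lives in collections; queue only re-imports it

    q = deque()
    q.append(123)
    q.append(333)
    q.append(888)
    q.append(999)
    q.appendleft(456)

    print(q.pop())      # removes from the right end
    print(q.popleft())  # removes from the left end

    """
    999
    456
    """

deque() example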

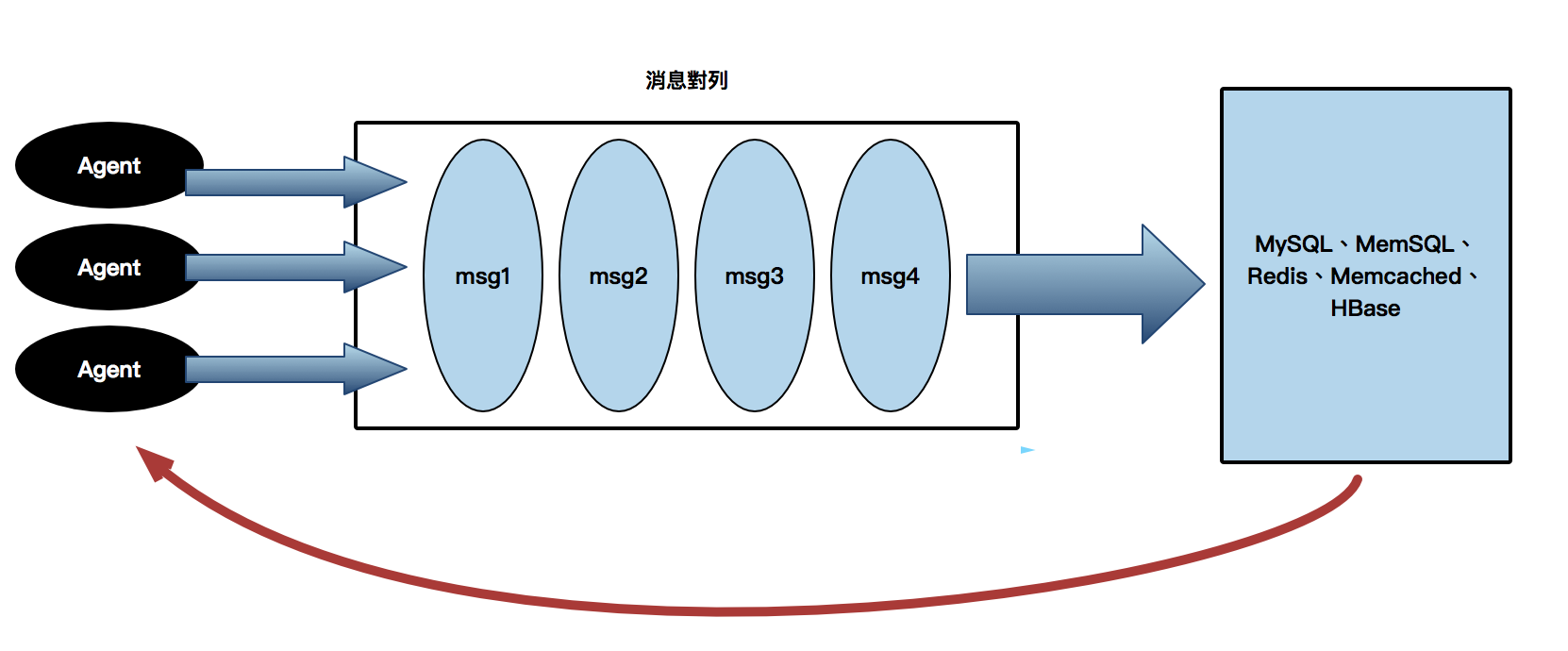
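The deque methods listed earlier include `extendleft`, `rotate`, and the `maxlen` property, which the short example does not exercise. The following is a minimal sketch of how they behave, using `collections.deque` from the standard library; the variable names are illustrative only.

```python
from collections import deque

# A bounded deque silently drops items from the opposite end when it is full.
recent = deque([1, 2, 3, 4, 5], maxlen=5)
recent.append(6)              # 1 falls off the left side
after_append = list(recent)

recent.rotate(2)              # shift every item two steps to the right
after_rotate = list(recent)

# extendleft() pushes items one at a time, so they end up in reverse order.
unbounded = deque([1, 2, 3])
unbounded.extendleft([8, 9])
after_extendleft = list(unbounded)

print(after_append)       # [2, 3, 4, 5, 6]
print(after_rotate)       # [5, 6, 2, 3, 4]
print(after_extendleft)   # [9, 8, 1, 2, 3]
```

A bounded deque is a convenient way to keep "the last N items" without writing any trimming logic.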

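Benefits of Message Queues

Greater capacity for handling concurrency

What does a queue buy you? Every server has a maximum number of connections it can hold. Without a message queue, the server has to keep each connection open while the client waits, which consumes and wastes server resources. While those connections sit suspended, two things happen: first, no new connections can get in; second, the clients that are connected are doing nothing but waiting.

With a queue, waiting requests no longer hold connections open, so the connection limit stops being the bottleneck and you do not need to worry about or maintain idle connections.

Creating a single order may involve many intermediate steps, so every order costs time and resources, and the capacity to handle each request synchronously drops.

The goal is to raise concurrent-processing capacity and to absorb sudden bursts of customer requests.

Without a message queue: the customer submits an order, and the client and server stay connected while the server runs its queries and returns the data to the client.

With a message queue: the customer submits an order and the request is pushed onto the queue. The client-server connection can then be closed; it does not need to stay open. When the server finishes processing, it updates a status in the database, and the client refreshes automatically to pick up the status of the request.

Another benefit: once requests sit in a message queue, processing becomes more scalable and flexible. Just add a few more servers and they will pull requests off the queue to help.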
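The order-processing flow described above can be sketched in a single process with `queue.Queue` and a few worker threads. This is only an illustration under stated assumptions: the `results` dictionary stands in for the status table in a database, and the names `orders`, `worker`, and `worker-N` are hypothetical.

```python
import queue
import threading

orders = queue.Queue()            # the in-memory message queue
results = {}                      # stands in for a "status" table in a database
results_lock = threading.Lock()

def worker(name):
    """Pull orders off the queue until a None sentinel arrives."""
    while True:
        order_id = orders.get()
        if order_id is None:      # sentinel: no more work for this worker
            orders.task_done()
            break
        with results_lock:
            results[order_id] = "done by " + name
        orders.task_done()

# Adding capacity just means starting more workers on the same queue.
workers = [threading.Thread(target=worker, args=("worker-%d" % i,)) for i in range(3)]
for t in workers:
    t.start()

for order_id in range(10):        # the "clients" submit orders and move on
    orders.put(order_id)
for _ in workers:                 # one sentinel per worker
    orders.put(None)

orders.join()                     # wait until every queued item was processed
for t in workers:
    t.join()

print(len(results))               # 10
```

The submitting side never waits for an individual order; it only enqueues. Which worker handles which order is not deterministic.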

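Basic Operations

The examples here use the first-in-first-out queue.

- Creating a queue: Queue(x), where x is the maximum length of the queue. Once the queue holds x items, a further put() blocks until a slot frees up.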

    def __init__(self, maxsize=0):
        self.maxsize = maxsize
        self._init(maxsize)

        # mutex must be held whenever the queue is mutating.  All methods
        # that acquire mutex must release it before returning.  mutex
        # is shared between the three conditions, so acquiring and
        # releasing the conditions also acquires and releases mutex.
        self.mutex = threading.Lock()

        # Notify not_empty whenever an item is added to the queue; a
        # thread waiting to get is notified then.
        self.not_empty = threading.Condition(self.mutex)

        # Notify not_full whenever an item is removed from the queue;
        # a thread waiting to put is notified then.
        self.not_full = threading.Condition(self.mutex)

        # Notify all_tasks_done whenever the number of unfinished tasks
        # drops to zero; thread waiting to join() is notified to resume
        self.all_tasks_done = threading.Condition(self.mutex)
        self.unfinished_tasks = 0

The Queue.__init__() method

    import queue

    q = queue.Queue(10)

Basic syntax for creating a queue: queue.Queue(x)

    import queue

    # An elevator with two doors: first in, first out.
    q = queue.Queue(2)  # only 2 items may wait in line

    # Add data to the queue
    q.put(11)
    q.put(22)
    q.put(33, timeout=2)  # if no slot frees up within 2 seconds, queue.Full is raised

    """
    Traceback (most recent call last):
      File "/s13/Day11/practice/s2.py", line 32, in <module>
        q.put(33, timeout=2)
      File "queue.py", line 141, in put
        raise Full
    queue.Full
    """

Data cannot be put into a full queue

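- Maximum number of items the queue can hold: maxsize (attribute)

- Adding data: put()

    put(self, item, block=True, timeout=None)
    # put: add data to the queue
    # timeout: optional time limit in seconds; queue.Full is raised when it expires
    # block: whether to block when the queue is full

    q = queue.Queue(10)

    # Add data to the queue
    q.put(11)
    q.put(22)
    q.put(33, block=False, timeout=2)  # timeout is ignored when block=False

Adding data with put()

- Adding data: put_nowait(), which never blocks (it is equivalent to put(item, block=False))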

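- Retrieving data: get()

    get(self, block=True, timeout=None)
    # get: take data out of the queue; blocks by default
    # timeout: optional time limit in seconds; queue.Empty is raised when it expires
    # block: whether to block when the queue is empty

    # First-in-first-out
    q = queue.Queue(10)

    # Add data to the queue
    q.put(11)
    q.put(22)

    # Take data out of the queue
    print(q.qsize())  # current length of the queue
    print(q.get())
    print(q.get(timeout=2))

    """
    2
    11
    22
    """

Retrieving data with get()

- Retrieving data: get_nowait(), which never blocks (it is equivalent to get(block=False))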

- Check whether the queue is empty: empty()

    q = queue.Queue(10)
    print(q.empty())  # True, because the queue holds no messages yet

    q.put(11)  # add data to the queue
    print(q.empty())  # False, because the queue now holds a message

    """
    True
    False
    """

empty()

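- Check whether the queue is full: full()

- Check how many items are currently in the queue: qsize()

- task_done(): tells the queue that one item previously fetched with get() has been fully processed

- join(): blocks until every item that was put into the queue has been fetched and marked with task_done()

    import queue

    q = queue.Queue(5)
    q.put(123)
    q.put(456)

    print(q.get())
    q.task_done()  # after finishing an item, call task_done() to report it as complete

    print(q.get())
    q.task_done()

    q.join()  # waits while any queued task is still unfinished, so the program does not exit early

task_done() and join()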
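The list above names put_nowait(), get_nowait(), full(), and qsize() without showing them in action. A minimal sketch of their behavior on a queue of size 2 follows; the variable names are illustrative, and the exception handling mirrors the `Full`, `Empty`, and `ValueError` cases documented in the Queue source shown earlier.

```python
import queue

q = queue.Queue(maxsize=2)
q.put_nowait("a")
q.put_nowait("b")
was_full = q.full()            # True: both slots are taken
size_when_full = q.qsize()     # 2

try:
    q.put_nowait("c")          # no free slot, so this raises immediately
    overflowed = False
except queue.Full:
    overflowed = True

first = q.get_nowait()
q.task_done()
second = q.get_nowait()
q.task_done()
q.join()                       # returns at once: every item was marked done

try:
    q.get_nowait()             # nothing left, so this raises immediately
    underflowed = False
except queue.Empty:
    underflowed = True

try:
    q.task_done()              # one call more than there were items
    extra_call_rejected = False
except ValueError:
    extra_call_rejected = True

print(was_full, size_when_full, overflowed, first, second,
      underflowed, extra_call_rejected)
```

The non-blocking variants suit callers that would rather handle a full or empty queue themselves than wait.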

heapq

To find the N largest or N smallest items in a collection, use heapq.

    import heapq

    portfolio = [
        {'name': 'IBM', 'shares': 100, 'price': 91.1},
        {'name': 'AAPL', 'shares': 50, 'price': 543.22},
        {'name': 'FB', 'shares': 200, 'price': 21.09},
        {'name': 'HPQ', 'shares': 35, 'price': 31.75},
        {'name': 'YHOO', 'shares': 45, 'price': 16.35},
        {'name': 'ACME', 'shares': 75, 'price': 115.65}
    ]

    # the three cheapest and the three most expensive entries, compared by price
    cheap = heapq.nsmallest(3, portfolio, key=lambda s: s['price'])
    expensive = heapq.nlargest(3, portfolio, key=lambda s: s['price'])

heapq example
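The PriorityQueue source shown earlier delegates to `heappush` and `heappop`, so the same ordering can be reproduced with heapq directly. The sketch below adds an insertion counter as a tie-breaker, a common idiom rather than something the original notes use; the helper names `push` and `pop` are hypothetical.

```python
import heapq
import itertools

heap = []
counter = itertools.count()   # tie-breaker: preserves insertion order

def push(priority, data):
    # Tuples compare element by element, so equal priorities fall back
    # to the counter and the data itself is never compared.
    heapq.heappush(heap, (priority, next(counter), data))

def pop():
    priority, _, data = heapq.heappop(heap)
    return priority, data

push(6, 'alex2')
push(1, 'alex1')
push(3, 'alex3')
push(1, 'alex4')

order = [pop() for _ in range(4)]
print(order)  # [(1, 'alex1'), (1, 'alex4'), (3, 'alex3'), (6, 'alex2')]
```

Unlike queue.PriorityQueue, a bare heap has no locking, so this form is only appropriate within a single thread.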

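Working with memcached and redis from Python

Under the hood, the client library opens a socket to the server and all communication happens over that socket.

Memcached supports clustering natively

[To be updated]

Each server is configured with a weight:

[C1,1]

[C2,2]

[C3,1]

The client expands these into a list in which each server appears as many times as its weight:

[C1,C2,C2,C3]

The key is converted to a number, and the remainder of that number divided by len([C1,C2,C2,C3]) selects the server that stores the key.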
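The weighted selection outlined above can be sketched in a few lines. This is an illustration under assumptions, not the client library's actual code: the notes only write "number / len(...)", which this sketch reads as taking the remainder, and `zlib.crc32` is an assumed stand-in for whatever hash the client uses. The name `pick_server` is hypothetical.

```python
import zlib

servers = [("C1", 1), ("C2", 2), ("C3", 1)]   # (host, weight) pairs from the notes

# Repeat each host as many times as its weight: C2 gets two slots.
buckets = [host for host, weight in servers for _ in range(weight)]

def pick_server(key):
    """Map a key to one host; the same key always lands on the same host."""
    number = zlib.crc32(key.encode("utf-8"))   # assumption: any stable hash works
    return buckets[number % len(buckets)]

print(buckets)               # ['C1', 'C2', 'C2', 'C3']
print(pick_server("fruits") == pick_server("fruits"))   # True
```

Because C2 occupies two of the four slots, it receives roughly half of all keys when the hash spreads keys evenly.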

Introduction to Memcached and Hands-on Operations

- Installation

- Install the corresponding client module (API)

Operating Memcached from the Python API

- add

- replace

- set and set_multi

- delete and delete_multi

- append and prepend

- decr and incr

- gets and cas

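Introduction to Redis and Hands-on Operations

- Installation

    wget http://download.redis.io/redis-stable.tar.gz
    tar xvzf redis-stable.tar.gz
    cd redis-stable
    make

Installing the stable release of Redis

    172.16.201.133:6379> ping
    PONG    # a healthy connection replies PONG

A quick test after installation

- Installing Redis with Docker

    docker pull redis:latest  # pull the latest Docker image
    docker run -itd --name redis-test -p 6379:6379 redis
    # -p 6379:6379 maps port 6379 of the container to port 6379 of the host, so the Redis service can be reached from outside at <host ip>:6379.
    docker exec -it redis-test /bin/bash  # open a shell inside the running container

- Install the corresponding client module (API)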

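Operating Redis from the CLI

- Connecting to Redis: first run redis-server inside the virtual machine to start the Redis server, then connect to the database with the command below.

    redis-cli -h 172.16.201.133 -p 6379 -a mypass

Connecting to Redis

    sudo vim /etc/redis/redis.conf
    # change bind 127.0.0.1 to 0.0.0.0
    bind 0.0.0.0
    # restart the Redis server
    sudo /etc/init.d/redis-server restart

Connecting to Redis remotely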

    user@py-ubuntu:~$ redis-server
    1899:C 20 Oct 14:58:33.558 # Warning: no config file specified, using the default config. In order to specify a config file use redis-server /path/to/redis.conf
    1899:M 20 Oct 14:58:33.559 * Increased maximum number of open files to 10032 (it was originally set to 1024).
    [ASCII-art Redis logo: Redis 3.0.6 (00000000/0) 64 bit, Running in standalone mode, Port: 6379, PID: 1899, http://redis.io]
    1899:M 20 Oct 14:58:33.564 # WARNING: The TCP backlog setting of 511 cannot be enforced because /proc/sys/net/core/somaxconn is set to the lower value of 128.
    1899:M 20 Oct 14:58:33.564 # Server started, Redis version 3.0.6
    1899:M 20 Oct 14:58:33.564 # WARNING overcommit_memory is set to 0! Background save may fail under low memory condition. To fix this issue add 'vm.overcommit_memory = 1' to /etc/sysctl.conf and then reboot or run the command 'sysctl vm.overcommit_memory=1' for this to take effect.
    1899:M 20 Oct 14:58:33.564 # WARNING you have Transparent Huge Pages (THP) support enabled in your kernel. This will create latency and memory usage issues with Redis. To fix this issue run the command 'echo never > /sys/kernel/mm/transparent_hugepage/enabled' as root, and add it to your /etc/rc.local in order to retain the setting after a reboot. Redis must be restarted after THP is disabled.
    1899:M 20 Oct 14:58:33.565 * DB loaded from disk: 0.000 seconds
    1899:M 20 Oct 14:58:33.565 * The server is now ready to accept connections on port 6379

    user@py-ubuntu:~$ redis-cli -h 192.168.80.128 -p 6379
    192.168.80.128:6379>

The Redis server

- String

    SET mykey "apple"             # set one key-value pair
    SETNX mykey "redis"           # set the pair only if the key does not exist yet
    MSET k1 "v1" k2 "v2" k3 "v3"  # set several key-value pairs at once

String operations (redis-cli)

- Getting a key from Redis

    127.0.0.1:6379> EXISTS fruits
    (integer) 1
    127.0.0.1:6379> GET fruits
    "apple"

EXISTS and GET operations (redis-cli)

- List

- Hash

    172.16.201.133:6379> HMSET person name "Janice" sex "F" age 20   # set several field-value pairs on person
    OK
    172.16.201.133:6379> HGETALL person   # show all fields and values of person
    1) "name"
    2) "Janice"
    3) "sex"
    4) "F"
    5) "age"
    6) "20"
    172.16.201.133:6379> HEXISTS person name   # returns 1 because person has a name field
    (integer) 1
    172.16.201.133:6379> HEXISTS person birthday   # returns 0 because person has no birthday field
    (integer) 0
    172.16.201.133:6379> HGET person name   # value of the name field
    "Janice"
    172.16.201.133:6379> HKEYS person   # list the fields of person
    1) "name"
    2) "sex"
    3) "age"
    172.16.201.133:6379> HMGET person name sex age   # values of the given fields
    1) "Janice"
    2) "F"
    3) "20"
    172.16.201.133:6379> HVALS person   # all values of person
    1) "Janice"
    2) "F"
    3) "20"
    172.16.201.133:6379> HLEN person   # number of fields in person
    (integer) 3

Hash operations (redis-cli)

- Set

- Publisher-Subscriber

    # Subscriber
    172.16.201.133:6379> SUBSCRIBE redisChat
    Reading messages... (press Ctrl-C to quit)
    1) "subscribe"
    2) "redisChat"
    3) (integer) 1
    --------------------------------------------------------
    1) "message"
    2) "redisChat"
    3) "Redis is a great caching technique"
    1) "message"
    2) "redisChat"
    3) "Learn redis by tutorials point"

    # Publisher
    172.16.201.133:6379> PUBLISH redisChat "Redis is a great caching technique"
    (integer) 1
    172.16.201.133:6379> PUBLISH redisChat "Learn redis by tutorials point"
    (integer) 1

Pub-Sub operations (redis-cli)


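Operating Redis from the Python API

There are two ways to connect to Redis: a direct connection, or a connection pool. Consider the pros and cons of each. Establishing a connection before sending data is expensive: sending the data might take 1 second, while each new connection might take 5. With a connection pool, you do not have to reconnect to the database for every request.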
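The idea behind pooling can be sketched with the standard library alone. This is a conceptual illustration, not how `redis.ConnectionPool` is implemented: `FakeConnection` and `SimplePool` are hypothetical classes, and the counter merely shows how many times a "connection" was opened.

```python
import queue

class FakeConnection:
    """Hypothetical stand-in for a real network connection."""
    opened = 0                      # how many times we "connected"

    def __init__(self):
        FakeConnection.opened += 1  # in real life this is the slow step

    def send(self, command):
        return "OK " + command

class SimplePool:
    """Keep a fixed set of open connections and hand them out on demand."""

    def __init__(self, size):
        self._idle = queue.Queue()
        for _ in range(size):
            self._idle.put(FakeConnection())

    def execute(self, command):
        conn = self._idle.get()     # blocks if every connection is in use
        try:
            return conn.send(command)
        finally:
            self._idle.put(conn)    # always return the connection to the pool

pool = SimplePool(size=2)
replies = [pool.execute("SET fruits apple") for _ in range(100)]
print(FakeConnection.opened)        # 2
```

One hundred commands were sent, but the connection cost was paid only twice. The trade-off is that idle connections stay open and hold resources on both client and server.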

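- Direct connection

    import redis

    r = redis.Redis(host='172.16.201.133', port=6379)
    r.set('fruits', 'apple')  # set a key
    print(r.get('fruits'))    # returns bytes

Connecting to Redis

- Connection pool: maintain a pool of connections, then take a connection from the local pool and send data right away

    import redis

    pool = redis.ConnectionPool(host='172.16.201.133', port=6379)  # create a connection pool
    r = redis.Redis(connection_pool=pool)  # pass the connection pool to the redis object

    r.set('fruits', 'apple')  # set a key
    print(r.get('fruits'))    # returns bytes

Connecting to Redis through a connection pool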

- String

- List

    >>> import redis
    >>> pool = redis.ConnectionPool(host='172.16.201.133', port=6379)
    >>> r = redis.Redis(connection_pool=pool)
    >>> r.lpush('li',11,22,33,44,55,66,77,88,99)
    9
    >>> r.lpop('li')  # pop one item from the left end of the list
    b'99'
    >>> r.lrange('li',2,5)
    [b'66', b'55', b'44', b'33']
    >>> r.rpop('li')  # pop one item from the right end of the list
    b'11'

List operations (redis-api)

- Hash

    >>> import redis
    >>> r = redis.Redis(host='172.16.201.133', port=6379)
    >>> r.hmset('person',{'name':'Janice','sex':'F','age':20})
    True
    >>> r.hset('movies','name','Doctors')
    0
    >>> r.hsetnx('movies','name','Secret Garden')
    0
    >>> print(r.hget('movies','name'))
    b'Doctors'
    >>> print(r.hget('person','name'))
    b'Janice'
    >>> print(r.hgetall('person'))
    {b'sex': b'F', b'name': b'Janice', b'age': b'20'}
    >>> print(r.hmget('person',['name','sex','age']))
    [b'Janice', b'F', b'20']
    >>> print(r.hexists('person','name'))
    True
    >>> print(r.hexists('person','birthday'))
    False
    >>> print(r.hvals('person'))
    [b'Janice', b'F', b'20']
    >>> print(r.hvals('movies'))
    [b'Doctors']
    >>> print(r.hlen('person'))
    3
    >>> print(r.hdel('person','sex','gender','age'))
    2
    >>> print(r.hgetall('person'))
    {b'name': b'Janice'}

Hash operations (redis-api)

- Set

    >>> import redis
    >>> r = redis.Redis(host='172.16.201.133', port=6379)
    >>> r.set('fruits', 'apple')
    True
    >>> r.setnx('k1', 'v1')
    True
    >>> r.mset({'k2': 'v2', 'k3': 'v3'})  # redis-py 3.0 and later take a dict here
    True
    >>> r.get('fruits')
    b'apple'
    >>> r.get('k1')
    b'v1'
    >>> r.mget('k2','k3')
    [b'v2', b'v3']
    >>> r.getrange('fruits',1,4)
    b'pple'
    >>> r.strlen('fruits')
    5

set/get operations on string values (redis-api)

- Sorted Set

    # add data to a Sorted Set in Redis
    sorted_set_name = 'stories'
    post_id = '5ec0c831f0d6e6001a84aa7b'  # member
    timestamp = 1589692465                # score

    r.zadd(sorted_set_name, {post_id: timestamp})

    # add multiple members (post_id1, ts1, post_id2, ts2 are placeholders)
    r.zadd(sorted_set_name, {post_id1: ts1, post_id2: ts2})

    # get data from the Sorted Set in Redis
    r.zrange(sorted_set_name, 0, 1500, desc=True, withscores=True)

Sorted Set operations (redis-api)

- Publisher and Subscriber: publishing and subscribing. Explain how the mechanism works.

- Pipeline: use a pipeline to send a batch of operations to redis in one go


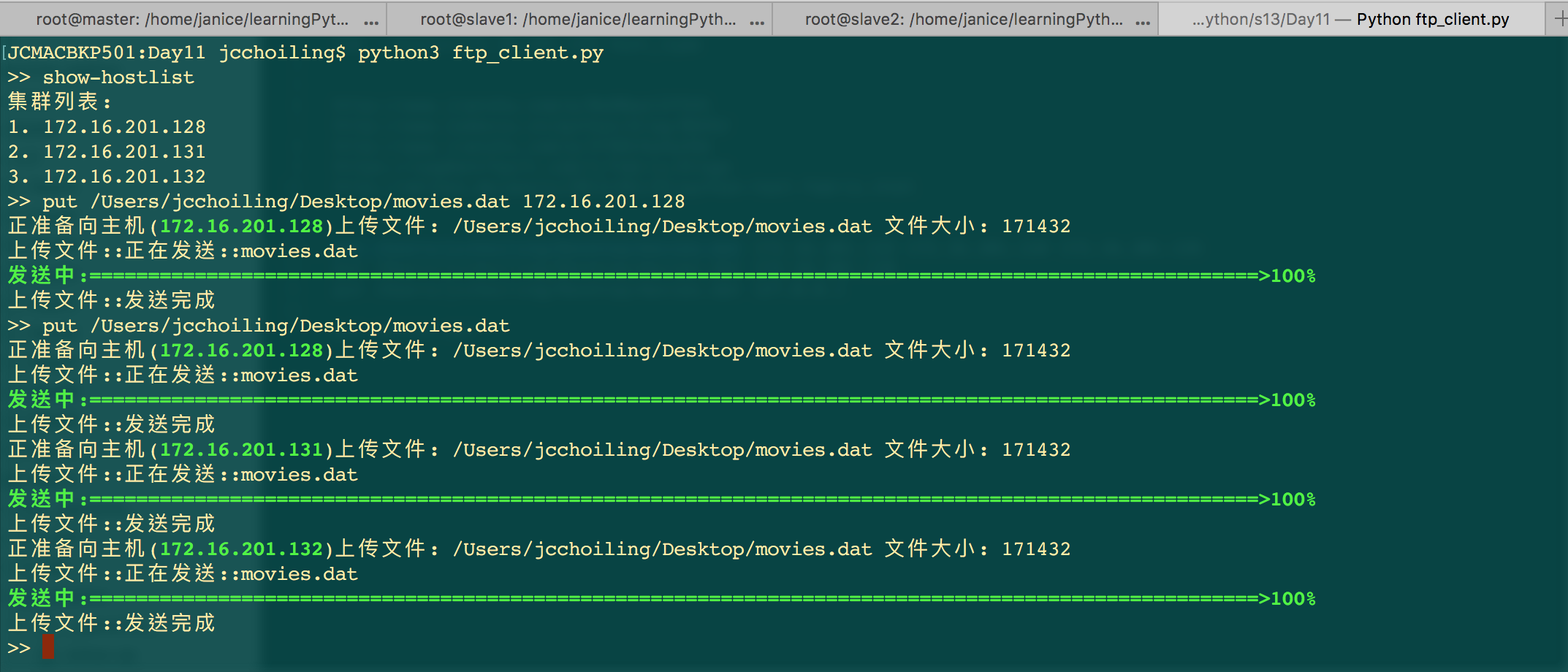
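The publish/subscribe item above asks how the mechanism works. The core idea can be sketched in-process without Redis: a broker keeps a list of subscribers per channel and copies each published message to all of them. The `Broker` class and the inbox lists are hypothetical; in Redis, delivery happens over network connections rather than Python lists.

```python
from collections import defaultdict

class Broker:
    """Toy in-process broker: one list of subscriber inboxes per channel."""

    def __init__(self):
        self._channels = defaultdict(list)

    def subscribe(self, channel, inbox):
        self._channels[channel].append(inbox)

    def publish(self, channel, message):
        inboxes = self._channels.get(channel, [])
        for inbox in inboxes:
            inbox.append(message)   # every subscriber gets its own copy
        return len(inboxes)         # number of subscribers that received it

broker = Broker()
alice, bob = [], []
broker.subscribe("redisChat", alice)
broker.subscribe("redisChat", bob)

delivered = broker.publish("redisChat", "Redis is a great caching technique")
missed = broker.publish("otherChannel", "nobody is listening")

print(delivered, missed)   # 2 0
print(alice == bob)        # True
```

The return value plays the same role as the `(integer) 1` reply to PUBLISH in the redis-cli session: it counts the receivers. A message published to a channel with no subscribers is simply dropped.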

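This Week's Assignment

Develop a Fabric-like host management program:

- Running the program lists the host groups or the hosts

- Select a specific host or host group

- Have the selected host or host group execute a command, or transfer files to or from it (upload/download)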

