Kafka: ZK+Kafka+Spark Streaming Cluster Setup (Part 30): Using flatMapGroupsWithState in Place of agg

What problem does flatMapGroupsWithState solve?

Why flatMapGroupsWithState was added to Spark Structured Streaming (it is supported only from Spark 2.2.0 onward):

1) It can implement aggregation (agg) logic;

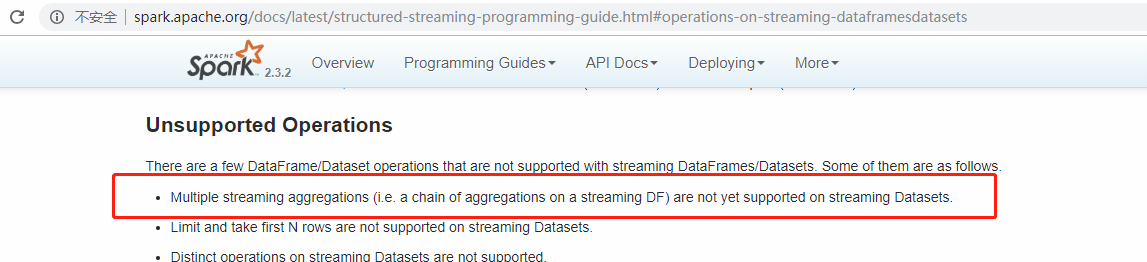

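2) Even in Spark 2.3.2, the latest release at the time of writing, Spark Structured Streaming still does not support applying more than one aggregation (agg) to a streaming Dataset. flatMapGroupsWithState can take over the role of agg, and it may also be used before an agg when the sink is in Append mode.

Note: although it may be used before an agg, this is allowed only when the output (sink) mode is Append.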

An example of using flatMapGroupsWithState (found online):

Reference: "https://jaceklaskowski.gitbooks.io/spark-structured-streaming/spark-sql-streaming-KeyValueGroupedDataset-flatMapGroupsWithState.html"

Note: the example code below implements the equivalent of `select deviceId, count(0) as count from tbName group by deviceId`.
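To see why per-key state can stand in for this GROUP BY, here is a minimal plain-Scala sketch (no Spark involved, and not the article's code): it carries a running count per device across a sequence of micro-batches and checks that the totals match a one-shot group-by count over all rows. The object and method names are illustrative assumptions.

```scala
// Plain-Scala sketch (no Spark): a per-key running count kept across
// micro-batches gives the same totals as one GROUP BY deviceId / COUNT.
object IncrementalCountSketch {
  type DeviceId = Int

  // One-shot GROUP BY deviceId with COUNT over the full input
  def groupByCount(rows: Seq[DeviceId]): Map[DeviceId, Long] =
    rows.groupBy(identity).map { case (id, rs) => id -> rs.size.toLong }

  // Incremental version: fold over micro-batches, carrying a state map
  // (the role GroupState plays per key in flatMapGroupsWithState)
  def incrementalCount(batches: Seq[Seq[DeviceId]]): Map[DeviceId, Long] =
    batches.foldLeft(Map.empty[DeviceId, Long]) { (state, batch) =>
      // only keys present in this batch are touched
      batch.groupBy(identity).foldLeft(state) { case (s, (id, rs)) =>
        s.updated(id, s.getOrElse(id, 0L) + rs.size)
      }
    }

  def main(args: Array[String]): Unit = {
    val batches = Seq(Seq(1, 2, 1), Seq(2, 3), Seq(1))
    // compares the incremental result with the one-shot count
    println(incrementalCount(batches) == groupByCount(batches.flatten))
  }
}
```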

1) Define a Signal case class (in spark-shell, Spark 2.3.0):

```scala
scala> spark.version
res0: String = 2.3.0-SNAPSHOT

import java.sql.Timestamp

type DeviceId = Int

case class Signal(timestamp: java.sql.Timestamp, value: Long, deviceId: DeviceId)
```

2) Use the Rate source to generate some test data (a random real-time stream) and inspect the execution plan:

```scala
// input stream
// spark-shell imports spark.implicits._ automatically; $"..." and as[Signal] depend on it
import org.apache.spark.sql.functions._

val signals = spark.
  readStream.
  format("rate").
  option("rowsPerSecond", 1).
  load.
  withColumn("value", $"value" % 10). // <-- randomize the values (just for fun)
  withColumn("deviceId", rint(rand() * 10) cast "int"). // <-- random device ids; rint rounds, so ids range over 0..10
  as[Signal] // <-- convert to our type (from "unpleasant" Row)

scala> signals.explain
== Physical Plan ==
*Project [timestamp#0, (value#1L % 10) AS value#5L, cast(ROUND((rand(4440296395341152993) * 10.0)) as int) AS deviceId#9]
+- StreamingRelation rate, [timestamp#0, value#1L]
```
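Because `rint` rounds to the nearest integer, `rint(rand() * 10)` yields device ids 0 through 10, i.e. up to 11 distinct devices rather than exactly 10. The plain-Scala sketch below mirrors that column expression with `math.rint`, assuming Spark's `rint` follows the same rounding rule, so the range can be checked without a cluster. `DeviceIdRangeSketch` is a hypothetical name.

```scala
// Plain-Scala mirror of the column expression rint(rand() * 10) cast "int"
object DeviceIdRangeSketch {
  // u plays the role of rand(), uniform in [0, 1).
  // math.rint rounds to the nearest integer (ties to even),
  // so any u with 9.5 <= u * 10 < 10 becomes device 10.
  def deviceId(u: Double): Int = math.rint(u * 10).toInt

  // Draws n ids with a fixed seed so the result is reproducible
  def sampleIds(n: Int, seed: Long): Seq[Int] = {
    val rnd = new scala.util.Random(seed)
    Seq.fill(n)(deviceId(rnd.nextDouble()))
  }

  def main(args: Array[String]): Unit = {
    // distinct ids observed over many draws, sorted
    println(sampleIds(10000, 42L).distinct.sorted)
  }
}
```

With this many draws every id from 0 to 10 shows up, with 0 and 10 each about half as frequent as the others.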

3) Group the Rate source stream with groupByKey and implement the aggregation with flatMapGroupsWithState:

```scala
// stream processing using flatMapGroupsWithState operator
val device: Signal => DeviceId = { case Signal(_, _, deviceId) => deviceId }
val signalsByDevice = signals.groupByKey(device)

import org.apache.spark.sql.streaming.GroupState

type Key = Int
type Count = Long
type State = Map[Key, Count]

case class EventsCounted(deviceId: DeviceId, count: Long)

def countValuesPerKey(deviceId: Int, signalsPerDevice: Iterator[Signal], state: GroupState[State]): Iterator[EventsCounted] = {
  val values = signalsPerDevice.toList
  println(s"Device: $deviceId")
  println(s"Signals (${values.size}):")
  values.zipWithIndex.foreach { case (v, idx) => println(s"$idx. $v") }
  println(s"State: $state")

  // update the state with the count of elements for the key
  val initialState: State = Map(deviceId -> 0L)
  val oldState = state.getOption.getOrElse(initialState)
  // the state map only ever holds this one key
  val newValue = oldState(deviceId) + values.size
  val newState = Map(deviceId -> newValue)
  state.update(newState)

  // you must not return signalsPerDevice: toList above has already consumed it,
  // which leads to a very subtle error where the returned iterator is empty
  // (iterators are one-pass data structures)
  Iterator(EventsCounted(deviceId, newValue))
}

import org.apache.spark.sql.streaming.{GroupStateTimeout, OutputMode}

val signalCounter = signalsByDevice.flatMapGroupsWithState(
  outputMode = OutputMode.Append,
  timeoutConf = GroupStateTimeout.NoTimeout)(func = countValuesPerKey)
```
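The comments in `countValuesPerKey` warn against returning `signalsPerDevice` after `toList`. This small plain-Scala sketch (hypothetical names, no Spark) shows the underlying behavior: once `toList` has drained an `Iterator`, the same iterator has nothing left, so the safe pattern is to return a new iterator built from values already collected.

```scala
object IteratorOnePassSketch {
  // Returns (elements collected by toList, elements still left in the same iterator)
  def sizesAfterToList[A](input: Seq[A]): (Int, Int) = {
    val it = input.iterator
    val values = it.toList // consumes the iterator, as countValuesPerKey does
    (values.size, it.size) // it.size counts what remains, which is nothing
  }

  // The safe pattern: build a fresh iterator from the collected values
  def freshResult[A](input: Iterator[A]): Iterator[Int] = {
    val values = input.toList
    Iterator(values.size)
  }

  def main(args: Array[String]): Unit = {
    println(sizesAfterToList(Seq("s1", "s2", "s3"))) // prints (3,0)
    println(freshResult(Iterator("s1", "s2")).toList) // prints List(2)
  }
}
```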

4) Print the aggregation results with the Console sink:

```scala
import org.apache.spark.sql.streaming.{OutputMode, Trigger}
import scala.concurrent.duration._

val sq = signalCounter.
  writeStream.
  format("console").
  option("truncate", false).
  trigger(Trigger.ProcessingTime(10.seconds)).
  outputMode(OutputMode.Append).
  start
```
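What happens on each 10-second trigger can be modelled without Spark. The sketch below is a simplified simulation under stated assumptions: `SimpleGroupState` is a hypothetical stand-in for Spark's `GroupState` that offers only the `getOption` and `update` calls `countValuesPerKey` uses, a mutable map plays the role of the state store, the timestamp field is dropped from `Signal`, and keys are sorted only to make the output deterministic (Spark gives no such ordering). With `NoTimeout`, the function runs once per device that received data in the batch, so each Append-mode batch emits one row per such device carrying its cumulative count.

```scala
object MicroBatchSimulation {
  type DeviceId = Int
  type State = Map[Int, Long]

  // Simplified Signal: the timestamp is omitted for this simulation
  final case class Signal(value: Long, deviceId: DeviceId)
  final case class EventsCounted(deviceId: DeviceId, count: Long)

  // Hypothetical stand-in for Spark's GroupState (not Spark's API)
  final class SimpleGroupState(private var current: Option[State]) {
    def getOption: Option[State] = current
    def update(s: State): Unit = current = Some(s)
  }

  // Same logic as countValuesPerKey, minus the println calls
  def countValuesPerKey(deviceId: Int, signals: Iterator[Signal], state: SimpleGroupState): Iterator[EventsCounted] = {
    val values = signals.toList
    val oldState = state.getOption.getOrElse(Map(deviceId -> 0L))
    val newValue = oldState(deviceId) + values.size
    state.update(Map(deviceId -> newValue))
    Iterator(EventsCounted(deviceId, newValue))
  }

  // For each micro-batch: group rows by key, call the function once per key
  // present in that batch, and keep each key's state for later batches.
  def run(batches: Seq[Seq[Signal]]): Seq[Seq[EventsCounted]] = {
    val store = scala.collection.mutable.Map.empty[DeviceId, State]
    batches.map { batch =>
      batch.groupBy(_.deviceId).toSeq.sortBy(_._1).flatMap { case (id, signals) =>
        val gs = new SimpleGroupState(store.get(id)) // None the first time a key is seen
        val out = countValuesPerKey(id, signals.iterator, gs).toList
        gs.getOption.foreach(s => store(id) = s)
        out
      }
    }
  }

  def main(args: Array[String]): Unit = {
    val batches = Seq(
      Seq(Signal(1L, 3), Signal(2L, 5)),
      Seq(Signal(3L, 3), Signal(4L, 3), Signal(5L, 7))
    )
    run(batches).zipWithIndex.foreach { case (rows, i) =>
      println(s"Batch $i: ${rows.mkString(", ")}")
    }
  }
}
```

In the second batch device 3 reaches a count of 3 because the count of 1 stored in the first batch is carried over, while device 7 starts from an empty state, much like the `GroupState(<undefined>)` lines in the console output.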

5) Console output:

```
...
-------------------------------------------
Batch:
-------------------------------------------
+--------+-----+
|deviceId|count|
+--------+-----+
+--------+-----+

...
// :: INFO StreamExecution: Streaming query made progress: {
  "id" : "a43822a6-500b-4f02-9133-53e9d39eedbf",
  "runId" : "79cb037e-0f28-4faf-a03e-2572b4301afe",
  "name" : null,
  "timestamp" : "2017-08-21T06:57:26.719Z",
  "batchId" : ,
  "numInputRows" : ,
  "processedRowsPerSecond" : 0.0,
  "durationMs" : {
    "addBatch" : ,
    "getBatch" : ,
    "getOffset" : ,
    "queryPlanning" : ,
    "triggerExecution" : ,
    "walCommit" :
  },
  "stateOperators" : [ {
    "numRowsTotal" : ,
    "numRowsUpdated" : ,
    "memoryUsedBytes" :
  } ],
  "sources" : [ {
    "description" : "RateSource[rowsPerSecond=1, rampUpTimeSeconds=0, numPartitions=8]",
    "startOffset" : null,
    "endOffset" : ,
    "numInputRows" : ,
    "processedRowsPerSecond" : 0.0
  } ],
  "sink" : {
    "description" : "ConsoleSink[numRows=20, truncate=false]"
  }
}
// :: DEBUG StreamExecution: batch committed

...
-------------------------------------------
Batch:
-------------------------------------------
Device:
Signals ():
. Signal(-- ::27.682,,)
State: GroupState(<undefined>)
Device:
Signals ():
. Signal(-- ::26.682,,)
State: GroupState(<undefined>)
Device:
Signals ():
. Signal(-- ::28.682,,)
State: GroupState(<undefined>)
+--------+-----+
|deviceId|count|
+--------+-----+
| | |
| | |
| | |
+--------+-----+

...
// :: INFO StreamExecution: Streaming query made progress: {
  "id" : "a43822a6-500b-4f02-9133-53e9d39eedbf",
  "runId" : "79cb037e-0f28-4faf-a03e-2572b4301afe",
  "name" : null,
  "timestamp" : "2017-08-21T06:57:30.004Z",
  "batchId" : ,
  "numInputRows" : ,
  "inputRowsPerSecond" : 0.91324200913242,
  "processedRowsPerSecond" : 2.2388059701492535,
  "durationMs" : {
    "addBatch" : ,
    "getBatch" : ,
    "getOffset" : ,
    "queryPlanning" : ,
    "triggerExecution" : ,
    "walCommit" :
  },
  "stateOperators" : [ {
    "numRowsTotal" : ,
    "numRowsUpdated" : ,
    "memoryUsedBytes" :
  } ],
  "sources" : [ {
    "description" : "RateSource[rowsPerSecond=1, rampUpTimeSeconds=0, numPartitions=8]",
    "startOffset" : ,
    "endOffset" : ,
    "numInputRows" : ,
    "inputRowsPerSecond" : 0.91324200913242,
    "processedRowsPerSecond" : 2.2388059701492535
  } ],
  "sink" : {
    "description" : "ConsoleSink[numRows=20, truncate=false]"
  }
}
// :: DEBUG StreamExecution: batch committed

...
-------------------------------------------
Batch:
-------------------------------------------
Device:
Signals ():
. Signal(-- ::36.682,,)
State: GroupState(<undefined>)
Device:
Signals ():
. Signal(-- ::32.682,,)
. Signal(-- ::35.682,,)
State: GroupState(Map( -> ))
Device:
Signals ():
. Signal(-- ::34.682,,)
State: GroupState(<undefined>)
Device:
Signals ():
. Signal(-- ::29.682,,)
State: GroupState(<undefined>)
Device:
Signals ():
. Signal(-- ::31.682,,)
. Signal(-- ::33.682,,)
State: GroupState(Map( -> ))
Device:
Signals ():
. Signal(-- ::30.682,,)
. Signal(-- ::37.682,,)
State: GroupState(Map( -> ))
Device:
Signals ():
. Signal(-- ::38.682,,)
State: GroupState(<undefined>)
+--------+-----+
|deviceId|count|
+--------+-----+
| | |
| | |
| | |
| | |
| | |
| | |
| | |
+--------+-----+

...
// :: INFO StreamExecution: Streaming query made progress: {
  "id" : "a43822a6-500b-4f02-9133-53e9d39eedbf",
  "runId" : "79cb037e-0f28-4faf-a03e-2572b4301afe",
  "name" : null,
  "timestamp" : "2017-08-21T06:57:40.005Z",
  "batchId" : ,
  "numInputRows" : ,
  "inputRowsPerSecond" : 0.9999000099990002,
  "processedRowsPerSecond" : 9.242144177449168,
  "durationMs" : {
    "addBatch" : ,
    "getBatch" : ,
    "getOffset" : ,
    "queryPlanning" : ,
    "triggerExecution" : ,
    "walCommit" :
  },
  "stateOperators" : [ {
    "numRowsTotal" : ,
    "numRowsUpdated" : ,
    "memoryUsedBytes" :
  } ],
  "sources" : [ {
    "description" : "RateSource[rowsPerSecond=1, rampUpTimeSeconds=0, numPartitions=8]",
    "startOffset" : ,
    "endOffset" : ,
    "numInputRows" : ,
    "inputRowsPerSecond" : 0.9999000099990002,
    "processedRowsPerSecond" : 9.242144177449168
  } ],
  "sink" : {
    "description" : "ConsoleSink[numRows=20, truncate=false]"
  }
}
// :: DEBUG StreamExecution: batch committed
```

```scala
// In the end...
sq.stop

// Use stateOperators to access the stats (it is an array with one entry per stateful operator)
scala> println(sq.lastProgress.stateOperators(0).prettyJson)
{
  "numRowsTotal" : ,
  "numRowsUpdated" : ,
  "memoryUsedBytes" :
}
```
