Setting up the Kubernetes UI

1. Deploying the Kubernetes cloud platform needs at least two servers. This guide uses three:

- Kubernetes Master node: 192.168.0.111

- Kubernetes Node1: 192.168.0.112

- Kubernetes Node2: 192.168.0.113

2. Run the following commands on every server:

```shell
systemctl stop firewalld
systemctl disable firewalld
yum -y install ntp
ntpdate pool.ntp.org   # keep the clocks of all servers in sync
systemctl start ntpd
systemctl enable ntpd
```

3. Installing and configuring the Kubernetes Master

Install etcd, Kubernetes and the flannel network on the Kubernetes Master node:

```shell
yum install kubernetes-master etcd flannel -y
```

The Master's /etc/etcd/etcd.conf configuration file:

```shell
cat > /etc/etcd/etcd.conf <<EOF
# [member]
ETCD_NAME=etcd1
ETCD_DATA_DIR="/data/etcd"
#ETCD_WAL_DIR=""
#ETCD_SNAPSHOT_COUNT=""
#ETCD_HEARTBEAT_INTERVAL=""
#ETCD_ELECTION_TIMEOUT=""
ETCD_LISTEN_PEER_URLS="http://192.168.0.111:2380"
ETCD_LISTEN_CLIENT_URLS="http://192.168.0.111:2379,http://127.0.0.1:2379"
ETCD_MAX_SNAPSHOTS=""
#ETCD_MAX_WALS=""
#ETCD_CORS=""
#
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.0.111:2380"
# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
ETCD_INITIAL_CLUSTER="etcd1=http://192.168.0.111:2380,etcd2=http://192.168.0.112:2380,etcd3=http://192.168.0.113:2380"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.111:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#
#[proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT=""
#ETCD_PROXY_REFRESH_INTERVAL=""
#ETCD_PROXY_DIAL_TIMEOUT=""
#ETCD_PROXY_WRITE_TIMEOUT=""
#ETCD_PROXY_READ_TIMEOUT=""
#
#[security]
#ETCD_CERT_FILE=""
#ETCD_KEY_FILE=""
#ETCD_CLIENT_CERT_AUTH="false"
#ETCD_TRUSTED_CA_FILE=""
#ETCD_PEER_CERT_FILE=""
#ETCD_PEER_KEY_FILE=""
#ETCD_PEER_CLIENT_CERT_AUTH="false"
#ETCD_PEER_TRUSTED_CA_FILE=""
#
#[logging]
#ETCD_DEBUG="false"
# examples for -log-package-levels etcdserver=WARNING,security=DEBUG
#ETCD_LOG_PACKAGE_LEVELS=""
EOF

mkdir -p /data/etcd/
# The original "chmod -R /data/etcd/" had lost its mode argument. The packaged
# etcd service runs as user etcd, so that user must own the data directory.
chown -R etcd:etcd /data/etcd/
systemctl restart etcd.service
```

The Master's /etc/kubernetes/config configuration file:

```shell
cat > /etc/kubernetes/config <<EOF
# kubernetes system config
# The following values are used to configure various aspects of all
# kubernetes services, including
# kube-apiserver.service
# kube-controller-manager.service
# kube-scheduler.service
# kubelet.service
# kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"
# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"
# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"
# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://192.168.0.111:8080"
EOF
```

KUBE_MASTER tells the Kubernetes controller-manager, scheduler and proxy processes the address of the apiserver.

The Master's /etc/kubernetes/apiserver configuration file:

```shell
cat > /etc/kubernetes/apiserver <<EOF
# kubernetes system config
# The following values are used to configure the kube-apiserver
# The address on the local server to listen to.
KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"
# The port on the local server to listen on.
KUBE_API_PORT="--port=8080"
# Port minions listen on
KUBELET_PORT="--kubelet-port=10250"
# Comma separated list of nodes in the etcd cluster
KUBE_ETCD_SERVERS="--etcd-servers=http://192.168.0.111:2379,http://192.168.0.112:2379,http://192.168.0.113:2379"
# Address range to use for services
KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.254.0.0/16"
# default admission control policies
#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"
KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,ResourceQuota"
# Add your own!
KUBE_API_ARGS=""
EOF
```

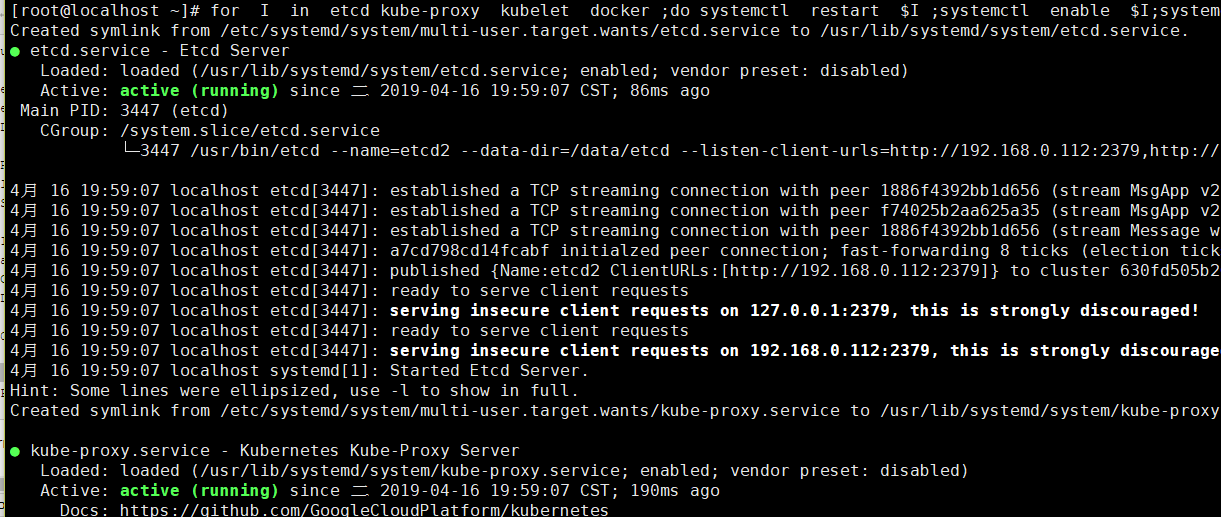

```shell
for i in etcd kube-apiserver kube-controller-manager kube-scheduler; do systemctl restart $i; systemctl enable $i; systemctl status $i; done
```

This starts (and enables at boot) the etcd, apiserver, controller-manager and scheduler processes on the Kubernetes Master and shows their status.
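The `--etcd-servers` list in the apiserver file is the client-side view of the same three members that `ETCD_INITIAL_CLUSTER` lists with their peer URLs. The sketch below derives one from the other. `etcd_client_urls` is a hypothetical helper, not part of etcd or Kubernetes. It assumes that every member uses peer port 2380 and client port 2379, as in this setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn an ETCD_INITIAL_CLUSTER value
# (name=http://ip:2380,...) into the comma-separated client URL list
# used by kube-apiserver's --etcd-servers (http://ip:2379,...).
# Assumes peer port 2380 and client port 2379 on every member.
etcd_client_urls() {
  local cluster="$1" out="" entry url
  local IFS=','                 # split the cluster string on commas
  for entry in $cluster; do
    url="${entry#*=}"           # drop the "etcdN=" member name
    url="${url%:2380}:2379"     # swap the peer port for the client port
    out="${out:+$out,}$url"     # append, comma-separated
  done
  printf '%s\n' "$out"
}

etcd_client_urls "etcd1=http://192.168.0.111:2380,etcd2=http://192.168.0.112:2380,etcd3=http://192.168.0.113:2380"
```

With this guide's cluster string, the result should equal the value of `KUBE_ETCD_SERVERS`. This gives a quick way to check that the two files agree after an address changes.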

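4. Installing and configuring Kubernetes Node1

Install flannel, Docker and Kubernetes on Kubernetes Node1:

```shell
# '*rhsm*' pulls in the Red Hat certificate packages; it is quoted so that yum, not the shell, expands the pattern
yum install kubernetes-node etcd docker flannel '*rhsm*' -y
```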

Configuration on Node1. Set /etc/etcd/etcd.conf on Node1 as follows:

```shell
cat > /etc/etcd/etcd.conf <<EOF
##########
# [member]
ETCD_NAME=etcd2
ETCD_DATA_DIR="/data/etcd"
#ETCD_WAL_DIR=""
#ETCD_SNAPSHOT_COUNT=""
#ETCD_HEARTBEAT_INTERVAL=""
#ETCD_ELECTION_TIMEOUT=""
ETCD_LISTEN_PEER_URLS="http://192.168.0.112:2380"
ETCD_LISTEN_CLIENT_URLS="http://192.168.0.112:2379,http://127.0.0.1:2379"
ETCD_MAX_SNAPSHOTS=""
#ETCD_MAX_WALS=""
#ETCD_CORS=""
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.0.112:2380"
# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
ETCD_INITIAL_CLUSTER="etcd1=http://192.168.0.111:2380,etcd2=http://192.168.0.112:2380,etcd3=http://192.168.0.113:2380"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.112:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#[proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT=""
#ETCD_PROXY_REFRESH_INTERVAL=""
#ETCD_PROXY_DIAL_TIMEOUT=""
#ETCD_PROXY_WRITE_TIMEOUT=""
#ETCD_PROXY_READ_TIMEOUT=""
#
#[security]
#ETCD_CERT_FILE=""
#ETCD_KEY_FILE=""
#ETCD_CLIENT_CERT_AUTH="false"
#ETCD_TRUSTED_CA_FILE=""
#ETCD_PEER_CERT_FILE=""
#ETCD_PEER_KEY_FILE=""
#ETCD_PEER_CLIENT_CERT_AUTH="false"
#ETCD_PEER_TRUSTED_CA_FILE=""
#
#[logging]
#ETCD_DEBUG="false"
# examples for -log-package-levels etcdserver=WARNING,security=DEBUG
#ETCD_LOG_PACKAGE_LEVELS=""
EOF

mkdir -p /data/etcd/
# the original chmod had lost its mode argument; the etcd service runs as user etcd
chown -R etcd:etcd /data/etcd/
service etcd restart
```

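Flannel depends on this etcd cluster. Its own configuration (step 5) tells the flanneld process where the etcd service runs and under which etcd key the network configuration is stored.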

Node1 Kubernetes configuration, /etc/kubernetes/config:

```shell
cat > /etc/kubernetes/config <<EOF
# kubernetes system config
# The following values are used to configure various aspects of all
# kubernetes services, including
# kube-apiserver.service
# kube-controller-manager.service
# kube-scheduler.service
# kubelet.service
# kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"
# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"
# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"
# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://192.168.0.111:8080"
EOF
```

Configure /etc/kubernetes/kubelet as follows:

```shell
cat > /etc/kubernetes/kubelet <<EOF
###
# kubernetes kubelet (minion) config
# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"
# The port for the info server to serve on
KUBELET_PORT="--port=10250"
# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=192.168.0.112"
# location of the api-server
KUBELET_API_SERVER="--api-servers=http://192.168.0.111:8080"
# pod infrastructure container
#KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=192.168.0.123:5000/centos68"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
# Add your own!
KUBELET_ARGS=""
EOF
```

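```shell
for I in etcd kube-proxy kubelet docker; do systemctl restart $I; systemctl enable $I; systemctl status $I; done
iptables -P FORWARD ACCEPT
```

The loop starts etcd, kube-proxy, kubelet and docker on the Node and shows the status of each. flanneld is configured and started later, in step 5. `iptables -P FORWARD ACCEPT` sets the default policy of the FORWARD chain to ACCEPT so that container traffic forwarded through the host is not dropped.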
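The files under /etc/kubernetes are plain shell-style `KEY="--flag=value"` assignments. The packaged systemd units are assumed to load /etc/kubernetes/config and the per-daemon file (here /etc/kubernetes/kubelet) as `EnvironmentFile=` and splice the variables into the daemon's command line. The exact variable list in the unit may differ from the one used below. The hypothetical helper `kubelet_args` sources the given files in a subshell and prints the resulting kubelet command line, so you can check the flags before restarting the service.

```shell
#!/usr/bin/env bash
# Hypothetical helper: show the kubelet command line that KEY="--flag=value"
# files expand to. Assumption: the variable list roughly mirrors the packaged
# kubelet.service; unset variables simply expand to nothing.
kubelet_args() {
  (
    # Subshell: sourced variables do not leak into the caller.
    for f in "$@"; do
      . "$f"
    done
    # Deliberately unquoted so empty variables disappear.
    echo kubelet $KUBE_LOGTOSTDERR $KUBE_LOG_LEVEL $KUBELET_API_SERVER \
      $KUBELET_ADDRESS $KUBELET_PORT $KUBELET_HOSTNAME $KUBE_ALLOW_PRIV \
      $KUBELET_POD_INFRA_CONTAINER $KUBELET_ARGS
  )
}

# Usage on a Node:
#   kubelet_args /etc/kubernetes/config /etc/kubernetes/kubelet
```

Sourcing in a subshell keeps the check side-effect free. If a flag is missing from the output, the variable is probably misspelled in one of the files.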

Installing and configuring Kubernetes Node2: install flannel, Docker and Kubernetes on Node2:

```shell
yum install kubernetes-node etcd docker flannel '*rhsm*' -y
```

Node2 etcd configuration, /etc/etcd/etcd.conf:

```shell
cat > /etc/etcd/etcd.conf <<EOF
##########
# [member]
ETCD_NAME=etcd3
ETCD_DATA_DIR="/data/etcd"
#ETCD_WAL_DIR=""
#ETCD_SNAPSHOT_COUNT=""
#ETCD_HEARTBEAT_INTERVAL=""
#ETCD_ELECTION_TIMEOUT=""
ETCD_LISTEN_PEER_URLS="http://192.168.0.113:2380"
ETCD_LISTEN_CLIENT_URLS="http://192.168.0.113:2379,http://127.0.0.1:2379"
ETCD_MAX_SNAPSHOTS=""
#ETCD_MAX_WALS=""
#ETCD_CORS=""
#[cluster]
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.0.113:2380"
# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."
ETCD_INITIAL_CLUSTER="etcd1=http://192.168.0.111:2380,etcd2=http://192.168.0.112:2380,etcd3=http://192.168.0.113:2380"
#ETCD_INITIAL_CLUSTER_STATE="new"
#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_ADVERTISE_CLIENT_URLS="http://192.168.0.113:2379"
#ETCD_DISCOVERY=""
#ETCD_DISCOVERY_SRV=""
#ETCD_DISCOVERY_FALLBACK="proxy"
#ETCD_DISCOVERY_PROXY=""
#[proxy]
#ETCD_PROXY="off"
#ETCD_PROXY_FAILURE_WAIT=""
#ETCD_PROXY_REFRESH_INTERVAL=""
#ETCD_PROXY_DIAL_TIMEOUT=""
#ETCD_PROXY_WRITE_TIMEOUT=""
#ETCD_PROXY_READ_TIMEOUT=""
#
#[security]
#ETCD_CERT_FILE=""
#ETCD_KEY_FILE=""
#ETCD_CLIENT_CERT_AUTH="false"
#ETCD_TRUSTED_CA_FILE=""
#ETCD_PEER_CERT_FILE=""
#ETCD_PEER_KEY_FILE=""
#ETCD_PEER_CLIENT_CERT_AUTH="false"
#ETCD_PEER_TRUSTED_CA_FILE=""
#
#[logging]
#ETCD_DEBUG="false"
# examples for -log-package-levels etcdserver=WARNING,security=DEBUG
#ETCD_LOG_PACKAGE_LEVELS=""
EOF

mkdir -p /data/etcd/
# the original chmod had lost its mode argument; the etcd service runs as user etcd
chown -R etcd:etcd /data/etcd/
service etcd restart
```
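The three etcd.conf files differ only in `ETCD_NAME` and the node's own IP address. The sketch below generates just the uncommented, non-empty settings for one member. `gen_etcd_conf` is a hypothetical helper, not part of etcd. It omits the commented-out defaults, which etcd never reads anyway. It also omits the empty `ETCD_MAX_SNAPSHOTS`, on the assumption that an empty value leaves etcd's default in place. The helper writes to whatever path you pass, so you can preview the result before copying it to /etc/etcd/etcd.conf.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the settings this guide actually sets for one
# etcd member. Usage: gen_etcd_conf NAME IP OUTFILE
# CLUSTER mirrors ETCD_INITIAL_CLUSTER from this guide; adjust for other hosts.
CLUSTER="etcd1=http://192.168.0.111:2380,etcd2=http://192.168.0.112:2380,etcd3=http://192.168.0.113:2380"

gen_etcd_conf() {
  local name="$1" ip="$2" outfile="$3"
  # Unquoted EOF: $name, $ip and $CLUSTER are expanded into the file.
  cat > "$outfile" <<EOF
ETCD_NAME=$name
ETCD_DATA_DIR="/data/etcd"
ETCD_LISTEN_PEER_URLS="http://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="http://$ip:2379,http://127.0.0.1:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ip:2380"
ETCD_INITIAL_CLUSTER="$CLUSTER"
ETCD_ADVERTISE_CLIENT_URLS="http://$ip:2379"
EOF
}

# Example: preview Node2's settings in a temporary file.
tmp="$(mktemp)"
gen_etcd_conf etcd3 192.168.0.113 "$tmp"
cat "$tmp"
rm -f "$tmp"
```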

Node2 Kubernetes configuration, /etc/kubernetes/config:

```shell
cat > /etc/kubernetes/config <<EOF
# kubernetes system config
# The following values are used to configure various aspects of all
# kubernetes services, including
# kube-apiserver.service
# kube-controller-manager.service
# kube-scheduler.service
# kubelet.service
# kube-proxy.service
# logging to stderr means we get it in the systemd journal
KUBE_LOGTOSTDERR="--logtostderr=true"
# journal message level, 0 is debug
KUBE_LOG_LEVEL="--v=0"
# Should this cluster be allowed to run privileged docker containers
KUBE_ALLOW_PRIV="--allow-privileged=false"
# How the controller-manager, scheduler, and proxy find the apiserver
KUBE_MASTER="--master=http://192.168.0.111:8080"
EOF
```

The /etc/kubernetes/kubelet configuration file:

```shell
cat > /etc/kubernetes/kubelet <<EOF
###
# kubernetes kubelet (minion) config
# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)
KUBELET_ADDRESS="--address=0.0.0.0"
# The port for the info server to serve on
KUBELET_PORT="--port=10250"
# You may leave this blank to use the actual hostname
KUBELET_HOSTNAME="--hostname-override=192.168.0.113"
# location of the api-server
KUBELET_API_SERVER="--api-servers=http://192.168.0.111:8080"
# pod infrastructure container
#KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=192.168.0.123:5000/centos68"
KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"
# Add your own!
KUBELET_ARGS=""
EOF
```

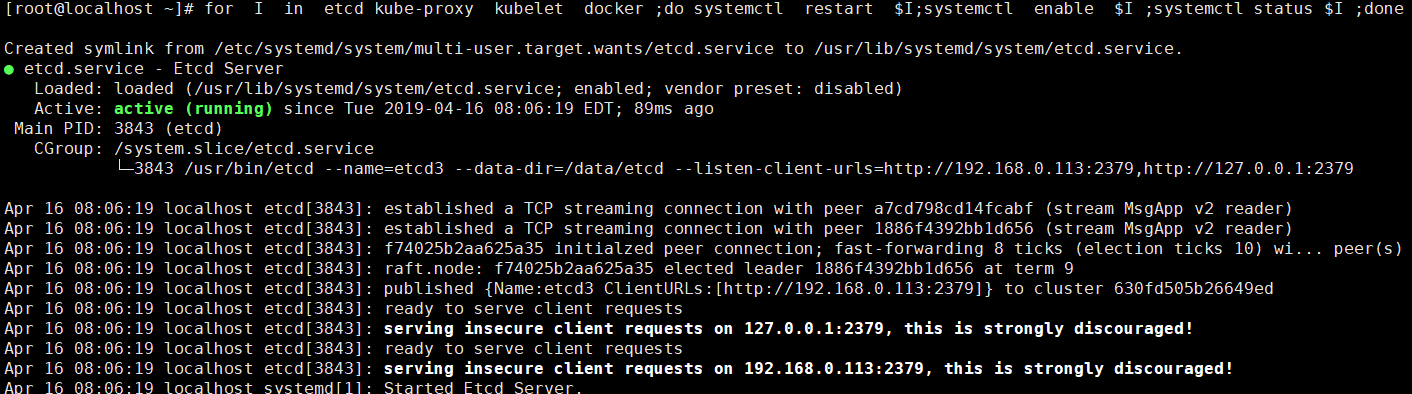

```shell
for I in etcd kube-proxy kubelet docker; do systemctl restart $I; systemctl enable $I; systemctl status $I; done
iptables -P FORWARD ACCEPT
```

Now run `kubectl get nodes` on the Master to see the two Nodes that have joined the Kubernetes cluster. At this point the cluster environment is set up.

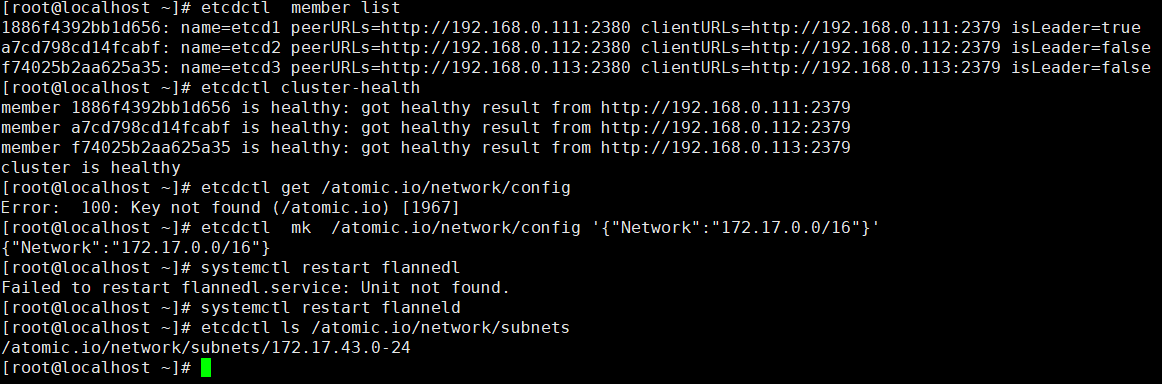

5. Kubernetes flanneld network configuration

Configure flanneld on every server in the cluster (the Master and all Nodes/minions) with the following /etc/sysconfig/flanneld:

```shell
cat > /etc/sysconfig/flanneld <<EOF
# Flanneld configuration options
# etcd url location. Point this to the server where etcd runs
FLANNEL_ETCD_ENDPOINTS="http://192.168.0.111:2379"
# etcd config key. This is the configuration key that flannel queries
# For address range assignment
FLANNEL_ETCD_PREFIX="/atomic.io/network"
# Any additional options that you want to pass
#FLANNEL_OPTIONS=""
EOF

service flanneld restart
```

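On the Master, check that the etcd cluster is healthy. Then create the flannel network configuration in etcd, which serves as the configuration store: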

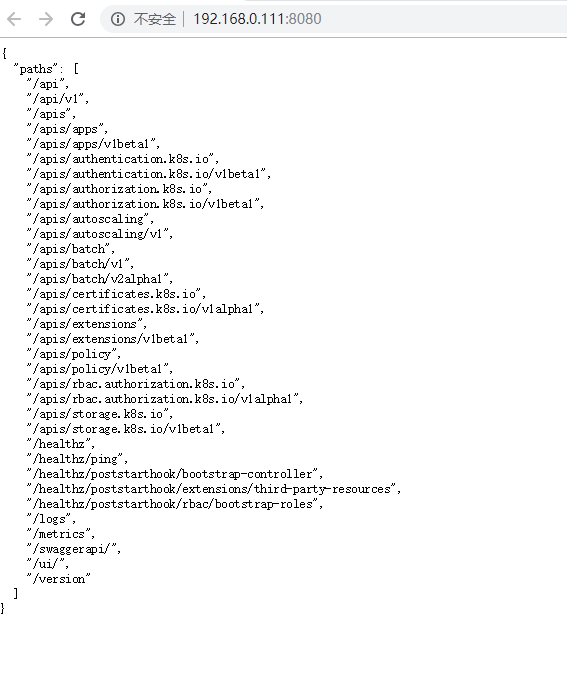

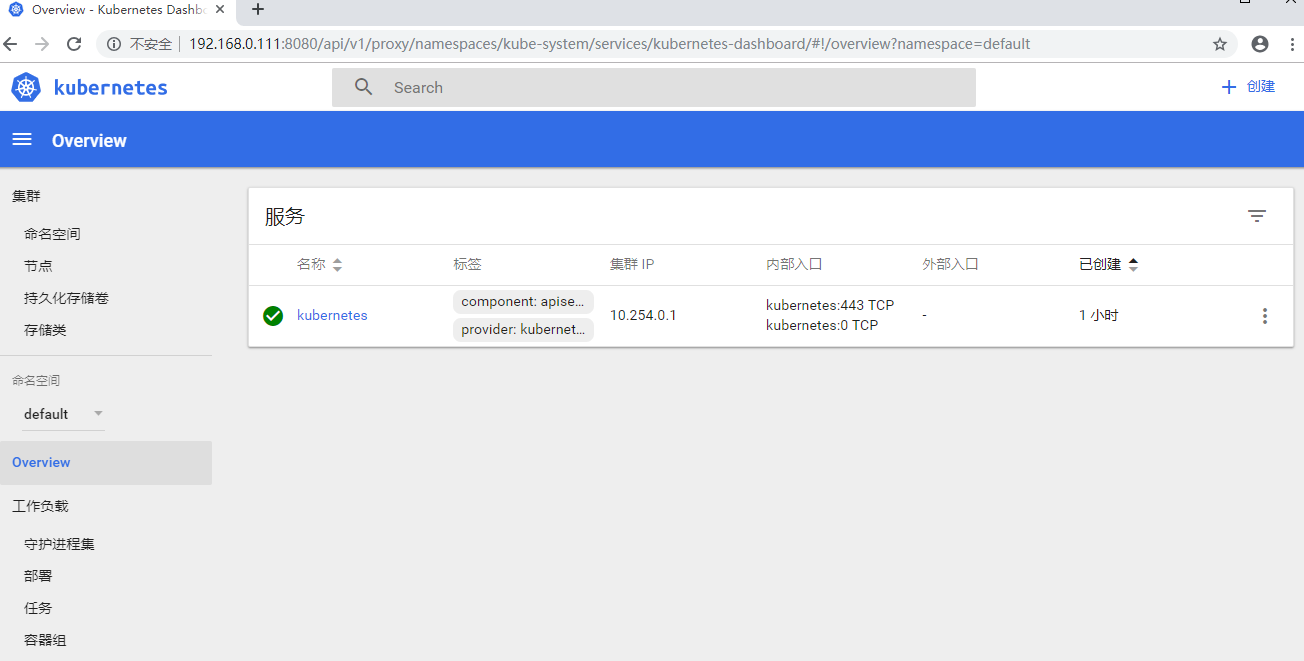
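flanneld reads its network settings from the etcd key `$FLANNEL_ETCD_PREFIX/config`, which in this guide is `/atomic.io/network/config`. The hypothetical helper `flannel_config_cmd` reads /etc/sysconfig/flanneld and prints a v2-style `etcdctl mk` command for that key, without running it. The `172.17.0.0/16` range is an assumed example, not a value taken from this guide. Pass your own range as the second argument.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (not run) the etcdctl v2 command that creates
# flannel's network config. Usage: flannel_config_cmd SYSCONFIG [CIDR]
# Assumption: 172.17.0.0/16 is only an example default range.
flannel_config_cmd() {
  local sysconfig="$1" network="${2:-172.17.0.0/16}"
  (
    . "$sysconfig"   # defines FLANNEL_ETCD_ENDPOINTS and FLANNEL_ETCD_PREFIX
    printf "etcdctl --endpoints %s mk %s/config '{\"Network\":\"%s\"}'\n" \
      "$FLANNEL_ETCD_ENDPOINTS" "$FLANNEL_ETCD_PREFIX" "$network"
  )
}

# Usage on the Master:
#   flannel_config_cmd /etc/sysconfig/flanneld
```

Printing the command instead of running it lets you confirm that the key matches the prefix configured on every host before anything is written to etcd.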

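6. The Kubernetes Dashboard UI

The central job of Kubernetes is unified management and scheduling of Docker container clusters. The cluster and its nodes are usually operated from the command line, which is inconvenient. A graphical UI makes the cluster much easier to manage and maintain.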

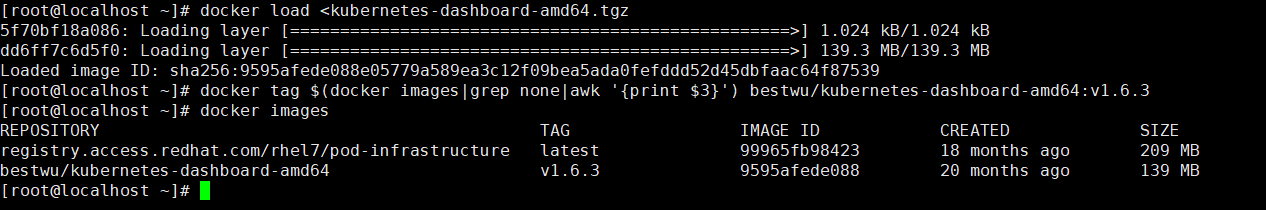

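Import two images on each Node in advance. The complete procedure for setting up the Kubernetes dashboard is as follows.

(1) Load the pod-infrastructure image and give the imported (untagged) image the expected name:

```shell
docker load < pod-infrastructure.tgz
docker tag $(docker images|grep none|awk '{print $3}') registry.access.redhat.com/rhel7/pod-infrastructure
```

(2) Load the dashboard image and rename it the same way:

```shell
docker load < kubernetes-dashboard-amd64.tgz
docker tag $(docker images|grep none|awk '{print $3}') bestwu/kubernetes-dashboard-amd64:v1.6.3
```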
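The `grep none` in the tag commands above matches any row that contains "none" anywhere. The commands also assume that the freshly loaded image is the only untagged one. If there are several, `docker tag` receives more than one ID and fails. A slightly stricter sketch follows. The hypothetical helper `untagged_image_ids` reads `docker images` table output and prints the IMAGE ID of rows whose REPOSITORY column is exactly `<none>`. Docker's own `docker images -q --filter dangling=true` lists untagged image IDs directly and is an alternative.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker images` output on stdin and print the
# IMAGE ID (3rd column) of rows whose REPOSITORY (1st column) is "<none>".
untagged_image_ids() {
  awk 'NR > 1 && $1 == "<none>" { print $3 }'   # NR > 1 skips the header row
}

# Usage (check that exactly one ID is printed before tagging):
#   docker images | untagged_image_ids
#   docker tag "$(docker images | untagged_image_ids)" bestwu/kubernetes-dashboard-amd64:v1.6.3
```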

Then, on the Master, create dashboard-controller.yaml. The numeric values marked below were missing from the source and are assumed from the dashboard's usual defaults:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
    spec:
      containers:
      - name: kubernetes-dashboard
        image: bestwu/kubernetes-dashboard-amd64:v1.6.3
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090        # assumed: the dashboard's HTTP port
        args:
        - --apiserver-host=http://192.168.0.111:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090               # assumed: same as containerPort
          initialDelaySeconds: 30    # assumed value
          timeoutSeconds: 30         # assumed value
```

Create dashboard-service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80           # assumed: value missing in the source
    targetPort: 9090   # must match the containerPort in dashboard-controller.yaml
```

Create the dashboard Deployment (and its pods) and the Service:

```shell
kubectl create -f dashboard-controller.yaml
kubectl create -f dashboard-service.yaml
```

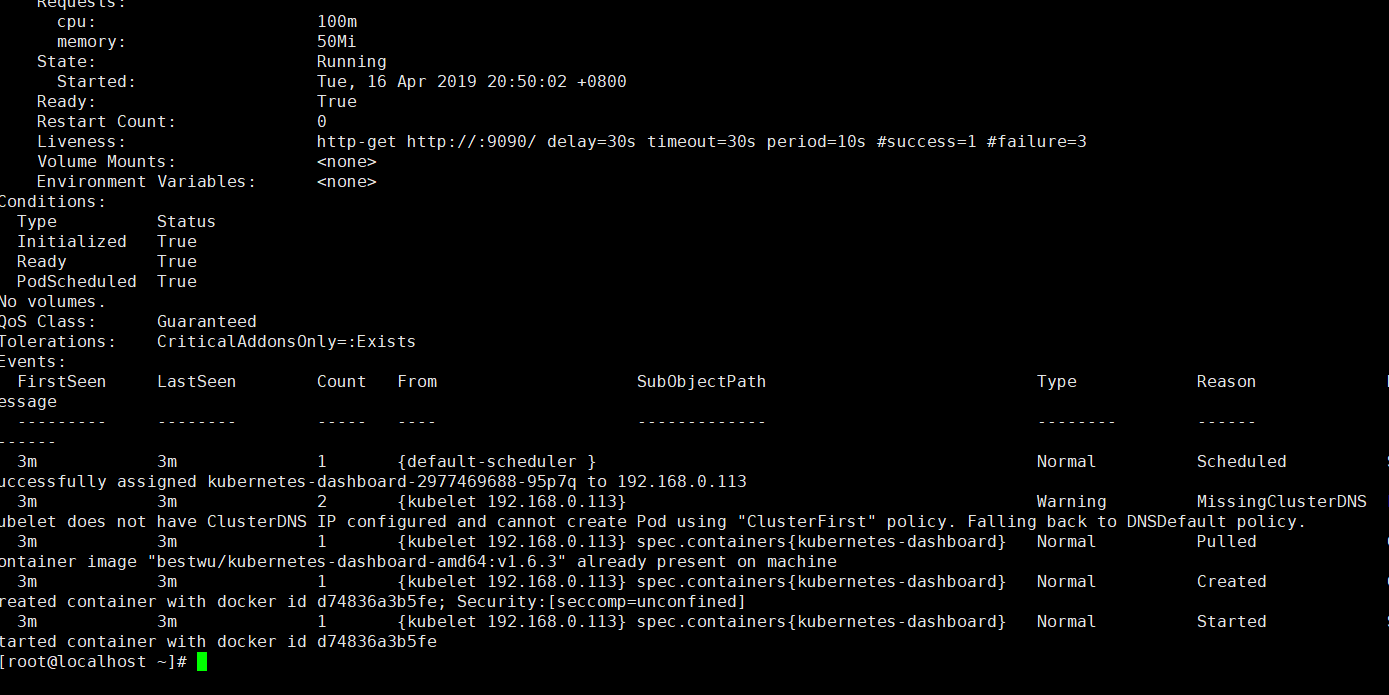
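After the Service exists, the dashboard can be reached through the apiserver's proxy. The sketch below assumes the insecure apiserver port 8080 configured earlier. It also assumes the legacy `/api/v1/proxy/...` path served by older apiservers such as the one these yum packages install. Newer releases use `/api/v1/namespaces/kube-system/services/kubernetes-dashboard/proxy/` instead. `dashboard_url` is a hypothetical helper that only builds the URL string.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the dashboard URL served via the apiserver proxy.
# Assumptions: insecure port 8080 and the legacy /api/v1/proxy path.
dashboard_url() {
  local master="$1" port="${2:-8080}"
  printf 'http://%s:%s/api/v1/proxy/namespaces/kube-system/services/kubernetes-dashboard/\n' \
    "$master" "$port"
}

dashboard_url 192.168.0.111
```

Open the printed URL in a browser that can reach the Master. The insecure port has no authentication, so keep it on a trusted network.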

After creation, inspect the Pods and the Service in detail:

```shell
kubectl get namespace
kubectl get deployment --all-namespaces
kubectl get svc --all-namespaces
kubectl get pods --all-namespaces
kubectl get pod -o wide --all-namespaces
kubectl describe service/kubernetes-dashboard --namespace="kube-system"
# The pod name below comes from the author's cluster; use the name shown by "kubectl get pods"
kubectl describe pod/kubernetes-dashboard-468712587-754dc --namespace="kube-system"
# Force-delete a stuck pod (the Deployment recreates it)
kubectl delete pod/kubernetes-dashboard-468712587-754dc --namespace="kube-system" --grace-period=0 --force
```

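If the pod-infrastructure image cannot be pulled because the Red Hat CA certificate /etc/rhsm/ca/redhat-uep.pem is missing, extract the certificate from the python-rhsm-certificates package:

```shell
wget http://mirror.centos.org/centos/7/os/x86_64/Packages/python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm
mkdir -p /etc/rhsm/ca   # tee cannot create the directory itself
rpm2cpio python-rhsm-certificates-1.19.10-1.el7_4.x86_64.rpm | cpio -iv --to-stdout ./etc/rhsm/ca/redhat-uep.pem | tee /etc/rhsm/ca/redhat-uep.pem
```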

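Notes: the rpm2cpio command converts an rpm package into a cpio archive.

cpio creates and restores archives. It can copy files into an archive or extract files from one. The options used above:

- -i: extract files from the archive (copy-in mode)

- -v: list each file as it is processed

- --to-stdout: write the extracted file to standard output instead of to disk (here it is piped into tee)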
