Hadoop Problem Collection (1)

References:

http://dataunion.org/22887.html

1. mapreduce_shuffle does not exist

Every job fails with:

    Container launch failed for container_1433170139587_0005_01_000002 : org.apache.hadoop.yarn.exceptions.InvalidAuxServiceException: The auxService:mapreduce_shuffle does not exist

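The cause is that YARN has no aux-service configured. Add the following to yarn-site.xml:

    <property>
      <name>yarn.nodemanager.aux-services</name>
      <value>mapreduce_shuffle</value>
    </property>

Although this property appeared to default to mapreduce_shuffle, that default did not seem to take effect, so it has to be declared explicitly.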
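Before restarting NodeManagers it can help to verify that the file really declares the shuffle service. The following is a minimal Python sketch (not part of Hadoop), assuming the usual configuration/property/name/value layout of a *-site.xml file; the function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def get_property(xml_text: str, name: str) -> Optional[str]:
    """Return the value of the first property called `name`, or None if absent."""
    root = ET.fromstring(xml_text)
    for prop in root.findall("property"):
        if prop.findtext("name", "").strip() == name:
            return (prop.findtext("value") or "").strip()
    return None


def has_shuffle_aux_service(yarn_site_xml: str) -> bool:
    """True if yarn.nodemanager.aux-services lists mapreduce_shuffle."""
    value = get_property(yarn_site_xml, "yarn.nodemanager.aux-services")
    if value is None:
        return False
    # The property is a comma-separated list of service names.
    return "mapreduce_shuffle" in [s.strip() for s in value.split(",")]


EXAMPLE = """<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
</configuration>"""

print(has_shuffle_aux_service(EXAMPLE))  # True
```

In practice you would pass the text of the yarn-site.xml the NodeManager actually reads; an empty configuration returns False.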

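2. YARN starts normally but the web UI cannot be opened

netstat -a | grep 8088 shows no process and the web UI cannot be opened, although the ResourceManager and NodeManager started normally. Solution:

Stop the firewall: service iptables stop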
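netstat on the RM host only tells you whether something is listening locally. To tell "not listening" apart from "blocked by a firewall", one option is to attempt a TCP connection to port 8088 both on the RM host and from another machine. A minimal Python sketch; the function name is hypothetical.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within `timeout` seconds."""
    try:
        # create_connection resolves the host and connects; the socket is closed on exit.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts and name-resolution failures.
        return False
```

For example, is_listening("127.0.0.1", 8088) run on the RM host versus is_listening with the RM's host name run on a client: True locally but False remotely points at the firewall.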

3. Running a Hive command

Running create table t2 as select * from t; does not launch any job:

    hive> create table t2 as select * from t;
    WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. tez, spark) or using Hive 1.X releases.
    Query ID = root_20161010171214_e714ba72-d6da-4699-96cb-8c2384fcdf54
    Total jobs = 3
    Launching Job 1 out of 3
    Number of reduce tasks is set to 0 since there's no reduce operator

The real cause was that YARN had not been started; starting YARN fixed it. Hive queries are actually executed as wrapped MapReduce jobs.

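4. HDFS command fails with a standby error

    [hdfs@WH0PRDBRP00AP0013 conf]$ hadoop fs -ls /usr
    ls: Operation category READ is not supported in state standby. Visit https://s.apache.org/sbnn-error

This happens because the HDFS client connected to the standby NameNode instead of the active one.

Fix: edit core-site.xml so it points to the currently active NameNode, or change it to the cluster (nameservice) name (to be completed).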

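5. Hive job fails

The error says the job runs as nobody, but it has to be run as root. After searching online, the cause turned out to be the LCE (LinuxContainerExecutor), which has the following restriction:

When LinuxContainerExecutor is used to run containers, jobs cannot be submitted as root. The container-executor source code checks the user, and contains this comment: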

    /**
     * Is the user a real user account?
     * Checks:
     * 1. Not root
     * 2. UID is above the minimum configured.
     * 3. Not in banned user list
     * Returns NULL on failure
     */
    struct passwd* check_user(const char *user)

The user check is performed during the init phase of org.apache.hadoop.mapreduce.job.
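To make the three checks in that comment concrete, here is a Python re-expression of the logic (an illustration, not the actual C code). The defaults, min.user.id = 1000 and banned users yarn, mapred, hdfs and bin, are assumptions based on container-executor's usual defaults; the real values come from container-executor.cfg on each NodeManager. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExecutorConfig:
    # Assumed defaults; real values are read from container-executor.cfg.
    min_user_id: int = 1000
    banned_users: frozenset = frozenset({"yarn", "mapred", "hdfs", "bin"})


def check_user(name: str, uid: int, cfg: ExecutorConfig = ExecutorConfig()) -> bool:
    """Mirror of the documented checks: not root, UID at or above the minimum, not banned."""
    if name == "root" or uid == 0:   # 1. Not root
        return False
    if uid < cfg.min_user_id:        # 2. UID below the configured minimum
        return False
    if name in cfg.banned_users:     # 3. In the banned user list
        return False
    return True
```

check_user("root", 0) returns False, which is why a job submitted as root is refused under the LCE.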

The container launch process is roughly:

resource localization → container launch → container run → resource cleanup

Solution:

That resolves it.

References:

http://community.cloudera.com/t5/Batch-Processing-and-Workflow/YARN-force-nobody-user-on-all-jobs-and-so-they-fail/td-p/26050

It sounds like YARN is running under the linux container executor which is used to provide secure containers or resource isolation (using cgroups). If you don't need these features or if this was enabled accidentally then you can probably fix the problem by unchecking "Always Use Linux Container Executor" in YARN configuration under Cloudera Manager. Or if you do need LCE, then one thing to check is that your local users (e.g. m.giusto) exist on all nodes. https://issues.apache.org/jira/browse/YARN-3252

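6. Hive job fails:

Unable to initialize user data in the local directories. Solution found:

On every DataNode, delete the data under usercache:

rm -rf /yarn/nm/usercache/*

That resolves it.

The likely cause is that after YARN was switched from cgroups-based management back to YARN's own management, it may validate the cached data of users who had previously run jobs.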
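The rm -rf above can also be scripted with a dry run first, which is safer when it has to be repeated on every node. A minimal Python sketch; the path /yarn/nm/usercache is the one used in this article (yours depends on yarn.nodemanager.local-dirs), the function name is hypothetical, and stopping the NodeManager before deleting is a precaution assumed here rather than a step from the original.

```python
import shutil
from pathlib import Path
from typing import List


def clear_usercache(usercache: str, dry_run: bool = True) -> List[str]:
    """Delete every per-user entry under a NodeManager usercache directory.

    With dry_run=True nothing is removed; the entries that would be deleted are returned.
    """
    root = Path(usercache)
    targets = sorted(root.iterdir()) if root.is_dir() else []
    if not dry_run:
        for entry in targets:
            if entry.is_dir() and not entry.is_symlink():
                shutil.rmtree(entry)
            else:
                entry.unlink()
    return [str(p) for p in targets]
```

Calling clear_usercache("/yarn/nm/usercache") first lists what would go; passing dry_run=False then removes it.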

References:

http://dataunion.org/22887.html

http://stackoverflow.com/questions/29397509/yarn-application-exited-with-exitcode-1000-not-able-to-initialize-user-directo

https://community.hortonworks.com/questions/11285/yarn-jobs-fails-with-not-able-to-initialize-user-d.html

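7. Changing the DataNode data directory

1. Stop the cluster.

2. Locate the DataNode data directory, e.g. /dfs/dn.

3. Create the new directory, e.g. /nfs/dn, and give it the same permissions as /dfs/dn.

4. Copy the data: cp -a /dfs/dn/* /nfs/dn (-a preserves ownership and permissions; a plain cp -r run as root would leave the copied files owned by root).

5. Run hdfs fsck to check for corrupt blocks.

6. Point dfs.datanode.data.dir at the new directory, then start the cluster.

7. Delete the old directory.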
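The copy step can be approximated in Python as a copy that keeps modes, timestamps and (when run as root) ownership, followed by a cheap check that both trees hold the same files with the same sizes before the old directory is deleted. This is a sketch with hypothetical function names, not a replacement for hdfs fsck.

```python
import os
import shutil
from pathlib import Path


def copy_datanode_dir(src: str, dst: str) -> None:
    """Copy src into dst, keeping modes and timestamps (copy2) and,
    when running as root, the original owner and group of every entry."""
    shutil.copytree(src, dst, symlinks=True, copy_function=shutil.copy2, dirs_exist_ok=True)
    if os.geteuid() == 0:
        src_root = Path(src)
        for s in [src_root, *src_root.rglob("*")]:
            st = s.lstat()
            os.lchown(Path(dst) / s.relative_to(src_root), st.st_uid, st.st_gid)


def same_tree(a: str, b: str) -> bool:
    """True if both trees contain the same relative file paths with identical sizes."""
    def listing(root: str):
        r = Path(root)
        return {(str(p.relative_to(r)), p.stat().st_size) for p in r.rglob("*") if p.is_file()}
    return listing(a) == listing(b)
```

dirs_exist_ok=True (Python 3.8+) lets the copy target the directory already created in step 3.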

Reference:

http://support.huawei.com/huaweiconnect/enterprise/thread-328729.html

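8. Hive does not support Chinese comments (a limitation of Hive itself)

The cause is that the tables in the metastore database use latin1: Hive's metastore creation script explicitly specifies character set = latin1. One attempted fix is to change the encoding of the MySQL database:

1. First stop the Hive metastore service.

2. Back up the tables in the hive database: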

    create table BUCKETING_COLS_BK as select * from BUCKETING_COLS;
    create table CDS_BK as select * from CDS;
    create table COLUMNS_V2_BK as select * from COLUMNS_V2;
    create table COMPACTION_QUEUE_BK as select * from COMPACTION_QUEUE;
    create table COMPLETED_TXN_COMPONENTS_BK as select * from COMPLETED_TXN_COMPONENTS;
    create table DATABASE_PARAMS_BK as select * from DATABASE_PARAMS;
    create table DBS_BK as select * from DBS;
    create table DB_PRIVS_BK as select * from DB_PRIVS;
    create table DELEGATION_TOKENS_BK as select * from DELEGATION_TOKENS;
    create table FUNCS_BK as select * from FUNCS;
    create table FUNC_RU_BK as select * from FUNC_RU;
    create table GLOBAL_PRIVS_BK as select * from GLOBAL_PRIVS;
    create table HIVE_LOCKS_BK as select * from HIVE_LOCKS;
    create table IDXS_BK as select * from IDXS;
    create table INDEX_PARAMS_BK as select * from INDEX_PARAMS;
    create table MASTER_KEYS_BK as select * from MASTER_KEYS;
    create table NEXT_COMPACTION_QUEUE_ID_BK as select * from NEXT_COMPACTION_QUEUE_ID;
    create table NEXT_LOCK_ID_BK as select * from NEXT_LOCK_ID;
    create table NEXT_TXN_ID_BK as select * from NEXT_TXN_ID;
    create table NOTIFICATION_LOG_BK as select * from NOTIFICATION_LOG;
    create table NOTIFICATION_SEQUENCE_BK as select * from NOTIFICATION_SEQUENCE;
    create table NUCLEUS_TABLES_BK as select * from NUCLEUS_TABLES;
    create table PARTITIONS_BK as select * from PARTITIONS;
    create table PARTITION_EVENTS_BK as select * from PARTITION_EVENTS;
    create table PARTITION_KEYS_BK as select * from PARTITION_KEYS;
    create table PARTITION_KEY_VALS_BK as select * from PARTITION_KEY_VALS;
    create table PARTITION_PARAMS_BK as select * from PARTITION_PARAMS;
    create table PART_COL_PRIVS_BK as select * from PART_COL_PRIVS;
    create table PART_COL_STATS_BK as select * from PART_COL_STATS;
    create table PART_PRIVS_BK as select * from PART_PRIVS;
    create table ROLES_BK as select * from ROLES;
    create table ROLE_MAP_BK as select * from ROLE_MAP;
    create table SDS_BK as select * from SDS;
    create table SD_PARAMS_BK as select * from SD_PARAMS;
    create table SEQUENCE_TABLE_BK as select * from SEQUENCE_TABLE;
    create table SERDES_BK as select * from SERDES;
    create table SERDE_PARAMS_BK as select * from SERDE_PARAMS;
    create table SKEWED_COL_NAMES_BK as select * from SKEWED_COL_NAMES;
    create table SKEWED_COL_VALUE_LOC_MAP_BK as select * from SKEWED_COL_VALUE_LOC_MAP;
    create table SKEWED_STRING_LIST_BK as select * from SKEWED_STRING_LIST;
    create table SKEWED_STRING_LIST_VALUES_BK as select * from SKEWED_STRING_LIST_VALUES;
    create table SKEWED_VALUES_BK as select * from SKEWED_VALUES;
    create table SORT_COLS_BK as select * from SORT_COLS;
    create table TABLE_PARAMS_BK as select * from TABLE_PARAMS;
    create table TAB_COL_STATS_BK as select * from TAB_COL_STATS;
    create table TBLS_BK as select * from TBLS;
    create table TBL_COL_PRIVS_BK as select * from TBL_COL_PRIVS;
    create table TBL_PRIVS_BK as select * from TBL_PRIVS;
    create table TXNS_BK as select * from TXNS;
    create table TXN_COMPONENTS_BK as select * from TXN_COMPONENTS;
    create table TYPES_BK as select * from TYPES;
    create table TYPE_FIELDS_BK as select * from TYPE_FIELDS;
    create table VERSION_BK as select * from VERSION;
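Writing 53 statements by hand is error-prone; the same list can be generated from the table names, for example taken from the output of SHOW TABLES in the hive database. A minimal Python sketch with a hypothetical function name; it skips tables that already carry the backup suffix, so a second run does not back up the backups.

```python
from typing import Iterable, List


def backup_statements(tables: Iterable[str], suffix: str = "_BK") -> List[str]:
    """Build one 'create table X_BK as select * from X;' statement per table."""
    return [
        f"create table {t}{suffix} as select * from {t};"
        for t in tables
        if not t.endswith(suffix)  # do not back up earlier backups
    ]


if __name__ == "__main__":
    for stmt in backup_statements(["DBS", "TBLS", "DBS_BK"]):
        print(stmt)
```

Note that create table ... as select in MySQL copies the rows but not the indexes or foreign keys, which is acceptable for a throwaway backup.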

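But this leads to the following problem:

I then renamed the backup tables back and found that the stored data was still garbled, which suggests Hive itself only supports latin1. Moreover, after the change, previously created Hive tables could no longer be dropped, among other problems. So do not make this change!

9. MR job fails (the Hive job hangs for a long time and then fails)

The job stayed stuck at "2017-02-15 02:58:53 Starting to launch local task to process map join; maximum memory = 1908932608" for a long time before failing. The execution log it points to (Execution log at: /tmp/root/root_20170215145252_3c3577ca-f368-40c6-b97c-3659ea2e52d8.log) contained nothing important.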

    Execution log at: /tmp/root/root_20170215145252_3c3577ca-f368-40c6-b97c-3659ea2e52d8.log
    2017-02-15 02:58:53 Starting to launch local task to process map join; maximum memory = 1908932608
    java.lang.NullPointerException
        at com.dy.hive.rcas.pro.GetCityName.evaluate(GetCityName.java:34)
        at sun.reflect.GeneratedMethodAccessor14.invoke(Unknown Source)
    ..............
    java.sql.SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://10.80.2.106:10000: java.net.SocketException: Connection reset
        at org.apache.hive.jdbc.HiveConnection.openTransport(HiveConnection.java:219)
    ..............
    Caused by: org.apache.thrift.transport.TTransportException: java.net.SocketException: Connection reset
        at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:129)
        ... 27 more
    Caused by: java.net.SocketException: Connection reset
        at java.net.SocketInputStream.read(SocketInputStream.java:210)
        ... 32 more
    java.sql.SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://10.80.2.106:10000: java.net.SocketException: Connection reset
        at org.apache.hive.jdbc.HiveConnection.openTransport(HiveConnection.java:219)
        at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:167)
    ..............
    Caused by: org.apache.thrift.transport.TTransportException: java.net.SocketException: Connection reset
        at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:129)
        at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86)
        ... 29 more
    Caused by: java.net.SocketException: Connection reset
        at java.net.SocketInputStream.read(SocketInputStream.java:210)
        ... 34 more
    java.sql.SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://10.80.2.106:10000: java.net.SocketException: Connection reset
        at org.apache.hive.jdbc.HiveConnection.openTransport(HiveConnection.java:219)
        at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:167)
    ..............
    Caused by: org.apache.thrift.transport.TTransportException: java.net.SocketException: Connection reset
        at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:129)
        at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86)
        ... 27 more
    Caused by: java.net.SocketException: Connection reset
        at java.net.SocketInputStream.read(SocketInputStream.java:210)
        ... 32 more
    java.sql.SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://10.80.2.106:10000: java.net.SocketException: Connection reset
        at org.apache.hive.jdbc.HiveConnection.openTransport(HiveConnection.java:219)
        at org.apache.hive.jdbc.HiveConnection.<init>(HiveConnection.java:167)
    ..............
    Caused by: org.apache.thrift.transport.TTransportException: java.net.SocketException: Connection reset
        at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:129)
        ... 29 more
    Caused by: java.net.SocketException: Connection reset
        at java.net.SocketInputStream.read(SocketInputStream.java:210)
        ... 34 more
    java.sql.SQLException: Could not open client transport with JDBC Uri: jdbc:hive2://10.80.2.106:10000: java.net.SocketException: Connection reset
        at org.apache.hive.jdbc.HiveConnection.openTransport(HiveConnection.java:219)
    Caused by: org.apache.thrift.transport.TTransportException: java.net.SocketException: Connection reset
        at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:129)
        ... 27 more
    Caused by: java.net.SocketException: Connection reset
        at java.net.SocketInputStream.read(SocketInputStream.java:210)
        ... 32 more

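It looks like the connection to hiveserver2 is failing. Test with beeline:

Indeed, hiveserver2 cannot be reached.

Check the hiveserver2 timeout settings:

Further investigation showed that hiveserver2 kept logging errors: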

    [HiveServer2-Background-Pool: Thread-2147]: Launching Job 1 out of 1
    2017-02-15 16:40:52,275 INFO org.apache.hadoop.hive.ql.Driver: [HiveServer2-Background-Pool: Thread-2147]: Starting task [Stage-1:MAPRED] in serial mode
    2017-02-15 16:40:52,276 INFO org.apache.hadoop.hive.ql.exec.Task: [HiveServer2-Background-Pool: Thread-2147]: Number of reduce tasks is set to 0 since there's no reduce operator
    2017-02-15 16:40:52,289 INFO hive.ql.Context: [HiveServer2-Background-Pool: Thread-2147]: New scratch dir is hdfs://bqdpm1:8020/tmp/hive/hive/22422b3e-dbd5-4597-a47f-77cb3db77335/hive_2017-02-15_16-40-52_166_1409282555491854976-187
    2017-02-15 16:40:52,363 INFO org.apache.hadoop.hive.ql.exec.mr.ExecDriver: [HiveServer2-Background-Pool: Thread-2147]: Using org.apache.hadoop.hive.ql.io.CombineHiveInputFormat
    2017-02-15 16:40:52,363 INFO org.apache.hadoop.hive.ql.exec.mr.ExecDriver: [HiveServer2-Background-Pool: Thread-2147]: adding libjars: file:///opt/bqjr_extlib/hive/hive-function.jar,file:///opt/bqjr_extlib/hive/scala-library-2.10.6.jar
    2017-02-15 16:40:52,363 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: Processing alias t1
    2017-02-15 16:40:52,364 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: Adding input file hdfs://bqdpm1:8020/user/hive/S1/CODE_LIBRARY
    2017-02-15 16:40:52,364 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: Content Summary not cached for hdfs://bqdpm1:8020/user/hive/S1/CODE_LIBRARY
    2017-02-15 16:40:52,365 INFO hive.ql.Context: [HiveServer2-Background-Pool: Thread-2147]: New scratch dir is hdfs://bqdpm1:8020/tmp/hive/hive/22422b3e-dbd5-4597-a47f-77cb3db77335/hive_2017-02-15_16-40-52_166_1409282555491854976-187
    2017-02-15 16:40:52,380 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-2147]: <PERFLOG method=serializePlan from=org.apache.hadoop.hive.ql.exec.Utilities>
    2017-02-15 16:40:52,380 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: Serializing MapWork via kryo
    2017-02-15 16:40:52,413 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-2147]: </PERFLOG method=serializePlan start=1487148052380 end=1487148052413 duration=33 from=org.apache.hadoop.hive.ql.exec.Utilities>
    2017-02-15 16:40:52,421 ERROR org.apache.hadoop.hive.ql.exec.mr.ExecDriver: [HiveServer2-Background-Pool: Thread-2147]: yarn
    2017-02-15 16:40:52,433 INFO org.apache.hadoop.yarn.client.RMProxy: [HiveServer2-Background-Pool: Thread-2147]: Connecting to ResourceManager at bqdpm1/10.80.2.105:8032
    2017-02-15 16:40:52,455 INFO org.apache.hadoop.yarn.client.RMProxy: [HiveServer2-Background-Pool: Thread-2147]: Connecting to ResourceManager at bqdpm1/10.80.2.105:8032
    2017-02-15 16:40:52,456 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: PLAN PATH = hdfs://bqdpm1:8020/tmp/hive/hive/22422b3e-dbd5-4597-a47f-77cb3db77335/hive_2017-02-15_16-40-52_166_1409282555491854976-187/-mr-10004/b7762b50-90d0-4773-a638-3a2ea799fabb/map.xml
    2017-02-15 16:40:52,456 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: PLAN PATH = hdfs://bqdpm1:8020/tmp/hive/hive/22422b3e-dbd5-4597-a47f-77cb3db77335/hive_2017-02-15_16-40-52_166_1409282555491854976-187/-mr-10004/b7762b50-90d0-4773-a638-3a2ea799fabb/reduce.xml
    2017-02-15 16:40:52,456 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: ***************non-local mode***************
    2017-02-15 16:40:52,456 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: local path = hdfs://bqdpm1:8020/tmp/hive/hive/22422b3e-dbd5-4597-a47f-77cb3db77335/hive_2017-02-15_16-40-52_166_1409282555491854976-187/-mr-10004/b7762b50-90d0-4773-a638-3a2ea799fabb/reduce.xml
    2017-02-15 16:40:52,456 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: Open file to read in plan: hdfs://bqdpm1:8020/tmp/hive/hive/22422b3e-dbd5-4597-a47f-77cb3db77335/hive_2017-02-15_16-40-52_166_1409282555491854976-187/-mr-10004/b7762b50-90d0-4773-a638-3a2ea799fabb/reduce.xml
    2017-02-15 16:40:52,458 INFO org.apache.hadoop.hive.ql.exec.Utilities: [HiveServer2-Background-Pool: Thread-2147]: File not found: File does not exist: /tmp/hive/hive/22422b3e-dbd5-4597-a47f-77cb3db77335/hive_2017-02-15_16-40-52_166_1409282555491854976-187/-mr-10004/b7762b50-90d0-4773-a638-3a2ea799fabb/reduce.xml
        at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:66)
        at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:56)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocationsUpdateTimes(FSNamesystem.java:1934)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocationsInt(FSNamesystem.java:1875)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocations(FSNamesystem.java:1855)
        at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.getBlockLocations(FSNamesystem.java:1827)
        at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.getBlockLocations(NameNodeRpcServer.java:566)
        at org.apache.hadoop.hdfs.server.namenode.AuthorizationProviderProxyClientProtocol.getBlockLocations(AuthorizationProviderProxyClientProtocol.java:88)
        at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.getBlockLocations(ClientNamenodeProtocolServerSideTranslatorPB.java:361)
        at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)
        at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)
        at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)
        at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)
        at java.security.AccessController.doPrivileged(Native Method)
        at javax.security.auth.Subject.doAs(Subject.java:415)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)
        at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

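This problem is still unresolved for now…

Latest finding:

I had noticed long ago that the task kept creating jobs. Looking at the logs again today, I found that org.apache.hive.spark.client.SparkClientImpl was being used. The temporary UDF defined for this query was written in plain Java and did not use Spark, but the task also referenced other UDFs written with Spark, so I suspected the temporary UDF. Checking the temporary UDF a colleague had written, I found that it ran a JDBC query; that means a query job is created for every row, hence the endless job creation.

Solution: change the UDF so that the data it used to fetch through JDBC is loaded directly from HDFS into memory, and the comparison is done in memory.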
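This is the classic "load once, look up many times" pattern. The real UDF is Java (GetCityName in the stack trace above); the Python sketch below only illustrates the idea. It assumes the lookup table has been exported to a tab-separated code/name file that the task can read; the class name and file layout are hypothetical. It also returns None for a None input, which avoids the kind of NullPointerException seen in that trace.

```python
import csv
from typing import Dict, Optional


class CityNameLookup:
    """Loads a code -> name table once, then serves every row from memory."""

    def __init__(self, path: str):
        self.path = path
        self.loads = 0  # how many times the file has been read
        self._table: Optional[Dict[str, str]] = None

    def _load(self) -> Dict[str, str]:
        self.loads += 1
        with open(self.path, newline="", encoding="utf-8") as f:
            return {row[0]: row[1] for row in csv.reader(f, delimiter="\t") if len(row) >= 2}

    def evaluate(self, code: Optional[str]) -> Optional[str]:
        if code is None:  # guard against null input rows
            return None
        if self._table is None:  # lazy one-time load instead of one query per row
            self._table = self._load()
        return self._table.get(code)
```

However many rows call evaluate, the file is read once, so no per-row connection or job is created.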

10. Viewing YARN logs

This happens when log aggregation is not enabled or has not finished yet.

11. Hive cannot launch jobs

2016-11-17 18:02:21,081 FATAL org.apache.hadoop.yarn.server.resourcemanager.ResourceManager: Error starting ResourceManager

org.apache.hadoop.yarn.exceptions.YarnRuntimeException: Invalid resource scheduler memory allocation configuration, yarn.scheduler.minimum-allocation-mb=1024, yarn.scheduler.maximum-allocation-mb=512, min should equal greater than 0, max should be no smaller than min.

yarn.scheduler.minimum-allocation-mb=1024

yarn.scheduler.maximum-allocation-mb=512

The maximum allocation is smaller than the minimum allocation, which is a misconfiguration. Set the maximum to 60% of physical memory.
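A quick sanity check for these two settings before restarting the ResourceManager, sketched in Python. The rule (minimum greater than 0, maximum not smaller than minimum) follows the error message above; the 60% figure is this article's rule of thumb, not a YARN default. The function name is hypothetical.

```python
from typing import List, Tuple


def check_scheduler_memory(min_mb: int, max_mb: int, physical_mb: int,
                           fraction: float = 0.6) -> Tuple[List[str], int]:
    """Return (problems, suggested_max_mb) for yarn.scheduler.{minimum,maximum}-allocation-mb."""
    problems = []
    if min_mb <= 0:
        problems.append(f"minimum-allocation-mb must be > 0 (got {min_mb})")
    if max_mb < min_mb:
        problems.append(
            f"maximum-allocation-mb ({max_mb}) is smaller than minimum-allocation-mb ({min_mb})"
        )
    suggested = int(physical_mb * fraction)  # author's 60%-of-RAM rule of thumb
    return problems, suggested
```

With the values from the log (1024 and 512) on an 8 GB host, one problem is reported and the suggested maximum is 4915 MB.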

12. A Hive table cannot be dropped

Dropping a Hive table fails with a foreign key error. The cause is unknown; it may be related to having changed the character set of the Hive metastore database.

    java.sql.BatchUpdateException: Cannot delete or update a parent row: a foreign key constraint fails ("hive"."TABLE_PARAMS_ORG", CONSTRAINT "TABLE_PARAMS_FK1" FOREIGN KEY ("TBL_ID") REFERENCES "TBLS" ("TBL_ID"))
        at com.mysql.jdbc.SQLError.createBatchUpdateException(SQLError.java:1158)
        at com.mysql.jdbc.PreparedStatement.executeBatchSerially(PreparedStatement.java:1773)

1. Find the tables that have foreign keys referencing TBLS:

    SELECT C.TABLE_SCHEMA,
           C.REFERENCED_TABLE_NAME,
           C.REFERENCED_COLUMN_NAME,
           C.TABLE_NAME,
           C.COLUMN_NAME,
           C.CONSTRAINT_NAME,
           T.TABLE_COMMENT,
           R.UPDATE_RULE,
           R.DELETE_RULE
    FROM INFORMATION_SCHEMA.KEY_COLUMN_USAGE C
    JOIN INFORMATION_SCHEMA.TABLES T
      ON T.TABLE_NAME = C.TABLE_NAME
    JOIN INFORMATION_SCHEMA.REFERENTIAL_CONSTRAINTS R
      ON R.TABLE_NAME = C.TABLE_NAME
     AND R.CONSTRAINT_NAME = C.CONSTRAINT_NAME
     AND R.REFERENCED_TABLE_NAME = C.REFERENCED_TABLE_NAME
    WHERE C.REFERENCED_TABLE_NAME = 'TBLS'
      AND C.TABLE_SCHEMA = 'hive';

2. Find the rows that depend on S1.USER_INFO in TBLS:

    mysql> SELECT
        -> X.NAME DB_NAME,
        -> A.TBL_ID,
        -> A.TBL_NAME,
        -> A.TBL_TYPE,
        -> B.ORIG_TBL_ID IDXS,
        -> C.TBL_ID PARTITIONS,
        -> D.TBL_ID PARTITION_KEYS,
        -> E.TBL_ID TABLE_PARAMS_ORG,
        -> F.TBL_ID TAB_COL_STATS,
        -> G.TBL_ID TBL_COL_PRIVS,
        -> H.TBL_ID TBL_PRIVS
        -> FROM TBLS A LEFT JOIN DBS X ON A.DB_ID=X.DB_ID
        -> LEFT JOIN IDXS B ON (A.TBL_ID=B.ORIG_TBL_ID)
        -> LEFT JOIN PARTITIONS C ON (A.TBL_ID=C.TBL_ID)
        -> LEFT JOIN PARTITION_KEYS D ON (A.TBL_ID=D.TBL_ID)
        -> LEFT JOIN TABLE_PARAMS_ORG E ON (A.TBL_ID=E.TBL_ID)
        -> LEFT JOIN TAB_COL_STATS F ON (A.TBL_ID=F.TBL_ID)
        -> LEFT JOIN TBL_COL_PRIVS G ON (A.TBL_ID=G.TBL_ID)
        -> LEFT JOIN TBL_PRIVS H ON (A.TBL_ID=H.TBL_ID)
        -> WHERE A.TBL_NAME='USER_INFO';
    +---------+--------+-----------+---------------+------+------------+----------------+------------------+---------------+---------------+-----------+
    | DB_NAME | TBL_ID | TBL_NAME  | TBL_TYPE      | IDXS | PARTITIONS | PARTITION_KEYS | TABLE_PARAMS_ORG | TAB_COL_STATS | TBL_COL_PRIVS | TBL_PRIVS |
    +---------+--------+-----------+---------------+------+------------+----------------+------------------+---------------+---------------+-----------+
    | s1      |  14464 | user_info | MANAGED_TABLE | NULL | NULL       | NULL           |            14464 | NULL          | NULL          |     14464 |
    | mmt     |  13517 | user_info | MANAGED_TABLE | NULL | NULL       | NULL           |            13517 | NULL          | NULL          |     13517 |
    | s2      |  13641 | user_info | MANAGED_TABLE | NULL | NULL       | NULL           |            13641 | NULL          | NULL          |     13641 |
    | s3      |  14399 | user_info | MANAGED_TABLE | NULL | NULL       | NULL           |            14399 | NULL          | NULL          |     14399 |
    +---------+--------+-----------+---------------+------+------------+----------------+------------------+---------------+---------------+-----------+

Two tables hold dependent rows: TABLE_PARAMS_ORG and TBL_PRIVS.

Delete the rows in these two tables first, then the row in TBLS.

delete from TABLE_PARAMS_ORG where TBL_ID=14464;

delete from TBL_PRIVS where TBL_ID=14464;

delete from TBLS where TBL_ID=14464;
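The two steps above (see which child tables have rows, then delete children before the parent) can be sketched in Python: given one result row of the diagnostic query as a dict, it emits the DELETE statements in a safe order. The function is hypothetical and only covers the child tables that query checks; a table with rows in PARTITIONS would first need that table's own child rows removed, which this sketch does not handle. Back up the metastore database before running anything like this.

```python
from typing import Dict, List, Optional

# Child tables checked by the diagnostic query, with the column that references TBLS.TBL_ID.
CHILD_FK: Dict[str, str] = {
    "IDXS": "ORIG_TBL_ID",
    "PARTITIONS": "TBL_ID",
    "PARTITION_KEYS": "TBL_ID",
    "TABLE_PARAMS_ORG": "TBL_ID",
    "TAB_COL_STATS": "TBL_ID",
    "TBL_COL_PRIVS": "TBL_ID",
    "TBL_PRIVS": "TBL_ID",
}


def cleanup_statements(row: Dict[str, Optional[int]]) -> List[str]:
    """Build child-first DELETE statements for one TBLS row of the diagnostic query."""
    tbl_id = int(row["TBL_ID"])  # int() keeps anything but a number out of the SQL
    stmts = [
        f"delete from {table} where {col}={tbl_id};"
        for table, col in CHILD_FK.items()
        if row.get(table) is not None  # NULL in the query result means no dependent row
    ]
    stmts.append(f"delete from TBLS where TBL_ID={tbl_id};")  # parent row last
    return stmts
```

For the s1 row (TBL_ID 14464) this yields the same three statements as above, in the same order.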

13. Hive cannot connect to the metastore

    Exception in thread "main" java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.ql.metadata.SessionHiveMetaStoreClient
        at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1550)
        at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:86)

The configuration in hive-site.xml:

      <value>root</value>
      <description>Username to use against metastore database</description>
    </property>
    # <property>
    #   <name>hive.metastore.uris</name>
    #   <value>thrift://node1:9083</value>
    # </property>

To use a metastore, Hive needs hive.metastore.uris to be configured in hive-site.xml:

    <property>
      <name>hive.metastore.local</name>
      <!-- do not use a local database -->
      <value>false</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionURL</name>
      <!-- database connection string -->
      <value>jdbc:mysql://yangxw:3306/hive_meta</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionDriverName</name>
      <!-- database driver -->
      <value>com.mysql.jdbc.Driver</value>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionUserName</name>
      <value>hive</value>
      <!-- database user name -->
      <description>username to use against metastore database</description>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionPassword</name>
      <!-- database password -->
      <value>root</value>
      <description>password to use against metastore database</description>
    </property>
    <property>
      <name>javax.jdo.option.ConnectionUserName</name>
      <!-- duplicate of the ConnectionUserName entry above; keep only one -->
      <value>root</value>
      <description>Username to use against metastore database</description>
    </property>
    <property>
      <name>hive.metastore.uris</name>
      <!-- metastore Thrift address and port -->
      <value>thrift://node1:9083</value>
    </property>

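Then initialize the metastore schema in MySQL:

schematool -dbType mysql -initSchema

Then start the metastore service:

nohup bin/hive --service metastore -p 9083 >.metastore_log &

Looking closely at hive-site.xml, I found that the comments had been written with #, whereas XML comments must be written as `<!-- -->`. I had not intended to use the metastore at all, but because of the wrong comment syntax hive.metastore.uris took effect, and since the metastore service was not running, Hive failed. Either start the metastore service or properly comment out the hive.metastore.uris element.

In hive-site.xml, # does not comment anything out: it is treated as plain text and ignored, so the elements after it are still parsed! Because hive-site.xml is an XML file, comments must use `<!-- -->`.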
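The difference is easy to demonstrate with Python's standard XML parser: text after # is ordinary character data, so the property inside it is still parsed, while a real XML comment is dropped. A minimal sketch; the second, properly commented-out property is only an illustration.

```python
import xml.etree.ElementTree as ET

SITE = """<configuration>
# <property>
#   <name>hive.metastore.uris</name>
#   <value>thrift://node1:9083</value>
# </property>
<!-- <property>
  <name>hive.metastore.warehouse.dir</name>
  <value>/user/hive/warehouse</value>
</property> -->
</configuration>"""


def defined_properties(xml_text: str) -> dict:
    """Map property name -> value for every property element the parser actually sees."""
    root = ET.fromstring(xml_text)
    return {p.findtext("name"): p.findtext("value") for p in root.iter("property")}


print(defined_properties(SITE))
# {'hive.metastore.uris': 'thrift://node1:9083'}
```

The "#"-prefixed property survives, exactly as Hive saw it; only the one wrapped in a real comment disappears.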

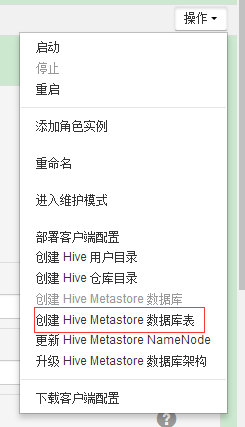

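14. Dropping and recreating the Hive metastore database in CDH

Because the metastore database encoding had been changed earlier, some tables were missing data, so I dropped the metastore database on the test cluster.

There are two ways to rebuild the Hive metastore database:

1) Manually

<1> Drop the metastore database in MySQL.

<2> Run ./schematool -dbType mysql -initSchema to create it:

    SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]
    Metastore connection URL: jdbc:derby:;databaseName=metastore_db;create=true
    Metastore Connection Driver : org.apache.derby.jdbc.EmbeddedDriver
    Metastore connection User: APP
    Starting metastore schema initialization to 1.1.0
    Initialization script hive-schema-1.1.0.mysql.sql
    Error: Syntax error: Encountered "<EOF>" at line 1, column 64. (state=42X01,code=30000)
    org.apache.hadoop.hive.metastore.HiveMetaException: Schema initialization FAILED! Metastore state would be inconsistent !!

However, CDH manages hive-site.xml itself, so the configuration file contains none of the metastore database settings (host, port, database name, user name, password and so on), and this command fails: note that it fell back to the embedded Derby URL shown above. Instead, create the tables manually in MySQL by running /opt/cloudera/parcels/CDH/lib/hive/scripts/metastore/upgrade/mysql/hive-schema-1.1.0.mysql.sql:

mysql> create database hive charset utf8;

mysql> use hive;

mysql> source /opt/cloudera/parcels/CDH/lib/hive/scripts/metastore/upgrade/mysql/hive-schema-1.1.0.mysql.sql

Note: the script must be run from within /opt/cloudera/parcels/CDH/lib/hive/scripts/metastore/upgrade/mysql/ (start the mysql client in that directory), because it references another SQL file in the same directory.

Running this file often produces syntax errors; alternatively, paste the file's contents directly into the mysql console (if errors occur, run it a few more times). When it finishes there should be 53 tables.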

2) Via CDH

First drop the hive database in MySQL, then create the hive database again.

If the hive database has not been created, this error is reported:

    Exception in thread "main" java.lang.RuntimeException: com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Unknown database 'hive'
        at com.cloudera.cmf.service.hive.HiveMetastoreDbUtil.countTables(HiveMetastoreDbUtil.java:201)
        at com.cloudera.cmf.service.hive.HiveMetastoreDbUtil.printTableCount(HiveMetastoreDbUtil.java:281)
        at com.cloudera.cmf.service.hive.HiveMetastoreDbUtil.main(HiveMetastoreDbUtil.java:331)
    Caused by: com.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: Unknown database 'hive'
        at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
        at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57)
        at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
        at java.lang.reflect.Constructor.newInstance(Constructor.java:526)
        at com.mysql.jdbc.Util.handleNewInstance(Util.java:404)
        at com.mysql.jdbc.Util.getInstance(Util.java:387)

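15. The HDFS client points at the local file system!

    [root@WH0PRDBRP00AP0013 ogglog]# hdfs dfs -ls /
    Warning: fs.defaultFS is not set when running "ls" command.
    Found 30 items
    -rw-r--r--   1 root root          0 2016-12-29 16:48 /.autofsck
    -rw-r--r--   1 root root          0 2016-07-27 10:03 /.autorelabel
    drwxr-xr-x   - root root       4096 2016-07-15 14:41 /back
    dr-xr-xr-x   - root root       4096 2016-11-15 03:47 /bin
    dr-xr-xr-x   - root root       4096 2016-07-15 15:03 /boot
    drwxr-xr-x   - root root       4096 2013-11-23 08:56 /cgroup
    drwxr-xr-x   - root root       3960 2016-12-29 16:48 /dev
    drwxr-xr-x   - root root      12288 2017-02-17 16:49 /etc

Cause:

Although this node belongs to the CDH cluster, it has no HDFS gateway role. Its configuration had originally been obtained by copying /opt/parcels, which included the fs.defaultFS setting. After new machines were added recently, the configuration was redistributed; since this machine has no DATANODE role, the redistributed configuration files did not set fs.defaultFS, so the default became the local file system!

Solution:

Copy the configuration files from another node to this node.

Copy all files under /opt/cloudera/parcels/CDH/lib/hadoop/etc/hadoop on another node into the same directory on this node.

Note: if this is the local machine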
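Why the client silently lists the local root: when fs.defaultFS is not configured, Hadoop falls back to its built-in default file:///. The Python sketch below models that resolution for a single core-site.xml (it ignores the other configuration layers Hadoop merges); the function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Optional

HADOOP_DEFAULT_FS = "file:///"  # built-in default of fs.defaultFS (core-default.xml)


def effective_default_fs(core_site_xml: Optional[str]) -> str:
    """Return fs.defaultFS from a core-site.xml string, or the local-FS default if unset."""
    if core_site_xml:
        root = ET.fromstring(core_site_xml)
        for prop in root.iter("property"):
            if (prop.findtext("name") or "").strip() == "fs.defaultFS":
                value = (prop.findtext("value") or "").strip()
                if value:
                    return value
    return HADOOP_DEFAULT_FS
```

A redistributed core-site.xml without the property therefore resolves to file:///, which is exactly the local listing shown above.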

16. Hive cannot run show create table; the metastore reports errors

show create table XX hangs, and the metastore logs:

    2017-02-19 11:57:15,816 INFO org.apache.hadoop.hive.metastore.HiveMetaStore: [pool-4-thread-106]: 106: source:10.80.2.106 drop_table : db=AF tbl=AF_TRIGGER_NY
    2017-02-19 11:57:15,816 INFO org.apache.hadoop.hive.metastore.HiveMetaStore.audit: [pool-4-thread-106]: ugi=root ip=10.80.2.106 cmd=source:10.80.2.106 drop_table : db=AF tbl=AF_TRIGGER_NY
    2017-02-19 11:57:15,821 ERROR org.apache.hadoop.hdfs.KeyProviderCache: [pool-4-thread-106]: Could not find uri with key [dfs.encryption.key.provider.uri] to create a keyProvider !!
    2017-02-19 11:57:15,858 ERROR org.apache.hadoop.hive.metastore.RetryingHMSHandler: [pool-4-thread-106]: Retrying HMSHandler after 2000 ms (attempt 3 of 10) with error: javax.jdo.JDODataStoreException: Exception thrown flushing changes to datastore
        at org.datanucleus.api.jdo.NucleusJDOHelper.getJDOExceptionForNucleusException(NucleusJDOHelper.java:451)
        at org.datanucleus.api.jdo.JDOTransaction.commit(JDOTransaction.java:165)
        at org.apache.hadoop.hive.metastore.ObjectStore.commitTransaction(ObjectStore.java:512)
        at sun.reflect.GeneratedMethodAccessor16.invoke(Unknown Source)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:606)

The JDO database operation failed; the trace shows it going through DataNucleus (NucleusJDOHelper).

    2017-02-22 12:08:31,982 INFO org.apache.hadoop.hive.metastore.HiveMetaStore: [pool-7-thread-195]: 195: source:10.80.2.67 add_partition : db=cloudera_manager_metastore_canary_test_db_hive_hivemetastore_ac86652cb39c742bde00ae73bc9ce531 tbl=CM_TEST_TABLE
    ................................
    2017-02-22 12:08:32,166 WARN DataNucleus.Query: [pool-7-thread-195]: Query for candidates of org.apache.hadoop.hive.metastore.model.MTableColumnPrivilege and subclasses resulted in no possible candidates
    Required table missing : "`TBL_COL_PRIVS`" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.autoCreateTables"
    org.datanucleus.store.rdbms.exceptions.MissingTableException: Required table missing : "`TBL_COL_PRIVS`" in Catalog "" Schema "". DataNucleus requires this table to perform its persistence operations. Either your MetaData is incorrect, or you need to enable "datanucleus.autoCreateTables"
        at org.datanucleus.store.rdbms.table.AbstractTable.exists(AbstractTable.java:485)
        at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.performTablesValidation(RDBMSStoreManager.java:3380)
        at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.addClassTablesAndValidate(RDBMSStoreManager.java:3190)
        at org.datanucleus.store.rdbms.RDBMSStoreManager$ClassAdder.run(RDBMSStoreManager.java:2841)
        at org.datanucleus.store.rdbms.AbstractSchemaTransaction.execute(AbstractSchemaTransaction.java:122)
        at org.datanucleus.store.rdbms.RDBMSStoreManager.addClasses(RDBMSStoreManager.java:1605)
        at org.datanucleus.store.AbstractStoreManager.addClass(AbstractStoreManager.java:954)

Because the TBL_COL_PRIVS table is missing (it did exist when the metastore was initialized manually, but it was gone when I checked just now, while the production database still has it; it disappeared for unknown reasons), Hive has to create the table itself, so datanucleus.autoCreateSchema must be set to true. The log also shows a test database being created in the metastore (Cloudera Manager's metastore canary test):

db=cloudera_manager_metastore_canary_test_db_hive_hivemetastore_ac86652cb39c742bde00ae73bc9ce531 tbl=CM_TEST_TABLE

The log explicitly says this setting must be enabled, but I could not find datanucleus.autoCreateSchema in CDH; there is another option instead:

Check this option and restart Hive.

    2017-02-22 12:06:20,604 INFO [main]: ql.Driver (SessionState.java:printInfo(927)) - Launching Job 36 out of 44
    2017-02-22 12:06:20,604 INFO [main]: ql.Driver (Driver.java:launchTask(1772)) - Starting task [Stage-49:MAPRED] in serial mode
    2017-02-22 12:06:20,605 INFO [main]: exec.Task (SessionState.java:printInfo(927)) - Number of reduce tasks determined at compile time: 1
    2017-02-22 12:06:20,605 INFO [main]: exec.Task (SessionState.java:printInfo(927)) - In order to change the average load for a reducer (in bytes):
    2017-02-22 12:06:20,605 INFO [main]: exec.Task (SessionState.java:printInfo(927)) - set hive.exec.reducers.bytes.per.reducer=<number>
    2017-02-22 12:06:20,605 INFO [main]: exec.Task (SessionState.java:printInfo(927)) - In order to limit the maximum number of reducers:
    2017-02-22 12:06:20,605 INFO [main]: exec.Task (SessionState.java:printInfo(927)) - set hive.exec.reducers.max=<number>
    2017-02-22 12:06:20,605 INFO [main]: exec.Task (SessionState.java:printInfo(927)) - In order to set a constant number of reducers:
    2017-02-22 12:06:20,605 INFO [main]: exec.Task (SessionState.java:printInfo(927)) - set mapreduce.job.reduces=<number>
    2017-02-22 12:06:20,611 INFO [main]: mr.ExecDriver (ExecDriver.java:execute(286)) - Using org.apache.hadoop.hive.ql.io.CombineHiveInputFormat
    2017-02-22 12:06:20,612 INFO [main]: mr.ExecDriver (ExecDriver.java:execute(308)) - adding libjars: file:/home/job/hql/udf.jar,file:///opt/bqjr_extlib/hive/hive-function.jar
    2017-02-22 12:06:20,712 ERROR [main]: mr.ExecDriver (ExecDriver.java:execute(398)) - yarn
    2017-02-22 12:06:20,741 WARN [main]: mapreduce.JobResourceUploader (JobResourceUploader.java:uploadFiles(64)) - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.

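After the change, it turned out that the Hive metastore itself had a problem.

Check the logs under /var/log/hive/ on the Hive metastore host:

    Unable to open a test connection to the given database. JDBC url = jdbc:mysql://bqdpm1:3306/hive?useUnicode=true&characterEncoding=UTF-8, username = admin. Terminating connection pool (set lazyInit to true if you expect to start your database after your app). Original Exception: ------
    java.sql.SQLException: Access denied for user 'admin'@'bqdpm1' (using password: YES)
        at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:957)
        at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3878)

Checking the password in CM, it turned out to be admin/admin. After changing it to the MySQL database user name and password and restarting Hive, show create table worked.

Summary:

1) Why was datanucleus.autoCreateSchema=true needed? For some reason the metastore had lost a table; Hive tried to restore the metadata and needed permission to create tables.

2) The Hive CLI log is either under /var/log or in /tmp/root/hive.log (CDH changes the log locations). The Hive Metastore and HiveServer2 logs are under /var/log/ on the nodes running those roles, so log in to the corresponding node to read them.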

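17. After restarting the cluster in CDH, the RM would not start

Check the RM node's log /var/log/hadoop-yarn/hadoop-cmf-yarn-RESOURCEMANAGER-bqdpm1.log.out.

But the log turned out to be two days old, while today was 2.27 (February 27):

Restarting the RM from CDH then succeeded, and the log showed entries from the 27th.

This shows that CM had not started the RM in the first place!

Question:

If CM itself fails, how do you start the cluster?

In CDH, all configuration is stored in the embedded PostgreSQL (PG) database. When a service starts, CM reads the configuration from that database and generates the configuration files; fresh configuration files are generated every time a service starts. The files under /etc/hadoop are client configuration only. So once CM fails, the cluster becomes unusable.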

18.测试服务器上的YARN再一次挂了

2017-03-02 19:16:15,166 ERROR org.apache.hadoop.yarn.server.resourcemanager.ResourceManager: RECEIVED SIGNAL 15: SIGTERM

2017-03-02 19:16:15,167 ERROR org.apache.hadoop.security.token.delegation.AbstractDelegationTokenSecretManager: ExpiredTokenRemover received java.lang.InterruptedException: sleep interrupted

2017-03-02 19:16:15,168 INFO org.mortbay.log: Stopped HttpServer2$SelectChannelConnectorWithSafeStartup@bqdpm1:8088

2017-03-02 19:16:15,168 ERROR org.apache.hadoop.security.token.delegation.AbstractDelegationTokenSecretManager: ExpiredTokenRemover received java.lang.InterruptedException: sleep interrupted

2017-03-02 19:16:15,168 ERROR org.apache.hadoop.security.token.delegation.AbstractDelegationTokenSecretManager: ExpiredTokenRemover received java.lang.InterruptedException: sleep interrupted

2017-03-02 19:16:15,269 INFO org.apache.hadoop.ipc.Server: Stopping server on 8032

2017-03-02 19:16:15,270 INFO org.apache.hadoop.ipc.Server: Stopping IPC Server listener on 8032

2017-03-02 19:16:15,270 INFO org.apache.hadoop.ipc.Server: Stopping IPC Server Responder

2017-03-02 19:16:15,270 INFO org.apache.hadoop.ipc.Server: Stopping server on 8033

2017-03-02 19:16:15,271 INFO org.apache.hadoop.ipc.Server: Stopping IPC Server listener on 8033

2017-03-02 19:16:15,271 INFO org.apache.hadoop.ipc.Server: Stopping IPC Server Responder

2017-03-02 19:16:15,271 INFO org.apache.hadoop.yarn.server.resourcemanager.ResourceManager: Transitioning to standby state

2017-03-02 19:16:15,271 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping ResourceManager metrics system...

2017-03-02 19:16:15,272 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: ResourceManager metrics system stopped.

2017-03-02 19:16:15,272 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: ResourceManager metrics system shutdown complete.

2017-03-02 19:16:15,272 INFO org.apache.hadoop.yarn.event.AsyncDispatcher: AsyncDispatcher is draining to stop, igonring any new events.

2017-03-02 19:16:15,272 WARN org.apache.hadoop.yarn.server.resourcemanager.amlauncher.ApplicationMasterLauncher: org.apache.hadoop.yarn.server.resourcemanager.amlauncher.ApplicationMasterLauncher$LauncherThread interrupted. Returning.

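The first line shows that the YARN ResourceManager received signal 15 (SIGTERM, as sent by `kill -15`). My guess was that the operating system killed the RM for some reason, but /var/log/messages is full of noise and I found nothing related to OOM there. (The kernel's OOM killer sends SIGKILL rather than SIGTERM, so a SIGTERM suggests that some process or script asked the RM to stop.) Checking the machine's memory: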

Free memory is indeed fairly low. Using top:

```
top - 11:28:25 up 4 days,  1:00,  3 users,  load average: 0.16, 0.13, 0.08
Tasks: 153 total,   1 running, 152 sleeping,   0 stopped,   0 zombie
Cpu(s):  3.7%us,  0.5%sy,  0.0%ni, 95.0%id,  0.8%wa,  0.0%hi,  0.0%si,  0.0%st
Mem:   7983596k total,  6657872k used,  1325724k free,   279260k buffers
Swap:  8126460k total,        0k used,  8126460k free,  2393344k cached

  PID USER      PR  NI  VIRT  RES  SHR S %CPU %MEM    TIME+  COMMAND
 4423 kafka     20   0 2919m 296m  13m S  9.6  3.8  57:44.57 java
 8194 yarn      20   0 1245m 305m  17m S  1.3  3.9   0:54.67 java
 4466 hdfs      20   0 5016m 582m  18m S  1.0  7.5  13:28.20 java
14563 root      20   0 3135m 424m  32m S  0.7  5.4   0:10.30 java
  626 root      20   0     0    0    0 S  0.3  0.0   0:10.55 jbd2/dm-0-8
 1962 root      20   0 2446m  69m 2992 S  0.3  0.9  54:14.41 cmf-agent
 5140 mapred    20   0 1092m 309m  17m S  0.3  4.0   2:47.51 java
 5208 hbase     20   0 1163m 329m  23m S  0.3  4.2   2:58.30 java
 7746 hive      20   0 2055m 473m  35m S  0.3  6.1   1:28.11 java
```

The process using the most memory is HDFS, not YARN, which is strange. Perhaps YARN really was using more memory around 19:16 yesterday, or perhaps YARN's child processes were using too much.

# 19. Hive job submission fails

Job Submission failed with exception 'java.io.IOException(org.apache.hadoop.yarn.exceptions.InvalidResourceRequestException: Invalid resource request, requested memory < 0, or requested memory > max configured, requestedMemory=1706, maxMemory=1024
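The error above is the ResourceManager rejecting a container request (1706 MB) that is larger than yarn.scheduler.maximum-allocation-mb (1024 MB). As a rough illustration only, the sketch below models that check in Python, together with the rounding the Fair Scheduler applies to accepted requests: round up to a multiple of yarn.scheduler.increment-allocation-mb, never below the minimum and never above the maximum. The function and exception names are hypothetical and the logic is simplified; it is not Hadoop's code.

```python
import math


class InvalidResourceRequest(Exception):
    """Hypothetical stand-in for YARN's InvalidResourceRequestException."""


def normalize_memory_request(requested_mb, minimum_mb, increment_mb, maximum_mb):
    """Simplified model: validate a memory request, then round it Fair Scheduler style."""
    # Reject requests outside [0, maximum], as in the error message above.
    if requested_mb < 0 or requested_mb > maximum_mb:
        raise InvalidResourceRequest(
            f"requestedMemory={requested_mb}, maxMemory={maximum_mb}")
    # Round up to a multiple of the increment ...
    rounded = math.ceil(requested_mb / increment_mb) * increment_mb
    # ... but never below the minimum or above the maximum.
    return min(max(rounded, minimum_mb), maximum_mb)


# The failing request, then the same request after raising the maximum to 6 GB.
try:
    normalize_memory_request(1706, minimum_mb=1024, increment_mb=512, maximum_mb=1024)
except InvalidResourceRequest as exc:
    print("rejected:", exc)  # rejected: requestedMemory=1706, maxMemory=1024
print(normalize_memory_request(1706, minimum_mb=1024, increment_mb=512, maximum_mb=6144))  # 2048
```

Under this model, a 1706 MB request gets a 2048 MB container once the maximum is 6 GB, which is why the request size matters even when it is accepted.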

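After a lot of searching, I found that this happens because the YARN scheduler's maximum container memory is set too small: it was 1 GB when the problem occurred, which is very small. After changing it to 6 GB the error no longer appears. The point to watch is that mapreduce.map.memory.mb must not be larger than this maximum; note that this is an MRv2 (YARN) parameter, different from the MR1 parameters. The relevant Cloudera Manager settings:

| Setting | Property | Role group | Value |
|---|---|---|---|
| Minimum container memory | yarn.scheduler.minimum-allocation-mb | ResourceManager Default Group | 1 GB |
| Container memory increment | yarn.scheduler.increment-allocation-mb | ResourceManager Default Group | 512 MB |
| Maximum container memory | yarn.scheduler.maximum-allocation-mb | ResourceManager Default Group | 6 GB |

# 20. Refreshing YARN resource pools fails

In CDH, refreshing the resource pools after a modification fails: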

Mar 10, 2:10:30.750 PM INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.AllocationFileLoaderService

Loading allocation file /var/run/cloudera-scm-agent/process/2116-yarn-RESOURCEMANAGER/fair-scheduler.xml

Mar 10, 2:10:43.001 PM INFO org.apache.hadoop.yarn.client.RMProxy

Connecting to ResourceManager at bqdpm1/10.80.2.105:8033

Mar 10, 2:10:43.309 PM WARN org.apache.hadoop.yarn.server.resourcemanager.AdminService

User yarn doesn't have permission to call 'refreshQueues'

Mar 10, 2:10:43.309 PM WARN org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger

USER=yarn IP=10.80.2.105 OPERATION=refreshQueues TARGET=AdminService RESULT=FAILURE DESCRIPTION=Unauthorized user PERMISSIONS=Users [root] are allowed

Mar 10, 2:10:43.309 PM WARN org.apache.hadoop.security.UserGroupInformation

PriviledgedActionException as:yarn (auth:SIMPLE) cause:org.apache.hadoop.yarn.exceptions.YarnException: org.apache.hadoop.security.AccessControlException: User yarn doesn't have permission to call 'refreshQueues'

Mar 10, 2:10:43.309 PM INFO org.apache.hadoop.ipc.Server

IPC Server handler 0 on 8033, call org.apache.hadoop.yarn.server.api.ResourceManagerAdministrationProtocolPB.refreshQueues from 10.80.2.105:58010 Call#0 Retry#0

org.apache.hadoop.yarn.exceptions.YarnException: org.apache.hadoop.security.AccessControlException: User yarn doesn't have permission to call 'refreshQueues'

at org.apache.hadoop.yarn.ipc.RPCUtil.getRemoteException(RPCUtil.java:38)

at org.apache.hadoop.yarn.server.resourcemanager.AdminService.checkAcls(AdminService.java:222)

at org.apache.hadoop.yarn.server.resourcemanager.AdminService.refreshQueues(AdminService.java:351)

at org.apache.hadoop.yarn.server.api.impl.pb.service.ResourceManagerAdministrationProtocolPBServiceImpl.refreshQueues(ResourceManagerAdministrationProtocolPBServiceImpl.java:97)

at org.apache.hadoop.yarn.proto.ResourceManagerAdministrationProtocol$ResourceManagerAdministrationProtocolService$2.callBlockingMethod(ResourceManagerAdministrationProtocol.java:223)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:617)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:1073)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2086)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2082)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2080)

Caused by: org.apache.hadoop.security.AccessControlException: User yarn doesn't have permission to call 'refreshQueues'

at org.apache.hadoop.yarn.server.resourcemanager.RMServerUtils.verifyAccess(RMServerUtils.java:181)

at org.apache.hadoop.yarn.server.resourcemanager.RMServerUtils.verifyAccess(RMServerUtils.java:147)

at org.apache.hadoop.yarn.server.resourcemanager.AdminService.checkAccess(AdminService.java:215)

at org.apache.hadoop.yarn.server.resourcemanager.AdminService.checkAcls(AdminService.java:220)

… 11 more
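The audit line `PERMISSIONS=Users [root] are allowed` shows which check failed: the caller (yarn) is compared against yarn.admin.acl. That property uses Hadoop's usual ACL syntax: a comma-separated user list, optionally followed by a space and a comma-separated group list, with `*` meaning everyone. A minimal Python model of the matching follows; the function name is hypothetical and this is a simplification, not Hadoop's AccessControlList class.

```python
def acl_allows(acl, user, user_groups):
    """Simplified model of a Hadoop ACL string such as yarn.admin.acl."""
    if acl.strip() == "*":
        return True  # "*" grants access to everyone
    # Format is "users groups": split only on the first space.
    # A leading space therefore means "no users, only groups".
    users_part, _, groups_part = acl.partition(" ")
    users = {u.strip() for u in users_part.split(",") if u.strip()}
    groups = {g.strip() for g in groups_part.split(",") if g.strip()}
    return user in users or bool(groups & set(user_groups))


print(acl_allows("root", "yarn", ["yarn", "hadoop"]))       # False: the failing setup
print(acl_allows("root,yarn", "yarn", ["yarn", "hadoop"]))  # True: after adding yarn
```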

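The cause: I had changed YARN's ACL. yarn.admin.acl determines which users may perform administrative operations on YARN; its default is `*`. To configure resource queues I had changed it to root, but CDH runs YARN as the yarn user, and yarn was not in the ACL, so the resource pools could not be refreshed. Fix: add yarn to yarn.admin.acl (for example `root,yarn`).

# 21. Hive query fails: hive> select count(1) from test.t;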

2017-04-26 15:00:50,719 WARN [main] org.apache.hadoop.mapred.YarnChild: Exception running child : java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:179)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:453)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:52)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:170)

... 8 more

Caused by: java.lang.ArrayIndexOutOfBoundsException:

at org.apache.hadoop.hive.ql.exec.vector.expressions.ConstantVectorExpression.evaluateLong(ConstantVectorExpression.java:99)

at org.apache.hadoop.hive.ql.exec.vector.expressions.ConstantVectorExpression.evaluate(ConstantVectorExpression.java:147)

at org.apache.hadoop.hive.ql.exec.vector.expressions.aggregates.VectorUDAFCount.aggregateInput(VectorUDAFCount.java:170)

at org.apache.hadoop.hive.ql.exec.vector.VectorGroupByOperator$ProcessingModeGlobalAggregate.processBatch(VectorGroupByOperator.java:193)

at org.apache.hadoop.hive.ql.exec.vector.VectorGroupByOperator.processOp(VectorGroupByOperator.java:866)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:815)

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:138)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:815)

at org.apache.hadoop.hive.ql.exec.TableScanOperator.processOp(TableScanOperator.java:95)

at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.forward(MapOperator.java:157)

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:45)

... 9 more

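My first suspicion was a metadata error, or a bad value somewhere in the data. When I set out to fix it the next day, it reportedly worked again: DBeaver had been restarted. My guess is that DBeaver's JDBC connection was holding a lock on the metadata.

# 21. Cannot connect to HiveServer2

The HiveServer2 process was running normally, but some users could connect and others could not. The log scrolled too fast to be of any use. The HiveServer2 web page showed that the number of connections never exceeded 100. Searching for the relevant parameter turned up hive.server2.thrift.max.worker.threads=100: the number of Thrift worker threads, which is effectively the connection limit.

# 22. Sqoop import into Hive fails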

Error: java.lang.ClassCastException: org.apache.hadoop.io.Text cannot be cast to org.apache.hadoop.hive.ql.io.orc.OrcSerde$OrcSerdeRow

at org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat$OrcRecordWriter.write(OrcOutputFormat.java:55)

…………………

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1693)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

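Judging from the error, the rows cannot be converted to ORC format. Comparing the table definitions in production and development:

Production: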

CREATE TABLE s2.user_info(

user_name bigint,

…….

etl_in_dt string)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY ','

LINES TERMINATED BY '\n'

STORED AS INPUTFORMAT

'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat'

Development:

CREATE TABLE user_info(

user_name bigint,

…..

etl_in_dt string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

WITH SERDEPROPERTIES (

'field.delim'=',',

'line.delim'='\n',

'serialization.format'=',')

STORED AS INPUTFORMAT

'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat'

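Comparing the two: production uses `ROW FORMAT DELIMITED` while development uses `ROW FORMAT SERDE`, so the two presumably use different serialization classes. Production runs hive-common-1.1.0-cdh5.7.0; development runs hive-common-1.1.0-cdh5.7.1. In CDH 5.7.1, Hive no longer defaults to the SerDe that comes with the storage format; it uses Hive's default `org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe` instead, which cannot store data in ORC format. In CDH 5.7.0, Hive defaults to the storage format's own SerDe, i.e. `org.apache.hadoop.hive.ql.io.orc.OrcSerde`. The fix is to specify the SerDe explicitly when creating the table: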

CREATE TABLE user_info(

user_name bigint,

……

ROW FORMAT SERDE

'org.apache.hadoop.hive.ql.io.orc.OrcSerde'

WITH SERDEPROPERTIES (

'field.delim'=',',

'line.delim'='\n',

'serialization.format'=',')

STORED AS INPUTFORMAT

'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat'

LOCATION

'hdfs://bqbpdev/user/hive/warehouse/s2.db/user_info'
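To catch this kind of mismatch before loading data, one could scan the output of `SHOW CREATE TABLE` for an ORC table whose SerDe is not OrcSerde. The helper below is hypothetical (not a Hive tool) and only inspects the DDL text; a table written with `ROW FORMAT DELIMITED` names no SerDe class in the text, so the helper does not judge it.

```python
import re

ORC_INPUT_FORMAT = "org.apache.hadoop.hive.ql.io.orc.OrcInputFormat"
ORC_SERDE = "org.apache.hadoop.hive.ql.io.orc.OrcSerde"


def orc_serde_mismatch(ddl):
    """Return True if an ORC table's DDL names a SerDe other than OrcSerde."""
    if ORC_INPUT_FORMAT not in ddl:
        return False  # not an ORC table, nothing to check
    match = re.search(r"ROW\s+FORMAT\s+SERDE\s+'([^']+)'", ddl, re.IGNORECASE)
    if match is None:
        return False  # no explicit SerDe class in the text; cannot judge
    return match.group(1) != ORC_SERDE


dev_ddl = """ROW FORMAT SERDE
  'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'
STORED AS INPUTFORMAT
  'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat'"""
print(orc_serde_mismatch(dev_ddl))  # True: LazySimpleSerDe on an ORC table
```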

# 22. NodeManager local directory errors

2017-06-27 02:52:39,133 INFO org.apache.hadoop.metrics2.impl.MetricsSystemImpl: NodeManager metrics system started

2017-06-27 02:52:39,183 WARN org.apache.hadoop.yarn.server.nodemanager.DirectoryCollection: Unable to create directory /opt/hadoop-2.8.0/tmp/nm-local-dir error mkdir of /opt/hadoop-2.8.0/tmp failed, removing from the list of valid directories.

2017-06-27 02:52:39,194 ERROR org.apache.hadoop.yarn.server.nodemanager.LocalDirsHandlerService: Most of the disks failed. 1/1 local-dirs are bad: /opt/hadoop-2.8.0/tmp/nm-local-dir;
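Since the root cause here was permissions, a quick pre-flight check before starting the NodeManager can save a restart cycle. The sketch below is an assumption, not part of Hadoop: it takes the comma-separated value of yarn.nodemanager.local-dirs, tries to create each directory and checks that it is writable. Run it as the same user as the NodeManager (typically yarn); otherwise the result says nothing about the daemon's permissions.

```python
import os


def check_local_dirs(local_dirs):
    """Map each directory in a comma-separated list to None (usable) or a problem."""
    results = {}
    for d in (p.strip() for p in local_dirs.split(",")):
        if not d:
            continue
        try:
            # The NodeManager has to be able to create these directories.
            os.makedirs(d, exist_ok=True)
        except OSError as exc:
            results[d] = f"cannot create: {exc}"
            continue
        # It must also be able to write into and traverse them.
        results[d] = None if os.access(d, os.W_OK | os.X_OK) else "not writable"
    return results
```

For example, calling `check_local_dirs("/opt/hadoop-2.8.0/tmp/nm-local-dir")` as the NodeManager user should report the permission problem from the log above before the node shows up as unhealthy.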

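The error above means the NodeManager could not create its local directory under $HADOOP_YARN_HOME; it ended up being created under /tmp. This directory holds the NodeManager's local working data (the NodeManager's disk health checker also monitors it). The cause was missing permissions. If these directories cannot be created, the RM shows the node as unhealthy and no containers can be allocated on that NodeManager. It is best to put them on a persistent directory.

# 23. NodeManager memory settings

The version is 2.8.0; after startup the RM could not read the container information: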

2017-06-27 02:52:39,528 WARN org.apache.hadoop.yarn.server.nodemanager.containermanager.monitor.ContainersMonitorImpl: NodeManager configured with 8 G physical memory allocated to containers, which is more than 80% of the total physical memory available (1.8 G). Thrashing might happen.

2017-06-27 02:52:39,529 INFO org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl: Nodemanager resources: memory set to 8192MB.

2017-06-27 02:52:39,529 INFO org.apache.hadoop.yarn.server.nodemanager.NodeStatusUpdaterImpl: Nodemanager resources: vcores set to 8.
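The WARN line above compares the memory given to containers with 80% of physical RAM. A minimal Python model of that comparison follows (hypothetical function names; only the 80% threshold is taken from the log message). It also gives the largest container memory that would avoid the warning on a roughly 1.8 GB machine; that is only the warning's threshold, not a sizing recommendation, since the OS and the other daemons on the node need memory too.

```python
def nm_memory_warning(container_mb, physical_mb, threshold=0.8):
    """True when the NodeManager's container memory exceeds threshold * RAM."""
    return container_mb > threshold * physical_mb


def max_without_warning(physical_mb, threshold=0.8):
    """Largest whole-MB container memory that stays under the threshold."""
    return int(threshold * physical_mb)


print(nm_memory_warning(8192, 1843))  # True: the 8 GB default on a ~1.8 GB node
print(max_without_warning(1843))      # 1474
```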

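If these are not set, the NodeManager defaults to 8 GB of memory and 8 vcores (yarn.nodemanager.resource.memory-mb and yarn.nodemanager.resource.cpu-vcores), far more than this 1.8 GB machine has, so these parameters have to be set explicitly here.

# 24. MR job fails: virtual memory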

> insert into test values(1),(2),(3);

WARNING: Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Query ID = hive_20170627090441_eef8595f-07a6-4a45-b1b9-cca0c19ed8f4

Total jobs = 3

Launching Job 1 out of 3

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1498510574463_0009, Tracking URL = http://hadoop1:8088/proxy/application_1498510574463_0009/

Kill Command = /opt/hadoop-2.8.0/bin/hadoop job -kill job_1498510574463_0009

Hadoop job information for Stage-1: number of mappers: 0; number of reducers: 0

2017-06-27 09:04:54,994 Stage-1 map = 0%, reduce = 0%

Ended Job = job_1498510574463_0009 with errors

Error during job, obtaining debugging information…

FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

MapReduce Jobs Launched:

Stage-Stage-1: HDFS Read: 0 HDFS Write: 0 FAIL

Total MapReduce CPU Time Spent: 0 msec

In the YARN log:

Application application_1498529323445_0001 failed 2 times due to AM Container for appattempt_1498529323445_0001_000002 exited with exitCode: -103

Failing this attempt.Diagnostics: Container [pid=4847,containerID=container_1498529323445_0001_02_000001] is running beyond virtual memory limits. Current usage: 71.0 MB of 128 MB physical memory used; 2.7 GB of 268.8 MB virtual memory used. Killing container.

Dump of the process-tree for container_1498529323445_0001_02_000001 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 4856 4847 4847 4847 (java) 242 10 2795884544 17841 /opt/jdk1.8.0_131/bin/java -Djava.io.tmpdir=/home/yarn/nm-local-dir/usercache/hive/appcache/application_1498529323445_0001/container_1498529323445_0001_02_000001/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/var/log/yarn/userlogs/application_1498529323445_0001/container_1498529323445_0001_02_000001 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Xmx1024m org.apache.hadoop.mapreduce.v2.app.MRAppMaster

|- 4847 4845 4847 4847 (bash) 0 0 108634112 338 /bin/bash -c /opt/jdk1.8.0_131/bin/java -Djava.io.tmpdir=/home/yarn/nm-local-dir/usercache/hive/appcache/application_1498529323445_0001/container_1498529323445_0001_02_000001/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/var/log/yarn/userlogs/application_1498529323445_0001/container_1498529323445_0001_02_000001 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Xmx1024m org.apache.hadoop.mapreduce.v2.app.MRAppMaster 1>/var/log/yarn/userlogs/application_1498529323445_0001/container_1498529323445_0001_02_000001/stdout 2>/var/log/yarn/userlogs/application_1498529323445_0001/container_1498529323445_0001_02_000001/stderr

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

For more detailed output, check the application tracking page: http://hadoop1:8088/cluster/app/application_1498529323445_0001 Then click on links to logs of each attempt.

. Failing the application.
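The two numbers in the diagnostic are the container's physical limit (128 MB) and its virtual limit, which is the physical limit times yarn.nodemanager.vmem-pmem-ratio (default 2.1): 128 × 2.1 = 268.8 MB. The sketch below is a simplified Python model of the NodeManager's per-container check under that assumption; it ignores details such as how aged processes are counted, and the function name is hypothetical. In the logs of this note, a virtual-memory kill shows up with exit code -103 and a physical-memory kill with -104.

```python
def memory_violation(pmem_used_mb, vmem_used_mb, container_mb,
                     vmem_pmem_ratio=2.1, vmem_check=True, pmem_check=True):
    """Return "virtual", "physical" or None for one container (simplified model)."""
    vmem_limit_mb = container_mb * vmem_pmem_ratio
    # Assumption: the virtual limit is checked before the physical one.
    if vmem_check and vmem_used_mb > vmem_limit_mb:
        return "virtual"   # exit code -103 in this note's logs
    if pmem_check and pmem_used_mb > container_mb:
        return "physical"  # exit code -104 in this note's logs
    return None


# The failure above: 71 MB physical, 2.7 GB virtual, 128 MB container.
print(memory_violation(71.0, 2.7 * 1024, 128))  # virtual
```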

The Stack Overflow question https://stackoverflow.com/questions/21005643/container-is-running-beyond-memory-limits contains this passage:

Each Container will run JVMs for the Map and Reduce tasks. The JVM heap size should be set to lower than the Map and Reduce memory defined above, so that they are within the bounds of the Container memory allocated by YARN.

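Each container runs a JVM to execute the MR task, so the memory the MR task's JVM is started with must be smaller than the container. Why smaller? YARN allocates containers for MR tasks sized by mapreduce.map.memory.mb and mapreduce.reduce.memory.mb, and the JVM running inside a container has to fit within it. But why so much smaller? Apparently I still don't fully understand how YARN runs tasks.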
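The JVM needs memory beyond its heap (thread stacks, metaspace or permgen, code cache, direct buffers), so -Xmx has to leave headroom below the container size. A hypothetical helper for that rule of thumb follows; the 75% default is the figure this note arrives at later, other fractions are also common, and it is a sketch rather than a Hadoop API.

```python
def heap_opts(container_mb, heap_fraction=0.75):
    """Return an -Xmx option sized to a fraction of the container memory."""
    heap_mb = int(container_mb * heap_fraction)
    return f"-Xmx{heap_mb}m"


# mapreduce.map.memory.mb=128 -> mapreduce.map.java.opts=-Xmx96m
print(heap_opts(128))   # -Xmx96m
print(heap_opts(1024))  # -Xmx768m
```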

vi mapred-site.xml

| Property | Value |
|---|---|
| mapreduce.map.java.opts | -Xmx96m |
| mapreduce.reduce.java.opts | -Xmx96m |

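After the change the problem persisted. One thing stood out: `2.7 GB of 268.8 MB virtual memory used`, which was hard to make sense of. Some useful pages:

http://www.cnblogs.com/liuming1992/p/5040262.html

https://stackoverflow.com/questions/21005643/container-is-running-beyond-memory-limits

http://bbs.umeng.com/thread-12864-1-1.html (the most thorough of the three)

The third page had the answer: the task in the container actually used more virtual memory than the computed limit (physical memory × 2.1). But why does it use so much virtual memory, that is, why would it need 2.7 × 1024 MB? Note that the YARN log above contains `-Xmx1024m org.apache.hadoop.mapreduce.v2.app.MRAppMaster`, which shows that YARN still launched the ApplicationMaster with a 1024 MB heap. Searching the YARN configuration does turn up this entry: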

| Property | Value | Source |
|---|---|---|
| yarn.app.mapreduce.am.command-opts | -Xmx1024m | mapred-default.xml |

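These are the JVM launch options for the MapReduce ApplicationMaster, taken from mapred-default.xml. By this setting the AM is started with a 1024 MB heap, which already exceeds the size of one container (128 MB). I tried overriding the parameter in mapred-site.xml: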

| Property | Value |
|---|---|
| yarn.app.mapreduce.am.command-opts | -Xmx96m |

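In practice it did not help: this parameter only caps the JVM heap, and under YARN's limits the container can use at most 128 MB anyway. Digging further: 128 × 2.1 = 268.8, exactly the virtual memory total. In other words, YARN allows 268.8 MB of virtual memory, but the process wants 2.7 GB, while the physical memory it actually uses is only 71 MB, well within one container. Perhaps the MR task reserves far more memory than it needs and so exceeds the maximum virtual memory YARN allows. The machine itself does have enough memory: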

[root@hadoop5 ~]# free -m

total used free shared buffers cached

Mem: 1877 1516 360 0 52 967

-/+ buffers/cache: 496 1381

Swap: 815 0 815

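That is enough to run two containers, yet the MR task asks for far more memory than is available. There are two ways out:

1. Turn off YARN's virtual memory check.
2. Raise the virtual-to-physical memory ratio, yarn.nodemanager.vmem-pmem-ratio (this machine does not have 2.7 GB of virtual memory).

I chose option 1. Our production environment also disables the virtual memory check outright. The YARN parameter: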

| Property | New value | Default (yarn-default.xml) |
|---|---|---|
| yarn.nodemanager.vmem-check-enabled | false | true |

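So the corresponding fixes are: (1) if the machine has plenty of memory, disable the virtual memory check; (2) if memory is tight, increase the ratio. Finally, Cloudera explained the issue: the cause is the behaviour of memory allocation (glibc malloc) on RHEL:

http://blog.cloudera.com/blog/2014/04/apache-hadoop-yarn-avoiding-6-time-consuming-gotchas/

https://www.ibm.com/developerworks/community/blogs/kevgrig/entry/linux_glibc_2_10_rhel_6_malloc_may_show_excessive_virtual_memory_usage?lang=en

http://blog.csdn.net/chen19870707/article/details/43202679 (a detailed trace by an expert)

http://blog.2baxb.me/archives/918

The problem appears with glibc 2.11: threads keep their own memory arenas, and each arena reserves 64 MB of virtual address space. On machines with a lot of memory, more arenas improve multithreaded performance, but on small-memory machines they only add overhead. With the cause found, the fix should be the MALLOC_ARENA_MAX environment variable, so I added `export MALLOC_ARENA_MAX=1` to /etc/profile. After logging in again and restarting YARN, the problem remained. I then added it to every .sh file under $HADOOP_CONF_DIR (hadoop-env.sh, httpfs-env.sh, kms-env.sh, mapred-env.sh, yarn-env.sh); the problem still remained, so setting it this way does not work. Simply turning off the check might do. In fact, after disabling the check the container was killed outright and other problems followed; see item 23. There is a discussion of this issue at https://issues.apache.org/jira/browse/MAPREDUCE-3068. It seems Java 8 needs more memory than Java 7.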


Issue Links:

- duplicates: MAPREDUCE-3065 ApplicationMaster killed by NodeManager due to excessive virtual memory consumption (RESOLVED)
- duplicates: MAPREDUCE-2748 [MR-279] NM should pass a whitelisted environmental variables to the container (CLOSED)
- is related to: HADOOP-7154 Should set MALLOC_ARENA_MAX in hadoop-config.sh (CLOSED)


Chris Riccomini added a comment - 22/Sep/11 16:41


Arun says in https://issues.apache.org/jira/browse/MAPREDUCE-3065 that the NM should be modified to do this.

Based on poking around, it appears that bin/yarn-config.sh is a better place to do this, as it then makes it into the RM, NM, and the Container from there (given that the container launch context gets the env variables from its parent process, the NM).

Does anyone have an argument for not doing it in yarn-config.sh? Already tested locally, and it appears to work.


Chris Riccomini added a comment - 22/Sep/11 16:47


tested locally. pmap without this is 2g vmem. pmap with this is 1.3 gig vmem. echo'ing env variable makes it through to the AM and containers.


Arun C Murthy added a comment - 22/Sep/11 17:24


Chris, setting this in yarn-default.sh will work for RM/NM itself.

However, the containers don't inherit NM's environment - thus we'll need to explicitly do it. Take a look at ContainerLaunch.sanitizeEnv.

To be more general we need to add a notion of 'admin env' so admins can set MALLOC_ARENA_MAX and others automatically for all containers.

Makes sense?

Chris Riccomini added a comment - 22/Sep/11 17:40 Think so. Regarding admin env, are you saying an admin-env.sh file (similar to hadoop-env.sh)?


Arun C Murthy added a comment - 22/Sep/11 17:46


No, not another *-env.sh file.

It's simpler to make it configurable via yarn-site.xml.

Add a yarn.nodemanager.admin-env config which takes 'key=value','key=value' etc.

There is similar functionality for MR applications - take a look at MRJobConfig.MAPRED_ADMIN_USER_ENV and how it's used in MapReduceChildJVM.setEnv which uses MRApps.setEnvFromInputString. Maybe you can move MRApps.setEnvFromInputString to yarn-common and use it.
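Arun's suggestion describes yarn.nodemanager.admin-env as a list of `key=value` pairs. The Python sketch below models how such a string could be expanded into a container's environment. It assumes that `$VAR` references are resolved against the NodeManager's own environment, which is how I read the default value `MALLOC_ARENA_MAX=$MALLOC_ARENA_MAX`. Under that assumption the setting only helps if the NodeManager process itself starts with MALLOC_ARENA_MAX set, and /etc/profile is read by login shells, not necessarily by daemons. The function name is hypothetical and this is not Hadoop's implementation.

```python
import re


def expand_admin_env(spec, nm_env):
    """Turn "K=V,K2=V2" into a dict, resolving $NAME from nm_env ("" if unset)."""
    env = {}
    for pair in spec.split(","):
        if "=" not in pair:
            continue  # skip malformed entries
        key, value = pair.split("=", 1)
        # Replace each $NAME with the NodeManager's value, or "" if it has none.
        value = re.sub(r"\$([A-Za-z_][A-Za-z0-9_]*)",
                       lambda m: nm_env.get(m.group(1), ""), value)
        env[key.strip()] = value
    return env


default = "MALLOC_ARENA_MAX=$MALLOC_ARENA_MAX"
print(expand_admin_env(default, {}))                         # {'MALLOC_ARENA_MAX': ''}
print(expand_admin_env(default, {"MALLOC_ARENA_MAX": "4"}))  # {'MALLOC_ARENA_MAX': '4'}
```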

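The MAPREDUCE-3068 discussion (https://issues.apache.org/jira/browse/MAPREDUCE-3068) says that setting the variable in the yarn scripts only changes the RM and NM themselves (MALLOC_ARENA_MAX can indeed be found in the AM launcher's environment), but not the containers. For the value to reach the containers, a yarn.nodemanager.admin-env entry has to be added to yarn-site.xml. However, this parameter's default value is already "MALLOC_ARENA_MAX=$MALLOC_ARENA_MAX", so if that mechanism worked, the default would already cover it; adding it explicitly did not help here. HADOOP-7154 (https://issues.apache.org/jira/browse/HADOOP-7154) describes the problem and says to `export MALLOC_ARENA_MAX=1`, but in practice that had no effect either. https://issues.apache.org/jira/browse/YARN-4714 says that Java 8 needs more memory than Java 7 and that the problem has only been seen with Java 8. After switching the JDK to 1.7, virtual memory usage dropped sharply, but the error remained: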

Application application_1498558234126_0002 failed 2 times due to AM Container for appattempt_1498558234126_0002_000002 exited with exitCode: -103

Failing this attempt.Diagnostics: Container [pid=9429,containerID=container_1498558234126_0002_02_000001] is running beyond virtual memory limits. Current usage: 96.0 MB of 128 MB physical memory used; 457.9 MB of 268.8 MB virtual memory used. Killing container.

Dump of the process-tree for container_1498558234126_0002_02_000001 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 9438 9429 9429 9429 (java) 481 15 470687744 24256 /opt/jdk1.7.0_80/bin/java -Djava.io.tmpdir=/home/yarn/nm-local-

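Virtual memory usage was still considerable. With the virtual memory check turned off, running the job again failed with insufficient physical memory. That means the MR task could not get enough memory inside one container: even though the MR JVM options are smaller than the container, a program needs some minimum amount of memory, and the container was smaller than the MR task's minimum (my guess), hence the physical memory error. Fixes:

1. Raise the maximum container memory.
2. Set mapreduce.map.memory.mb / mapreduce.reduce.memory.mb. These values are passed to YARN to size the container, so they must lie between the container minimum and maximum.
3. Set the MR JVM launch options to about 75% of mapreduce.map.memory.mb.

After these changes the job finally ran. Summary:

1. Java 8 used roughly three times as much virtual memory as Java 7.
2. The MALLOC_ARENA_MAX environment variable seemed to have no effect; neither the *-env.sh files nor an admin-env setting in yarn-site.xml helped.
3. An MR task has a minimum memory requirement, perhaps around 100 MB?
4. Remember to restart Hive.

# 23. The /tmp/yarn/staging problem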

2017-06-27 14:59:15,847 INFO [main] org.apache.hadoop.mapreduce.v2.jobhistory.JobHistoryUtils: Default file system [hdfs://mycluster:8020]

2017-06-27 14:59:15,861 INFO [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Previous history file is at hdfs://mycluster:8020/tmp/hadoop-yarn/staging/hive/.staging/job_1498542214720_0008/job_1498542214720_0008_1.jhist

2017-06-27 14:59:15,879 WARN [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Could not parse the old history file. Will not have old AMinfos

java.io.FileNotFoundException: File does not exist: /tmp/hadoop-yarn/staging/hive/.staging/job_1498542214720_0008/job_1498542214720_0008_1.jhist

at org.apache.hadoop.hdfs.server.namenode.INodeFile.valueOf(INodeFile.java:71)

……….

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:845)

at org.apache.hadoop.ipc.Server$RpcCall.run(Server.java:788)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1807)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2455)

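This came up when running `insert into test values(1)` in Hive. I searched everywhere without finding a fix. According to https://issues.apache.org/jira/browse/YARN-1058, during RM recovery the state of the previous job attempt turns out to be broken. That issue is marked as fixed, yet here it reappeared. After deleting the directory above, I got this error instead: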

2017-06-27 15:32:10,884 WARN [main] org.apache.hadoop.mapreduce.v2.app.MRAppMaster: Could not parse the old history file. Will not have old AMinfos

java.io.IOException: Event schema string not parsed since its nul

at org.apache.hadoop.mapreduce.jobhistory.EventReader.<init>(EventReader.java:88)

at org.apache.hadoop.mapreduce.v2.app.MRAppMaster.readJustAMInfos(MRAppMaster.java:1366)

at org.apache.hadoop.mapreduce.v2.app.MRAppMaster.processRecovery(MRAppMaster.java:1303)

at org.apache.hadoop.mapreduce.v2.app.MRAppMaster.serviceStart(MRAppMaster.java:1156)

at org.apache.hadoop.service.AbstractService.start(AbstractService.java:193)

at org.apache.hadoop.mapreduce.v2.app.MRAppMaster$5.run(MRAppMaster.java:1644)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

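I restarted YARN and ran the job again, and found that every job is attempted twice. For the first attempt, no error appears in its log, but the AM page shows the following: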

Application application_1498549196004_0001 failed 2 times due to AM Container for appattempt_1498549196004_0001_000002 exited with exitCode: -104

Failing this attempt.Diagnostics: Container [pid=9115,containerID=container_1498549196004_0001_02_000001] is running beyond physical memory limits. Current usage: 136.4 MB of 128 MB physical memory used; 1.7 GB of 268.8 MB virtual memory used. Killing container.

Dump of the process-tree for container_1498549196004_0001_02_000001 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 9124 9115 9115 9115 (java) 867 24 1712570368 34573 /opt/jdk1.8.0_131/bin/java -Djava.io.tmpdir=/home/yarn/nm-local-dir/usercache/hive/appcache/application_1498549196004_0001/container_1498549196004_0001_02_000001/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/var/log/yarn/userlogs/application_1498549196004_0001/container_1498549196004_0001_02_000001 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Xmx96m org.apache.hadoop.mapreduce.v2.app.MRAppMaster

|- 9115 9113 9115 9115 (bash) 0 0 108634112 338 /bin/bash -c /opt/jdk1.8.0_131/bin/java -Djava.io.tmpdir=/home/yarn/nm-local-dir/usercache/hive/appcache/application_1498549196004_0001/container_1498549196004_0001_02_000001/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/var/log/yarn/userlogs/application_1498549196004_0001/container_1498549196004_0001_02_000001 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog -Xmx96m org.apache.hadoop.mapreduce.v2.app.MRAppMaster 1>/var/log/yarn/userlogs/application_1498549196004_0001/container_1498549196004_0001_02_000001/stdout 2>/var/log/yarn/userlogs/application_1498549196004_0001/container_1498549196004_0001_02_000001/stderr

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

For more detailed output, check the application tracking page: http://hadoop1:8088/cluster/app/application_1498549196004_0001 Then click on links to logs of each attempt.

. Failing the application.

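That is, the container was killed for lack of memory (136.4 MB used against a 128 MB physical limit). The second attempt shows the problem above: the job's history state cannot be found. My guess is that this is a bug that was fixed in an older version and has reappeared in the new one. Either way, the memory shortage has to be solved first.

# 24. Query problem with Hive Parquet tables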

As I understand your issue, running the following query gives incorrect results:

INSERT OVERWRITE TABLE S3.BUSINESS_CONTRACT_ERR PARTITION(P_DAY='2017-07-17')

SELECT T1.SERIALNO,

CURRENT_TIMESTAMP,

T1.DEAL_TIME,

T1.DEAL_FLAG,

T1.ERR_TYPE,

T1.ACCT_LOAN_NO,

'2017-07-17'

FROM S3.BUSINESS_CONTRACT_ERR T1

LEFT JOIN (SELECT SERIALNO,2 ERR_TYPE

FROM S3.BUSINESS_CONTRACT_ERR

WHERE P_DAY='2017-07-17'

GROUP BY SERIALNO

HAVING COUNT(1)>1

) T2 ON T1.SERIALNO=T2.SERIALNO AND T1.ERR_TYPE=T2.ERR_TYPE

WHERE T1.P_DAY='2017-07-17' AND T2.SERIALNO IS NULL;

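You have very likely hit a Hive bug (HIVE-12762). It is related to Hive's filter push-down optimization and only appears on Parquet tables. I suggest running the following command before the query to see whether it avoids the problem:

hive> set hive.optimize.index.filter=false;

# 25. Spark SQL job fails

When a query joins many small tables, the small tables are broadcast, and once they exceed the broadcast cache an error is raised. I don't know Spark SQL well, so I won't go into detail.

# 26. Hive table lock problem

Inserting data into a table hangs indefinitely: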

hive> insert into table S1.BANK_LINK_INFO PARTITION (P_DAY='2999-12-31',DATA_SOURCE='XDGL.BANK_LINK_INFO') SELECT * FROM STAGE.TS_AMAR_BANK_LINK_INFO;

Unable to acquire IMPLICIT, EXCLUSIVE lock s1@bank_link_info@p_day=2999-12-31/data_source=XDGL.BANK_LINK_INFO after 100 attempts.

But Hive shows no locks on the table:

hive> show locks S1.BANK_LINK_INFO extended;

OK

Looking at Hive's locks in ZooKeeper:

[zk: localhost:2181(CONNECTED) 2] ls /hive_zookeeper_namespace_hive/s1

[pre_data, ac_actual_paydetail, send_payment_schedule_log, LOCK-SHARED-0000305819, LOCK-SHARED-0000322545, LOCK-SHARED-0000322540, flow_opinion, LOCK-SHARED-0000322543, business_paypkg_apply, retail_info, transfer_group, LOCK-SHARED-0000319012, LOCK-SHARED-0000322538, LOCK-SHARED-0000322539, LOCK-SHARED-0000319249, LOCK-SHARED-0000322533, LOCK-SHARED-0000322496, acct_payment_log, LOCK-SHARED-0000322532, LOCK-SHARED-0000322493, LOCK-SHARED-0000322494, business_contract, values__tmp__table__1, rpt_over_loan, LOCK-SHARED-0000319284, LOCK-SHARED-0000319313, business_credit, ind_info, consume_collectionregist_info, cms_contract_check_record, cms_contract_quality_mark, LOCK-SHARED-0000322280, LOCK-SHARED-0000305027, LOCK-SHARED-0000319276, code_library, flow_task, LOCK-SHARED-0000318982, dealcontract_reative, bank_link_info, LOCK-SHARED-0000319309, LOCK-SHARED-0000307876, LOCK-SHARED-0000319102, flow_object, LOCK-SHARED-0000322467, user_info, LOCK-SHARED-0000322503, store_info, LOCK-SHARED-0000313189, LOCK-SHARED-0000319298, post_data, LOCK-SHARED-0000319326, LOCK-SHARED-0000322458, LOCK-SHARED-0000310823, LOCK-SHARED-0000305237, acct_payment_schedule, LOCK-SHARED-0000322522, ind_info_cu, LOCK-SHARED-0000322521, LOCK-SHARED-0000322483, import_file_kft, LOCK-SHARED-0000322516, LOCK-SHARED-0000322517, acct_loan, import_file_ebu, LOCK-SHARED-0000322514, LOCK-SHARED-0000322477, LOCK-SHARED-0000322472, LOCK-SHARED-0000305198, LOCK-SHARED-0000322473]

[zk: localhost:2181(CONNECTED) 3] ls /hive_zookeeper_namespace_hive/s1/bank_link_info

[p_day=2017-02-17, p_day=2017-05-09, p_day=2017-02-15, p_day=2017-05-08, p_day=2017-02-16, p_day=2017-05-07, p_day=2017-02-13, p_day=2017-05-06, p_day=2017-07-30, p_day=2017-02-14, p_day=2017-05-05, p_day=2017-07-31, p_day=2017-02-11, p_day=2017-05-04, p_day=2017-08-01, p_day=2017-02-12, p_day=2017-05-03, p_day=2017-05-02, p_day=2017-08-03, p_day=2017-02-10, p_day=2017-05-01, p_day=2017-08-02, p_day=2017-08-05, p_day=2017-08-04, p_day=2017-04-30, p_day=2017-08-07, p_day=2017-08-06, p_day=2017-08-08, p_day=2017-02-08, p_day=2017-02-09, p_day=2017-04-29, p_day=2017-04-28, p_day=2016-09-07, p_day=2017-04-27, p_day=2017-04-26, p_day=2017-04-25, p_day=2017-04-24, p_day=2017-04-23, p_day=2017-04-22, p_day=2017-04-21, p_day=2017-04-20, p_day=2017-03-09, p_day=2017-05-29, p_day=2017-05-28, p_day=2017-05-27, p_day=2017-05-26, p_day=2017-07-10, p_day=2017-05-25, p_day=2017-07-11, p_day=2017-05-24, p_day=2017-07-12, p_day=2017-05-23, p_day=2017-07-13, p_day=2017-05-22, p_day=2017-07-14, p_day=2017-05-21, p_day=2017-07-15, p_day=2017-05-20, p_day=2017-07-16, p_day=2017-07-17, p_day=2017-07-18, p_day=2017-07-19, p_day=2017-05-19, p_day=2017-05-18, p_day=2017-05-17, p_day=2017-05-16, p_day=2017-07-20, p_day=2017-05-15, p_day=2017-07-21, p_day=2017-05-14, p_day=2017-07-22, p_day=2017-05-13, p_day=2017-07-23, LOCK-SHARED-0000001901, p_day=2017-05-12, p_day=2017-07-24, p_day=2017-05-11, p_day=2017-07-25, p_day=2017-05-10, p_day=2017-07-26, p_day=2017-07-27, p_day=2017-07-28, p_day=2017-07-29, p_day=2017-03-29, p_day=2017-03-28, p_day=2017-03-27, p_day=2017-03-26, p_day=2017-03-25, p_day=2017-03-24, p_day=2017-06-20, p_day=2017-03-23, p_day=2017-06-21, p_day=2017-03-22, p_day=2017-06-22, p_day=2017-03-21, p_day=2017-06-23, p_day=2017-03-20, p_day=2017-06-24, p_day=2017-06-25, p_day=2017-06-26, p_day=2017-06-27, p_day=2017-06-28, p_day=2017-06-29, p_day=2017-03-19, p_day=2017-03-18, p_day=2017-03-17, p_day=2017-03-16, p_day=2017-03-15, p_day=2017-03-14, p_day=2017-06-30, 
p_day=2017-03-13, p_day=2017-03-12, p_day=2017-03-11, p_day=2017-07-01, p_day=2017-03-10, p_day=2017-07-02, p_day=2017-07-03, p_day=2017-07-04, p_day=2017-05-31, p_day=2017-07-05, p_day=2017-05-30, p_day=2017-07-06, p_day=2017-07-07, p_day=2017-07-08, p_day=2017-07-09, p_day=2017-04-19, p_day=2017-04-18, p_day=2017-04-17, p_day=2017-04-16, p_day=2017-04-15, p_day=2017-04-14, p_day=2017-04-13, p_day=2017-04-12, p_day=2017-04-11, p_day=2017-06-01, p_day=2017-04-10, p_day=2017-06-02, p_day=2017-06-03, p_day=2017-06-04, p_day=2017-06-05, p_day=2017-06-06, p_day=2017-06-07, p_day=2017-06-08, p_day=2017-06-09, p_day=2017-04-09, p_day=2017-04-08, p_day=2017-04-07, p_day=2017-04-06, p_day=2017-04-05, p_day=2017-04-04, p_day=2017-04-03, p_day=2017-04-02, p_day=2017-06-10, p_day=2017-04-01, p_day=2017-06-11, p_day=2017-06-12, p_day=2017-03-31, p_day=2017-06-13, p_day=2017-03-30, p_day=2017-06-14, p_day=2017-06-15, p_day=2017-06-16, p_day=2017-06-17, p_day=2017-06-18, p_day=2017-06-19, p_day=2999-12-31]
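The table node above does contain a lock child, `LOCK-SHARED-0000001901`, even though `show locks` printed nothing. A hypothetical helper for spotting such nodes in zkCli `ls` output follows. It assumes Hive's ZooKeeper lock nodes are named `LOCK-<mode>-<sequence>` (e.g. LOCK-SHARED-..., LOCK-EXCLUSIVE-...), as the listings here suggest. Partition nodes such as `p_day=2999-12-31` can hold their own lock children and would need to be listed separately.

```python
def lock_nodes(ls_output):
    """Return the LOCK-* children from a zkCli `ls` listing like "[a, b, c]"."""
    inner = ls_output.strip().strip("[]")
    children = [c.strip() for c in inner.split(",") if c.strip()]
    return [c for c in children if c.startswith("LOCK-")]


listing = "[p_day=2017-02-17, p_day=2017-05-09, LOCK-SHARED-0000001901, p_day=2999-12-31]"
print(lock_nodes(listing))  # ['LOCK-SHARED-0000001901']
```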

[zk: localhost:2181(CONNECTED) 4] RMR /hive_zookeeper_namespace_hive/s1/bank_link_info

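Delete the lock in ZooKeeper: `[zk: localhost:2181(CONNECTED) 5] rmr /hive_zookeeper_namespace_hive/s1/bank_link_info`. After that the SQL statement ran successfully. So checking locks from within Hive is not reliable here; the stale lock had to be deleted in ZooKeeper.

# 27. Hive storage format changed from ORC to Parquet, but the extraction script was not updated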

17/08/23 03:12:20 INFO mapreduce.Job: Task Id : attempt_1503156702026_35479_m_000000_0, Status : FAILED

Error: java.lang.RuntimeException: Should never be used

at org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat.getRecordWriter(MapredParquetOutputFormat.java:76)

at org.apache.hive.hcatalog.mapreduce.FileOutputFormatContainer.getRecordWriter(FileOutputFormatContainer.java:102)

at org.apache.hive.hcatalog.mapreduce.HCatOutputFormat.getRecordWriter(HCatOutputFormat.java:260)

at org.apache.hadoop.mapred.MapTask$NewDirectOutputCollector.<init>(MapTask.java:647)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:767)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

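The table was originally stored as ORC and was loaded by a `sqoop import` script; Parquet tables are loaded with Spark SQL instead. The Sqoop command was: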

sqoop import --connect jdbc:mysql://10.39.1.45:3307/DIRECT --username bi_query --password 'ZI6hCcD9Kj6p' --query " select ID, CALL_ID, IS_CONNECTED, DIRECT, CALL_TIME, START_TIME, END_TIME, CALL_LONG, IS_QUALITY, AGENT_ID, AGENT_TEAM_NO, AGENT_DEPARTMENT_NO, CUST_ID, CUST_NO, CUST_NAME, CREATE_TIME, CUST_CHANNEL, CUST_GROUP, RELEASE_TIME, HEAD_IMG_ID, CUST_TRACE, RING_LONG, CHAT_LONG, SUM_LONG, WAIT_LONG, LEAVE_TIME , date_format(now(),'%Y-%m-%d %H:%i:%s') as ETL_IN_DT from DIRECT.CX_ETALK_SESSION_HISTORY where \$CONDITIONS " --hcatalog-database STAGE --hcatalog-table TS_ICS_ETALK_SESSION_HISTORY --hcatalog-storage-stanza 'stored as ORC' --hive-delims-replacement " " -m 1

```

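Running this command against the table after it was converted to Parquet produces the error above. The stack trace shows why. HCatalog's `FileOutputFormatContainer` calls `getRecordWriter()` on Hive's `MapredParquetOutputFormat`, and that method does nothing except throw `Should never be used`. Hive itself writes Parquet through `getHiveRecordWriter()` instead. So in this Hive version, `sqoop import` with the `--hcatalog-*` options cannot write into a Parquet table.

Switching the load to Spark SQL fixed the problem.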
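The root cause here was an extraction script that still assumed ORC after the table had been switched to Parquet. One way to catch this early is a pre-flight check in the extraction script. Below is a minimal Python sketch of such a check; it is not the author's script.

- It reads the `OutputFormat` line from the text of `DESCRIBE FORMATTED <table>`. How that text is fetched (for example `hive -e` or beeline) is left out.
- It chooses Sqoop with HCatalog for ORC and Spark SQL for Parquet.
- The two class names are Hive's ORC and Parquet output formats; the Parquet one also appears in the stack trace above.
- `output_format` and `choose_loader` are hypothetical names.

```python
from typing import Optional

# OutputFormat class names as printed by DESCRIBE FORMATTED.
ORC_OUTPUT = "org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat"
PARQUET_OUTPUT = "org.apache.hadoop.hive.ql.io.parquet.MapredParquetOutputFormat"


def output_format(describe_formatted: str) -> Optional[str]:
    """Return the OutputFormat class from DESCRIBE FORMATTED text, or None if absent."""
    for line in describe_formatted.splitlines():
        # Hive CLI prints "OutputFormat:  <class>"; beeline wraps the same cells in '|'.
        cleaned = line.replace("|", " ")
        if "OutputFormat:" in cleaned:
            tokens = cleaned.split("OutputFormat:", 1)[1].split()
            return tokens[0] if tokens else None
    return None


def choose_loader(describe_formatted: str) -> str:
    """Pick the extraction tool: sqoop + HCatalog for ORC, Spark SQL for Parquet."""
    fmt = output_format(describe_formatted)
    if fmt == ORC_OUTPUT:
        return "sqoop"
    if fmt == PARQUET_OUTPUT:
        return "sparksql"
    # Fail loudly so a future format change cannot silently reach the wrong loader.
    raise ValueError("unexpected OutputFormat: {!r}".format(fmt))
```

Raising on any other format is deliberate. A later storage-format change then stops the job with a clear message instead of failing inside a map task as above.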
