Handling hard samples with gluoncv Faster R-CNN

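A hard sample is a tiny object, only a few pixels in size, that even the naked eye could barely make out during annotation. You could say it is neither a positive nor a negative sample.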

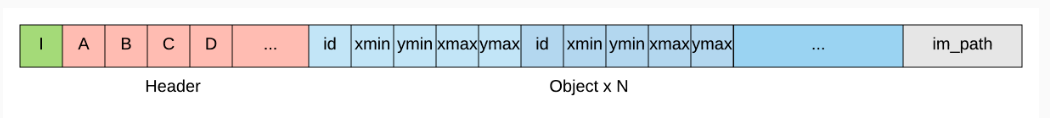

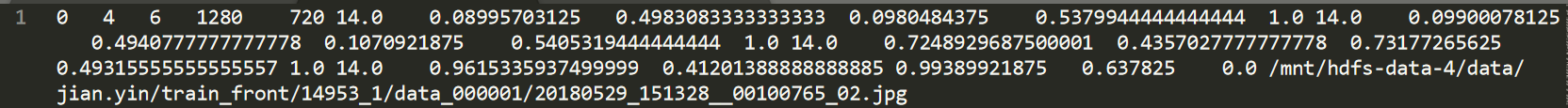

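With gluoncv, these annotation boxes are real entries in the dataset, and gluoncv treats each of them as a ground truth (GT), which we know is undesirable. So when annotating, we give such a box an attribute that marks it as a hard sample. I previously trained on my own dataset with an LST file; here the LST format needs a small change: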

Here B is 6: each object carries id, xmin, ymin, xmax, ymax, hard_flag. You can write the file yourself as long as the structure is similar.
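A minimal sketch of writing one such line. It assumes the tab-separated layout from the gluoncv custom-dataset tutorial (index, then the header A, B, width, height, then B values per object, then the image path) and box coordinates normalized to [0, 1]. The function name `write_lst_line` and the `hard_flags` argument are made up for illustration.

```python
def write_lst_line(img_path, im_shape, boxes, ids, hard_flags, idx):
    """Build one LST line whose objects are [id, xmin, ymin, xmax, ymax, hard_flag]."""
    h, w = im_shape[:2]
    A = 4  # header length: A, B, width, height
    B = 6  # values per object: the usual 5 plus the hard flag
    fields = [str(idx)] + [str(x) for x in (A, B, w, h)]
    for cid, (xmin, ymin, xmax, ymax), hard in zip(ids, boxes, hard_flags):
        # normalize pixel coordinates by the image size
        obj = [float(cid), xmin / w, ymin / h, xmax / w, ymax / h, float(hard)]
        fields += [str(v) for v in obj]
    fields.append(img_path)
    return "\t".join(fields) + "\n"


# one 200x100 image with a single hard box of class 1
line = write_lst_line("a.jpg", (100, 200, 3), [(20, 10, 100, 50)], [1], [1], 0)
```

Each object now occupies six columns, so the sixth value of every object row is the hard flag.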

Faster R-CNN:

https://github.com/dmlc/gluon-cv/blob/master/scripts/detection/faster_rcnn/train_faster_rcnn.py#L313

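From this main epoch loop we can see that the hard-sample attribute has to be handled before train_data is built, that is, in the data pipeline.

In other words, in: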

from gluoncv.data.transforms.presets.rcnn import FasterRCNNDefaultTrainTransform

from gluoncv.data.transforms.presets.rcnn import FasterRCNNDefaultValTransform

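These are the two transforms to change. Pay attention to how gluoncv is imported: if it was installed with pip, the files to edit are the ones under site-packages.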
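To find which file on disk actually gets imported, a small helper like the following can help. This is only a sketch: `module_source_path` is a made-up name, and it relies on nothing beyond the standard `importlib` machinery.

```python
import importlib.util


def module_source_path(module_name):
    """Return the file a module would be loaded from, or None if it cannot be found."""
    try:
        spec = importlib.util.find_spec(module_name)
    except ImportError:
        # raised when a parent package is missing or fails to import
        return None
    return None if spec is None else spec.origin


# Usage (requires gluoncv to be installed):
#   module_source_path("gluoncv.data.transforms.presets.rcnn")
#   module_source_path("gluoncv.model_zoo.rpn.rpn_target")
```

If the printed path is inside site-packages, that is the copy the training script uses and the one to modify.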

The modified training transform (the only change is the extra `label[:, 5]` argument near the end):

    class FasterRCNNDefaultTrainTransform(object):
        """Default Faster-RCNN training transform.

        Parameters
        ----------
        short : int, default is 600
            Resize image shorter side to ``short``.
        max_size : int, default is 1000
            Make sure image longer side is smaller than ``max_size``.
        net : mxnet.gluon.HybridBlock, optional
            The faster-rcnn network.

            .. hint::

                If net is ``None``, the transformation will not generate training targets.
                Otherwise it will generate training targets to accelerate the training phase
                since we push some workload to CPU workers instead of GPUs.

        mean : array-like of size 3
            Mean pixel values to be subtracted from image tensor. Default is [0.485, 0.456, 0.406].
        std : array-like of size 3
            Standard deviation to be divided from image. Default is [0.229, 0.224, 0.225].
        box_norm : array-like of size 4, default is (1., 1., 1., 1.)
            Std value to be divided from encoded values.
        num_sample : int, default is 256
            Number of samples for RPN targets.
        pos_iou_thresh : float, default is 0.7
            Anchors larger than ``pos_iou_thresh`` is regarded as positive samples.
        neg_iou_thresh : float, default is 0.3
            Anchors smaller than ``neg_iou_thresh`` is regarded as negative samples.
            Anchors with IOU in between ``pos_iou_thresh`` and ``neg_iou_thresh`` are
            ignored.
        pos_ratio : float, default is 0.5
            ``pos_ratio`` defines how many positive samples (``pos_ratio * num_sample``) is
            to be sampled.

        """
        def __init__(self, short=600, max_size=1000, net=None, mean=(0.485, 0.456, 0.406),
                     std=(0.229, 0.224, 0.225), box_norm=(1., 1., 1., 1.),
                     num_sample=256, pos_iou_thresh=0.7, neg_iou_thresh=0.3,
                     pos_ratio=0.5, **kwargs):
            self._short = short
            self._max_size = max_size
            self._mean = mean
            self._std = std
            self._anchors = None
            if net is None:
                return

            # use fake data to generate fixed anchors for target generation
            ashape = 128
            # in case network has reset_ctx to gpu
            anchor_generator = copy.deepcopy(net.rpn.anchor_generator)
            anchor_generator.collect_params().reset_ctx(None)
            anchors = anchor_generator(
                mx.nd.zeros((1, 3, ashape, ashape))).reshape((1, 1, ashape, ashape, -1))
            self._anchors = anchors
            # record feature extractor for infer_shape
            if not hasattr(net, 'features'):
                raise ValueError("Cannot find features in network, it is a Faster-RCNN network?")
            self._feat_sym = net.features(mx.sym.var(name='data'))
            from ....model_zoo.rpn.rpn_target import RPNTargetGenerator
            self._target_generator = RPNTargetGenerator(
                num_sample=num_sample, pos_iou_thresh=pos_iou_thresh,
                neg_iou_thresh=neg_iou_thresh, pos_ratio=pos_ratio,
                stds=box_norm, **kwargs)

        def __call__(self, src, label):
            """Apply transform to training image/label."""
            # resize shorter side but keep in max_size
            h, w, _ = src.shape
            img = timage.resize_short_within(src, self._short, self._max_size, interp=1)
            bbox = tbbox.resize(label, (w, h), (img.shape[1], img.shape[0]))

            # random horizontal flip
            h, w, _ = img.shape
            img, flips = timage.random_flip(img, px=0.5)
            bbox = tbbox.flip(bbox, (w, h), flip_x=flips[0])

            # to tensor
            img = mx.nd.image.to_tensor(img)
            img = mx.nd.image.normalize(img, mean=self._mean, std=self._std)

            if self._anchors is None:
                return img, bbox.astype(img.dtype)

            # debug: print(label.shape) and print(label[:, 5]) to check the label layout

            # generate RPN target so cpu workers can help reduce the workload
            # feat_h, feat_w = (img.shape[1] // self._stride, img.shape[2] // self._stride)
            oshape = self._feat_sym.infer_shape(data=(1, 3, img.shape[1], img.shape[2]))[1][0]
            anchor = self._anchors[:, :, :oshape[2], :oshape[3], :].reshape((-1, 4))
            gt_bboxes = mx.nd.array(bbox[:, :4])
            # modified: also pass the hard flag, column 5 of the label
            cls_target, box_target, box_mask = self._target_generator(
                gt_bboxes, anchor, img.shape[2], img.shape[1], label[:, 5])
            return img, bbox.astype(img.dtype), cls_target, box_target, box_mask

It is worth checking the structure of `label` at this point; it should match the format we modified.
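A quick check of that structure might look like the sketch below. It assumes each label row arrives as [xmin, ymin, xmax, ymax, id, hard_flag], which is why the transform reads the flag from column 5. `split_label` is a hypothetical helper, not part of gluoncv.

```python
def split_label(label):
    """Split rows of [xmin, ymin, xmax, ymax, id, hard_flag] into boxes, ids and hard flags."""
    boxes, ids, hard = [], [], []
    for row in label:
        if len(row) < 6:
            raise ValueError("expected at least 6 columns per object, got %d" % len(row))
        boxes.append(tuple(row[:4]))
        ids.append(int(row[4]))
        hard.append(bool(row[5]))
    return boxes, ids, hard


# two objects: the first is flagged as hard, the second is a normal GT
boxes, ids, hard = split_label([[1, 2, 3, 4, 0, 1], [5, 6, 7, 8, 2, 0]])
```

The same function accepts a NumPy array of shape (M, 6), since it only iterates over rows and slices them.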

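The RPN targets are generated by `self._target_generator`, which comes from

    from ....model_zoo.rpn.rpn_target import RPNTargetGenerator

We pass our hard-sample attribute `label[:, 5]` to it as an extra argument, so that class is the next place to change: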

    class RPNTargetGenerator(gluon.Block):
        """RPN target generator network.

        Parameters
        ----------
        num_sample : int, default is 256
            Number of samples for RPN targets.
        pos_iou_thresh : float, default is 0.7
            Anchor with IOU larger than ``pos_iou_thresh`` is regarded as positive samples.
        neg_iou_thresh : float, default is 0.3
            Anchor with IOU smaller than ``neg_iou_thresh`` is regarded as negative samples.
            Anchors with IOU in between ``pos_iou_thresh`` and ``neg_iou_thresh`` are
            ignored.
        pos_ratio : float, default is 0.5
            ``pos_ratio`` defines how many positive samples (``pos_ratio * num_sample``) is
            to be sampled.
        stds : array-like of size 4, default is (1., 1., 1., 1.)
            Std value to be divided from encoded regression targets.
        allowed_border : int or float, default is 0
            The allowed distance of anchors which are off the image border. This is used to clip out of
            border anchors. You can set it to very large value to keep all anchors.

        """
        def __init__(self, num_sample=256, pos_iou_thresh=0.7, neg_iou_thresh=0.3,
                     pos_ratio=0.5, stds=(1., 1., 1., 1.), allowed_border=0):
            super(RPNTargetGenerator, self).__init__()
            self._num_sample = num_sample
            self._pos_iou_thresh = pos_iou_thresh
            self._neg_iou_thresh = neg_iou_thresh
            self._pos_ratio = pos_ratio
            self._allowed_border = allowed_border
            self._bbox_split = BBoxSplit(axis=-1)
            self._sampler = RPNTargetSampler(num_sample, pos_iou_thresh, neg_iou_thresh, pos_ratio)
            self._cls_encoder = SigmoidClassEncoder()
            self._box_encoder = NormalizedBoxCenterEncoder(stds=stds)

        # pylint: disable=arguments-differ
        def forward(self, bbox, anchor, width, height, hard_label):
            """
            RPNTargetGenerator is only used in data transform with no batch dimension.
            Be careful there's numpy operations inside

            Parameters
            ----------
            bbox: (M, 4) ground truth boxes with corner encoding.
            anchor: (N, 4) anchor boxes with corner encoding.
            width: int width of input image
            height: int height of input image
            hard_label: (M,) hard flag per ground truth box, 1 marks a hard sample (added)

            Returns
            -------
            cls_target: (N,) value +1: pos, 0: neg, -1: ignore
            box_target: (N, 4) only anchors whose cls_target > 0 has nonzero box target
            box_mask: (N, 4) only anchors whose cls_target > 0 has nonzero mask

            """
            F = mx.nd
            with autograd.pause():
                # calculate ious between (N, 4) anchors and (M, 4) bbox ground-truths
                # ious is (N, M)
                ious = mx.nd.contrib.box_iou(anchor, bbox, format='corner')
                N, M = ious.shape
                # debug: print(ious.shape), print(ious[0:5]), print(M == hard_label.size)

                # mask out invalid anchors, (N, 4)
                a_xmin, a_ymin, a_xmax, a_ymax = F.split(anchor, num_outputs=4, axis=-1)
                invalid_mask = (a_xmin < 0) + (a_ymin < 0) + (a_xmax >= width) + (a_ymax >= height)
                invalid_mask = F.repeat(invalid_mask, repeats=bbox.shape[0], axis=-1)
                ious = F.where(invalid_mask, mx.nd.ones_like(ious) * -1, ious)
                # modified: forward the hard flags to the sampler
                samples, matches = self._sampler(ious, hard_label)

                # training targets for RPN
                cls_target, _ = self._cls_encoder(samples)
                box_target, box_mask = self._box_encoder(
                    samples.expand_dims(axis=0), matches.expand_dims(0),
                    anchor.expand_dims(axis=0), bbox.expand_dims(0))
            return cls_target, box_target[0], box_mask[0]

The samples are produced by `self._sampler`, which is

    self._sampler = RPNTargetSampler(num_sample, pos_iou_thresh, neg_iou_thresh, pos_ratio)

We add our own `hard_label` argument there as well. The class lives in the same file:

    class RPNTargetSampler(gluon.Block):
        """A sampler to choose positive/negative samples from RPN anchors

        Parameters
        ----------
        num_sample : int
            Number of samples for RCNN targets.
        pos_iou_thresh : float
            Proposal whose IOU larger than ``pos_iou_thresh`` is regarded as positive samples.
        neg_iou_thresh : float
            Proposal whose IOU smaller than ``neg_iou_thresh`` is regarded as negative samples.
        pos_ratio : float
            ``pos_ratio`` defines how many positive samples (``pos_ratio * num_sample``) is
            to be sampled.

        """
        def __init__(self, num_sample, pos_iou_thresh, neg_iou_thresh, pos_ratio):
            super(RPNTargetSampler, self).__init__()
            self._num_sample = num_sample
            self._max_pos = int(round(num_sample * pos_ratio))
            self._pos_iou_thresh = pos_iou_thresh
            self._neg_iou_thresh = neg_iou_thresh
            self._eps = np.spacing(np.float32(1.0))

        # pylint: disable=arguments-differ
        def forward(self, ious, hard_label):
            """RPNTargetSampler is only used in data transform with no batch dimension.

            Parameters
            ----------
            ious: (N, M) i.e. (num_anchors, num_gt).
            hard_label: (M,) hard flag per ground truth box, 1 marks a hard sample (added)

            Returns
            -------
            samples: (num_anchors,) value 1: pos, -1: neg, 0: ignore.
            matches: (num_anchors,) value [0, M).

            """
            # added: force the IoU column of every hard GT to 0.5, a value that lies
            # between neg_iou_thresh (0.3) and pos_iou_thresh (0.7), i.e. the ignore band
            for i, hard in enumerate(hard_label):
                if hard == 1.0:
                    ious[:, i] = 0.5

            matches = mx.nd.argmax(ious, axis=1)

            # samples init with 0 (ignore)
            ious_max_per_anchor = mx.nd.max(ious, axis=1)
            samples = mx.nd.zeros_like(ious_max_per_anchor)

            # set argmax (1, num_gt)
            ious_max_per_gt = mx.nd.max(ious, axis=0, keepdims=True)
            # ious (num_anchor, num_gt) >= argmax (1, num_gt) -> mark row as positive
            mask = mx.nd.broadcast_greater(ious + self._eps, ious_max_per_gt)
            # reduce column (num_anchor, num_gt) -> (num_anchor)
            mask = mx.nd.sum(mask, axis=1)
            # row maybe sampled by 2 columns but still only matches to most overlapping gt
            samples = mx.nd.where(mask, mx.nd.ones_like(samples), samples)

            # set positive overlap to 1
            samples = mx.nd.where(ious_max_per_anchor >= self._pos_iou_thresh,
                                  mx.nd.ones_like(samples), samples)
            # set negative overlap to -1
            tmp = (ious_max_per_anchor < self._neg_iou_thresh) * (ious_max_per_anchor >= 0)
            samples = mx.nd.where(tmp, mx.nd.ones_like(samples) * -1, samples)

            # subsample fg labels
            samples = samples.asnumpy()
            num_pos = int((samples > 0).sum())
            if num_pos > self._max_pos:
                disable_indices = np.random.choice(
                    np.where(samples > 0)[0], size=(num_pos - self._max_pos), replace=False)
                samples[disable_indices] = 0  # use 0 to ignore

            # subsample bg labels
            num_neg = int((samples < 0).sum())
            # if pos_sample is less than quota, we can have negative samples filling the gap
            max_neg = self._num_sample - min(num_pos, self._max_pos)
            if num_neg > max_neg:
                disable_indices = np.random.choice(
                    np.where(samples < 0)[0], size=(num_neg - max_neg), replace=False)
                samples[disable_indices] = 0

            # convert to ndarray
            samples = mx.nd.array(samples, ctx=matches.context)
            return samples, matches

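This adds our own hard-sample handling at the top of `forward`. With the default thresholds, an anchor whose IoU lies between 0.3 and 0.7 is neither a positive nor a negative sample, which is why the IoU of a hard GT is set to 0.5.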
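One side effect of this override seems worth checking. The sampler also marks, for each GT, every anchor that ties for that GT's highest IoU as positive. If a whole column is the constant 0.5, every anchor ties. The pure-Python sketch below mimics only that per-GT step (`ious + eps > column max`); the helper name `per_gt_positive_mask` is made up, and this is an illustration under that assumption, not the library code.

```python
EPS = 2.0 ** -23  # same value as np.spacing(np.float32(1.0))


def per_gt_positive_mask(ious):
    """ious: rows are anchors, columns are GTs. True where an anchor ties a column maximum."""
    num_gt = len(ious[0])
    col_max = [max(row[j] for row in ious) for j in range(num_gt)]
    return [any(row[j] + EPS > col_max[j] for j in range(num_gt)) for row in ious]


# three anchors, two GTs; column 1 belongs to a hard GT
ious = [[0.9, 0.1], [0.2, 0.0], [0.0, 0.6]]
before = per_gt_positive_mask(ious)

# apply the override: the hard GT column becomes a constant 0.5
overridden = [[row[0], 0.5] for row in ious]
after = per_gt_positive_mask(overridden)
```

In this toy case `before` marks only the best anchor of each GT, while `after` marks all three anchors, so the hard GT would end up producing positives rather than ignored anchors.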

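In my experiments, directly turning the hard samples into neither-positive-nor-negative this way did not work well. What worked slightly better was to keep the box marked as a hard GT, and to change any anchor that would become a positive sample because it overlaps that hard GT into neither positive nor negative.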
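A minimal pure-Python sketch of that second idea follows. It is an illustration under stated assumptions rather than the code used in the experiment: it uses only the threshold rules (the per-GT best-anchor rule and the subsampling are left out), it assumes at least one GT box, and the names `iou` and `assign_with_hard_gt` are invented here.

```python
def iou(a, b):
    """IoU of two boxes in corner format (xmin, ymin, xmax, ymax)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_with_hard_gt(anchors, gts, hard_flags, pos_thresh=0.7, neg_thresh=0.3):
    """Label each anchor: 1 positive, -1 negative, 0 ignore (the sampler's convention)."""
    labels = []
    for anchor in anchors:
        overlaps = [iou(anchor, gt) for gt in gts]
        best = max(range(len(gts)), key=overlaps.__getitem__)
        if overlaps[best] >= pos_thresh:
            # an anchor that would be positive for a hard GT is ignored instead
            labels.append(0 if hard_flags[best] else 1)
        elif overlaps[best] < neg_thresh:
            labels.append(-1)
        else:
            labels.append(0)
    return labels


gts = [(0, 0, 10, 10), (50, 50, 60, 60)]
hard_flags = [True, False]  # the first GT is a hard sample
anchors = [(0, 0, 10, 10), (0, 0, 10, 8), (50, 50, 60, 60), (100, 100, 110, 110)]
labels = assign_with_hard_gt(anchors, gts, hard_flags)
```

Here the two anchors covering the hard GT are ignored, the anchor on the normal GT stays positive, and the far-away anchor stays negative, so the hard box neither pulls in positives nor removes ordinary background.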

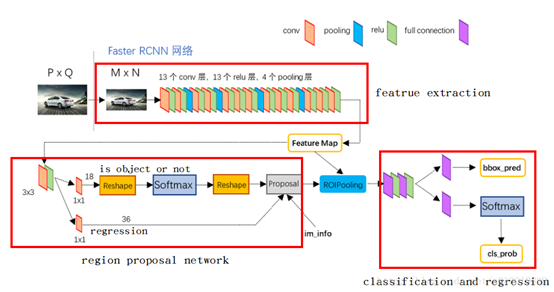

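Many tutorials state in all seriousness that the Faster R-CNN network structure is as follows:

That is undeniably true in general, but it is definitely not the network structure of the gluoncv Faster R-CNN. The fully connected layers at the end are the RCNN stage, and to handle hard samples better, that stage needs the same kind of modification.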
