【转载】 日内瓦大学 & NeurIPS 2020 | 在强化学习中动态分配有限的内存资源

原文地址:

https://hub.baai.ac.cn/view/4029

========================================================

【论文标题】Dynamic allocation of limited memory resources in reinforcement learning

【作者团队】Nisheet Patel, Luigi Acerbi, Alexandre Pouget

【发表时间】2020/11/12

【论文链接】https://proceedings.neurips.cc//paper/2020/file/c4fac8fb3c9e17a2f4553a001f631975-Paper.pdf

【论文代码】https://github.com/nisheetpatel/DynamicResourceAllocator

【推荐理由】本文收录于NeurIPS 2020,来自日内瓦大学的研究人员研究强化学习与神经科学两个方面,提出了动态框架来对资源进行分配。

生物大脑固有的处理和存储信息的能力受到限制,但是仍然能够轻松地解决复杂的任务。

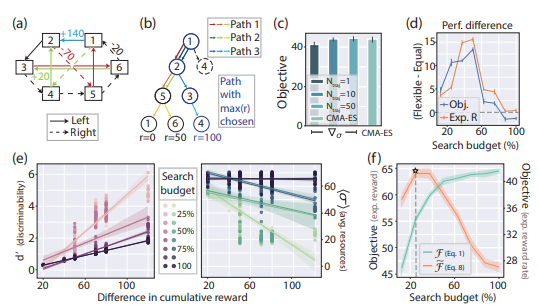

在本文中,研究人员提出了动态资源分配器(DRA),将其应用于强化学习中的两个标准任务和一个基于模型的计划任务,

发现它将更多资源分配给对内存有更高影响的项目。

此外,DRA从更高的资源预算开始学习时比为更好地完成任务而分配的学习速度要快,

这可以解释为什么生物大脑的额叶皮层区域在适应较低的渐近活动水平之前似乎更多地参与了学习的早期阶段。

本文的工作为学习如何将昂贵的资源分配给不确定的内存集合以适应环境变化的方式提供了一个规范性的解决方案。

代码地址:

https://github.com/nisheetpatel/DynamicResourceAllocator

======================================================

论文官方地址:

https://archive-ouverte.unige.ch/unige:149081

============================================

论文评审意见:

https://proceedings.neurips.cc/paper/2020/file/c4fac8fb3c9e17a2f4553a001f631975-MetaReview.html

Dynamic allocation of limited memory resources in reinforcement learning

Meta Review

This paper nicely bridges between neuroscience and RL, and considers the important topic of limited memory resources in RL agents. The topic is well-suited for NeurIPS (R2) as it has broader applicability toward e.g. model-based RL and planning, although this is not extensively discussed or shown in the paper itself. All reviewers agreed that it is well-motivated and written (R1, R2, R3, R4), although R3 did ask for a bit more explanation on some methodological details. It is also appropriately situated with respect to related work (R1, R2, R3) although R2 suggests a separate related works section, and R4 wanted to see more discussion of work outside of neuroscience, focused on optimizing RL with limited capacity. R1 pointed out that perhaps there’s a bit of confusion between memory precision and use of memory resources, as the former is more accurate for agents, the latter perhaps for real brains - ie more precise representations require more resources to encode in the brain, but this seems to be a minor point. R1 also asked to include standard baseline implementations to test for issues such how their model scales compared to other methods. R4 was the least positive, expressing that the contribution to AI is unclear, that the tasks are too easy and wouldn’t be expected to challenge memory resources. Also the connection to neuroscience is a bit tenuous as the implementation doesn’t seem particularly biologically plausible. In the rebuttal, authors argue that this approach will allow them to generate testable predictions regarding neural representations during learning, some of which are already included in the discussion. I find this adequate, but these predictions should maybe be foregrounded more so as to make clearer the neuroscientific contribution. I’m overall quite impressed with how responsive the authors were in their response, including almost all of the requested analyses. I think the final paper, with all of these changes incorporated, is likely to be much stronger, and so I recommend accept.

======================================

论文的视频讲解:(外网)

https://www.youtube.com/watch?v=1MJJkJd_umA

===================================

【转载】 日内瓦大学 & NeurIPS 2020 | 在强化学习中动态分配有限的内存资源的更多相关文章

- 深度强化学习中稀疏奖励问题Sparse Reward

Sparse Reward 推荐资料 <深度强化学习中稀疏奖励问题研究综述>1 李宏毅深度强化学习Sparse Reward4 强化学习算法在被引入深度神经网络后,对大量样本的需求更加 ...

- 强化学习中的无模型 基于值函数的 Q-Learning 和 Sarsa 学习

强化学习基础: 注: 在强化学习中 奖励函数和状态转移函数都是未知的,之所以有已知模型的强化学习解法是指使用采样估计的方式估计出奖励函数和状态转移函数,然后将强化学习问题转换为可以使用动态规划求解的 ...

- 强化学习中REIINFORCE算法和AC算法在算法理论和实际代码设计中的区别

背景就不介绍了,REINFORCE算法和AC算法是强化学习中基于策略这类的基础算法,这两个算法的算法描述(伪代码)参见Sutton的reinforcement introduction(2nd). A ...

- SpiningUP 强化学习 中文文档

2020 OpenAI 全面拥抱PyTorch, 全新版强化学习教程已发布. 全网第一个中文译本新鲜出炉:http://studyai.com/course/detail/ba8e572a 个人认为 ...

- 强化学习中的经验回放(The Experience Replay in Reinforcement Learning)

一.Play it again: reactivation of waking experience and memory(Trends in Neurosciences 2010) SWR发放模式不 ...

- 【转载】 “强化学习之父”萨顿:预测学习马上要火,AI将帮我们理解人类意识

原文地址: https://yq.aliyun.com/articles/400366 本文来自AI新媒体量子位(QbitAI) ------------------------------- ...

- (转) 深度强化学习综述:从AlphaGo背后的力量到学习资源分享(附论文)

本文转自:http://mp.weixin.qq.com/s/aAHbybdbs_GtY8OyU6h5WA 专题 | 深度强化学习综述:从AlphaGo背后的力量到学习资源分享(附论文) 原创 201 ...

- temporal credit assignment in reinforcement learning 【强化学习 经典论文】

Sutton 出版论文的主页: http://incompleteideas.net/publications.html Phd 论文: temporal credit assignment i ...

- ICML 2018 | 从强化学习到生成模型:40篇值得一读的论文

https://blog.csdn.net/y80gDg1/article/details/81463731 感谢阅读腾讯AI Lab微信号第34篇文章.当地时间 7 月 10-15 日,第 35 届 ...

- 【强化学习】1-1-2 “探索”(Exploration)还是“ 利用”(Exploitation)都要“面向目标”(Goal-Direct)

title: [强化学习]1-1-2 "探索"(Exploration)还是" 利用"(Exploitation)都要"面向目标"(Goal ...

随机推荐

- @RequestMapping 注解用在类上面有什么作用?

是一个用来处理请求地址映射的注解,可用于类或方法上.用于类上,表示类中的所有响应请求的方法都是以该地址作为父路径.

- 项目管理--PMBOK 读书笔记(3)【项目经理的角色 】

思维导图软件工具:https://www.xmind.cn/ 源文件地址:https://files-cdn.cnblogs.com/files/zj19940610/项目经理的角色.zip

- golang + postgresql + Kubernetes 后端学习

记录 链接 dbdiagram 基于 Golang + PostgreSQL + Kubernetes 后端开发大师班[中英字幕]

- lovelive - μ's

Tips:当你看到这个提示的时候,说明当前的文章是由原emlog博客系统搬迁至此的,文章发布时间已过于久远,编排和内容不一定完整,还请谅解` lovelive - μ's 日期:2017-12-16 ...

- 算法金 | 一个强大的算法模型:t-SNE !!

大侠幸会,在下全网同名「算法金」 0 基础转 AI 上岸,多个算法赛 Top 「日更万日,让更多人享受智能乐趣」 t-SNE(t-Distributed Stochastic Neighbor Emb ...

- python 使用pandas修改数据到excel,报“SettingwithCopyWarning A value is trying to be set on a copy of a slice from a DataFrame”的解决方法

场景: 通过pandas模块,将测试数据回写到excel,测试数据有写到excel文件,但控制台输出警告信息如下 警告: SettingwithCopyWarning A value is tryin ...

- 一款.NET开源的i茅台自动预约小助手

前言 今天大姚给大家分享一款.NET开源.基于WPF实现的i茅台APP接口自动化每日自动预约(抢茅台)小助手:HyggeImaotai. 项目介绍 该项目通过接口自动化模拟i茅台APP实现每日自动预约 ...

- 随机森林R语言预测工具

随机森林(Random Forest)是一种基于决策树的集成学习方法,它通过构建多个决策树并集成它们的预测结果来提高预测的准确性.在R语言中,我们可以使用randomForest包来构建和训练随机森林 ...

- OpenCV程序练习(一):图像基本操作

展示一张图片 代码 import cv2 img=cv2.imread("demoimg.png") #读取图像 cv2.imshow("demoName",i ...

- 使用sqlcel导入数据时出现“a column named '***' already belongs to this datatable”问题的解决办法

我修改编码为GBK之后,选择导入部分字段,如下: 这样就不会出现之前的问题了,完美 ----------------------------------------------- 但是出现一个问题,我 ...