kubernates使用kubeadm安装

kubeadm是Kubernetes官方提供的用于快速安装Kubernetes集群的工具,伴随Kubernetes每个版本的发布都会同步更新,kubeadm会对集群配置方面的一些实践做调整,通过实验kubeadm可以学习到Kubernetes官方在集群配置上一些新的最佳实践。

在Kubernetes的文档Creating a single master cluster with kubeadm中已经给出了目前kubeadm的主要特性已经处于beta状态了,在2018年将进入GA状态,说明kubeadm离可以在生产环境中使用的距离越来越近了。

当然我们线上稳定运行的Kubernetes集群是使用ansible以二进制形式的部署的高可用集群,这里体验Kubernetes 1.12中的kubeadm是为了跟随官方对集群初始化和配置方面的最佳实践,进一步完善我们的ansible部署脚本。

1.准备

1.1系统配置

在安装之前,需要先做如下准备。两台CentOS 7.4主机如下:

cat /etc/hosts

192.168.61.11 node1

192.168.61.12 node2

如果各个主机启用了防火墙,需要开放Kubernetes各个组件所需要的端口,可以查看Installing kubeadm中的”Check required ports”一节。 这里简单起见在各节点禁用防火墙:

systemctl stop firewalld

systemctl disable firewalld

禁用SELINUX:

setenforce 0

vi /etc/selinux/config

SELINUX=disabled

创建/etc/sysctl.d/k8s.conf文件,添加如下内容:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

执行命令使修改生效。

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

1.2安装Docker

Kubernetes从1.6开始使用CRI(Container Runtime Interface)容器运行时接口。默认的容器运行时仍然是Docker,使用的是kubelet中内置dockershim CRI实现。

安装docker的yum源:

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

查看最新的Docker版本:

yum list docker-ce.x86_64 --showduplicates |sort -r

docker-ce.x86_64 18.06.1.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.06.0.ce-3.el7 docker-ce-stable

docker-ce.x86_64 18.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 18.03.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.12.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.09.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.06.0.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.3.ce-1.el7 docker-ce-stable

docker-ce.x86_64 17.03.2.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.1.ce-1.el7.centos docker-ce-stable

docker-ce.x86_64 17.03.0.ce-1.el7.centos docker-ce-stable

Kubernetes 1.12已经针对Docker的1.11.1, 1.12.1, 1.13.1, 17.03, 17.06, 17.09, 18.06等版本做了验证,需要注意Kubernetes 1.12最低支持的Docker版本是1.11.1。 我们这里在各节点安装docker的18.06.1版本。

yum makecache fast

yum install -y --setopt=obsoletes=0 \

docker-ce-18.06.1.ce-3.el7

systemctl start docker

systemctl enable docker

确认一下iptables filter表中FOWARD链的默认策略(pllicy)为ACCEPT。

iptables -nvL

Chain INPUT (policy ACCEPT 263 packets, 19209 bytes)

pkts bytes target prot opt in out source destination

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)

pkts bytes target prot opt in out source destination

0 0 DOCKER-USER all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 DOCKER-ISOLATION-STAGE-1 all -- * * 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- * docker0 0.0.0.0/0 0.0.0.0/0 ctstate RELATED,ESTABLISHED

0 0 DOCKER all -- * docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 !docker0 0.0.0.0/0 0.0.0.0/0

0 0 ACCEPT all -- docker0 docker0 0.0.0.0/0 0.0.0.0/0

Docker从1.13版本开始调整了默认的防火墙规则,禁用了iptables filter表中FOWARD链,这样会引起Kubernetes集群中跨Node的Pod无法通信。但这里通过安装docker 1806,发现默认策略又改回了ACCEPT,这个不知道是从哪个版本改回的,因为我们线上版本使用的1706还是需要手动调整这个策略的。

2.使用kubeadm部署Kubernetes

2.1 安装kubeadm和kubelet

下面在各节点安装kubeadm和kubelet:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg

https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

测试地址https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64是否可用,如果不可用需要科学上网。

curl https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

yum makecache fast

yum install -y kubelet kubeadm kubectl

...

Installed:

kubeadm.x86_64 0:1.12.0-0 kubectl.x86_64 0:1.12.0-0 kubelet.x86_64 0:1.12.0-0

Dependency Installed:

cri-tools.x86_64 0:1.11.1-0 kubernetes-cni.x86_64 0:0.6.0-0 socat.x86_64 0:1.7.3.2-2.el7

- 从安装结果可以看出还安装了cri-tools, kubernetes-cni, socat三个依赖:

- 官方从Kubernetes 1.9开始就将cni依赖升级到了0.6.0版本,在当前1.12中仍然是这个版本

- socat是kubelet的依赖

- cri-tools是CRI(Container Runtime Interface)容器运行时接口的命令行工具

运行kubelet --help可以看到原来kubelet的绝大多数命令行flag参数都被DEPRECATED了,如:

......

--address 0.0.0.0 The IP address for the Kubelet to serve on (set to 0.0.0.0 for all IPv4 interfaces and `::` for all IPv6 interfaces) (default 0.0.0.0) (DEPRECATED: This parameter should be set via the config file specified by the Kubelet's --config flag. See https://kubernetes.io/docs/tasks/administer-cluster/kubelet-config-file/ for more information.)

......

而官方推荐我们使用--config指定配置文件,并在配置文件中指定原来这些flag所配置的内容。具体内容可以查看这里Set Kubelet parameters via a config file。这也是Kubernetes为了支持动态Kubelet配置(Dynamic Kubelet Configuration)才这么做的,参考Reconfigure a Node’s Kubelet in a Live Cluster。

kubelet的配置文件必须是json或yaml格式,具体可查看这里。

Kubernetes 1.8开始要求关闭系统的Swap,如果不关闭,默认配置下kubelet将无法启动。

关闭系统的Swap方法如下:

swapoff -a

修改 /etc/fstab 文件,注释掉 SWAP 的自动挂载,使用

free -m确认swap已经关闭。 swappiness参数调整,修改/etc/sysctl.d/k8s.conf添加下面一行:vm.swappiness=0

执行

sysctl -p /etc/sysctl.d/k8s.conf使修改生效。

因为这里本次用于测试两台主机上还运行其他服务,关闭swap可能会对其他服务产生影响,所以这里修改kubelet的配置去掉这个限制。 之前的Kubernetes版本我们都是通过kubelet的启动参数--fail-swap-on=false去掉这个限制的。前面已经分析了Kubernetes不再推荐使用启动参数,而推荐使用配置文件。 所以这里我们改成配置文件配置的形式。

查看/etc/systemd/system/kubelet.service.d/10-kubeadm.conf,看到了下面的内容:

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

上面显示kubeadm部署的kubelet的配置文件--config=/var/lib/kubelet/config.yaml,实际去查看/var/lib/kubelet和这个config.yaml的配置文件都没有被创建。 可以猜想肯定是运行kubeadm初始化集群时会自动生成这个配置文件,而如果我们不关闭Swap的话,第一次初始化集群肯定会失败的。

所以还是老老实实的回到使用kubelet的启动参数--fail-swap-on=false去掉必须关闭Swap的限制。 修改/etc/sysconfig/kubelet,加入:

KUBELET_EXTRA_ARGS=--fail-swap-on=false

2.2 使用kubeadm init初始化集群

在各节点开机启动kubelet服务:

systemctl enable kubelet.service

接下来使用kubeadm初始化集群,选择node1作为Master Node,在node1上执行下面的命令:

kubeadm init \

--kubernetes-version=v1.12.0 \

--pod-network-cidr=10.244.0.0/16 \

--apiserver-advertise-address=192.168.61.11

因为我们选择flannel作为Pod网络插件,所以上面的命令指定–pod-network-cidr=10.244.0.0/16。

执行时报了下面的错误:

[init] using Kubernetes version: v1.12.0

[preflight] running pre-flight checks

[preflight] Some fatal errors occurred:

[ERROR Swap]: running with swap on is not supported. Please disable swap

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

有一个错误信息是running with swap on is not supported. Please disable swap。因为我们决定配置failSwapOn: false,所以重新添加–ignore-preflight-errors=Swap参数忽略这个错误,重新运行。

kubeadm init \

--kubernetes-version=v1.12.0 \

--pod-network-cidr=10.244.0.0/16 \

--apiserver-advertise-address=192.168.61.11 \

--ignore-preflight-errors=Swap

[init] using Kubernetes version: v1.12.0

[preflight] running pre-flight checks

[WARNING Swap]: running with swap on is not supported. Please disable swap

[preflight/images] Pulling images required for setting up a Kubernetes cluster

[preflight/images] This might take a minute or two, depending on the speed of your internet connection

[preflight/images] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[preflight] Activating the kubelet service

[certificates] Generated etcd/ca certificate and key.

[certificates] Generated etcd/peer certificate and key.

[certificates] etcd/peer serving cert is signed for DNS names [node1 localhost] and IPs [192.168.61.11 127.0.0.1 ::1]

[certificates] Generated apiserver-etcd-client certificate and key.

[certificates] Generated etcd/server certificate and key.

[certificates] etcd/server serving cert is signed for DNS names [node1 localhost] and IPs [127.0.0.1 ::1]

[certificates] Generated etcd/healthcheck-client certificate and key.

[certificates] Generated ca certificate and key.

[certificates] Generated apiserver certificate and key.

[certificates] apiserver serving cert is signed for DNS names [node1 kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.61.11]

[certificates] Generated apiserver-kubelet-client certificate and key.

[certificates] Generated front-proxy-ca certificate and key.

[certificates] Generated front-proxy-client certificate and key.

[certificates] valid certificates and keys now exist in "/etc/kubernetes/pki"

[certificates] Generated sa key and public key.

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/admin.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Wrote KubeConfig file to disk: "/etc/kubernetes/scheduler.conf"

[controlplane] wrote Static Pod manifest for component kube-apiserver to "/etc/kubernetes/manifests/kube-apiserver.yaml"

[controlplane] wrote Static Pod manifest for component kube-controller-manager to "/etc/kubernetes/manifests/kube-controller-manager.yaml"

[controlplane] wrote Static Pod manifest for component kube-scheduler to "/etc/kubernetes/manifests/kube-scheduler.yaml"

[etcd] Wrote Static Pod manifest for a local etcd instance to "/etc/kubernetes/manifests/etcd.yaml"

[init] waiting for the kubelet to boot up the control plane as Static Pods from directory "/etc/kubernetes/manifests"

[init] this might take a minute or longer if the control plane images have to be pulled

[apiclient] All control plane components are healthy after 26.503672 seconds

[uploadconfig] storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.12" in namespace kube-system with the configuration for the kubelets in the cluster

[markmaster] Marking the node node1 as master by adding the label "node-role.kubernetes.io/master=''"

[markmaster] Marking the node node1 as master by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "node1" as an annotation

[bootstraptoken] using token: zalj3i.q831ehufqb98d1ic

[bootstraptoken] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstraptoken] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstraptoken] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstraptoken] creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.61.11:6443 --token zalj3i.q831ehufqb98d1ic --discovery-token-ca-cert-hash sha256:6ee48b19ba61a2dda77f6b60687c5fd11072ab898cfdfef32a68821d1dbe8efa

上面记录了完成的初始化输出的内容,根据输出的内容基本上可以看出手动初始化安装一个Kubernetes集群所需要的关键步骤。

其中有以下关键内容:

[kubelet]生成kubelet的配置文件”/var/lib/kubelet/config.yaml”[certificates]生成相关的各种证书[kubeconfig]生成相关的kubeconfig文件[bootstraptoken]生成token记录下来,后边使用kubeadm join往集群中添加节点时会用到- 下面的命令是配置常规用户如何使用kubectl访问集群:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

- 最后给出了将节点加入集群的命令

kubeadm join 192.168.61.11:6443 --token zalj3i.q831ehufqb98d1ic --discovery-token-ca-cert-hash sha256:6ee48b19ba61a2dda77f6b60687c5fd11072ab898cfdfef32a68821d1dbe8efa

查看一下集群状态:

kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health": "true"}

确认个组件都处于healthy状态。

集群初始化如果遇到问题,可以使用下面的命令进行清理:

kubeadm reset

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

2.3 安装Pod Network

接下来安装flannel network add-on:

mkdir -p ~/k8s/

cd ~/k8s

wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

kubectl apply -f kube-flannel.yml

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.extensions/kube-flannel-ds-amd64 created

daemonset.extensions/kube-flannel-ds-arm64 created

daemonset.extensions/kube-flannel-ds-arm created

daemonset.extensions/kube-flannel-ds-ppc64le created

daemonset.extensions/kube-flannel-ds-s390x created

这里注意kube-flannel.yml这个文件里的flannel的镜像是0.10.0,quay.io/coreos/flannel:v0.10.0-amd64

如果Node有多个网卡的话,参考flannel issues 39701,目前需要在kube-flannel.yml中使用--iface参数指定集群主机内网网卡的名称,否则可能会出现dns无法解析。需要将kube-flannel.yml下载到本地,flanneld启动参数加上--iface=<iface-name>

......

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.10.0-amd64

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

- --iface=eth1

......

本次按上面的步骤部署flannel,发现没有效果,查看一下集群中的daemonset:

kubectl get ds -l app=flannel -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-flannel-ds-amd64 0 0 0 0 0 beta.kubernetes.i/oarch=amd64 17s

kube-flannel-ds-arm 0 0 0 0 0 beta.kubernetes.io/arch=arm 17s

kube-flannel-ds-arm64 0 0 0 0 0 beta.kubernetes.io/arch=arm64 17s

kube-flannel-ds-ppc64le 0 0 0 0 0 beta.kubernetes.io/arch=ppc64le 17s

kube-flannel-ds-s390x 0 0 0 0 0 beta.kubernetes.io/arch=s390x 17s

结合kube-flannel.yml,fannel官方的部署yaml文件是要在集群中创建5个针对不同平台的DaemonSet,通过Node的Label beta.kubernetes.i/oarch,在对应不同平台的Node节点上启动flannel的容器。当前的node1节点是beta.kubernetes.i/oarch=amd64,因此对于kube-flannel-ds-amd64这个DaemonSet来说,它的DESIRED数量应该为1才对。查看kube-flannel.yml中关于kube-flannel-ds-amd64的内容:

spec:

template:

metadata:

labels:

tier: node

app: flannel

spec:

hostNetwork: true

nodeSelector:

beta.kubernetes.io/arch: amd64

tolerations:

- key: node-role.kubernetes.io/master

operator: Exists

effect: NoSchedule

kube-flannel.yml中已经为kube-flannel-ds-amd64正确设置了调度相关的nodeSelector和tolerations,即将这个DaemonSet的Pod调度到Label为beta.kubernetes.io/arch: amd64,同时容忍node-role.kubernetes.io/master:NoSchedule污点的节点上。这个按照以前的部署经验来说当前的主节点node1应该是多满足的,可是现在是这样的吗?我们查看一下node1节点的基本信息:

kubectl describe node node1

Name: node1

Roles: master

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/hostname=node1

node-role.kubernetes.io/master=

Annotations: kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock

node.alpha.kubernetes.io/ttl: 0

volumes.kubernetes.io/controller-managed-attach-detach: true

CreationTimestamp: Wed, 03 Oct 2018 09:03:04 +0800

Taints: node-role.kubernetes.io/master:NoSchedule

node.kubernetes.io/not-ready:NoSchedule

Unschedulable: false

可以看到1.12版本的kubeadm额外给node1节点设置了一个污点(Taint):node.kubernetes.io/not-ready:NoSchedule,很容易理解,即如果节点还没有ready之前,是不接受调度的。可是如果Kubernetes的网络插件还没有部署的话,节点是不会进入ready状态的。因此我们修改以下kube-flannel.yaml的内容,加入对node.kubernetes.io/not-ready:NoSchedule这个污点的容忍:

tolerations:

- key: node-role.kubernetes.io/master

operator: Exists

effect: NoSchedule

- key: node.kubernetes.io/not-ready

operator: Exists

effect: NoSchedule

重新apply一下kubectl apply -f kube-flannel.yml,这次成功完成flannel的部署了。

使用kubectl get pod --all-namespaces -o wide确保所有的Pod都处于Running状态。

kubectl get pod --all-namespaces -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

kube-system coredns-576cbf47c7-njt7l 1/1 Running 0 12m 10.244.0.3 node1 <none>

kube-system coredns-576cbf47c7-vg2gd 1/1 Running 0 12m 10.244.0.2 node1 <none>

kube-system etcd-node1 1/1 Running 0 12m 192.168.61.11 node1 <none>

kube-system kube-apiserver-node1 1/1 Running 0 12m 192.168.61.11 node1 <none>

kube-system kube-controller-manager-node1 1/1 Running 0 12m 192.168.61.11 node1 <none>

kube-system kube-flannel-ds-amd64-bxtqh 1/1 Running 0 2m 192.168.61.11 node1 <none>

kube-system kube-proxy-fb542 1/1 Running 0 12m 192.168.61.11 node1 <none>

kube-system kube-scheduler-node1 1/1 Running 0 12m 192.168.61.11 node1 <none>

后来也在flannel的github中找到了关于node.kubernetes.io/not-ready:NoSchedule这个问题的讨论,相信很快就会将相关配置修改正确,详见https://github.com/coreos/flannel/issues/1044。

2.4 master node参与工作负载

使用kubeadm初始化的集群,出于安全考虑Pod不会被调度到Master Node上,也就是说Master Node不参与工作负载。这是因为当前的master节点node1被打上了node-role.kubernetes.io/master:NoSchedule的污点:

kubectl describe node node1 | grep Taint

Taints: node-role.kubernetes.io/master:NoSchedule

因为这里搭建的是测试环境,去掉这个污点使node1参与工作负载:

kubectl taint nodes node1 node-role.kubernetes.io/master-

node "node1" untainted

2.5 测试DNS

kubectl run curl --image=radial/busyboxplus:curl -it

kubectl run --generator=deployment/apps.v1beta1 is DEPRECATED and will be removed in a future version. Use kubectl create instead.

If you don't see a command prompt, try pressing enter.

[ root@curl-5cc7b478b6-r997p:/ ]$

进入后执行nslookup kubernetes.default确认解析正常:

nslookup kubernetes.default

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes.default

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

2.6 向Kubernetes集群中添加Node节点

下面我们将node2这个主机添加到Kubernetes集群中,因为我们同样在node2上的kubelet的启动参数中去掉了必须关闭swap的限制,所以同样需要--ignore-preflight-errors=Swap这个参数。 在node2上执行:

kubeadm join 192.168.61.11:6443 --token zalj3i.q831ehufqb98d1ic --discovery-token-ca-cert-hash sha256:6ee48b19ba61a2dda77f6b60687c5fd11072ab898cfdfef32a68821d1dbe8efa \

--ignore-preflight-errors=Swap

[preflight] running pre-flight checks

[WARNING RequiredIPVSKernelModulesAvailable]: the IPVS proxier will not be used, because the following required kernel modules are not loaded: [ip_vs_rr ip_vs_wrr ip_vs_sh ip_vs] or no builtin kernel ipvs support: map[ip_vs:{} ip_vs_rr:{} ip_vs_wrr:{} ip_vs_sh:{} nf_conntrack_ipv4:{}]

you can solve this problem with following methods:

1. Run 'modprobe -- ' to load missing kernel modules;

2. Provide the missing builtin kernel ipvs support

[WARNING Swap]: running with swap on is not supported. Please disable swap

[discovery] Trying to connect to API Server "192.168.61.11:6443"

[discovery] Created cluster-info discovery client, requesting info from "https://192.168.61.11:6443"

[discovery] Requesting info from "https://192.168.61.11:6443" again to validate TLS against the pinned public key

[discovery] Cluster info signature and contents are valid and TLS certificate validates against pinned roots, will use API Server "192.168.61.11:6443"

[discovery] Successfully established connection with API Server "192.168.61.11:6443"

[kubelet] Downloading configuration for the kubelet from the "kubelet-config-1.12" ConfigMap in the kube-system namespace

[kubelet] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[preflight] Activating the kubelet service

[tlsbootstrap] Waiting for the kubelet to perform the TLS Bootstrap...

[patchnode] Uploading the CRI Socket information "/var/run/dockershim.sock" to the Node API object "node2" as an annotation

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the master to see this node join the cluster.

node2加入集群很是顺利,下面在master节点上执行命令查看集群中的节点:

kubectl get nodes

NAME STATUS ROLES AGE VERSION

node1 Ready master 26m v1.12.0

node2 Ready <none> 2m v1.12.0

如何从集群中移除Node

如果需要从集群中移除node2这个Node执行下面的命令:

在master节点上执行:

kubectl drain node2 --delete-local-data --force --ignore-daemonsets

kubectl delete node node2

在node2上执行:

kubeadm reset

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

在node1上执行:

kubectl delete node node2

3.Kubernetes常用组件部署

越来越多的公司和团队开始使用Helm这个Kubernetes的包管理器,我们也将使用Helm安装Kubernetes的常用组件。

3.1 Helm的安装

Helm由客户端命helm令行工具和服务端tiller组成,Helm的安装十分简单。 下载helm命令行工具到master节点node1的/usr/local/bin下,这里下载的2.9.1版本:

wget https://storage.googleapis.com/kubernetes-helm/helm-v2.11.0-linux-amd64.tar.gz

tar -zxvf helm-v2.11.0-linux-amd64.tar.gz

cd linux-amd64/

cp helm /usr/local/bin/

为了安装服务端tiller,还需要在这台机器上配置好kubectl工具和kubeconfig文件,确保kubectl工具可以在这台机器上访问apiserver且正常使用。 这里的node1节点以及配置好了kubectl。

因为Kubernetes APIServer开启了RBAC访问控制,所以需要创建tiller使用的service account: tiller并分配合适的角色给它。 详细内容可以查看helm文档中的Role-based Access Control。 这里简单起见直接分配cluster-admin这个集群内置的ClusterRole给它。创建rbac-config.yaml文件:

apiVersion: v1

kind: ServiceAccount

metadata:

name: tiller

namespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1beta1

kind: ClusterRoleBinding

metadata:

name: tiller

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: tiller

namespace: kube-system

kubectl create -f rbac-config.yaml

serviceaccount/tiller created

clusterrolebinding.rbac.authorization.k8s.io/tiller created

接下来使用helm部署tiller:

helm init --service-account tiller --skip-refresh

Creating /root/.helm

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://docs.helm.sh/using_helm/#securing-your-helm-installation

Happy Helming!

tiller默认被部署在k8s集群中的kube-system这个namespace下:

kubectl get pod -n kube-system -l app=helm

NAME READY STATUS RESTARTS AGE

tiller-deploy-6f6fd74b68-kk2z9 1/1 Running 0 3m17s

helm version

Client: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.11.0", GitCommit:"2e55dbe1fdb5fdb96b75ff144a339489417b146b", GitTreeState:"clean"}

注意由于某些原因需要网络可以访问gcr.io和kubernetes-charts.storage.googleapis.com,如果无法访问可以通过

helm init --service-account tiller --tiller-image <your-docker-registry>/tiller:v2.11.0 --skip-refresh使用私有镜像仓库中的tiller镜像

3.2 使用Helm部署Nginx Ingress

为了便于将集群中的服务暴露到集群外部,从集群外部访问,接下来使用Helm将Nginx Ingress部署到Kubernetes上。 Nginx Ingress Controller被部署在Kubernetes的边缘节点上,关于Kubernetes边缘节点的高可用相关的内容可以查看我前面整理的Bare metal环境下Kubernetes Ingress边缘节点的高可用。 这里简单起见,只有一个edge节点。

我们将node1(192.168.61.11)同时做为边缘节点,打上Label:

kubectl label node node1 node-role.kubernetes.io/edge=

node/node1 labeled

kubectl get node

NAME STATUS ROLES AGE VERSION

node1 Ready edge,master 46m v1.12.0

node2 Ready <none> 22m v1.12.0

stable/nginx-ingress chart的值文件ingress-nginx.yaml:

controller:

service:

externalIPs:

- 192.168.61.11

nodeSelector:

node-role.kubernetes.io/edge: ''

tolerations:

- key: node-role.kubernetes.io/master

operator: Exists

effect: NoSchedule

defaultBackend:

nodeSelector:

node-role.kubernetes.io/edge: ''

tolerations:

- key: node-role.kubernetes.io/master

operator: Exists

effect: NoSchedule

helm repo update

helm install stable/nginx-ingress \

-n nginx-ingress \

--namespace ingress-nginx \

-f ingress-nginx.yaml

kubectl get pod -n ingress-nginx -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

nginx-ingress-controller-7577b57874-m4zkv 1/1 Running 0 9m13s 10.244.0.10 node1 <none>

nginx-ingress-default-backend-684f76869d-9jgtl 1/1 Running 0 9m13s 10.244.0.9 node1 <none>

如果访问http://192.168.61.11返回default backend,则部署完成:

curl http://192.168.61.11/

default backend - 404

3.2 将TLS证书配置到Kubernetes中

当使用Ingress将HTTPS的服务暴露到集群外部时,需要HTTPS证书,这里将*.frognew.com的证书和秘钥配置到Kubernetes中。

后边部署在kube-system命名空间中的dashboard要使用这个证书,因此这里先在kube-system中创建证书的secret

kubectl create secret tls frognew-com-tls-secret --cert=fullchain.pem --key=privkey.pem -n kube-system

secret/frognew-com-tls-secret created

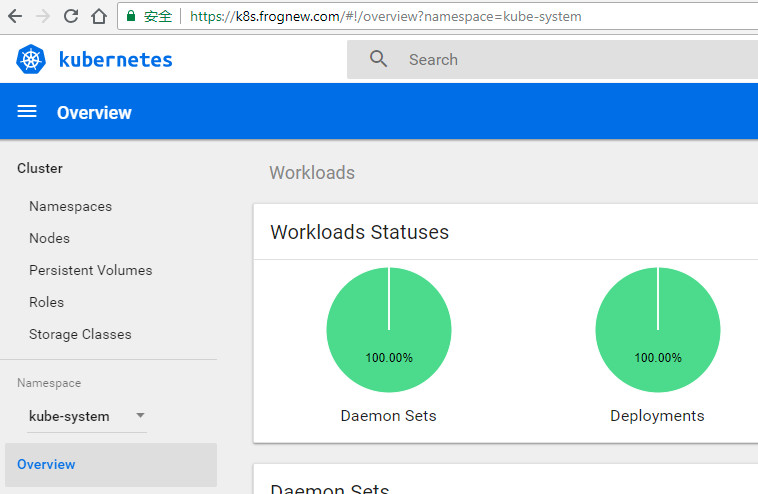

3.3 使用Helm部署dashboard

kubernetes-dashboard.yaml:

ingress:

enabled: true

hosts:

- k8s.frognew.com

annotations:

nginx.ingress.kubernetes.io/ssl-redirect: "true"

nginx.ingress.kubernetes.io/secure-backends: "true"

tls:

- secretName: frognew-com-tls-secret

hosts:

- k8s.frognew.com

rbac:

clusterAdminRole: true

helm install stable/kubernetes-dashboard \

-n kubernetes-dashboard \

--namespace kube-system \

-f kubernetes-dashboard.yaml

kubectl -n kube-system get secret | grep kubernetes-dashboard-token

kubernetes-dashboard-token-tjj25 kubernetes.io/service-account-token 3 37s

kubectl describe -n kube-system secret/kubernetes-dashboard-token-tjj25

Name: kubernetes-dashboard-token-tjj25

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name=kubernetes-dashboard

kubernetes.io/service-account.uid=d19029f0-9cac-11e8-8d94-080027db403a

Type: kubernetes.io/service-account-token

Data

====

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi10amoyNSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImQxOTAyOWYwLTljYWMtMTFlOC04ZDk0LTA4MDAyN2RiNDAzYSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.w1HZrtBOhANdqSRLNs22z8dQWd5IOCpEl9VyWQ6DUwhHfgpAlgdhEjTqH8TT0f4ftu_eSPnnUXWbsqTNDobnlxet6zVvZv1K-YmIO-o87yn2PGIrcRYWkb-ADWD6xUWzb0xOxu2834BFVC6T5p5_cKlyo5dwerdXGEMoz9OW0kYvRpKnx7E61lQmmacEeizq7hlIk9edP-ot5tCuIO_gxpf3ZaEHnspulceIRO_ltjxb8SvqnMglLfq6Bt54RpkUOFD1EKkgWuhlXJ8c9wJt_biHdglJWpu57tvOasXtNWaIzTfBaTiJ3AJdMB_n0bQt5CKAUnKBhK09NP3R0Qtqog

在dashboard的登录窗口使用上面的token登录。

3.4 使用Helm部署metrics-server

从Heapster的github https://github.com/kubernetes/heapster中可以看到已经,heapster已经DEPRECATED。这里heapster的deprecation timeline。可以看出heapster从Kubernetes 1.12开始将从Kubernetes各种安装脚本中移除。

Kubernetes推荐使用metrics-server(https://github.com/kubernetes-incubator/metrics-server)。我们这里也使用helm来部署metrics-server。

metrics-server.yaml:

args:

- --logtostderr

- --kubelet-insecure-tls

helm install stable/metrics-server \

-n metrics-server \

--namespace kube-system \

-f metrics-server.yaml

部署后,查看metrics-server的日志,报下面的错误:

E1003 05:46:13.757009 1 manager.go:102] unable to fully collect metrics: [unable to fully scrape metrics from source kubelet_summary:node1: unable to fetch metrics from Kubelet node1 (node1): Get https://node1:10250/stats/summary/: dial tcp: lookup node1 on 10.96.0.10:53: no such host, unable to fully scrape metrics from source kubelet_summary:node2: unable to fetch metrics from Kubelet node2 (node2): Get https://node2:10250/stats/summary/: dial tcp: lookup node2 on 10.96.0.10:53: read udp 10.244.1.6:45288->10.96.0.10:53: i/o timeout]

可以看到metrics-server在从kubelet的10250端口获取信息时,使用的是hostname,而因为node1和node2是一个独立的演示环境,只是修改了这两个节点系统的/etc/hosts文件,而并没有内网的DNS服务器,所以metrics-server中不认识node1和node2的名字。这里我们可以直接修改Kubernetes集群中的coredns的configmap,修改Corefile加入hostnames插件,将Kubernetes的各个节点的主机名加入到hostnames中,这样Kubernetes集群中的所有Pod都可以从CoreDNS中解析各个节点的名字。

kubectl edit configmap coredns -n kube-system

apiVersion: v1

data:

Corefile: |

.:53 {

errors

health

hosts {

192.168.61.11 node1

192.168.61.12 node2

fallthrough

}

kubernetes cluster.local in-addr.arpa ip6.arpa {

pods insecure

upstream

fallthrough in-addr.arpa ip6.arpa

}

prometheus :9153

proxy . /etc/resolv.conf

cache 30

loop

reload

loadbalance

}

kind: ConfigMap

配置修改完毕后重启集群中coredns和metrics-server,确认metrics-server不再有错误日志。使用下面的命令可以获取到关于集群节点基本的指标信息:

kubectl get --raw "/apis/metrics.k8s.io/v1beta1/nodes"

遗憾的是,当前Kubernetes Dashboard还不支持metrics-server。因此如果使用metrics-server替代了heapster,将无法在dashboard中以图形展示Pod的内存和CPU情况(实际上这也不是很重要,当前我们是在Prometheus和Grafana中定制的Kubernetes集群中各个Pod的监控,因此在dashboard中查看Pod内存和CPU也不是很重要)。 Dashboard的github上有很多这方面的讨论,如

https://github.com/kubernetes/dashboard/issues/3217和https://github.com/kubernetes/dashboard/issues/3270,Dashboard已经准备在将来的某个时间点支持metrics-server。但由于metrics-server和metrics pipeline肯定是Kubernetes在monitor方面未来的方向,所以我们也很果断的在各个环境中切换到了metrics-server。

4.总结

本次安装涉及到的Docker镜像:

# kubernetes

k8s.gcr.io/kube-apiserver:v1.12.0

k8s.gcr.io/kube-controller-manager:v1.12.0

k8s.gcr.io/kube-scheduler:v1.12.0

k8s.gcr.io/kube-proxy:v1.12.0

k8s.gcr.io/etcd:3.2.24

k8s.gcr.io/pause:3.1

# network and dns

quay.io/coreos/flannel:v0.10.0-amd64

k8s.gcr.io/coredns:1.2.2

# helm and tiller

gcr.io/kubernetes-helm/tiller:v2.11.0

# nginx ingress

quay.io/kubernetes-ingress-controller/nginx-ingress-controller:0.19.0

k8s.gcr.io/defaultbackend:1.4

# dashboard and metric-sever

k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.0

gcr.io/google_containers/metrics-server-amd64:v0.3.0

参考

kubernates使用kubeadm安装的更多相关文章

- CentOS7.3利用kubeadm安装kubernetes1.7.3完整版(官方文档填坑篇)

安装前记: 近来容器对企业来说已经不是什么陌生的概念,Kubernetes作为Google开源的容器运行平台,受到了大家的热捧.搭建一套完整的kubernetes平台,也成为试用这套平台必须迈过的坎儿 ...

- Centos7 使用 kubeadm 安装Kubernetes 1.13.3

目录 目录 什么是Kubeadm? 什么是容器存储接口(CSI)? 什么是CoreDNS? 1.环境准备 1.1.网络配置 1.2.更改 hostname 1.3.配置 SSH 免密码登录登录 1.4 ...

- kubernetes系列03—kubeadm安装部署K8S集群

本文收录在容器技术学习系列文章总目录 1.kubernetes安装介绍 1.1 K8S架构图 1.2 K8S搭建安装示意图 1.3 安装kubernetes方法 1.3.1 方法1:使用kubeadm ...

- kubeadm安装kubernetes-v1.13.1

kubeadm安装kubernetes-v1.13.1 centos虚拟机使用kubeadm安装k8s-v1.13.1. 机器信息如下: 主机名 ip master 192.168.239.200 n ...

- Kubeadm安装的K8S集群1年证书过期问题的解决思路

这个问题,很多使用使用kubeadm的用户都会遇到. 网上也有类似的帖子,从源代码编译这种思路, 在生产环境,有些不现实. 还是使用kubeadm的命令操作,比较自然一点. 当然,自行生成一套证书,也 ...

- kubeadm安装kubernetes V1.11.1 集群

之前测试了离线环境下使用二进制方法安装配置Kubernetes集群的方法,安装的过程中听说 kubeadm 安装配置集群更加方便,因此试着折腾了一下.安装过程中,也有一些坑,相对来说操作上要比二进制方 ...

- 使用 --image-repository 解决kubeadm 安装k8s 集群 谷歌镜像墙的问题

从网上我们看到的好多kubeadm 安装k8s 的时候都说需要下拉取镜像,然后修改,实际上 我们可以使用配置参数,快速的跳过墙的问题 说明: 基础镜像,我们仍然存在,拉取的问题,但是dockerhub ...

- 使用kubeadm安装kubernetes高可用集群

kubeadm安装kubernetes高可用集群搭建 第一步:首先搭建etcd集群 yum install -y etcd 配置文件 /etc/etcd/etcd.confETCD_NAME=inf ...

- kubeadm安装K8S单master双节点集群

宿主机:master:172.16.40.97node1:172.16.40.98node2:172.16.40.99 # 一.k8s初始化环境:(三台宿主机) 关闭防火墙和selinux syste ...

随机推荐

- 提交变更(git commit)

当所有的变更都进入暂存区,就可以使用git commit进行提交了 $ git commit 执行这句话后,会弹出文本编辑区(自己配置的或默认的),文本编辑器可能会显示如下内容 # Please en ...

- 查表法解决calendar中月份及星期初始值为0的情况。

Calendar ca = Calendar.getInstance(); String [] index = {"星期一","星期二","星期三&q ...

- MySQL插入中文数据出现?号

原文转载自:https://blog.csdn.net/LynneZoe/article/details/79174119 运行环境:win10 mysql版本:Mysql5.6 做一个项目的时候,向 ...

- (二)ORB描述子提取源码思路与实现

ORBSLAM2中ORB特征提取的特点 ORBSLAM2中通过对OpenCV中的ORB特征点提取类进行修改,对图像进行分块提取,而后划分节点,使得每个节点中保存的特征点性能是该节点所有特征点中最好的. ...

- iOS 单选框

iOS 单选框,可自定义横向和纵向显示,可定义显示的个数和内容,自定义间距,提供block 和代理方法可供使用,欢迎拍砖! github地址: https://github.com/joshuaGen ...

- Leetcode-1.两数之和

题目描述: 给定一个整数数组 nums 和一个目标值 target,请你在该数组中找出和为目标值的那 两个 整数,并返回他们的数组下标. 你可以假设每种输入只会对应一个答案.但是,你不能重复利用这个数 ...

- C语言中 if 和 else if 的区别

先看代码:我们本意是对i不同的值有不同的判断. #include<stdio.h> int main(void) { ; ) { i++; printf("%d\n", ...

- python封装configparser模块获取conf.ini值(优化版)

昨天晚上封装了configparser模块,是根据keyname获取的value.python封装configparser模块获取conf.ini值 我原本是想通过config.ini文件中的sect ...

- Nginx命令行控制

在Linux中,需要使用命令行来控制Nginx服务器的启动与停止.重载配置文件.回滚日志文件.平滑升级等行为.默认情况下,Nginx被安装在目录usrlocal/nginx/中,其二进制文件路径为us ...

- Centos安装Git、DotNet、Docker

1.安装Git yum install git 可通过下面的命令查看Git版本 git --version 2.安装Dotnet sudo yum install libunwind libicu 导 ...