Hadoop的NullWritable

1. Hadoop中的NullWritable

NullWritable是Writable的一个特殊类,实现方法为空实现,不从数据流中读数据,也不写入数据,只充当占位符,如在MapReduce中,如果你不需要使用键或值,你就可以将键或值声明为NullWritable,NullWritable是一个不可变的单实例类型。

比如,我设置map的输出为,这样做:

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String line = value.toString();

WebLogBean webLogBean = WebLogParser.parser(line);

WebLogParser.filtStaticResource(webLogBean, pages); // 过滤js/图片/css等静态资源

k.set(webLogBean.toString());

context.write(k, NullWritable.get(););

}

不能使用new NullWritable()来定义,获取空值只能NullWritable.get()来获取

来自https://www.cnblogs.com/Skyar/p/5815486.html

2. Yarn上的各种Id

- jobId

描述:出自MapReduce,对作业的唯一标识。

格式:job_${clusterStartTime}_${jobid}

例子:job_1498552288473_2742

- applicationId

描述:在yarn中对作业的唯一标识。

格式:application_${clusterStartTime}_${applicationId}

例子:application_1498552288473_2742

- taskId

描述:作业中的任务的唯一标识

格式:task_${clusterStartTime}_${applicationId}_[m|r]_${taskId}

例子:task_1498552288473_2742_m_000000、task_1498552288473_2742_r_000000

- attempId

描述:任务尝试执行的一次id

格式:attempt_${clusterStartTime}_${applicationId}_[m|r]_${taskId}_${attempId}

例子:attempt_1498552288473_2742_m_000000_0

- appAttempId

描述:ApplicationMaster的尝试执行的一次id。

格式:appattempt_${clusterStartTime}_${applicationId}_${appAttempId}

例子:appattempt_1498552288473_2742_000001

- containerId

描述:container的id

格式:container_e*epoch*_${clusterStartTime}_${applicationId}_${appAttempId}_${containerId}

例子:container_e20_1498552288473_2742_01_000032、container_1498552288473_2742_01_000032参考:Yarn之日志分析

3. Yarn的运行日志存放目录以及备份目录

Yarn上任务的运行日志存在哪呢?你有没有疑问过,今天终于明白了,以Flink on yarn上的任务为例(其他任务类似,不一定完全相同),看JobManager.log的截图

2019-08-05 09:51:55,826 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - --------------------------------------------------------------------------------

2019-08-05 09:51:55,827 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Starting YarnSessionClusterEntrypoint (Version: 1.7.1, Rev:<unknown>, Date:<unknown>)

2019-08-05 09:51:55,827 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - OS current user: yarn

2019-08-05 09:51:56,255 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Current Hadoop/Kerberos user: worker

2019-08-05 09:51:56,255 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - JVM: Java HotSpot(TM) 64-Bit Server VM - Oracle Corporation - 1.8/25.65-b01

2019-08-05 09:51:56,255 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Maximum heap size: 1388 MiBytes

2019-08-05 09:51:56,255 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - JAVA_HOME: /usr/local/jdk/

2019-08-05 09:51:56,258 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Hadoop version: 2.6.0-cdh5.5.0

2019-08-05 09:51:56,258 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - JVM Options:

2019-08-05 09:51:56,258 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Xmx1448m

2019-08-05 09:51:56,258 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Dlog.file=/var/log/hadoop/container/application_1564969910131_0252/container_e05_1564969910131_0252_01_000001/jobmanager.log

2019-08-05 09:51:56,258 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Dlogback.configurationFile=file:logback.xml

2019-08-05 09:51:56,258 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - -Dlog4j.configuration=file:log4j.properties

2019-08-05 09:51:56,258 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Program Arguments: (none)

2019-08-05 09:51:56,259 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - Classpath: lib/flink-python_2.11-1.7.1.jar:lib/flink-shaded-hadoop2-uber-1.7.1.jar:lib/log4j-1.2.17.jar:lib/slf4j-log4j12-1.7.15.jar:log4j.properties:logback.xml:flink.jar:flink-conf.yaml::/etc/hadoop/conf.cloudera.yarn4:/run/cloudera-scm-agent/process/47188-yarn-NODEMANAGER:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-annotations.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-auth.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-aws.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-common-tests.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-common.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-nfs.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-nfs-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-common-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-common-2.6.0-cdh5.5.0-tests.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-aws-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-auth-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/hadoop-annotations-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-format.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-format-sources.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-format-javadoc.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-tools.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-thrift.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-test-hadoop2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-scrooge_2.10.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-scala_2.10.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-protobuf.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-pig.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-pig-bundle.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-jackson.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-hadoop.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-hadoop-bundle.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-generator.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-encoding.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-common.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-column.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-cascading.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/parquet-avro.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/hue-plugins-3.9.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-httpclient-3.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-math3-3.1.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-digester-1.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/curator-recipes-2.7.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-net-3.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-configuration-1.6.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/httpclient-4.2.5.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/gson-2.2.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-el-1.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jackson-xc-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/httpcore-4.2.5.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/curator-framework-2.7.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jersey-core-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jaxb-impl-2.2.3-1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jasper-compiler-5.5.23.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/htrace-core4-4.0.1-incubating.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-lang-2.6.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jsp-api-2.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jetty-util-6.1.26.cloudera.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jets3t-0.9.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jaxb-api-2.2.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jackson-mapper-asl-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/hamcrest-core-1.3.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-logging-1.1.3.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/apacheds-i18n-2.0.0-M15.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/zookeeper.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/avro.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/stax-api-1.0-2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/protobuf-java-2.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/mockito-all-1.8.5.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/log4j-1.2.17.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jsr305-3.0.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jetty-6.1.26.cloudera.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jersey-server-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/java-xmlbuilder-0.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jackson-jaxrs-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/guava-11.0.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-io-2.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/xmlenc-0.52.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/snappy-java-1.0.4.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/servlet-api-2.5.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/paranamer-2.3.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-compress-1.4.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-collections-3.2.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-codec-1.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-cli-1.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-beanutils-core-1.8.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/commons-beanutils-1.7.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/aws-java-sdk-1.7.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/asm-3.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/api-util-1.0.0-M20.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/api-asn1-api-1.0.0-M20.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/apacheds-kerberos-codec-2.0.0-M15.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/activation-1.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/slf4j-log4j12.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/xz-1.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/slf4j-api-1.7.5.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/netty-3.6.2.Final.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/logredactor-1.0.3.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/junit-4.11.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jsch-0.1.42.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jettison-1.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jersey-json-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jasper-runtime-5.5.23.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/jackson-core-asl-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop/lib/curator-client-2.7.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/hadoop-hdfs-nfs.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/hadoop-hdfs-tests.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/hadoop-hdfs.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/hadoop-hdfs-nfs-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/hadoop-hdfs-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/hadoop-hdfs-2.6.0-cdh5.5.0-tests.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/xmlenc-0.52.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/xml-apis-1.3.04.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/xercesImpl-2.9.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/servlet-api-2.5.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/protobuf-java-2.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/netty-3.6.2.Final.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/log4j-1.2.17.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/leveldbjni-all-1.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jsr305-3.0.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jsp-api-2.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jetty-util-6.1.26.cloudera.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jetty-6.1.26.cloudera.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jersey-server-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jersey-core-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jasper-runtime-5.5.23.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jackson-mapper-asl-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/jackson-core-asl-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/htrace-core4-4.0.1-incubating.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/guava-11.0.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/commons-logging-1.1.3.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/commons-lang-2.6.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/commons-io-2.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/commons-el-1.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/commons-daemon-1.0.13.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/commons-codec-1.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/commons-cli-1.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-hdfs/lib/asm-3.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-api.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-applications-distributedshell.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-applications-unmanaged-am-launcher.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-client.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-common.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-registry.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-applicationhistoryservice.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-common.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-nodemanager.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-resourcemanager.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-tests.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-web-proxy.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-web-proxy-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-tests-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-resourcemanager-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-nodemanager-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-common-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-server-applicationhistoryservice-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-registry-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-common-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-client-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-applications-distributedshell-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/hadoop-yarn-api-2.6.0-cdh5.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/spark-yarn-shuffle.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/xz-1.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/servlet-api-2.5.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/protobuf-java-2.5.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/log4j-1.2.17.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/leveldbjni-all-1.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jsr305-3.0.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jline-2.11.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jetty-util-6.1.26.cloudera.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jetty-6.1.26.cloudera.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jettison-1.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jersey-server-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jersey-json-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jersey-guice-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jersey-core-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jersey-client-1.9.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jaxb-impl-2.2.3-1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jaxb-api-2.2.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/javax.inject-1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jackson-xc-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jackson-mapper-asl-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jackson-jaxrs-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/jackson-core-asl-1.8.8.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/guice-servlet-3.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/guice-3.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/guava-11.0.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/commons-logging-1.1.3.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/commons-lang-2.6.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/commons-io-2.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/commons-httpclient-3.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/commons-compress-1.4.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/commons-collections-3.2.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/commons-codec-1.4.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/commons-cli-1.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/asm-3.2.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/aopalliance-1.0.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/activation-1.1.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/zookeeper.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/spark-1.5.0-cdh5.5.0-yarn-shuffle.jar:/opt/cloudera/parcels/CDH-5.5.0-1.cdh5.5.0.p0.8/lib/hadoop-yarn/lib/stax-api-1.0-2.jar

2019-08-05 09:51:56,260 INFO org.apache.flink.runtime.entrypoint.ClusterEntrypoint - --------------------------------------------------------------------------------

看见了吧,去JobManager那台机器上对应目录下去找,可以看到日志所在文件夹

总用量 16K

drwx--x--- 3 yarn yarn 63 7月 25 17:58 application_1564048714696_0300

drwx--x--- 3 yarn yarn 63 7月 28 13:39 application_1564110901389_0235

drwx--x--- 4 yarn yarn 116 8月 5 09:52 application_1564969910131_0252

drwx--x--- 3 yarn yarn 63 8月 5 20:35 application_1564998210570_0394

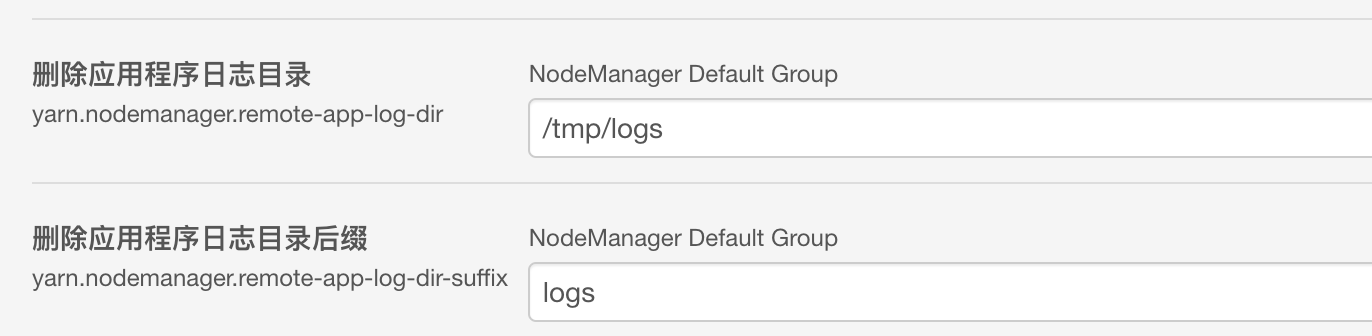

这个是运行时日志,如果任务停止了呢,Yarn一般会有备份的,比如我这边用的Cloudera,有个配置

然后你用

hadoop fs -ls /tmp/logs/用户名/logs

发现日志都在。

4. Hadoop中的主要配置文件

core-site.xml:hadoop全局配置:HDFS路径 ,临时数据的公共目录,ZooKeeper集群的地址和端口。

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/data/hadoop/tmp</value>

<!-- 其他临时目录的父目录 -->

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://hadoop-alone:9000</value>

<!--

hdfs://host:port/

默认的文件系统的名称。通常指定namenode的URI地址,包括主机和端口

-->

</property>

<property>

<name>io.file.buffer.size</name>

<value>4096</value>

<!--

在序列文件中使用的缓冲区大小,这个缓冲区的大小应该是页大小(英特尔x86上为4096)的倍数

他决定读写操作中缓冲了多少数据(单位kb)

-->

</property>

<!--ZooKeeper集群的地址和端口。注意,数量一定是奇数,且不少于三个节点-->

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop1:2181,hadoop2:2181,hadoop3:2181,hadoop4:2181,hadoop5:2181</value>

</property>

</configuration>

hdfs-site.xml:HDFS文件系统属性:dfs.replication(文件块的数据备份数), dfs.data.dir(datanode节点存储在文件系统的目录) , dfs.name.dir(namenode节点存储在文件系统的目录) 。

<configuration>

<property>

<name>dfs.replication</name>

<value>3</value>

<!--指定dataNode存储block的副本数量,默认值是3个,该值应该不大于4-->

</property>

<property>

<name>dfs.blocksize</name>

<value>268435456</value>

<!--大型的文件系统HDFS块大小为256MB,先默认是128MB-->

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file://${hadoop.tmp.dir}/dfs/name</value>

<!--

存放namenode的名称表(fsimage)的目录,如果这是一个逗号分隔的目录列表,

那么在所有目录中复制名称表,用于冗余。

-->

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file://${hadoop.tmp.dir}/dfs/data</value>

<!--

存放datanode块的目录。如果这是一个逗号分隔的目录列表,

那么数据将存储在所有命名的目录中,通常存储在不同的设备上。

-->

</property>

<property>

<name>dfs.namenode.http-address</name>

<value>0.0.0.0:50070</value>

<!---->

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>0.0.0.0:50090</value>

<!--secondary namenode HTTP服务器地址和端口。-->

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

<property>

<name>dfs.permissions.enabled</name>

<value>false</value>

<!-- 当为true时,则允许HDFS的检测,当为false时,则关闭HDFS的检测,但不影响其它HDFS的其它功能。-->

</property>

<property>

<name>dfs.nameservices</name>

<value>hadoop-cluster1</value>

<!--给hdfs集群起名字 -->

</property>

<property>

<name>dfs.namenode.handler.count</name>

<value>100</value>

<!-- RPC服务器的监听client线程数,如果dfs.namenode.servicerpc-address属性没有配置,则线程会监听所有节点的请求。-->

</property>

</configuration>

yarn-site.xml:设置Yarn的参数,NodeManager、ResourceManager等

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce.shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address</name>

<value>hadoop-alone:8033</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoop-alone:8025</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoop-alone:8030</value>

</property>

<property>

<name>yarn.resourcemanager.address</name>

<value>hadoop-alone:8050</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoop-alone:8030</value>

</property>

</configuration>

mapred-site.xml:指明MapReduce的运行框架为YARN;

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<!--执行框架设置为 Hadoop YARN.-->

</property>

</configuration>

hadoop-env.sh:设置jdk的安装路径

Hadoop的NullWritable的更多相关文章

- [Hadoop in Action] 第7章 细则手册

向任务传递定制参数 获取任务待定的信息 生成多个输出 与关系数据库交互 让输出做全局排序 1.向任务传递作业定制的参数 在编写Mapper和Reducer时,通常会想让一些地方可以配 ...

- Hadoop学习笔记—12.MapReduce中的常见算法

一.MapReduce中有哪些常见算法 (1)经典之王:单词计数 这个是MapReduce的经典案例,经典的不能再经典了! (2)数据去重 "数据去重"主要是为了掌握和利用并行化思 ...

- Hadoop学习笔记—15.HBase框架学习(基础实践篇)

一.HBase的安装配置 1.1 伪分布模式安装 伪分布模式安装即在一台计算机上部署HBase的各个角色,HMaster.HRegionServer以及ZooKeeper都在一台计算机上来模拟. 首先 ...

- Hadoop学习笔记—20.网站日志分析项目案例(二)数据清洗

网站日志分析项目案例(一)项目介绍:http://www.cnblogs.com/edisonchou/p/4449082.html 网站日志分析项目案例(二)数据清洗:当前页面 网站日志分析项目案例 ...

- hadoop实战 -- 网站日志KPI指标分析

本项目分析apache服务器产生的日志,分析pv.独立ip数和跳出率等指标.其实这些指标在第三方系统中都可以检测到,在生产环境中通常用来分析用户交易等核心数据,此处只是用于演示说明日志数据的分析流程. ...

- hadoop 多表join:Map side join及Reduce side join范例

最近在准备抽取数据的工作.有一个id集合200多M,要从另一个500GB的数据集合中抽取出所有id集合中包含的数据集.id数据集合中每一个行就是一个id的字符串(Reduce side join要在每 ...

- Hadoop日记Day12---MapReduce学习

一.MapReduce简介 1.1MapReduce概述 MapReduce是一种分布式计算模型,由Google提出,主要用于搜索领域,解决海量数据的计算问题.MR由两个阶段组成:Map和Reduce ...

- Hadoop日记Day18---MapReduce排序分组

本节所用到的数据下载地址为:http://pan.baidu.com/s/1bnfELmZ MapReduce的排序分组任务与要求 我们知道排序分组是MapReduce中Mapper端的第四步,其中分 ...

- Hadoop: MapReduce2的几个基本示例

1) WordCount 这个就不多说了,满大街都是,网上有几篇对WordCount的详细分析 http://www.sxt.cn/u/235/blog/5809 http://www.cnblogs ...

随机推荐

- 【C#加深理解系列】(一)反射

什么是反射 反射是.NET中的重要机制,通过反射,可以在运行时获得程序或程序集中每一个类型(包括类.结构.委托.接口和枚举等)的成员和成员的信息.有了反射,即可对每一个类型了如指掌.另外我还可以直接创 ...

- 导航页-LeetCode专题-Python实现

LeetCode专题-Python实现之第1题:Two Sum LeetCode专题-Python实现之第7题:Reverse Integer LeetCode专题-Python实现之第9题:Pali ...

- springboot情操陶冶-web配置(四)

承接前文springboot情操陶冶-web配置(三),本文将在DispatcherServlet应用的基础上谈下websocket的使用 websocket websocket的简单了解可见维基百科 ...

- JavaScript 循环语句

while while循环由两个代码块组成,分别是条件语句和循环体. while ( [条件] ) { [循环体] } while循环类似于if语句,不同的是while循环将不断地执行循环体直 ...

- 【转】Android必备知识点- Android文件(File)操作

Android 使用与其他平台上基于磁盘的文件系统类似的文件系统. 本文讲述如何使用 Android 文件系统通过 File API 读取和写入文件. File 对象适合按照从开始到结束的顺序不跳过地 ...

- C# 消息队列-MSMQ

MQ是一种消息中间件技术,所以它能够支持多种类型的语言开发,同时也是跨平台的通信机制,也就是说MQ支持将信息转化为XML或者JSon等类型的数据存储到消息队列中,然后可以使用不同的语言来处理消息队列中 ...

- JS之类数组

类数组 什么是类数组? 定义: 拥有length属性,其属性(索引)为非负整数 不具有数组的所具有的方法 类数组与非类数组的比较 类数组: var obj = { 0 : "a", ...

- 引入UEditor后其他列表项不显示

最近在做的一个后台管理系统,发现一个bug: 问题描述:如果其他列表项都用类为col-xs-12的div包裹,而引入UEditor的部分不用类为col-xs-12的div包裹,那么其他列表项将无法显示 ...

- Dynamics CRM Web API中的and和or组合的正确方式!

关注本人微信和易信公众号: 微软动态CRM专家罗勇 ,回复243或者20170111可方便获取本文,同时可以在第一间得到我发布的最新的博文信息,follow me!我的网站是 www.luoyong. ...

- CentOS 7安装指南

CentOS 7安装指南(U盘版) 一.准备阶段 1.下载CentOS7镜像文件(ISO文件)到自己电脑,官网下载路径: http://isoredirect.centos.org/centos/7/ ...