Building Your Own Object Detection Model with the TensorFlow Object Detection API

1. Download the TensorFlow Object Detection API source code

Repository: https://github.com/tensorflow/models. You can download it with Git: open Git Bash and run git clone https://github.com/tensorflow/models.git.

2. Label the training images

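① In the models\research\object_detection folder of the project downloaded in step 1, create a my_test_images folder containing a train folder and a test folder. Put the images you want the model to recognize into train (for training) and test (for evaluation).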

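② Download the labelImg tool from https://tzutalin.github.io/labelImg/ and run labelImg.exe. Click Open Dir, open models\research\object_detection\my_test_images\train and then my_test_images\test, and draw a labeled box around the objects in every image. Save each annotation as an .xml file with the same name as its image.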
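labelImg saves its annotations in the Pascal VOC XML format. The sketch below parses a hypothetical annotation for one image (the file name, size and box values are invented for illustration) and pulls out the same fields the conversion scripts in the next step write to CSV. In labelImg's layout, name is the first child of object and bndbox is the fifth, so looking fields up by tag name or by position gives the same result.

```python
import xml.etree.ElementTree as ET

# Hypothetical annotation in the Pascal VOC layout written by labelImg
SAMPLE_XML = """<annotation>
    <folder>train</folder>
    <filename>cat001.jpg</filename>
    <size>
        <width>500</width>
        <height>375</height>
        <depth>3</depth>
    </size>
    <object>
        <name>cat</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <difficult>0</difficult>
        <bndbox>
            <xmin>48</xmin>
            <ymin>30</ymin>
            <xmax>310</xmax>
            <ymax>360</ymax>
        </bndbox>
    </object>
</annotation>"""


def boxes_from_voc_xml(xml_text):
    """Return one (filename, width, height, class, xmin, ymin, xmax, ymax) tuple per <object>."""
    root = ET.fromstring(xml_text)
    filename = root.find('filename').text
    width = int(root.find('size/width').text)
    height = int(root.find('size/height').text)
    rows = []
    for obj in root.findall('object'):
        box = obj.find('bndbox')
        rows.append((filename, width, height, obj.find('name').text,
                     int(box.find('xmin').text), int(box.find('ymin').text),
                     int(box.find('xmax').text), int(box.find('ymax').text)))
    return rows


print(boxes_from_voc_xml(SAMPLE_XML))
# [('cat001.jpg', 500, 375, 'cat', 48, 30, 310, 360)]
```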

③ In models\research\object_detection\my_test_images, create a folder named xml_to_csv, and in it create two files: test_xml_to_csv.py and train_xml_to_csv.py.

The code for test_xml_to_csv.py:

```python
# -*- coding: utf-8 -*-
"""
Created on Wed Mar 13 21:50:27 2019 @author: CFF
"""
import os
import glob
import xml.etree.ElementTree as ET

import pandas as pd

# Folder containing the test images and their labelImg .xml files
path = 'C:\\Users\\CFF\\Desktop\\models\\research\\object_detection\\my_test_images\\test'
os.chdir(path)


def xml_to_csv(path):
    """Return a DataFrame with one row per bounding box found in the .xml files in path."""
    xml_list = []
    for xml_file in glob.glob(path + '/*.xml'):
        tree = ET.parse(xml_file)
        root = tree.getroot()
        for member in root.findall('object'):
            # Look fields up by tag name instead of by child position
            bndbox = member.find('bndbox')
            value = (root.find('filename').text,
                     int(root.find('size/width').text),
                     int(root.find('size/height').text),
                     member.find('name').text,
                     int(bndbox.find('xmin').text),
                     int(bndbox.find('ymin').text),
                     int(bndbox.find('xmax').text),
                     int(bndbox.find('ymax').text))
            xml_list.append(value)
    column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']
    xml_df = pd.DataFrame(xml_list, columns=column_name)
    return xml_df


def main():
    image_path = path
    xml_df = xml_to_csv(image_path)
    # cat_test.csv can be renamed to suit your data; it is written to the
    # current working directory, i.e. the test folder selected by os.chdir above
    xml_df.to_csv('cat_test.csv', index=None)
    print('Successfully converted xml to csv.')


main()
```

Open test_xml_to_csv.py in Spyder and run it. A cat_test.csv file is generated in C:\Users\CFF\Desktop\models\research\object_detection\my_test_images\test; it can be opened in Excel.

Likewise, the code for train_xml_to_csv.py:

```python
# -*- coding: utf-8 -*-
"""
Created on Wed Mar 13 21:48:33 2019 @author: CFF
"""
import os
import glob
import xml.etree.ElementTree as ET

import pandas as pd

# Folder containing the training images and their labelImg .xml files
path = 'C:\\Users\\CFF\\Desktop\\models\\research\\object_detection\\my_test_images\\train'
os.chdir(path)


def xml_to_csv(path):
    """Return a DataFrame with one row per bounding box found in the .xml files in path."""
    xml_list = []
    for xml_file in glob.glob(path + '/*.xml'):
        tree = ET.parse(xml_file)
        root = tree.getroot()
        for member in root.findall('object'):
            # Look fields up by tag name instead of by child position
            bndbox = member.find('bndbox')
            value = (root.find('filename').text,
                     int(root.find('size/width').text),
                     int(root.find('size/height').text),
                     member.find('name').text,
                     int(bndbox.find('xmin').text),
                     int(bndbox.find('ymin').text),
                     int(bndbox.find('xmax').text),
                     int(bndbox.find('ymax').text))
            xml_list.append(value)
    column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']
    xml_df = pd.DataFrame(xml_list, columns=column_name)
    return xml_df


def main():
    image_path = path
    xml_df = xml_to_csv(image_path)
    # Written to the train folder selected by os.chdir above
    xml_df.to_csv('cat_train.csv', index=None)
    print('Successfully converted xml to csv.')


main()
```

Open train_xml_to_csv.py in Spyder and run it. A cat_train.csv file is generated in C:\Users\CFF\Desktop\models\research\object_detection\my_test_images\train; it can be opened in Excel.
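The two scripts differ only in the folder name and the output file name. As an alternative, here is a minimal sketch of a single parameterized converter. The helper names xml_dir_to_dataframe and convert_split are hypothetical, and it assumes the my_test_images\train and my_test_images\test layout from step ①.

```python
import glob
import os
import xml.etree.ElementTree as ET

import pandas as pd

COLUMNS = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']


def xml_dir_to_dataframe(xml_dir):
    """Read every labelImg .xml file in xml_dir and return one row per bounding box."""
    rows = []
    for xml_file in sorted(glob.glob(os.path.join(xml_dir, '*.xml'))):
        root = ET.parse(xml_file).getroot()
        size = root.find('size')
        for obj in root.findall('object'):
            box = obj.find('bndbox')
            rows.append((root.find('filename').text,
                         int(size.find('width').text),
                         int(size.find('height').text),
                         obj.find('name').text,
                         int(box.find('xmin').text), int(box.find('ymin').text),
                         int(box.find('xmax').text), int(box.find('ymax').text)))
    return pd.DataFrame(rows, columns=COLUMNS)


def convert_split(base_dir, split, prefix='cat'):
    """Write <base_dir>/<split>/<prefix>_<split>.csv and return its path."""
    split_dir = os.path.join(base_dir, split)
    csv_path = os.path.join(split_dir, '{}_{}.csv'.format(prefix, split))
    xml_dir_to_dataframe(split_dir).to_csv(csv_path, index=False)
    return csv_path
```

For example, convert_split(r'C:\Users\CFF\Desktop\models\research\object_detection\my_test_images', 'train') writes my_test_images\train\cat_train.csv, and passing 'test' writes cat_test.csv. Because it uses absolute paths, it does not need os.chdir.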

3. Convert cat_train.csv and cat_test.csv into the train.record and test.record datasets

① Put cat_train.csv and cat_test.csv in C:\Users\CFF\Desktop\models\research\object_detection\data.

② In C:\Users\CFF\Desktop\models\research\object_detection, create an images folder and put all the training and test images in it.

③ In Spyder, create a generate_tfrecord.py file in C:\Users\CFF\Desktop\models\research\object_detection with the following code:

```python
# -*- coding: utf-8 -*-
"""
Created on Wed Mar 13 21:56:20 2019 @author: CFF

Usage:
  # From models/research/object_detection/
  # Create train data:
  python generate_tfrecord.py --csv_input=data/cat_train.csv --output_path=data/train.record
  # Create test data:
  python generate_tfrecord.py --csv_input=data/cat_test.csv --output_path=data/test.record
"""
import os
import io
from collections import namedtuple

import pandas as pd
import tensorflow as tf
from PIL import Image

from object_detection.utils import dataset_util

os.chdir('C:\\Users\\CFF\\Desktop\\models\\research\\object_detection')

flags = tf.app.flags
flags.DEFINE_string('csv_input', '', 'Path to the CSV input')
flags.DEFINE_string('output_path', '', 'Path to output TFRecord')
FLAGS = flags.FLAGS


# TO-DO replace this with label map
def class_text_to_int(row_label):
    """Map a class name to its id; edit to match your labels and cat_label_map.pbtxt."""
    if row_label == 'cat':
        return 1
    # Fail early: returning None would only break later inside int64_list_feature
    raise ValueError('Unknown label: {}'.format(row_label))


def split(df, group):
    """Group the CSV rows so that each image becomes one (filename, rows) record."""
    data = namedtuple('data', ['filename', 'object'])
    gb = df.groupby(group)
    return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)]


def create_tf_example(group, path):
    with tf.gfile.GFile(os.path.join(path, '{}'.format(group.filename)), 'rb') as fid:
        encoded_jpg = fid.read()
    encoded_jpg_io = io.BytesIO(encoded_jpg)
    image = Image.open(encoded_jpg_io)
    width, height = image.size

    filename = group.filename.encode('utf8')
    image_format = b'jpg'
    xmins = []
    xmaxs = []
    ymins = []
    ymaxs = []
    classes_text = []
    classes = []

    for index, row in group.object.iterrows():
        # Box coordinates are stored normalized to [0, 1]
        xmins.append(row['xmin'] / width)
        xmaxs.append(row['xmax'] / width)
        ymins.append(row['ymin'] / height)
        ymaxs.append(row['ymax'] / height)
        classes_text.append(row['class'].encode('utf8'))
        classes.append(class_text_to_int(row['class']))

    tf_example = tf.train.Example(features=tf.train.Features(feature={
        'image/height': dataset_util.int64_feature(height),
        'image/width': dataset_util.int64_feature(width),
        'image/filename': dataset_util.bytes_feature(filename),
        'image/source_id': dataset_util.bytes_feature(filename),
        'image/encoded': dataset_util.bytes_feature(encoded_jpg),
        'image/format': dataset_util.bytes_feature(image_format),
        'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),
        'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),
        'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),
        'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),
        'image/object/class/text': dataset_util.bytes_list_feature(classes_text),
        'image/object/class/label': dataset_util.int64_list_feature(classes),
    }))
    return tf_example


def main(_):
    writer = tf.python_io.TFRecordWriter(FLAGS.output_path)
    # Images are read from the images folder created in step ②
    path = os.path.join(os.getcwd(), 'images')
    examples = pd.read_csv(FLAGS.csv_input)
    grouped = split(examples, 'filename')
    for group in grouped:
        tf_example = create_tf_example(group, path)
        writer.write(tf_example.SerializeToString())
    writer.close()
    output_path = os.path.join(os.getcwd(), FLAGS.output_path)
    print('Successfully created the TFRecords: {}'.format(output_path))


if __name__ == '__main__':
    tf.app.run()
```
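create_tf_example writes one record per image and divides every box coordinate by the image width or height, so the TFRecord stores coordinates in [0, 1]. The sketch below reproduces just that grouping and normalization with pandas, without TensorFlow, so you can sanity-check a CSV before converting it. It is an assumption of this sketch that the width/height columns in the CSV match the real image sizes (the script itself reads the size from the image file).

```python
import pandas as pd


def normalized_boxes_per_image(df):
    """Group CSV rows by filename and scale pixel boxes to [0, 1].

    Returns {filename: [(class, xmin, ymin, xmax, ymax), ...]}.
    """
    result = {}
    for filename, group in df.groupby('filename'):
        result[filename] = [
            (row['class'],
             float(row['xmin'] / row['width']), float(row['ymin'] / row['height']),
             float(row['xmax'] / row['width']), float(row['ymax'] / row['height']))
            for _, row in group.iterrows()
        ]
    return result


rows = pd.DataFrame(
    [('a.jpg', 200, 100, 'cat', 20, 10, 100, 50),
     ('a.jpg', 200, 100, 'cat', 0, 0, 200, 100),
     ('b.jpg', 400, 400, 'cat', 100, 100, 300, 300)],
    columns=['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax'])
print(normalized_boxes_per_image(rows)['a.jpg'][0])
# ('cat', 0.1, 0.1, 0.5, 0.5)
```

Any normalized value outside [0, 1] usually means a box was drawn past the image border or the width and height were swapped.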

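Open Anaconda Prompt, change to C:\Users\CFF\Desktop\models\research\object_detection, and run python generate_tfrecord.py --csv_input=data/cat_train.csv --output_path=data/train.record and then python generate_tfrecord.py --csv_input=data/cat_test.csv --output_path=data/test.record. The train.record and test.record files are generated in the data folder. (Note: if this, or verifying the TensorFlow Object Detection API, fails with No module named 'object_detection', create a tensorflow_model.pth file in the Anaconda3\Lib\site-packages folder of your installation containing the paths of your models\research and models\research\slim folders, one per line, e.g. C:\Users\CFF\Desktop\models\research and C:\Users\CFF\Desktop\models\research\slim.)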
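The .pth fix works because Python reads every .pth file in site-packages at startup and adds each existing directory listed in it (one per line) to sys.path. Below is a sketch of a helper that writes that file; write_pth_file is a hypothetical name, and picking the first site.getsitepackages() entry that ends in site-packages is an assumption about your environment, so pass site_packages_dir explicitly if it picks the wrong one.

```python
import os
import site


def write_pth_file(research_dir, site_packages_dir=None, name='tensorflow_model.pth'):
    """Write a .pth file listing research_dir and research_dir/slim; return its path."""
    if site_packages_dir is None:
        # Assumption: the active environment's site-packages is the one to use
        candidates = [p for p in site.getsitepackages()
                      if p.rstrip('\\/').endswith('site-packages')]
        if not candidates:
            raise RuntimeError('No site-packages directory found; pass site_packages_dir')
        site_packages_dir = candidates[0]
    paths = [research_dir, os.path.join(research_dir, 'slim')]
    pth_path = os.path.join(site_packages_dir, name)
    with open(pth_path, 'w') as f:
        f.write('\n'.join(paths) + '\n')
    return pth_path
```

For example, write_pth_file(r'C:\Users\CFF\Desktop\models\research'); open a new Anaconda Prompt afterwards so the interpreter picks the file up.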

④ In the data folder, create a cat_label_map.pbtxt file, open it in Spyder, and enter:

```
item {
  id: 1
  name: 'cat'
}
```

Adjust this to your number of classes: add one item block per class, with ids starting at 1.
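With several classes, the label map and class_text_to_int in generate_tfrecord.py must agree on every id. One way to keep them in sync is to generate both from a single list of names, as in this sketch; make_label_map, make_class_text_to_int and the extra 'dog' class are hypothetical. Ids start at 1 because the Object Detection API reserves 0 for the background.

```python
def make_label_map(class_names):
    """Build label map text in the item { id / name } format, with ids 1..N."""
    items = []
    for idx, name in enumerate(class_names, start=1):
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (idx, name))
    return '\n'.join(items)


def make_class_text_to_int(class_names):
    """Return a lookup function consistent with make_label_map(class_names)."""
    mapping = {name: idx for idx, name in enumerate(class_names, start=1)}

    def class_text_to_int(row_label):
        if row_label not in mapping:
            raise ValueError('Unknown label: {}'.format(row_label))
        return mapping[row_label]

    return class_text_to_int


CLASSES = ['cat', 'dog']  # hypothetical second class
print(make_label_map(CLASSES))
```

You would save the printed text as data\cat_label_map.pbtxt and use the returned function in place of the hand-written if/else.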

4. Create a training folder in C:\Users\CFF\Desktop\models\research\object_detection.

Get the ssd_mobilenet_v1_coco.config sample from https://github.com/tensorflow/models/tree/master/research/object_detection/samples/configs, then create a text file named ssd_mobilenet_v1_coco.config in the training folder with the following content:

```
# SSD with Mobilenet v1 configuration for MSCOCO Dataset.
# Users should configure the fine_tune_checkpoint field in the train config as
# well as the label_map_path and input_path fields in the train_input_reader and
# eval_input_reader. Search for "PATH_TO_BE_CONFIGURED" to find the fields that
# should be configured.

model {
  ssd {
    num_classes: 1  # set this to your number of classes
    box_coder {
      faster_rcnn_box_coder {
        y_scale: 10.0
        x_scale: 10.0
        height_scale: 5.0
        width_scale: 5.0
      }
    }
    matcher {
      argmax_matcher {
        matched_threshold: 0.5
        unmatched_threshold: 0.5
        ignore_thresholds: false
        negatives_lower_than_unmatched: true
        force_match_for_each_row: true
      }
    }
    similarity_calculator {
      iou_similarity {
      }
    }
    anchor_generator {
      ssd_anchor_generator {
        num_layers: 6
        min_scale: 0.2
        max_scale: 0.95
        aspect_ratios: 1.0
        aspect_ratios: 2.0
        aspect_ratios: 0.5
        aspect_ratios: 3.0
        aspect_ratios: 0.3333
      }
    }
    image_resizer {
      fixed_shape_resizer {
        height: 300
        width: 300
      }
    }
    box_predictor {
      convolutional_box_predictor {
        min_depth: 0
        max_depth: 0
        num_layers_before_predictor: 0
        use_dropout: false
        dropout_keep_probability: 0.8
        kernel_size: 1
        box_code_size: 4
        apply_sigmoid_to_scores: false
        conv_hyperparams {
          activation: RELU_6,
          regularizer {
            l2_regularizer {
              weight: 0.00004
            }
          }
          initializer {
            truncated_normal_initializer {
              stddev: 0.03
              mean: 0.0
            }
          }
          batch_norm {
            train: true,
            scale: true,
            center: true,
            decay: 0.9997,
            epsilon: 0.001,
          }
        }
      }
    }
    feature_extractor {
      type: 'ssd_mobilenet_v1'
      min_depth: 16
      depth_multiplier: 1.0
      conv_hyperparams {
        activation: RELU_6,
        regularizer {
          l2_regularizer {
            weight: 0.00004
          }
        }
        initializer {
          truncated_normal_initializer {
            stddev: 0.03
            mean: 0.0
          }
        }
        batch_norm {
          train: true,
          scale: true,
          center: true,
          decay: 0.9997,
          epsilon: 0.001,
        }
      }
    }
    loss {
      classification_loss {
        weighted_sigmoid {
        }
      }
      localization_loss {
        weighted_smooth_l1 {
        }
      }
      hard_example_miner {
        num_hard_examples: 3000
        iou_threshold: 0.99
        loss_type: CLASSIFICATION
        max_negatives_per_positive: 3
        min_negatives_per_image: 0
      }
      classification_weight: 1.0
      localization_weight: 1.0
    }
    normalize_loss_by_num_matches: true
    post_processing {
      batch_non_max_suppression {
        score_threshold: 1e-8
        iou_threshold: 0.6
        max_detections_per_class: 100
        max_total_detections: 100
      }
      score_converter: SIGMOID
    }
  }
}

train_config: {
  batch_size: 1
  optimizer {
    rms_prop_optimizer: {
      learning_rate: {
        exponential_decay_learning_rate {
          initial_learning_rate: 0.004
          decay_steps: 800720
          decay_factor: 0.95
        }
      }
      momentum_optimizer_value: 0.9
      decay: 0.9
      epsilon: 1.0
    }
  }
  # fine_tune_checkpoint: "PATH_TO_BE_CONFIGURED/model.ckpt"
  # from_detection_checkpoint: true
  # Note: The below line limits the training process to 200K steps, which we
  # empirically found to be sufficient enough to train the pets dataset. This
  # effectively bypasses the learning rate schedule (the learning rate will
  # never decay). Remove the below line to train indefinitely.
  num_steps: 200000
  data_augmentation_options {
    random_horizontal_flip {
    }
  }
  data_augmentation_options {
    ssd_random_crop {
    }
  }
}

train_input_reader: {
  tf_record_input_reader {
    input_path: "data/train.record"
  }
  label_map_path: "data/cat_label_map.pbtxt"
}

eval_config: {
  num_examples: 8000
  # Note: The below line limits the evaluation process to 10 evaluations.
  # Remove the below line to evaluate indefinitely.
  max_evals: 10
}

eval_input_reader: {
  tf_record_input_reader {
    input_path: "data/test.record"
  }
  label_map_path: "data/cat_label_map.pbtxt"
  shuffle: false
  num_readers: 1
}
```

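Here num_classes: 1 should be set to your actual number of classes. input_path: "data/train.record" and input_path: "data/test.record" point to the train.record and test.record files generated earlier in the data folder, and label_map_path: "data/cat_label_map.pbtxt" points to the label map created earlier in the same folder. These relative paths are resolved against the directory you start training from, which is why training is run from object_detection.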
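A mismatch between num_classes and the label map, or a mistyped record path, often only shows up after training has started. The sketch below is a quick pre-flight check; it uses regular expressions rather than a real protobuf text parser, which is an assumption that holds for simple files like the ones above (object_detection.utils.config_util.get_configs_from_pipeline_file is the proper parser if you need one). check_config_against_label_map and missing_paths are hypothetical names.

```python
import os
import re


def check_config_against_label_map(config_text, label_map_text):
    """Return a list of problems; an empty list means num_classes matches the label map."""
    match = re.search(r'num_classes:\s*(\d+)', config_text)
    if match is None:
        return ['num_classes not found in config']
    num_classes = int(match.group(1))
    ids = [int(i) for i in re.findall(r'\bid:\s*(\d+)', label_map_text)]
    problems = []
    if num_classes != len(ids):
        problems.append('num_classes={} but label map has {} items'.format(num_classes, len(ids)))
    if sorted(ids) != list(range(1, len(ids) + 1)):
        problems.append('label map ids should be 1..N, got {}'.format(sorted(ids)))
    return problems


def missing_paths(config_text, base_dir):
    """List input_path / label_map_path values that do not exist relative to base_dir."""
    paths = re.findall(r'(?:input_path|label_map_path)\s*:\s*"([^"]+)"', config_text)
    return [p for p in paths if not os.path.exists(os.path.join(base_dir, p))]
```

Usage: read training\ssd_mobilenet_v1_coco.config and data\cat_label_map.pbtxt as text, then call both functions with the object_detection folder as base_dir; both should return empty lists.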

5. Train the model

① From the models/research directory, run protoc object_detection/protos/*.proto --python_out=. to compile every .proto file into a .py file.
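The Windows command prompt does not expand wildcards itself, so with some protoc builds the *.proto pattern may be reported as a missing file. If that happens, one workaround is to call protoc once per file, as in this sketch. It assumes protoc is on your PATH; protoc_commands and compile_protos are hypothetical helper names.

```python
import glob
import os
import subprocess


def protoc_commands(research_dir):
    """Build one protoc command per .proto file, with paths relative to research_dir."""
    pattern = os.path.join(research_dir, 'object_detection', 'protos', '*.proto')
    commands = []
    for proto in sorted(glob.glob(pattern)):
        rel = os.path.relpath(proto, research_dir).replace(os.sep, '/')
        commands.append(['protoc', rel, '--python_out=.'])
    return commands


def compile_protos(research_dir):
    """Run every command from research_dir; raises CalledProcessError if protoc fails."""
    for cmd in protoc_commands(research_dir):
        subprocess.run(cmd, cwd=research_dir, check=True)
```

For example, compile_protos(r'C:\Users\CFF\Desktop\models\research') should leave a *_pb2.py file next to each .proto file.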

② Open Anaconda Prompt, change to the object_detection folder with cd C:\Users\CFF\Desktop\models\research\object_detection, and run:

```
python model_main.py --pipeline_config_path=training/ssd_mobilenet_v1_coco.config --model_dir=training --num_train_steps=50000 --num_eval_steps=2000
```

Anaconda Prompt does not treat \ as a line continuation, so enter the whole command on one line.

Training starts. After it has run for a while, run tensorboard --logdir=training in C:\Users\CFF\Desktop\models\research\object_detection and open the URL it prints in a browser to see the latest charts.

6. Test on your own images

① Put the images to be recognized in C:\Users\CFF\Desktop\models\research\object_detection\test_images, named image1.jpg to imageN.jpg.
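Renaming many files by hand is tedious. Here is a sketch that renames every .jpg in a folder to image1.jpg, image2.jpg, ... in sorted order; rename_to_image_n is a hypothetical helper. It first moves the files to temporary names so that an existing image2.jpg cannot be overwritten partway through.

```python
import glob
import os


def rename_to_image_n(folder, ext='.jpg'):
    """Rename every *ext file in folder to image1<ext>, image2<ext>, ... and return the new paths."""
    files = sorted(glob.glob(os.path.join(folder, '*' + ext)))
    temp_names = []
    for i, path in enumerate(files):
        tmp = os.path.join(folder, '__tmp_rename_{}{}'.format(i, ext))
        os.rename(path, tmp)
        temp_names.append(tmp)
    new_names = []
    for i, tmp in enumerate(temp_names, start=1):
        new = os.path.join(folder, 'image{}{}'.format(i, ext))
        os.rename(tmp, new)
        new_names.append(new)
    return new_names
```

The number of files returned is the N to use in the test script's TEST_IMAGE_PATHS.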

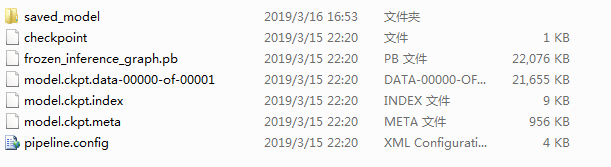

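② Open Anaconda Prompt, change to C:\Users\CFF\Desktop\models\research\object_detection with cd, and run python export_inference_graph.py --input_type image_tensor --pipeline_config_path training/ssd_mobilenet_v1_coco.config --trained_checkpoint_prefix training/model.ckpt-9278 --output_directory cat_detection (on one line). Here model.ckpt-9278 is the checkpoint saved at the last training step, which you can find in the training folder; replace 9278 with your own last step. The exported model files, including frozen_inference_graph.pb, are generated in the cat_detection folder.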
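To find the last step without browsing the training folder, a sketch like the following picks the highest-numbered model.ckpt-N.index file. latest_checkpoint_prefix is a hypothetical helper; note that it compares step numbers numerically, since sorting file names as text would rank model.ckpt-950 above model.ckpt-9278. In TensorFlow 1.x, tf.train.latest_checkpoint('training') returns the prefix recorded in the checkpoint file and is an alternative.

```python
import glob
import os
import re


def latest_checkpoint_prefix(model_dir):
    """Return e.g. 'training/model.ckpt-9278' for the newest checkpoint, or None if there is none."""
    steps = []
    for index_file in glob.glob(os.path.join(model_dir, 'model.ckpt-*.index')):
        match = re.search(r'model\.ckpt-(\d+)\.index$', index_file)
        if match:
            steps.append(int(match.group(1)))
    if not steps:
        return None
    return os.path.join(model_dir, 'model.ckpt-{}'.format(max(steps)))
```

The returned string can be passed directly as --trained_checkpoint_prefix.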

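③ Open Anaconda Prompt, change to C:\Users\CFF\Desktop\models\research\object_detection with cd, and run jupyter notebook to open the interactive environment. Open object_detection_tutorial.ipynb and download it as a Python file, object_detection_tutorial.py (File > Download as > Python (.py)).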

④ Open object_detection_tutorial.py in Spyder and edit it as follows:

```python
# coding: utf-8
# # Object Detection Demo
# Welcome to the object detection inference walkthrough! This notebook will walk you step by
# step through the process of using a pre-trained model to detect objects in an image. Make
# sure to follow the installation instructions
# (https://github.com/tensorflow/models/blob/master/research/object_detection/g3doc/installation.md)
# before you start.

# ## Imports
import os
import sys
from distutils.version import StrictVersion

import numpy as np
import tensorflow as tf
from matplotlib import pyplot as plt
from PIL import Image

# This is needed since the notebook is stored in the object_detection folder.
sys.path.append("..")
from object_detection.utils import ops as utils_ops

if StrictVersion(tf.__version__) < StrictVersion('1.12.0'):
    raise ImportError('Please upgrade your TensorFlow installation to v1.12.*.')

# ## Env setup
# This is needed to display the images; it only works in an IPython console such as Spyder's.
get_ipython().run_line_magic('matplotlib', 'inline')

# ## Object detection imports
# Here are the imports from the object detection module.
from utils import label_map_util
from utils import visualization_utils as vis_util

# ## Model preparation
# Any model exported using the export_inference_graph.py tool can be loaded here simply by
# changing PATH_TO_FROZEN_GRAPH to point to a new .pb file. The download step of the original
# notebook is not needed because the model was exported locally in the previous step.
MODEL_NAME = 'cat_detection'
# Path to frozen detection graph. This is the actual model that is used for the object detection.
PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb'
# List of the strings that is used to add correct label for each box.
PATH_TO_LABELS = os.path.join('data', 'cat_label_map.pbtxt')

# ## Load a (frozen) Tensorflow model into memory.
detection_graph = tf.Graph()
with detection_graph.as_default():
    od_graph_def = tf.GraphDef()
    with tf.gfile.GFile(PATH_TO_FROZEN_GRAPH, 'rb') as fid:
        serialized_graph = fid.read()
        od_graph_def.ParseFromString(serialized_graph)
        tf.import_graph_def(od_graph_def, name='')

# ## Loading label map
# Label maps map indices to category names, so that when the network predicts 1
# we know that this corresponds to 'cat'.
category_index = label_map_util.create_category_index_from_labelmap(PATH_TO_LABELS, use_display_name=True)


# ## Helper code
def load_image_into_numpy_array(image):
    (im_width, im_height) = image.size
    return np.array(image.getdata()).reshape(
        (im_height, im_width, 3)).astype(np.uint8)


# ## Detection
# Test images are test_images/image1.jpg ... image4.jpg; change range(1, 5) to match your image count.
PATH_TO_TEST_IMAGES_DIR = 'test_images'
TEST_IMAGE_PATHS = [os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(1, 5)]
# Size, in inches, of the output images.
IMAGE_SIZE = (12, 8)


def run_inference_for_single_image(image, graph):
    with graph.as_default():
        with tf.Session() as sess:
            # Get handles to input and output tensors
            ops = tf.get_default_graph().get_operations()
            all_tensor_names = {output.name for op in ops for output in op.outputs}
            tensor_dict = {}
            for key in ['num_detections', 'detection_boxes', 'detection_scores',
                        'detection_classes', 'detection_masks']:
                tensor_name = key + ':0'
                if tensor_name in all_tensor_names:
                    tensor_dict[key] = tf.get_default_graph().get_tensor_by_name(tensor_name)
            if 'detection_masks' in tensor_dict:
                # The following processing is only for single image
                detection_boxes = tf.squeeze(tensor_dict['detection_boxes'], [0])
                detection_masks = tf.squeeze(tensor_dict['detection_masks'], [0])
                # Reframe is required to translate mask from box coordinates to image coordinates and fit the image size.
                real_num_detection = tf.cast(tensor_dict['num_detections'][0], tf.int32)
                detection_boxes = tf.slice(detection_boxes, [0, 0], [real_num_detection, -1])
                detection_masks = tf.slice(detection_masks, [0, 0, 0], [real_num_detection, -1, -1])
                detection_masks_reframed = utils_ops.reframe_box_masks_to_image_masks(
                    detection_masks, detection_boxes, image.shape[0], image.shape[1])
                detection_masks_reframed = tf.cast(
                    tf.greater(detection_masks_reframed, 0.5), tf.uint8)
                # Follow the convention by adding back the batch dimension
                tensor_dict['detection_masks'] = tf.expand_dims(detection_masks_reframed, 0)
            image_tensor = tf.get_default_graph().get_tensor_by_name('image_tensor:0')

            # Run inference; the model expects a batch of shape [1, None, None, 3]
            output_dict = sess.run(tensor_dict,
                                   feed_dict={image_tensor: np.expand_dims(image, 0)})

            # all outputs are float32 numpy arrays, so convert types as appropriate
            output_dict['num_detections'] = int(output_dict['num_detections'][0])
            output_dict['detection_classes'] = output_dict['detection_classes'][0].astype(np.uint8)
            output_dict['detection_boxes'] = output_dict['detection_boxes'][0]
            output_dict['detection_scores'] = output_dict['detection_scores'][0]
            if 'detection_masks' in output_dict:
                output_dict['detection_masks'] = output_dict['detection_masks'][0]
    return output_dict


for image_path in TEST_IMAGE_PATHS:
    # convert('RGB') guards against grayscale or RGBA files, which would break the reshape
    image = Image.open(image_path).convert('RGB')
    # the array based representation of the image will be used later in order to prepare the
    # result image with boxes and labels on it.
    image_np = load_image_into_numpy_array(image)
    # Actual detection.
    output_dict = run_inference_for_single_image(image_np, detection_graph)
    # Visualization of the results of a detection.
    vis_util.visualize_boxes_and_labels_on_image_array(
        image_np,
        output_dict['detection_boxes'],
        output_dict['detection_classes'],
        output_dict['detection_scores'],
        category_index,
        instance_masks=output_dict.get('detection_masks'),
        use_normalized_coordinates=True,
        line_thickness=8)
    plt.figure(figsize=IMAGE_SIZE)
    plt.imshow(image_np)
    plt.show()
```

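MODEL_NAME = 'cat_detection' refers to the cat_detection folder created in C:\Users\CFF\Desktop\models\research\object_detection. PATH_TO_FROZEN_GRAPH = MODEL_NAME + '/frozen_inference_graph.pb' is the frozen_inference_graph.pb file in the cat_detection folder, and PATH_TO_LABELS = os.path.join('data', 'cat_label_map.pbtxt') is the cat_label_map.pbtxt file in the data folder. PATH_TO_TEST_IMAGES_DIR = 'test_images' is the folder holding the test images. In TEST_IMAGE_PATHS = [ os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(1, 5) ], adjust the range to the number of images: range(1, 5) covers image1.jpg to image4.jpg, so N images need range(1, N + 1). Save and run the script; the test images with their detection boxes are displayed in the Spyder console.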
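visualize_boxes_and_labels_on_image_array only draws detections scoring at least 0.5 by default, and output_dict stores each box as normalized [ymin, xmin, ymax, xmax]. If you want the results as numbers rather than a picture, a sketch like this filters by score and converts the boxes to pixel coordinates. boxes_to_pixels is a hypothetical helper, and the 0.5 threshold simply mirrors the drawing default.

```python
import numpy as np


def boxes_to_pixels(output_dict, image_width, image_height, min_score=0.5):
    """Return (class_id, score, (xmin, ymin, xmax, ymax)) in pixels for detections >= min_score."""
    results = []
    for box, score, cls in zip(output_dict['detection_boxes'],
                               output_dict['detection_scores'],
                               output_dict['detection_classes']):
        if score < min_score:
            continue
        ymin, xmin, ymax, xmax = box  # normalized, in the API's [ymin, xmin, ymax, xmax] order
        results.append((int(cls), float(score),
                        (int(round(xmin * image_width)), int(round(ymin * image_height)),
                         int(round(xmax * image_width)), int(round(ymax * image_height)))))
    return results
```

Inside the detection loop you could call boxes_to_pixels(output_dict, image.size[0], image.size[1]) and look up each class id in category_index to get its name.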
