oozie.log reports org.apache.oozie.service.ServiceException: E0104 error: An Admin needs to install the sharelib with oozie-setup.sh and issue the 'oozie admin' CLI command to update sharelib

Without further ado, let's get straight to the point!

Problem details

I won't go into detail here on how to start Oozie.

Detailed Oozie startup steps (CDH version, 3-node cluster)

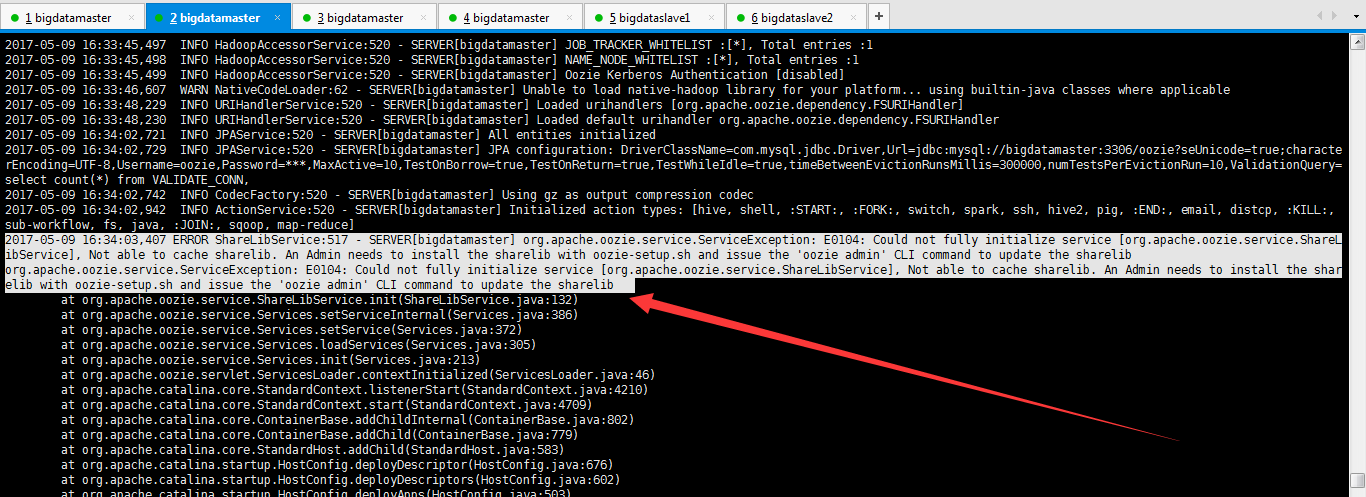

然后,我在查看

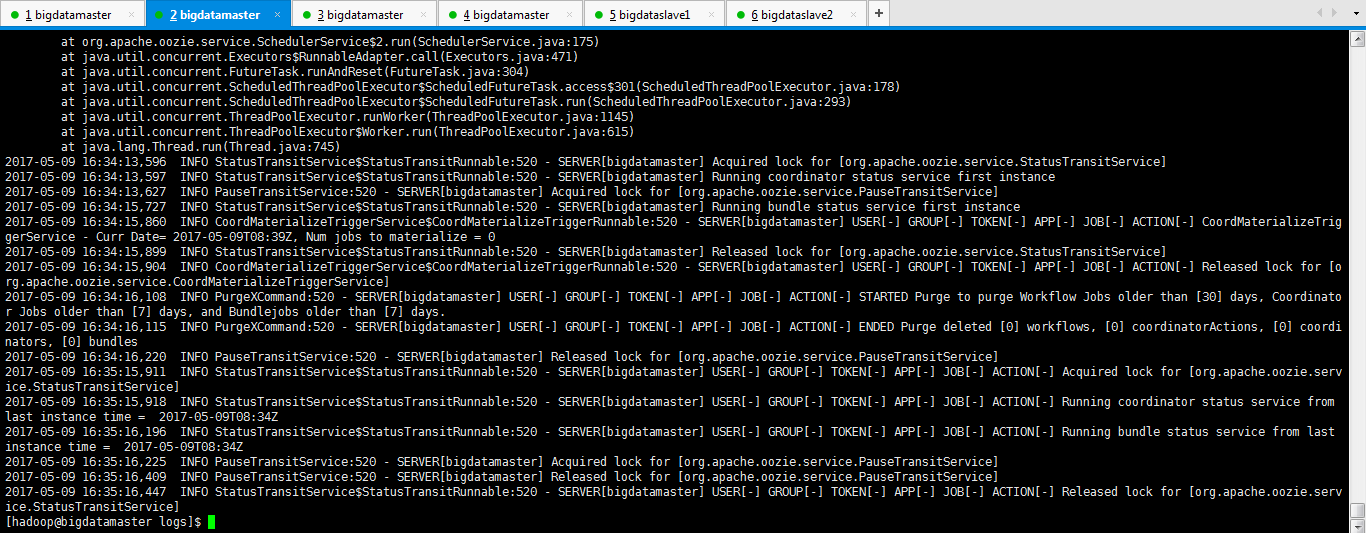

[hadoop@bigdatamaster logs]$ tail -n oozie.log

时,报错提示,如下

[hadoop@bigdatamaster logs]$ tail -n oozie.log

-- ::, WARN ConfigurationService: - SERVER[bigdatamaster] Invalid configuration defined, [oozie.service.ProxyUserervic.proxyuser.hadoop.hosts]

-- ::, WARN ConfigurationService: - SERVER[bigdatamaster] Invalid configuration defined, [oozie.service.ProxyUserervic.proxyuser.hadoop.groups]

-- ::, WARN ConfigurationService: - SERVER[bigdatamaster] Invalid configuration defined, [oozie.service.ProxyUserService.proxyuser.hadoop.groups]

-- ::, WARN Services: - SERVER[bigdatamaster] System ID [oozie-hado] exceeds maximum length [], trimming

-- ::, INFO Services: - SERVER[bigdatamaster] Exiting null Entering NORMAL

-- ::, INFO Services: - SERVER[bigdatamaster] Initialized runtime directory [/home/hadoop/app/oozie-4.1.-cdh5.5.4/oozie-server/temp/oozie-hado4109573002071520237.dir]

-- ::, WARN AuthorizationService: - SERVER[bigdatamaster] Oozie running with authorization disabled

-- ::, INFO HadoopAccessorService: - SERVER[bigdatamaster] JOB_TRACKER_WHITELIST :[*], Total entries :

-- ::, INFO HadoopAccessorService: - SERVER[bigdatamaster] NAME_NODE_WHITELIST :[*], Total entries :

-- ::, INFO HadoopAccessorService: - SERVER[bigdatamaster] Oozie Kerberos Authentication [disabled]

-- ::, WARN NativeCodeLoader: - SERVER[bigdatamaster] Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

-- ::, INFO URIHandlerService: - SERVER[bigdatamaster] Loaded urihandlers [org.apache.oozie.dependency.FSURIHandler]

-- ::, INFO URIHandlerService: - SERVER[bigdatamaster] Loaded default urihandler org.apache.oozie.dependency.FSURIHandler

-- ::, INFO JPAService: - SERVER[bigdatamaster] All entities initialized

-- ::, INFO JPAService: - SERVER[bigdatamaster] JPA configuration: DriverClassName=com.mysql.jdbc.Driver,Url=jdbc:mysql://bigdatamaster:3306/oozie?seUnicode=true;characterEncoding=UTF-8,Username=oozie,Password=***,MaxActive=10,TestOnBorrow=true,TestOnReturn=true,TestWhileIdle=true,timeBetweenEvictionRunsMillis=300000,numTestsPerEvictionRun=10,ValidationQuery=select count(*) from VALIDATE_CONN,

-- ::, INFO CodecFactory: - SERVER[bigdatamaster] Using gz as output compression codec

2017-05-09 16:34:02,942 INFO ActionService:520 - SERVER[bigdatamaster] Initialized action types: [hive, shell, :START:, :FORK:, switch, spark, ssh, hive2, pig, :END:, email, distcp, :KILL:, sub-workflow, fs, java, :JOIN:, sqoop, map-reduce]

2017-05-09 16:34:03,407 ERROR ShareLibService:517 - SERVER[bigdatamaster] org.apache.oozie.service.ServiceException: E0104: Could not fully initialize service [org.apache.oozie.service.ShareLibService], Not able to cache sharelib. An Admin needs to install the sharelib with oozie-setup.sh and issue the 'oozie admin' CLI command to update the sharelib

org.apache.oozie.service.ServiceException: E0104: Could not fully initialize service [org.apache.oozie.service.ShareLibService], Not able to cache sharelib. An Admin needs to install the sharelib with oozie-setup.sh and issue the 'oozie admin' CLI command to update the sharelib

at org.apache.oozie.service.ShareLibService.init(ShareLibService.java:)

at org.apache.oozie.service.Services.setServiceInternal(Services.java:)

at org.apache.oozie.service.Services.setService(Services.java:)

at org.apache.oozie.service.Services.loadServices(Services.java:)

at org.apache.oozie.service.Services.init(Services.java:)

at org.apache.oozie.servlet.ServicesLoader.contextInitialized(ServicesLoader.java:)

at org.apache.catalina.core.StandardContext.listenerStart(StandardContext.java:)

at org.apache.catalina.core.StandardContext.start(StandardContext.java:)

at org.apache.catalina.core.ContainerBase.addChildInternal(ContainerBase.java:)

at org.apache.catalina.core.ContainerBase.addChild(ContainerBase.java:)

at org.apache.catalina.core.StandardHost.addChild(StandardHost.java:)

at org.apache.catalina.startup.HostConfig.deployDescriptor(HostConfig.java:)

at org.apache.catalina.startup.HostConfig.deployDescriptors(HostConfig.java:)

at org.apache.catalina.startup.HostConfig.deployApps(HostConfig.java:)

at org.apache.catalina.startup.HostConfig.start(HostConfig.java:)

at org.apache.catalina.startup.HostConfig.lifecycleEvent(HostConfig.java:)

at org.apache.catalina.util.LifecycleSupport.fireLifecycleEvent(LifecycleSupport.java:)

at org.apache.catalina.core.ContainerBase.start(ContainerBase.java:)

at org.apache.catalina.core.StandardHost.start(StandardHost.java:)

at org.apache.catalina.core.ContainerBase.start(ContainerBase.java:)

at org.apache.catalina.core.StandardEngine.start(StandardEngine.java:)

at org.apache.catalina.core.StandardService.start(StandardService.java:)

at org.apache.catalina.core.StandardServer.start(StandardServer.java:)

at org.apache.catalina.startup.Catalina.start(Catalina.java:)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:)

at java.lang.reflect.Method.invoke(Method.java:)

at org.apache.catalina.startup.Bootstrap.start(Bootstrap.java:)

at org.apache.catalina.startup.Bootstrap.main(Bootstrap.java:)

Caused by: java.lang.NoClassDefFoundError: org/apache/htrace/core/Tracer$Builder

at org.apache.hadoop.fs.FsTracer.get(FsTracer.java:)

at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:)

at org.apache.hadoop.fs.FileSystem.access$(FileSystem.java:)

at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:)

at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:)

at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:)

at org.apache.oozie.service.ShareLibService.init(ShareLibService.java:)

... more

Caused by: java.lang.ClassNotFoundException: org.apache.htrace.core.Tracer$Builder

at org.apache.catalina.loader.WebappClassLoader.loadClass(WebappClassLoader.java:)

at org.apache.catalina.loader.WebappClassLoader.loadClass(WebappClassLoader.java:)

... more

-- ::, INFO ProxyUserService: - SERVER[bigdatamaster] Loading proxyuser settings [oozie.service.ProxyUserService.proxyuser.hue.hosts]=[*]

-- ::, INFO ProxyUserService: - SERVER[bigdatamaster] Loading proxyuser settings [oozie.service.ProxyUserService.proxyuser.hue.groups]=[*]

-- ::, INFO ProxyUserService: - SERVER[bigdatamaster] Loading proxyuser settings [oozie.service.ProxyUserService.proxyuser.hadoop.hosts]=[*]

-- ::, INFO ProxyUserService: - SERVER[bigdatamaster] Loading proxyuser settings [oozie.service.ProxyUserService.proxyuser.hadoop.groups]=[*]

-- ::, WARN SparkConfigurationService: - SERVER[bigdatamaster] Spark Configuration could not be loaded for *: /home/hadoop/app/oozie-4.1.-cdh5.5.4/conf/spark-conf does not exist

-- ::, INFO Services: - SERVER[bigdatamaster] Initialized

-- ::, INFO Services: - SERVER[bigdatamaster] Running with JARs for Hadoop version [2.6.-cdh5.5.4]

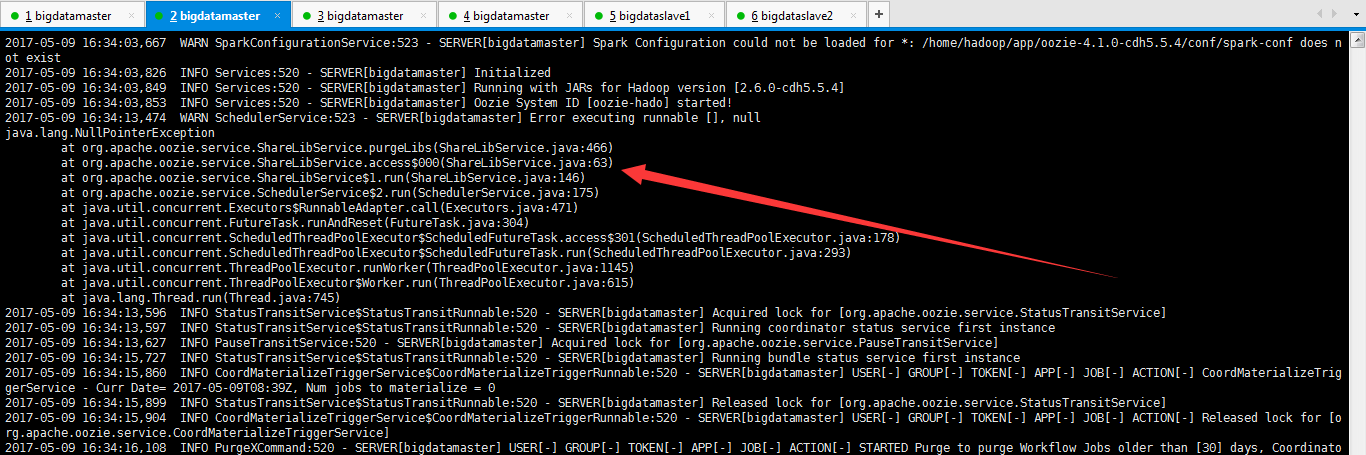

2017-05-09 16:34:03,853 INFO Services:520 - SERVER[bigdatamaster] Oozie System ID [oozie-hado] started!

2017-05-09 16:34:13,474 WARN SchedulerService:523 - SERVER[bigdatamaster] Error executing runnable [], null

java.lang.NullPointerException

at org.apache.oozie.service.ShareLibService.purgeLibs(ShareLibService.java:)

at org.apache.oozie.service.ShareLibService.access$(ShareLibService.java:)

at org.apache.oozie.service.ShareLibService$.run(ShareLibService.java:)

at org.apache.oozie.service.SchedulerService$.run(SchedulerService.java:)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:)

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$(ScheduledThreadPoolExecutor.java:)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:)

at java.lang.Thread.run(Thread.java:)

-- ::, INFO StatusTransitService$StatusTransitRunnable: - SERVER[bigdatamaster] Acquired lock for [org.apache.oozie.service.StatusTransitService]

-- ::, INFO StatusTransitService$StatusTransitRunnable: - SERVER[bigdatamaster] Running coordinator status service first instance

-- ::, INFO PauseTransitService: - SERVER[bigdatamaster] Acquired lock for [org.apache.oozie.service.PauseTransitService]

-- ::, INFO StatusTransitService$StatusTransitRunnable: - SERVER[bigdatamaster] Running bundle status service first instance

-- ::, INFO CoordMaterializeTriggerService$CoordMaterializeTriggerRunnable: - SERVER[bigdatamaster] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[-] ACTION[-] CoordMaterializeTriggerService - Curr Date= --09T08:39Z, Num jobs to materialize =

-- ::, INFO StatusTransitService$StatusTransitRunnable: - SERVER[bigdatamaster] Released lock for [org.apache.oozie.service.StatusTransitService]

-- ::, INFO CoordMaterializeTriggerService$CoordMaterializeTriggerRunnable: - SERVER[bigdatamaster] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[-] ACTION[-] Released lock for [org.apache.oozie.service.CoordMaterializeTriggerService]

-- ::, INFO PurgeXCommand: - SERVER[bigdatamaster] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[-] ACTION[-] STARTED Purge to purge Workflow Jobs older than [] days, Coordinator Jobs older than [] days, and Bundlejobs older than [] days.

-- ::, INFO PurgeXCommand: - SERVER[bigdatamaster] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[-] ACTION[-] ENDED Purge deleted [] workflows, [] coordinatorActions, [] coordinators, [] bundles

-- ::, INFO PauseTransitService: - SERVER[bigdatamaster] Released lock for [org.apache.oozie.service.PauseTransitService]

-- ::, INFO StatusTransitService$StatusTransitRunnable: - SERVER[bigdatamaster] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[-] ACTION[-] Acquired lock for [org.apache.oozie.service.StatusTransitService]

-- ::, INFO StatusTransitService$StatusTransitRunnable: - SERVER[bigdatamaster] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[-] ACTION[-] Running coordinator status service from last instance time = --09T08:34Z

-- ::, INFO StatusTransitService$StatusTransitRunnable: - SERVER[bigdatamaster] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[-] ACTION[-] Running bundle status service from last instance time = --09T08:34Z

-- ::, INFO PauseTransitService: - SERVER[bigdatamaster] Acquired lock for [org.apache.oozie.service.PauseTransitService]

-- ::, INFO PauseTransitService: - SERVER[bigdatamaster] Released lock for [org.apache.oozie.service.PauseTransitService]

-- ::, INFO StatusTransitService$StatusTransitRunnable: - SERVER[bigdatamaster] USER[-] GROUP[-] TOKEN[-] APP[-] JOB[-] ACTION[-] Released lock for [org.apache.oozie.service.StatusTransitService]

[hadoop@bigdatamaster logs]$

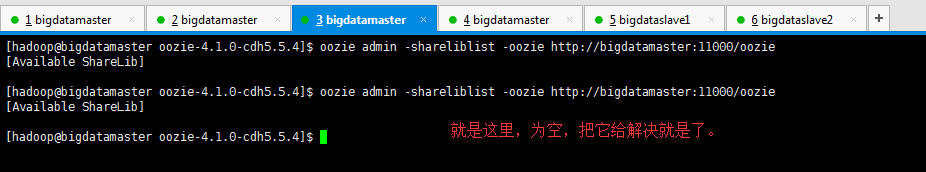

Problem analysis

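Put simply, the Oozie server has no sharelib available at all, as the empty [Available ShareLib] list below shows: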

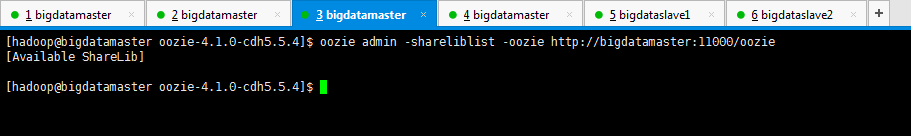

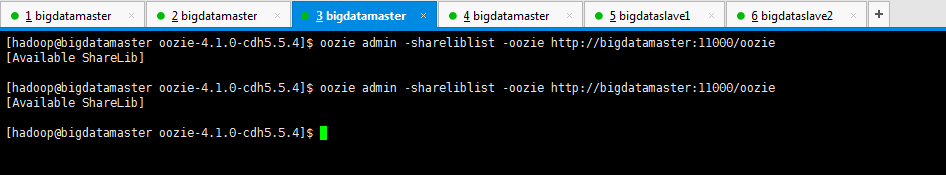

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ oozie admin -shareliblist -oozie http://bigdatamaster:11000/oozie

[Available ShareLib]

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ oozie admin -shareliblist -oozie http://bigdatamaster:11000/oozie

[Available ShareLib]

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$

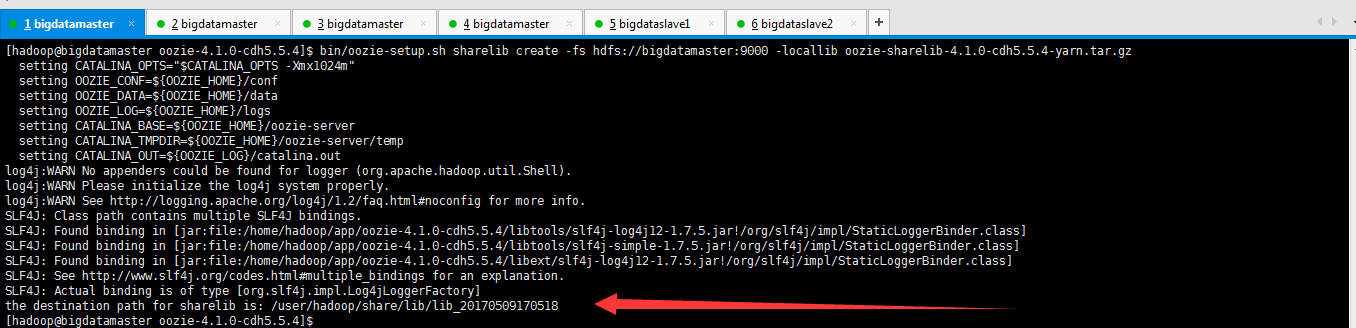

Solution

Re-run the following command to regenerate the sharelib:

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ bin/oozie-setup.sh sharelib create -fs hdfs://bigdatamaster:9000 -locallib oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

setting OOZIE_CONF=${OOZIE_HOME}/conf

setting OOZIE_DATA=${OOZIE_HOME}/data

setting OOZIE_LOG=${OOZIE_HOME}/logs

setting CATALINA_BASE=${OOZIE_HOME}/oozie-server

setting CATALINA_TMPDIR=${OOZIE_HOME}/oozie-server/temp

setting CATALINA_OUT=${OOZIE_LOG}/catalina.out

log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/app/oozie-4.1.-cdh5.5.4/libtools/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/app/oozie-4.1.-cdh5.5.4/libtools/slf4j-simple-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/app/oozie-4.1.-cdh5.5.4/libext/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

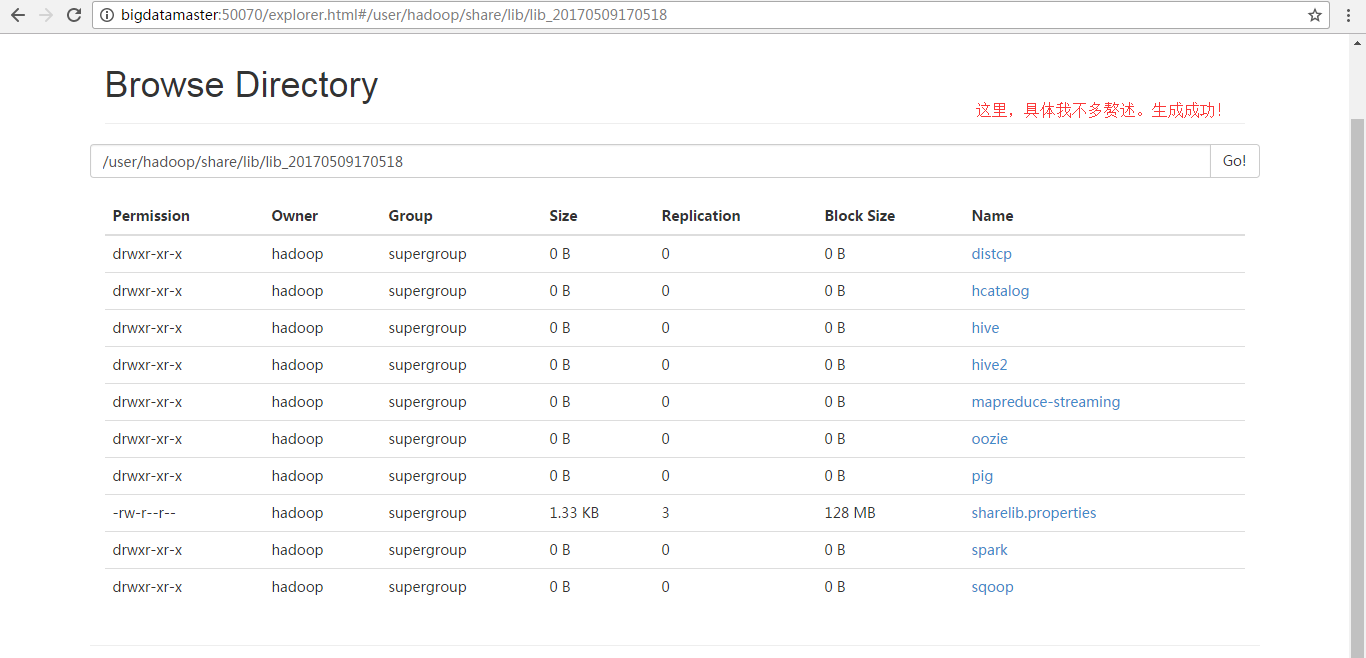

the destination path for sharelib is: /user/hadoop/share/lib/lib_20170509170518

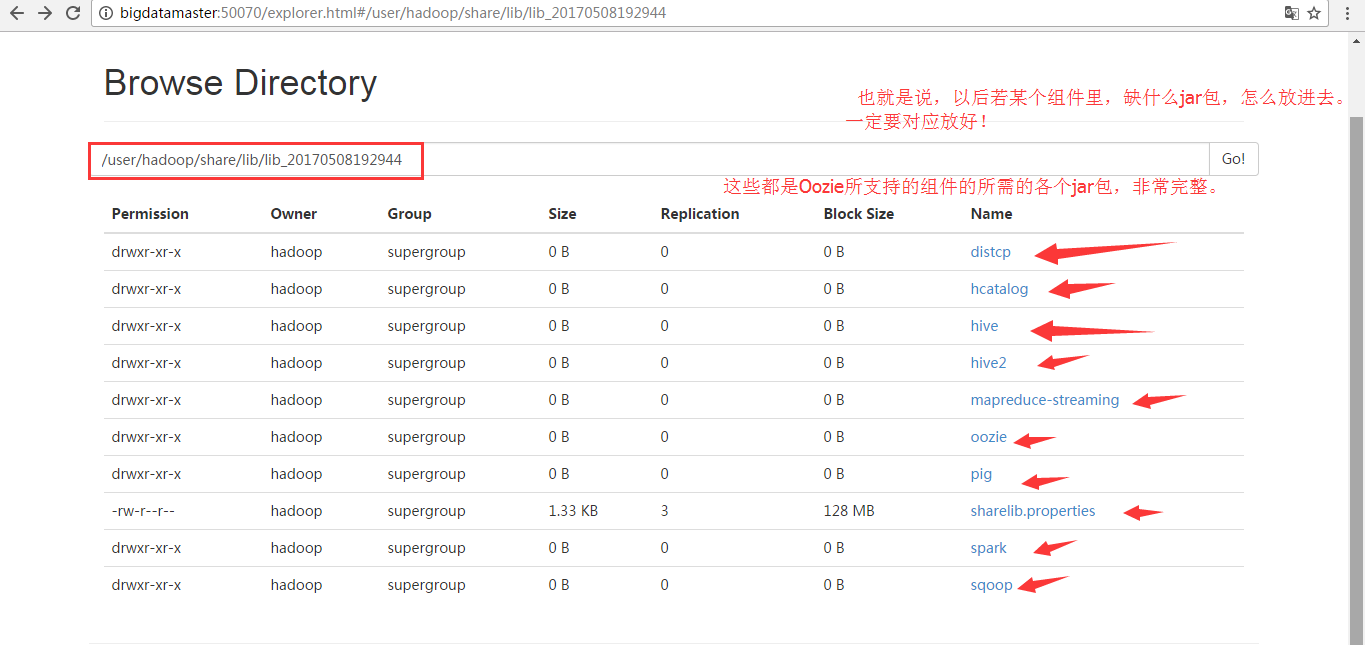

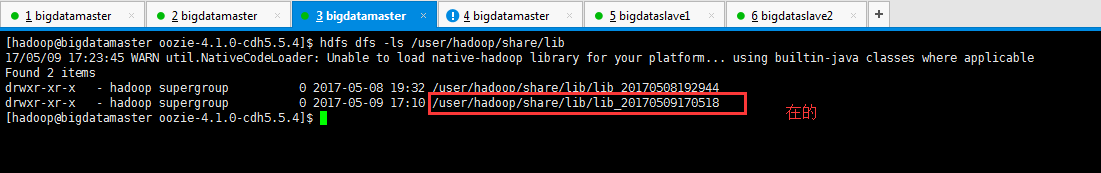

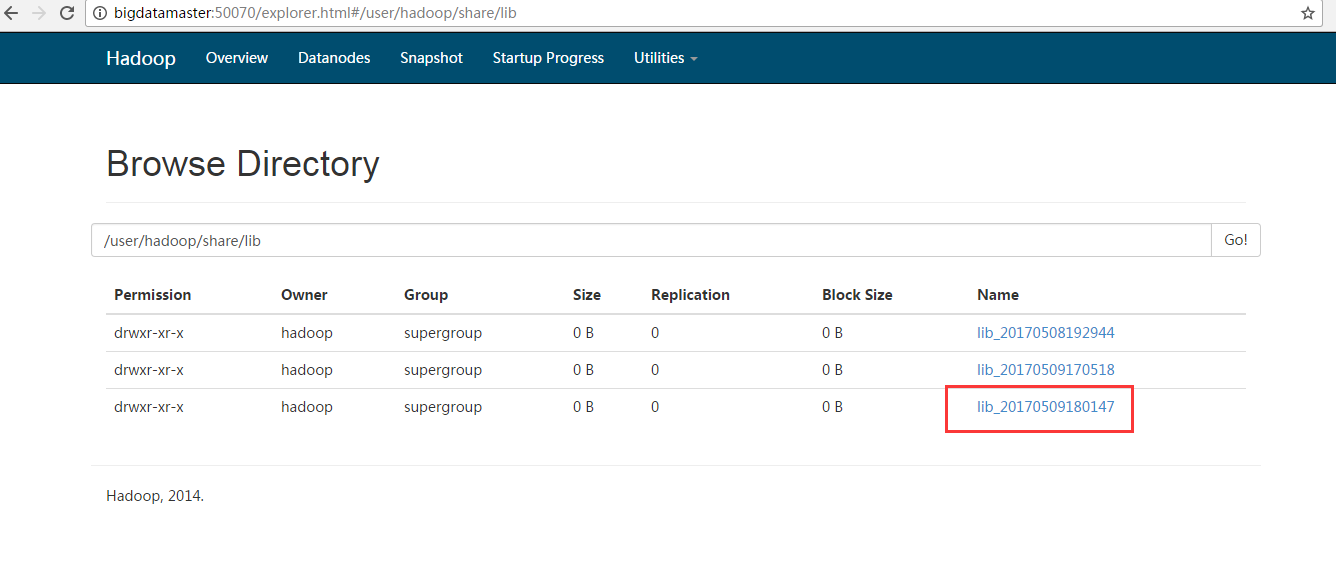

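Now /user/hadoop/share/lib contains one timestamped directory per run: lib_20170508192944 is from my earlier attempt, and lib_20170509170518 is from the run above (each directory is named after the moment the command was executed).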

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ hdfs dfs -ls /user/hadoop/share/lib

17/05/09 17:23:45 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

drwxr-xr-x - hadoop supergroup 0 2017-05-08 19:32 /user/hadoop/share/lib/lib_20170508192944

drwxr-xr-x - hadoop supergroup 0 2017-05-09 17:10 /user/hadoop/share/lib/lib_20170509170518

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$
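Since every run of sharelib create adds another lib_<timestamp> directory, it helps to see at a glance which one is the newest. My understanding is that the Oozie server uses the most recent lib_<timestamp> directory under the sharelib root, but treat that as an assumption. Below is a minimal Python sketch that picks the newest directory from hdfs dfs -ls output; the name newest_sharelib is hypothetical.

```python
import re
from typing import Optional

# Matches HDFS paths ending in lib_<yyyyMMddHHmmss>, the naming used by
# "oozie-setup.sh sharelib create" (e.g. lib_20170509170518).
LIB_DIR = re.compile(r"(/\S*/lib_(\d{14}))\s*$")


def newest_sharelib(ls_output: str) -> Optional[str]:
    """Return the lib_<timestamp> path with the latest timestamp, or None."""
    candidates = []
    for line in ls_output.splitlines():
        match = LIB_DIR.search(line)
        if match:
            # A fixed-width yyyyMMddHHmmss string sorts chronologically.
            candidates.append((match.group(2), match.group(1)))
    return max(candidates)[1] if candidates else None


if __name__ == "__main__":
    listing = """Found 2 items
drwxr-xr-x - hadoop supergroup 0 2017-05-08 19:32 /user/hadoop/share/lib/lib_20170508192944
drwxr-xr-x - hadoop supergroup 0 2017-05-09 17:10 /user/hadoop/share/lib/lib_20170509170518"""
    print(newest_sharelib(listing))
```

Run on the listing above, it prints /user/hadoop/share/lib/lib_20170509170518.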

At the same time, find the following properties in core-site.xml under your $HADOOP_HOME/etc/hadoop:

<property>

<name>oozie.service.HadoopAccessorService.jobTracker.whitelist</name>

<value>*</value>

<description>whitelisted job tracker for oozie service</description>

</property>

<property>

<name>oozie.service.HadoopAccessorService.nameNode.whitelist</name>

<value>*</value>

<description>whitelisted name node for oozie service</description>

</property>

Remove both of them.
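If you prefer to script this edit instead of doing it by hand, here is a minimal sketch using Python's standard xml.etree.ElementTree. remove_properties and WHITELIST_PROPS are hypothetical names. Note that ElementTree's default parser drops XML comments and the XML declaration, so back up core-site.xml first.

```python
import xml.etree.ElementTree as ET
from typing import Iterable

# The two Oozie whitelist properties mentioned above.
WHITELIST_PROPS = (
    "oozie.service.HadoopAccessorService.jobTracker.whitelist",
    "oozie.service.HadoopAccessorService.nameNode.whitelist",
)


def remove_properties(xml_text: str, names: Iterable[str]) -> str:
    """Return xml_text without the <property> elements whose <name> is in names."""
    wanted = set(names)
    root = ET.fromstring(xml_text)
    # Iterate over a copy of the child list, because we remove while looping.
    for prop in list(root.findall("property")):
        if (prop.findtext("name") or "").strip() in wanted:
            root.remove(prop)
    return ET.tostring(root, encoding="unicode")
```

For example, read core-site.xml, pass its text and WHITELIST_PROPS to remove_properties, review the result, then write it back.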

Then stop Oozie and start it again.

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ bin/oozie-stop.sh

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ bin/oozie-start.sh

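You might check oozie.log right away and think the problem is gone. But refresh it a few more times, or wait half a minute and look again, and the E0104 error is back. In other words, the problem still isn't fully solved!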
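Because the error only comes back after a while, rescanning the whole log is more reliable than a single tail. A minimal sketch that collects the ERROR lines mentioning E0104; e0104_lines is a hypothetical helper, and it assumes the log layout shown above, where the level appears surrounded by spaces.

```python
import re
from typing import List

# "E0104" as a whole token, e.g. "ServiceException: E0104: Could not fully ..."
E0104 = re.compile(r"\bE0104\b")


def e0104_lines(log_text: str) -> List[str]:
    """Return the ERROR-level lines of an oozie.log text that mention E0104.

    Stack-trace lines repeat the message without a log level, so only
    lines carrying " ERROR " are kept: one entry per logged occurrence.
    """
    return [
        line
        for line in log_text.splitlines()
        if " ERROR " in line and E0104.search(line)
    ]
```

Run it on the contents of oozie.log once right after startup and again half a minute later; a growing result means the error has reappeared.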

Continuing to troubleshoot

The main problem during installation is that the sharelib cannot be loaded. It can be handled as follows:

1) Check whether the sharelib has been uploaded to HDFS: run hadoop fs -ls /user/hadoop/share/lib and see whether the sharelib exists.

Of course, you can also check it this way:

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ oozie admin -shareliblist -oozie http://bigdatamaster:11000/oozie

[Available ShareLib]

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$

If nothing is listed, check whether the related settings are configured correctly. (Put simply, this is exactly what caused my problem.)
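To run this check from a script, you could parse the CLI output. This sketch assumes the output starts with the [Available ShareLib] header followed by one sharelib name per line; only the empty case appears above, so the populated format is an assumption. parse_sharelib_list is a hypothetical name.

```python
from typing import List

HEADER = "[Available ShareLib]"


def parse_sharelib_list(output: str) -> List[str]:
    """Return the sharelib names printed after the [Available ShareLib] header.

    Assumes one name per line after the header; lines starting with "["
    (such as a shell prompt captured in a transcript) are ignored.
    """
    lines = [line.strip() for line in output.splitlines()]
    if HEADER not in lines:
        raise ValueError("no [Available ShareLib] header in output")
    start = lines.index(HEADER) + 1
    return [line for line in lines[start:] if line and not line.startswith("[")]
```

For example, feed it subprocess.run(["oozie", "admin", "-shareliblist", "-oozie", "http://bigdatamaster:11000/oozie"], capture_output=True, text=True).stdout; an empty list corresponds to the failure described here.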

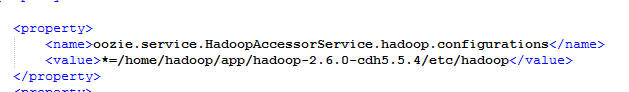

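2) Check that the Oozie-Hadoop integration is configured correctly, mainly the oozie.service.HadoopAccessorService.hadoop.configurations property. Its value here is *=/home/hadoop/app/hadoop-2.6.0-cdh5.5.4/etc/hadoop; make sure the directory it points to really is Hadoop's configuration directory. If it is misconfigured, Oozie reports that /user/hadoop/share/lib cannot be found.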

<property>

<name>oozie.service.HadoopAccessorService.hadoop.configurations</name>

<value>*=/home/hadoop/app/hadoop-2.6.0-cdh5.5.4/etc/hadoop</value>

</property>
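The value format is spelled out in the property's own description (reproduced in the default oozie-site.xml further down): a comma-separated list of AUTHORITY=HADOOP_CONF_DIR entries, where * is the wildcard and relative paths are resolved against the Oozie configuration directory. Below is a minimal sketch that parses the value and checks that the target directory holds Hadoop site files. Which files to require (core-site.xml and hdfs-site.xml here) is my assumption, and both function names are hypothetical.

```python
import os
from typing import Dict, List


def parse_hadoop_configurations(value: str, oozie_conf_dir: str) -> Dict[str, str]:
    """Parse 'AUTHORITY=HADOOP_CONF_DIR,...' into {authority: directory}.

    Relative directories are resolved against the Oozie conf directory,
    as the property description in oozie-default.xml states.
    """
    result: Dict[str, str] = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        authority, sep, conf_dir = entry.partition("=")
        if not sep:
            raise ValueError(f"entry without '=': {entry!r}")
        conf_dir = conf_dir.strip()
        if not os.path.isabs(conf_dir):
            conf_dir = os.path.join(oozie_conf_dir, conf_dir)
        result[authority.strip()] = conf_dir
    return result


# Assumption: the minimum set of Hadoop client files worth checking for.
REQUIRED_SITE_FILES = ("core-site.xml", "hdfs-site.xml")


def missing_site_files(conf_dir: str) -> List[str]:
    """Return the required *-site.xml files that are absent from conf_dir."""
    return [name for name in REQUIRED_SITE_FILES
            if not os.path.isfile(os.path.join(conf_dir, name))]
```

Any directory for which missing_site_files returns a non-empty list is a likely cause of the "/user/hadoop/share/lib not found" symptom described in step 2.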

3) Check that the oozie.services property is set correctly, mainly whether org.apache.oozie.service.JobsConcurrencyService has been moved to the top of the list. If not, a NullPointerException is reported in the Oozie server log.
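Counting by eye in a list of some thirty class names is error-prone, so here is a minimal sketch that extracts oozie.services from oozie-site.xml and reports where a given service sits. It only reports the position; whether that position is correct is the claim made in step 3, not something the script checks. Both helper names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List


def services_from_site(xml_text: str) -> List[str]:
    """Return the class names listed in oozie.services, in order."""
    root = ET.fromstring(xml_text)
    for prop in root.iter("property"):
        if (prop.findtext("name") or "").strip() == "oozie.services":
            value = prop.findtext("value") or ""
            return [cls.strip() for cls in value.split(",") if cls.strip()]
    return []


def service_position(services: List[str], simple_name: str) -> int:
    """0-based index of the service whose simple class name matches, or -1."""
    for index, cls in enumerate(services):
        if cls.rsplit(".", 1)[-1] == simple_name:
            return index
    return -1
```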

In other words, oozie-site.xml is critical! (The root cause really was that this file was not configured properly!!!)

<?xml version="1.0"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<configuration> <!--

Refer to the oozie-default.xml file for the complete list of

Oozie configuration properties and their default values.

--> <!-- Proxyuser Configuration --> <!-- <property>

<name>oozie.service.ProxyUserService.proxyuser.#USER#.hosts</name>

<value>*</value>

<description>

List of hosts the '#USER#' user is allowed to perform 'doAs'

operations. The '#USER#' must be replaced with the username of the user who is

allowed to perform 'doAs' operations. The value can be the '*' wildcard or a list of hostnames. For multiple users copy this property and replace the user name

in the property name.

</description>

</property> <property>

<name>oozie.service.ProxyUserService.proxyuser.#USER#.groups</name>

<value>*</value>

<description>

List of groups the '#USER#' user is allowed to impersonate users

from to perform 'doAs' operations. The '#USER#' must be replaced with the username of the user who is

allowed to perform 'doAs' operations. The value can be the '*' wildcard or a list of groups. For multiple users copy this property and replace the user name

in the property name.

</description>

</property> --> <!-- Default proxyuser configuration for Hue --> <property>

<name>oozie.services</name>

<value>

org.apache.oozie.service.SchedulerService,

org.apache.oozie.service.InstrumentationService,

org.apache.oozie.service.MemoryLocksService,

org.apache.oozie.service.UUIDService,

org.apache.oozie.service.ELService,

org.apache.oozie.service.AuthorizationService,

org.apache.oozie.service.UserGroupInformationService,

org.apache.oozie.service.HadoopAccessorService,

org.apache.oozie.service.JobsConcurrencyService,

org.apache.oozie.service.URIHandlerService,

org.apache.oozie.service.DagXLogInfoService,

org.apache.oozie.service.SchemaService,

org.apache.oozie.service.LiteWorkflowAppService,

org.apache.oozie.service.JPAService,

org.apache.oozie.service.StoreService,

org.apache.oozie.service.SLAStoreService,

org.apache.oozie.service.DBLiteWorkflowStoreService,

org.apache.oozie.service.CallbackService,

org.apache.oozie.service.ActionService,

org.apache.oozie.service.ShareLibService,

org.apache.oozie.service.CallableQueueService,

org.apache.oozie.service.ActionCheckerService,

org.apache.oozie.service.RecoveryService,

org.apache.oozie.service.PurgeService,

org.apache.oozie.service.CoordinatorEngineService,

org.apache.oozie.service.BundleEngineService,

org.apache.oozie.service.DagEngineService,

org.apache.oozie.service.CoordMaterializeTriggerService,

org.apache.oozie.service.StatusTransitService,

org.apache.oozie.service.PauseTransitService,

org.apache.oozie.service.GroupsService,

org.apache.oozie.service.ProxyUserService,

org.apache.oozie.service.XLogStreamingService,

org.apache.oozie.service.JvmPauseMonitorService

</value>

</property>

<property>

<name>oozie.service.HadoopAccessorService.hadoop.configurations</name>

<value>*=/home/hadoop/app/hadoop-2.6.0-cdh5.5.4/etc/hadoop</value>

</property>

<property>

<name>oozie.service.ProxyUserService.proxyuser.hue.hosts</name>

<value>*</value>

</property>

<property>

<name>oozie.service.ProxyUserService.proxyuser.hue.groups</name>

<value>*</value>

</property>

<property>

<name>oozie.service.ProxyUserService.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>oozie.service.ProxyUserService.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

<property>

<name>oozie.db.schema.name</name>

<value>oozie</value>

<description>

Oozie DataBase Name

</description>

</property>

<property>

<name>oozie.service.JPAService.create.db.schema</name>

<value>false</value>

<description>

Creates Oozie DB.

If set to true, it creates the DB schema if it does not exist. If the DB schema exists, it is a NOP.

If set to false, it does not create the DB schema. If the DB schema does not exist it fails start up.

</description>

</property>

<property>

<name>oozie.service.JPAService.jdbc.driver</name>

<value>com.mysql.jdbc.Driver</value>

<description>

JDBC driver class.

</description>

</property>

<property>

<name>oozie.service.JPAService.jdbc.url</name>

<value>jdbc:mysql://bigdatamaster:3306/oozie?createDatabaseIfNotExist=true</value>

<description>

JDBC URL.

</description>

</property>

<property>

<name>oozie.service.JPAService.jdbc.username</name>

<value>oozie</value>

<description>

DB user name.

</description>

</property>

<property>

<name>oozie.service.JPAService.jdbc.password</name>

<value>oozie</value>

<description>

DB user password.

IMPORTANT: if password is empty leave a space string, the service trims the value,

if empty Configuration assumes it is NULL.

</description>

</property>

<property>

<name>oozie.servlet.CallbackServlet.max.data.len</name>

<value></value>

</property>

</configuration>
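Note the first WARN lines in oozie.log: they complain about property names containing ProxyUserervic, which looks like a misspelling of ProxyUserService (the hue entries use the correct spelling). A heuristic sketch to catch this kind of typo: it takes the class name after oozie.service., compares it with the simple class names listed in oozie.services in the same file, and suggests the closest match via difflib. It can produce false positives (for example a property keyed on a parent class that is not itself listed in oozie.services), so treat the output as hints. The function names are hypothetical.

```python
import difflib
import xml.etree.ElementTree as ET
from typing import Dict, List, Optional, Tuple


def load_properties(xml_text: str) -> Dict[str, str]:
    """Map property name -> value for every <property> in a *-site.xml text."""
    root = ET.fromstring(xml_text)
    return {
        (prop.findtext("name") or "").strip(): (prop.findtext("value") or "").strip()
        for prop in root.iter("property")
    }


def suspicious_service_properties(xml_text: str) -> List[Tuple[str, Optional[str]]]:
    """Flag oozie.service.<Name>.* properties whose <Name> is not in oozie.services.

    Returns (property name, closest known service name or None) pairs.
    Heuristic only: lowercase segments such as oozie.service.coord.* are skipped.
    """
    props = load_properties(xml_text)
    known = sorted({
        cls.strip().rsplit(".", 1)[-1]
        for cls in props.get("oozie.services", "").split(",")
        if cls.strip()
    })
    findings: List[Tuple[str, Optional[str]]] = []
    for name in props:
        parts = name.split(".")
        if len(parts) < 3 or parts[0] != "oozie" or parts[1] != "service":
            continue
        component = parts[2]
        if not component[:1].isupper() or component in known:
            continue
        close = difflib.get_close_matches(component, known, n=1)
        findings.append((name, close[0] if close else None))
    return findings
```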

Then run it again:

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ bin/oozie-setup.sh sharelib create -fs hdfs://bigdatamaster:9000 -locallib oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz

setting CATALINA_OPTS="$CATALINA_OPTS -Xmx1024m"

setting OOZIE_CONF=${OOZIE_HOME}/conf

setting OOZIE_DATA=${OOZIE_HOME}/data

setting OOZIE_LOG=${OOZIE_HOME}/logs

setting CATALINA_BASE=${OOZIE_HOME}/oozie-server

setting CATALINA_TMPDIR=${OOZIE_HOME}/oozie-server/temp

setting CATALINA_OUT=${OOZIE_LOG}/catalina.out

log4j:WARN No appenders could be found for logger (org.apache.hadoop.util.Shell).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/home/hadoop/app/oozie-4.1.-cdh5.5.4/libtools/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/app/oozie-4.1.-cdh5.5.4/libtools/slf4j-simple-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/home/hadoop/app/oozie-4.1.-cdh5.5.4/libext/slf4j-log4j12-1.7..jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

the destination path for sharelib is: /user/hadoop/share/lib/lib_20170509180147

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$

Then:

[hadoop@bigdatamaster oozie-4.1.0-cdh5.5.4]$ bin/oozie-start.sh

I checked again: still the same, the problem is not solved.

In fact, if you open another terminal window and run jps while this command is executing, you will see:

[hadoop@bigdatamaster hadoop]$ jps

ResourceManager

NameNode

Bootstrap

Jps

SecondaryNameNode

OozieSharelibCLI

QuorumPeerMain

[hadoop@bigdatamaster hadoop]$
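In this listing, Bootstrap is the Tomcat process hosting the Oozie server (org.apache.catalina.startup.Bootstrap appears in the stack trace above), and OozieSharelibCLI is, as far as I can tell, the JVM started by oozie-setup.sh sharelib create. If you want to make sure the upload has finished before restarting Oozie, here is a minimal sketch that checks jps output. The function names are hypothetical, and the pid column is treated as optional so that the transcript above, where the pids are missing, also parses.

```python
from typing import List


def java_process_names(jps_output: str) -> List[str]:
    """Return the main-class names from jps output.

    jps normally prints "<pid> <name>"; taking the last field works both
    with and without the pid column. Shell prompts (starting with "[")
    and blank lines are skipped.
    """
    names = []
    for line in jps_output.splitlines():
        fields = line.split()
        if not fields or fields[0].startswith("["):
            continue
        names.append(fields[-1])
    return names


def sharelib_upload_running(jps_output: str) -> bool:
    """True if an OozieSharelibCLI process shows up in the jps output."""
    return "OozieSharelibCLI" in java_process_names(jps_output)
```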

Continuing to troubleshoot

The solution came from this post:

http://blog.csdn.net/maixia24/article/details/43759689

Once again, I zeroed in on oozie-site.xml.
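To see concretely where a working configuration and a reference copy differ, you can compare the two property sets instead of reading the files side by side. A minimal sketch with Python's xml.etree.ElementTree; commented-out properties are ignored because the parser drops comments, and the function names are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def load_properties(xml_text: str) -> Dict[str, str]:
    """Map property name -> value for every <property> in a *-site.xml text."""
    root = ET.fromstring(xml_text)
    return {
        (p.findtext("name") or "").strip(): (p.findtext("value") or "").strip()
        for p in root.iter("property")
    }


def diff_properties(a_xml: str, b_xml: str) -> Dict[str, list]:
    """Compare two configuration files by property name and value."""
    a, b = load_properties(a_xml), load_properties(b_xml)
    return {
        "only_in_a": sorted(a.keys() - b.keys()),
        "only_in_b": sorted(b.keys() - a.keys()),
        # (name, value in a, value in b) for properties set differently.
        "changed": sorted((k, a[k], b[k]) for k in a.keys() & b.keys() if a[k] != b[k]),
    }
```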

Below is the default oozie-site.xml from oozie-4.0.0-cdh5.3.6 (I installed oozie-4.1.0-cdh5.5.4); the two turn out to differ quite a bit.

<?xml version="1.0"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one

or more contributor license agreements. See the NOTICE file

distributed with this work for additional information

regarding copyright ownership. The ASF licenses this file

to you under the Apache License, Version 2.0 (the

"License"); you may not use this file except in compliance

with the License. You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<configuration> <!--

Refer to the oozie-default.xml file for the complete list of

Oozie configuration properties and their default values.

--> <property>

<name>oozie.service.ActionService.executor.ext.classes</name>

<value>

org.apache.oozie.action.email.EmailActionExecutor,

org.apache.oozie.action.hadoop.HiveActionExecutor,

org.apache.oozie.action.hadoop.ShellActionExecutor,

org.apache.oozie.action.hadoop.SqoopActionExecutor,

org.apache.oozie.action.hadoop.DistcpActionExecutor,

org.apache.oozie.action.hadoop.Hive2ActionExecutor

</value>

</property> <property>

<name>oozie.service.SchemaService.wf.ext.schemas</name>

<value>

shell-action-0.1.xsd,shell-action-0.2.xsd,shell-action-0.3.xsd,email-action-0.1.xsd,email-action-0.2.xsd,

hive-action-0.2.xsd,hive-action-0.3.xsd,hive-action-0.4.xsd,hive-action-0.5.xsd,sqoop-action-0.2.xsd,

sqoop-action-0.3.xsd,sqoop-action-0.4.xsd,ssh-action-0.1.xsd,ssh-action-0.2.xsd,distcp-action-0.1.xsd,

distcp-action-0.2.xsd,oozie-sla-0.1.xsd,oozie-sla-0.2.xsd,hive2-action-0.1.xsd

</value>

</property> <property>

<name>oozie.system.id</name>

<value>oozie-${user.name}</value>

<description>

The Oozie system ID.

</description>

</property> <property>

<name>oozie.systemmode</name>

<value>NORMAL</value>

<description>

System mode for Oozie at startup.

</description>

</property> <property>

<name>oozie.service.AuthorizationService.security.enabled</name>

<value>false</value>

<description>

Specifies whether security (user name/admin role) is enabled or not.

If disabled any user can manage Oozie system and manage any job.

</description>

</property> <property>

<name>oozie.service.PurgeService.older.than</name>

<value></value>

<description>

Jobs older than this value, in days, will be purged by the PurgeService.

</description>

</property> <property>

<name>oozie.service.PurgeService.purge.interval</name>

<value></value>

<description>

Interval at which the purge service will run, in seconds.

</description>

</property> <property>

<name>oozie.service.CallableQueueService.queue.size</name>

<value></value>

<description>Max callable queue size</description>

</property> <property>

<name>oozie.service.CallableQueueService.threads</name>

<value></value>

<description>Number of threads used for executing callables</description>

</property> <property>

<name>oozie.service.CallableQueueService.callable.concurrency</name>

<value></value>

<description>

Maximum concurrency for a given callable type.

Each command is a callable type (submit, start, run, signal, job, jobs, suspend,resume, etc).

Each action type is a callable type (Map-Reduce, Pig, SSH, FS, sub-workflow, etc).

All commands that use action executors (action-start, action-end, action-kill and action-check) use

the action type as the callable type.

</description>

</property> <property>

<name>oozie.service.coord.normal.default.timeout</name>

<value></value>

<description>Default timeout for a coordinator action input check (in minutes) for normal job.

- means infinite timeout</description>

</property> <property>

<name>oozie.db.schema.name</name>

<value>oozie</value>

<description>

Oozie DataBase Name

</description>

</property> <property>

<name>oozie.service.JPAService.create.db.schema</name>

<value>false</value>

<description>

Creates Oozie DB. If set to true, it creates the DB schema if it does not exist. If the DB schema exists, it is a NOP.

If set to false, it does not create the DB schema. If the DB schema does not exist it fails start up.

</description>

</property> <property>

<name>oozie.service.JPAService.jdbc.driver</name>

<value>org.apache.derby.jdbc.EmbeddedDriver</value>

<description>

JDBC driver class.

</description>

</property> <property>

<name>oozie.service.JPAService.jdbc.url</name>

<value>jdbc:derby:${oozie.data.dir}/${oozie.db.schema.name}-db;create=true</value>

<description>

JDBC URL.

</description>

</property> <property>

<name>oozie.service.JPAService.jdbc.username</name>

<value>sa</value>

<description>

DB user name.

</description>

</property> <property>

<name>oozie.service.JPAService.jdbc.password</name>

<value> </value>

<description>

DB user password. IMPORTANT: if password is empty leave a space string, the service trims the value,

if empty Configuration assumes it is NULL.

</description>

</property> <property>

<name>oozie.service.JPAService.pool.max.active.conn</name>

<value></value>

<description>

Max number of connections.

</description>

</property> <property>

<name>oozie.service.HadoopAccessorService.kerberos.enabled</name>

<value>false</value>

<description>

Indicates if Oozie is configured to use Kerberos.

</description>

</property> <property>

<name>local.realm</name>

<value>LOCALHOST</value>

<description>

Kerberos Realm used by Oozie and Hadoop. Using 'local.realm' to be aligned with Hadoop configuration

</description>

</property> <property>

<name>oozie.service.HadoopAccessorService.keytab.file</name>

<value>${user.home}/oozie.keytab</value>

<description>

Location of the Oozie user keytab file.

</description>

</property> <property>

<name>oozie.service.HadoopAccessorService.kerberos.principal</name>

<value>${user.name}/localhost@${local.realm}</value>

<description>

Kerberos principal for Oozie service.

</description>

</property> <property>

<name>oozie.service.HadoopAccessorService.jobTracker.whitelist</name>

<value> </value>

<description>

Whitelisted job tracker for Oozie service.

</description>

</property> <property>

<name>oozie.service.HadoopAccessorService.nameNode.whitelist</name>

<value> </value>

<description>

Whitelisted name node for Oozie service.

</description>

</property> <property>

<name>oozie.service.HadoopAccessorService.hadoop.configurations</name>

<value>*=hadoop-conf</value>

<description>

Comma separated AUTHORITY=HADOOP_CONF_DIR, where AUTHORITY is the HOST:PORT of

the Hadoop service (JobTracker, HDFS). The wildcard '*' configuration is

used when there is no exact match for an authority. The HADOOP_CONF_DIR contains

the relevant Hadoop *-site.xml files. If the path is relative, it is looked up within

the Oozie configuration directory; though the path can be absolute (i.e. to point

to Hadoop client conf/ directories in the local filesystem).

</description>

</property> <property>

<name>oozie.service.WorkflowAppService.system.libpath</name>

<value>/user/${user.name}/share/lib</value>

<description>

System library path to use for workflow applications.

This path is added to workflow application if their job properties sets

the property 'oozie.use.system.libpath' to true.

</description>

</property> <property>

<name>use.system.libpath.for.mapreduce.and.pig.jobs</name>

<value>false</value>

<description>

If set to true, submissions of MapReduce and Pig jobs will include

automatically the system library path, thus not requiring users to

specify where the Pig JAR files are. Instead, the ones from the system

library path are used.

</description>

</property> <property>

<name>oozie.authentication.type</name>

<value>simple</value>

<description>

Defines authentication used for Oozie HTTP endpoint.

Supported values are: simple | kerberos | #AUTHENTICATION_HANDLER_CLASSNAME#

</description>

</property> <property>

<name>oozie.authentication.token.validity</name>

<value></value>

<description>

Indicates how long (in seconds) an authentication token is valid before it has

to be renewed.

</description>

</property> <property>

<name>oozie.authentication.cookie.domain</name>

<value></value>

<description>

The domain to use for the HTTP cookie that stores the authentication token.

In order for authentication to work correctly across multiple hosts

the domain must be correctly set.

</description>

</property> <property>

<name>oozie.authentication.simple.anonymous.allowed</name>

<value>true</value>

<description>

Indicates if anonymous requests are allowed.

This setting is meaningful only when using 'simple' authentication.

</description>

</property> <property>

<name>oozie.authentication.kerberos.principal</name>

<value>HTTP/localhost@${local.realm}</value>

<description>

Indicates the Kerberos principal to be used for HTTP endpoint.

The principal MUST start with 'HTTP/' as per Kerberos HTTP SPNEGO specification.

</description>

</property> <property>

<name>oozie.authentication.kerberos.keytab</name>

<value>${oozie.service.HadoopAccessorService.keytab.file}</value>

<description>

Location of the keytab file with the credentials for the principal.

Referring to the same keytab file Oozie uses for its Kerberos credentials for Hadoop.

</description>

</property> <property>

<name>oozie.authentication.kerberos.name.rules</name>

<value>DEFAULT</value>

<description>

The Kerberos name rules are used to resolve Kerberos principal names; refer to Hadoop's

KerberosName for more details.

</description>

</property> <!-- Proxyuser Configuration --> <!-- <property>

<name>oozie.service.ProxyUserService.proxyuser.#USER#.hosts</name>

<value>*</value>

<description>

List of hosts the '#USER#' user is allowed to perform 'doAs'

operations. The '#USER#' must be replaced with the username of the user who is

allowed to perform 'doAs' operations. The value can be the '*' wildcard or a list of hostnames. For multiple users copy this property and replace the user name

in the property name.

</description>

</property> <property>

<name>oozie.service.ProxyUserService.proxyuser.#USER#.groups</name>

<value>*</value>

<description>

List of groups the '#USER#' user is allowed to impersonate users

from, to perform 'doAs' operations. The '#USER#' must be replaced with the username of the user who is

allowed to perform 'doAs' operations. The value can be the '*' wildcard or a list of groups. For multiple users copy this property and replace the user name

in the property name.

</description>

</property> --> <!-- Default proxyuser configuration for Hue --> <property>

<name>oozie.service.ProxyUserService.proxyuser.hue.hosts</name>

<value>*</value>

</property> <property>

<name>oozie.service.ProxyUserService.proxyuser.hue.groups</name>

<value>*</value>

</property> </configuration>
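The commented-out template above says that `#USER#` must be replaced with a real user name and that the pair of properties must be copied once per user. A misspelled property name is not rejected at startup: Oozie only logs an "Invalid configuration defined" warning, like the `ProxyUserervic` entries in the oozie.log excerpt at the top of this post, and the misspelled entry has no effect. A minimal sketch for avoiding hand-typed names is a small shell function that prints the pair for a given user. `proxyuser_props` is a hypothetical helper, not part of Oozie, and `*` is the default for hosts and groups only because that is what the template shows.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Oozie): print the two ProxyUserService
# properties for one user, so the property names are never typed by hand.
# Usage: proxyuser_props USER [HOSTS] [GROUPS]   (HOSTS and GROUPS default to '*')
proxyuser_props() {
  local user="$1" hosts="${2:-*}" groups="${3:-*}"
  local prefix="oozie.service.ProxyUserService.proxyuser.${user}"
  # Hosts from which USER may perform doAs operations
  printf '<property>\n  <name>%s.hosts</name>\n  <value>%s</value>\n</property>\n' "$prefix" "$hosts"
  # Groups whose members USER may impersonate
  printf '<property>\n  <name>%s.groups</name>\n  <value>%s</value>\n</property>\n' "$prefix" "$groups"
}
```

The printed `<property>` blocks would be pasted inside `<configuration>` in oozie-site.xml (for example the output of `proxyuser_props hadoop`), followed by an Oozie restart.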

If the sharelib was not configured in this step, it can still be set up after Oozie has started, and Oozie does not need to be restarted:

bin/oozie-setup.sh sharelib create -fs hdfs://bigdatamaster:9000 -locallib oozie-sharelib-4.1.0-cdh5.5.4-yarn.tar.gz # copy the sharelib from the local directory to HDFS

bin/oozie admin -oozie http://bigdatamaster:11000/oozie -sharelibupdate # make the running Oozie server update its sharelib

bin/oozie admin -oozie http://bigdatamaster:11000/oozie -shareliblist # list the sharelibs (this should normally return multiple entries)
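The last command is expected to return several entries. A minimal sketch of automating that check, assuming `-shareliblist` prints a bracketed header line such as `[Available ShareLib]` followed by one sharelib name per line (verify this against the output of your own Oozie version). `count_sharelibs` is a hypothetical helper that reads that output on stdin and counts the names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count the sharelib names in the output of
#   oozie admin -oozie URL -shareliblist
# ASSUMPTION: the output is a bracketed header line followed by one name per line.
# Blank lines and lines starting with '[' are ignored.
count_sharelibs() {
  grep -v -e '^[[:space:]]*$' -e '^\[' | wc -l | tr -d ' '
}

# Example wiring (host and port taken from the commands above):
# n=$(bin/oozie admin -oozie http://bigdatamaster:11000/oozie -shareliblist | count_sharelibs)
# [ "$n" -gt 0 ] || echo "sharelib list is empty: re-run 'sharelib create' and '-sharelibupdate'" >&2
```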

Note: if the sharelib is not set up correctly, the error "Could not locate Oozie sharelib" will appear.
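Installing the sharelib does not by itself put it on a workflow's classpath: as the `oozie.service.WorkflowAppService.system.libpath` description in the configuration above states, the system library path is only added when the job properties set `oozie.use.system.libpath` to true. A minimal sketch for making sure a `job.properties` file carries that flag, assuming plain `key=value` lines; `ensure_system_libpath` is a hypothetical helper, not an Oozie tool.

```shell
#!/usr/bin/env bash
# Hypothetical helper: ensure a job.properties file sets
#   oozie.use.system.libpath=true
# ASSUMPTION: properties are written as key=value (optionally with spaces around '=').
ensure_system_libpath() {
  local file="$1" tmp
  [ -e "$file" ] || : > "$file"          # create the file if it does not exist yet
  tmp="$(mktemp)" || return 1
  # Keep every line except an existing oozie.use.system.libpath setting...
  grep -v '^[[:space:]]*oozie\.use\.system\.libpath[[:space:]]*=' "$file" > "$tmp"
  # ...then append the setting exactly once, with the value true.
  echo 'oozie.use.system.libpath=true' >> "$tmp"
  cat "$tmp" > "$file"                   # overwrite in place so file permissions are kept
  rm -f "$tmp"
}
```

It could then be run before submitting, e.g. `ensure_system_libpath job.properties` followed by `bin/oozie job -oozie http://bigdatamaster:11000/oozie -config job.properties -run`.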

References

http://stackoverflow.com/questions/28702100/apache-oozie-failed-loading-sharelib
