Installing Hadoop on CentOS


Step 1: Environment setup

Reference: http://dblab.xmu.edu.cn/blog/install-hadoop-in-centos/

1. Create a hadoop user

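$ su # switch to root

$ useradd -m hadoop -s /bin/bash # create the new user hadoop

$ passwd hadoop # set its password (this tutorial uses hadoop)

$ visudo # give the hadoop account the same privileges as root: below the line root ALL=(ALL) ALL (usually around line 98), add hadoop ALL=(ALL) ALL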
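The sudoers change can be checked rather than discovered later by a failing sudo. A minimal sketch, assuming the rule was added in the form `hadoop ALL=(ALL) ALL`; `has_sudo_rule` is a hypothetical Bash helper, not part of sudo, and it reads whichever file you pass so it can be tried on a scratch copy. On the real system, `visudo -c` (as root) re-checks /etc/sudoers for syntax errors, and `sudo -l -U hadoop` lists what the hadoop user may run.

```shell
# has_sudo_rule FILE USER
# Hypothetical helper: succeeds if FILE contains an uncommented sudoers rule
# of the form "USER ALL=(ALL) ALL" (any amount of whitespace), fails otherwise.
# Assumes USER contains no regex metacharacters (true for "hadoop").
has_sudo_rule() {
    local file="$1" user="$2"
    grep -Eq "^[[:space:]]*${user}[[:space:]]+ALL[[:space:]]*=[[:space:]]*\(ALL\)[[:space:]]+ALL[[:space:]]*$" "$file"
}
```

For example, as root: `has_sudo_rule /etc/sudoers hadoop && echo 'rule present'`.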

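2. Install SSH

SSH is installed by default

$ rpm -qa | grep ssh # SSH is normally preinstalled; this command checks whether it is

If it needs to be installed, run:

$ sudo yum install openssh-clients

$ sudo yum install openssh-server

$ ssh localhost # test that it works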

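Configure passwordless SSH login (switch to the hadoop account at this point)

$ exit # leave the ssh localhost session opened above

$ cd ~/.ssh/ # if this directory does not exist, run ssh localhost once first

$ ssh-keygen -t rsa # press Enter at every prompt

$ cat id_rsa.pub >> authorized_keys # authorize the key

$ chmod 600 ./authorized_keys # restrict the file's permissions

Once this is done, you can log in without a password.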
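Re-running `cat id_rsa.pub >> authorized_keys` appends the same key again, and sshd (with its default `StrictModes yes`) ignores keys in a `~/.ssh` directory or `authorized_keys` file that others can write to. A minimal sketch of an idempotent version, assuming a .pub file holding a single key; `authorize_key` is a hypothetical Bash helper, and both paths are parameters so it can be tried on scratch files.

```shell
# authorize_key PUBKEY_FILE AUTHORIZED_KEYS_FILE
# Hypothetical helper: appends the (single-line) public key to the
# authorized_keys file only if that exact line is not already there, then
# sets the permissions sshd's StrictModes check expects.
authorize_key() {
    local pubkey="$1" auth_keys="$2"
    local ssh_dir
    ssh_dir="$(dirname "$auth_keys")"
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"           # only the owner may access ~/.ssh
    touch "$auth_keys"
    # -x: match whole lines; -F: fixed string (keys contain '+' and '/')
    if ! grep -qxF -- "$(cat "$pubkey")" "$auth_keys"; then
        cat "$pubkey" >> "$auth_keys"
    fi
    chmod 600 "$auth_keys"         # owner read/write only
}
```

As the hadoop user: `authorize_key ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`, then `ssh -o BatchMode=yes localhost true && echo ok`. `BatchMode=yes` makes ssh fail instead of prompting for a password, so a printed ok (once the host key has been accepted) means key-based login works.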

Test with ssh localhost:


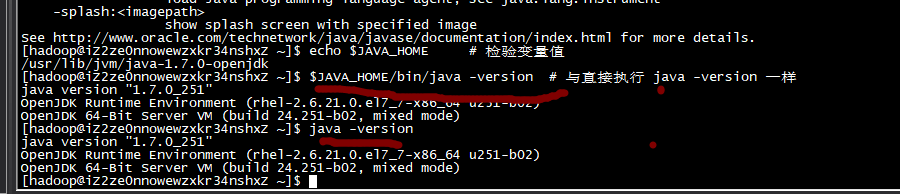

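3. Install the Java environment

Hadoop is written in Java and needs a JVM to run. We install Java 1.7 (OpenJDK).

Its default installation location is /usr/lib/jvm/java-1.7.0-openjdk.

You can confirm the path by running rpm -ql java-1.7.0-openjdk-devel | grep '/bin/javac'; the printed path, minus the trailing /bin/javac, is the JDK location.

$ sudo yum install java-1.7.0-openjdk java-1.7.0-openjdk-devel # install Java

$ vim ~/.bashrc # edit the environment variables

Add the following single line at the end of the file (it points to the JDK installation location) and save:

export JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk

$ source ~/.bashrc # make the variable take effect

Check that the variable is set correctly

$ echo $JAVA_HOME # check the variable's value

$ java -version

$ $JAVA_HOME/bin/java -version # should print the same as running java -version directly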

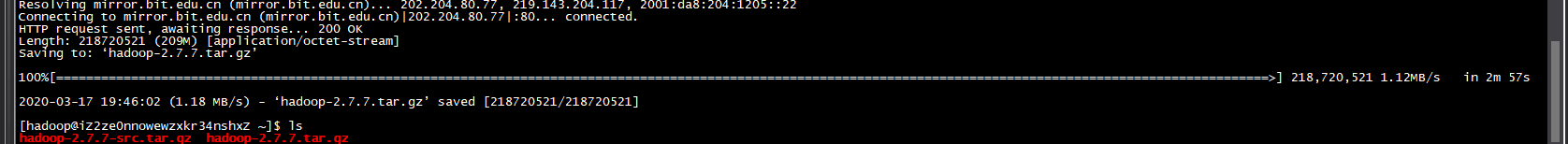
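The checks above can be rolled into one function that also confirms JAVA_HOME points at a full JDK rather than only a JRE. A minimal sketch; `check_java_home` is a hypothetical Bash helper, and treating the presence of `bin/javac` as "full JDK" is an assumption that matches the -devel package installed above.

```shell
# check_java_home [DIR]
# Hypothetical helper: checks that DIR (default: $JAVA_HOME) contains an
# executable bin/java and bin/javac, i.e. a full JDK rather than only a JRE.
check_java_home() {
    local home="${1:-${JAVA_HOME:-}}"
    local tool
    if [ -z "$home" ]; then
        echo "JAVA_HOME is not set" >&2
        return 1
    fi
    for tool in java javac; do
        if [ ! -x "$home/bin/$tool" ]; then
            echo "missing or not executable: $home/bin/$tool" >&2
            return 1
        fi
    done
    echo "JAVA_HOME OK: $home"
}
```

Run `check_java_home` with no arguments after `source ~/.bashrc` to check the variable set above.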

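4. Install Hadoop 2

Hadoop 2 can be downloaded from the Hadoop download mirror (http://mirror.bit.edu.cn/apache/hadoop/common/). The reference tutorial is written for version 2.6, and the commands here use 2.7.7. Stick with a 2.x release: Hadoop 3 introduces new features that may cause compatibility problems with these steps.

If you can't transfer the file over FTP, download it directly from the command line:

$ wget http://mirror.bit.edu.cn/apache/hadoop/common/hadoop-2.7.7/hadoop-2.7.7.tar.gz

If the mirror no longer carries this release, older Apache releases remain available under https://archive.apache.org/dist/hadoop/common/.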

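Extract and install

$ sudo tar -zxf ~/hadoop-2.7.7.tar.gz -C /usr/local # extract into /usr/local

$ cd /usr/local/

$ sudo mv ./hadoop-2.7.7/ ./hadoop # rename the directory to hadoop

$ sudo chown -R hadoop:hadoop ./hadoop # make the hadoop user the owner of the files

Check that the installation succeeded

If it did, the following prints the Hadoop version information: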

$ cd /usr/local/hadoop

$ ./bin/hadoop version
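The extract, rename, and chown steps can also be scripted so they are not accidentally run twice. A minimal sketch, assuming the tarball has a single top-level hadoop-VERSION/ directory (as the Apache release tarballs do); `install_hadoop` is a hypothetical Bash helper, and the owner argument is optional because chown normally needs root.

```shell
# install_hadoop TARBALL PREFIX [OWNER]
# Hypothetical helper: extracts TARBALL into PREFIX, renames the top-level
# hadoop-VERSION/ directory to PREFIX/hadoop, and optionally chowns it.
install_hadoop() {
    local tarball="$1" prefix="$2" owner="${3:-}"
    local top
    if [ -e "$prefix/hadoop" ]; then
        echo "$prefix/hadoop already exists; not overwriting it" >&2
        return 1
    fi
    # The first path in the archive names its top-level directory.
    top="$(tar -tzf "$tarball" | awk -F/ 'NR == 1 { print $1 }')"
    [ -n "$top" ] || return 1
    tar -zxf "$tarball" -C "$prefix" || return 1
    mv "$prefix/$top" "$prefix/hadoop" || return 1
    if [ -n "$owner" ]; then
        chown -R "$owner:$owner" "$prefix/hadoop"   # usually requires root
    fi
}
```

For example, in a root shell where the function is defined, and assuming the tarball was downloaded to the hadoop user's home: `install_hadoop /home/hadoop/hadoop-2.7.7.tar.gz /usr/local hadoop`.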

Hadoop's three modes

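| Mode | Description |
|---|---|
| Standalone mode | Runs on your own machine as a single Java process, using the local filesystem |
| Pseudo-distributed mode | One machine simulates several: each Hadoop daemon runs as a separate Java process |
| Fully distributed mode | Multiple machines work together as a cluster |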

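Standalone mode setup

Hadoop runs in standalone mode by default, so no further configuration is needed. Hadoop ships with several examples you can try.

They are in ./share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.7.jar for the release used here (hadoop-mapreduce-examples-2.6.0.jar in 2.6.0) and include wordcount, terasort, join, grep, and more; running ./bin/hadoop jar on that jar with no further arguments lists them all.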
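Because the jar's file name changes with each release, a small helper can locate it instead of hard-coding the version. A minimal sketch; `find_examples_jar` is a hypothetical Bash helper that takes the Hadoop install directory (defaulting to $HADOOP_HOME) and prints the first matching jar.

```shell
# find_examples_jar [HADOOP_DIR]
# Hypothetical helper: prints the path of the MapReduce examples jar under
# HADOOP_DIR (default: $HADOOP_HOME), whatever its version suffix is.
find_examples_jar() {
    local home="${1:-${HADOOP_HOME:-}}"
    local jar
    for jar in "$home"/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar; do
        # If nothing matches, the glob stays literal and -f fails.
        if [ -f "$jar" ]; then
            echo "$jar"
            return 0
        fi
    done
    echo "no examples jar under $home/share/hadoop/mapreduce" >&2
    return 1
}
```

For example, `./bin/hadoop jar "$(find_examples_jar /usr/local/hadoop)"` with no program name prints the list of available examples.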

$ cd /usr/local/hadoop

$ mkdir ./input

$ cp ./etc/hadoop/*.xml ./input # use the configuration files as input

$ ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar grep ./input ./output 'dfs[a-z.]+'

$ cat ./output/* # view the results

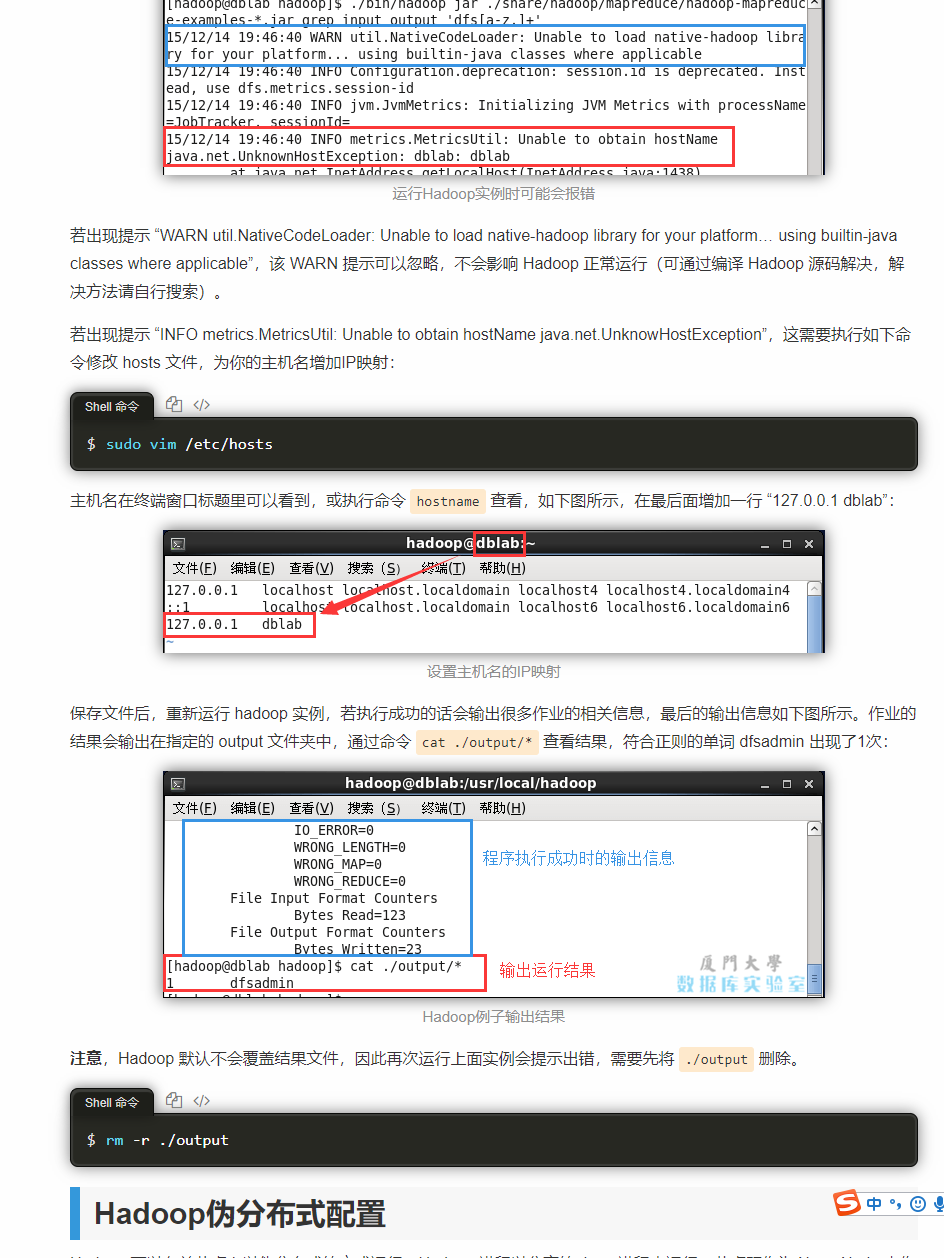

Error encountered while running it:

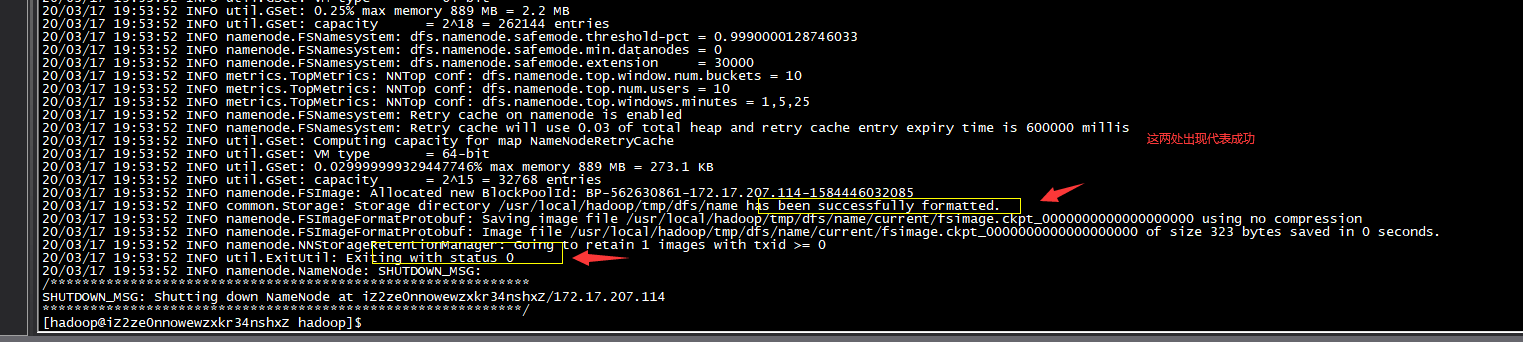
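The grep example counts how many times each string matching the regular expression occurs across the input files and writes count/match pairs, most frequent first. To sanity-check its output, a rough single-machine equivalent can be built from standard tools. This is a sketch, not how Hadoop computes the result: `local_grep_count` is a hypothetical Bash helper, it uses grep's extended regular expressions rather than Java's (identical for a pattern as simple as `dfs[a-z.]+`), and ties may be ordered differently.

```shell
# local_grep_count REGEX FILE...
# Hypothetical helper: counts matches of REGEX (extended regex) across FILEs
# and prints "count<TAB>match", most frequent first.
local_grep_count() {
    local regex="$1"
    shift
    # -o: one match per line; -h: no file-name prefixes
    grep -ohE "$regex" "$@" |
        sort | uniq -c |           # "  N match" per distinct match
        sort -k1,1nr -k2 |         # by count descending, then by match
        awk '{ print $1 "\t" $2 }'
}
```

From /usr/local/hadoop, `local_grep_count 'dfs[a-z.]+' ./input/*.xml` should agree with `cat ./output/*`.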

Pseudo-distributed configuration

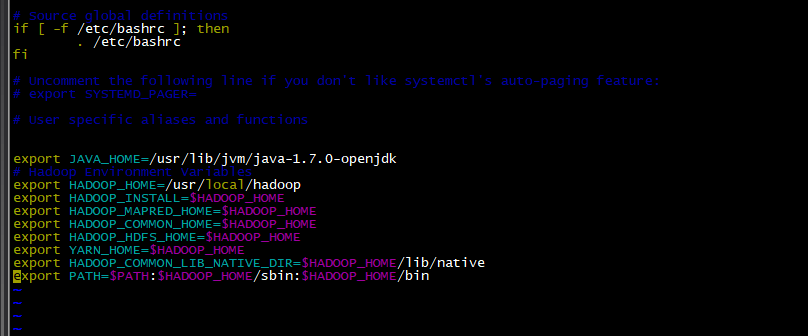

Before configuring pseudo-distributed mode, set the Hadoop environment variables in ~/.bashrc:

vim ~/.bashrc

Append the following at the end of the file:

    # Hadoop Environment Variables
    export HADOOP_HOME=/usr/local/hadoop
    export HADOOP_INSTALL=$HADOOP_HOME
    export HADOOP_MAPRED_HOME=$HADOOP_HOME
    export HADOOP_COMMON_HOME=$HADOOP_HOME
    export HADOOP_HDFS_HOME=$HADOOP_HOME
    export YARN_HOME=$HADOOP_HOME
    export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
    export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Apply the changes:

source ~/.bashrc
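Editing ~/.bashrc by hand more than once tends to leave duplicate export lines. A minimal sketch of an idempotent append; `append_once` is a hypothetical Bash helper. Wrap each line in single quotes when calling it, so that `$PATH` and `$HADOOP_HOME` are written to the file literally and expanded only when ~/.bashrc is sourced.

```shell
# append_once LINE FILE
# Hypothetical helper: appends LINE to FILE unless FILE already contains
# exactly that line, so the ~/.bashrc edits can be re-run safely.
append_once() {
    local line="$1" file="$2"
    touch "$file"
    # -x: whole-line match; -F: fixed string ($, / and . are literal)
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

For example: `append_once 'export HADOOP_HOME=/usr/local/hadoop' ~/.bashrc` and `append_once 'export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin' ~/.bashrc`, followed by `source ~/.bashrc`.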

Edit core-site.xml

The file is in /usr/local/hadoop/etc/hadoop/; change its contents to:

    <configuration>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>file:/usr/local/hadoop/tmp</value>
            <description>A base for other temporary directories.</description>
        </property>
        <property>
            <name>fs.defaultFS</name>
            <value>hdfs://localhost:9000</value>
        </property>
    </configuration>
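Writing the file from a script avoids copy-paste mistakes in the XML. A minimal sketch that overwrites core-site.xml in the given directory with the two properties above; `write_core_site` is a hypothetical Bash helper, and the temporary directory is a parameter defaulting to /usr/local/hadoop/tmp. Once the environment variables are set, `hdfs getconf -confKey fs.defaultFS` prints the value Hadoop actually reads.

```shell
# write_core_site CONF_DIR [TMP_DIR]
# Hypothetical helper: overwrites CONF_DIR/core-site.xml with the
# pseudo-distributed settings used in this tutorial.
write_core_site() {
    local conf_dir="$1" tmp_dir="${2:-/usr/local/hadoop/tmp}"
    # Unquoted EOF: ${tmp_dir} is expanded when the file is written.
    cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>file:${tmp_dir}</value>
        <description>A base for other temporary directories.</description>
    </property>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://localhost:9000</value>
    </property>
</configuration>
EOF
}
```

For example: `write_core_site /usr/local/hadoop/etc/hadoop`.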

Edit hdfs-site.xml (in the same directory):

    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
        <property>
            <name>dfs.namenode.name.dir</name>
            <value>file:/usr/local/hadoop/tmp/dfs/name</value>
        </property>
        <property>
            <name>dfs.datanode.data.dir</name>
            <value>file:/usr/local/hadoop/tmp/dfs/data</value>
        </property>
    </configuration>

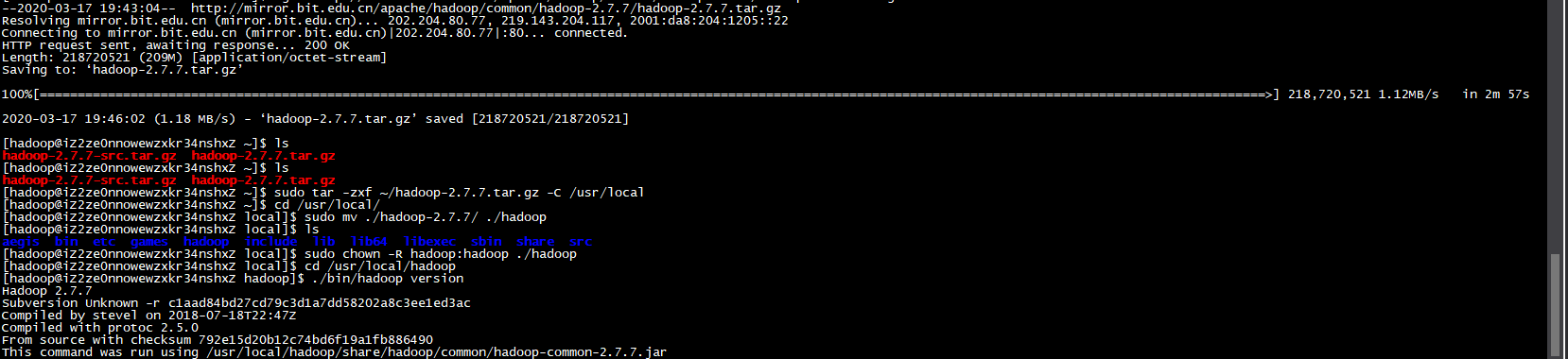
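dfs.replication defaults to 3, but a pseudo-distributed setup has only one DataNode, so HDFS could never place three copies of a block and would report blocks as under-replicated; hence the value 1. A minimal sketch that writes this file from a script; `write_hdfs_site` is a hypothetical Bash helper, and both the temporary directory and the replication factor are parameters.

```shell
# write_hdfs_site CONF_DIR [TMP_DIR] [REPLICATION]
# Hypothetical helper: overwrites CONF_DIR/hdfs-site.xml. REPLICATION defaults
# to 1 because a pseudo-distributed setup has a single DataNode.
write_hdfs_site() {
    local conf_dir="$1" tmp_dir="${2:-/usr/local/hadoop/tmp}" replication="${3:-1}"
    cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
    <property>
        <name>dfs.replication</name>
        <value>${replication}</value>
    </property>
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file:${tmp_dir}/dfs/name</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file:${tmp_dir}/dfs/data</value>
    </property>
</configuration>
EOF
}
```

For example: `write_hdfs_site /usr/local/hadoop/etc/hadoop`.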

Format the NameNode (run this and the following ./bin and ./sbin commands from /usr/local/hadoop):

./bin/hdfs namenode -format

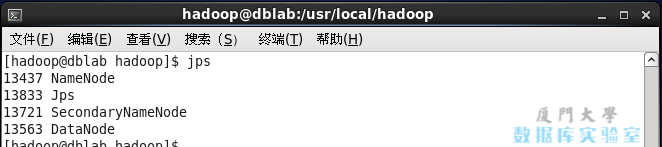
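Formatting is a one-time step. Re-formatting erases the NameNode's metadata and assigns a new clusterID, after which the existing DataNode usually refuses to start because the clusterID stored in its data directory no longer matches (its log reports incompatible clusterIDs). A minimal guard, assuming the name directory configured in hdfs-site.xml above and that a formatted NameNode directory contains current/VERSION; `format_once` is a hypothetical Bash helper, and the path to the hdfs command is a parameter defaulting to ./bin/hdfs.

```shell
# format_once NAME_DIR [HDFS_BIN]
# Hypothetical helper: runs "hdfs namenode -format" only if NAME_DIR has not
# been formatted yet (a formatted name directory contains current/VERSION).
format_once() {
    local name_dir="$1" hdfs_bin="${2:-./bin/hdfs}"
    if [ -f "$name_dir/current/VERSION" ]; then
        echo "already formatted: $name_dir (skipping)"
        return 0
    fi
    "$hdfs_bin" namenode -format
}
```

For example, from /usr/local/hadoop: `format_once /usr/local/hadoop/tmp/dfs/name`.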

Start the NameNode and DataNode daemons:

./sbin/start-dfs.sh

Check the running processes with jps:

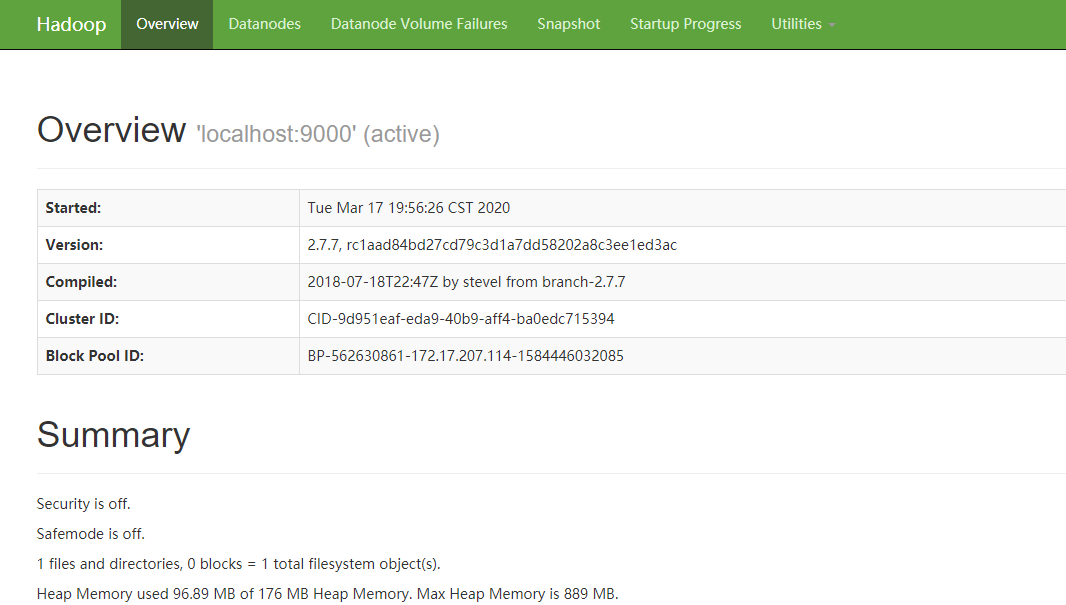
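After start-dfs.sh in pseudo-distributed mode, jps should list NameNode, DataNode, and SecondaryNameNode. A minimal sketch that checks this from jps output read on standard input, so it can be tested without a running cluster; `check_hdfs_daemons` is a hypothetical Bash helper. If a daemon is missing, its log under /usr/local/hadoop/logs usually says why.

```shell
# check_hdfs_daemons
# Hypothetical helper: reads `jps` output ("PID Name" per line) on stdin and
# reports whether the three HDFS daemons of a pseudo-distributed setup run.
check_hdfs_daemons() {
    local running missing="" daemon
    running="$(awk '{ print $2 }')"   # keep only the process names
    for daemon in NameNode DataNode SecondaryNameNode; do
        # -x: whole-line match, so NameNode does not match SecondaryNameNode
        if ! grep -qx -- "$daemon" <<< "$running"; then
            missing="$missing $daemon"
        fi
    done
    if [ -n "$missing" ]; then
        echo "not running:$missing"
        return 1
    fi
    echo "all HDFS daemons running"
}
```

For example: `jps | check_hdfs_daemons`.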

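Web UI: http://localhost:50070 (the NameNode web interface in Hadoop 2.x; Hadoop 3 moved it to port 9870)

I am running this on Alibaba Cloud; to open the web UI from outside, allow the port in the instance's security group.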

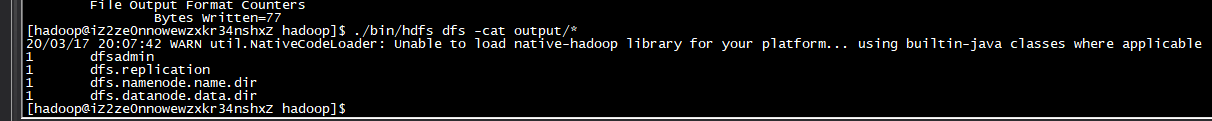

5. Run Hadoop

In pseudo-distributed mode, Hadoop reads its data from HDFS. To use HDFS, first create a user directory in HDFS:

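$ ./bin/hdfs dfs -mkdir -p /user/hadoop

$ ./bin/hdfs dfs -mkdir input # relative HDFS paths resolve under /user/hadoop, so this creates /user/hadoop/input

$ ./bin/hdfs dfs -put ./etc/hadoop/*.xml input # upload the configuration files as input

$ ./bin/hdfs dfs -ls input # list the uploaded files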

$ ./bin/hadoop jar ./share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar grep input output 'dfs[a-z.]+'

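When the job finishes successfully, copy the results from HDFS to the local machine and view them:

$ rm -r ./output # first delete the local output directory (if it exists)

$ ./bin/hdfs dfs -get output ./output # copy the output directory from HDFS to the local machine

$ cat ./output/*

When Hadoop runs a job, the output directory must not already exist; otherwise the job fails with "org.apache.hadoop.mapred.FileAlreadyExistsException: Output directory hdfs://localhost:9000/user/hadoop/output already exists". So before running the job again, delete the output directory on HDFS:

$ ./bin/hdfs dfs -rm -r output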
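Since the job fails with FileAlreadyExistsException whenever output already exists, re-running it can be wrapped in a function that clears the old output first. A minimal sketch; `rerun_grep` is a hypothetical Bash helper that takes the Hadoop install directory (default /usr/local/hadoop), uses `hdfs dfs -test -d` to see whether output exists, deletes it with `hdfs dfs -rm -r` only in that case, and then submits the same grep job as above.

```shell
# rerun_grep [HADOOP_DIR]
# Hypothetical helper: removes the HDFS "output" directory if it exists, then
# re-submits the grep example over "input". Both paths are relative, so they
# resolve under the current user's HDFS home (/user/hadoop here).
rerun_grep() {
    local hadoop_dir="${1:-/usr/local/hadoop}"
    local hdfs="$hadoop_dir/bin/hdfs" hadoop="$hadoop_dir/bin/hadoop"
    # "dfs -test -d" exits 0 only if the path exists and is a directory
    if "$hdfs" dfs -test -d output; then
        "$hdfs" dfs -rm -r output || return 1
    fi
    "$hadoop" jar "$hadoop_dir"/share/hadoop/mapreduce/hadoop-mapreduce-examples-*.jar \
        grep input output 'dfs[a-z.]+'
}
```

For example, as the hadoop user with HDFS running: `rerun_grep`.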

To stop Hadoop:

$ ./sbin/stop-dfs.sh

The next time you start Hadoop, there is no need to format the NameNode again; just run ./sbin/start-dfs.sh.

