Traffic configuration in Istio


Containers injected by Istio

Istio's data plane injects two containers into each pod: istio-init and istio-proxy.

Istio-init

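istio-init takes over the pod's traffic by creating iptables rules. It is started with the following arguments:

istio-iptables -p 15001 -z 15006 -u 1337 -m REDIRECT -i '*' -x "" -b '*' -d 15090,15021,15020 --run-validation --skip-rule-apply

The most important arguments are:

- -p 15001: iptables redirects outbound traffic to Envoy's port 15001.
- -z 15006: iptables redirects inbound traffic to Envoy's port 15006.
- -u 1337: excludes traffic from user ID 1337, which is Envoy's own traffic. Without this, iptables would redirect packets sent by Envoy back into Envoy and create an infinite loop. Running id in the istio-proxy container shows that Envoy runs as UID 1337:

$ id

uid=1337(istio-proxy) gid=1337(istio-proxy) groups=1337(istio-proxy)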
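The following minimal Go sketch makes the roles of -p, -z, -u and -d concrete. It prints the kind of nat-table rules these arguments imply. The type and function names are hypothetical, and the rule set is deliberately simplified. The real istio-iptables also creates the chains, hooks them into PREROUTING/OUTPUT, and adds rules for loopback traffic and IP ranges. Only the two REDIRECT rules match rules quoted verbatim in this article.

```go
package main

import (
	"fmt"
	"strings"
)

// iptablesParams mirrors a few istio-iptables flags (hypothetical type, not Istio code).
type iptablesParams struct {
	OutboundPort  int   // -p: Envoy port that receives redirected outbound traffic
	InboundPort   int   // -z: Envoy port that receives redirected inbound traffic
	ProxyUID      int   // -u: UID whose traffic is never redirected (Envoy itself)
	ExcludedPorts []int // -d: inbound ports that bypass Envoy
}

// buildRules returns a simplified nat-table rule set in iptables-save syntax.
func buildRules(p iptablesParams) []string {
	rules := []string{
		fmt.Sprintf("-A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports %d", p.OutboundPort),
		fmt.Sprintf("-A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports %d", p.InboundPort),
	}
	// Excluded inbound ports return early and reach the application directly.
	for _, port := range p.ExcludedPorts {
		rules = append(rules, fmt.Sprintf("-A ISTIO_INBOUND -p tcp --dport %d -j RETURN", port))
	}
	rules = append(rules,
		// All other inbound TCP traffic goes to Envoy's inbound port.
		"-A ISTIO_INBOUND -p tcp -j ISTIO_IN_REDIRECT",
		// Packets sent by Envoy itself are left alone, preventing a redirect loop.
		fmt.Sprintf("-A ISTIO_OUTPUT -m owner --uid-owner %d -j RETURN", p.ProxyUID),
		// Remaining outbound traffic from the application goes to Envoy's outbound port.
		"-A ISTIO_OUTPUT -j ISTIO_REDIRECT",
	)
	return rules
}

func main() {
	p := iptablesParams{
		OutboundPort:  15001,
		InboundPort:   15006,
		ProxyUID:      1337,
		ExcludedPorts: []int{15090, 15021, 15020},
	}
	fmt.Println(strings.Join(buildRules(p), "\n"))
}
```

The ordering matters here. The UID 1337 RETURN rule must come before the catch-all redirect, otherwise Envoy's own connections would loop back into port 15001.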

istio-proxy

The istio-proxy container runs two programs: pilot-agent and envoy.

$ ps -ef|cat

UID PID PPID C STIME TTY TIME CMD

istio-p+ 1 0 0 Sep10 ? 00:03:39 /usr/local/bin/pilot-agent proxy sidecar --domain default.svc.cluster.local --serviceCluster sleep.default --proxyLogLevel=warning --proxyComponentLogLevel=misc:error --trust-domain=cluster.local --concurrency 2

istio-p+ 27 1 0 Sep10 ? 00:14:30 /usr/local/bin/envoy -c etc/istio/proxy/envoy-rev0.json --restart-epoch 0 --drain-time-s 45 --parent-shutdown-time-s 60 --service-cluster sleep.default --service-node sidecar~10.80.3.109~sleep-856d589c9b-x6szk.default~default.svc.cluster.local --local-address-ip-version v4 --log-format-prefix-with-location 0 --log-format %Y-%m-%dT%T.%fZ.%l.envoy %n.%v -l warning --component-log-level misc:error --concurrency 2

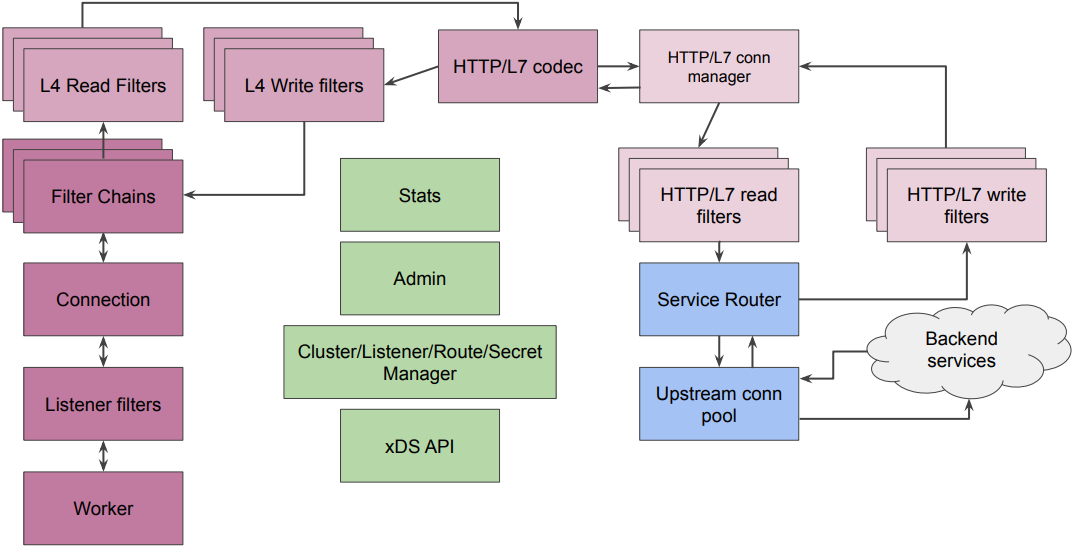

Envoy architecture

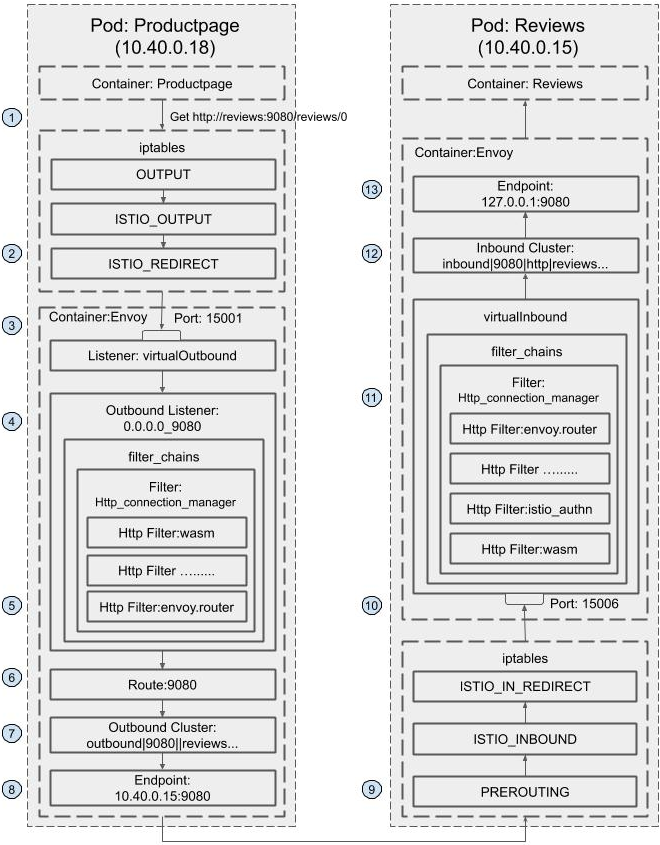

Envoy processes inbound and outbound requests as shown below, passing each request through the filters in the following order.

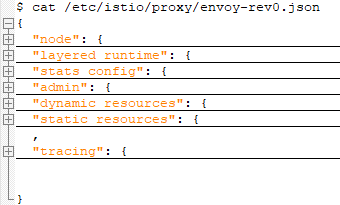

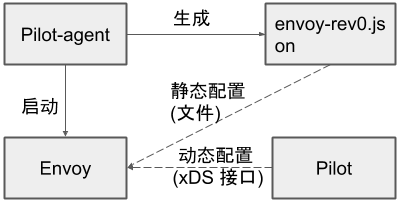

Initial configuration file generated by pilot-agent

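pilot-agent generates Envoy's bootstrap file (/etc/istio/proxy/envoy-rev0.json) from its startup arguments and the configuration in the Kubernetes API server. It then starts the Envoy process, which is why Envoy's parent process is pilot-agent. Envoy fetches the rest of its configuration dynamically from istiod over the xDS APIs. The initial envoy-rev0.json file is structured as follows: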

node: information about this Envoy instance

"node": {

"id": "sidecar~10.80.3.109~sleep-856d589c9b-x6szk.default~default.svc.cluster.local",

"cluster": "sleep.default",

"locality": {

},

"metadata": {"APP_CONTAINERS":"sleep,istio-proxy","CLUSTER_ID":"Kubernetes","EXCHANGE_KEYS":"NAME,NAMESPACE,INSTANCE_IPS,LABELS,OWNER,PLATFORM_METADATA,WORKLOAD_NAME,MESH_ID,SERVICE_ACCOUNT,CLUSTER_ID","INSTANCE_IPS":"10.80.3.109,fe80::40fb:daff:feed:e56c","INTERCEPTION_MODE":"REDIRECT","ISTIO_PROXY_SHA":"istio-proxy:f642a7fd07d0a99944a6e3529566e7985829839c","ISTIO_VERSION":"1.7.0","LABELS":{"app":"sleep","istio.io/rev":"default","pod-template-hash":"856d589c9b","security.istio.io/tlsMode":"istio","service.istio.io/canonical-name":"sleep","service.istio.io/canonical-revision":"latest"},"MESH_ID":"cluster.local","NAME":"sleep-856d589c9b-x6szk","NAMESPACE":"default","OWNER":"kubernetes://apis/apps/v1/namespaces/default/deployments/sleep","POD_PORTS":"[{\"name\":\"http-envoy-prom\",\"containerPort\":15090,\"protocol\":\"TCP\"}]","PROXY_CONFIG":{"binaryPath":"/usr/local/bin/envoy","concurrency":2,"configPath":"./etc/istio/proxy","controlPlaneAuthPolicy":"MUTUAL_TLS","discoveryAddress":"istiod.istio-system.svc:15012","drainDuration":"45s","envoyAccessLogService":{},"envoyMetricsService":{},"parentShutdownDuration":"60s","proxyAdminPort":15000,"proxyMetadata":{"DNS_AGENT":""},"serviceCluster":"sleep.default","statNameLength":189,"statusPort":15020,"terminationDrainDuration":"5s","tracing":{"zipkin":{"address":"zipkin.istio-system:9411"}}},"SDS":"true","SERVICE_ACCOUNT":"sleep","WORKLOAD_NAME":"sleep","k8s.v1.cni.cncf.io/networks":"istio-cni","sidecar.istio.io/interceptionMode":"REDIRECT","sidecar.istio.io/status":"{\"version\":\"8e6e902b765af607513b28d284940ee1421e9a0d07698741693b2663c7161c11\",\"initContainers\":[\"istio-validation\"],\"containers\":[\"istio-proxy\"],\"volumes\":[\"istio-envoy\",\"istio-data\",\"istio-podinfo\",\"istiod-ca-cert\"],\"imagePullSecrets\":null}","traffic.sidecar.istio.io/excludeInboundPorts":"15020","traffic.sidecar.istio.io/includeOutboundIPRanges":"*"}

},
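The node id packs four fields separated by ~: proxy type, pod IP, <pod name>.<namespace>, and the DNS domain. It is the same value passed to Envoy via --service-node in the ps output above. Here is a minimal Go sketch for splitting it. It assumes exactly this four-field layout, and the proxyNode type is hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// proxyNode holds the four '~'-separated fields of the Envoy node ID.
// The type and field names are illustrative, not Istio's own.
type proxyNode struct {
	Type   string // proxy type, "sidecar" here
	IP     string // pod IP
	ID     string // <pod name>.<namespace>
	Domain string // DNS domain of the namespace
}

// parseNodeID splits an ID of the form type~ip~id~domain.
func parseNodeID(s string) (proxyNode, error) {
	parts := strings.Split(s, "~")
	if len(parts) != 4 {
		return proxyNode{}, fmt.Errorf("node ID %q: want 4 fields, got %d", s, len(parts))
	}
	return proxyNode{Type: parts[0], IP: parts[1], ID: parts[2], Domain: parts[3]}, nil
}

func main() {
	n, err := parseNodeID("sidecar~10.80.3.109~sleep-856d589c9b-x6szk.default~default.svc.cluster.local")
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", n)
}
```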

admin: the access log path and listening address of Envoy's admin server. For example, curl -X POST localhost:15000/logging?level=trace sets the log level to trace.

"admin": {

"access_log_path": "/dev/null", /* access log path of the admin server */

"profile_path": "/var/lib/istio/data/envoy.prof", /* output path of the admin server's CPU profiler */

"address": { /* TCP address the admin server listens on */

"socket_address": {

"address": "127.0.0.1",

"port_value": 15000

}

}

},
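The same admin call can be made programmatically. Below is a sketch using only Go's standard library. It assumes the admin address 127.0.0.1:15000 from the configuration above and that the admin server answers 200 on success. The function names are hypothetical.

```go
package main

import (
	"fmt"
	"net/http"
	"net/url"
)

// newLogLevelRequest builds the same request as
// curl -X POST localhost:15000/logging?level=<level>.
func newLogLevelRequest(adminAddr, level string) (*http.Request, error) {
	u := url.URL{
		Scheme:   "http",
		Host:     adminAddr,
		Path:     "/logging",
		RawQuery: url.Values{"level": {level}}.Encode(),
	}
	return http.NewRequest(http.MethodPost, u.String(), nil)
}

// setLogLevel sends the request and treats any non-200 status as an error.
func setLogLevel(adminAddr, level string) error {
	req, err := newLogLevelRequest(adminAddr, level)
	if err != nil {
		return err
	}
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusOK {
		return fmt.Errorf("admin API returned %s", resp.Status)
	}
	return nil
}

func main() {
	// The admin server binds to 127.0.0.1, so this has to run inside the pod.
	if err := setLogLevel("127.0.0.1:15000", "trace"); err != nil {
		fmt.Println("error:", err)
	}
}
```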

dynamic_resources: configures the dynamic resources, namely lds_config, cds_config and ads_config.

Envoy obtains the address of the xDS server through the xds-grpc cluster (see static_resources).

"dynamic_resources": {

"lds_config": { /* configure listeners via LDS */

"ads": {},

"resource_api_version": "V3" /* API version used by LDS */

},

"cds_config": { /* configure clusters via CDS */

"ads": {},

"resource_api_version": "V3"

},

"ads_config": { /* ADS configuration: the API type and the cluster Envoy uses to fetch xDS resources */

"api_type": "GRPC", /* fetch xDS resources over gRPC */

"transport_api_version": "V3", /* version of the xDS transport protocol */

"grpc_services": [

{

"envoy_grpc": {

"cluster_name": "xds-grpc" /* cluster used to fetch xDS configuration dynamically */

}

}

]

}

},

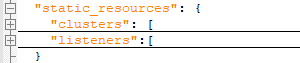

static_resources: configures the static resources, mainly of two kinds: clusters and listeners.

clusters: several statically configured clusters are shown below.

"clusters": [

{

"name": "prometheus_stats", /* exposes metrics to Prometheus at 127.0.0.1:15000/stats/prometheus */

"type": "STATIC", /* upstream hosts are given explicitly by network address (IP/port) */

"connect_timeout": "0.250s",

"lb_policy": "ROUND_ROBIN",

"load_assignment": { /* only for STATIC, STRICT_DNS or LOGICAL_DNS clusters; embeds EDS-equivalent endpoints in a non-EDS cluster */

"cluster_name": "prometheus_stats",

"endpoints": [{

"lb_endpoints": [{ /* load-balanced backends */

"endpoint": {

"address":{

"socket_address": {

"protocol": "TCP",

"address": "127.0.0.1",

"port_value": 15000

}

}

}

}]

}]

}

},

{

"name": "agent", /* exposes the health-check endpoint; check it with curl http://127.0.0.1:15020/healthz/ready -v */

"type": "STATIC",

"connect_timeout": "0.250s",

"lb_policy": "ROUND_ROBIN",

"load_assignment": {

"cluster_name": "prometheus_stats",

"endpoints": [{

"lb_endpoints": [{ /* load-balanced backends */

"endpoint": {

"address":{

"socket_address": {

"protocol": "TCP",

"address": "127.0.0.1",

"port_value": 15020

}

}

}

}]

}]

}

},

{

"name": "sds-grpc", /* SDS cluster */

"type": "STATIC",

"http2_protocol_options": {},

"connect_timeout": "1s",

"lb_policy": "ROUND_ROBIN",

"load_assignment": {

"cluster_name": "sds-grpc",

"endpoints": [{

"lb_endpoints": [{

"endpoint": {

"address":{

"pipe": {

"path": "./etc/istio/proxy/SDS" /* UNIX socket path for SDS, used for communication between istio-agent and the proxy for mTLS */

}

}

}

}]

}]

}

},

{

"name": "xds-grpc", /* gRPC server used for dynamic xDS */

"type": "STRICT_DNS",

"respect_dns_ttl": true,

"dns_lookup_family": "V4_ONLY",

"connect_timeout": "1s",

"lb_policy": "ROUND_ROBIN",

"transport_socket": { /* transport socket for connections to the upstream */

"name": "envoy.transport_sockets.tls", /* name of the transport socket to instantiate */

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext",

"sni": "istiod.istio-system.svc", /* SNI string used when creating TLS connections to the backend (istiod) */

"common_tls_context": { /* TLS context shared by client and server */

"alpn_protocols": [/* list of ALPN protocols to offer; if empty, ALPN is not used */

"h2"

],

"tls_certificate_sds_secret_configs": [/* fetch the TLS certificate via the SDS API */

{

"name": "default",

"sds_config": { /* with both name and sds_config set, the secret is fetched via SDS; a name alone would load it from static resources */

"resource_api_version": "V3", /* xDS API version */

"initial_fetch_timeout": "0s",

"api_config_source": { /* SDS API configuration, such as the version and SDS service */

"api_type": "GRPC",

"transport_api_version": "V3", /* API version of the xDS transport protocol */

"grpc_services": [

{ /* the SDS server is the sds-grpc cluster configured above */

"envoy_grpc": { "cluster_name": "sds-grpc" }

}

]

}

}

}

],

"validation_context": {

"trusted_ca": {

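"filename": "./var/run/secrets/istio/root-cert.pem" /* data source on the local file system. It mounts the istio-ca-root-cert ConfigMap of the current namespace, whose CA certificate is the same as the one in istio-ca-secret in the istio-system namespace; it is used to verify istiod's certificate */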

},

"match_subject_alt_names": [{"exact":"istiod.istio-system.svc"}] /* verify the SAN in the peer certificate, i.e. that the certificate belongs to istiod */

}

}

}

},

"load_assignment": {

"cluster_name": "xds-grpc", /* the backend of xds-grpc is port 15012 of istiod */

"endpoints": [{

"lb_endpoints": [{

"endpoint": {

"address":{

"socket_address": {"address": "istiod.istio-system.svc", "port_value": 15012}

}

}

}]

}]

},

"circuit_breakers": { /* circuit breaker settings */

"thresholds": [

{

"priority": "DEFAULT",

"max_connections": 100000,

"max_pending_requests": 100000,

"max_requests": 100000

},

{

"priority": "HIGH",

"max_connections": 100000,

"max_pending_requests": 100000,

"max_requests": 100000

}

]

},

"upstream_connection_options": {

"tcp_keepalive": {

"keepalive_time": 300

}

},

"max_requests_per_connection": 1,

"http2_protocol_options": { }

}

,

{

"name": "zipkin", /* cluster for Zipkin distributed tracing */

"type": "STRICT_DNS",

"respect_dns_ttl": true,

"dns_lookup_family": "V4_ONLY",

"connect_timeout": "1s",

"lb_policy": "ROUND_ROBIN",

"load_assignment": {

"cluster_name": "zipkin",

"endpoints": [{

"lb_endpoints": [{

"endpoint": {

"address":{

"socket_address": {"address": "zipkin.istio-system", "port_value": 9411}

}

}

}]

}]

}

}

],

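The configuration above configures the transport socket through the type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext API. sni and common_tls_context are both fields of the UpstreamTlsContext message. The static clusters can be listed with the istioctl pc cluster command; the first column corresponds to Cluster.name above. The sds-grpc cluster provides the SDS service; see the official documentation for how SDS works.

# istioctl pc cluster sleep-856d589c9b-x6szk.default |grep STATIC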

BlackHoleCluster - - - STATIC

agent - - - STATIC

prometheus_stats - - - STATIC

sds-grpc - - - STATIC

sleep.default.svc.cluster.local 80 http inbound STATIC
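Every static cluster above uses lb_policy ROUND_ROBIN over the endpoints listed in load_assignment. With a single endpoint this is trivial, but the idea is easy to show. The following minimal Go sketch uses hypothetical types. Envoy's real balancer also accounts for endpoint weights and host health, which this sketch ignores.

```go
package main

import "fmt"

// socketAddress mirrors lb_endpoints[].endpoint.address.socket_address.
type socketAddress struct {
	Address string
	Port    int
}

// roundRobin hands out endpoints in turn. This is only the idea behind
// lb_policy ROUND_ROBIN, without weights or health checking.
type roundRobin struct {
	endpoints []socketAddress
	next      int
}

// pick returns the next endpoint, or false when the cluster has none
// (as with BlackHoleCluster, which has no endpoints at all).
func (r *roundRobin) pick() (socketAddress, bool) {
	if len(r.endpoints) == 0 {
		return socketAddress{}, false
	}
	e := r.endpoints[r.next%len(r.endpoints)]
	r.next++
	return e, true
}

func main() {
	// The prometheus_stats cluster has a single endpoint, so every pick returns it.
	stats := &roundRobin{endpoints: []socketAddress{{"127.0.0.1", 15000}}}
	for i := 0; i < 2; i++ {
		e, _ := stats.pick()
		fmt.Printf("%s:%d\n", e.Address, e.Port)
	}
}
```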

listeners: the listeners below use the HTTP connection manager network filter.

"listeners":[

{

"address": { /* address the listener binds to */

"socket_address": {

"protocol": "TCP",

"address": "0.0.0.0",

"port_value": 15090

}

},

"filter_chains": [

{

"filters": [

{

"name": "envoy.http_connection_manager",

"typed_config": { /* configuration of the extension */

"@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager",

"codec_type": "AUTO", /* the connection manager decides which codec to use */

"stat_prefix": "stats",

"route_config": { /* static route table of the connection manager */

"virtual_hosts": [ /* virtual hosts that make up the route table */

{

"name": "backend", /* virtual host used by the route table */

"domains": [ /* domains matched by this virtual host */

"*"

],

"routes": [ /* routes for incoming requests; the first matching route is used */

{

"match": { /* route requests whose path starts with /stats/prometheus to the prometheus_stats cluster */

"prefix": "/stats/prometheus"

},

"route": {

"cluster": "prometheus_stats"

}

}

]

}

]

},

"http_filters": [{ /* filters that make up the HTTP filter chain and process requests; no rules are defined here */

"name": "envoy.router",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"

}

}]

}

}

]

}

]

},

{

"address": {

"socket_address": {

"protocol": "TCP",

"address": "0.0.0.0",

"port_value": 15021

}

},

"filter_chains": [

{

"filters": [

{

"name": "envoy.http_connection_manager",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager",

"codec_type": "AUTO",

"stat_prefix": "agent",

"route_config": { /* static route table */

"virtual_hosts": [

{

"name": "backend",

"domains": [

"*"

],

"routes": [

{

"match": { /* route requests whose path starts with /healthz/ready to the agent cluster */

"prefix": "/healthz/ready"

},

"route": {

"cluster": "agent"

}

}

]

}

]

},

"http_filters": [{

"name": "envoy.router",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"

}

}]

}

}

]

}

]

}

]

}
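Both static listeners route with the same logic: find a virtual host whose domains match, then take the first route whose prefix matches the path. Below is a simplified Go sketch with hypothetical types. Envoy actually prefers the most specific domain (exact, then wildcard forms, then "*") rather than list order. It returns 404 when no route matches.

```go
package main

import (
	"fmt"
	"strings"
)

type route struct {
	Prefix  string // match.prefix
	Cluster string // route.cluster
}

type virtualHost struct {
	Name    string
	Domains []string // "*" matches any Host header
	Routes  []route
}

// domainMatches handles only exact domains and the "*" wildcard.
func domainMatches(domains []string, host string) bool {
	for _, d := range domains {
		if d == "*" || d == host {
			return true
		}
	}
	return false
}

// selectCluster picks the first virtual host whose domains match, then the
// first route in it whose prefix matches the path. ok=false corresponds to
// Envoy answering 404 because no route matched.
func selectCluster(vhosts []virtualHost, host, path string) (cluster string, ok bool) {
	for _, vh := range vhosts {
		if !domainMatches(vh.Domains, host) {
			continue
		}
		for _, r := range vh.Routes {
			if strings.HasPrefix(path, r.Prefix) {
				return r.Cluster, true
			}
		}
		return "", false
	}
	return "", false
}

func main() {
	// Route table of the 15090 listener above.
	vhosts := []virtualHost{{
		Name:    "backend",
		Domains: []string{"*"},
		Routes:  []route{{Prefix: "/stats/prometheus", Cluster: "prometheus_stats"}},
	}}
	fmt.Println(selectCluster(vhosts, "10.80.3.109:15090", "/stats/prometheus"))
	fmt.Println(selectCluster(vhosts, "10.80.3.109:15090", "/healthz/ready"))
}
```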

tracing: uses the zipkin cluster defined in static_resources above.

"tracing": {

"http": {

"name": "envoy.zipkin",

"typed_config": {

"@type": "type.googleapis.com/envoy.config.trace.v3.ZipkinConfig",

"collector_cluster": "zipkin",

"collector_endpoint": "/api/v2/spans",

"collector_endpoint_version": "HTTP_JSON",

"trace_id_128bit": true,

"shared_span_context": false

}

}

}

The basic flow is as follows:

Complete configuration obtained from Envoy's admin interface

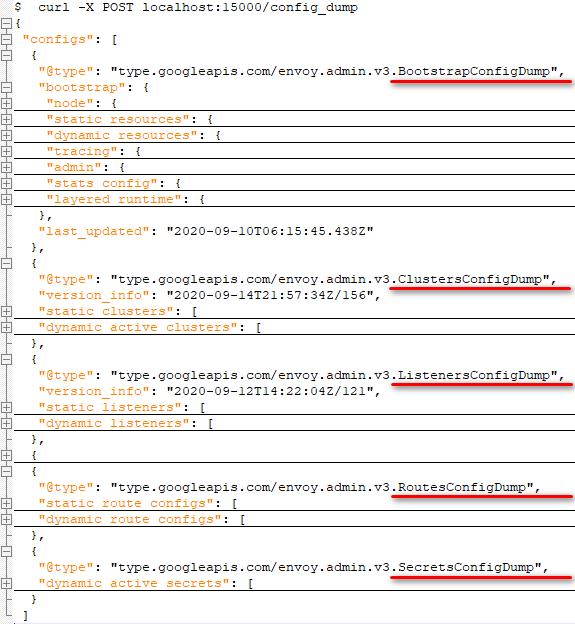

To get the complete configuration, run curl -X POST localhost:15000/config_dump in a pod with the Envoy sidecar injected. It consists of five main parts: BootstrapConfig, ClustersConfig, ListenersConfig, RoutesConfig and SecretsConfig.
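Each part of the dump is an entry in a top-level configs array, tagged with an @type such as type.googleapis.com/envoy.admin.v3.ClustersConfigDump. The Go sketch below lists those sections by decoding only the @type field. The response shape is an assumption based on Envoy's v3 admin output, and the sample string is abbreviated.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// configDump models only the top level of the /config_dump response:
// a "configs" array whose entries identify themselves with "@type".
type configDump struct {
	Configs []struct {
		Type string `json:"@type"`
	} `json:"configs"`
}

// sectionTypes returns the @type of each section, in order.
func sectionTypes(data []byte) ([]string, error) {
	var d configDump
	if err := json.Unmarshal(data, &d); err != nil {
		return nil, err
	}
	types := make([]string, 0, len(d.Configs))
	for _, c := range d.Configs {
		types = append(types, c.Type)
	}
	return types, nil
}

func main() {
	// Abbreviated sample; replace it with the body returned by config_dump.
	sample := `{"configs":[
		{"@type":"type.googleapis.com/envoy.admin.v3.BootstrapConfigDump"},
		{"@type":"type.googleapis.com/envoy.admin.v3.ClustersConfigDump"}]}`
	types, err := sectionTypes([]byte(sample))
	if err != nil {
		panic(err)
	}
	for _, t := range types {
		fmt.Println(t)
	}
}
```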

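Bootstrap: identical to the envoy-rev0.json file generated by pilot-agent above. It is the initial configuration given to the Envoy proxy and includes information such as the address of the xDS server.

Clusters: in Envoy, a cluster is a group of upstream hosts, and each cluster contains one or more endpoints. A cluster is roughly comparable to a Kubernetes Service.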

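As the figure above shows, ClustersConfig contains two kinds of cluster configuration: static_clusters and dynamic_active_clusters.

- static_clusters are the static clusters configured in envoy-rev0.json: agent, prometheus_stats, sds-grpc, xds-grpc and zipkin.
- dynamic_active_clusters are obtained dynamically from the Istio control plane over xDS.

The dynamic clusters fall into four main types:

BlackHoleCluster: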

"cluster": {

"@type": "type.googleapis.com/envoy.config.cluster.v3.Cluster",

"name": "BlackHoleCluster", /* cluster name */

"type": "STATIC",

"connect_timeout": "10s",

"filters": [ /* filters for outbound connections */

{

"name": "istio.metadata_exchange",

"typed_config": { /* configuration of the extension */

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.tcp.metadataexchange.config.MetadataExchange",

"value": {

"protocol": "istio-peer-exchange"

}

}

}

]

},

BlackHoleCluster uses the API type type.googleapis.com/udpa.type.v1.TypedStruct. This indicates that the control plane lacks the schema for this extension. The client converts the content into a typed configuration resource using the API given in type_url.

The configuration above also shows that Istio uses the istio-peer-exchange protocol. Two Envoy instances inside the mesh use this protocol to exchange Node Metadata. The NodeMetadata data structure is:

type NodeMetadata struct {
	// ProxyConfig defines the proxy config specified for a proxy.
	// Note that this setting may be configured different for each proxy, due user overrides
	// or from different versions of proxies connecting. While Pilot has access to the meshConfig.defaultConfig,
	// this field should be preferred if it is present.
	ProxyConfig *NodeMetaProxyConfig `json:"PROXY_CONFIG,omitempty"`

	// IstioVersion specifies the Istio version associated with the proxy
	IstioVersion string `json:"ISTIO_VERSION,omitempty"`

	// Labels specifies the set of workload instance (ex: k8s pod) labels associated with this node.
	Labels map[string]string `json:"LABELS,omitempty"`

	// InstanceIPs is the set of IPs attached to this proxy
	InstanceIPs StringList `json:"INSTANCE_IPS,omitempty"`

	// Namespace is the namespace in which the workload instance is running.
	Namespace string `json:"NAMESPACE,omitempty"`

	// InterceptionMode is the name of the metadata variable that carries info about
	// traffic interception mode at the proxy
	InterceptionMode TrafficInterceptionMode `json:"INTERCEPTION_MODE,omitempty"`

	// ServiceAccount specifies the service account which is running the workload.
	ServiceAccount string `json:"SERVICE_ACCOUNT,omitempty"`

	// RouterMode indicates whether the proxy is functioning as a SNI-DNAT router
	// processing the AUTO_PASSTHROUGH gateway servers
	RouterMode string `json:"ROUTER_MODE,omitempty"`

	// MeshID specifies the mesh ID environment variable.
	MeshID string `json:"MESH_ID,omitempty"`

	// ClusterID defines the cluster the node belongs to.
	ClusterID string `json:"CLUSTER_ID,omitempty"`

	// Network defines the network the node belongs to. It is an optional metadata,
	// set at injection time. When set, the Endpoints returned to a note and not on same network
	// will be replaced with the gateway defined in the settings.
	Network string `json:"NETWORK,omitempty"`

	// RequestedNetworkView specifies the networks that the proxy wants to see
	RequestedNetworkView StringList `json:"REQUESTED_NETWORK_VIEW,omitempty"`

	// PodPorts defines the ports on a pod. This is used to lookup named ports.
	PodPorts PodPortList `json:"POD_PORTS,omitempty"`

	// TLSServerCertChain is the absolute path to server cert-chain file
	TLSServerCertChain string `json:"TLS_SERVER_CERT_CHAIN,omitempty"`
	// TLSServerKey is the absolute path to server private key file
	TLSServerKey string `json:"TLS_SERVER_KEY,omitempty"`
	// TLSServerRootCert is the absolute path to server root cert file
	TLSServerRootCert string `json:"TLS_SERVER_ROOT_CERT,omitempty"`
	// TLSClientCertChain is the absolute path to client cert-chain file
	TLSClientCertChain string `json:"TLS_CLIENT_CERT_CHAIN,omitempty"`
	// TLSClientKey is the absolute path to client private key file
	TLSClientKey string `json:"TLS_CLIENT_KEY,omitempty"`
	// TLSClientRootCert is the absolute path to client root cert file
	TLSClientRootCert string `json:"TLS_CLIENT_ROOT_CERT,omitempty"`

	CertBaseDir string `json:"BASE,omitempty"`

	// IdleTimeout specifies the idle timeout for the proxy, in duration format (10s).
	// If not set, no timeout is set.
	IdleTimeout string `json:"IDLE_TIMEOUT,omitempty"`

	// HTTP10 indicates the application behind the sidecar is making outbound http requests with HTTP/1.0
	// protocol. It will enable the "AcceptHttp_10" option on the http options for outbound HTTP listeners.
	// Alpha in 1.1, based on feedback may be turned into an API or change. Set to "1" to enable.
	HTTP10 string `json:"HTTP10,omitempty"`

	// Generator indicates the client wants to use a custom Generator plugin.
	Generator string `json:"GENERATOR,omitempty"`

	// DNSCapture indicates whether the workload has enabled dns capture
	DNSCapture string `json:"DNS_CAPTURE,omitempty"`

	// ProxyXDSViaAgent indicates that xds data is being proxied via the agent
	ProxyXDSViaAgent string `json:"PROXY_XDS_VIA_AGENT,omitempty"`

	// Contains a copy of the raw metadata. This is needed to lookup arbitrary values.
	// If a value is known ahead of time it should be added to the struct rather than reading from here,
	Raw map[string]interface{} `json:"-"`
}
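The struct relies on ordinary JSON tags, so the metadata block shown earlier under node can be decoded with encoding/json. The sketch below uses a trimmed, hypothetical copy of the struct. The comma-splitting stringList type is an assumption modeled on how INSTANCE_IPS appears in envoy-rev0.json; it is not a copy of Istio's StringList.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// stringList decodes a comma-separated JSON string such as the
// INSTANCE_IPS value "10.80.3.109,fe80::40fb:daff:feed:e56c" into a slice.
type stringList []string

func (l *stringList) UnmarshalJSON(data []byte) error {
	var s string
	if err := json.Unmarshal(data, &s); err != nil {
		return err
	}
	if s == "" {
		*l = nil
		return nil
	}
	*l = strings.Split(s, ",")
	return nil
}

// nodeMeta is a trimmed, hypothetical subset of NodeMetadata that reuses its JSON tags.
type nodeMeta struct {
	IstioVersion     string            `json:"ISTIO_VERSION,omitempty"`
	Labels           map[string]string `json:"LABELS,omitempty"`
	InstanceIPs      stringList        `json:"INSTANCE_IPS,omitempty"`
	Namespace        string            `json:"NAMESPACE,omitempty"`
	InterceptionMode string            `json:"INTERCEPTION_MODE,omitempty"`
	ServiceAccount   string            `json:"SERVICE_ACCOUNT,omitempty"`
	MeshID           string            `json:"MESH_ID,omitempty"`
	ClusterID        string            `json:"CLUSTER_ID,omitempty"`
}

// parseNodeMeta decodes node.metadata; keys not in nodeMeta are ignored.
func parseNodeMeta(data []byte) (nodeMeta, error) {
	var m nodeMeta
	err := json.Unmarshal(data, &m)
	return m, err
}

func main() {
	// Excerpt of the node.metadata block from envoy-rev0.json above.
	raw := `{"CLUSTER_ID":"Kubernetes","INSTANCE_IPS":"10.80.3.109,fe80::40fb:daff:feed:e56c",
"INTERCEPTION_MODE":"REDIRECT","ISTIO_VERSION":"1.7.0","LABELS":{"app":"sleep"},
"MESH_ID":"cluster.local","NAMESPACE":"default","SERVICE_ACCOUNT":"sleep"}`
	m, err := parseNodeMeta([]byte(raw))
	if err != nil {
		panic(err)
	}
	fmt.Printf("%s/%s ips=%v labels=%v\n", m.Namespace, m.ServiceAccount, m.InstanceIPs, m.Labels)
}
```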

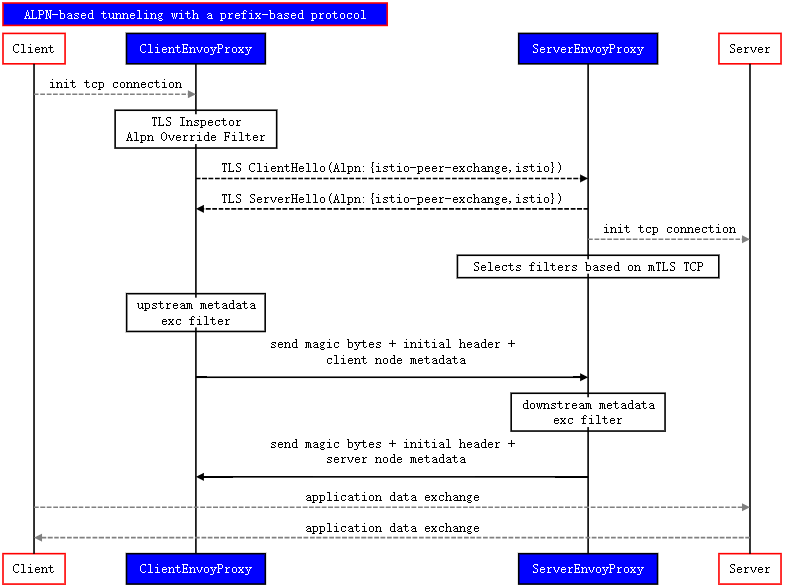

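Istio enables TCP policy enforcement and control through a set of TCP attributes, which are generated by the Envoy proxy. Istio obtains these attributes from Envoy's Node Metadata. Envoy forwards Node Metadata to the peer Envoy using ALPN tunneling and a prefix-based protocol.

Istio defines a new protocol, istio-peer-exchange. The client and server sidecars in the mesh advertise it and assign it priority during TLS negotiation. When both ends run an Istio proxy, ALPN negotiation resolves to istio-peer-exchange, so this only applies to traffic inside the Istio mesh. The subsequent TCP exchange then follows the rules of the istio-peer-exchange protocol.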
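The outcome of the negotiation can be modeled as an intersection of the two ALPN lists. istio-peer-exchange is selected only when both sides list it, so a plain client that offers only h2 or http/1.1 never takes the metadata-exchange path. Below is a simplified Go sketch, not Envoy's implementation. Letting the server's preference order decide is an assumption; RFC 7301 only says that the server chooses.

```go
package main

import "fmt"

// negotiateALPN returns the protocol the server selects: the first entry in
// the server's preference list that the client also offered.
func negotiateALPN(server, client []string) (string, bool) {
	offered := make(map[string]bool, len(client))
	for _, p := range client {
		offered[p] = true
	}
	for _, p := range server {
		if offered[p] {
			return p, true
		}
	}
	return "", false // no overlap: no application protocol is agreed
}

func main() {
	// ALPN lists from the outbound cluster and inbound listener configs in this article.
	client := []string{"istio-peer-exchange", "istio"}
	server := []string{"istio-peer-exchange", "h2", "http/1.1"}
	fmt.Println(negotiateALPN(server, client))
}
```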

The following command shows that the BlackHoleCluster cluster has no endpoints:

# istioctl pc endpoint sleep-856d589c9b-x6szk.default --cluster BlackHoleCluster

ENDPOINT STATUS OUTLIER CHECK CLUSTER

The following is based on the official Istio blog:

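For external services, Istio offers two management modes:

- Setting global.outboundTrafficPolicy.mode to REGISTRY_ONLY blocks all access to external services.
- Setting it to ALLOW_ANY allows all access to external services. This is the default.

BlackHoleCluster: when global.outboundTrafficPolicy.mode is set to REGISTRY_ONLY, Envoy creates a virtual cluster called BlackHoleCluster. In this mode, all access to external services is blocked unless a ServiceEntry is added for each service.

To implement this, the default outbound listener (listening on 0.0.0.0:15001) sets up a TCP proxy using the original destination, with BlackHoleCluster as a static cluster. Because BlackHoleCluster has no endpoints, all traffic to external destinations is dropped.

In addition, Istio creates a unique listener for each port/protocol combination of the platform services. A request to an external service on one of those ports therefore hits that listener rather than the virtual listener. For this case, the route configuration is extended with a route to BlackHoleCluster. If no other route matches, the Envoy proxy returns HTTP status 502 directly, so BlackHoleCluster can be thought of as a routing black hole.

{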

"name": "block_all",

"domains": [

"*"

],

"routes": [

{

"match": {

"prefix": "/"

},

"direct_response": {

"status": 502

},

"name": "block_all"

}

],

"include_request_attempt_count": true

},

PassthroughCluster: PassthroughCluster also uses the istio-peer-exchange protocol for TCP.

"cluster": {

"@type": "type.googleapis.com/envoy.config.cluster.v3.Cluster",

"name": "PassthroughCluster",

"type": "ORIGINAL_DST", /* ORIGINAL_DST is a special cluster type */

"connect_timeout": "10s",

"lb_policy": "CLUSTER_PROVIDED",

"circuit_breakers": {

"thresholds": [

{

"max_connections": 4294967295,

"max_pending_requests": 4294967295,

"max_requests": 4294967295,

"max_retries": 4294967295

}

]

},

"protocol_selection": "USE_DOWNSTREAM_PROTOCOL",

"filters": [

{

"name": "istio.metadata_exchange", /* exchange Node Metadata using the ALPN istio-peer-exchange protocol */

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.tcp.metadataexchange.config.MetadataExchange",

"value": {

"protocol": "istio-peer-exchange"

}

}

}

]

},

The following command shows that the PassthroughCluster cluster has no endpoints:

# istioctl pc endpoint sleep-856d589c9b-x6szk.default --cluster PassthroughCluster

ENDPOINT STATUS OUTLIER CHECK CLUSTER

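PassthroughCluster: when global.outboundTrafficPolicy.mode is set to ALLOW_ANY, Envoy creates a virtual cluster called PassthroughCluster. In this mode, all access to external services is allowed.

To implement this, the default outbound listener (listening on 0.0.0.0:15001) configures a TCP proxy using SO_ORIGINAL_DST, with PassthroughCluster as a static cluster. The PassthroughCluster cluster uses the original destination load-balancing policy, so Envoy sends the traffic on to its original destination.

As with BlackHoleCluster, every port/protocol-based listener gets a virtual route with PassthroughCluster as the default route.

{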

"name": "allow_any",

"domains": [

"*"

],

"routes": [

{

"match": {

"prefix": "/"

},

"route": {

"cluster": "PassthroughCluster",

"timeout": "0s",

"max_grpc_timeout": "0s"

},

"name": "allow_any"

}

],

"include_request_attempt_count": true

},
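The decision that BlackHoleCluster and PassthroughCluster implement can be summarized in a few lines. The following Go sketch is deliberately simplified. Envoy really matches a listener by destination port and then a route by Host header; here that is collapsed into one map lookup keyed by "host:port". The type names and example.com are hypothetical.

```go
package main

import "fmt"

// outboundMode mirrors the two values of global.outboundTrafficPolicy.mode.
type outboundMode string

const (
	allowAny     outboundMode = "ALLOW_ANY"
	registryOnly outboundMode = "REGISTRY_ONLY"
)

// pickCluster chooses where an outbound connection goes. known maps the
// "host:port" of a registered service to its cluster name; anything else
// falls through to the mode-dependent catch-all cluster.
func pickCluster(known map[string]string, dst string, mode outboundMode) string {
	if c, ok := known[dst]; ok {
		return c
	}
	if mode == registryOnly {
		return "BlackHoleCluster" // no endpoints: traffic is dropped
	}
	return "PassthroughCluster" // ORIGINAL_DST: forwarded to the original destination
}

func main() {
	known := map[string]string{
		"istiod.istio-system.svc.cluster.local:15012": "outbound|15012||istiod.istio-system.svc.cluster.local",
	}
	fmt.Println(pickCluster(known, "example.com:443", allowAny))
	fmt.Println(pickCluster(known, "example.com:443", registryOnly))
}
```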

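global.outboundTrafficPolicy.mode can take only one value, so BlackHoleCluster and PassthroughCluster are mutually exclusive. Their routes exist only inside the Istio mesh, that is, in pods with an injected sidecar. Access to BlackHoleCluster and PassthroughCluster can be monitored through Prometheus metrics.

inbound cluster: a cluster that handles inbound requests. For the sleep application below, there is only one local backend, 127.0.0.1:80. load_assignment specifies the cluster name and its endpoints. This traffic is not outbound, so no filters are configured below.

"cluster": {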

"@type": "type.googleapis.com/envoy.config.cluster.v3.Cluster",

"name": "inbound|80|http|sleep.default.svc.cluster.local",

"type": "STATIC",

"connect_timeout": "10s",

"circuit_breakers": {

"thresholds": [

{

"max_connections": 4294967295,

"max_pending_requests": 4294967295,

"max_requests": 4294967295,

"max_retries": 4294967295

}

]

},

"load_assignment": { /* endpoints of the inbound cluster used for load balancing */

"cluster_name": "inbound|80|http|sleep.default.svc.cluster.local",

"endpoints": [

{

"lb_endpoints": [

{

"endpoint": {

"address": {

"socket_address": {

"address": "127.0.0.1",

"port_value": 80

}

}

}

}

]

}

]

}

},

The inbound clusters can also be viewed with the following command:

# istioctl pc cluster sleep-856d589c9b-c6xsm.default --direction inbound

SERVICE FQDN PORT SUBSET DIRECTION TYPE DESTINATION RULE

sleep.default.svc.cluster.local 80 http inbound STATIC
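The cluster names in this dump follow a fixed pattern. Names such as inbound|80|http|sleep.default.svc.cluster.local and outbound|15012||istiod.istio-system.svc.cluster.local join direction, port, subset and host with |. The outbound cluster's SNI, outbound_.15012_._.istiod.istio-system.svc.cluster.local, uses _. as the separator instead. Note that in this Istio version the inbound name carries the port name (http) in the subset position. Below is a Go sketch of both formats; the clusterKey type is hypothetical.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// clusterKey holds the four parts encoded in Istio cluster names.
type clusterKey struct {
	Direction string // "inbound" or "outbound"
	Port      int
	Subset    string // empty for outbound clusters without a subset
	Host      string
}

// name renders direction|port|subset|host.
func (k clusterKey) name() string {
	return fmt.Sprintf("%s|%d|%s|%s", k.Direction, k.Port, k.Subset, k.Host)
}

// sni renders direction_.port_.subset_.host.
func (k clusterKey) sni() string {
	return fmt.Sprintf("%s_.%d_.%s_.%s", k.Direction, k.Port, k.Subset, k.Host)
}

// parseClusterName splits a '|'-separated cluster name into its parts.
func parseClusterName(s string) (clusterKey, error) {
	parts := strings.SplitN(s, "|", 4)
	if len(parts) != 4 {
		return clusterKey{}, fmt.Errorf("cluster name %q: want 4 fields", s)
	}
	port, err := strconv.Atoi(parts[1])
	if err != nil {
		return clusterKey{}, fmt.Errorf("cluster name %q: bad port: %w", s, err)
	}
	return clusterKey{Direction: parts[0], Port: port, Subset: parts[2], Host: parts[3]}, nil
}

func main() {
	k, err := parseClusterName("outbound|15012||istiod.istio-system.svc.cluster.local")
	if err != nil {
		panic(err)
	}
	fmt.Println(k.name())
	fmt.Println(k.sni())
}
```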

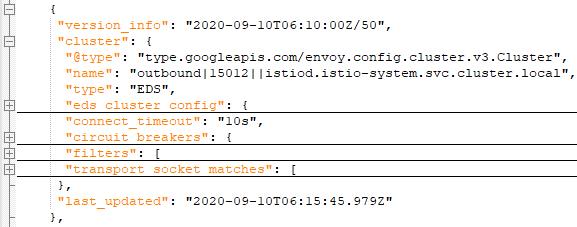

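outbound cluster: these clusters represent services outside the Envoy node and configure how to connect to the upstream. The EDS type below means that the cluster's endpoints come from EDS service discovery. The outbound cluster shown below is the service on istiod's port 15012. transport_socket_matches appears only when TLS is used; it configures the TLS certificate settings. The full configuration is as follows: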

{

"version_info": "2020-09-15T08:05:54Z/4",

"cluster": {

"@type": "type.googleapis.com/envoy.config.cluster.v3.Cluster",

"name": "outbound|15012||istiod.istio-system.svc.cluster.local",

"type": "EDS",

"eds_cluster_config": { /* EDS configuration */

"eds_config": {

"ads": {},

"resource_api_version": "V3"

},

"service_name": "outbound|15012||istiod.istio-system.svc.cluster.local" /* alternative name for the EDS cluster; it need not match the cluster name */

},

"connect_timeout": "10s",

"circuit_breakers": { /* circuit breaker settings */

"thresholds": [

{

"max_connections": 4294967295,

"max_pending_requests": 4294967295,

"max_requests": 4294967295,

"max_retries": 4294967295

}

]

},

"filters": [ /* use istio-peer-exchange as the protocol for exchanging Node Metadata */

{

"name": "istio.metadata_exchange",

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.tcp.metadataexchange.config.MetadataExchange",

"value": {

"protocol": "istio-peer-exchange"

}

}

}

],

"transport_socket_matches": [ /* TLS-capable transport sockets, selected per matching backend */

{

"name": "tlsMode-istio", /* name of the match */

"match": { /* condition for matching backends; pods with an injected Istio sidecar carry the label security.istio.io/tlsMode=istio */

"tlsMode": "istio"

},

"transport_socket": { /* transport socket used for the cluster's matching backends */

"name": "envoy.transport_sockets.tls",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext",

"common_tls_context": { /* TLS context shared by client and server */

"alpn_protocols": [ /* ALPN protocols offered for the upstream to choose from */

"istio-peer-exchange",

"istio"

],

"tls_certificate_sds_secret_configs": [ /* fetch the TLS certificate via the SDS API */

{

"name": "default",

"sds_config": {

"api_config_source": {

"api_type": "GRPC",

"grpc_services": [ /* SDS cluster */

{

"envoy_grpc": {

"cluster_name": "sds-grpc" /* the statically configured cluster above */

}

}

],

"transport_api_version": "V3"

},

"initial_fetch_timeout": "0s",

"resource_api_version": "V3"

}

}

],

"combined_validation_context": { /* contains a CertificateValidationContext (default_validation_context below) and an SDS config. When the SDS service returns a dynamic CertificateValidationContext, the dynamic and default contexts are merged into a new CertificateValidationContext that is used for validation */

"default_validation_context": { /* how to verify the certificate of the peer istiod service */

"match_subject_alt_names": [ /* Envoy checks the SAN in the certificate against the following */

{

"exact": "spiffe://new-td/ns/istio-system/sa/istiod-service-account" /* same as the service account used in Istio's authorization policy */

}

]

},

"validation_context_sds_secret_config": { /* SDS configuration; the SDS API is again served by the static sds-grpc cluster */

"name": "ROOTCA",

"sds_config": {

"api_config_source": {

"api_type": "GRPC",

"grpc_services": [

{

"envoy_grpc": {

"cluster_name": "sds-grpc"

}

}

],

"transport_api_version": "V3"

},

"initial_fetch_timeout": "0s",

"resource_api_version": "V3"

}

}

}

},

"sni": "outbound_.15012_._.istiod.istio-system.svc.cluster.local" /* SNI string used when creating the TLS connection, i.e. the value of the TLS server_name extension */

}

}

},

{

"name": "tlsMode-disabled", /* used for backends that match nothing above (i.e. outside the Istio mesh); traffic is sent in plaintext */

"match": {},

"transport_socket": {

"name": "envoy.transport_sockets.raw_buffer"

}

}

]

},

"last_updated": "2020-09-15T08:06:23.565Z"

},
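Envoy evaluates transport_socket_matches in order against each endpoint's metadata and uses the first entry that matches. Istio derives the tlsMode value from the security.istio.io/tlsMode pod label, as the comment above notes. As a result, sidecar-injected endpoints get mTLS, while everything else falls through to the empty tlsMode-disabled match. The following simplified Go sketch uses hypothetical types and flattens the metadata to string pairs.

```go
package main

import "fmt"

// socketMatch mirrors one entry of transport_socket_matches.
type socketMatch struct {
	Name   string
	Match  map[string]string // every key/value must be present in the endpoint metadata
	Socket string            // transport_socket.name
}

// containsAll reports whether metadata has every key/value pair in want.
func containsAll(metadata, want map[string]string) bool {
	for k, v := range want {
		if got, ok := metadata[k]; !ok || got != v {
			return false
		}
	}
	return true
}

// selectSocket returns the socket of the first entry whose criteria are all
// satisfied; an empty match accepts any endpoint.
func selectSocket(matches []socketMatch, metadata map[string]string) (string, bool) {
	for _, m := range matches {
		if containsAll(metadata, m.Match) {
			return m.Socket, true
		}
	}
	return "", false // Envoy would fall back to the cluster's own transport_socket
}

func main() {
	matches := []socketMatch{
		{Name: "tlsMode-istio", Match: map[string]string{"tlsMode": "istio"}, Socket: "envoy.transport_sockets.tls"},
		{Name: "tlsMode-disabled", Match: map[string]string{}, Socket: "envoy.transport_sockets.raw_buffer"},
	}
	fmt.Println(selectSocket(matches, map[string]string{"tlsMode": "istio"}))
	fmt.Println(selectSocket(matches, map[string]string{}))
}
```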

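Listeners: Envoy uses listeners to receive and process requests from downstream. Like clusters, listeners have static and dynamic configurations. The static configuration comes from the envoy-rev0.json file generated by istio-agent. The dynamic configurations are as follows.

virtualOutbound listener: when Istio injects the sidecar, the init container sets up iptables rules that intercept all outbound TCP traffic to local port 15001:

-A ISTIO_REDIRECT -p tcp -j REDIRECT --to-ports 15001

Each proxy's configuration contains exactly one virtualOutbound listener. Note that this listener has no transport_socket. Its downstream traffic comes from the application containers in the same pod and needs no TLS verification. The traffic is simply redirected to port 15001 and then forwarded to the listener that matches the original destination IP:port.

{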

"name": "virtualOutbound",

"active_state": {

"version_info": "2020-09-15T08:05:54Z/4",

"listener": {

"@type": "type.googleapis.com/envoy.config.listener.v3.Listener",

"name": "virtualOutbound",

"address": { /* address the listener binds to */

"socket_address": {

"address": "0.0.0.0",

"port_value": 15001

}

},

"filter_chains": [ /* filter chains applied to this listener */

{

"filters": [ /* filters applied, in order, to connections on this listener; if the list is empty, the connection is closed by default */

{

"name": "istio.stats",

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.extensions.filters.network.wasm.v3.Wasm",

"value": {

"config": { /* Wasm plugin configuration */

"root_id": "stats_outbound", /* filters/services in a VM with the same root_id share the same RootContext and Contexts; if this field is empty, all filters/services with an empty root_id share the Context(s) of the same vm_id */

"vm_config": { /* Wasm VM configuration */

"vm_id": "tcp_stats_outbound", /* plugins with the same vm_id and code share the same VM */

"runtime": "envoy.wasm.runtime.null", /* Wasm runtime: v8 or null */

"code": {

"local": {

"inline_string": "envoy.wasm.stats"

}

}

},

"configuration": {

"@type": "type.googleapis.com/google.protobuf.StringValue",

"value": "{\n \"debug\": \"false\",\n \"stat_prefix\": \"istio\"\n}\n"

}

}

}

}

},

{

"name": "envoy.tcp_proxy", /* filter that handles TCP */

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy",

"stat_prefix": "PassthroughCluster",

"cluster": "PassthroughCluster", /* upstream cluster to connect to */

"access_log": [

{

"name": "envoy.file_access_log",

"typed_config": { /* access log format and output path */

"@type": "type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog",

"path": "/dev/stdout",

"format": "[%START_TIME%] \"%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%\" %RESPONSE_CODE% %RESPONSE_FLAGS% \"%DYNAMIC_METADATA(istio.mixer:status)%\" \"%UPSTREAM_TRANSPORT_FAILURE_REASON%\" %BYTES_RECEIVED% %BYTES_SENT% %DURATION% %RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)% \"%REQ(X-FORWARDED-FOR)%\" \"%REQ(USER-AGENT)%\" \"%REQ(X-REQUEST-ID)%\" \"%REQ(:AUTHORITY)%\" \"%UPSTREAM_HOST%\" %UPSTREAM_CLUSTER% %UPSTREAM_LOCAL_ADDRESS% %DOWNSTREAM_LOCAL_ADDRESS% %DOWNSTREAM_REMOTE_ADDRESS% %REQUESTED_SERVER_NAME% %ROUTE_NAME%\n"

}

}

]

}

}

],

"name": "virtualOutbound-catchall-tcp"

}

],

"hidden_envoy_deprecated_use_original_dst": true,

"traffic_direction": "OUTBOUND"

},

"last_updated": "2020-09-15T08:06:24.066Z"

}

},

上面

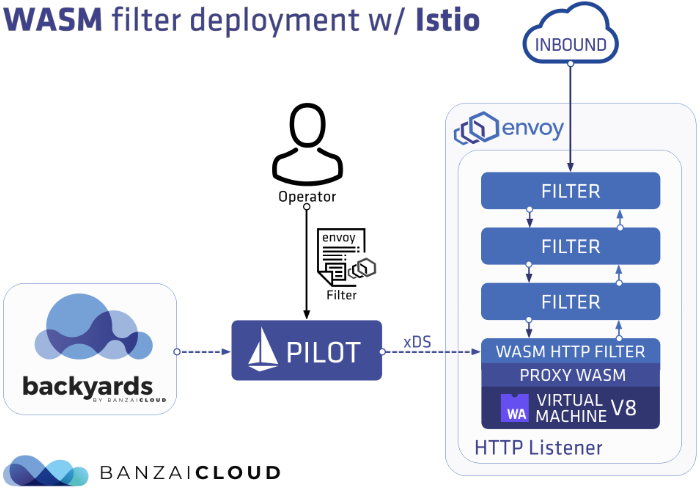

envoy.tcp_proxy过滤器的cluster为PassthroughCluster,这是因为将global.outboundTrafficPolicy.mode设置为了ALLOW_ANY,默认可以访问外部服务。如果global.outboundTrafficPolicy.mode设置为了REGISTRY_ONLY,则此处将变为clusterBlackHoleCluster,默认丢弃所有到外部服务的请求。上面使用wasm(WebAssembly)来记录遥测信息,Envoy官方文档中目前缺少对wasm的描述,可以参考开源代码的描述。从runtime字段为

null可以看到并没有启用。可以在安装istio的时候使用如下参数来启用基于Wasm的遥测。$ istioctl install --set values.telemetry.v2.metadataExchange.wasmEnabled=true --set values.telemetry.v2.prometheus.wasmEnabled=true

启用之后,与wasm有关的用于遥测的过滤器配置变为了如下内容,可以看到其runtime使用了envoy.wasm.runtime.v8。更多参见官方博客。

{

"name": "istio.stats",

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.extensions.filters.network.wasm.v3.Wasm",

"value": {

"config": {

"root_id": "stats_outbound",

"vm_config": { /* Wasm VM configuration */

"vm_id": "tcp_stats_outbound",

"runtime": "envoy.wasm.runtime.v8", /* Wasm runtime in use */

"code": {

"local": {

"filename": "/etc/istio/extensions/stats-filter.compiled.wasm" /* path of the compiled Wasm plugin */

}

},

"allow_precompiled": true

},

"configuration": {

"@type": "type.googleapis.com/google.protobuf.StringValue",

"value": "{\n \"debug\": \"false\",\n \"stat_prefix\": \"istio\"\n}\n"

}

}

}

}

},

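The Wasm programs are in the /etc/istio/extensions/ directory of the istio-proxy container:

- metadata-exchange-filter.wasm exchanges Node Metadata.
- stats-filter.wasm records telemetry.
- The .compiled.wasm files are precompiled variants of these modules. The TCP stats filter above loads stats-filter.compiled.wasm with allow_precompiled set to true.

$ ls

metadata-exchange-filter.compiled.wasm metadata-exchange-filter.wasm stats-filter.compiled.wasm stats-filter.wasm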

Istio's filter processing is illustrated below:

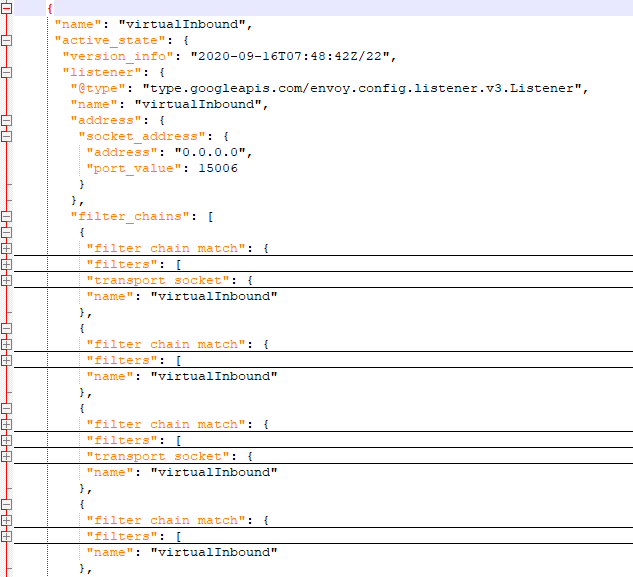

VirtualInbound/Inbound listener: similar to the virtualOutbound listener, all inbound TCP traffic is redirected to port 15006 by the following rule:

-A ISTIO_IN_REDIRECT -p tcp -j REDIRECT --to-ports 15006

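Below is a typical configuration from a demo environment. Each listening address has two filter chains: one with a transport_socket for TLS connections, and one without for non-TLS connections. The main inbound filter chains handle:

- IPv4 TCP connections with TLS
- IPv4 TCP connections without TLS
- IPv6 TCP connections with TLS
- IPv6 TCP connections without TLS
- IPv4 HTTP connections with TLS
- IPv4 HTTP connections without TLS
- IPv6 HTTP connections with TLS
- IPv6 HTTP connections without TLS
- application connections with TLS (and without TLS)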
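Envoy chooses among these filter chains by narrowing on the filter_chain_match criteria one at a time: destination port first, then transport protocol, then application protocols, with other criteria such as prefix_ranges in between. At each step it prefers an exact value over an unset one, and it does not backtrack. The following simplified Go sketch covers only those three criteria. The chain names are hypothetical labels for chains in the dump below.

```go
package main

import "fmt"

// chainMatch is a reduced filter_chain_match; zero values mean "any".
type chainMatch struct {
	Name              string
	DestinationPort   int      // 0: any port
	TransportProtocol string   // "": any; otherwise e.g. "tls"
	AppProtocols      []string // empty: any ALPN
}

// conn describes an accepted connection after TLS inspection.
type conn struct {
	DstPort   int
	Transport string   // "tls" or "raw_buffer"
	ALPN      []string // protocols offered by the client, if TLS
}

// narrow keeps candidates that match exactly, else falls back to wildcards.
func narrow(cands []chainMatch, exact, wildcard func(chainMatch) bool) []chainMatch {
	var hit, wild []chainMatch
	for _, c := range cands {
		if exact(c) {
			hit = append(hit, c)
		} else if wildcard(c) {
			wild = append(wild, c)
		}
	}
	if len(hit) > 0 {
		return hit
	}
	return wild
}

func overlaps(a, b []string) bool {
	for _, x := range a {
		for _, y := range b {
			if x == y {
				return true
			}
		}
	}
	return false
}

// selectChain applies destination port, transport protocol and ALPN in turn.
func selectChain(chains []chainMatch, c conn) (string, bool) {
	cands := narrow(chains,
		func(m chainMatch) bool { return m.DestinationPort == c.DstPort },
		func(m chainMatch) bool { return m.DestinationPort == 0 })
	cands = narrow(cands,
		func(m chainMatch) bool { return m.TransportProtocol == c.Transport },
		func(m chainMatch) bool { return m.TransportProtocol == "" })
	cands = narrow(cands,
		func(m chainMatch) bool { return overlaps(m.AppProtocols, c.ALPN) },
		func(m chainMatch) bool { return len(m.AppProtocols) == 0 })
	if len(cands) == 0 {
		return "", false
	}
	return cands[0].Name, true
}

func main() {
	chains := []chainMatch{
		{Name: "tls-istio", TransportProtocol: "tls", AppProtocols: []string{"istio-peer-exchange", "istio"}},
		{Name: "plaintext"},
		{Name: "app-80", DestinationPort: 80, AppProtocols: []string{"istio", "istio-http/1.0", "istio-http/1.1", "istio-h2"}},
	}
	fmt.Println(selectChain(chains, conn{DstPort: 9000, Transport: "tls", ALPN: []string{"istio-peer-exchange", "istio"}}))
	fmt.Println(selectChain(chains, conn{DstPort: 80, Transport: "tls", ALPN: []string{"istio-http/1.1"}}))
}
```

With only these three chains, a plaintext connection to port 80 matches nothing. This is why the real listener also carries the separate non-TLS chain for application traffic mentioned in the list above.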

The following part of the inbound listener covers:

- IPv4 TCP connections with TLS
- IPv4 TCP connections without TLS
- application connections with TLS

{

"name": "virtualInbound",

"active_state": {

"version_info": "2020-09-15T08:05:54Z/4",

"listener": {

"@type": "type.googleapis.com/envoy.config.listener.v3.Listener",

"name": "virtualInbound",

"address": { /* address and port this listener binds to */

"socket_address": {

"address": "0.0.0.0",

"port_value": 15006

}

},

"filter_chains": [

/* matches TLS connections to any IPv4 address whose ALPN is istio-peer-exchange or istio */

{

"filter_chain_match": { /* criteria for matching a connection to this filter chain */

"prefix_ranges": [ /* IP address and prefix length to match when the listener is bound to 0.0.0.0/::; the range below covers the whole address space */

{

"address_prefix": "0.0.0.0",

"prefix_len": 0

}

],

"transport_protocol": "tls", /* transport protocol to match */

"application_protocols": [ /* ALPN values to match */

"istio-peer-exchange",

"istio"

]

},

"filters": [

{

"name": "istio.metadata_exchange", /* Node Metadata exchange configuration */

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.tcp.metadataexchange.config.MetadataExchange",

"value": {

"protocol": "istio-peer-exchange"

}

}

},

{

"name": "istio.stats", /* telemetry via Wasm */

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.extensions.filters.network.wasm.v3.Wasm",

"value": {

"config": {

"root_id": "stats_inbound",

"vm_config": {

"vm_id": "tcp_stats_inbound",

"runtime": "envoy.wasm.runtime.null",

"code": {

"local": {

"inline_string": "envoy.wasm.stats"

}

}

},

"configuration": {

"@type": "type.googleapis.com/google.protobuf.StringValue",

"value": "{\n \"debug\": \"false\",\n \"stat_prefix\": \"istio\"\n}\n"

}

}

}

}

},

{

"name": "envoy.tcp_proxy", /* proxies to the upstream cluster InboundPassthroughClusterIpv4 and configures its access log; InboundPassthroughClusterIpv4 handles IPv4 inbound passthrough traffic */

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy",

"stat_prefix": "InboundPassthroughClusterIpv4",

"cluster": "InboundPassthroughClusterIpv4",

"access_log": [

{

"name": "envoy.file_access_log",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog",

"path": "/dev/stdout",

"format": "[%START_TIME%] \"%REQ(:METHOD)% %REQ(X-ENVOY-ORIGINAL-PATH?:PATH)% %PROTOCOL%\" %RESPONSE_CODE% %RESPONSE_FLAGS% \"%DYNAMIC_METADATA(istio.mixer:status)%\" \"%UPSTREAM_TRANSPORT_FAILURE_REASON%\" %BYTES_RECEIVED% %BYTES_SENT% %DURATION% %RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)% \"%REQ(X-FORWARDED-FOR)%\" \"%REQ(USER-AGENT)%\" \"%REQ(X-REQUEST-ID)%\" \"%REQ(:AUTHORITY)%\" \"%UPSTREAM_HOST%\" %UPSTREAM_CLUSTER% %UPSTREAM_LOCAL_ADDRESS% %DOWNSTREAM_LOCAL_ADDRESS% %DOWNSTREAM_REMOTE_ADDRESS% %REQUESTED_SERVER_NAME% %ROUTE_NAME%\n"

}

}

]

}

}

],

"transport_socket": { /* TLS transport socket (this chain matches TLS connections) */

"name": "envoy.transport_sockets.tls",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.DownstreamTlsContext",

"common_tls_context": {

"alpn_protocols": [ /* ALPN protocols offered by the listener */

"istio-peer-exchange",

"h2",

"http/1.1"

],

"tls_certificate_sds_secret_configs": [ /* fetch the workload certificate via the SDS API */

{

"name": "default",

"sds_config": {

"api_config_source": {

"api_type": "GRPC",

"grpc_services": [

{

"envoy_grpc": {

"cluster_name": "sds-grpc"

}

}

],

"transport_api_version": "V3"

},

"initial_fetch_timeout": "0s",

"resource_api_version": "V3"

}

}

],

"combined_validation_context": {

"default_validation_context": { /* verify the SAN of the peer certificate */

"match_subject_alt_names": [

{

"prefix": "spiffe://new-td/"

},

{

"prefix": "spiffe://old-td/"

}

]

},

"validation_context_sds_secret_config": { /* fetch the root CA certificate (ROOTCA) via the SDS API */

"name": "ROOTCA",

"sds_config": {

"api_config_source": {

"api_type": "GRPC",

"grpc_services": [

{

"envoy_grpc": {

"cluster_name": "sds-grpc"

}

}

],

"transport_api_version": "V3"

},

"initial_fetch_timeout": "0s",

"resource_api_version": "V3"

}

}

}

},

"require_client_certificate": true

}

},

"name": "virtualInbound"

},

/* Unlike the chain above, this chain matches connections without TLS */

{

"filter_chain_match": {

"prefix_ranges": [

{

"address_prefix": "0.0.0.0",

"prefix_len": 0

}

]

},

"filters": [

{

"name": "istio.metadata_exchange",

...

},

{

"name": "istio.stats",

...

},

{

"name": "envoy.tcp_proxy",

...

}

],

"name": "virtualInbound"

},

...

/* Filter chain for the application, which listens on HTTP port 80 */

{

"filter_chain_match": {

"destination_port": 80, /* destination port of the requests to match */

"application_protocols": [ /* ALPN values to match; only applies when TLS is used */

"istio",

"istio-http/1.0",

"istio-http/1.1",

"istio-h2"

]

},

"filters": [

{

"name": "istio.metadata_exchange", /* exchanges node metadata with the peer */

...

},

{

"name": "envoy.http_connection_manager", /* HTTP connection manager filter */

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager",

"stat_prefix": "inbound_0.0.0.0_80",

"route_config": { /* static (inline) route table */

"name": "inbound|80|http|sleep.default.svc.cluster.local", /* name of the route configuration */

"virtual_hosts": [ /* virtual hosts that make up the route table */

{

"name": "inbound|http|80", /* name of this virtual host */

"domains": [ /* domains that are matched to this virtual host */

"*"

],

"routes": [ /* routes for inbound requests: every HTTP request (path prefix "/") is routed to cluster inbound|80|http|sleep.default.svc.cluster.local */

{

"match": {

"prefix": "/"

},

"route": {

"cluster": "inbound|80|http|sleep.default.svc.cluster.local",

"timeout": "0s",

"max_grpc_timeout": "0s"

},

"decorator": {

"operation": "sleep.default.svc.cluster.local:80/*"

},

"name": "default" /* name of the route */

}

]

}

],

"validate_clusters": false

},

"http_filters": [ /* HTTP filter chain */

{

"name": "istio.metadata_exchange", /* HTTP-based metadata exchange */

...

},

{

"name": "istio_authn", /* Istio authentication filter with the default mTLS mode (PERMISSIVE here) */

"typed_config": {

"@type": "type.googleapis.com/istio.envoy.config.filter.http.authn.v2alpha1.FilterConfig",

"policy": {

"peers": [

{

"mtls": {

"mode": "PERMISSIVE"

}

}

]

},

"skip_validate_trust_domain": true

}

},

{

"name": "envoy.filters.http.cors",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.http.cors.v3.Cors"

}

},

{

"name": "envoy.fault",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault"

}

},

{

"name": "istio.stats", /* HTTP-based telemetry */

...

},

{

"name": "envoy.router",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"

}

}

],

"tracing": {

"client_sampling": {

"value": 100

},

"random_sampling": {

"value": 1

},

"overall_sampling": {

"value": 100

}

},

"server_name": "istio-envoy",

"access_log": [ /* access log format */

{

"name": "envoy.file_access_log",

...

}

],

"use_remote_address": false,

"generate_request_id": true,

"forward_client_cert_details": "APPEND_FORWARD",

"set_current_client_cert_details": {

"subject": true,

"dns": true,

"uri": true

},

"upgrade_configs": [

{

"upgrade_type": "websocket"

}

],

"stream_idle_timeout": "0s",

"normalize_path": true

}

}

],

"transport_socket": { /* TLS transport socket configuration */

"name": "envoy.transport_sockets.tls",

...

},

"name": "0.0.0.0_80"

},
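The virtualInbound filter chains above differ only in their filter_chain_match:
- a TLS chain selected by ALPN;
- a plaintext chain;
- a port-80 chain for the application.

The Python sketch below is a simplified model of how Envoy picks among them. It narrows the candidates one criterion at a time and prefers the most specific match. It is not Envoy's implementation:
- Only destination_port, transport_protocol and ALPN are modelled.
- The chain names are illustrative.
- The transport_protocol values are assumptions, because that part of filter_chain_match is not shown in the dump.

```python
# Simplified model of filter chain selection on the virtualInbound listener.
# Assumption: only destination_port, transport_protocol and ALPN are modelled;
# real Envoy checks more criteria (destination IP, SNI, source IP/port, ...).
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FilterChain:
    name: str
    destination_port: Optional[int] = None    # None: any destination port
    transport_protocol: Optional[str] = None  # "tls" or "raw_buffer"; None: any
    application_protocols: List[str] = field(default_factory=list)  # []: any ALPN


def select_chain(chains, port, transport, alpn):
    """Narrow the candidates one criterion at a time. At each level, chains that
    name a matching value win over chains that leave the field empty, and there
    is no backtracking to a less specific level."""
    levels = [
        (lambda c: c.destination_port, lambda v: v == port),
        (lambda c: c.transport_protocol, lambda v: v == transport),
        (lambda c: c.application_protocols, lambda v: any(p in v for p in alpn)),
    ]
    candidates = list(chains)
    for get, matches in levels:
        specific = [c for c in candidates if get(c) and matches(get(c))]
        generic = [c for c in candidates if not get(c)]
        candidates = specific or generic
        if not candidates:
            return None  # no filter chain matches: the connection is closed
    return candidates[0]


# Hypothetical chains modelled on the dump; the transport_protocol values are
# assumptions, because that part of filter_chain_match is not shown above.
CHAINS = [
    FilterChain("mtls", transport_protocol="tls",
                application_protocols=["istio-peer-exchange", "istio"]),
    FilterChain("plaintext"),
    FilterChain("0.0.0.0_80", destination_port=80, transport_protocol="tls",
                application_protocols=["istio", "istio-http/1.0",
                                       "istio-http/1.1", "istio-h2"]),
]

if __name__ == "__main__":
    print(select_chain(CHAINS, 80, "tls", ["istio-http/1.1", "istio"]).name)  # 0.0.0.0_80
    print(select_chain(CHAINS, 9999, "tls", ["istio-peer-exchange", "istio"]).name)  # mtls
    print(select_chain(CHAINS, 9999, "raw_buffer", []).name)  # plaintext
    print(select_chain(CHAINS, 80, "raw_buffer", []))  # None
```

The last call returns None. The port-80 chain wins at the port level and then fails the transport check, and the sketch does not fall back to a less specific chain. The real listener most likely contains an additional plaintext chain for port 80 among the parts elided with `...`.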

Outbound listener: the following is the outbound listener for port 9092 of the Prometheus service.

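10.84.30.227 is the Kubernetes Service address of Prometheus. The listener's TCP filter chain points to the backend cluster outbound|9092||prometheus-k8s.openshift-monitoring.svc.cluster.local. The route_config_name field in its HTTP filter chain specifies the route the listener uses: prometheus-k8s.openshift-monitoring.svc.cluster.local:9092.

{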

"name": "10.84.30.227_9092",

"active_state": {

"version_info": "2020-09-15T08:05:54Z/4",

"listener": {

"@type": "type.googleapis.com/envoy.config.listener.v3.Listener",

"name": "10.84.30.227_9092",

"address": {

"socket_address": {

"address": "10.84.30.227",

"port_value": 9092

}

},

"filter_chains": [

{

"filters": [

{

"name": "istio.stats",

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.extensions.filters.network.wasm.v3.Wasm",

...

}

},

{

"name": "envoy.tcp_proxy", /* TCP proxy filter: forwards to the corresponding cluster and sets the access log format for these connections */

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy",

"stat_prefix": "outbound|9092||prometheus-k8s.openshift-monitoring.svc.cluster.local",

"cluster": "outbound|9092||prometheus-k8s.openshift-monitoring.svc.cluster.local",

"access_log": [

...

]

}

}

]

},

{

"filter_chain_match": {

"application_protocols": [

"http/1.0",

"http/1.1",

"h2c"

]

},

"filters": [

{

"name": "envoy.http_connection_manager", /* HTTP connection manager */

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager",

"stat_prefix": "outbound_10.84.30.227_9092",

"rds": { /* fetch routes over the RDS API */

"config_source": {

"ads": {},

"resource_api_version": "V3"

},

"route_config_name": "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092" /* name of the route configuration to use */

},

"http_filters": [

{

"name": "istio.metadata_exchange",

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.extensions.filters.http.wasm.v3.Wasm",

...

}

},

{

"name": "istio.alpn",

"typed_config": {

"@type": "type.googleapis.com/istio.envoy.config.filter.http.alpn.v2alpha1.FilterConfig",

"alpn_override": [

{

"alpn_override": [

"istio-http/1.0",

"istio"

]

},

{

"upstream_protocol": "HTTP11",

"alpn_override": [

"istio-http/1.1",

"istio"

]

},

{

"upstream_protocol": "HTTP2",

"alpn_override": [

"istio-h2",

"istio"

]

}

]

}

},

{

"name": "envoy.filters.http.cors",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.http.cors.v3.Cors"

}

},

{

"name": "envoy.fault",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.http.fault.v3.HTTPFault"

}

},

{

"name": "istio.stats",

"typed_config": {

"@type": "type.googleapis.com/udpa.type.v1.TypedStruct",

"type_url": "type.googleapis.com/envoy.extensions.filters.http.wasm.v3.Wasm",

...

}

},

{

"name": "envoy.router",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"

}

}

],

"tracing": {

...

},

"access_log": [

{

"name": "envoy.file_access_log",

...

}

],

"use_remote_address": false,

"generate_request_id": true,

"upgrade_configs": [

{

"upgrade_type": "websocket"

}

],

"stream_idle_timeout": "0s",

"normalize_path": true

}

}

]

}

],

"deprecated_v1": {

"bind_to_port": false

},

"listener_filters": [

{

"name": "envoy.listener.tls_inspector",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.listener.tls_inspector.v3.TlsInspector"

}

},

{

"name": "envoy.listener.http_inspector",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.listener.http_inspector.v3.HttpInspector"

}

}

],

"listener_filters_timeout": "5s",

"traffic_direction": "OUTBOUND",

"continue_on_listener_filters_timeout": true

},

"last_updated": "2020-09-15T08:06:23.989Z"

}

},
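The outbound listener has two filter chains:
- an HTTP chain whose filter_chain_match.application_protocols lists http/1.0, http/1.1 and h2c, the values envoy.listener.http_inspector fills in when it detects HTTP;
- a catch-all TCP chain.

For HTTP traffic, the istio.alpn filter then sets the ALPN used on the upstream connection. The sketch below models that decision, under these assumptions:
- The function name is hypothetical.
- Protocol detection is passed in as a plain string.
- The istio.alpn entry without upstream_protocol is treated as HTTP10. HTTP10 is assumed to be the enum's zero value and therefore omitted from the JSON dump.

```python
# Sketch of the outbound listener 10.84.30.227_9092 above. Assumptions: protocol
# detection (done by envoy.listener.http_inspector) is passed in as a string,
# and the istio.alpn entry without upstream_protocol is treated as HTTP10.
CLUSTER = "outbound|9092||prometheus-k8s.openshift-monitoring.svc.cluster.local"
ROUTE_CONFIG_NAME = "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092"

# filter_chain_match.application_protocols of the HTTP filter chain
HTTP_CHAIN_PROTOCOLS = {"http/1.0", "http/1.1", "h2c"}

# istio.alpn filter: upstream protocol -> ALPN list set on the upstream connection
ALPN_OVERRIDE = {
    "HTTP10": ["istio-http/1.0", "istio"],
    "HTTP11": ["istio-http/1.1", "istio"],
    "HTTP2": ["istio-h2", "istio"],
}


def handle_outbound(detected_protocol, upstream_protocol="HTTP11"):
    """Return (filter used, upstream target, ALPN sent upstream)."""
    if detected_protocol in HTTP_CHAIN_PROTOCOLS:
        # HTTP chain: the cluster is picked later from the RDS route table,
        # and istio.alpn rewrites the ALPN of the upstream (mTLS) connection.
        return ("http_connection_manager", "rds:" + ROUTE_CONFIG_NAME,
                ALPN_OVERRIDE[upstream_protocol])
    # Otherwise the chain without filter_chain_match applies:
    # plain TCP proxying straight to the cluster, no ALPN override.
    return ("tcp_proxy", CLUSTER, [])


if __name__ == "__main__":
    print(handle_outbound("http/1.1"))
    print(handle_outbound("h2c", "HTTP2")[2])  # ['istio-h2', 'istio']
    print(handle_outbound("unknown")[0])       # tcp_proxy
```

Every ALPN list produced here overlaps the application_protocols of the inbound port-80 chain shown earlier (istio, istio-http/1.0, istio-http/1.1, istio-h2). This appears to be how the receiving sidecar selects its HTTP filter chain.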

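As the configuration above shows, route configuration lives inside the HttpConnectionManager filter, so a listener that does not handle HTTP has no corresponding route. An example is the listener below for port 15012 of istiod, which serves xDS and the CA over gRPC with TLS. Its filter chain contains only istio.stats and envoy.tcp_proxy.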

{

"name": "10.84.251.157_15012",

"active_state": {

"version_info": "2020-09-16T07:48:42Z/22",

"listener": {

"@type": "type.googleapis.com/envoy.config.listener.v3.Listener",

"name": "10.84.251.157_15012",

"address": {

"socket_address": {

"address": "10.84.251.157",

"port_value": 15012

}

},

"filter_chains": [

{

"filters": [

{

"name": "istio.stats",

...

},

{

"name": "envoy.tcp_proxy",

"typed_config": {

"@type": "type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy",

"stat_prefix": "outbound|15012||istiod.istio-system.svc.cluster.local",

"cluster": "outbound|15012||istiod.istio-system.svc.cluster.local",

"access_log": [

...

]

}

}

]

}

],

"deprecated_v1": {

"bind_to_port": false

},

"traffic_direction": "OUTBOUND"

},

"last_updated": "2020-09-16T07:49:34.134Z"

}

},
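The point that routes only exist inside HttpConnectionManager can be checked mechanically. The sketch below walks a listener entry in the shape shown in this article: a name plus active_state.listener. This is how dynamic listeners appear in the output of Envoy's admin /config_dump endpoint, served on the proxyAdminPort 15000. For each filter, the sketch reports whether traffic goes through a route table or straight to a TCP proxy cluster. The function name and the trimmed sample data are illustrative.

```python
# Sketch: report what each filter chain of a listener (config_dump format)
# forwards to. Filters are recognised by the suffix of their typed_config @type,
# which also works with older filter names such as envoy.http_connection_manager.
HCM = ("type.googleapis.com/envoy.extensions.filters.network."
       "http_connection_manager.v3.HttpConnectionManager")
TCP = "type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy"


def describe_listener(entry):
    listener = entry["active_state"]["listener"]
    result = []
    for chain in listener.get("filter_chains", []):
        for flt in chain.get("filters", []):
            cfg = flt.get("typed_config", {})
            kind = cfg.get("@type", "")
            if kind.endswith(".HttpConnectionManager"):
                if "rds" in cfg:  # routes fetched dynamically over RDS
                    result.append(("http", "rds", cfg["rds"]["route_config_name"]))
                else:             # inline route_config, as on the inbound listener
                    result.append(("http", "route_config", cfg["route_config"]["name"]))
            elif kind.endswith(".TcpProxy"):  # no route: one fixed cluster
                result.append(("tcp", "cluster", cfg["cluster"]))
    return result


# Trimmed copies of the two listeners above (only the fields the sketch reads).
ISTIOD = {"name": "10.84.251.157_15012", "active_state": {"listener": {
    "filter_chains": [{"filters": [
        {"name": "istio.stats",
         "typed_config": {"@type": "type.googleapis.com/udpa.type.v1.TypedStruct"}},
        {"name": "envoy.tcp_proxy", "typed_config": {
            "@type": TCP,
            "cluster": "outbound|15012||istiod.istio-system.svc.cluster.local"}},
    ]}]}}}

PROMETHEUS = {"name": "10.84.30.227_9092", "active_state": {"listener": {
    "filter_chains": [
        {"filters": [{"name": "envoy.tcp_proxy", "typed_config": {
            "@type": TCP,
            "cluster": "outbound|9092||prometheus-k8s.openshift-monitoring.svc.cluster.local"}}]},
        {"filters": [{"name": "envoy.http_connection_manager", "typed_config": {
            "@type": HCM,
            "rds": {"route_config_name":
                    "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092"}}}]},
    ]}}}

if __name__ == "__main__":
    print(describe_listener(ISTIOD))
    # [('tcp', 'cluster', 'outbound|15012||istiod.istio-system.svc.cluster.local')]
    print(describe_listener(PROMETHEUS))
```

For the istiod listener the sketch finds only a TCP cluster and no route name, which matches the observation above.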

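Route: Istio routes are also split into static and dynamic configuration. Static route configuration comes from two places:
- the static listeners;
- the inline route configuration of inbound dynamic listeners (route_config in envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager).

Below is a dynamic route for the service on Prometheus port 9092. Its route_config.name is the same value as the route_config_name field in the Prometheus outbound listener above.

{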

"version_info": "2020-09-16T07:48:42Z/22",

"route_config": {

"@type": "type.googleapis.com/envoy.config.route.v3.RouteConfiguration",

"name": "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092",

"virtual_hosts": [

{

"name": "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092",

"domains": [

"prometheus-k8s.openshift-monitoring.svc.cluster.local",

"prometheus-k8s.openshift-monitoring.svc.cluster.local:9092",

"prometheus-k8s.openshift-monitoring",

"prometheus-k8s.openshift-monitoring:9092",

"prometheus-k8s.openshift-monitoring.svc.cluster",

"prometheus-k8s.openshift-monitoring.svc.cluster:9092",

"prometheus-k8s.openshift-monitoring.svc",

"prometheus-k8s.openshift-monitoring.svc:9092",

"10.84.30.227",

"10.84.30.227:9092"

],

"routes": [

{

"match": {

"prefix": "/"

},

"route": { /* upstream cluster to route to */

"cluster": "outbound|9092||prometheus-k8s.openshift-monitoring.svc.cluster.local",

"timeout": "0s",

"retry_policy": {

"retry_on": "connect-failure,refused-stream,unavailable,cancelled,retriable-status-codes",

"num_retries": 2,

"retry_host_predicate": [

{

"name": "envoy.retry_host_predicates.previous_hosts"

}

],

"host_selection_retry_max_attempts": "5",

"retriable_status_codes": [

503

]

},

"max_grpc_timeout": "0s"

},

"decorator": {

"operation": "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092/*"

},

"name": "default"

}

],

"include_request_attempt_count": true

}

],

"validate_clusters": false

},

"last_updated": "2020-09-16T07:49:52.551Z"

},
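The route configuration above is applied in two steps. First, the request's :authority (Host) selects a virtual host through its domains list. Then the first matching route in that virtual host selects the cluster. The sketch below is a simplified model of those two steps, under these assumptions:
- Domains are matched exactly, with a bare "*" as fallback. Envoy also supports prefix and suffix wildcards.
- Only prefix matches are handled.
- route_request is a hypothetical name.

```python
# Simplified sketch of how the RouteConfiguration above is applied. Assumptions:
# domains are matched exactly with "*" as a fallback (Envoy also supports
# prefix/suffix wildcards), and only "prefix" route matches are handled.
CLUSTER = "outbound|9092||prometheus-k8s.openshift-monitoring.svc.cluster.local"
ROUTE_CONFIG = {
    "name": "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092",
    "virtual_hosts": [{
        "name": "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092",
        "domains": [
            "prometheus-k8s.openshift-monitoring.svc.cluster.local",
            "prometheus-k8s.openshift-monitoring.svc.cluster.local:9092",
            "prometheus-k8s.openshift-monitoring",
            "prometheus-k8s.openshift-monitoring:9092",
            "prometheus-k8s.openshift-monitoring.svc.cluster",
            "prometheus-k8s.openshift-monitoring.svc.cluster:9092",
            "prometheus-k8s.openshift-monitoring.svc",
            "prometheus-k8s.openshift-monitoring.svc:9092",
            "10.84.30.227",
            "10.84.30.227:9092",
        ],
        "routes": [{"match": {"prefix": "/"}, "route": {"cluster": CLUSTER}}],
    }],
}


def route_request(route_config, authority, path):
    """Return the upstream cluster for a request, or None (Envoy replies 404)."""
    vhosts = route_config["virtual_hosts"]
    # An exact domain match wins over a "*" catch-all, regardless of order.
    vhost = (next((vh for vh in vhosts if authority in vh["domains"]), None)
             or next((vh for vh in vhosts if "*" in vh["domains"]), None))
    if vhost is None:
        return None
    for r in vhost["routes"]:  # routes are tried in order; the first match wins
        if path.startswith(r["match"]["prefix"]):
            return r["route"]["cluster"]
    return None


if __name__ == "__main__":
    print(route_request(ROUTE_CONFIG, "10.84.30.227:9092", "/api/v1/query"))
    # outbound|9092||prometheus-k8s.openshift-monitoring.svc.cluster.local
    print(route_request(ROUTE_CONFIG, "grafana:3000", "/"))  # None
```

The sketch leaves out the retry_policy of this route. It allows up to num_retries = 2 retries, so at most three attempts in total. Retries happen on connect failures, refused streams, the gRPC statuses unavailable and cancelled, and HTTP 503. The previous_hosts predicate makes Envoy prefer a host it has not tried yet, re-running host selection up to host_selection_retry_max_attempts = 5 times.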

For more details, refer to the official Envoy documentation.

The following request flow diagram, based on Istio's official Bookinfo sample, helps in understanding the whole process.
