转:locality sensitive hashing

Motivation

The task of finding nearest neighbours is very common. You can think of applications like finding duplicate or similar documents, audio/video search. Although using brute force to check for all possible combinations will give you the exact nearest neighbour but it’s not scalable at all. Approximate algorithms to accomplish this task has been an area of active research. Although these algorithms don’t guarantee to give you the exact answer, more often than not they’ll be provide a good approximation. These algorithms are faster and scalable.

Locality sensitive hashing (LSH) is one such algorithm. LSH has many applications, including:

- Near-duplicate detection: LSH is commonly used to deduplicate large quantities of documents, webpages, and other files.

- Genome-wide association study: Biologists often use LSH to identify similar gene expressions in genome databases.

- Large-scale image search: Google used LSH along with PageRank to build their image search technology VisualRank.

- Audio/video fingerprinting: In multimedia technologies, LSH is widely used as a fingerprinting technique A/V data.

In this blog, we’ll try to understand the workings of this algorithm.

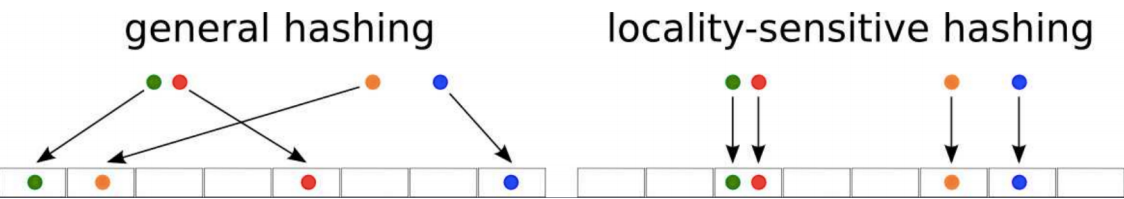

General Idea

LSH refers to a family of functions (known as LSH families) to hash data points into buckets so that data points near each other are located in the same buckets with high probability, while data points far from each other are likely to be in different buckets. This makes it easier to identify observations with various degrees of similarity.

Finding similar documents

Let’s try to understand how we can leverage LSH in solving an actual problem. The problem that we’re trying to solve:

Goal: You have been given a large collections of documents. You want to find “near duplicate” pairs.

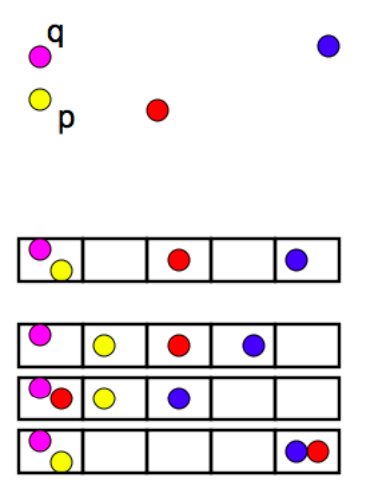

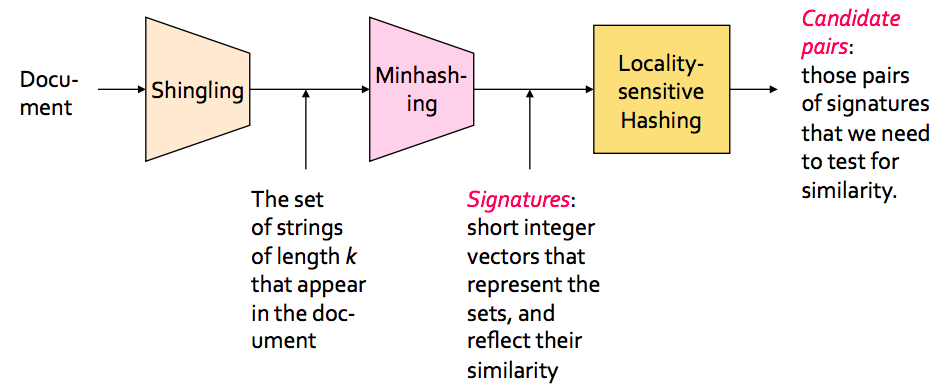

In the context of this problem//////再次问题的背景下, we can break down the LSH algorithm into 3 broad steps:

- Shingling

- Min hashing

- Locality-sensitive hashing

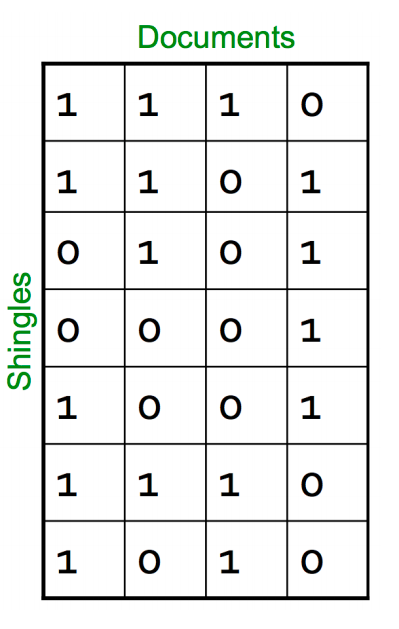

Shingling

In this step, we convert each document into a set of characters of length k (also known as k-shingles or k-grams). The key idea is to represent each document in our collection as a set of k-shingles.

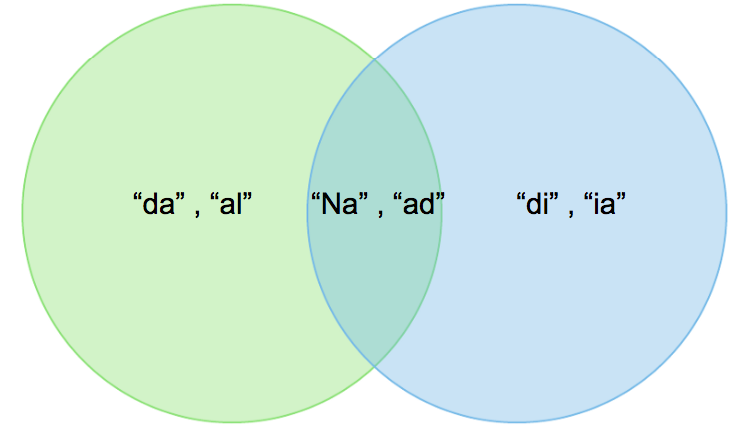

For ex: One of your document (D): “Nadal”. Now if we’re interested in 2-shingles, then our set: {Na, ad, da, al}. Similarly set of 3-shingles: {Nad, ada, dal}.

- Similar documents are more likely to share more shingles

- Reordering paragraphs in a document of changing words doesn’t have much affect on shingles

- k value of 8–10 is generally used in practice. A small value will result in many shingles which are present in most of the documents (bad for differentiating documents)

Jaccard Index

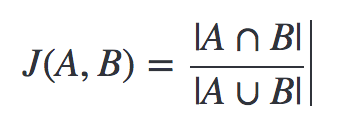

We’ve a representation of each document in the form of shingles. Now, we need a metric to measure similarity between documents. Jaccard Index is a good choice for this. Jaccard Index between document A & B can be defined as:

It’s also known as intersection over union (IOU).

A: {Na, ad, da, al} and B: {Na, ad, di, ia}.

Jaccard Index = 2/6

Let’s discuss 2 big issues that we need to tackle:

Time complexity

Now you may be thinking that we can stop here. But if you think about the scalability, doing just this won’t work. For a collection of n documents, you need to do n*(n-1)/2 comparison, basically O(n²). Imagine you have 1 million documents, then the number of comparison will be 5*10¹¹ (not scalable at all!).

Space complexity

The document matrix is a sparse matrix and storing it as it is will be a big memory overhead. One way to solve this is hashing.

Hashing

The idea of hashing is to convert each document to a small signature using a hashing function H*.* Suppose a document in our corpus is denoted by d. Then:

- H(d) is the signature and it’s small enough to fit in memory

- If similarity(d1,d2) is high then *Probability(H(d1)==H(d2))* is high

- If similarity(d1,d2) is low then *Probability(H(d1)==H(d2))* is low

Choice of hashing function is tightly linked to the similarity metric we’re using. For Jaccard similarity the appropriate hashing function is min-hashing.

Min hashing

This is the critical and the most magical aspect of this algorithm so pay attention:

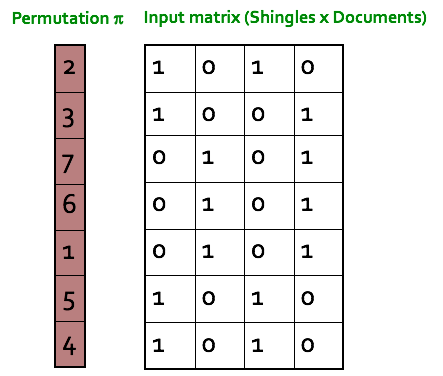

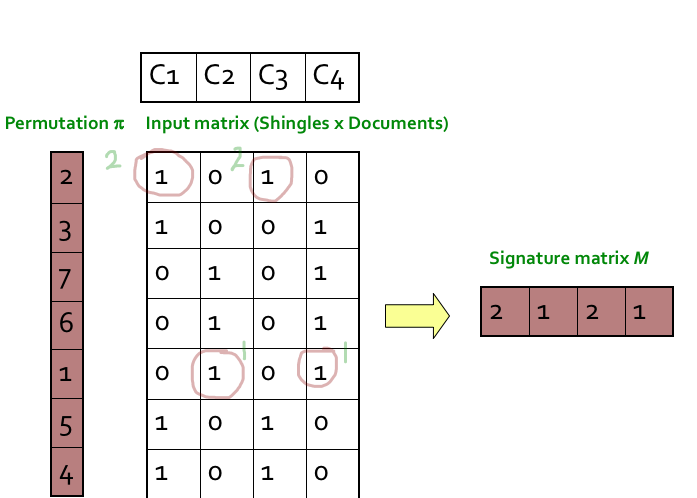

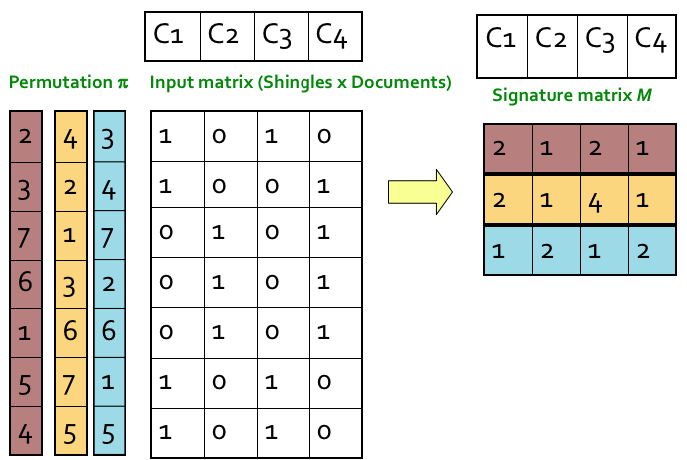

Step 1: Random permutation (π) of row index of document shingle matrix.

////////对行进行随机排列

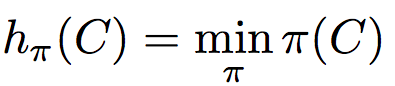

Step 2: Hash function is the index of the first (in the permuted order) row in which column C has value 1. Do this several time (use different permutations) to create signature of a column.

第2步:哈希函数是列C值为1的第一行(按顺序排列)的索引。这样做几次(使用不同的排列)来创建一个列的签名。

////这个图根本看不懂

转:locality sensitive hashing的更多相关文章

- [Algorithm] 局部敏感哈希算法(Locality Sensitive Hashing)

局部敏感哈希(Locality Sensitive Hashing,LSH)算法是我在前一段时间找工作时接触到的一种衡量文本相似度的算法.局部敏感哈希是近似最近邻搜索算法中最流行的一种,它有坚实的理论 ...

- 局部敏感哈希-Locality Sensitive Hashing

局部敏感哈希 转载请注明http://blog.csdn.net/stdcoutzyx/article/details/44456679 在检索技术中,索引一直须要研究的核心技术.当下,索引技术主要分 ...

- LSH(Locality Sensitive Hashing)原理与实现

原文地址:https://blog.csdn.net/guoziqing506/article/details/53019049 LSH(Locality Sensitive Hashing)翻译成中 ...

- Locality Sensitive Hashing,LSH

1. 基本思想 局部敏感(Locality Senstitive):即空间中距离较近的点映射后发生冲突的概率高,空间中距离较远的点映射后发生冲突的概率低. 局部敏感哈希的基本思想类似于一种空间域转换思 ...

- 局部敏感哈希算法(Locality Sensitive Hashing)

from:https://www.cnblogs.com/maybe2030/p/4953039.html 阅读目录 1. 基本思想 2. 局部敏感哈希LSH 3. 文档相似度计算 局部敏感哈希(Lo ...

- 局部敏感哈希Locality Sensitive Hashing(LSH)之随机投影法

1. 概述 LSH是由文献[1]提出的一种用于高效求解最近邻搜索问题的Hash算法.LSH算法的基本思想是利用一个hash函数把集合中的元素映射成hash值,使得相似度越高的元素hash值相等的概率也 ...

- 局部敏感哈希-Locality Sensitivity Hashing

一. 近邻搜索 从这里开始我将会对LSH进行一番长篇大论.因为这只是一篇博文,并不是论文.我觉得一篇好的博文是尽可能让人看懂,它对语言的要求并没有像论文那么严格,因此它可以有更强的表现力. 局部敏感哈 ...

- 从NLP任务中文本向量的降维问题,引出LSH(Locality Sensitive Hash 局部敏感哈希)算法及其思想的讨论

1. 引言 - 近似近邻搜索被提出所在的时代背景和挑战 0x1:从NN(Neighbor Search)说起 ANN的前身技术是NN(Neighbor Search),简单地说,最近邻检索就是根据数据 ...

- Locality Sensitive Hash 局部敏感哈希

Locality Sensitive Hash是一种常见的用于处理高维向量的索引办法.与其它基于Tree的数据结构,诸如KD-Tree.SR-Tree相比,它较好地克服了Curse of Dimens ...

随机推荐

- HTTP 抓包 ---复习一下

1.connection 字段 2.accept 字段 3.user-agent 字段 4.host字段 等字段需要注意: HTTP事务的延时主要有以下:1).解析时延 DNS解析与DNS缓存 客 ...

- tcpack--4延时ack

TCP在收到数据后必须发送ACK给对端,但如果每收到一个包就给一个ACK的话会使得网络中被注入过多报文.TCP的做法是在收到数据时不立即发送ACK,而是设置一个定时器,如果在定时器超时之前有数据发送给 ...

- linux中?*tee|\各类引号和-n-e\t\n

1.通配符:?和* ? --匹配任意字符单次. * --匹配任意字符任意次. [root@localhost test]# rm -fr * 2.管道符: | 将前面命令的结果传 ...

- Markdown 常用语言关键字

Markdown 语法高亮支持的语言还是比较多的,记下来备用. 语言名 关键字 Bash bash CoffeeScript coffeescript C++ cpp C# cs CSS css Di ...

- ThreadLocal应用及源码分析

ThreadLocal 基本使用 ThreadLocal 的作用是:提供线程内的局部变量,不同的线程之间不会相互干扰,这种变量在线程的生命周期内起作用,减少同一个线程内多个函数或组件之间一些公共变量传 ...

- 2020年SpringCloud 必知的18道面试题

今天跟大家分享下SpringCloud常见面试题的知识. 1.什么是Spring Cloud? Spring cloud流应用程序启动器是基于Spring Boot的Spring集成应用程序,提供与外 ...

- [LeetCode题解]142. 环形链表 II | 快慢指针

解题思路 本题是在141. 环形链表基础上的拓展,如果存在环,要找出环的入口. 如何判断是否存在环,我们知道通过快慢指针,如果相遇就表示有环.那么如何找到入口呢? 如下图所示的链表: 当 fast 与 ...

- linux shell简单快捷方式与通配符(元字符)echo -e文本显示颜色

1.shell常用快捷方式 ^R 搜索历史命令^D 退出^A 光标移动到命令行最前^E 光标移动到命令行最后^L 清屏^U 光标之前删除^K 光标之后删除^Y 撤销^S 锁屏^Q 解锁 2.多条命令执 ...

- 简单好用的TCP/UDP高并发性能测试工具

工具下载地址: 链接:https://pan.baidu.com/s/1fJ6Kz-mfFu_RANrgKqYiyA 提取码:0pyf 最近测试智能设备的远程的性能,思路主要是通过UDP对IP和端口发 ...

- [GIT]获取git最新的tag

背景 公司前端项目在Jenkins中打包,每次打包需要将新tag回推到仓库中.但是打包失败后如果不删除tag的话下次打包就会失败,需要手动删除,所以在Jenkinsfile中就需要在打包失败时自动删除 ...