COS418, Distributed System, Go Language

go test -run Sequential

func TestSequentialSingle(t *testing.T) {

mr := Sequential("test", makeInputs(1), 1, MapFunc, ReduceFunc)

mr.Wait()

check(t, mr.files)

checkWorker(t, mr.stats)

cleanup(mr)

}

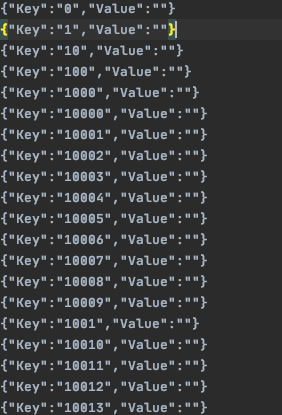

func Sequential(jobName string, files []string, nreduce int,

mapF func(string, string) []KeyValue,

reduceF func(string, []string) string,

) (mr *Master) {

mr = newMaster("master")

go mr.run(jobName, files, nreduce, func(phase jobPhase) {

switch phase {

case mapPhase:

for i, f := range mr.files {

doMap(mr.jobName, i, f, mr.nReduce, mapF)

}

case reducePhase:

for i := 0; i < mr.nReduce; i++ {

doReduce(mr.jobName, i, len(mr.files), reduceF)

}

}

}, func() {

mr.stats = []int{len(files) + nreduce}

})

return

}

func (mr *Master) run(jobName string, files []string, nreduce int,

schedule func(phase jobPhase),

finish func(),

) {

mr.jobName = jobName

mr.files = files

mr.nReduce = nreduce debug("%s: Starting Map/Reduce task %s\n", mr.address, mr.jobName) schedule(mapPhase)

schedule(reducePhase)

finish()

mr.merge() debug("%s: Map/Reduce task completed\n", mr.address) mr.doneChannel <- true

}

func(phase jobPhase) {

switch phase {

case mapPhase:

for i, f := range mr.files {

doMap(mr.jobName, i, f, mr.nReduce, mapF)

}

case reducePhase:

for i := 0; i < mr.nReduce; i++ {

doReduce(mr.jobName, i, len(mr.files), reduceF)

}

}

}

func() {

mr.stats = []int{len(files) + nreduce}

}

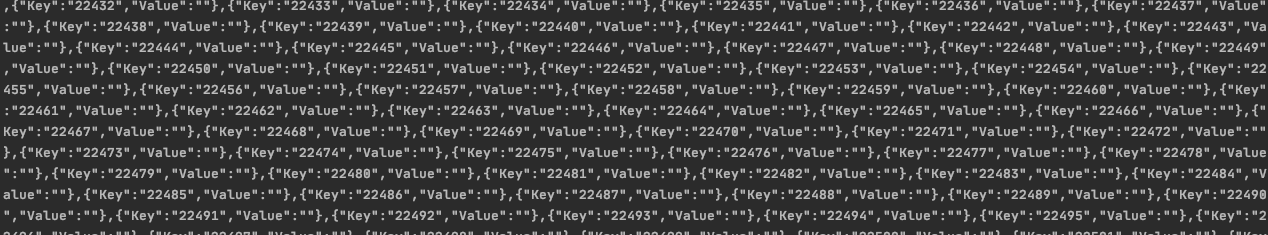

func reduceName(jobName string, mapTask int, reduceTask int) string {

return "mrtmp." + jobName + "-" + strconv.Itoa(mapTask) + "-" + strconv.Itoa(reduceTask)

}

func doMap(

jobName string, // the name of the MapReduce job

mapTaskNumber int, // which map task this is

inFile string,

nReduce int, // the number of reduce task that will be run

mapF func(file string, contents string) []KeyValue,

) {

dat, err := ioutil.ReadFile(inFile)

if err != nil {

debug("file open fail:%s", inFile)

} else {

kvs := mapF(inFile, string(dat))

partitions := make([][]KeyValue, nReduce)

for _ , kv:= range kvs {

r := int(ihash(kv.Key)) % nReduce

partitions[r] = append(partitions[r], kv)

}

for i := range partitions {

j, _ := json.Marshal(partitions[i])

f := reduceName(jobName, mapTaskNumber, i)

ioutil.WriteFile(f, j, 0644)

}

}

}

func mergeName(jobName string, reduceTask int) string {

return "mrtmp." + jobName + "-res-" + strconv.Itoa(reduceTask)

}

func doReduce(

jobName string, // the name of the whole MapReduce job

reduceTaskNumber int, // which reduce task this is

nMap int, // the number of map tasks that were run ("M" in the paper)

reduceF func(key string, values []string) string,

) {

kvs := make(map[string][]string)

for m := 0; m < nMap; m++ {

fileName := reduceName(jobName, m, reduceTaskNumber)

dat, err := ioutil.ReadFile(fileName)

if err != nil {

debug("file open fail:%s", fileName)

} else {

var items []KeyValue

json.Unmarshal(dat, &items)

for _ , item := range items {

k := item.Key

v := item.Value

kvs[k] = append(kvs[k], v)

}

}

} // create the final output file

mergeFileName := mergeName(jobName, reduceTaskNumber)

file, err := os.Create(mergeFileName)

if err != nil {

debug("file open fail:%s", mergeFileName)

} // sort

var keys []string

for k := range kvs {

keys = append(keys, k)

}

sort.Strings(keys) enc := json.NewEncoder(file)

for _, key := range keys {

enc.Encode(KeyValue{key, reduceF(key, kvs[key])})

}

file.Close()

}

go run wc.go master sequential pg-*.txt

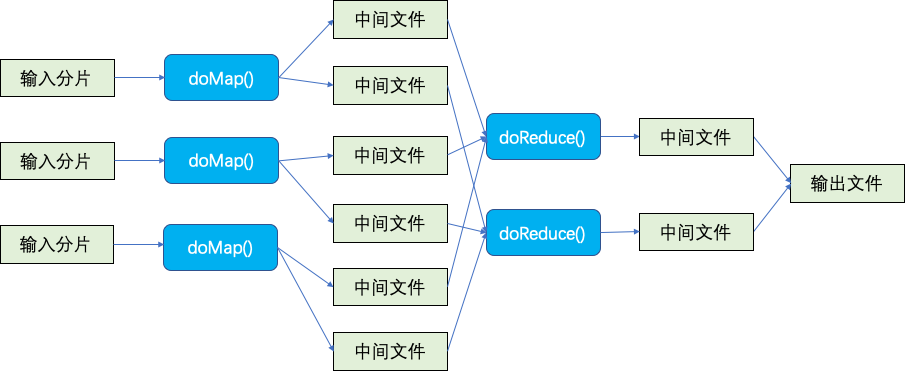

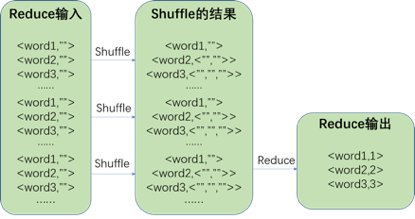

Reduce输入格式为list(<word,””> ),输出格式为list(<word,num>) 。处理过程如下图所示:

func mapF(document string, value string) (res []mapreduce.KeyValue) {

words := strings.FieldsFunc(value, func(r rune) bool {

return !unicode.IsLetter(r)

})

res = []mapreduce.KeyValue{}

for _, w := range words {

res = append(res, mapreduce.KeyValue{w, ""})

}

return res

}

func reduceF(key string, values []string) string {

return strconv.Itoa(len(values))

}

sort -n -k2 mrtmp.wcseq | tail -10

he: 34077

was: 37044

that: 37495

I: 44502

in: 46092

a: 60558

to: 74357

of: 79727

and: 93990

the: 154024

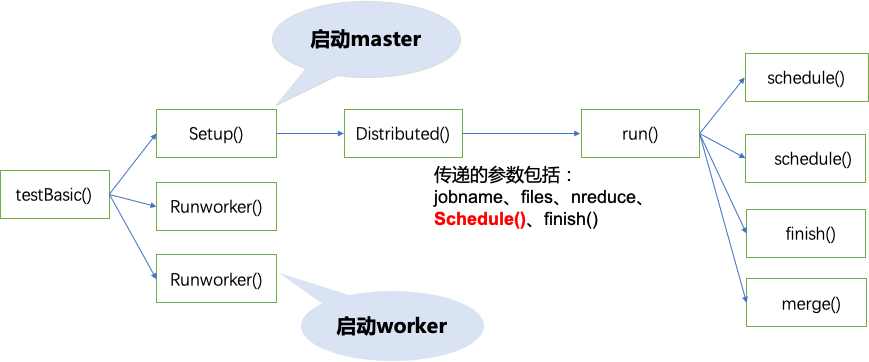

go test -run TestBasic

func TestBasic(t *testing.T) {

mr := setup()

for i := 0; i < 2; i++ {

go RunWorker(mr.address, port("worker"+strconv.Itoa(i)),

MapFunc, ReduceFunc, -1)

}

mr.Wait()

check(t, mr.files)

checkWorker(t, mr.stats)

cleanup(mr)

}

func setup() *Master {

files := makeInputs(nMap)

master := port("master")

mr := Distributed("test", files, nReduce, master)

return mr

}

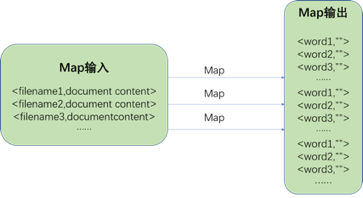

func Distributed(jobName string, files []string, nreduce int, master string) (mr *Master) {

mr = newMaster(master)

mr.startRPCServer()

go mr.run(jobName, files, nreduce,

func(phase jobPhase) {

ch := make(chan string)

go mr.forwardRegistrations(ch)

schedule(mr.jobName, mr.files, mr.nReduce, phase, ch)

},

func() {

mr.stats = mr.killWorkers()

mr.stopRPCServer()

})

return

}

func(phase jobPhase) {

ch := make(chan string)

go mr.forwardRegistrations(ch)

schedule(mr.jobName, mr.files, mr.nReduce, phase, ch)

}

func() {

mr.stats = mr.killWorkers()

mr.stopRPCServer()

}

func (mr *Master) schedule(phase jobPhase) {

var ntasks int

var nios int // number of inputs (for reduce) or outputs (for map)

switch phase {

case mapPhase:

ntasks = len(mr.files)

nios = mr.nReduce

case reducePhase:

ntasks = mr.nReduce

nios = len(mr.files)

}

debug("Schedule: %v %v tasks (%d I/Os)\n", ntasks, phase, nios)

stats := make([]bool, ntasks)

currentWorker := 0

for {

count := ntasks

for i := 0; i < ntasks; i++ {

if !stats[i] {

mr.Lock()

numWorkers := len(mr.workers)

fmt.Println(numWorkers)

if numWorkers==0 {

mr.Unlock()

time.Sleep(time.Second)

continue

}

currentWorker = (currentWorker + 1) % numWorkers

Worker := mr.workers[currentWorker]

mr.Unlock()

var file string

if phase == mapPhase {

file = mr.files[i]

}

args := DoTaskArgs{JobName: mr.jobName, File: file, Phase: phase, TaskNumber: i, NumOtherPhase: nios}

go func(slot int, worker_ string) {

success := call(worker_, "Worker.DoTask", &args, new(struct{}))

if success {

stats[slot] = true

}

}(i, Worker)

} else {

count--

}

}

if count == 0 {

break

}

time.Sleep(time.Second)

}

debug("Schedule: %v phase done\n", phase)

}

go test -run Failure

go run ii.go master sequential pg-*.txt

func mapF(document string, value string) (res []mapreduce.KeyValue) {

words := strings.FieldsFunc(value, func(c rune) bool {

return !unicode.IsLetter(c)

})

WordDocument := make(map[string]string, 0)

for _,word := range words {

WordDocument[word] = document

}

res = make([]mapreduce.KeyValue, 0)

for k,v := range WordDocument {

res = append(res, mapreduce.KeyValue{k, v})

}

return

}

func reduceF(key string, values []string) string {

nDoc := len(values)

sort.Strings(values)

var buf bytes.Buffer;

buf.WriteString(strconv.Itoa(nDoc))

buf.WriteRune(' ')

for i,doc := range values {

buf.WriteString(doc)

if (i != nDoc-1) {

buf.WriteRune(',')

}

}

return buf.String()

}

head -n5 mrtmp.iiseq

A: 16 pg-being_ernest.txt,pg-dorian_gray.txt,pg-dracula.txt,pg-emma.txt,pg-frankenstein.txt,pg-great_expectations.txt,pg-grimm.txt,pg-huckleberry_finn.txt,pg-les_miserables.txt,pg-metamorphosis.txt,pg-moby_dick.txt,pg-sherlock_holmes.txt,pg-tale_of_two_cities.txt,pg-tom_sawyer.txt,pg-ulysses.txt,pg-war_and_peace.txt

ABC: 2 pg-les_miserables.txt,pg-war_and_peace.txt

ABOUT: 2 pg-moby_dick.txt,pg-tom_sawyer.txt

ABRAHAM: 1 pg-dracula.txt

ABSOLUTE: 1 pg-les_miserables.txt

COS418, Distributed System, Go Language的更多相关文章

- 分布式系统(Distributed System)资料

这个资料关于分布式系统资料,作者写的太好了.拿过来以备用 网址:https://github.com/ty4z2008/Qix/blob/master/ds.md 希望转载的朋友,你可以不用联系我.但 ...

- 译《Time, Clocks, and the Ordering of Events in a Distributed System》

Motivation <Time, Clocks, and the Ordering of Events in a Distributed System>大概是在分布式领域被引用的最多的一 ...

- Aysnc-callback with future in distributed system

Aysnc-callback with future in distributed system

- Note: Time clocks and the ordering of events in a distributed system

http://research.microsoft.com/en-us/um/people/lamport/pubs/time-clocks.pdf 分布式系统的时钟同步是一个非常困难的问题,this ...

- 分布式学习材料Distributed System Prerequisite List

接下的内容按几个大类来列:1. 文件系统a. GFS – The Google File Systemb. HDFS1) The Hadoop Distributed File System2) Th ...

- Notes on Distributed System -- Distributed Hash Table Based On Chord

task: 基于Chord实现一个Hash Table 我负责写Node,队友写SuperNode和Client.总体参考paper[Stoica et al., 2001]上的伪代码 FindSuc ...

- 「2014-2-23」Note on Preliminary Introduction to Distributed System

今天读了几篇分布式相关的内容,记录一下.非经典论文,非系统化阅读,非严谨思考和总结.主要的着眼点在于分布式存储:好处是,跨越单台物理机器的计算和存储能力的限制,防止单点故障(single point ...

- Note on Preliminary Introduction to Distributed System

今天读了几篇分布式相关的内容,记录一下.非经典论文,非系统化阅读,非严谨思考和总结.主要的着眼点在于分布式存储:好处是,跨越单台物理机器的计算和存储能力的限制,防止单点故障(single point ...

- Time, Clocks, and the Ordering of Events in a Distributed System

作者:Leslie Lamport(非常厉害的老头了) 在使用消息进行通信的分布式系统中,使用物理时钟对不同process进行时间同步与事件排序是非常困难的.一是因为不同process的时钟有差异,另 ...

随机推荐

- 基于Centos7安装Docker-registry2.0

我们可能希望构建和存储包含不想公开的信息或数据的镜像,因为Docker公司的团队开源了docker-registry的代码,这样我们就可以基于此代码在内部运行自己的registry. 服务端1.拉去仓 ...

- reverse 字符串翻转

头文件 algorithm string s="hello"; reverse(s.begin(),s.end()); char c[]="hello"; re ...

- PHP array_unshift() 函数

实例 插入元素 "blue" 到数组中: <?php$a=array("a"=>"red","b"=> ...

- 解析laravel之redis简单模块操作

入门级操作 普通 set / get 操作: set操作,如果键名存在,则会覆盖原有的值: $redis = app('redis.connection'); $redis->set('libr ...

- odoo自定义模块项目结构,odoo自定义模块点安装不成功解决办法

如图所示:在odoo源码的根目录中创建自己的项目文件(project) 在odoo.conf配置文件中的addons_path路径中加入自己项目的文件夹路径,推荐使用绝对路径 addons_path ...

- Selenium多窗口切换代码

# #!/usr/bin/python3 # -*- coding: utf-8 -*- # @Time : 2020/7/31 16:05 # @Author : Gengwu # @FileNam ...

- 【Canal】互联网背景下有哪些数据同步需求和解决方案?看完我知道了!!

写在前面 在当今互联网行业,尤其是现在分布式.微服务开发环境下,为了提高搜索效率,以及搜索的精准度,会大量使用Redis.Memcached等NoSQL数据库,也会使用大量的Solr.Elastics ...

- [NewLife.Net]单机400万长连接压力测试

目标 对网络库NewLife.Net进行单机百万级长连接测试,并持续收发数据,检测网络库稳定性. [2020年8月1日晚上22点] 先上源码:https://github.com/NewLifeX/N ...

- iOS CALayer 简单介绍

https://www.jianshu.com/p/09f4e36afd66 什么是CALayer: 总结:能看到的都是uiview,uiview能显示在屏幕上是因为它内部的一个层calyer层. 在 ...

- 重温这几个屌爆的Python技巧!

我已经使用Python编程有多年了,即使今天我仍然惊奇于这种语言所能让代码表现出的整洁和对DRY编程原则的适用.这些年来的经历让我学到了很多的小技巧和知识,大多数是通过阅读很流行的开源软件,如Djan ...