FITTING A MODEL VIA CLOSED-FORM EQUATIONS VS. GRADIENT DESCENT VS STOCHASTIC GRADIENT DESCENT VS MINI-BATCH LEARNING. WHAT IS THE DIFFERENCE?

FITTING A MODEL VIA CLOSED-FORM EQUATIONS VS. GRADIENT DESCENT VS STOCHASTIC GRADIENT DESCENT VS MINI-BATCH LEARNING. WHAT IS THE DIFFERENCE?

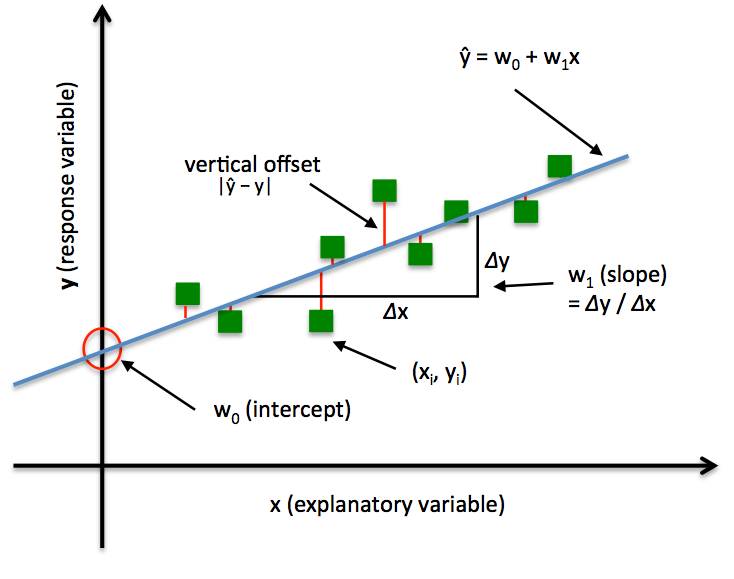

In order to explain the differences between alternative approaches to estimating the parameters of a model, let's take a look at a concrete example: Ordinary Least Squares (OLS) Linear Regression. The illustration below shall serve as a quick reminder to recall the different components of a simple linear regression model:

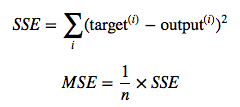

In Ordinary Least Squares (OLS) Linear Regression, our goal is to find the line (or hyperplane) that minimizes the vertical offsets. Or, in other words, we define the best-fitting line as the line that minimizes the sum of squared errors (SSE) or mean squared error (MSE) between our target variable (y) and our predicted output over all samples i in our dataset of size n.

Now, we can implement a linear regression model for performing ordinary least squares regression using one of the following approaches:

- Solving the model parameters analytically (closed-form equations)

- Using an optimization algorithm (Gradient Descent, Stochastic Gradient Descent, Newton's Method, Simplex Method, etc.)

1) NORMAL EQUATIONS (CLOSED-FORM SOLUTION)

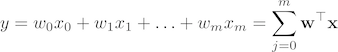

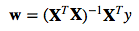

The closed-form solution may (should) be preferred for "smaller" datasets -- if computing (a "costly") matrix inverse is not a concern. For very large datasets, or datasets where the inverse of XTX may not exist (the matrix is non-invertible or singular, e.g., in case of perfect multicollinearity), the GD or SGD approaches are to be preferred. The linear function (linear regression model) is defined as:

where y is the response variable, x is an m-dimensional sample vector, and w is the weight vector (vector of coefficients). Note that w0 represents the y-axis intercept of the model and therefore x0=1. Using the closed-form solution (normal equation), we compute the weights of the model as follows:

2) GRADIENT DESCENT (GD)

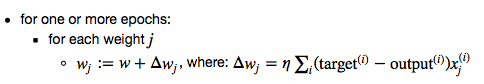

Using the Gradient Decent (GD) optimization algorithm, the weights are updated incrementally after each epoch (= pass over the training dataset).

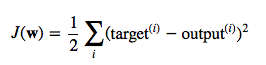

The cost function J(⋅), the sum of squared errors (SSE), can be written as:

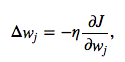

The magnitude and direction of the weight update is computed by taking a step in the opposite direction of the cost gradient

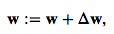

where η is the learning rate. The weights are then updated after each epoch via the following update rule:

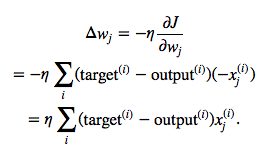

where Δw is a vector that contains the weight updates of each weight coefficient w, which are computed as follows:

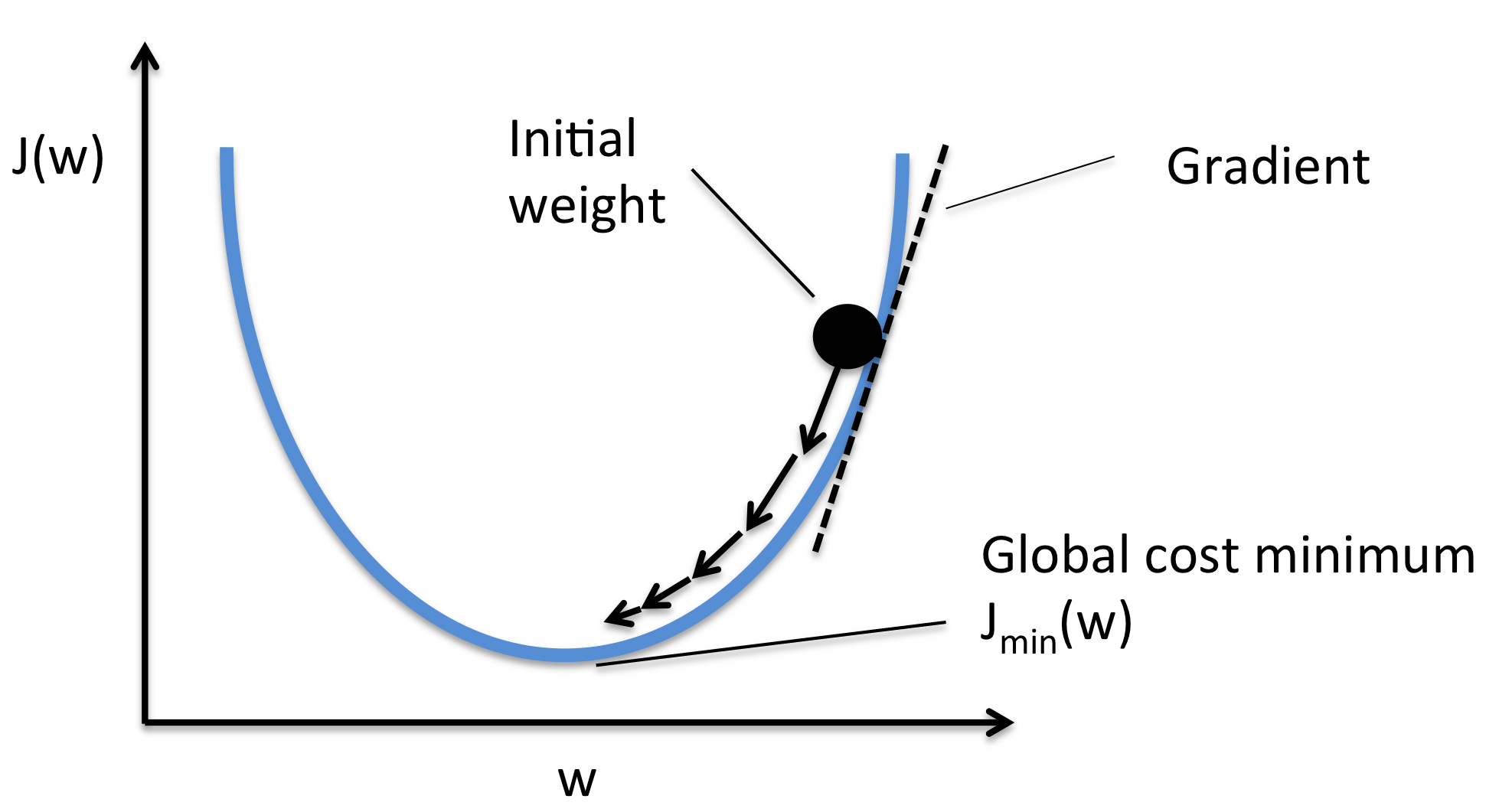

Essentially, we can picture GD optimization as a hiker (the weight coefficient) who wants to climb down a mountain (cost function) into a valley (cost minimum), and each step is determined by the steepness of the slope (gradient) and the leg length of the hiker (learning rate). Considering a cost function with only a single weight coefficient, we can illustrate this concept as follows:

3) STOCHASTIC GRADIENT DESCENT (SGD)

In GD optimization, we compute the cost gradient based on the complete training set; hence, we sometimes also call itbatch GD. In case of very large datasets, using GD can be quite costly since we are only taking a single step for one pass over the training set -- thus, the larger the training set, the slower our algorithm updates the weights and the longer it may take until it converges to the global cost minimum (note that the SSE cost function is convex).

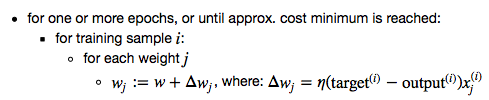

In Stochastic Gradient Descent (SGD; sometimes also referred to as iterative or on-line GD), we don't accumulate the weight updates as we've seen above for GD:

Instead, we update the weights after each training sample:

Here, the term "stochastic" comes from the fact that the gradient based on a single training sample is a "stochastic approximation" of the "true" cost gradient. Due to its stochastic nature, the path towards the global cost minimum is not "direct" as in GD, but may go "zig-zag" if we are visualizing the cost surface in a 2D space. However, it has been shown that SGD almost surely converges to the global cost minimum if the cost function is convex (or pseudo-convex)[1]. Furthermore, there are different tricks to improve the GD-based learning, for example:

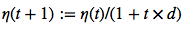

An adaptive learning rate η Choosing a decrease constant d that shrinks the learning rate over time:

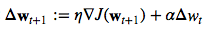

Momentum learning by adding a factor of the previous gradient to the weight update for faster updates:

A NOTE ABOUT SHUFFLING

There are several different flavors of SGD, which can be all seen throughout the literature. Let's take a look at the three most common variants:

A)

- randomly shuffle samples in the training set

- for one or more epochs, or until approx. cost minimum is reached

- for training sample i

- compute gradients and perform weight updates

- for training sample i

- for one or more epochs, or until approx. cost minimum is reached

B)

- for one or more epochs, or until approx. cost minimum is reached

- randomly shuffle samples in the training set

- for training sample i

- compute gradients and perform weight updates

- for training sample i

- randomly shuffle samples in the training set

C)

- for iterations t, or until approx. cost minimum is reached:

- draw random sample from the training set

- compute gradients and perform weight updates

- draw random sample from the training set

In scenario A [3], we shuffle the training set only one time in the beginning; whereas in scenario B, we shuffle the training set after each epoch to prevent repeating update cycles. In both scenario A and scenario B, each training sample is only used once per epoch to update the model weights.

In scenario C, we draw the training samples randomly with replacement from the training set [2]. If the number of iterationst is equal to the number of training samples, we learn the model based on a bootstrap sample of the training set.

4) MINI-BATCH GRADIENT DESCENT (MB-GD)

Mini-Batch Gradient Descent (MB-GD) a compromise between batch GD and SGD. In MB-GD, we update the model based on smaller groups of training samples; instead of computing the gradient from 1 sample (SGD) or all n training samples (GD), we compute the gradient from 1 < k < n training samples (a common mini-batch size is k=50).

MB-GD converges in fewer iterations than GD because we update the weights more frequently; however, MB-GD let's us utilize vectorized operation, which typically results in a computational performance gain over SGD.

REFERENCES

- [1] Bottou, Léon (1998). "Online Algorithms and Stochastic Approximations". Online Learning and Neural Networks. Cambridge University Press. ISBN 978-0-521-65263-6

- [2] Bottou, Léon. "Large-scale machine learning with SGD." Proceedings of COMPSTAT'2010. Physica-Verlag HD, 2010. 177-186.

- [3] Bottou, Léon. "SGD tricks." Neural Networks: Tricks of the Trade. Springer Berlin Heidelberg, 2012. 421-436.

FITTING A MODEL VIA CLOSED-FORM EQUATIONS VS. GRADIENT DESCENT VS STOCHASTIC GRADIENT DESCENT VS MINI-BATCH LEARNING. WHAT IS THE DIFFERENCE?的更多相关文章

- Python之路-(Django(csrf,中间件,缓存,信号,Model操作,Form操作))

csrf 中间件 缓存 信号 Model操作 Form操作 csrf: 用 django 有多久,我跟 csrf 这个概念打交道就有久了. 每次初始化一个项目时都能看到 django.middlewa ...

- 最大似然估计实例 | Fitting a Model by Maximum Likelihood (MLE)

参考:Fitting a Model by Maximum Likelihood 最大似然估计是用于估计模型参数的,首先我们必须选定一个模型,然后比对有给定的数据集,然后构建一个联合概率函数,因为给定 ...

- django 用model来简化form

django里面的model和form其实有很多地方有相同之处,django本身也支持用model来简化form 一般情况下,我们的form是这样的 from django import forms ...

- Django(八)下:Model操作和Form操作、序列化操作

二.Form操作 一般会创建forms.py文件,单独存放form模块. Form 专门做数据验证,而且非常强大.有以下两个插件: fields :验证(肯定会用的) widgets:生成HTML(有 ...

- Django(八)上:Model操作和Form操作

↑↑↑点上面的”+”号展开目录 Model和Form以及ModelForm简介 Model操作: 创建数据库表结构 操作数据库表 做一部分的验证 Form操作: 数据验证(强大) ModelForm ...

- day23 Model 操作,Form 验证以及序列化操作

Model 操作 1创建数据库表 定制表名: 普通索引: 创建两个普通索引,这样就会生成两个索引文件 联合索引: 为了只生成一个索引文件,才 ...

- [Angular2 Form] Reactive form: valueChanges, update data model only when form is valid

For each formBuild, formControl, formGroup they all have 'valueChanges' prop, which is an Observable ...

- 提高神经网络的学习方式Improving the way neural networks learn

When a golf player is first learning to play golf, they usually spend most of their time developing ...

- [C2P3] Andrew Ng - Machine Learning

##Advice for Applying Machine Learning Applying machine learning in practice is not always straightf ...

随机推荐

- web页面的回流,认识与避免

一.什么是回流? 回流是会导致页面重新渲染的一些元素,从而影响性能. 二.哪些因素会导致回流? 1.调整窗口的大小: 2.改变字体,如果用rem 设置了根目录的字体大小,这样就减少了回流的次数: 3 ...

- linux下c++开发环境安装(eclipse+cdt)

方法一: 此外,众所周知,Eclipse是Java程序,因此很容易就实现了跨平台,也是众所周知,Java的大型程序非常吃内存,即使有512MB内存, 仍然感觉Eclipse的启动速度很慢.个人认为1G ...

- JS实现颜色值的转换

从网上看了个案例,是实现颜色值转换的,就想着自己也写个.网上的案例链接找不到了,这里也就不贴了. JavaScript颜色转换的核心就是进制间的转换. rgba(0,0,0,.4)转换成#000000 ...

- [CareerCup] 10.7 Simplified Search Engine 简单的搜索引擎

10.7 Imagine a web server for a simplified search engine. This system has 100 machines to respond to ...

- [CareerCup] 11.3 Search in Rotated Sorted Array 在旋转有序矩阵中搜索

11.3 Given a sorted array of n integers that has been rotated an unknown number of times, write code ...

- c# 技巧之 泛型方法

泛型 指的是编译时不需要指定具体的参数类型,可以在运行时动态地赋予某一种数据类型的机制. 相信很多人对泛型类并不陌生,像Dictionary,List等结构都属于泛型类.有趣的是,一个函数/方法也可 ...

- 20145314郑凯杰《信息安全系统设计基础》GDB调试32位汇编堆栈分析

20145314郑凯杰<信息安全系统设计基础>GDB调试32位汇编堆栈分析 本篇博客将对第五周博客中的GDB调试32位汇编堆栈进行分析 首先放上以前环境配置的图: 图1: 测试代码: #i ...

- 通通的最后一篇博客(附自制html5平面射击小游戏一枚)

这是我最后一篇博客了,由于本人的人生规划吧,以后应该也写不出什么好的技术文章了,到现在在博客园写了2年, 今天一看,我也有了120个粉丝,好几万的浏览量,感谢大家的支持啊~~ 半年没有写博客了,由于半 ...

- WP&Win10开发: RichTextBlock实现富文本并处理换行

思路:1.构建字典.2.在字符串中匹配字典的key,将匹配到的key转换成对应的value3.将替换后的字符串,转化成xaml形式,加载该xaml以实现富文本. 代码如下: private Parag ...

- unity3d 依赖关系获取预制件任意资源

前段时间策划们想知道UI预制件中使用了哪些音效 N多预制件.N多音效!! 如果纯人工整理的话这还不累成狗? 累成狗不说,还容易出错 所以获取音频剪辑小工具就诞生了,将策划从死亡边缘拉了回来 我们先看一 ...