Cartographer Source Code Reading (6): LocalTrajectoryBuilder and PoseExtrapolator

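LocalTrajectoryBuilder, as the name suggests, builds the local trajectory. Method parameters are omitted from the class diagram below.

Pay attention to three of the classes in it: PoseExtrapolator, RealTimeCorrelativeScanMatcher, and CeresScanMatcher.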

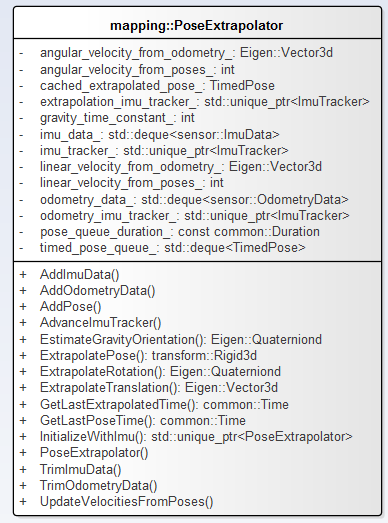

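(1) The PoseExtrapolator class (see the figure below). Both the Node class and the LocalTrajectoryBuilder class hold a PoseExtrapolator object, but the two instances do not seem to be related to each other.

The PoseExtrapolator object in LocalTrajectoryBuilder plays a role similar to a motion model.

(The one in the Node class is probably there to publish pose information, and it extrapolates poses on its own. We set it aside for now.)

A PoseExtrapolator can be created in two ways: through its constructor, or through the IMU-based InitializeWithImu method.

LocalTrajectoryBuilder::InitializeExtrapolator calls the constructor: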

    void LocalTrajectoryBuilder::InitializeExtrapolator(const common::Time time) {
      if (extrapolator_ != nullptr) {
        return;
      }
      // We derive velocities from poses which are at least 1 ms apart for numerical
      // stability. Usually poses known to the extrapolator will be further apart
      // in time and thus the last two are used.
      constexpr double kExtrapolationEstimationTimeSec = 0.001;
      // TODO(gaschler): Consider using InitializeWithImu as 3D does.
      extrapolator_ = common::make_unique<mapping::PoseExtrapolator>(
          ::cartographer::common::FromSeconds(kExtrapolationEstimationTimeSec),
          options_.imu_gravity_time_constant());
      extrapolator_->AddPose(time, transform::Rigid3d::Identity());
    }
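The comment in InitializeExtrapolator says that velocities are derived from poses at least 1 ms apart. As a rough illustration of that idea only, here is a minimal constant-velocity sketch in 2D. `TimedPose2D`, `WrapAngle`, and `ExtrapolateConstantVelocity` are hypothetical names made up for this example; they are not Cartographer types, and the real PoseExtrapolator works on 3D poses and also uses IMU and odometry data.

```cpp
#include <cmath>

// Hypothetical 2D pose with a timestamp in seconds; not a Cartographer type.
struct TimedPose2D {
  double time;
  double x;
  double y;
  double yaw;
};

// Wraps an angle into [-pi, pi].
inline double WrapAngle(double angle) {
  return std::atan2(std::sin(angle), std::cos(angle));
}

// Predicts the pose at `query_time` by assuming the velocity between the two
// most recent poses stays constant. If the poses are closer together than
// `min_dt` seconds, the velocity estimate is considered unreliable and the
// newest pose is returned unchanged (only its timestamp is updated).
inline TimedPose2D ExtrapolateConstantVelocity(const TimedPose2D& older,
                                               const TimedPose2D& newest,
                                               double query_time,
                                               double min_dt = 0.001) {
  const double dt = newest.time - older.time;
  if (dt < min_dt) {
    return TimedPose2D{query_time, newest.x, newest.y, newest.yaw};
  }
  const double vx = (newest.x - older.x) / dt;
  const double vy = (newest.y - older.y) / dt;
  const double omega = WrapAngle(newest.yaw - older.yaw) / dt;
  const double ahead = query_time - newest.time;
  return TimedPose2D{query_time, newest.x + vx * ahead, newest.y + vy * ahead,
                     WrapAngle(newest.yaw + omega * ahead)};
}
```

The `min_dt` guard mirrors the role of kExtrapolationEstimationTimeSec: dividing by a very small time difference would amplify noise in the pose difference.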

The PoseExtrapolator::InitializeWithImu method:

    std::unique_ptr<PoseExtrapolator> PoseExtrapolator::InitializeWithImu(
        const common::Duration pose_queue_duration,
        const double imu_gravity_time_constant, const sensor::ImuData& imu_data) {
      auto extrapolator = common::make_unique<PoseExtrapolator>(
          pose_queue_duration, imu_gravity_time_constant);
      extrapolator->AddImuData(imu_data);
      extrapolator->imu_tracker_ =
          common::make_unique<ImuTracker>(imu_gravity_time_constant, imu_data.time);
      extrapolator->imu_tracker_->AddImuLinearAccelerationObservation(
          imu_data.linear_acceleration);
      extrapolator->imu_tracker_->AddImuAngularVelocityObservation(
          imu_data.angular_velocity);
      extrapolator->imu_tracker_->Advance(imu_data.time);
      extrapolator->AddPose(
          imu_data.time,
          transform::Rigid3d::Rotation(extrapolator->imu_tracker_->orientation()));
      return extrapolator;
    }
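InitializeWithImu builds an ImuTracker from imu_gravity_time_constant and feeds it the first linear acceleration. One plausible way to read that parameter is as the time constant of an exponential low-pass filter on the measured acceleration, which yields a slowly varying gravity direction. The sketch below shows only that filtering idea. `GravityFilter` and `Vec3` are hypothetical names for this example, and the assumption that the tracker filters in exactly this way is mine, not something the listing above confirms.

```cpp
#include <array>
#include <cmath>
#include <cstddef>

using Vec3 = std::array<double, 3>;

// Hypothetical stand-in for the gravity-estimation part of an IMU tracker.
class GravityFilter {
 public:
  explicit GravityFilter(double time_constant)
      : time_constant_(time_constant) {}

  // Blends a new accelerometer reading (taken at `time`, in seconds) into the
  // gravity estimate. The longer the gap since the previous reading, the more
  // weight the new reading gets.
  void AddLinearAcceleration(double time, const Vec3& acceleration) {
    double alpha = 1.0;  // The very first sample is adopted as-is.
    if (initialized_) {
      alpha = 1.0 - std::exp(-(time - last_time_) / time_constant_);
    }
    for (std::size_t i = 0; i < 3; ++i) {
      gravity_[i] = (1.0 - alpha) * gravity_[i] + alpha * acceleration[i];
    }
    last_time_ = time;
    initialized_ = true;
  }

  const Vec3& gravity() const { return gravity_; }

 private:
  double time_constant_;
  double last_time_ = 0.0;
  bool initialized_ = false;
  Vec3 gravity_ = {0.0, 0.0, 1.0};
};
```

With a large time constant, short bursts of acceleration barely move the estimate, while a small time constant lets the estimate follow the accelerometer almost directly.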

The AddImuData and AddOdometryData methods of LocalTrajectoryBuilder need no further explanation.

    void LocalTrajectoryBuilder::AddImuData(const sensor::ImuData& imu_data) {
      CHECK(options_.use_imu_data()) << "An unexpected IMU packet was added.";
      InitializeExtrapolator(imu_data.time);
      extrapolator_->AddImuData(imu_data);
    }

    void LocalTrajectoryBuilder::AddOdometryData(
        const sensor::OdometryData& odometry_data) {
      if (extrapolator_ == nullptr) {
        // Until we've initialized the extrapolator we cannot add odometry data.
        LOG(INFO) << "Extrapolator not yet initialized.";
        return;
      }
      extrapolator_->AddOdometryData(odometry_data);
    }

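Next, look at LocalTrajectoryBuilder::AddRangeData. If IMU data is used, execution goes straight to the extrapolator_ == nullptr check; if not, InitializeExtrapolator(time) is called first.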

    std::unique_ptr<LocalTrajectoryBuilder::MatchingResult>
    LocalTrajectoryBuilder::AddRangeData(const common::Time time,
                                         const sensor::TimedRangeData& range_data) {
      // Initialize extrapolator now if we do not ever use an IMU.
      if (!options_.use_imu_data()) {
        InitializeExtrapolator(time);
      }

      if (extrapolator_ == nullptr) {
        // Until we've initialized the extrapolator with our first IMU message, we
        // cannot compute the orientation of the rangefinder.
        LOG(INFO) << "Extrapolator not yet initialized.";
        return nullptr;
      }

      // The fourth component of each return is its time offset relative to 'time'.
      CHECK(!range_data.returns.empty());
      CHECK_EQ(range_data.returns.back()[3], 0);
      const common::Time time_first_point =
          time + common::FromSeconds(range_data.returns.front()[3]);
      if (time_first_point < extrapolator_->GetLastPoseTime()) {
        LOG(INFO) << "Extrapolator is still initializing.";
        return nullptr;
      }

      std::vector<transform::Rigid3f> range_data_poses;
      range_data_poses.reserve(range_data.returns.size());
      for (const Eigen::Vector4f& hit : range_data.returns) {
        const common::Time time_point = time + common::FromSeconds(hit[3]);
        range_data_poses.push_back(
            extrapolator_->ExtrapolatePose(time_point).cast<float>());
      }

      if (num_accumulated_ == 0) {
        // 'accumulated_range_data_.origin' is uninitialized until the last
        // accumulation.
        accumulated_range_data_ = sensor::RangeData{{}, {}, {}};
      }

      // Drop any returns below the minimum range and convert returns beyond the
      // maximum range into misses.
      for (size_t i = 0; i < range_data.returns.size(); ++i) {
        const Eigen::Vector4f& hit = range_data.returns[i];
        const Eigen::Vector3f origin_in_local =
            range_data_poses[i] * range_data.origin;
        const Eigen::Vector3f hit_in_local = range_data_poses[i] * hit.head<3>();
        const Eigen::Vector3f delta = hit_in_local - origin_in_local;
        const float range = delta.norm();
        if (range >= options_.min_range()) {
          if (range <= options_.max_range()) {
            accumulated_range_data_.returns.push_back(hit_in_local);
          } else {
            accumulated_range_data_.misses.push_back(
                origin_in_local +
                options_.missing_data_ray_length() / range * delta);
          }
        }
      }
      ++num_accumulated_;

      if (num_accumulated_ >= options_.num_accumulated_range_data()) {
        num_accumulated_ = 0;
        const transform::Rigid3d gravity_alignment = transform::Rigid3d::Rotation(
            extrapolator_->EstimateGravityOrientation(time));
        accumulated_range_data_.origin =
            range_data_poses.back() * range_data.origin;
        return AddAccumulatedRangeData(
            time,
            TransformToGravityAlignedFrameAndFilter(
                gravity_alignment.cast<float>() * range_data_poses.back().inverse(),
                accumulated_range_data_),
            gravity_alignment);
      }
      return nullptr;
    }
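The range filtering loop in AddRangeData can be looked at in isolation. The following is a minimal sketch of the same rule without Eigen: returns closer than the minimum range are dropped, returns within the maximum range are kept, and anything farther is turned into a miss placed at a fixed distance along the same ray. `Point3`, `FilteredRanges`, and `ClassifyHits` are hypothetical names for this example, and all points are assumed to be expressed in one common frame already.

```cpp
#include <cmath>
#include <vector>

// Hypothetical plain 3D point; the original code uses Eigen::Vector3f.
struct Point3 {
  float x;
  float y;
  float z;
};

struct FilteredRanges {
  std::vector<Point3> returns;  // Hits within [min_range, max_range].
  std::vector<Point3> misses;   // Shortened rays for hits beyond max_range.
};

// Applies the min/max range rule to every hit, measured from `origin`.
inline FilteredRanges ClassifyHits(const Point3& origin,
                                   const std::vector<Point3>& hits,
                                   float min_range, float max_range,
                                   float missing_data_ray_length) {
  FilteredRanges result;
  for (const Point3& hit : hits) {
    const Point3 delta{hit.x - origin.x, hit.y - origin.y, hit.z - origin.z};
    const float range = std::sqrt(delta.x * delta.x + delta.y * delta.y +
                                  delta.z * delta.z);
    if (range < min_range) {
      continue;  // Too close to the sensor: drop the return entirely.
    }
    if (range <= max_range) {
      result.returns.push_back(hit);
    } else {
      // Keep the direction but shorten the ray, so that only free space along
      // the first part of the ray is recorded.
      const float scale = missing_data_ray_length / range;
      result.misses.push_back(Point3{origin.x + scale * delta.x,
                                     origin.y + scale * delta.y,
                                     origin.z + scale * delta.z});
    }
  }
  return result;
}
```

For example, with a minimum range of 0.1, a maximum range of 30 and a missing-data ray length of 5, a hit 40 m away along the x axis becomes a miss at x = 5.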

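Next comes LocalTrajectoryBuilder::AddAccumulatedRangeData, shown below. It takes three parameters:

const common::Time time, const sensor::RangeData& gravity_aligned_range_data, const transform::Rigid3d& gravity_alignment

These are the timestamp, the gravity-aligned range data, and the gravity alignment transform.

Note that the code below first runs scan matching through the ScanMatch method and then calls extrapolator_->AddPose():

AddPose is executed after every successful scan match.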

    std::unique_ptr<LocalTrajectoryBuilder::MatchingResult>
    LocalTrajectoryBuilder::AddAccumulatedRangeData(
        const common::Time time,
        const sensor::RangeData& gravity_aligned_range_data,
        const transform::Rigid3d& gravity_alignment) {
      if (gravity_aligned_range_data.returns.empty()) {
        LOG(WARNING) << "Dropped empty horizontal range data.";
        return nullptr;
      }

      // Computes a gravity aligned pose prediction.
      const transform::Rigid3d non_gravity_aligned_pose_prediction =
          extrapolator_->ExtrapolatePose(time);
      const transform::Rigid2d pose_prediction = transform::Project2D(
          non_gravity_aligned_pose_prediction * gravity_alignment.inverse());

      // local map frame <- gravity-aligned frame
      std::unique_ptr<transform::Rigid2d> pose_estimate_2d =
          ScanMatch(time, pose_prediction, gravity_aligned_range_data);
      if (pose_estimate_2d == nullptr) {
        LOG(WARNING) << "Scan matching failed.";
        return nullptr;
      }
      const transform::Rigid3d pose_estimate =
          transform::Embed3D(*pose_estimate_2d) * gravity_alignment;
      extrapolator_->AddPose(time, pose_estimate);

      sensor::RangeData range_data_in_local =
          TransformRangeData(gravity_aligned_range_data,
                             transform::Embed3D(pose_estimate_2d->cast<float>()));
      std::unique_ptr<InsertionResult> insertion_result =
          InsertIntoSubmap(time, range_data_in_local, gravity_aligned_range_data,
                           pose_estimate, gravity_alignment.rotation());
      return common::make_unique<MatchingResult>(
          MatchingResult{time, pose_estimate, std::move(range_data_in_local),
                         std::move(insertion_result)});
    }

    std::unique_ptr<LocalTrajectoryBuilder::InsertionResult>
    LocalTrajectoryBuilder::InsertIntoSubmap(
        const common::Time time, const sensor::RangeData& range_data_in_local,
        const sensor::RangeData& gravity_aligned_range_data,
        const transform::Rigid3d& pose_estimate,
        const Eigen::Quaterniond& gravity_alignment) {
      if (motion_filter_.IsSimilar(time, pose_estimate)) {
        return nullptr;
      }

      // Querying the active submaps must be done here before calling
      // InsertRangeData() since the queried values are valid for next insertion.
      std::vector<std::shared_ptr<const Submap>> insertion_submaps;
      for (const std::shared_ptr<Submap>& submap : active_submaps_.submaps()) {
        insertion_submaps.push_back(submap);
      }
      active_submaps_.InsertRangeData(range_data_in_local);

      sensor::AdaptiveVoxelFilter adaptive_voxel_filter(
          options_.loop_closure_adaptive_voxel_filter_options());
      const sensor::PointCloud filtered_gravity_aligned_point_cloud =
          adaptive_voxel_filter.Filter(gravity_aligned_range_data.returns);

      return common::make_unique<InsertionResult>(InsertionResult{
          std::make_shared<const mapping::TrajectoryNode::Data>(
              mapping::TrajectoryNode::Data{
                  time,
                  gravity_alignment,
                  filtered_gravity_aligned_point_cloud,
                  {},  // 'high_resolution_point_cloud' is only used in 3D.
                  {},  // 'low_resolution_point_cloud' is only used in 3D.
                  {},  // 'rotational_scan_matcher_histogram' is only used in 3D.
                  pose_estimate}),
          std::move(insertion_submaps)});
    }

(2) The RealTimeCorrelativeScanMatcher class performs real-time scan matching using a correlative method.
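To give a feel for what a correlative method means here, the sketch below exhaustively tries every integer translation inside a small search window and keeps the one under which the most scan points land on occupied grid cells. This is a deliberately simplified illustration, not the class's actual implementation: `Cell`, `Offset`, and `BestTranslation` are hypothetical names, the grid is binary rather than a probability grid, and rotation is not searched at all.

```cpp
#include <vector>

// Hypothetical grid cell index of one scan point.
struct Cell {
  int x;
  int y;
};

// A candidate translation in cells together with its score.
struct Offset {
  int dx;
  int dy;
  int score;
};

// Tries every translation in [-window, window] x [-window, window] and returns
// the one that puts the most scan cells onto occupied cells of the grid.
// `occupied[y][x]` is 1 for an occupied cell and 0 otherwise. Scan cells that
// fall outside the grid contribute nothing to the score.
inline Offset BestTranslation(const std::vector<std::vector<int>>& occupied,
                              const std::vector<Cell>& scan, int window) {
  Offset best{0, 0, -1};
  const int height = static_cast<int>(occupied.size());
  for (int dy = -window; dy <= window; ++dy) {
    for (int dx = -window; dx <= window; ++dx) {
      int score = 0;
      for (const Cell& cell : scan) {
        const int x = cell.x + dx;
        const int y = cell.y + dy;
        if (y < 0 || y >= height) continue;
        const std::vector<int>& row = occupied[y];
        if (x < 0 || x >= static_cast<int>(row.size())) continue;
        score += row[x];
      }
      if (score > best.score) {
        best = Offset{dx, dy, score};
      }
    }
  }
  return best;
}
```

Because every candidate is scored independently, the result does not depend on a good initial guess within the window, which is the main appeal of this family of methods before a local optimizer refines the pose.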
