Deploying a K8s 1.9.0 Cluster on Ubuntu Automatically with Kubespray

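Kubespray is a project in the Kubernetes incubator. Its goal is to provide a production-ready Kubernetes deployment solution, and it is built on Ansible playbooks that define the system preparation and Kubernetes cluster deployment tasks. Its main features are:

It can be deployed on AWS, GCE, Azure, OpenStack and bare metal.

It deploys highly available Kubernetes clusters.

It is composable: you choose which network plugin (flannel, calico, canal, weave) to deploy.

It supports several Linux distributions (CoreOS, Debian Jessie, Ubuntu 16.04, CentOS/RHEL7).

Kubespray also makes it quick to scale out a K8s cluster. I ran into quite a few problems during my first installation; the process is described below.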

I. Environment

Host: Ubuntu 16.04, which is also the Ansible control host

kubernetes v1.9.0

etcd v3.2.4

calico v2.6.2

docker 17.09

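II. Preparation

1. Install the latest Ansible. The commands below are for Ubuntu; for other systems see the official installation guide: http://www.ansible.com.cn/docs/intro_installation.html

Add the PPA and install Ansible with the following commands (run as root, or as a regular user with sudo):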

apt-get install software-properties-common

apt-add-repository ppa:ansible/ansible

apt-get update

apt-get install ansible

Configure passwordless SSH from the Ansible host to every node:

ssh-keygen -t rsa

ssh-copy-id 172.28.2.210

ssh-copy-id 172.28.2.211

ssh-copy-id 172.28.2.212

ssh-copy-id 172.28.2.213

2. Download Kubespray from the official repository:

cd /root/

git clone https://github.com/kubernetes-incubator/kubespray.git

3. Install Docker and other dependencies on the VMs

① Install Docker

apt-cache policy docker-engine

apt-get install docker-engine

# Check the version

docker --version

② Packages needed on every server

Install Python 2.7.12 (from the same PPA as in the Ansible install):

apt-add-repository ppa:ansible/ansible

apt-get install python

apt-get install python-netaddr

python -V

Python 2.7.12

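③ On all machines, disable swap and confirm with free -m:

swapoff -a

free -m

Then edit /etc/fstab and comment out the swap entry, so that swap stays off after a reboot.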
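Because swapoff -a only lasts until the next reboot, the fstab edit is what makes the change permanent. Below is a minimal bash sketch of that edit; the function name disable_swap_in_fstab is hypothetical, and it assumes a standard fstab layout where the third field is the filesystem type. It takes the file path as an argument so you can try it on a copy first.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): comment out active swap entries in an
# fstab-format file so swap stays disabled after a reboot.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "$fstab.bak"   # keep a backup next to the original
  # Prefix '#' to every non-comment line whose 3rd field (fs type) is "swap";
  # all other lines, including existing comments, are copied unchanged.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
```

Typical use on a node: `swapoff -a && disable_swap_in_fstab && free -m`.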

④ Configure DNS

Edit /etc/resolv.conf and keep at most 2 nameserver entries:

echo "nameserver 172.16.0.1" >/etc/resolv.conf

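4. Download images

The default images are hosted on gcr.io and quay.io, which cannot be reached from mainland China without a proxy. If you have no proxy, look for the matching images and versions on Docker Hub or Alibaba Cloud.

Alibaba Cloud image search: https://dev.aliyun.com/search.html

Docker Hub: https://hub.docker.com/

① Check and modify the version numbers in the following yml files: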

cd /root/kubespray

grep -r 'v1.8.1' .

grep -r 'Versions' .

② The relevant yml files and their paths:

kubespray/roles/kubernetes-apps/ansible/defaults/main.yml

kubespray/roles/download/defaults/main.yml

kubespray/extra_playbooks/roles/download/defaults/main.yml

kubespray/inventory/group_vars/k8s-cluster.yml

kubespray/roles/dnsmasq/templates/dnsmasq-autoscaler.yml

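The Kubespray source versions used in this article are listed below. Images and versions may have changed by the time you read this; download the Kubespray source from https://github.com/kubernetes-incubator/kubespray, or search the git history and check out the matching version.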

kubespray/roles/kubernetes-apps/ansible/defaults/main.yml

Version-related lines (the leading number is the line number in the file):

2 # Versions

3 kubedns_version: 1.14.7

4 kubednsautoscaler_version: 1.1.2

41 # Dashboard

42 dashboard_enabled: true

43 dashboard_image_repo: gcr.io/google_containers/kubernetes-dashboard-amd64

44 dashboard_image_tag: v1.8.1

45 dashboard_init_image_repo: gcr.io/google_containers/kubernetes-dashboard-init-amd64

46 dashboard_init_image_tag: v1.0.1

kubespray/roles/download/defaults/main.yml

26 # Versions

27 kube_version: v1.9.0

28 kubeadm_version: "{{ kube_version }}"

41 weave_version: 2.0.5

# Checksums

97 kubedns_version: 1.14.7

104 kubednsautoscaler_version: 1.1.2

kubespray/extra_playbooks/roles/download/defaults/main.yml

kubespray/inventory/group_vars/k8s-cluster.yml

23 kube_version: v1.9.0

Modify as needed:

67 kube_network_plugin: calico

90 kube_service_addresses: 10.233.0.0/18

95 kube_pods_subnet: 10.233.64.0/18

kubespray/roles/dnsmasq/templates/*.yml: no changes for now

# Summary of the relevant versions

# Versions

kube_version: v1.9.0

weave_version: 2.0.5

kubednsautoscaler_version: 1.1.2

kubedns_version: 1.14.7

dashboard_image_tag: v1.8.1

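To compare your checkout against the versions summarized above, a small helper can grep the version variables out of the yml files. This is a minimal sketch; the function name show_versions is hypothetical, and it assumes the variables follow the `*_version:` / `*_image_tag:` naming seen in this article.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print version-related variables with
# file name (-H) and line number (-n) so they can be checked at a glance.
show_versions() {
  grep -HnE '^[[:space:]]*[a-z_]+_(version|image_tag):' "$@"
}
```

For example: `cd /root/kubespray && show_versions roles/download/defaults/main.yml roles/kubernetes-apps/ansible/defaults/main.yml inventory/group_vars/k8s-cluster.yml`.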

③ Image tags that need a proxy to download (25 in total):

gcr.io/google_containers/cluster-proportional-autoscaler-amd64:1.1.2

gcr.io/google_containers/pause-amd64:3.0

gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.7

gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.7

gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.7

gcr.io/google_containers/elasticsearch:v2.4.1

gcr.io/google_containers/fluentd-elasticsearch:1.22

gcr.io/google_containers/kibana:v4.6.1

gcr.io/kubernetes-helm/tiller:v2.7.2

gcr.io/google_containers/kubernetes-dashboard-init-amd64:v1.0.1

gcr.io/google_containers/kubernetes-dashboard-amd64:v1.8.1

quay.io/l23network/k8s-netchecker-agent:v1.0

quay.io/l23network/k8s-netchecker-server:v1.0

quay.io/coreos/etcd:v3.2.4

quay.io/coreos/flannel:v0.9.1

quay.io/coreos/flannel-cni:v0.3.0

quay.io/calico/ctl:v1.6.1

quay.io/calico/node:v2.6.2

quay.io/calico/cni:v1.11.0

quay.io/calico/kube-controllers:v1.0.0

quay.io/calico/routereflector:v0.4.0

quay.io/coreos/hyperkube:v1.9.0_coreos.0

quay.io/ant31/kargo:master

quay.io/external_storage/local-volume-provisioner-bootstrap:v1.0.0

quay.io/external_storage/local-volume-provisioner:v1.0.0

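④ Docker Hub images

The corresponding images can be found on Docker Hub, for example:

docker pull googlecontainer/<image-name>:<tag>

Below is the collected list of mirrored images: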

docker pull jiang7865134/gcr.io_google_containers_cluster-proportional-autoscaler-amd64:1.1.2

docker pull jiang7865134/gcr.io_google_containers_pause-amd64:3.0

docker pull jiang7865134/gcr.io_google_containers_k8s-dns-kube-dns-amd64:1.14.7

docker pull jiang7865134/gcr.io_google_containers_k8s-dns-dnsmasq-nanny-amd64:1.14.7

docker pull jiang7865134/gcr.io_google_containers_k8s-dns-sidecar-amd64:1.14.7

docker pull jiang7865134/gcr.io_google_containers_elasticsearch:v2.4.1

docker pull jiang7865134/gcr.io_google_containers_fluentd-elasticsearch:1.22

docker pull jiang7865134/gcr.io_google_containers_kibana:v4.6.1

docker pull jiang7865134/gcr.io_kubernetes-helm_tiller:v2.7.2

docker pull jiang7865134/gcr.io_google_containers_kubernetes-dashboard-init-amd64:v1.0.1

docker pull jiang7865134/gcr.io_google_containers_kubernetes-dashboard-amd64:v1.8.1

docker pull jiang7865134/quay.io_l23network_k8s-netchecker-agent:v1.0

docker pull jiang7865134/quay.io_l23network_k8s-netchecker-server:v1.0

docker pull jiang7865134/quay.io_coreos_etcd:v3.2.4

docker pull jiang7865134/quay.io_coreos_flannel:v0.9.1

docker pull jiang7865134/quay.io_coreos_flannel-cni:v0.3.0

docker pull jiang7865134/quay.io_calico_ctl:v1.6.1

docker pull jiang7865134/quay.io_calico_node:v2.6.2

docker pull jiang7865134/quay.io_calico_cni:v1.11.0

docker pull jiang7865134/quay.io_calico_kube-controllers:v1.0.0

docker pull jiang7865134/quay.io_calico_routereflector:v0.4.0

docker pull jiang7865134/quay.io_coreos_hyperkube:v1.9.0_coreos.0

docker pull jiang7865134/quay.io_ant31_kargo:master

docker pull jiang7865134/quay.io_external_storage_local-volume-provisioner-bootstrap:v1.0.0

docker pull jiang7865134/quay.io_external_storage_local-volume-provisioner:v1.0.0
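The mirror names above follow a mechanical rule: prefix `jiang7865134/` and replace every `/` in the original image name with `_`. The bash sketch below derives a mirror name from an original name and pulls a list of them. The helper names and the MIRROR_USER variable are hypothetical, and the mirror account may no longer host every tag.

```bash
#!/usr/bin/env bash
# Sketch: map an original gcr.io/quay.io image name to the mirror name used
# in this article, and pull mirrors for a list of images read from stdin.
MIRROR_USER="${MIRROR_USER:-jiang7865134}"   # hypothetical override variable

mirror_name() {
  local image="$1"
  # "${image//\//_}" replaces every "/" with "_"; tags contain no "/".
  printf '%s/%s\n' "$MIRROR_USER" "${image//\//_}"
}

pull_mirrors() {
  local image
  while IFS= read -r image; do
    [ -z "$image" ] && continue   # skip blank lines
    docker pull "$(mirror_name "$image")"
  done
}
```

Usage: save the 25 original image names from step ③ to images.txt (one per line) and run `pull_mirrors < images.txt`.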

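⑤ Change the image names in the yml files to the corresponding Docker Hub names

(recommended, because both the master and the node deployments pull these images automatically)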

cd /root/kubespray

grep -r 'gcr.io' .

grep -r 'quay.io' .

sed -i 's#gcr\.io\/google_containers\/#jiang7865134/gcr\.io_google_containers_#g' roles/download/defaults/main.yml

#sed -i 's#gcr\.io\/google_containers\/#jiang7865134/gcr\.io_google_containers_#g' roles/dnsmasq/templates/dnsmasq-autoscaler.yml.j2

sed -i 's#gcr\.io\/google_containers\/#jiang7865134/gcr\.io_google_containers_#g' roles/kubernetes-apps/ansible/defaults/main.yml

sed -i 's#gcr\.io\/kubernetes-helm\/#jiang7865134/gcr\.io_kubernetes-helm_#g' roles/download/defaults/main.yml

sed -i 's#quay\.io\/l23network\/#jiang7865134/quay\.io_l23network_#g' docs/netcheck.md

sed -i 's#quay\.io\/l23network\/#jiang7865134/quay\.io_l23network_#g' roles/download/defaults/main.yml

sed -i 's#quay\.io\/coreos\/#jiang7865134/quay\.io_coreos_#g' roles/download/defaults/main.yml

sed -i 's#quay\.io\/calico\/#jiang7865134/quay\.io_calico_#g' roles/download/defaults/main.yml

sed -i 's#quay\.io\/external_storage\/#jiang7865134/quay\.io_external_storage_#g' roles/kubernetes-apps/local_volume_provisioner/defaults/main.yml

sed -i 's#quay\.io\/ant31\/kargo#jiang7865134/quay\.io_ant31_kargo#g' .gitlab-ci.yml
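The per-namespace sed commands above can be generalized into a single rule that matches the mirror naming. The sketch below is an assumption-laden alternative, not the article's method: it rewrites every `gcr.io/<ns>/` or `quay.io/<ns>/` prefix in the files you pass, so pass only the files you actually want changed. It requires GNU sed, and it is idempotent because rewritten names contain `gcr.io_`/`quay.io_`, which the pattern no longer matches. The helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: rewrite registry prefixes to the mirror naming in the given files.
rewrite_registry_refs() {
  # \1 = registry (gcr.io or quay.io), \2 = namespace; GNU sed -E and -i.
  sed -E -i 's#(gcr\.io|quay\.io)/([A-Za-z0-9_.-]+)/#jiang7865134/\1_\2_#g' "$@"
}

# Print any lines that still reference gcr.io/ or quay.io/ (nothing if clean).
remaining_refs() {
  grep -rnE '(gcr|quay)\.io/' "$@" || true
}
```

For example: `rewrite_registry_refs roles/download/defaults/main.yml && remaining_refs roles/`.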

5. Configure Ansible: the inventory file

Add the following to kubespray/inventory/inventory.cfg:

[all]

master ansible_host=172.28.2.210 ip=172.28.2.210 ansible_user=root

node1 ansible_host=172.28.2.211 ip=172.28.2.211 ansible_user=root

node2 ansible_host=172.28.2.212 ip=172.28.2.212 ansible_user=root

node3 ansible_host=172.28.2.213 ip=172.28.2.213 ansible_user=root

[kube-master]

master

[kube-node]

node1

node2

node3

#By default etcd is installed on 1 host; to use more, modify /root/kubespray/roles/kubernetes/preinstall/tasks/verify-settings.yml

[etcd]

master

#node1

#node2

#node3

[k8s-cluster:children]

kube-node

kube-master
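For larger host lists, the inventory above can be generated rather than typed. This is a minimal bash sketch with a hypothetical helper gen_inventory; it assumes arguments are `name=ip` pairs, that the first host becomes both the kube-master and the single etcd member, that the rest become kube-node, and that Ansible logs in as root, exactly as in the layout above.

```bash
#!/usr/bin/env bash
# Sketch (hypothetical helper): print an inventory.cfg in this article's layout.
gen_inventory() {
  local first="${1%%=*}" pair
  echo "[all]"
  for pair in "$@"; do
    # ${pair%%=*} = host name, ${pair#*=} = IP address
    echo "${pair%%=*} ansible_host=${pair#*=} ip=${pair#*=} ansible_user=root"
  done
  printf '\n[kube-master]\n%s\n\n[kube-node]\n' "$first"
  for pair in "${@:2}"; do        # every host except the first
    echo "${pair%%=*}"
  done
  printf '\n[etcd]\n%s\n\n[k8s-cluster:children]\nkube-node\nkube-master\n' "$first"
}
```

Usage: `gen_inventory master=172.28.2.210 node1=172.28.2.211 node2=172.28.2.212 node3=172.28.2.213 > inventory/inventory.cfg`.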

III. Installation

1. Install

cd /root/kubespray/

ansible-playbook -i inventory/inventory.cfg cluster.yml

2. Troubleshooting

Error 1: caused by the Python version

fatal: [node1]: FAILED! => {"changed": false, "module_stderr": "Shared connection to 172.28.2.211 closed.\r\n", "module_stdout": "/bin/sh: 1: /usr/bin/python: not found\r\n", "msg": "MODULE FAILURE", "rc": 0}

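Analysis:

All nodes run Ubuntu 16.04, whose preinstalled Python is Python 3.5.2, so /usr/bin/python does not exist:

python3 -V

On the master, however, installing Ansible also installed Python 2.7.12: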

python -V

Python 2.7.12

Fix: install Python 2.7.12 on every node, the same way as in the Ansible install:

apt-add-repository ppa:ansible/ansible

apt-get install python

python -V

Python 2.7.12

Error 2: the python-netaddr package is missing

localhost: The ipaddr filter requires python-netaddr be installed on the ansible controller

Fix: install it on the Ansible controller with apt-get install python-netaddr

Error 3: default configuration problem

TASK [kubernetes/preinstall : Stop if even number of etcd hosts]

fatal: [node1]: FAILED! => {

"assertion": "groups.etcd|length is not divisibleby 2",

"changed": false,

"evaluated_to": false

........

Fix 1 (temporary): in the etcd group of kubespray/inventory/inventory.cfg, keep only master (the default has 2 hosts, i.e. localhost and master):

[etcd]

master

The corresponding yml is in the pre-install checks (kubernetes/preinstall):

cd /root/kubespray/roles/kubernetes/preinstall/tasks

grep etcd *

etchosts.yml: {% for item in (groups['k8s-cluster'] + groups['etcd'] + groups['calico-rr']|default([]))|unique -%}{{ hostvars[item]['access_ip'] | default(hostvars[item]['ip'] | default(hostvars[item]['ansible_default_ipv4']['address'])) }}{% if (item != hostvars[item]['ansible_hostname']) %} {{ hostvars[item]['ansible_hostname'] }} {{ hostvars[item]['ansible_hostname'] }}.{{ dns_domain }}{% endif %} {{ item }} {{ item }}.{{ dns_domain }}

verify-settings.yml:- name: Stop if even number of etcd hosts

verify-settings.yml: that: groups.etcd|length is not divisibleby 2

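Fix 2:

Keep the hosts in the etcd group of inventory.cfg unchanged and modify verify-settings.yml instead:

49 that: groups.etcd|length is not divisibleby 5

That is, change the divisor to the number of nodes + 1 = 5. This only bypasses the check: etcd needs a majority of its members for quorum, so an even number of etcd members is still not recommended.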
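Instead of editing the assertion, you can check the etcd group before running the playbook. This bash sketch mirrors the "Stop if even number of etcd hosts" check; the helper names are hypothetical, and it assumes an INI-style inventory with one host per line, where blank lines and lines starting with `#` are ignored.

```bash
#!/usr/bin/env bash
# Sketch: count hosts in the [etcd] group and reject an even count.
etcd_count() {
  awk '
    /^\[/ { in_etcd = ($0 == "[etcd]"); next }   # a section header toggles the flag
    in_etcd && NF && $1 !~ /^#/ { n++ }          # count non-empty, non-comment lines
    END { print n + 0 }
  ' "${1:-inventory/inventory.cfg}"
}

check_etcd_odd() {
  local n
  n=$(etcd_count "$1")
  if [ $((n % 2)) -eq 0 ]; then
    echo "etcd group has $n hosts (even); use an odd number" >&2
    return 1
  fi
  echo "etcd group has $n hosts"
}
```

Usage: `check_etcd_odd inventory/inventory.cfg && ansible-playbook -i inventory/inventory.cfg cluster.yml`.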

Error 4: swap not disabled

TASK [kubernetes/preinstall : Stop if swap enabled] **************************************************************

Thursday 01 February 2018 16:10:27 +0800 (0:00:00.095) 0:00:15.064 *****

fatal: [node1]: FAILED! => {

"assertion": "ansible_swaptotal_mb == 0",

Fix:

The task name TASK [kubernetes/preinstall : Stop if swap enabled] tells you which directory holds the task's yml file:

cd /root/kubespray/roles/kubernetes/preinstall/tasks

grep swap *

verify-settings.yml:- name: Stop if swap enabled

.....

vim verify-settings.yml

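Modify the swap check. After the change, lines 75-79 read as follows (the when condition means the assertion only runs if kubelet_fail_swap_on is true):

75 - name: Stop if swap enabled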

76 assert:

77 that: ansible_swaptotal_mb == 0

78 when: kubelet_fail_swap_on|default(false)

79 ignore_errors: "{{ ignore_assert_errors }}"

Or disable swap on all machines:

swapoff -a

free -m
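To confirm a host would pass the `ansible_swaptotal_mb == 0` assertion before rerunning the playbook, you can read SwapTotal yourself. This is a minimal sketch under the assumption that Ansible's fact roughly reflects SwapTotal in /proc/meminfo; the helper names are hypothetical, and the file path is a parameter for testing.

```bash
#!/usr/bin/env bash
# Sketch: report whether swap is fully off, based on SwapTotal (kB).
swap_total_kb() {
  awk '$1 == "SwapTotal:" { print $2 }' "${1:-/proc/meminfo}"
}

check_swap_off() {
  local kb
  kb=$(swap_total_kb "$1")
  if [ "${kb:-0}" -ne 0 ]; then
    echo "swap still enabled: SwapTotal=${kb} kB" >&2
    return 1
  fi
}
```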

Error 5: caused by the DNS configuration

TASK [docker : check number of nameservers] **********************************************************************

Thursday 01 February 2018 16:46:08 +0800 (0:00:00.091) 0:07:27.328 *****

fatal: [node1]: FAILED! => {"changed": false, "msg": "Too many nameservers. You can relax this check by set docker_dns_servers_strict=no and we will only use the first 3."}

Fix:

cd /root/kubespray/roles/docker/tasks

grep nameserver *

vim set_facts_dns.yml

......

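Cause: /etc/resolv.conf contains too many nameserver entries.

As the message suggests, there are two fixes:

Set the Ansible variable docker_dns_servers_strict=no

or edit /etc/resolv.conf and keep at most 2 nameserver entries: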

echo "nameserver 172.16.0.1" >/etc/resolv.conf
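Before rerunning the playbook you can count the nameserver entries on each node. This bash sketch uses hypothetical helper names and the article's limit of 2 as the default. The assumption behind that limit is that Kubespray adds its own cluster DNS address(es) to the list Docker checks, so the resolv.conf entries must leave room under the limit of 3 mentioned in the error message.

```bash
#!/usr/bin/env bash
# Sketch: count "nameserver" lines in a resolv.conf-format file.
count_nameservers() {
  # grep -c prints 0 but exits 1 when nothing matches; "|| true" keeps status 0.
  grep -cE '^[[:space:]]*nameserver[[:space:]]' "${1:-/etc/resolv.conf}" || true
}

check_nameservers() {
  local file="${1:-/etc/resolv.conf}" max="${2:-2}" n
  n=$(count_nameservers "$file")
  if [ "$n" -gt "$max" ]; then
    echo "$file has $n nameserver entries; keep at most $max" >&2
    return 1
  fi
}
```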

Error 6: playbook default configuration problem

RUNNING HANDLER [docker : Docker | reload docker] ****************************************************************

Thursday 01 February 2018 17:27:02 +0800 (0:00:00.090) 0:01:42.994 *****

fatal: [master]: FAILED! => {"changed": false, "msg": "Unable to restart service docker: Job for docker.service failed because the control process exited with error code. See \"systemctl status docker.service\" and \"journalctl -xe\" for details.\n"}

fatal: [node2]: .....

Investigation:

cd /etc/systemd/system/docker.service.d

Two new configuration files appear here:

docker-dns.conf docker-options.conf

systemctl status docker shows the following error:

Error starting daemon: error initializing graphdriver: /var/lib/docker contains several valid graphdrivers: aufs, overlay2; Please cleanup or explicitly choose storage driver (-s <DRIVER

Edit docker-options.conf (vim docker-options.conf) and add a --storage-driver option (aufs here):

[Service]

Environment="DOCKER_OPTS=--insecure-registry=172.28.2.2:4000 --graph=/var/lib/docker --log-opt max-size=50m --log-opt max-file=5 \

--iptables=false --storage-driver=aufs"

Fix in the playbook (so that redeployments keep this setting):

① Modify the template file

cd /root/kubespray/roles/docker/templates

vim docker-options.conf.j2

[Service]

Environment="DOCKER_OPTS={{ docker_options | default('') }} \

--iptables=false --storage-driver=aufs"

② Modify the playbook yml

vim /root/kubespray/inventory/group_vars/k8s-cluster.yml

136 docker_options: "--insecure-registry={{ kube_service_addresses }} --graph={{ docker_daemon_graph }} {{ docker_log_opts }}"

Change it to

136 docker_options: "--insecure-registry=172.28.2.2:4000 --graph={{ docker_daemon_graph }} {{ docker_log_opts }}"

Prerequisite: first fix the Docker configuration by hand and restart the service, so that Docker runs normally before the deployment:

vim /etc/systemd/system/docker.service.d/docker-options.conf

systemctl daemon-reload

systemctl restart docker
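The error above arises because the Docker root contains data for more than one storage driver. A quick way to see which driver directories exist is sketched below. This only roughly approximates Docker's own detection logic; the helper name and the list of driver names checked are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: list storage-driver directories under the Docker root.
# More than one result (e.g. aufs and overlay2) matches the error above.
existing_graphdrivers() {
  local root="${1:-/var/lib/docker}" d
  for d in aufs btrfs devicemapper overlay overlay2 zfs; do
    [ -d "$root/$d" ] && echo "$d"
  done
  return 0   # the last [ -d ] test may be false; do not leak that status
}
```

Pick one of the listed drivers for `--storage-driver`, normally the one that holds the images you want to keep.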

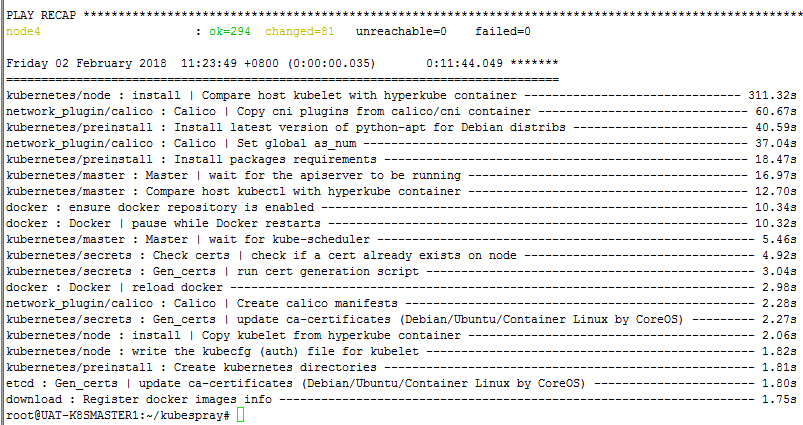

3. Deployment complete

Check the nodes and pods:

kubectl get node

kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-node-4gm72 1/1 Running 11 13h

calico-node-8fkfk 1/1 Running 0 13h

calico-node-fqdwj 1/1 Running 16 13h

calico-node-lpdtx 1/1 Running 15 13h

kube-apiserver-master 1/1 Running 0 13h

.....

kube-dns-79d99cdcd5-5cw5b 0/3 ImagePullBackOff 0 13h

If a pod reports an error, as above, describe it:

kubectl describe pod kube-dns-79d99cdcd5-5cw5b -n kube-system

Find the image that caused the error and pull it manually.

Name: kube-dns-79d99cdcd5-5cw5b

Namespace: kube-system

Node: node3/172.28.2.213

Image: **********
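With many pods, it helps to filter the `kubectl get pod` table down to the unhealthy ones before describing them. This bash sketch (hypothetical helper name) reads the table from stdin and assumes the default column order NAME READY STATUS RESTARTS AGE for a single namespace; with `--all-namespaces` the columns shift.

```bash
#!/usr/bin/env bash
# Sketch: print pods whose STATUS is not Running or whose READY count is
# incomplete (e.g. 0/3).
unhealthy_pods() {
  awk 'NR > 1 {
         split($2, r, "/")                  # READY is "ready/total"
         if ($3 != "Running" || r[1] != r[2]) print $1
       }'
}
```

Usage: `kubectl get pod -n kube-system | unhealthy_pods | xargs -r -n1 kubectl describe pod -n kube-system`.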

root@master:~/kubespray# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 33d v1.9.0+coreos.0

node1 Ready node 33d v1.9.0+coreos.0

node2 Ready node 33d v1.9.0+coreos.0

node3 Ready node 33d v1.9.0+coreos.0

root@master:~/kubespray# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-node-4gm72 1/1 Running 52 33d

calico-node-8fkfk 1/1 Running 0 33d

calico-node-fqdwj 1/1 Running 53 33d

calico-node-lpdtx 1/1 Running 47 33d

kube-apiserver-master 1/1 Running 0 33d

kube-apiserver-node4 1/1 Running 224 18d

kube-controller-manager-master 1/1 Running 0 33d

kube-controller-manager-node4 1/1 Running 5 18d

kube-dns-79d99cdcd5-6vvrw 3/3 Running 0 18d

kube-dns-79d99cdcd5-rkpf2 3/3 Running 0 18d

kube-proxy-master 1/1 Running 0 33d

kube-proxy-node1 1/1 Running 0 32d

kube-proxy-node2 1/1 Running 0 18d

kube-proxy-node3 1/1 Running 0 32d

kube-scheduler-master 1/1 Running 0 33d

kubedns-autoscaler-5564b5585f-7z62x 1/1 Running 0 18d

kubernetes-dashboard-6bbb86ffc4-zmmc2 1/1 Running 0 18d

nginx-proxy-node1 1/1 Running 0 32d

nginx-proxy-node2 1/1 Running 0 18d

nginx-proxy-node3 1/1 Running 0 32d

IV. Scaling out the cluster

1. Edit the inventory file and add the new host node4 to the kube-master or kube-node group (here it goes into both):

[all]

master ansible_host=172.28.2.210 ip=172.28.2.210 ansible_user=root

node1 ansible_host=172.28.2.211 ip=172.28.2.211 ansible_user=root

node2 ansible_host=172.28.2.212 ip=172.28.2.212 ansible_user=root

node3 ansible_host=172.28.2.213 ip=172.28.2.213 ansible_user=root

node4 ansible_host=172.28.2.214 ip=172.28.2.214 ansible_user=root

[kube-master]

master

node4

[kube-node]

node1

node2

node3

node4

[etcd]

master

[k8s-cluster:children]

kube-node

kube-master

2. Install

ssh-copy-id 172.28.2.214

# Install Python 2.7.12

apt-add-repository ppa:ansible/ansible

apt-get install python python-netaddr

# On all machines: disable swap

swapoff -a

echo "nameserver 172.16.0.1" >/etc/resolv.conf

Run:

cd /root/kubespray/

ansible-playbook -i inventory/inventory.cfg cluster.yml --limit node4
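After the limited run finishes, you can wait until the new node reports Ready. This bash sketch (hypothetical helper name) reads `kubectl get nodes` output from stdin and assumes the default columns NAME STATUS ROLES AGE VERSION; a status such as `Ready,SchedulingDisabled` is deliberately not treated as Ready.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if the named node is listed with STATUS "Ready".
node_ready() {
  awk -v n="$1" 'NR > 1 && $1 == n && $2 == "Ready" { found = 1 }
                 END { exit !found }'
}
```

Usage: `until kubectl get nodes | node_ready node4; do sleep 10; done`.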

3. Done

root@master:~/kubespray# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 33d v1.9.0+coreos.0

node1 Ready node 33d v1.9.0+coreos.0

node2 Ready node 33d v1.9.0+coreos.0

node3 Ready node 33d v1.9.0+coreos.0

node4 Ready master,node 33d v1.9.0+coreos.0

root@master:~/kubespray# kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

calico-node-4gm72 1/1 Running 52 33d

calico-node-8fkfk 1/1 Running 0 33d

calico-node-fqdwj 1/1 Running 53 33d

calico-node-lpdtx 1/1 Running 47 33d

calico-node-nq8l2 1/1 Running 42 33d

kube-apiserver-master 1/1 Running 0 33d

kube-apiserver-node4 1/1 Running 224 18d

kube-controller-manager-master 1/1 Running 0 33d

kube-controller-manager-node4 1/1 Running 5 18d

kube-dns-79d99cdcd5-6vvrw 3/3 Running 0 18d

kube-dns-79d99cdcd5-rkpf2 3/3 Running 0 18d

kube-proxy-master 1/1 Running 0 33d

kube-proxy-node1 1/1 Running 0 32d

kube-proxy-node2 1/1 Running 0 18d

kube-proxy-node3 1/1 Running 0 32d

kube-proxy-node4 1/1 Running 0 18d

kube-scheduler-master 1/1 Running 0 33d

kube-scheduler-node4 1/1 Running 3 18d

kubedns-autoscaler-5564b5585f-7z62x 1/1 Running 0 18d

kubernetes-dashboard-6bbb86ffc4-zmmc2 1/1 Running 0 18d

nginx-proxy-node1 1/1 Running 0 32d

nginx-proxy-node2 1/1 Running 0 18d

nginx-proxy-node3 1/1 Running 0 32d

Fixes for new problems (added in 2019):

1. Kubespray is incompatible with Ansible 2.8.0; install Ansible 2.7 instead.

Related error: Ansible: ansible-playbook delegate_to error

## Online install

apt-add-repository ppa:ansible/ansible

apt-get update

## List the available versions

apt-cache madison ansible

## Install a specific version

apt-get install <package>=<version>

## Offline install of Ansible 2.7.5 (note: the 2.8 package requires python3, so it is not recommended here)

wget http://ftp.jaist.ac.jp/debian/pool/main/a/ansible/ansible_2.7.5+dfsg-1~bpo9+1_all.deb

dpkg -i ansible_2.7.5+dfsg-1~bpo9+1_all.deb

ansible --version
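To catch an accidental upgrade to 2.8 before running the playbook, you can compare the installed version. This is a bash sketch with hypothetical helper names; it parses the first line of `ansible --version` (e.g. `ansible 2.7.5`) and compares versions with `sort -V`.

```bash
#!/usr/bin/env bash
# Sketch: guard against Ansible >= 2.8.
ansible_version() {
  ansible --version | awk 'NR == 1 { print $2 }'
}

# version_lt A B: true if version A sorts strictly before version B.
version_lt() {
  [ "$1" != "$2" ] && [ "$(printf '%s\n' "$1" "$2" | sort -V | head -n1)" = "$1" ]
}
```

Usage: `version_lt "$(ansible_version)" 2.8 || echo "Ansible 2.8+ detected; install 2.7.x"`. Running `apt-mark hold ansible` afterwards keeps a later `apt-get upgrade` from moving it to a newer version.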

2. For an offline install (Ubuntu), besides the images and packages installed with apt-get above, you also need to download:

① nginx image: nginx:1.13

② deb packages:

- python-apt

- aufs-tools

- apt-transport-https

- software-properties-common

- ebtables

- python-httplib2

- openssl

- curl

- rsync

- bash-completion

- socat

- unzip

## Download on another server with internet access, then transfer the files

wget http://us.archive.ubuntu.com/ubuntu/pool/main/a/apt/libapt-pkg5.0_1.2.31_amd64.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/main/a/apt/libapt-inst2.0_1.2.31_amd64.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/main/a/apt/apt_1.2.31_amd64.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/main/a/apt/apt-utils_1.2.31_amd64.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/main/p/python-apt/python-apt_1.1.0~beta1ubuntu0.16.04.4_amd64.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/main/a/apt/apt-transport-https_1.2.31_amd64.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/main/s/software-properties/software-properties-common_0.96.20.8_all.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/main/s/software-properties/python3-software-properties_0.96.20.8_all.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/main/e/ebtables/ebtables_2.0.10.4-3.4ubuntu2.16.04.2_amd64.deb

wget http://security.ubuntu.com/ubuntu/pool/main/c/curl/curl_7.47.0-1ubuntu2.13_amd64.deb

wget http://security.ubuntu.com/ubuntu/pool/main/c/curl/libcurl3-gnutls_7.47.0-1ubuntu2.13_amd64.deb

wget http://security.ubuntu.com/ubuntu/pool/main/o/openssl/openssl_1.0.2g-1ubuntu4.15_amd64.deb

wget http://security.ubuntu.com/ubuntu/pool/main/r/rsync/rsync_3.1.1-3ubuntu1.2_amd64.deb

wget http://us.archive.ubuntu.com/ubuntu/pool/universe/s/socat/socat_1.7.3.1-1_amd64.deb

## Install

## libapt-pkg/libapt-inst first, since apt and python-apt depend on them

dpkg -i libapt-pkg5.0_1.2.31_amd64.deb

dpkg -i libapt-inst2.0_1.2.31_amd64.deb

dpkg -i apt_1.2.31_amd64.deb

dpkg -i apt-utils_1.2.31_amd64.deb

dpkg -i apt-transport-https_1.2.31_amd64.deb

dpkg -i python-apt_1.1.0~beta1ubuntu0.16.04.4_amd64.deb

dpkg -i python3-software-properties_0.96.20.8_all.deb

dpkg -i software-properties-common_0.96.20.8_all.deb

dpkg -i ebtables_2.0.10.4-3.4ubuntu2.16.04.2_amd64.deb

dpkg -i libcurl3-gnutls_7.47.0-1ubuntu2.13_amd64.deb

dpkg -i curl_7.47.0-1ubuntu2.13_amd64.deb

dpkg -i openssl_1.0.2g-1ubuntu4.15_amd64.deb

dpkg -i rsync_3.1.1-3ubuntu1.2_amd64.deb

dpkg -i socat_1.7.3.1-1_amd64.deb
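When the packages are carried to an offline machine, it is easy to miss a file. This bash sketch, which uses hypothetical helper names and a file list copied from the wget commands above, first checks that every .deb is present. It then installs them in a single `dpkg -i` call, so dependencies among these packages are satisfied within the same run regardless of order. Dependencies outside this set still have to be present on the machine.

```bash
#!/usr/bin/env bash
# Sketch: verify the downloaded .deb files, then install them together.
DEBS=(
  'libapt-pkg5.0_1.2.31_amd64.deb'
  'libapt-inst2.0_1.2.31_amd64.deb'
  'apt_1.2.31_amd64.deb'
  'apt-utils_1.2.31_amd64.deb'
  'apt-transport-https_1.2.31_amd64.deb'
  'python-apt_1.1.0~beta1ubuntu0.16.04.4_amd64.deb'
  'python3-software-properties_0.96.20.8_all.deb'
  'software-properties-common_0.96.20.8_all.deb'
  'ebtables_2.0.10.4-3.4ubuntu2.16.04.2_amd64.deb'
  'libcurl3-gnutls_7.47.0-1ubuntu2.13_amd64.deb'
  'curl_7.47.0-1ubuntu2.13_amd64.deb'
  'openssl_1.0.2g-1ubuntu4.15_amd64.deb'
  'rsync_3.1.1-3ubuntu1.2_amd64.deb'
  'socat_1.7.3.1-1_amd64.deb'
)

# Print the names of expected files that are missing from a directory.
missing_debs() {
  local dir="${1:-.}" f
  for f in "${DEBS[@]}"; do
    [ -f "$dir/$f" ] || echo "$f"
  done
  return 0
}

install_debs() {
  local dir="${1:-.}" missing
  missing=$(missing_debs "$dir")
  if [ -n "$missing" ]; then
    printf 'missing:\n%s\n' "$missing" >&2
    return 1
  fi
  dpkg -i "${DEBS[@]/#/$dir/}"   # prefix every file name with "$dir/"
}
```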

3. Other recommended packages

apt-get install python-pip

pip install --upgrade jinja2

4. Logs for troubleshooting the installation

vim /var/log/syslog

## Search for "Pull image" to see whether any image download failed
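Rather than scrolling through syslog in vim, you can filter it. This bash sketch uses a hypothetical helper name, and its match patterns are assumptions: the exact kubelet/dockerd wording varies between versions, so widen the patterns if nothing matches.

```bash
#!/usr/bin/env bash
# Sketch: show image-pull related log lines that look like failures.
pull_errors() {
  grep -i 'pull' "${1:-/var/log/syslog}" | grep -iE 'fail|error|backoff' || true
}
```

Usage: `pull_errors | tail -n 20` on the node where a pod is stuck in ImagePullBackOff.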

## Other issues
