Elasticsearch 6 Source Code Analysis (2): Modular Management

Most components in Elasticsearch are managed as modules: instances are registered with, and later obtained from, a Guice Injector.
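The register-then-look-up idea can be illustrated with a tiny stand-in written in plain Java. This is only a sketch under stated assumptions: `MiniBinder`, `MiniInjector` and `GreetingService` are hypothetical names invented for illustration, not Guice or Elasticsearch classes, and real Guice offers much more (constructor injection, providers, scopes).

```java
import java.util.HashMap;
import java.util.Map;

final class MiniInjectorDemo {

    /** Hypothetical stand-in for a binder: records type -> instance bindings. */
    static final class MiniBinder {
        private final Map<Class<?>, Object> bindings = new HashMap<>();

        /** Roughly the shape of bind(type).toInstance(instance), folded into one call. */
        <T> void bindInstance(Class<T> type, T instance) {
            if (bindings.putIfAbsent(type, instance) != null) {
                throw new IllegalStateException("already bound: " + type.getName());
            }
        }

        /** Freezes the current bindings into a read-only lookup object. */
        MiniInjector createInjector() {
            return new MiniInjector(new HashMap<>(bindings));
        }
    }

    /** Hypothetical stand-in for an injector: looks instances up by type. */
    static final class MiniInjector {
        private final Map<Class<?>, Object> bindings;

        MiniInjector(Map<Class<?>, Object> bindings) {
            this.bindings = bindings;
        }

        <T> T getInstance(Class<T> type) {
            Object instance = bindings.get(type);
            if (instance == null) {
                throw new IllegalStateException("no binding for " + type.getName());
            }
            return type.cast(instance);
        }
    }

    /** Hypothetical component used only to have something to bind. */
    static final class GreetingService {
        private final String nodeName;

        GreetingService(String nodeName) {
            this.nodeName = nodeName;
        }

        String greet() {
            return "hello from " + nodeName;
        }
    }

    public static void main(String[] args) {
        MiniBinder binder = new MiniBinder();
        binder.bindInstance(GreetingService.class, new GreetingService("node-1"));
        MiniInjector injector = binder.createInjector();
        System.out.println(injector.getInstance(GreetingService.class).greet());
    }
}
```

Any code holding the injector can then ask for a component by type instead of receiving it through a long constructor chain.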

The wiring is done in the constructor of Node:

/**

* Constructs a node

*

* @param environment the environment for this node

* @param classpathPlugins the plugins to be loaded from the classpath

* @param forbidPrivateIndexSettings whether or not private index settings are forbidden when creating an index; this is used in the

* test framework for tests that rely on being able to set private settings

*/

protected Node(

final Environment environment, Collection<Class<? extends Plugin>> classpathPlugins, boolean forbidPrivateIndexSettings) {

logger = LogManager.getLogger(Node.class);

final List<Closeable> resourcesToClose = new ArrayList<>(); // register everything we need to release in the case of an error

boolean success = false;

try {

originalSettings = environment.settings();

Settings tmpSettings = Settings.builder().put(environment.settings())

.put(Client.CLIENT_TYPE_SETTING_S.getKey(), CLIENT_TYPE).build();

nodeEnvironment = new NodeEnvironment(tmpSettings, environment);

resourcesToClose.add(nodeEnvironment);

logger.info("node name [{}], node ID [{}]",

NODE_NAME_SETTING.get(tmpSettings), nodeEnvironment.nodeId());

final JvmInfo jvmInfo = JvmInfo.jvmInfo();

logger.info(

"version[{}], pid[{}], build[{}/{}/{}/{}], OS[{}/{}/{}], JVM[{}/{}/{}/{}]",

Version.displayVersion(Version.CURRENT, Build.CURRENT.isSnapshot()),

jvmInfo.pid(),

Build.CURRENT.flavor().displayName(),

Build.CURRENT.type().displayName(),

Build.CURRENT.shortHash(),

Build.CURRENT.date(),

Constants.OS_NAME,

Constants.OS_VERSION,

Constants.OS_ARCH,

Constants.JVM_VENDOR,

Constants.JVM_NAME,

Constants.JAVA_VERSION,

Constants.JVM_VERSION);

logger.info("JVM arguments {}", Arrays.toString(jvmInfo.getInputArguments()));

warnIfPreRelease(Version.CURRENT, Build.CURRENT.isSnapshot(), logger);

if (logger.isDebugEnabled()) {

logger.debug("using config [{}], data [{}], logs [{}], plugins [{}]",

environment.configFile(), Arrays.toString(environment.dataFiles()), environment.logsFile(), environment.pluginsFile());

}

this.pluginsService = new PluginsService(tmpSettings, environment.configFile(), environment.modulesFile(), environment.pluginsFile(), classpathPlugins);

this.settings = pluginsService.updatedSettings();

localNodeFactory = new LocalNodeFactory(settings, nodeEnvironment.nodeId());

// create the environment based on the finalized (processed) view of the settings

// this is just to makes sure that people get the same settings, no matter where they ask them from

this.environment = new Environment(this.settings, environment.configFile());

Environment.assertEquivalent(environment, this.environment);

final List<ExecutorBuilder<?>> executorBuilders = pluginsService.getExecutorBuilders(settings);

final ThreadPool threadPool = new ThreadPool(settings, executorBuilders.toArray(new ExecutorBuilder[0]));

resourcesToClose.add(() -> ThreadPool.terminate(threadPool, 10, TimeUnit.SECONDS));

// adds the context to the DeprecationLogger so that it does not need to be injected everywhere

DeprecationLogger.setThreadContext(threadPool.getThreadContext());

resourcesToClose.add(() -> DeprecationLogger.removeThreadContext(threadPool.getThreadContext()));

final List<Setting<?>> additionalSettings = new ArrayList<>(pluginsService.getPluginSettings());

final List<String> additionalSettingsFilter = new ArrayList<>(pluginsService.getPluginSettingsFilter());

for (final ExecutorBuilder<?> builder : threadPool.builders()) {

additionalSettings.addAll(builder.getRegisteredSettings());

}

client = new NodeClient(settings, threadPool);

final ResourceWatcherService resourceWatcherService = new ResourceWatcherService(settings, threadPool);

final ScriptModule scriptModule = new ScriptModule(settings, pluginsService.filterPlugins(ScriptPlugin.class));

AnalysisModule analysisModule = new AnalysisModule(this.environment, pluginsService.filterPlugins(AnalysisPlugin.class));

// this is as early as we can validate settings at this point. we already pass them to ScriptModule as well as ThreadPool

// so we might be late here already

final Set<SettingUpgrader<?>> settingsUpgraders = pluginsService.filterPlugins(Plugin.class)

.stream()

.map(Plugin::getSettingUpgraders)

.flatMap(List::stream)

.collect(Collectors.toSet());

final SettingsModule settingsModule =

new SettingsModule(this.settings, additionalSettings, additionalSettingsFilter, settingsUpgraders);

scriptModule.registerClusterSettingsListeners(settingsModule.getClusterSettings());

resourcesToClose.add(resourceWatcherService);

final NetworkService networkService = new NetworkService(

getCustomNameResolvers(pluginsService.filterPlugins(DiscoveryPlugin.class)));

List<ClusterPlugin> clusterPlugins = pluginsService.filterPlugins(ClusterPlugin.class);

final ClusterService clusterService = new ClusterService(settings, settingsModule.getClusterSettings(), threadPool);

clusterService.addStateApplier(scriptModule.getScriptService());

resourcesToClose.add(clusterService);

final IngestService ingestService = new IngestService(clusterService, threadPool, this.environment,

scriptModule.getScriptService(), analysisModule.getAnalysisRegistry(), pluginsService.filterPlugins(IngestPlugin.class));

final DiskThresholdMonitor listener = new DiskThresholdMonitor(settings, clusterService::state,

clusterService.getClusterSettings(), client);

final ClusterInfoService clusterInfoService = newClusterInfoService(settings, clusterService, threadPool, client,

listener::onNewInfo);

final UsageService usageService = new UsageService(settings);

ModulesBuilder modules = new ModulesBuilder();

// plugin modules must be added here, before others or we can get crazy injection errors...

for (Module pluginModule : pluginsService.createGuiceModules()) {

modules.add(pluginModule);

}

final MonitorService monitorService = new MonitorService(settings, nodeEnvironment, threadPool, clusterInfoService);

ClusterModule clusterModule = new ClusterModule(settings, clusterService, clusterPlugins, clusterInfoService);

modules.add(clusterModule);

IndicesModule indicesModule = new IndicesModule(pluginsService.filterPlugins(MapperPlugin.class));

modules.add(indicesModule);

SearchModule searchModule = new SearchModule(settings, false, pluginsService.filterPlugins(SearchPlugin.class));

CircuitBreakerService circuitBreakerService = createCircuitBreakerService(settingsModule.getSettings(),

settingsModule.getClusterSettings());

resourcesToClose.add(circuitBreakerService);

modules.add(new GatewayModule());

PageCacheRecycler pageCacheRecycler = createPageCacheRecycler(settings);

BigArrays bigArrays = createBigArrays(pageCacheRecycler, circuitBreakerService);

resourcesToClose.add(bigArrays);

modules.add(settingsModule);

List<NamedWriteableRegistry.Entry> namedWriteables = Stream.of(

NetworkModule.getNamedWriteables().stream(),

indicesModule.getNamedWriteables().stream(),

searchModule.getNamedWriteables().stream(),

pluginsService.filterPlugins(Plugin.class).stream()

.flatMap(p -> p.getNamedWriteables().stream()),

ClusterModule.getNamedWriteables().stream())

.flatMap(Function.identity()).collect(Collectors.toList());

final NamedWriteableRegistry namedWriteableRegistry = new NamedWriteableRegistry(namedWriteables);

NamedXContentRegistry xContentRegistry = new NamedXContentRegistry(Stream.of(

NetworkModule.getNamedXContents().stream(),

indicesModule.getNamedXContents().stream(),

searchModule.getNamedXContents().stream(),

pluginsService.filterPlugins(Plugin.class).stream()

.flatMap(p -> p.getNamedXContent().stream()),

ClusterModule.getNamedXWriteables().stream())

.flatMap(Function.identity()).collect(toList()));

modules.add(new RepositoriesModule(this.environment, pluginsService.filterPlugins(RepositoryPlugin.class), xContentRegistry));

final MetaStateService metaStateService = new MetaStateService(settings, nodeEnvironment, xContentRegistry);

// collect engine factory providers from server and from plugins

final Collection<EnginePlugin> enginePlugins = pluginsService.filterPlugins(EnginePlugin.class);

final Collection<Function<IndexSettings, Optional<EngineFactory>>> engineFactoryProviders =

Stream.concat(

indicesModule.getEngineFactories().stream(),

enginePlugins.stream().map(plugin -> plugin::getEngineFactory))

.collect(Collectors.toList());

final Map<String, Function<IndexSettings, IndexStore>> indexStoreFactories =

pluginsService.filterPlugins(IndexStorePlugin.class)

.stream()

.map(IndexStorePlugin::getIndexStoreFactories)

.flatMap(m -> m.entrySet().stream())

.collect(Collectors.toMap(Map.Entry::getKey, Map.Entry::getValue));

final IndicesService indicesService =

new IndicesService(settings, pluginsService, nodeEnvironment, xContentRegistry, analysisModule.getAnalysisRegistry(),

clusterModule.getIndexNameExpressionResolver(), indicesModule.getMapperRegistry(), namedWriteableRegistry,

threadPool, settingsModule.getIndexScopedSettings(), circuitBreakerService, bigArrays,

scriptModule.getScriptService(), client, metaStateService, engineFactoryProviders, indexStoreFactories);

final AliasValidator aliasValidator = new AliasValidator(settings);

final MetaDataCreateIndexService metaDataCreateIndexService = new MetaDataCreateIndexService(

settings,

clusterService,

indicesService,

clusterModule.getAllocationService(),

aliasValidator,

environment,

settingsModule.getIndexScopedSettings(),

threadPool,

xContentRegistry,

forbidPrivateIndexSettings);

Collection<Object> pluginComponents = pluginsService.filterPlugins(Plugin.class).stream()

.flatMap(p -> p.createComponents(client, clusterService, threadPool, resourceWatcherService,

scriptModule.getScriptService(), xContentRegistry, environment, nodeEnvironment,

namedWriteableRegistry).stream())

.collect(Collectors.toList());

ActionModule actionModule = new ActionModule(false, settings, clusterModule.getIndexNameExpressionResolver(),

settingsModule.getIndexScopedSettings(), settingsModule.getClusterSettings(), settingsModule.getSettingsFilter(),

threadPool, pluginsService.filterPlugins(ActionPlugin.class), client, circuitBreakerService, usageService);

modules.add(actionModule);

final RestController restController = actionModule.getRestController();

final NetworkModule networkModule = new NetworkModule(settings, false, pluginsService.filterPlugins(NetworkPlugin.class),

threadPool, bigArrays, pageCacheRecycler, circuitBreakerService, namedWriteableRegistry, xContentRegistry,

networkService, restController);

Collection<UnaryOperator<Map<String, MetaData.Custom>>> customMetaDataUpgraders =

pluginsService.filterPlugins(Plugin.class).stream()

.map(Plugin::getCustomMetaDataUpgrader)

.collect(Collectors.toList());

Collection<UnaryOperator<Map<String, IndexTemplateMetaData>>> indexTemplateMetaDataUpgraders =

pluginsService.filterPlugins(Plugin.class).stream()

.map(Plugin::getIndexTemplateMetaDataUpgrader)

.collect(Collectors.toList());

Collection<UnaryOperator<IndexMetaData>> indexMetaDataUpgraders = pluginsService.filterPlugins(Plugin.class).stream()

.map(Plugin::getIndexMetaDataUpgrader).collect(Collectors.toList());

final MetaDataUpgrader metaDataUpgrader = new MetaDataUpgrader(customMetaDataUpgraders, indexTemplateMetaDataUpgraders);

final MetaDataIndexUpgradeService metaDataIndexUpgradeService = new MetaDataIndexUpgradeService(settings, xContentRegistry,

indicesModule.getMapperRegistry(), settingsModule.getIndexScopedSettings(), indexMetaDataUpgraders);

final GatewayMetaState gatewayMetaState = new GatewayMetaState(settings, nodeEnvironment, metaStateService,

metaDataIndexUpgradeService, metaDataUpgrader);

new TemplateUpgradeService(settings, client, clusterService, threadPool, indexTemplateMetaDataUpgraders);

final Transport transport = networkModule.getTransportSupplier().get();

Set<String> taskHeaders = Stream.concat(

pluginsService.filterPlugins(ActionPlugin.class).stream().flatMap(p -> p.getTaskHeaders().stream()),

Stream.of(Task.X_OPAQUE_ID)

).collect(Collectors.toSet());

final TransportService transportService = newTransportService(settings, transport, threadPool,

networkModule.getTransportInterceptor(), localNodeFactory, settingsModule.getClusterSettings(), taskHeaders);

final ResponseCollectorService responseCollectorService = new ResponseCollectorService(this.settings, clusterService);

final SearchTransportService searchTransportService = new SearchTransportService(settings, transportService,

SearchExecutionStatsCollector.makeWrapper(responseCollectorService));

final HttpServerTransport httpServerTransport = newHttpTransport(networkModule);

final DiscoveryModule discoveryModule = new DiscoveryModule(this.settings, threadPool, transportService, namedWriteableRegistry,

networkService, clusterService.getMasterService(), clusterService.getClusterApplierService(),

clusterService.getClusterSettings(), pluginsService.filterPlugins(DiscoveryPlugin.class),

clusterModule.getAllocationService(), environment.configFile());

this.nodeService = new NodeService(settings, threadPool, monitorService, discoveryModule.getDiscovery(),

transportService, indicesService, pluginsService, circuitBreakerService, scriptModule.getScriptService(),

httpServerTransport, ingestService, clusterService, settingsModule.getSettingsFilter(), responseCollectorService,

searchTransportService);

final SearchService searchService = newSearchService(clusterService, indicesService,

threadPool, scriptModule.getScriptService(), bigArrays, searchModule.getFetchPhase(),

responseCollectorService);

final List<PersistentTasksExecutor<?>> tasksExecutors = pluginsService

.filterPlugins(PersistentTaskPlugin.class).stream()

.map(p -> p.getPersistentTasksExecutor(clusterService, threadPool, client))

.flatMap(List::stream)

.collect(toList());

final PersistentTasksExecutorRegistry registry = new PersistentTasksExecutorRegistry(settings, tasksExecutors);

final PersistentTasksClusterService persistentTasksClusterService =

new PersistentTasksClusterService(settings, registry, clusterService);

final PersistentTasksService persistentTasksService = new PersistentTasksService(settings, clusterService, threadPool, client);

modules.add(b -> {

b.bind(Node.class).toInstance(this);

b.bind(NodeService.class).toInstance(nodeService);

b.bind(NamedXContentRegistry.class).toInstance(xContentRegistry);

b.bind(PluginsService.class).toInstance(pluginsService);

b.bind(Client.class).toInstance(client);

b.bind(NodeClient.class).toInstance(client);

b.bind(Environment.class).toInstance(this.environment);

b.bind(ThreadPool.class).toInstance(threadPool);

b.bind(NodeEnvironment.class).toInstance(nodeEnvironment);

b.bind(ResourceWatcherService.class).toInstance(resourceWatcherService);

b.bind(CircuitBreakerService.class).toInstance(circuitBreakerService);

b.bind(BigArrays.class).toInstance(bigArrays);

b.bind(ScriptService.class).toInstance(scriptModule.getScriptService());

b.bind(AnalysisRegistry.class).toInstance(analysisModule.getAnalysisRegistry());

b.bind(IngestService.class).toInstance(ingestService);

b.bind(UsageService.class).toInstance(usageService);

b.bind(NamedWriteableRegistry.class).toInstance(namedWriteableRegistry);

b.bind(MetaDataUpgrader.class).toInstance(metaDataUpgrader);

b.bind(MetaStateService.class).toInstance(metaStateService);

b.bind(IndicesService.class).toInstance(indicesService);

b.bind(AliasValidator.class).toInstance(aliasValidator);

b.bind(MetaDataCreateIndexService.class).toInstance(metaDataCreateIndexService);

b.bind(SearchService.class).toInstance(searchService);

b.bind(SearchTransportService.class).toInstance(searchTransportService);

b.bind(SearchPhaseController.class).toInstance(new SearchPhaseController(settings,

searchService::createReduceContext));

b.bind(Transport.class).toInstance(transport);

b.bind(TransportService.class).toInstance(transportService);

b.bind(NetworkService.class).toInstance(networkService);

b.bind(UpdateHelper.class).toInstance(new UpdateHelper(settings, scriptModule.getScriptService()));

b.bind(MetaDataIndexUpgradeService.class).toInstance(metaDataIndexUpgradeService);

b.bind(ClusterInfoService.class).toInstance(clusterInfoService);

b.bind(GatewayMetaState.class).toInstance(gatewayMetaState);

b.bind(Discovery.class).toInstance(discoveryModule.getDiscovery());

{

RecoverySettings recoverySettings = new RecoverySettings(settings, settingsModule.getClusterSettings());

processRecoverySettings(settingsModule.getClusterSettings(), recoverySettings);

b.bind(PeerRecoverySourceService.class).toInstance(new PeerRecoverySourceService(settings, transportService,

indicesService, recoverySettings));

b.bind(PeerRecoveryTargetService.class).toInstance(new PeerRecoveryTargetService(settings, threadPool,

transportService, recoverySettings, clusterService));

}

b.bind(HttpServerTransport.class).toInstance(httpServerTransport);

pluginComponents.stream().forEach(p -> b.bind((Class) p.getClass()).toInstance(p));

b.bind(PersistentTasksService.class).toInstance(persistentTasksService);

b.bind(PersistentTasksClusterService.class).toInstance(persistentTasksClusterService);

b.bind(PersistentTasksExecutorRegistry.class).toInstance(registry);

}

);

injector = modules.createInjector();

// TODO hack around circular dependencies problems in AllocationService

clusterModule.getAllocationService().setGatewayAllocator(injector.getInstance(GatewayAllocator.class));

List<LifecycleComponent> pluginLifecycleComponents = pluginComponents.stream()

.filter(p -> p instanceof LifecycleComponent)

.map(p -> (LifecycleComponent) p).collect(Collectors.toList());

pluginLifecycleComponents.addAll(pluginsService.getGuiceServiceClasses().stream()

.map(injector::getInstance).collect(Collectors.toList()));

resourcesToClose.addAll(pluginLifecycleComponents);

this.pluginLifecycleComponents = Collections.unmodifiableList(pluginLifecycleComponents);

client.initialize(injector.getInstance(new Key<Map<Action, TransportAction>>() {}),

() -> clusterService.localNode().getId(), transportService.getRemoteClusterService());

logger.debug("initializing HTTP handlers ...");

actionModule.initRestHandlers(() -> clusterService.state().nodes());

logger.info("initialized");

success = true;

} catch (IOException ex) {

throw new ElasticsearchException("failed to bind service", ex);

} finally {

if (!success) {

IOUtils.closeWhileHandlingException(resourcesToClose);

}

}

}
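Besides the Guice wiring, the constructor above follows a defensive pattern: every closeable component is added to `resourcesToClose` as soon as it is created, and the `finally` block releases them if `success` was never set. The following is a minimal sketch of that pattern using only the JDK. `StartupCleanupDemo`, `start` and `closeQuietly` are hypothetical names, and `closeQuietly` is a simplified stand-in for the `IOUtils.closeWhileHandlingException` call seen in the source, not its actual implementation. Unlike the real constructor, which wraps and rethrows the exception, this demo records the failure in a log so the result can be inspected.

```java
import java.io.Closeable;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

final class StartupCleanupDemo {

    /** Creates two fake components in order; on failure, closes whatever was registered so far. */
    static List<String> start(boolean failHalfway) {
        List<String> log = new ArrayList<>();
        List<Closeable> resourcesToClose = new ArrayList<>(); // everything to release on error
        boolean success = false;
        try {
            resourcesToClose.add(() -> log.add("closed nodeEnvironment"));
            log.add("created nodeEnvironment");

            if (failHalfway) {
                throw new IllegalStateException("simulated failure");
            }

            resourcesToClose.add(() -> log.add("closed threadPool"));
            log.add("created threadPool");

            success = true;
        } catch (IllegalStateException e) {
            // The real constructor rethrows; this demo only records the failure.
            log.add("failed: " + e.getMessage());
        } finally {
            if (!success) {
                closeQuietly(resourcesToClose);
            }
        }
        return log;
    }

    /** Simplified stand-in: closes each resource and ignores IOExceptions. */
    static void closeQuietly(List<Closeable> resources) {
        for (Closeable resource : resources) {
            try {
                resource.close();
            } catch (IOException ignored) {
                // deliberately ignored in this sketch
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(start(false));
        System.out.println(start(true));
    }
}
```

When startup succeeds nothing is closed, because the components are still in use; only a failed startup triggers the cleanup.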

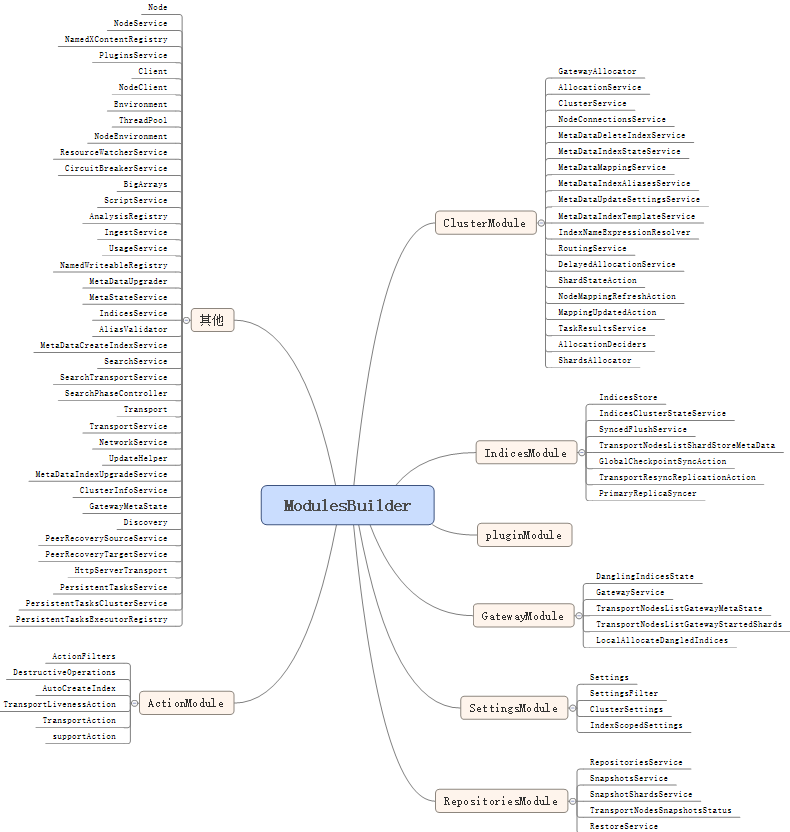

Main modules involved

The text in the figure above is as follows:

ClusterModule

- GatewayAllocator
- AllocationService
- ClusterService
- NodeConnectionsService
- MetaDataDeleteIndexService
- MetaDataIndexStateService
- MetaDataMappingService
- MetaDataIndexAliasesService
- MetaDataUpdateSettingsService
- MetaDataIndexTemplateService
- IndexNameExpressionResolver
- RoutingService
- DelayedAllocationService
- ShardStateAction
- NodeMappingRefreshAction
- MappingUpdatedAction
- TaskResultsService
- AllocationDeciders
- ShardsAllocator

IndicesModule

- IndicesStore
- IndicesClusterStateService
- SyncedFlushService
- TransportNodesListShardStoreMetaData
- GlobalCheckpointSyncAction
- TransportResyncReplicationAction
- PrimaryReplicaSyncer

Others (bound directly in the Node constructor)

- Node
- NodeService
- NamedXContentRegistry
- PluginsService
- Client
- NodeClient
- Environment
- ThreadPool
- NodeEnvironment
- ResourceWatcherService
- CircuitBreakerService
- BigArrays
- ScriptService
- AnalysisRegistry
- IngestService
- UsageService
- NamedWriteableRegistry
- MetaDataUpgrader
- MetaStateService
- IndicesService
- AliasValidator
- MetaDataCreateIndexService
- SearchService
- SearchTransportService
- SearchPhaseController
- Transport
- TransportService
- NetworkService
- UpdateHelper
- MetaDataIndexUpgradeService
- ClusterInfoService
- GatewayMetaState
- Discovery
- PeerRecoverySourceService
- PeerRecoveryTargetService
- HttpServerTransport
- PersistentTasksService
- PersistentTasksClusterService
- PersistentTasksExecutorRegistry

pluginModule

GatewayModule

- DanglingIndicesState
- GatewayService
- TransportNodesListGatewayMetaState
- TransportNodesListGatewayStartedShards
- LocalAllocateDangledIndices

SettingsModule

- Settings
- SettingsFilter
- ClusterSettings
- IndexScopedSettings

ActionModule

- ActionFilters
- DestructiveOperations
- AutoCreateIndex
- TransportLivenessAction
- TransportAction
- supportAction

RepositoriesModule

- RepositoriesService
- SnapshotsService
- SnapshotShardsService
- TransportNodesSnapshotsStatus
- RestoreService
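The grouping above, where each module contributes its own set of bindings and all of them end up in one injector, can be sketched in plain Java as follows. This is an illustration under stated assumptions, not Elasticsearch's `ModulesBuilder` or Guice's `Module` API: the `Binder`, `Module` and `Builder` types below are hypothetical and only mimic the shape of `modules.add(...)` followed by `modules.createInjector()`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class ModulesSketch {

    /** Hypothetical binder: records which instance serves which type. */
    interface Binder {
        <T> void bind(Class<T> type, T instance);
    }

    /** Hypothetical module: one group of related bindings. */
    interface Module {
        void configure(Binder binder);
    }

    /** Collects modules in insertion order, then applies them all to one shared binding table. */
    static final class Builder {
        private final List<Module> modules = new ArrayList<>();

        Builder add(Module module) {
            modules.add(module);
            return this;
        }

        Map<Class<?>, Object> createBindings() {
            Map<Class<?>, Object> bindings = new LinkedHashMap<>();
            Binder binder = new Binder() {
                @Override
                public <T> void bind(Class<T> type, T instance) {
                    // Two modules binding the same type is treated as a configuration error here.
                    if (bindings.putIfAbsent(type, instance) != null) {
                        throw new IllegalStateException("duplicate binding: " + type.getName());
                    }
                }
            };
            for (Module module : modules) {
                module.configure(binder);
            }
            return bindings;
        }
    }

    public static void main(String[] args) {
        Builder modules = new Builder();
        // A module with a single binding, written as a lambda.
        modules.add(b -> b.bind(StringBuilder.class, new StringBuilder("cluster state")));
        // An inline module with several bindings, similar in shape to the "Others" group.
        modules.add(b -> {
            b.bind(String.class, "settings");
            b.bind(Integer.class, 9300);
        });
        modules.createBindings().keySet().forEach(type -> System.out.println(type.getSimpleName()));
    }
}
```

Keeping each group of bindings in its own module lets a subsystem declare what it provides without the code that creates the injector having to know the details.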
