Step-by-step from Markov Process to Markov Decision Process

In this post, I will illustrate Markov Property, Markov Reward Process and finally Markov Decision Process, which are fundamental concepts in Reinforcement Learning.

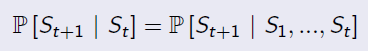

Markov Property

'The state is independent of the past given the present'

Markov Process (Markov Chain)

Keywords: state, transition matrix

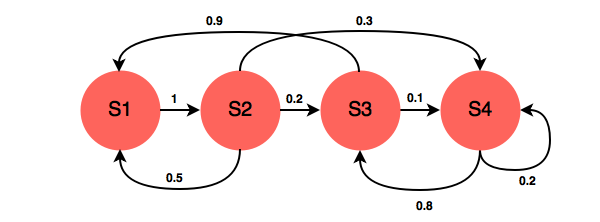

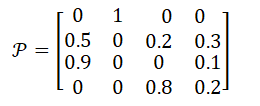

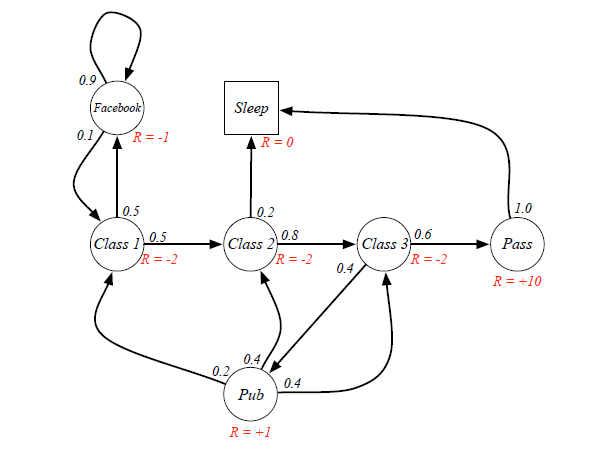

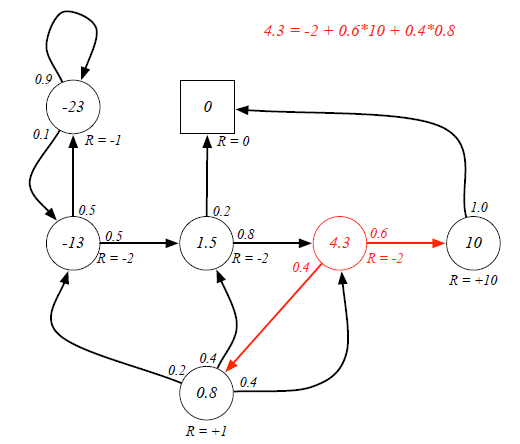

A Markov Process is defined by a Tuple(S,P), in which S is the state space, and P is the transition matrix. The following chart is an example.

A transition matrix demonstrates the probabilities of transitioning from one state to another.

In the example above, the transition matrix is:

Markov Reward Process: Markov Process with Value Judgement

Keywords: Reward, Return, Discount Factor, Value Function

MRP add two additional properties into Markov Chain: one is Reward, who represents the immediate feedback an agent can receive at time t+1 if he is in state s at time t; another property is Discount Factor γ∈[0,1]. So the representation tuple is [S,P,R,γ].

Formally, Reward is the immediate feedback, which means when agent gets to state s at time t, it can definetly receive this reward at time t+1. It is defined by:

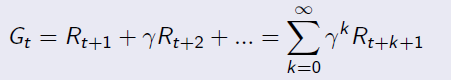

Given reward and discount factor, we can calculate the Return for a given senario by this equation:

Example for Return calculation:

Senario: Class1->Class2->Class3->Pass->Sleep, and the agent is at state=Class1.

Case 1: when gamma=0, g=-2+(-2)*0+(-2)*0+10*0=-2

Case 2: when gamma=1, g=-2+(-2)*1+(-2)*1+10*1=4

Case 3: when gamma=0.8, g=-2+(-2)*0.8+(-2)*0.64+10*=-4-1.6+5.12=-0.48

From different γ, we know our agent can be exetremely short-sighted (far-sighted) only for immediate reward, or trying to seek balance between short and long term reward.

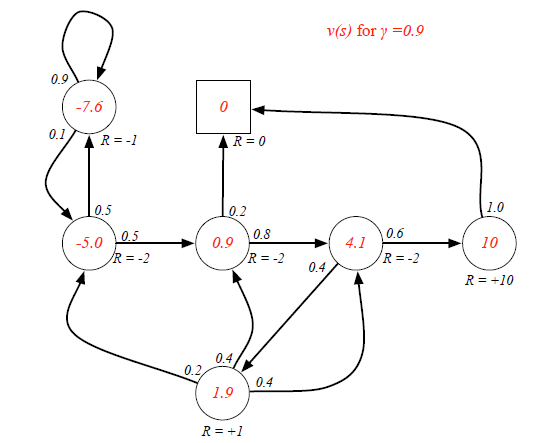

When an agent is in a certain state, the way to measure the total reward from this state over time is calculating expected Returns for all possible senarios. The function to calculate it is called Value Function:

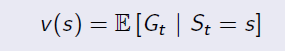

Ex. If the agent is at Class3 state, it has 0.6 and 0.4 probabilities to transite to Pass and Pub respectively. Because there are loops inside the graph, it's difficult to directly derive expected return from value function. (Forget the red labeled value, they are result...)

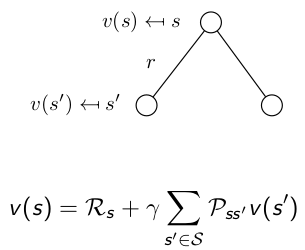

Bellman Equation helps to solve this complexity:

It breaks the value function into two parts: Immediate Reward and Future Reward: The future reward is discounted by γ, and it has probabilities on different states, so actually the future reward is an expectation.

The future reward is discounted by γ, and it has probabilities on different states, so actually the future reward is an expectation.

Now we can use Bellman Equation to solve value function:

Markov Decision Process: MRP with Actions

Keywords: Action

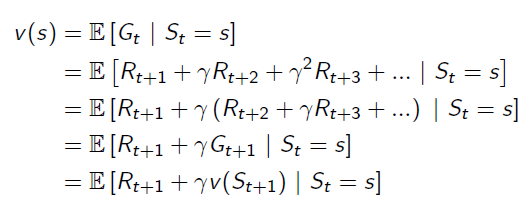

Markov Decision Process adds more complexity onto MRP, it is defined by a tuple(S,A,P,R,γ), in which:

S is state space, and γ is discount factor, they are same as MRP.

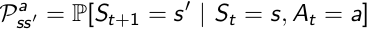

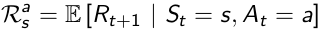

A is a finite set of Actions, which is new. Then because of the existense of Action, Transition Matrix and Reward Function are all conditional on both State and Action.

P is State Transition Matrix: it is conditional on state and action at time t, which means different actions would result in different distribution of state at time t+1.

R is Reward Function conditional upon state and action: also, different actions lead to different reward, despite of the same state s.

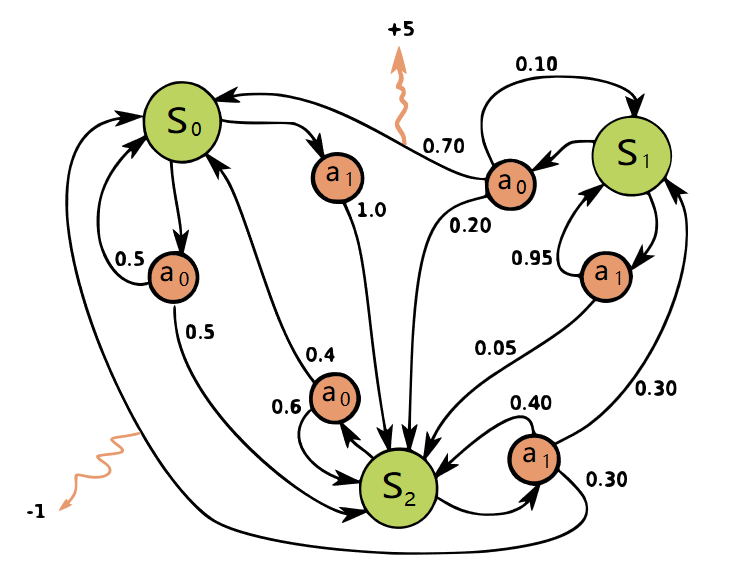

A graph(from Wikipedia) helps understanding the role of actions:

So by now, we have already had the model of the environment: all states, all possible actions and transition matrix conditional on state and actions.

Step-by-step from Markov Process to Markov Decision Process的更多相关文章

- Step by step Process of creating APD

Step by step Process of creating APD: Business Scenario: Here we are going to create an APD on top o ...

- Step by Step Process of Migrating non-CDBs and PDBs Using ASM for File Storage (Doc ID 1576755.1)

Step by Step Process of Migrating non-CDBs and PDBs Using ASM for File Storage (Doc ID 1576755.1) AP ...

- Tomcat Clustering - A Step By Step Guide --转载

Tomcat Clustering - A Step By Step Guide Apache Tomcat is a great performer on its own, but if you'r ...

- [ZZ] Understanding 3D rendering step by step with 3DMark11 - BeHardware >> Graphics cards

http://www.behardware.com/art/lire/845/ --> Understanding 3D rendering step by step with 3DMark11 ...

- e2e 自动化集成测试 架构 实例 WebStorm Node.js Mocha WebDriverIO Selenium Step by step (二) 图片验证码的识别

上一篇文章讲了“e2e 自动化集成测试 架构 京东 商品搜索 实例 WebStorm Node.js Mocha WebDriverIO Selenium Step by step 一 京东 商品搜索 ...

- Code Understanding Step by Step - We Need a Task

Code understanding is a task we are always doing, though we are not even aware that we're doing it ...

- enode框架step by step之saga的思想与实现

enode框架step by step之saga的思想与实现 enode框架系列step by step文章系列索引: 分享一个基于DDD以及事件驱动架构(EDA)的应用开发框架enode enode ...

- 课程五(Sequence Models),第一 周(Recurrent Neural Networks) —— 1.Programming assignments:Building a recurrent neural network - step by step

Building your Recurrent Neural Network - Step by Step Welcome to Course 5's first assignment! In thi ...

- 精通initramfs构建step by step

(一)hello world 一.initramfs是什么 在2.6版本的linux内核中,都包含一个压缩过的cpio格式 的打包文件.当内核启动时,会从这个打包文件中导出文件到内核的rootfs ...

随机推荐

- 教你使用Python制作酷炫二维码

这篇文章讲的是如何利用python制作狂拽酷炫吊炸天的二维码,非常有趣哦! 可能你见过的二维码大多长这样: 普普通通,平平凡凡,没什么特色... 但,如果二维码长这样呢! 或者 这样! 是不是炒鸡好看 ...

- Spring、Spring MVC、Struts2、、优缺点整理(转)

Spring 及其优点 大部分项目都少不了spring的身影,为什么大家对他如此青睐,而且对他的追捧丝毫没有减退之势呢 Spring是什么: Spring是一个轻量级的DI和AOP容器框架. 说它轻量 ...

- td内容超出 以…显示

table中的td内容超出以省略号显示,需满足的条件是: <style type="text/css"> table{ table-layout: fixed; bor ...

- linux测试 Sersync 是否正常

[root@SERSYNC web]# for i in {1..10000};do echo 123456 > /data/web/$i &>/dev/null;do ne [r ...

- [sqlmap 源码阅读] heuristicCheckSqlInjection 探索式注入

上面是探索式注入时大致调用过程,注入 PAYLOAD 1.)("'(.((. , 数据库报错,通过报错信息获取网站数据库类型(kb.dbms),并将保存报错(lasterrorpage). ...

- [CSS]CSS中使用span和div遇到的问题

一. span和div的区别 1.span是行级元素,div是块级元素2.span占用的宽度是内容的宽度,而div默认是一行.所以一般在页面中,只有一行或不到一行文字用span,元素占据多行时用div ...

- docker设置proxy

该方法是持久化的,修改后会一直生效.该方法覆盖了默认的docker.service文件. 1. 为docker服务创建一个内嵌的systemd目录 mkdir -p /etc/systemd/syst ...

- 如何在centos7中设置redis服务器开机自启动

1.简单说明centos7系统中有不同类型的程序,一类是操作系统的服务程序,另一类是第三方程序,而redis就是第三方程序,每次关机后开机都要手工重新启动,很麻烦,那么如何把redis设置为开机自启动 ...

- Ubuntu Server下MySql数据库备份脚本代码

明: 我这里要把MySql数据库存放目录/var/lib/mysql下面的pw85数据库备份到/home/mysql_data里面,并且保存为mysqldata_bak_2012_04_11.tar. ...

- CBV和FBV

CBV和FBV 刚开始写的视图都是基于函数版本的,称为FBV,后来写了一个NB的叫CBV,就是基于类的 FBV就是在URL中的一个路径对应一个函数 urlpatterns = [ url(r'^adm ...