Hive Study Notes: metadata

Hive architecture

https://blog.csdn.net/zhoudaxia/article/details/8855937

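Through Hive's JDBC interface, you can call getMetaData on the connection to obtain metadata about Hive tables:

// connection is an already-open java.sql.Connection to Hive
statement = connection.createStatement();
DatabaseMetaData meta = connection.getMetaData();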

Reference:

https://blog.csdn.net/u010368839/article/details/76358831
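The snippet above assumes a connection object already exists. A minimal sketch of how one might be opened is shown here; the class name HiveMetaDataConnect, the helper names, and the host, port, user, and password values are all hypothetical, and the hive-jdbc driver is assumed to be on the classpath.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.DriverManager;
import java.sql.SQLException;

final class HiveMetaDataConnect {
    // Builds a HiveServer2 JDBC URL, e.g. jdbc:hive2://localhost:10000/default.
    static String hiveUrl(String host, int port, String database) {
        return "jdbc:hive2://" + host + ":" + port + "/" + database;
    }

    // Opens a connection, obtains DatabaseMetaData, and prints the driver name and version.
    // Needs a reachable HiveServer2 and the hive-jdbc driver on the classpath (assumptions).
    static void printDriverInfo(String url, String user, String password) throws SQLException {
        try (Connection connection = DriverManager.getConnection(url, user, password)) {
            DatabaseMetaData meta = connection.getMetaData();
            System.out.println(meta.getDriverName() + " " + meta.getDriverVersion());
        }
    }
}
```

The try-with-resources block closes the connection even if a metadata call fails, which is usually what you want in short diagnostic programs like the ones in these notes.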

For an analysis of the Hive metadata source code, see:

https://cloud.tencent.com/developer/article/1330250

For the Hive Thrift interface, see:

http://www.laicar.com/book/echapter/5cb0bcfe739207662ac88ed1/links/x_Chapter_16.html/OEBPS/Text/part0024.xhtml

To retrieve table information, call getTables and set tableNamePattern to the Hive table name:

// schemaPattern "%" matches every database; types restricts the result to TABLE
ResultSet tableRet = meta.getTables(null, "%", "ads_nsh_trade", new String[]{"TABLE"});
// one row per matching table
while (tableRet.next()) {
    System.out.println("TABLE_CAT:" + tableRet.getString("TABLE_CAT"));
    System.out.println("TABLE_SCHEM:" + tableRet.getString("TABLE_SCHEM"));
    System.out.println("TABLE_NAME => " + tableRet.getString("TABLE_NAME"));
    System.out.println("table_type => " + tableRet.getString("table_type"));
    System.out.println("remarks => " + tableRet.getString("remarks"));
    System.out.println("type_cat => " + tableRet.getString("type_cat"));
    System.out.println("type_schem => " + tableRet.getString("type_schem"));
    System.out.println("type_name => " + tableRet.getString("type_name"));
    System.out.println("self_referencing_col_name => " + tableRet.getString("self_referencing_col_name"));
    System.out.println("ref_generation => " + tableRet.getString("ref_generation"));
}

The column labels that can be passed to getString are:

table_cat, table_schem, table_name, table_type, remarks, type_cat, type_schem, type_name, self_referencing_col_name, ref_generation

Passing a label that is not in this list throws an exception:

java.sql.SQLException: Could not find COLUMN_NAME in [table_cat, table_schem, table_name, table_type, remarks, type_cat, type_schem, type_name, self_referencing_col_name, ref_generation]

at org.apache.hive.jdbc.HiveBaseResultSet.findColumn(HiveBaseResultSet.java:100)

at org.apache.hive.jdbc.HiveBaseResultSet.getString(HiveBaseResultSet.java:541)

Output:

TABLE_CAT:

TABLE_SCHEM:tmp

TABLE_NAME => ads_nsh_trade

table_type => TABLE

remarks => ???????????

type_cat => null

type_schem => null

type_name => null

self_referencing_col_name => null

ref_generation => null

TABLE_CAT:

TABLE_SCHEM:default

TABLE_NAME => ads_nsh_trade

table_type => TABLE

remarks => null

type_cat => null

type_schem => null

type_name => null

self_referencing_col_name => null

ref_generation => null
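One way to avoid the "Could not find COLUMN_NAME" exception shown earlier is to check which labels a result set actually exposes before reading from it. The following is a minimal sketch using only standard java.sql calls; the class ColumnLabels and its helper names are hypothetical, and the case-insensitive comparison is an assumption based on the code above reading both "TABLE_CAT" and "table_type" successfully.

```java
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;

final class ColumnLabels {
    // Collects every column label reported by the result set's metadata.
    static List<String> labelsOf(ResultSetMetaData md) throws SQLException {
        List<String> labels = new ArrayList<>();
        for (int i = 1; i <= md.getColumnCount(); i++) { // JDBC columns are 1-based
            labels.add(md.getColumnLabel(i));
        }
        return labels;
    }

    // Returns true if the label is present, ignoring case.
    static boolean hasLabel(List<String> labels, String label) {
        for (String candidate : labels) {
            if (candidate.equalsIgnoreCase(label)) {
                return true;
            }
        }
        return false;
    }

    // Reads a string column, or returns the fallback when the label is absent.
    static String getStringOrDefault(ResultSet rs, String label, String fallback) throws SQLException {
        return hasLabel(labelsOf(rs.getMetaData()), label) ? rs.getString(label) : fallback;
    }
}
```

With such a helper, asking a getTables result for COLUMN_NAME would return the fallback value instead of throwing.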

If you additionally set schemaPattern to the Hive database name:

// schemaPattern "default" limits the search to the default database
ResultSet tableRet = meta.getTables(null, "default", "ads_nsh_trade", new String[]{"TABLE"});
while (tableRet.next()) {
    System.out.println("TABLE_CAT:" + tableRet.getString("TABLE_CAT"));
    System.out.println("TABLE_SCHEM:" + tableRet.getString("TABLE_SCHEM"));
    System.out.println("TABLE_NAME => " + tableRet.getString("TABLE_NAME"));
    System.out.println("table_type => " + tableRet.getString("table_type"));
    System.out.println("remarks => " + tableRet.getString("remarks"));
    System.out.println("type_cat => " + tableRet.getString("type_cat"));
    System.out.println("type_schem => " + tableRet.getString("type_schem"));
    System.out.println("type_name => " + tableRet.getString("type_name"));
    System.out.println("self_referencing_col_name => " + tableRet.getString("self_referencing_col_name"));
    System.out.println("ref_generation => " + tableRet.getString("ref_generation"));
}

Output:

TABLE_CAT:

TABLE_SCHEM:default

TABLE_NAME => ads_nsh_trade

table_type => TABLE

remarks => null

type_cat => null

type_schem => null

type_name => null

self_referencing_col_name => null

ref_generation => null
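The difference between the two calls comes from how JDBC metadata patterns are matched: "%" stands for any run of characters and "_" for any single character. The sketch below illustrates those standard JDBC semantics by translating a pattern into a regular expression. It is not Hive's own implementation; the class JdbcPatterns is hypothetical and escape characters are deliberately not handled.

```java
import java.util.regex.Pattern;

final class JdbcPatterns {
    // Translates a JDBC metadata pattern into a regex:
    // '%' becomes ".*", '_' becomes ".", everything else is matched literally.
    static Pattern toRegex(String jdbcPattern) {
        StringBuilder regex = new StringBuilder();
        for (char c : jdbcPattern.toCharArray()) {
            if (c == '%') {
                regex.append(".*");
            } else if (c == '_') {
                regex.append('.');
            } else {
                regex.append(Pattern.quote(String.valueOf(c)));
            }
        }
        return Pattern.compile(regex.toString());
    }

    // Returns true if the whole name matches the JDBC pattern.
    static boolean matches(String jdbcPattern, String name) {
        return toRegex(jdbcPattern).matcher(name).matches();
    }
}
```

Under these semantics "%" matches both default and tmp, while "default" matches only itself. Note that the underscores in "ads_nsh_trade" are also single-character wildcards, so the pattern could in principle match other table names of the same length.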

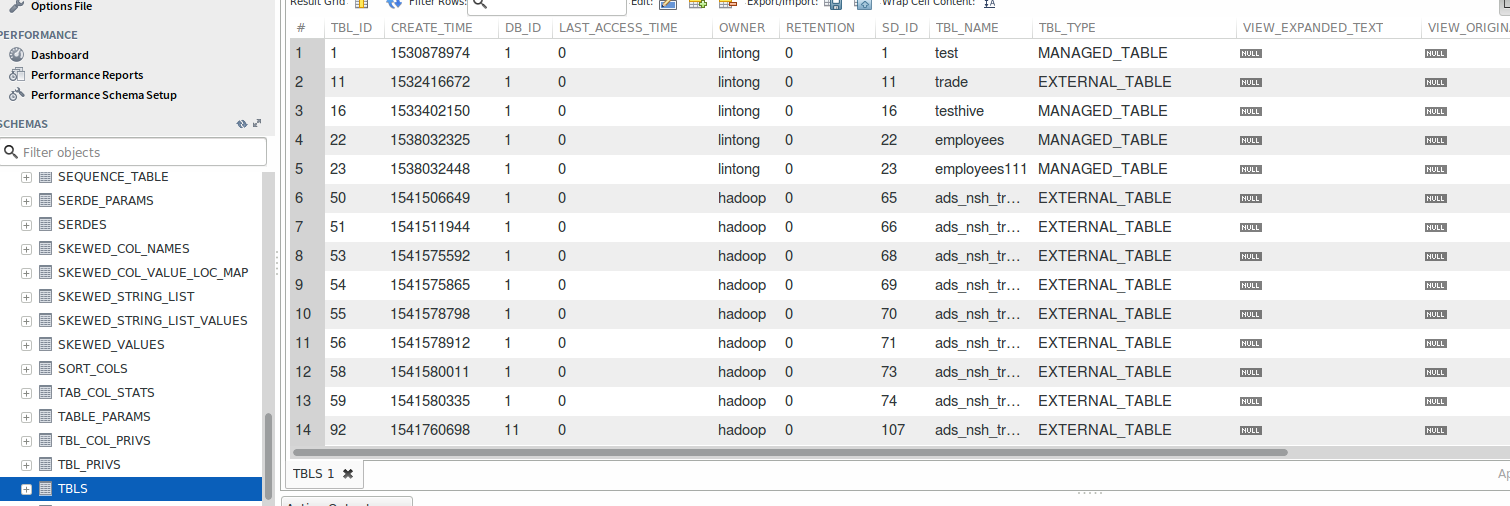

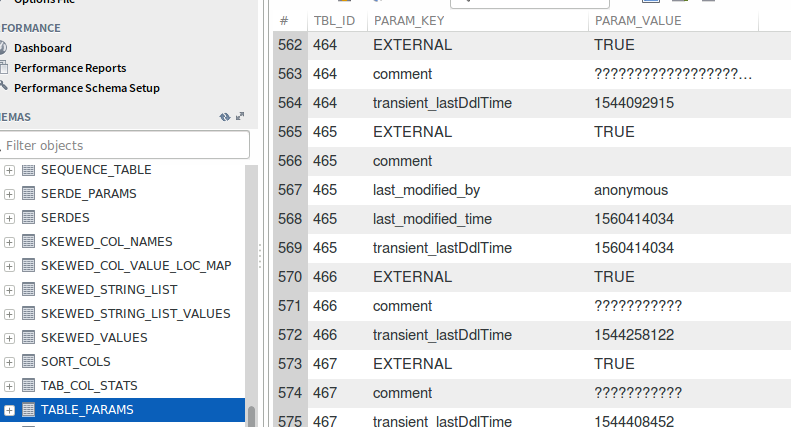

In Hive's metastore database, table information is stored mainly in two tables, TBLS and TABLE_PARAMS.

Reference:

https://blog.csdn.net/haozhugogo/article/details/73274832

For example, the TBLS table

and the TABLE_PARAMS table
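To see how the two tables relate, the following sketch joins them directly in the metastore's backing database. It rests on several assumptions: the metastore is reachable over plain JDBC, the column names (TBL_ID, DB_ID, TBL_NAME, TBL_TYPE, PARAM_KEY, PARAM_VALUE) match your metastore schema version, and the DBS table, which these notes do not cover, is used to resolve the database name. The class MetastoreTableParams is hypothetical.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;

final class MetastoreTableParams {
    // One row per table parameter; LEFT JOIN keeps tables that have no parameters.
    static final String SQL =
        "SELECT d.NAME, t.TBL_NAME, t.TBL_TYPE, p.PARAM_KEY, p.PARAM_VALUE "
      + "FROM TBLS t "
      + "JOIN DBS d ON t.DB_ID = d.DB_ID "
      + "LEFT JOIN TABLE_PARAMS p ON p.TBL_ID = t.TBL_ID "
      + "WHERE d.NAME = ? AND t.TBL_NAME = ?";

    // Prints the type and every key/value parameter of one table.
    // metastore is a connection to the metastore's database, not to HiveServer2.
    static void printTableParams(Connection metastore, String db, String table) throws SQLException {
        try (PreparedStatement ps = metastore.prepareStatement(SQL)) {
            ps.setString(1, db);
            ps.setString(2, table);
            try (ResultSet rs = ps.executeQuery()) {
                while (rs.next()) {
                    System.out.println(rs.getString("TBL_NAME") + " " + rs.getString("TBL_TYPE")
                        + " " + rs.getString("PARAM_KEY") + "=" + rs.getString("PARAM_VALUE"));
                }
            }
        }
    }
}
```

Querying the metastore database directly bypasses Hive, so it is best treated as a read-only diagnostic aid.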

To retrieve a table's column information, call getColumns:

// schemaPattern "%" matches every database. The catalog argument ("default") does not
// appear to filter anything here: the output below contains rows from both default and tmp.
ResultSet rs1 = meta.getColumns("default", "%", "ads_nsh_trade", "%");
// one row per column of each matching table
while (rs1.next()) {
    String tableCat = rs1.getString("table_cat");
    String tableSchem = rs1.getString("table_schem");
    String tableName = rs1.getString("table_name");
    String columnName = rs1.getString("COLUMN_NAME");
    String columnType = rs1.getString("TYPE_NAME");
    String remarks = rs1.getString("REMARKS");
    int datasize = rs1.getInt("COLUMN_SIZE");
    int digits = rs1.getInt("DECIMAL_DIGITS");
    int nullable = rs1.getInt("NULLABLE");
    System.out.println(tableCat + " " + tableSchem + " " + tableName + " " + columnName + " " +
            columnType + " " + datasize + " " + digits + " " + nullable + " " + remarks);
}

The column labels that can be used here are:

table_cat, table_schem, table_name, column_name, data_type, type_name, column_size, buffer_length, decimal_digits, num_prec_radix, nullable, remarks, column_def, sql_data_type, sql_datetime_sub, char_octet_length, ordinal_position, is_nullable, scope_catalog, scope_schema, scope_table, source_data_type, is_auto_increment

Output:

null default ads_nsh_trade test_string STRING 2147483647 0 1 string??????

null default ads_nsh_trade test_boolean BOOLEAN 0 0 1 boolean??????

null default ads_nsh_trade test_short SMALLINT 5 0 1 short??????

null default ads_nsh_trade test_double DOUBLE 15 15 1 double??????

null default ads_nsh_trade test_byte TINYINT 3 0 1 byte??????

null default ads_nsh_trade test_list array<string> 0 0 1 list<String>????

null default ads_nsh_trade test_map map<string,int> 0 0 1 map<String,Int>????

null default ads_nsh_trade test_int INT 10 0 1 int??????

null default ads_nsh_trade test_set array<bigint> 0 0 1 set<Long>??????

null default ads_nsh_trade col_name DECIMAL 10 2 1 null

null default ads_nsh_trade col_name2 DECIMAL 10 2 1 null

null default ads_nsh_trade test_long BIGINT 19 0 1 null

null tmp ads_nsh_trade test_boolean BOOLEAN 0 0 1 boolean??????

null tmp ads_nsh_trade test_short SMALLINT 5 0 1 short??????

null tmp ads_nsh_trade test_double DOUBLE 15 15 1 double??????

null tmp ads_nsh_trade test_byte TINYINT 3 0 1 byte??????

null tmp ads_nsh_trade test_list array<string> 0 0 1 list<String>????

null tmp ads_nsh_trade test_map map<string,int> 0 0 1 map<String,Int>????

null tmp ads_nsh_trade test_int INT 10 0 1 int??????

null tmp ads_nsh_trade test_set array<bigint> 0 0 1 set<Long>??????

null tmp ads_nsh_trade test_long BIGINT 19 0 1 null

null tmp ads_nsh_trade test_string STRING 2147483647 0 1 null
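The second-to-last number in each output row is the NULLABLE value, which JDBC defines through constants on DatabaseMetaData. A small sketch for decoding it follows; the class Nullability and the text labels it returns are hypothetical, while the constants themselves are part of the standard java.sql API.

```java
import java.sql.DatabaseMetaData;

final class Nullability {
    // Maps the NULLABLE column of a getColumns row to a readable label.
    static String describe(int nullable) {
        switch (nullable) {
            case DatabaseMetaData.columnNoNulls:         // 0
                return "NOT NULL";
            case DatabaseMetaData.columnNullable:        // 1
                return "NULLABLE";
            case DatabaseMetaData.columnNullableUnknown: // 2
                return "UNKNOWN";
            default:
                return "UNRECOGNIZED(" + nullable + ")";
        }
    }
}
```

Every row in the output above reports 1, that is, columnNullable.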
