017 Ceph Cluster Management, Part 3

1. Verifying OSDs

1.1 OSD states

Running states: up, in, out, down

A healthy OSD is both up and in.

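When an OSD fails and its daemon goes offline, the cluster marks it down but keeps it in for a grace period (5 minutes here). This prevents brief network jitter from triggering data movement.

If the OSD has still not recovered when the period expires, it is marked out and the PGs on it start migrating to other OSDs. The length of this period is set by mon_osd_down_out_interval.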

When the failed OSD comes back online, data is rebalanced again.

If the noout flag is set on the cluster, an OSD going down is not marked out, so no rebalancing is triggered.
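The in/out decision described above can be modelled with a small shell function. This is a simplified sketch under stated assumptions: it only considers the seconds since the OSD stopped responding, the value of mon_osd_down_out_interval (passed in, because defaults differ between releases) and whether noout is set. The name osd_in_or_out is hypothetical; it does not query a real cluster.

```bash
# Hypothetical helper: models whether a failed OSD is still counted as "in".
# Usage: osd_in_or_out SECONDS_DOWN DOWN_OUT_INTERVAL [noout]
osd_in_or_out() {
  local down_for="$1" interval="$2" flag="${3:-}"
  if [ "$flag" = "noout" ]; then
    # noout set: a down OSD is never marked out automatically.
    echo "in"
  elif [ "$down_for" -lt "$interval" ]; then
    # Still inside the grace period: keep it in to ride out network jitter.
    echo "in"
  else
    # Grace period exceeded: marked out, its PGs start migrating.
    echo "out"
  fi
}
```

For example, osd_in_or_out 120 300 prints "in", while osd_in_or_out 400 300 prints "out" unless noout is passed as the third argument.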

OSDs check each other's status every 6 seconds and report their status to the monitors every 120 seconds.

Capacity states: nearfull, full

1.2 Common commands

[root@ceph2 ~]# ceph osd stat

osds: up, in; remapped pgs # shows OSD status
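Since a healthy OSD is both up and in, the counts printed by ceph osd stat can be checked automatically. A minimal sketch, assuming the Luminous-style line format "N osds: N up, N in; ..." (other releases may word it differently); osd_stat_summary is a hypothetical helper that reads the line on stdin.

```bash
# Hypothetical helper: summarise a line such as "9 osds: 8 up, 9 in; 5 remapped pgs".
# Usage: ceph osd stat | osd_stat_summary
osd_stat_summary() {
  awk '{
    for (i = 2; i <= NF; i++) {
      w = $i
      sub(/[,;]$/, "", w)            # strip trailing "," or ";"
      if (w == "osds:")   total = $(i - 1)
      else if (w == "up") up    = $(i - 1)
      else if (w == "in") inn   = $(i - 1)   # "in" is an awk keyword, hence "inn"
    }
    if (total + 0 == up + 0 && total + 0 == inn + 0)
      print "all " total " OSDs up and in"
    else
      printf "%d down, %d out (of %d)\n", total - up, total - inn, total
  }'
}
```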

[root@ceph2 ~]# ceph osd df

ID CLASS WEIGHT REWEIGHT SIZE USE AVAIL %USE VAR PGS # reports OSD usage

hdd 0.01500 1.00000 15348M 112M 15236M 0.74 0.58

hdd 0.01500 1.00000 15348M 112M 15236M 0.73 0.57

hdd 0.01500 1.00000 15348M 114M 15234M 0.75 0.58

hdd 0.01500 1.00000 15348M 269M 15079M 1.76 1.37

hdd 0.01500 1.00000 15348M 208M 15140M 1.36 1.07

hdd 0.01500 1.00000 15348M 228M 15120M 1.49 1.17

hdd 0.01500 1.00000 15348M 245M 15103M 1.60 1.25

hdd 0.01500 1.00000 15348M 253M 15095M 1.65 1.29

hdd 0.01500 1.00000 15348M 218M 15130M 1.42 1.11

hdd 0.01500 1.00000 15348M 269M 15079M 1.76 1.37

hdd 0.01500 1.00000 15348M 208M 15140M 1.36 1.07

hdd 0.01500 1.00000 15348M 228M 15120M 1.49 1.17

hdd 0.01500 1.00000 15348M 245M 15103M 1.60 1.25

hdd 0.01500 1.00000 15348M 253M 15095M 1.65 1.29

hdd 0.01500 1.00000 15348M 218M 15130M 1.42 1.11

TOTAL 134G 1765M 133G 1.28

MIN/MAX VAR: 0.57/1.37 STDDEV: 0.36
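The %USE column of ceph osd df is the per-OSD usage figure worth watching against the capacity ratios. A minimal sketch that flags OSDs at or above a given percentage; it finds the %USE column from the header line instead of hard-coding its position, since newer releases add columns. The helper name is hypothetical, and real output with the ID column present is assumed (the sample above has its IDs stripped).

```bash
# Hypothetical helper: read `ceph osd df` output on stdin and print
# "osd.<ID> <%USE>" for every OSD whose %USE is at or above the threshold.
# Usage: ceph osd df | osd_over_threshold 85
osd_over_threshold() {
  awk -v t="$1" '
    # Header line: remember which column holds %USE.
    $1 == "ID" { for (i = 1; i <= NF; i++) if ($i == "%USE") col = i; next }
    # Data rows start with a numeric OSD ID; TOTAL and MIN/MAX lines are skipped.
    col && $1 ~ /^[0-9]+$/ && ($col + 0) >= (t + 0) { printf "osd.%s %s\n", $1, $col }
  '
}
```

Running ceph osd df | osd_over_threshold 85 would list the OSDs that have reached the default nearfull ratio.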

[root@ceph2 ~]# ceph osd find osd.0

{

"osd": ,

"ip": "172.25.250.11:6800/185671", # location of the specified OSD

"crush_location": {

"host": "ceph2-ssd",

"root": "ssd-root"

}

}

1.3 OSD heartbeat parameters

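osd_heartbeat_interval # interval between heartbeats exchanged by OSDs

osd_heartbeat_grace # how long an OSD can go without a heartbeat before the cluster considers it down

mon_osd_min_down_reporters # minimum number of OSDs that must report an OSD as down

mon_osd_min_down_reports # number of times an OSD must repeatedly report another OSD as down

mon_osd_down_out_interval # how long an OSD may stay down (unresponsive) before it is marked out

mon_osd_report_timeout # how long the monitor waits without reports from an OSD before declaring it down

osd_mon_report_interval_min # how long a newly joined OSD waits before it starts reporting to the monitors

osd_mon_report_interval_max # maximum interval the monitor allows between OSD reports; beyond it the OSD is considered down

osd_mon_heartbeat_interval # interval at which an OSD sends heartbeats to the monitors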
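How mon_osd_min_down_reporters gates a failure report can be illustrated with a simplified sketch: the monitor only marks the OSD down once enough distinct peers have reported it. This is a loose model under assumptions: real monitors also take osd_heartbeat_grace into account and, in recent releases, count reporters per CRUSH subtree (host by default) rather than per OSD. The function name is hypothetical.

```bash
# Hypothetical helper: decide the monitor's verdict from a list of reporters.
# Usage: enough_down_reports MIN_REPORTERS REPORTER...
enough_down_reports() {
  local min="$1"; shift
  local distinct
  # Count distinct non-empty reporter names; "|| true" keeps grep's
  # exit status 1 (zero matches) from propagating.
  distinct=$(printf '%s\n' "$@" | sort -u | grep -c . || true)
  if [ "$distinct" -ge "$min" ]; then
    echo "down"
  else
    echo "up"
  fi
}
```

With the minimum set to 2, two reports from osd.1 alone leave the target up, while reports from osd.1 and osd.5 mark it down.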

2. Managing OSD capacity

When the usage of an OSD reaches mon_osd_nearfull_ratio, the cluster enters HEALTH_WARN. This is a reminder to add OSDs before the full ratio is reached. The default is 0.85 (85%).

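When the usage of an OSD reaches mon_osd_full_ratio, the cluster stops accepting writes but still serves reads, and enters HEALTH_ERR. The default is 0.95 (95%). The remaining headroom leaves room to rebalance data if one or more OSDs fail.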
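The two thresholds above amount to a simple classification of an OSD's usage ratio. A minimal sketch, assuming the default ratios of 0.85 and 0.95 unless others are passed; capacity_state is a hypothetical helper, and it ignores backfillfull_ratio, which the real health check also uses.

```bash
# Hypothetical helper: classify a usage ratio (0.0-1.0) against the thresholds.
# Usage: capacity_state USAGE [NEARFULL_RATIO] [FULL_RATIO]
capacity_state() {
  awk -v u="$1" -v nf="${2:-0.85}" -v f="${3:-0.95}" 'BEGIN {
    if (u + 0 >= f + 0)       print "full (HEALTH_ERR, writes blocked)"
    else if (u + 0 >= nf + 0) print "nearfull (HEALTH_WARN)"
    else                      print "ok"
  }'
}
```

Passing the ratios set in 2.1 (capacity_state 0.80 0.75 0.85) shows how lowering them makes the warning fire earlier.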

2.1 Configuration

[root@ceph2 ~]# ceph osd set-nearfull-ratio 0.75

osd set-nearfull-ratio 0.75

[root@ceph2 ~]# ceph osd set-full-ratio 0.85

osd set-full-ratio 0.85

[root@ceph2 ~]# ceph osd dump

crush_version

full_ratio 0.85

backfillfull_ratio 0.9

nearfull_ratio 0.75

[root@ceph2 ~]# ceph daemon osd.0 config show|grep full_ratio

"mon_osd_backfillfull_ratio": "0.900000",

"mon_osd_full_ratio": "0.950000",

"mon_osd_nearfull_ratio": "0.850000",

"osd_failsafe_full_ratio": "0.970000",

"osd_pool_default_cache_target_full_ratio": "0.800000",

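Note that ceph osd set-full-ratio and set-nearfull-ratio change the ratios stored in the OSDMap (see ceph osd dump), while config show still reports the daemons' mon_osd_full_ratio option as 0.950000. In Luminous these mon_osd_*_ratio options are only used when the cluster is created. The running value of the option itself can be changed with injectargs:

[root@ceph2 ~]# ceph tell osd.* injectargs --mon_osd_full_ratio 0.85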

[root@ceph2 ~]# ceph daemon osd.0 config show|grep full_ratio

"mon_osd_backfillfull_ratio": "0.900000",

"mon_osd_full_ratio": "0.850000",

"mon_osd_nearfull_ratio": "0.850000",

"osd_failsafe_full_ratio": "0.970000",

"osd_pool_default_cache_target_full_ratio": "0.800000",

3. Problems with the cluster full flag

3.1 Setting the cluster full flag

[root@ceph2 ~]# ceph osd set full

full is set

[root@ceph2 ~]# ceph -s

cluster:

id: 35a91e48--4e96-a7ee-980ab989d20d

health: HEALTH_WARN

full flag(s) set

services:

mon: daemons, quorum ceph2,ceph3,ceph4

mgr: ceph4(active), standbys: ceph2, ceph3

mds: cephfs-// up {=ceph2=up:active}, up:standby

osd: osds: up, in; remapped pgs

flags full

rbd-mirror: daemon active

data:

pools: pools, pgs

objects: objects, MB

usage: MB used, GB / GB avail

pgs: active+clean

active+clean+remapped # some PGs have a problem

io:

client: B/s rd, B/s wr, op/s rd, op/s wr

3.2 Clearing the full flag

[root@ceph2 ~]# ceph osd unset full

full is unset

[root@ceph2 ~]# ceph -s

cluster:

id: 35a91e48--4e96-a7ee-980ab989d20d

health: HEALTH_ERR

full ratio(s) out of order

Reduced data availability: pgs inactive, pgs peering, pgs stale

Degraded data redundancy: pgs unclean # the PGs also have problems

services:

mon: daemons, quorum ceph2,ceph3,ceph4

mgr: ceph4(active), standbys: ceph2, ceph3

mds: cephfs-// up {=ceph2=up:active}, up:standby

osd: osds: up, in

rbd-mirror: daemon active

data:

pools: pools, pgs

objects: objects, MB

usage: MB used, GB / GB avail

pgs: 5.970% pgs not active

active+clean

stale+peering

io:

client: B/s rd, B/s wr, op/s rd, op/s wr

After checking, the problem was traced to the ssdpool storage pool.

3.3 Deleting ssdpool

[root@ceph2 ~]# ceph osd pool delete ssdpool ssdpool --yes-i-really-really-mean-it

[root@ceph2 ~]# ceph -s

cluster:

id: 35a91e48--4e96-a7ee-980ab989d20d

health: HEALTH_ERR

full ratio(s) out of order

services:

mon: daemons, quorum ceph2,ceph3,ceph4

mgr: ceph4(active), standbys: ceph2, ceph3

mds: cephfs-// up {=ceph2=up:active}, up:standby

osd: osds: up, in

rbd-mirror: daemon active

data:

pools: pools, pgs

objects: objects, MB

usage: MB used, GB / GB avail

pgs: active+clean

io:

client: B/s rd, op/s rd, op/s wr

[root@ceph2 ~]# ceph osd unset full

[root@ceph2 ceph]# ceph -s

cluster:

id: 35a91e48--4e96-a7ee-980ab989d20d

health: HEALTH_ERR

full ratio(s) out of order # still not fixed

services:

mon: daemons, quorum ceph2,ceph3,ceph4

mgr: ceph4(active), standbys: ceph2, ceph3

mds: cephfs-// up {=ceph2=up:active}, up:standby

osd: osds: up, in

rbd-mirror: daemon active

data:

pools: pools, pgs

objects: objects, MB

usage: MB used, GB / GB avail

pgs: active+clean

io:

client: B/s rd, B/s wr, op/s rd, op/s wr

[root@ceph2 ceph]# ceph health detail

HEALTH_ERR full ratio(s) out of order

OSD_OUT_OF_ORDER_FULL full ratio(s) out of order

full_ratio (0.85) < backfillfull_ratio (0.9), increased # the full_ratio set earlier is lower than backfillfull_ratio
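The error arises because the ratios must stay in ascending order. A minimal sketch of that rule, modelled on the health message above: nearfull_ratio <= backfillfull_ratio <= full_ratio <= osd_failsafe_full_ratio. Equal values pass, which is consistent with the HEALTH_OK reached later with nearfull and backfillfull both at 0.9. The failsafe comparison and its default of 0.97 (taken from the config show output earlier) are assumptions; check_full_ratios is a hypothetical helper.

```bash
# Hypothetical helper: report ratios that break the ascending order
#   nearfull <= backfillfull <= full <= failsafe
# Prints one line per violation and returns 1 if any were found.
# Usage: check_full_ratios NEARFULL BACKFILLFULL FULL [FAILSAFE]
check_full_ratios() {
  awk -v nf="$1" -v bf="$2" -v f="$3" -v safe="${4:-0.97}" 'BEGIN {
    bad = 0
    if (bf + 0 < nf + 0)  { printf "backfillfull_ratio (%s) < nearfull_ratio (%s)\n", bf, nf; bad = 1 }
    if (f + 0 < bf + 0)   { printf "full_ratio (%s) < backfillfull_ratio (%s)\n", f, bf; bad = 1 }
    if (safe + 0 < f + 0) { printf "osd_failsafe_full_ratio (%s) < full_ratio (%s)\n", safe, f; bad = 1 }
    exit bad
  }'
}
```

check_full_ratios 0.75 0.9 0.85 reproduces the violation reported above, while the values chosen in 3.4 (0.9 0.9 0.95) pass silently.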

3.4 Resetting full_ratio

[root@ceph2 ceph]# ceph osd set-full-ratio 0.95

osd set-full-ratio 0.95

[root@ceph2 ceph]# ceph osd set-nearfull-ratio 0.9

osd set-nearfull-ratio 0.9

[root@ceph2 ceph]# ceph osd dump

epoch

fsid 35a91e48--4e96-a7ee-980ab989d20d

created -- ::22.552356

modified -- ::42.035882

flags sortbitwise,recovery_deletes,purged_snapdirs

crush_version

full_ratio 0.95

backfillfull_ratio 0.9

nearfull_ratio 0.9

require_min_compat_client jewel

min_compat_client jewel

require_osd_release luminous

pool 'testpool' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application rbd

snap 'testpool-snap-20190316' -- ::34.150433

snap 'testpool-snap-2' -- ::15.430823

pool 'rbd' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application rbd

removed_snaps [~]

pool 'rbdmirror' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application rbd

removed_snaps [~]

pool '.rgw.root' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application rgw

pool 'default.rgw.control' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application rgw

pool 'default.rgw.meta' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application rgw

pool 'default.rgw.log' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application rgw

pool 'xiantao.rgw.control' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change owner flags hashpspool stripe_width application rgw

pool 'xiantao.rgw.meta' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change owner flags hashpspool stripe_width application rgw

pool 'xiantao.rgw.log' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change owner flags hashpspool stripe_width application rgw

pool 'cephfs_metadata' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application cephfs

pool 'cephfs_data' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application cephfs

pool 'test' replicated size min_size crush_rule object_hash rjenkins pg_num pgp_num last_change flags hashpspool stripe_width application rbd

max_osd

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.11:/ 172.25.250.11:/ 172.25.250.11:/ 172.25.250.11:/ exists,up 745dce53-1c63-4c50-b434-d441038dafe4

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.13:/ 172.25.250.13:/ 172.25.250.13:/ 172.25.250.13:/ exists,up a7562276-6dfd--b248-a7cbdb64ebec

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.12:/ 172.25.250.12:/ 172.25.250.12:/ 172.25.250.12:/ exists,up bbef1a00-3a31-48a0-a065-3a16b9edc3b1

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.11:/ 172.25.250.11:/ 172.25.250.11:/ 172.25.250.11:/ exists,up e934a4fb--4e85-895c-f66cc5534ceb

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.13:/ 172.25.250.13:/ 172.25.250.13:/ 172.25.250.13:/ exists,up e2c33bb3-02d2-4cce-85e8-25c419351673

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.12:/ 172.25.250.12:/ 172.25.250.12:/ 172.25.250.12:/ exists,up d299e33c-0c24-4cd9-a37a-a6fcd420a529

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.11:/ 172.25.250.11:/ 172.25.250.11:/ 172.25.250.11:/ exists,up debe7f4e-656b-48e2-a0b2-bdd8613afcc4

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.13:/ 172.25.250.13:/ 172.25.250.13:/ 172.25.250.13:/ exists,up 8c403679--48d0-812b-72050ad43aae

osd. up in weight up_from up_thru down_at last_clean_interval [,) 172.25.250.12:/ 172.25.250.12:/ 172.25.250.12:/ 172.25.250.12:/ exists,up bb73edf8-ca97-40c3-a727-d5fde1a9d1d9

3.5 Trying again

[root@ceph2 ceph]# ceph osd unset full

full is unset

[root@ceph2 ceph]# ceph -s

cluster:

id: 35a91e48--4e96-a7ee-980ab989d20d

health: HEALTH_OK # success

services:

mon: daemons, quorum ceph2,ceph3,ceph4

mgr: ceph4(active), standbys: ceph2, ceph3

mds: cephfs-// up {=ceph2=up:active}, up:standby

osd: osds: up, in

rbd-mirror: daemon active

data:

pools: pools, pgs

objects: objects, MB

usage: MB used, GB / GB avail

pgs: active+clean

io:

client: B/s wr, op/s rd, op/s wr

4. Manually controlling a PG's primary OSD

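By manually adjusting an OSD's primary affinity (a per-OSD weight used only when choosing primaries), you can raise or lower the probability that a particular OSD is chosen as a PG's primary OSD, for example to avoid using slow disks as primary OSDs.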
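A simplified sketch of how primary affinity influences the choice, modelled on my understanding of Ceph's OSDMap logic: walk the up set in order, reject an OSD as primary with probability 1 - affinity (decided by a hash of the PG seed and the OSD ID), take the first OSD that is not rejected, and fall back to the first OSD if all of them are rejected. Affinities are given as 0.0-1.0 like the CLI. The hash below is a stand-in, not Ceph's rjenkins hash, and pick_primary is a hypothetical helper.

```bash
# Hypothetical helper: choose a PG's primary from its up set, honouring affinities.
# Usage: pick_primary PG_SEED "OSD:AFFINITY OSD:AFFINITY ..." (in up-set order)
pick_primary() {
  awk -v seed="$1" -v set="$2" 'BEGIN {
    n = split(set, item, " ")
    pos = 0
    for (i = 1; i <= n; i++) {
      split(item[i], kv, ":")
      osd = kv[1] + 0; a = kv[2] + 0
      # Stand-in pseudo-random value in [0,1); NOT Ceph'"'"'s rjenkins hash.
      h = ((seed * 2654435761 + osd * 40503) % 65536) / 65536
      if (a < 1 && h >= a) {
        if (pos == 0) pos = i   # rejected: remember the first one as fallback
      } else {
        pos = i                 # accepted: this OSD becomes the primary
        break
      }
    }
    split(item[pos], kv, ":")
    print kv[1]
  }'
}
```

An affinity of 0 always rejects the OSD, so pick_primary 7 "4:0 0:1 8:1" picks osd.0. Looping over seeds 1 to 1000 with "4:0.5 0:1 8:1" and piping the results to sort | uniq -c shows how the share of PGs led by osd.4 shifts as its affinity changes.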

4.1 Listing PGs whose primary is osd.4

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"

dumped all # PGs whose primary is osd.4

.7e active+clean -- ::25.280490 '0 311:517 [4,0,8] 4 [4,0,8] 4 0' -- ::30.900982 '0 2019-03-24 06:16:20.594466

.7b active+clean -- ::25.256673 '0 311:523 [4,6,5] 4 [4,6,5] 4 0' -- ::27.659275 '0 2019-03-23 09:10:34.438462

15.77 active+clean -- ::25.282033 '0 311:162 [4,5,0] 4 [4,5,0] 4 0' -- ::28.324399 '0 2019-03-26 17:10:19.390530

1.77 active+clean -- ::03.733420 '0 312:528 [4,0,5] 4 [4,0,5] 4 0' -- ::03.733386 '0 2019-03-27 08:26:21.579623

.7a active+clean -- ::25.257051 '0 311:158 [4,2,3] 4 [4,2,3] 4 0' -- ::22.186467 '0 2019-03-26 17:10:19.390530

.7c active+clean -- ::25.273391 '0 311:144 [4,0,8] 4 [4,0,8] 4 0' -- ::38.124535 '0 2019-03-26 17:10:19.390530

1.72 active+clean -- ::25.276870 '0 311:528 [4,8,0] 4 [4,8,0] 4 0' -- ::06.125767 '0 2019-03-24 13:59:12.569691

15.7f active+clean -- ::25.258669 '0 311:149 [4,8,0] 4 [4,8,0] 4 0' -- ::22.082918 '0 2019-03-27 21:48:22.082918

15.69 active+clean -- ::25.258736 '0 311:150 [4,0,8] 4 [4,0,8] 4 0' -- ::06.805003 '0 2019-03-28 00:07:06.805003

1.67 active+clean -- ::25.275098 '0 311:517 [4,0,8] 4 [4,0,8] 4 0' -- ::41.166673 '0 2019-03-24 06:16:29.598240

14.22 active+clean -- ::25.257257 '0 311:149 [4,5,6] 4 [4,5,6] 4 0' -- ::16.816439 '0 2019-03-26 17:09:56.246887

14.29 active+clean -- ::25.252788 '0 311:151 [4,5,6] 4 [4,5,6] 4 0' -- ::42.189434 '0 2019-03-26 17:09:56.246887

5.21 active+clean -- ::25.257694 '139 311:730 [4,6,2] 4 [4,6,2] 4 189' -- ::33.483252 '139 2019-03-25 08:42:13.970938

.2a active+clean -- ::25.256911 '0 311:150 [4,6,5] 4 [4,6,5] 4 0' -- ::45.512728 '0 2019-03-26 17:09:56.246887

.2b active+clean -- ::25.258316 '1 311:162 [4,6,2] 4 [4,6,2] 4 214' -- ::05.092971 '0 2019-03-26 17:09:56.246887

14.2d active+clean -- ::25.282383 '1 311:171 [4,3,2] 4 [4,3,2] 4 214' -- ::08.690676 '0 2019-03-26 17:09:56.246887

.2c active+clean -- ::25.258195 '0 311:157 [4,3,5] 4 [4,3,5] 4 0' -- ::17.819746 '0 2019-03-28 02:03:17.819746

.1a active+clean -- ::25.281807 '2 311:267 [4,2,6] 4 [4,2,6] 4 161' -- ::45.639905 '2 2019-03-26 12:51:51.614941

5.18 active+clean -- ::25.258482 '98 311:621 [4,8,3] 4 [4,8,3] 4 172' -- ::03.723920 '98 2019-03-27 21:27:03.723920

15.14 active+clean -- ::25.252656 '0 311:148 [4,6,5] 4 [4,6,5] 4 0' -- ::18.466744 '0 2019-03-26 17:10:19.390530

15.17 active+clean -- ::25.256549 '0 311:164 [4,5,0] 4 [4,5,0] 4 0' -- ::46.490357 '0 2019-03-26 17:10:19.390530

1.18 active+clean -- ::25.277674 '0 311:507 [4,6,8] 4 [4,6,8] 4 0' -- ::47.944309 '0 2019-03-26 18:31:14.774358

.1c active+clean -- ::25.257857 '250 311:19066 [4,2,6] 4 [4,2,6] 4 183' -- ::09.856046 '250 2019-03-25 23:36:49.652800

15.19 active+clean -- ::25.257506 '0 311:164 [4,2,3] 4 [4,2,3] 4 0' -- ::31.020637 '0 2019-03-26 17:10:19.390530

16.7 active+clean -- ::25.282212 '0 311:40 [4,3,2] 4 [4,3,2] 4 0' -- ::12.974900 '0 2019-03-26 21:40:00.073686

.e active+clean -- ::25.258109 '0 311:251 [4,6,2] 4 [4,6,2] 4 0' -- ::11.963158 '0 2019-03-27 06:36:11.963158

13.5 active+clean -- ::25.257437 '0 311:168 [4,0,2] 4 [4,0,2] 4 0' -- ::21.320611 '0 2019-03-26 13:31:34.012304

16.19 active+clean -- ::25.257560 '0 311:42 [4,2,6] 4 [4,2,6] 4 0' -- ::53.015903 '0 2019-03-26 21:40:00.073686

7.1 active+clean -- ::25.257994 '14 311:303 [4,2,3] 4 [4,2,3] 4 192' -- ::04.858102 '14 2019-03-27 12:08:04.858102

14.9 active+clean -- ::25.252723 '0 311:163 [4,3,5] 4 [4,3,5] 4 0' -- ::30.060857 '0 2019-03-28 04:45:30.060857

5.1 active+clean -- ::25.258586 '119 311:635 [4,3,8] 4 [4,3,8] 4 189' -- ::39.725401 '119 2019-03-25 09:40:24.623173

13.6 active+clean -- ::25.257198 '0 311:157 [4,5,0] 4 [4,5,0] 4 0' -- ::19.196870 '0 2019-03-26 13:31:34.012304

.f active+clean -- ::25.258053 '128 311:1179 [4,2,3] 4 [4,2,3] 4 183' -- ::30.134353 '128 2019-03-22 12:21:02.832942

16.1d active+clean -- ::25.257306 '0 311:42 [4,0,2] 4 [4,0,2] 4 0' -- ::37.043172 '0 2019-03-26 21:40:00.073686

12.0 active+clean -- ::25.258535 '0 311:140 [4,6,8] 4 [4,6,8] 4 0' -- ::11.927266 '0 2019-03-26 13:31:31.916623

16.1f active+clean -- ::25.258248 '0 311:41 [4,0,5] 4 [4,0,5] 4 0' -- ::48.349363 '0 2019-03-28 08:01:48.349363

9.6 active+clean -- ::25.257612 '0 311:211 [4,2,3] 4 [4,2,3] 4 0' -- ::31.386965 '0 2019-03-27 23:02:31.386965

.f active+clean -- ::25.279868 '0 311:503 [4,3,8] 4 [4,3,8] 4 0' -- ::02.022670 '0 2019-03-24 07:50:30.260358

1.10 active+clean -- ::25.257936 '0 311:538 [4,2,0] 4 [4,2,0] 4 0' -- ::31.429879 '0 2019-03-23 06:36:38.178339

1.12 active+clean -- ::25.256725 '0 311:527 [4,3,5] 4 [4,3,5] 4 0' -- ::49.213043 '0 2019-03-25 17:35:25.833155

16.2 active+clean -- ::25.278599 '0 311:31 [4,6,8] 4 [4,6,8] 4 0' -- ::10.065419 '0 2019-03-26 21:40:00.073686

15.1d active+clean -- ::25.252838 '0 311:155 [4,5,3] 4 [4,5,3] 4 0' -- ::04.416619 '0 2019-03-26 17:10:19.390530

.2a active+clean -- ::25.281096 '107 311:621 [4,6,8] 4 [4,6,8] 4 172' -- ::40.781443 '107 2019-03-25 17:35:38.835798

.2b active+clean -- ::25.257363 '225 311:2419 [4,0,5] 4 [4,0,5] 4 189' -- ::42.972494 '225 2019-03-25 04:13:33.567532

1.31 active+clean -- ::25.256401 '0 311:514 [4,5,6] 4 [4,5,6] 4 0' -- ::23.076113 '0 2019-03-25 10:06:22.224727

5.31 active+clean -- ::25.282326 '113 311:661 [4,2,3] 4 [4,2,3] 4 189' -- ::50.633871 '113 2019-03-25 10:27:03.837772

14.37 active+clean -- ::25.282270 '0 311:153 [4,5,0] 4 [4,5,0] 4 0' -- ::25.969312 '0 2019-03-26 17:09:56.246887

15.34 active+clean -- ::25.258369 '0 311:132 [4,8,3] 4 [4,8,3] 4 0' -- ::49.442053 '0 2019-03-26 17:10:19.390530

1.43 active+clean -- ::25.279242 '0 311:501 [4,6,8] 4 [4,6,8] 4 0' -- ::51.254952 '0 2019-03-26 13:16:37.312462

1.48 active+clean -- ::25.281910 '0 311:534 [4,0,5] 4 [4,0,5] 4 0' -- ::00.053793 '0 2019-03-24 04:51:10.218424

15.45 active+clean -- ::25.258421 '0 311:155 [4,0,8] 4 [4,0,8] 4 0' -- ::15.366349 '0 2019-03-26 17:10:19.390530

.4e active+clean -- ::25.252906 '0 311:519 [4,5,3] 4 [4,5,3] 4 0' -- ::17.495390 '0 2019-03-21 01:02:41.709506

1.51 active+clean -- ::25.281974 '0 311:530 [4,6,2] 4 [4,6,2] 4 0' -- ::04.730515 '0 2019-03-26 00:23:54.419333

.5a active+clean -- ::25.257140 '0 311:158 [4,0,2] 4 [4,0,2] 4 0' -- ::17.000955 '0 2019-03-26 17:10:19.390530

1.56 active+clean -- ::25.256961 '0 311:521 [4,5,3] 4 [4,5,3] 4 0' -- ::10.512235 '0 2019-03-27 16:24:10.512235

15.50 active+clean -- ::25.252599 '0 311:154 [4,5,3] 4 [4,5,3] 4 0' -- ::01.475477 '0 2019-03-26 17:10:19.390530

Count them:

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all
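The grep above relies on the up/acting set being printed with the primary first. Reading the ACTING_PRIMARY column directly avoids depending on that text pattern and counts every OSD at once. The sketch assumes the brief listing from ceph pg dump pgs_brief, whose header in Luminous is PG_STAT STATE UP UP_PRIMARY ACTING ACTING_PRIMARY (verify on your release); count_primaries is a hypothetical helper.

```bash
# Hypothetical helper: count PGs per acting primary from `ceph pg dump pgs_brief`.
# Usage: ceph pg dump pgs_brief 2>/dev/null | count_primaries
count_primaries() {
  awk '
    # Header: locate the ACTING_PRIMARY column by name.
    $1 == "PG_STAT" { for (i = 1; i <= NF; i++) if ($i == "ACTING_PRIMARY") col = i; next }
    # Data rows: the column holds a numeric OSD ID.
    col && $col ~ /^[0-9]+$/ { n[$col]++ }
    END { for (o in n) printf "osd.%s %d\n", o, n[o] }
  ' | sort -t. -k2,2n
}
```

The "dumped ..." notice goes to stderr, as the wc -l runs above suggest, which is why it is discarded in the usage line.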

4.2 Setting the primary affinity to 0

[root@ceph2 ceph]# ceph osd primary-affinity osd.4 0

Error EPERM: you must enable 'mon osd allow primary affinity = true' on the mons before you can adjust primary-affinity. note that older clients will no longer be able to communicate with the cluster.

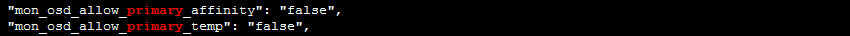

[root@ceph2 ceph]# ceph daemon /var/run/ceph/ceph-mon.$(hostname -s).asok config show|grep primary

"mon_osd_allow_primary_affinity": "false",

"mon_osd_allow_primary_temp": "false",

4.3 Editing the configuration file

[root@ceph1 ~]# vim /etc/ceph/ceph.conf

[global]

fsid = 35a91e48--4e96-a7ee-980ab989d20d

mon initial members = ceph2,ceph3,ceph4

mon host = 172.25.250.11,172.25.250.12,172.25.250.13

public network = 172.25.250.0/

cluster network = 172.25.250.0/

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

[osd]

osd mkfs type = xfs

osd mkfs options xfs = -f -i size=

osd mount options xfs = noatime,largeio,inode64,swalloc

osd journal size =

[mon]

mon_allow_pool_delete = true

mon_osd_allow_primary_affinity = true

[root@ceph1 ~]# ansible all -m copy -a 'src=/etc/ceph/ceph.conf dest=/etc/ceph/ceph.conf owner=ceph group=ceph mode=0644'

[root@ceph1 ~]# ansible mons -m shell -a ' systemctl restart ceph-mon.target'

[root@ceph1 ~]# ansible mons -m shell -a ' systemctl restart ceph-osd.target'

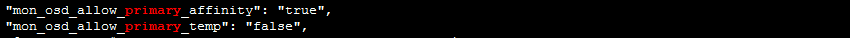

[root@ceph2 ceph]# ceph daemon /var/run/ceph/ceph-mon.$(hostname -s).asok config show|grep primary

The setting did not take effect.

4.5 Changing it from the command line

[root@ceph2 ceph]# ceph tell mon.\* injectargs '--mon_osd_allow_primary_affinity=true'

mon.ceph2: injectargs:mon_osd_allow_primary_affinity = 'true' (not observed, change may require restart)

mon.ceph3: injectargs:mon_osd_allow_primary_affinity = 'true' (not observed, change may require restart)

mon.ceph4: injectargs:mon_osd_allow_primary_affinity = 'true' (not observed, change may require restart)

[root@ceph2 ceph]# ceph daemon /var/run/ceph/ceph-mon.$(hostname -s).asok config show|grep primary

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all

4.6 Changing the primary affinity

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all

[root@ceph2 ceph]# ceph osd primary-affinity osd.

set osd. primary-affinity to ()

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all

[root@ceph2 ceph]# ceph osd primary-affinity osd. 0.5

set osd. primary-affinity to 0.5 ()

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all

[root@ceph2 ceph]# ceph pg dump|grep 'active+clean'|egrep "\[4,"|wc -l

dumped all

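Author's note: This article is mainly based on material by instructor Yan Wei of Yutian Education (誉天教育); I carried out and verified the operations in my own lab. To repost it, please contact Yutian Education (http://www.yutianedu.com/) for official permission, or obtain permission from Mr. Yan himself (https://www.cnblogs.com/breezey/). Thank you!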
