Deploying a Kubernetes Cluster with kubeadm [v1.18.0]

Environment

| IP address | Hostname | Role |
|---|---|---|
| 10.0.0.63 | k8s-master1 | master1 |
| 10.0.0.65 | k8s-node1 | node1 |
| 10.0.0.66 | k8s-node2 | node2 |

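1. Overview

kubeadm is the tool released by the official Kubernetes community for quickly deploying a Kubernetes cluster.

The environment built here is intended for learning and for trying out Kubernetes-related software and features.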

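2. Requirements

- Three clean CentOS virtual machines, version 7.x or later
- At least 2 CPU cores and 4 GB of RAM on each of the three machines
- Network connectivity between all servers
- Swap disabled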

3. Learning goal

Learn to install a cluster with kubeadm as a base for studying Kubernetes.

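4. Environment preparation

Run the following on every node. The whole block can be copied and pasted as is, except for the hostname, which must be changed on each node.

    # 1. Stop and disable the firewall
    systemctl stop firewalld
    systemctl disable firewalld

    # 2. Disable SELinux
    sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config
    setenforce 0

    # 3. Turn off swap
    swapoff -a
    # Or add the command to /etc/profile so that it is re-applied at login
    echo "swapoff -a" >> /etc/profile
    source /etc/profile

    # 4. Server plan: append the cluster hosts (>> keeps the existing localhost entries)
    cat >> /etc/hosts << EOF
    10.0.0.63 k8s-master1
    10.0.0.65 k8s-node1
    10.0.0.66 k8s-node2
    EOF

    # 5. Set the hostname (use the matching name on each node)
    hostnamectl set-hostname k8s-master1
    bash

    # 6. Synchronize the clock once
    yum install -y ntpdate
    ntpdate time.windows.com

    # Let bridged traffic pass through iptables
    modprobe br_netfilter   # the net.bridge.* keys exist only once this module is loaded
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    sysctl --system

    # 7. Re-synchronize the clock every 5 minutes
    echo '*/5 * * * * /usr/sbin/ntpdate -u ntp.api.bz' >> /var/spool/cron/root
    systemctl restart crond.service
    crontab -l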
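Before moving on, it can help to confirm that the preparation took effect on each node. The following is a minimal sketch, not part of kubeadm: the function names are hypothetical helpers, and each check reads a file path so it can also be pointed at a test fixture.

```shell
# Hypothetical pre-flight helpers (assumed names, not kubeadm commands).

# Succeeds when no swap device is active (/proc/swaps holds only its header line).
swap_is_off() {
    swaps_file="${1:-/proc/swaps}"
    [ "$(tail -n +2 "$swaps_file" | grep -c .)" -eq 0 ]
}

# Succeeds when the given kernel flag file contains exactly 1.
flag_is_set() {
    [ "$(cat "$1" 2>/dev/null)" = "1" ]
}

# Succeeds when the SELinux config does not request enforcing mode.
# A missing config file also counts as "not enforcing".
selinux_not_enforcing() {
    config_file="${1:-/etc/selinux/config}"
    ! grep -q '^SELINUX=enforcing' "$config_file"
}

# Prints one line per failed check; returns non-zero if any check failed.
preflight_report() {
    failed=0
    swap_is_off || { echo "FAIL: swap is still enabled"; failed=1; }
    flag_is_set /proc/sys/net/bridge/bridge-nf-call-iptables \
        || { echo "FAIL: bridge-nf-call-iptables is not 1"; failed=1; }
    selinux_not_enforcing || { echo "FAIL: SELinux is set to enforcing"; failed=1; }
    [ "$failed" -eq 0 ] && echo "OK: node looks ready for kubeadm"
    return "$failed"
}
```

Calling `preflight_report` on a node prints which of the three settings still needs attention. kubeadm runs its own pre-flight checks as well, so this only catches the problems earlier.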

5. Installing Docker [all nodes]

Add the package repositories, then install and start Docker:

    # Add the repositories
    wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    wget -P /etc/yum.repos.d/ http://mirrors.aliyun.com/repo/epel-7.repo
    wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
    yum clean all
    yum install -y bash-completion.noarch

    # Install a pinned version
    yum -y install docker-ce-18.09.9-3.el7

    # Or list the available versions and pick one
    yum list docker-ce --showduplicates | sort -r

    # Start Docker
    systemctl enable docker
    systemctl start docker
    systemctl status docker

6. Configuring the Docker cgroup driver [all nodes]

kubeadm recommends the systemd cgroup driver, so set it in /etc/docker/daemon.json together with a registry mirror:

    rm -f /etc/docker/*
    sudo mkdir -p /etc/docker
    sudo tee /etc/docker/daemon.json <<-'EOF'
    {
      "registry-mirrors": ["https://ajvcw8qn.mirror.aliyuncs.com"],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF
    sudo systemctl daemon-reload
    sudo systemctl restart docker

Pull the flannel image:

    docker pull lizhenliang/flannel:v0.11.0-amd64
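To confirm that the restart picked up the new driver, Docker can be asked directly. This is a small sketch; `docker_uses_systemd` is a hypothetical helper name, while `docker info --format '{{.CgroupDriver}}'` is the standard way to print the active driver.

```shell
# Hypothetical helper: succeeds only when Docker reports the systemd cgroup driver.
docker_uses_systemd() {
    # Ask the running daemon which cgroup driver it actually uses.
    driver="$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)"
    echo "docker cgroup driver: ${driver:-unknown}"
    [ "$driver" = "systemd" ]
}
```

If it still reports cgroupfs, daemon.json was probably not read; check the file for JSON syntax errors and restart Docker again.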

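7. Registry mirror acceleration [all nodes]

Configure an additional mirror with the DaoCloud script and restart Docker:

    curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io
    systemctl restart docker

Configuring too many mirrors tends to cause errors. If something goes wrong, try deleting one of the .bak sources. Keep the mirror set by the curl command above. The Aliyun accelerator is configured at https://cr.console.aliyun.com/cn-hangzhou/instances/mirrors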

8. Configuring the Kubernetes yum repository [all nodes]

Create the repository file:

    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

9. Installing kubeadm, kubelet and kubectl [all nodes]

Pin all three packages to 1.18.0 so that they match the cluster version:

    yum install -y kubelet-1.18.0 kubeadm-1.18.0 kubectl-1.18.0
    systemctl enable kubelet

10. Deploying the Kubernetes master [master, 10.0.0.63]

Initialize the control plane:

    kubeadm init \
      --apiserver-advertise-address=10.0.0.63 \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version v1.18.0 \
      --service-cidr=10.1.0.0/16 \
      --pod-network-cidr=10.244.0.0/16

After it succeeds, set up the kubeconfig for the current user:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

The end of the init output contains the join command with the token:

    kubeadm join 10.0.0.63:6443 --token 2cdgi6.79j20fhly6xpgfud \
      --discovery-token-ca-cert-hash sha256:3d847b858ed649244b4110d4d60ffd57f43856f42ca9c22e12ca33946673ccb4

Record this command; the worker nodes use it later.

Note: kubeadm init may stop with warnings and errors like the following:

    W0507 00:43:52.681429 3118 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
    [init] Using Kubernetes version: v1.18.0
    [preflight] Running pre-flight checks
        [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
    error execution phase preflight: [preflight] Some fatal errors occurred:
        [ERROR NumCPU]: the number of available CPUs 1 is less than the required 2
    [preflight] If you know what you are doing, you can make a check non-fatal with --ignore-preflight-errors=...
    To see the stack trace of this error execute with --v=5 or higher

10.1 Troubleshooting

Error 1: the Docker cgroup driver must be changed to systemd. Add the following to /etc/docker/daemon.json:

    "exec-opts": ["native.cgroupdriver=systemd"]

Error 2: [ERROR NumCPU]: the number of available CPUs 1 is less than the required 2

kubeadm enforces a minimum CPU count. Raise the machine to 2 cores and 4 GB of RAM or more.

Error 3: joining the cluster fails:

    [root@k8s-master2 yum.repos.d]# kubeadm join 10.0.0.63:6443 --token q8bfij.fipmsxdgv8sgcyq4 \
    > --discovery-token-ca-cert-hash sha256:26fc15b6e52385074810fdbbd53d1ba23269b39ca2e3ec3bac9376ed807b595c
    W0507 01:20:26.246981 26853 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
    [preflight] Running pre-flight checks
    error execution phase preflight: [preflight] Some fatal errors occurred:
        [ERROR DirAvailable--etc-kubernetes-manifests]: /etc/kubernetes/manifests is not empty
        [ERROR FileAvailable--etc-kubernetes-kubelet.conf]: /etc/kubernetes/kubelet.conf already exists
        [ERROR FileAvailable--etc-kubernetes-pki-ca.crt]: /etc/kubernetes/pki/ca.crt already exists
    [preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
    To see the stack trace of this error execute with --v=5 or higher

Solution: the listed files are left over from an earlier kubeadm run on that machine. Run kubeadm reset, then join again.

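10.2 Configuring kubectl [master]

Set up the kubeconfig, then list the nodes:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

    # Get node information
    [root@k8s-master1 ~]# kubectl get nodes
    NAME          STATUS     ROLES    AGE     VERSION
    k8s-master1   NotReady   master   2m59s   v1.18.0
    k8s-node1     NotReady   <none>   86s     v1.18.0
    k8s-node2     NotReady   <none>   85s     v1.18.0

Seeing the status of the other hosts shows that the cluster has been formed. The nodes stay NotReady until the network plugin is installed. Another machine, k8s-master2, was not joined, because that would require a multi-master setup, which is not covered here.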

10.3 Installing the network plugin [master]

Run this on the master only. Upload kube-flannel.yaml and apply it:

    kubectl apply -f kube-flannel.yaml
    kubectl get pods -n kube-system

Download: https://www.chenleilei.net/soft/k8s/kube-flannel.yaml

Every pod must reach Running; otherwise something is wrong.

    [root@k8s-master1 ~]# kubectl get pods -n kube-system
    NAME                                  READY   STATUS    RESTARTS   AGE
    coredns-7ff77c879f-5dq4s              1/1     Running   0          13m
    coredns-7ff77c879f-v68pc              1/1     Running   0          13m
    etcd-k8s-master1                      1/1     Running   0          13m
    kube-apiserver-k8s-master1            1/1     Running   0          13m
    kube-controller-manager-k8s-master1   1/1     Running   0          13m
    kube-flannel-ds-amd64-2ktxw           1/1     Running   0          3m45s
    kube-flannel-ds-amd64-fd2cb           1/1     Running   0          3m45s
    kube-flannel-ds-amd64-hb2zr           1/1     Running   0          3m45s
    kube-proxy-4vt8f                      1/1     Running   0          13m
    kube-proxy-5nv5t                      1/1     Running   0          12m
    kube-proxy-9fgzh                      1/1     Running   0          12m
    kube-scheduler-k8s-master1            1/1     Running   0          13m

    [root@k8s-master1 ~]# kubectl get nodes
    NAME          STATUS   ROLES    AGE   VERSION
    k8s-master1   Ready    master   14m   v1.18.0
    k8s-node1     Ready    <none>   12m   v1.18.0
    k8s-node2     Ready    <none>   12m   v1.18.0
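Rather than re-running kubectl get nodes by hand until everything turns Ready, the check can be scripted. A minimal sketch under stated assumptions: both function names are hypothetical, and a node counts as ready only when its STATUS column is exactly "Ready" (so a cordoned node showing "Ready,SchedulingDisabled" is reported as pending).

```shell
# Hypothetical helper: prints the names of nodes whose STATUS is not exactly "Ready".
# Returns non-zero if kubectl itself fails.
not_ready_nodes() {
    nodes="$(kubectl get nodes --no-headers)" || return 1
    printf '%s\n' "$nodes" | awk 'NF && $2 != "Ready" { print $1 }'
}

# Hypothetical helper: polls until every node is Ready or the attempts run out.
# $1 = number of attempts (default 30), $2 = seconds between attempts (default 10).
wait_for_nodes() {
    attempts="${1:-30}"
    delay="${2:-10}"
    while [ "$attempts" -gt 0 ]; do
        if pending="$(not_ready_nodes)" && [ -z "$pending" ]; then
            echo "all nodes Ready"
            return 0
        fi
        echo "still waiting: ${pending:-no answer from the API server}"
        attempts=$((attempts - 1))
        sleep "$delay"
    done
    return 1
}
```

For example, `wait_for_nodes 30 10` waits up to five minutes after applying the flannel manifest.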

11. Joining node1 and node2 to the master

On each node that is to join the cluster, run:

    kubeadm join 10.0.0.63:6443 --token fs0uwh.7yuiawec8tov5igh \
      --discovery-token-ca-cert-hash sha256:471442895b5fb77174103553dc13a4b4681203fbff638e055ce244639342701d

This command was printed when the master was installed. Note that the CNI network plugin must be configured first.

After the nodes have joined, check from the master:

    [root@k8s-master1 docker]# kubectl get nodes
    NAME          STATUS   ROLES    AGE   VERSION
    k8s-master1   Ready    master   14m   v1.18.0
    k8s-node1     Ready    <none>   12m   v1.18.0
    k8s-node2     Ready    <none>   12m   v1.18.0

12. Creating and querying tokens

A token is kept for 24 hours by default and cannot be used once it expires. To create a new one, run the following on the master:

    kubeadm token create
    kubeadm token list
    openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

Result (the CA certificate hash):

    3d847b858ed649244b4110d4d60ffd57f43856f42ca9c22e12ca33946673ccb4

Join the cluster with the new token. The token is passed with --token, and the hash needs the sha256: prefix:

    kubeadm join 10.0.0.63:6443 --token nuja6n.o3jrhsffiqs9swnu \
      --discovery-token-ca-cert-hash sha256:63bca849e0e01691ae14eab449570284f0c3ddeea590f8da988c07fe2729e924
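A join command is easy to get wrong when it is assembled by hand from a token and a hash. The sketch below validates both parts before printing the command; `build_join_command` is a hypothetical helper name. kubeadm can also print a ready-made command itself with `kubeadm token create --print-join-command`, which is usually the simpler route.

```shell
# Hypothetical helper: assembles a join command and rejects malformed input.
# $1 = API server endpoint, $2 = bootstrap token, $3 = CA cert hash (with or without sha256:).
build_join_command() {
    endpoint="$1"
    token="$2"
    ca_hash="$3"
    # Bootstrap tokens look like <6 chars>.<16 chars>, lowercase letters and digits only.
    if ! printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "invalid token: $token" >&2
        return 1
    fi
    # Strip an optional sha256: prefix, then require 64 hex digits.
    ca_hash="${ca_hash#sha256:}"
    if ! printf '%s\n' "$ca_hash" | grep -Eq '^[0-9a-f]{64}$'; then
        echo "invalid CA hash: $ca_hash" >&2
        return 1
    fi
    echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$ca_hash"
}
```

Usage: `build_join_command 10.0.0.63:6443 "$TOKEN" "$HASH"`, where the two variables hold the output of kubeadm token create and of the openssl pipeline.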

13. Installing the dashboard

Once the dashboard manifest has been applied, its services look like this:

    [root@k8s-master1 ~]# kubectl get svc -n kubernetes-dashboard
    NAME                        TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)         AGE
    dashboard-metrics-scraper   ClusterIP   10.1.94.43     <none>        8000/TCP        7m58s
    kubernetes-dashboard        NodePort    10.1.187.162   <none>        443:30001/TCP   7m58s

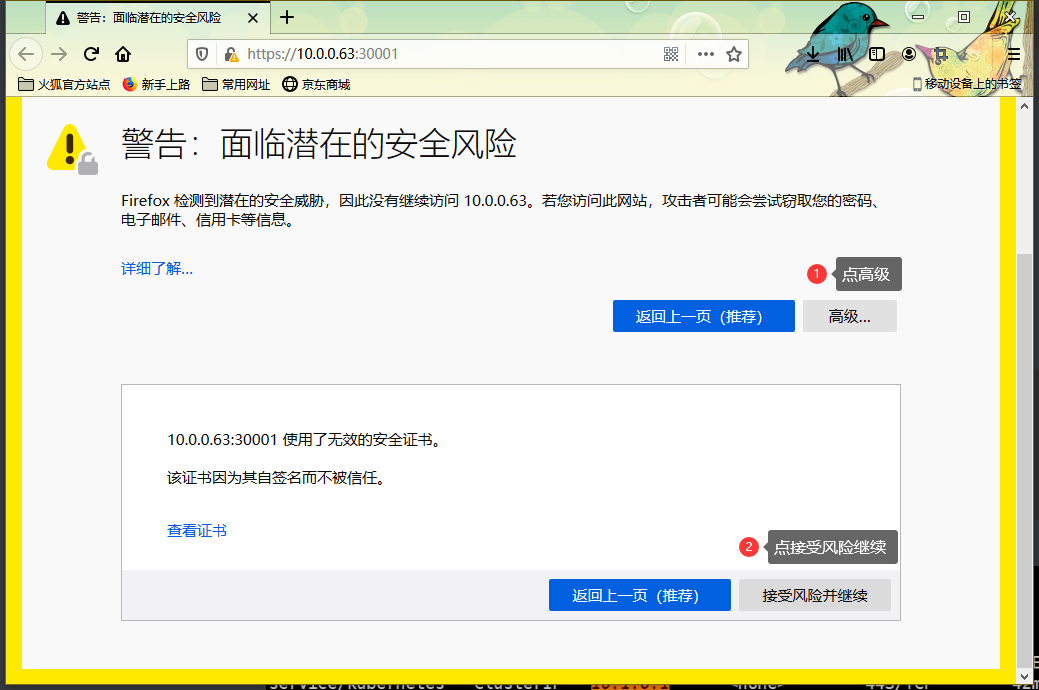

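13.1 Access test

The dashboard page is reachable on port 30001 of any cluster node (10.0.0.63, 10.0.0.65 or 10.0.0.66), whatever its role. Because the service maps port 443, open it over HTTPS.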

13.2 Getting the dashboard token: create a service account and bind it to the built-in cluster-admin cluster role

Run on the master:

    kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:dashboard-admin
    kubectl describe secrets -n kubernetes-dashboard $(kubectl -n kubernetes-dashboard get secret | awk '/dashboard-admin/{print $1}')

Copy the token from the output into the Token field shown in the screenshot above and choose token login.

14. Verifying that the cluster works

Check three things:

1. Can applications be deployed?
2. Does the cluster network work?
3. Does in-cluster DNS resolution work?

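14.1 Verifying application deployment and log queries

Deploy a test application, read its logs, then remove it:

    # Create an nginx application
    kubectl create deployment k8s-status-checke --image=nginx
    # Expose port 80
    kubectl expose deployment k8s-status-checke --port=80 --target-port=80 --type=NodePort
    # Query the logs of a pod (example pod name; take yours from kubectl get pods)
    kubectl logs -f nginx-f89759699-m5k5z
    # Delete the deployment when finished
    kubectl delete deployment k8s-status-checke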
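With --type=NodePort, Kubernetes picks the external port itself, so it has to be looked up before the application can be reached from outside. A small sketch, assuming hypothetical helper names `service_node_port` and `service_url`; the jsonpath expression reads the first port of the service.

```shell
# Hypothetical helper: prints the NodePort assigned to the first port of a service.
service_node_port() {
    kubectl get svc "$1" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Hypothetical helper: builds the URL for a node IP ($1) and a service name ($2).
service_url() {
    port="$(service_node_port "$2")" || return 1
    [ -n "$port" ] || return 1
    echo "http://$1:$port"
}

# Usage on any machine that can reach the node:
#   curl -I "$(service_url 10.0.0.65 k8s-status-checke)"
```

Any node IP works here, because a NodePort service listens on every node of the cluster.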

14.2 Verifying the cluster network

Step 1: get the address of an application:

    [root@k8s-master1 ~]# kubectl get pods -o wide
    NAME        READY   STATUS    RESTARTS   AGE   IP            NODE        NOMINATED   READINESS
    pod/nginx   1/1     Running   0          25h   10.244.2.18   k8s-node2   <none>      <none>

Step 2: ping that IP from any node:

    [root@k8s-node1 ~]# ping 10.244.2.18
    PING 10.244.2.18 (10.244.2.18) 56(84) bytes of data.
    64 bytes from 10.244.2.18: icmp_seq=1 ttl=63 time=2.63 ms
    64 bytes from 10.244.2.18: icmp_seq=2 ttl=63 time=0.515 ms

Step 3: access the application:

    [root@k8s-master1 ~]# curl -I 10.244.2.18
    HTTP/1.1 200 OK
    Server: nginx/1.17.10
    Date: Sun, 10 May 2020 13:19:02 GMT
    Content-Type: text/html
    Content-Length: 612
    Last-Modified: Tue, 14 Apr 2020 14:19:26 GMT
    Connection: keep-alive
    ETag: "5e95c66e-264"
    Accept-Ranges: bytes

Step 4: query the logs:

    [root@k8s-master1 ~]# kubectl logs -f nginx
    10.244.1.0 - - [10/May/2020:13:14:25 +0000] "GET / HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36" "-"

14.3 Verifying in-cluster DNS resolution

Check the DNS pods:

    [root@k8s-master1 ~]# kubectl get pods -n kube-system
    NAME                                  READY   STATUS    RESTARTS   AGE
    coredns-7ff77c879f-5dq4s              1/1     Running   1          4d    # DNS sometimes has problems
    coredns-7ff77c879f-v68pc              1/1     Running   1          4d    # DNS sometimes has problems
    etcd-k8s-master1                      1/1     Running   4          4d
    kube-apiserver-k8s-master1            1/1     Running   3          4d
    kube-controller-manager-k8s-master1   1/1     Running   3          4d
    kube-flannel-ds-amd64-2ktxw           1/1     Running   1          4d
    kube-flannel-ds-amd64-fd2cb           1/1     Running   1          4d
    kube-flannel-ds-amd64-hb2zr           1/1     Running   4          4d
    kube-proxy-4vt8f                      1/1     Running   4          4d
    kube-proxy-5nv5t                      1/1     Running   2          4d
    kube-proxy-9fgzh                      1/1     Running   2          4d
    kube-scheduler-k8s-master1            1/1     Running   4          4d

DNS sometimes runs into problems. To fix it, recreate CoreDNS as follows.

Step 1: export the deployment to a YAML file:

    kubectl get deploy coredns -n kube-system -o yaml > coredns.yaml

Step 2: delete CoreDNS:

    kubectl delete -f coredns.yaml

Check that the coredns pods are gone:

    [root@k8s-master1 ~]# kubectl get pods -n kube-system
    NAME                                  READY   STATUS    RESTARTS   AGE
    etcd-k8s-master1                      1/1     Running   4          4d
    kube-apiserver-k8s-master1            1/1     Running   3          4d
    kube-controller-manager-k8s-master1   1/1     Running   3          4d
    kube-flannel-ds-amd64-2ktxw           1/1     Running   1          4d
    kube-flannel-ds-amd64-fd2cb           1/1     Running   1          4d
    kube-flannel-ds-amd64-hb2zr           1/1     Running   4          4d
    kube-proxy-4vt8f                      1/1     Running   4          4d
    kube-proxy-5nv5t                      1/1     Running   2          4d
    kube-proxy-9fgzh                      1/1     Running   2          4d
    kube-scheduler-k8s-master1            1/1     Running   4          4d

Step 3: recreate CoreDNS:

    kubectl apply -f coredns.yaml

    [root@k8s-master1 ~]# kubectl get pods -n kube-system
    NAME                                  READY   STATUS    RESTARTS   AGE
    coredns-7ff77c879f-5mmjg              1/1     Running   0          13s
    coredns-7ff77c879f-t74th              1/1     Running   0          13s
    etcd-k8s-master1                      1/1     Running   4          4d
    kube-apiserver-k8s-master1            1/1     Running   3          4d
    kube-controller-manager-k8s-master1   1/1     Running   3          4d
    kube-flannel-ds-amd64-2ktxw           1/1     Running   1          4d
    kube-flannel-ds-amd64-fd2cb           1/1     Running   1          4d
    kube-flannel-ds-amd64-hb2zr           1/1     Running   4          4d
    kube-proxy-4vt8f                      1/1     Running   4          4d
    kube-proxy-5nv5t                      1/1     Running   2          4d
    kube-proxy-9fgzh                      1/1     Running   2          4d
    kube-scheduler-k8s-master1            1/1     Running   4          4d

Re-check the logs of both new pods:

    [root@k8s-master1 ~]# kubectl logs coredns-7ff77c879f-5mmjg -n kube-system
    .:53
    [INFO] plugin/reload: Running configuration MD5 = 4e235fcc3696966e76816bcd9034ebc7
    CoreDNS-1.6.7
    linux/amd64, go1.13.6, da7f65b

    [root@k8s-master1 ~]# kubectl logs coredns-7ff77c879f-t74th -n kube-system
    .:53
    [INFO] plugin/reload: Running configuration MD5 = 4e235fcc3696966e76816bcd9034ebc7
    CoreDNS-1.6.7
    linux/amd64, go1.13.6, da7f65b

Finally, start a container in the cluster to verify DNS:

    [root@k8s-master1 ~]# kubectl run -it --rm --image=busybox:1.28.4 sh
    / # nslookup kubernetes
    Server:    10.1.0.10
    Address 1: 10.1.0.10 kube-dns.kube-system.svc.cluster.local

    Name:      kubernetes
    Address 1: 10.1.0.1 kubernetes.default.svc.cluster.local

If nslookup resolves the name kubernetes, DNS is working.

15. Fixing the cluster certificate problem [solution for kubeadm deployments]

Step 1: delete the default secret and create a new one from the self-signed certificate:

    kubectl delete secret kubernetes-dashboard-certs -n kubernetes-dashboard
    kubectl create secret generic kubernetes-dashboard-certs \
      --from-file=/etc/kubernetes/pki/apiserver.key --from-file=/etc/kubernetes/pki/apiserver.crt -n kubernetes-dashboard

For a binary deployment, change the certificate paths to wherever you stored the files.

Step 2: once the certificate is in place, edit the dashboard manifest so that the dashboard is rebuilt with it:

    wget https://www.chenleilei.net/soft/k8s/recommended.yaml
    vim recommended.yaml

Find kind: Deployment, then search for args from there. You will see these two lines:

    - --auto-generate-certificates
    - --namespace=kubernetes-dashboard

Insert the two certificate lines between them:

    - --auto-generate-certificates
    - --tls-key-file=apiserver.key
    - --tls-cert-file=apiserver.crt
    - --namespace=kubernetes-dashboard

An already modified file can be downloaded directly: wget https://www.chenleilei.net/soft/k8s/dashboard.yaml

Step 3: apply recommended.yaml again:

    kubectl apply -f recommended.yaml

Applying it triggers a rolling update. Reopen the browser afterwards: the certificate is now displayed normally and the "not secure" warning is gone.

    [root@k8s-master1 ~]# kubectl get pods -n kubernetes-dashboard
    NAME                                         READY   STATUS    RESTARTS   AGE
    dashboard-metrics-scraper-694557449d-r9h5r   1/1     Running   0          2d1h
    kubernetes-dashboard-5d8766c7cc-trdsv        1/1     Running   0          93s    <--- rolling update

Troubleshooting:

Problem 1: a k8s-node reports an error when joining:

    W0315 22:16:20.123204 5795 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.
    [preflight] Running pre-flight checks
        [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/
    error execution phase preflight: [preflight] Some fatal errors occurred:
        [ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1
    [preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`
    To see the stack trace of this error execute with --v=5 or higher

Fix:

    echo "1" >/proc/sys/net/bridge/bridge-nf-call-iptables

Then join again:

    kubeadm join 10.0.0.63:6443 --token 0dr1pw.ejybkufnjpalb8k6 --discovery-token-ca-cert-hash sha256:ca1aa9cb753a26d0185e3df410cad09d8ec4af4d7432d127f503f41bc2b14f2a

The token here is generated on the kubeadm master.

Problem 2: the web page cannot be reached

Rebuild the dashboard:

    # Delete it
    kubectl delete -f dashboard.yaml
    # Create it again
    kubectl create -f dashboard.yaml
    # Create the account
    kubectl create serviceaccount dashboard-admin -n kubernetes-dashboard
    # Show the login token
    kubectl describe secrets -n kubernetes-dashboard $(kubectl -n kubernetes-dashboard get secret | awk '/dashboard-admin/{print $1}')

Then reopen the page and log in.

Problem 3: deploying the dashboard fails

This may be a network problem. Switch to a different network, for example a VPN, and deploy again.

16. Deploying nginx on Kubernetes

Expose the nginx deployment and check the result:

    [root@k8s-master1 ~]# kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort
    service/nginx exposed
    [root@k8s-master1 ~]# kubectl get pod,svc
    NAME                        READY   STATUS             RESTARTS   AGE
    pod/nginx-f89759699-dnfmg   0/1     ImagePullBackOff   0          3m41s

The pod is stuck in ImagePullBackOff. Check the pod events:

    kubectl describe pod nginx-f89759699-dnfmg

Result:

    Normal   Pulling  3m27s (x4 over 7m45s)  kubelet, k8s-node2  Pulling image "nginx"
    Warning  Failed   2m55s (x2 over 6m6s)   kubelet, k8s-node2  Failed to pull image "nginx": rpc error: code = Unknown desc = Get https://registry-1.docker.io/v2/library/nginx/manifests/sha256:cccef6d6bdea671c394956e24b0d0c44cd82dbe83f543a47fdc790fadea48422: net/http: TLS handshake timeout

Docker failed to download the image, so a different registry mirror is needed. The master already has one:

    [root@k8s-master1 ~]# cat /etc/docker/daemon.json
    {
      "registry-mirrors": ["https://ajvcw8qn.mirror.aliyuncs.com"],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }

Pulling nginx with Docker on one of the worker nodes reveals the error; the mirror had not been changed there:

    [root@k8s-node1 ~]# docker pull nginx
    Using default tag: latest
    latest: Pulling from library/nginx
    54fec2fa59d0: Pulling fs layer
    4ede6f09aefe: Pulling fs layer
    f9dc69acb465: Pulling fs layer
    Get https://registry-1.docker.io/v2/: net/http: TLS handshake timeout

After changing the mirror, the pull succeeds:

    [root@k8s-master1 ~]# docker pull nginx
    Using default tag: latest
    latest: Pulling from library/nginx
    54fec2fa59d0: Pull complete
    4ede6f09aefe: Pull complete
    f9dc69acb465: Pull complete
    Digest: sha256:86ae264c3f4acb99b2dee4d0098c40cb8c46dcf9e1148f05d3a51c4df6758c12
    Status: Downloaded newer image for nginx:latest
    docker.io/library/nginx:latest

Run the deployment again:

    kubectl delete deployment,svc nginx
    kubectl create deployment nginx --image=nginx
    kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

In summary, this is how the failed image pull was tracked down:

1. The nginx deployment fails; check the state with kubectl get pod,svc
2. Check the pod events: Failed to pull image "nginx": rpc error: code = Unknown desc = Get https://registry-...net/http: TLS handshake timeout [this failure shows that the registry mirror was not changed]
3. Change the Docker mirror to the Aliyun one in /etc/docker/daemon.json ("registry-mirrors": ["https://ajvcw8qn.mirror.aliyuncs.com"], "exec-opts": ["native.cgroupdriver=systemd"]), then restart Docker with systemctl restart docker.service
4. Pull the nginx image again with docker pull; it now succeeds
5. Delete the nginx image that Docker downloaded: docker image rm -f [image name]
6. Delete the failed nginx deployment: kubectl delete deployment nginx
7. Recreate the deployment: kubectl create deployment nginx --image=nginx
8. Expose the application again: kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort
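The first step of that investigation, spotting which pods cannot pull their image, can be scripted. A minimal sketch: `image_pull_failures` is a hypothetical helper name, and it relies on STATUS being the third column of kubectl get pods --no-headers.

```shell
# Hypothetical helper: prints the names of pods stuck on an image pull.
# Extra arguments (for example: -n kube-system) are passed through to kubectl.
image_pull_failures() {
    kubectl get pods --no-headers "$@" \
        | awk '$3 == "ImagePullBackOff" || $3 == "ErrImagePull" { print $1 }'
}

# Usage: show the events of every affected pod in the current namespace.
#   for pod in $(image_pull_failures); do
#       kubectl describe pod "$pod" | grep -E 'Pulling|Failed'
#   done
```

The events printed by kubectl describe name the node that attempted the pull, which is the machine whose Docker mirror configuration needs checking.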

17. Exposing an application

Step 1: create the deployment:

    kubectl create deployment nginx --image=nginx

Step 2: expose the application:

    kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

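18. Optimization: kubectl auto-completion

Install bash-completion and load the completion scripts into the current shell:

    yum install -y bash-completion
    source /usr/share/bash-completion/bash_completion
    source <(kubectl completion bash)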
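The source commands only affect the current shell, so completion is gone after the next login. One way to make it permanent is to add the line to ~/.bashrc, taking care not to add it twice. This is a sketch; `append_once` is a hypothetical helper name.

```shell
# Hypothetical helper: appends a line to a file only if that exact line is not there yet.
# $1 = file, $2 = line to add.
append_once() {
    file="$1"
    line="$2"
    touch "$file"
    # -x matches whole lines, -F treats the text literally, -- guards lines starting with "-".
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Usage:
#   append_once "$HOME/.bashrc" 'source <(kubectl completion bash)'
```

Because the helper is idempotent, it is safe to keep it in a provisioning script that runs more than once.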

19. Issues covered in this section

I. Handling an expired token

A token created by kubeadm expires after 24 hours, after which new nodes can no longer join the cluster with it. Generate a new one on the master:

    kubeadm token create
    kubeadm token list

Compute the CA certificate hash:

    openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

    3d847b858ed649244b4110d4d60ffd57f43856f42ca9c22e12ca33946673ccb4

Then let the new server join with the new token:

    kubeadm join 10.0.0.63:6443 --token 0dr1pw.ejybkufnjpalb8k6 --discovery-token-ca-cert-hash sha256:3d847b858ed649244b4110d4d60ffd57f43856f42ca9c22e12ca33946673ccb4

II. Getting the dashboard login token

    kubectl describe secrets -n kubernetes-dashboard $(kubectl -n kubernetes-dashboard get secret | awk '/dashboard-admin/{print $1}')

III. Troubleshooting a failed image pull

Follow the eight-step summary at the end of section 16: check the pods, read the events, switch the Docker mirror, confirm with docker pull, then delete and recreate the deployment and its service.

20. YAML attachments [save the files with the .yaml extension]

https://www.chenleilei.net/soft/kubeadm快速部署一个Kubernetes集群yaml.zip
