Problem After Uninstalling Cloudera: Cannot Add HDFS, Error Reports Cannot Find CDH's bigtop-detect-javahome

1. Problem

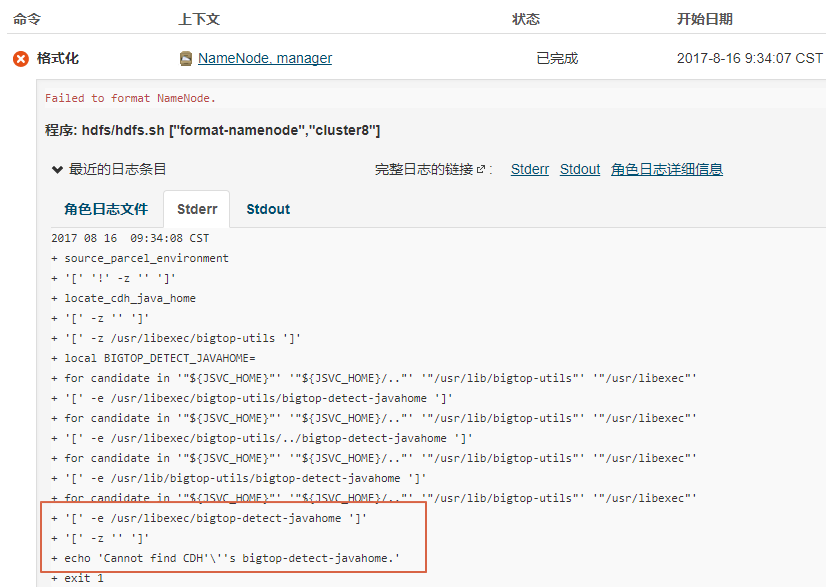

We wrote a shell script to uninstall Cloudera Manager (CM) and ran it on a cluster of three Linux servers. After running the script, we reinstalled CM without trouble. But when we added the HDFS service, it failed with this error: **Failed to format NameNode. Cannot find CDH's bigtop-detect-javahome.**

2. Thinking

- By googling, we found that the problem might be caused by an existing `/dfs` folder, but after running `rm -rf /dfs` the problem persisted.

- The error message mentioned `/usr/libexec/bigtop-detect-javahome`, so we suspected a bad JAVA_HOME variable. First, we commented out the JAVA_HOME variable in `/etc/profile`. To make the change take effect, we ran `source /etc/profile` and reconnected the Xshell session. However, the problem persisted.

- Rechecking the uninstall script, we found that it deletes `/usr/bin/hadoop*`, which turned out to be important.

`/etc/profile` now looks as follows:

    #export JAVA_HOME=/usr/java/jdk1.7.0_67-cloudera
    #export PATH=$JAVA_HOME/bin:$PATH
    #export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
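
Since the root cause was a wildcard delete in the uninstall script, it can help to preview what a pattern such as `/usr/bin/hadoop*` matches before removing anything. The following is a minimal sketch, not the script we actually used; `preview_delete` is a hypothetical helper name, and it assumes the paths contain no spaces.

```shell
# Hypothetical helper: print what a glob pattern would remove, without deleting.
# Usage: preview_delete '/usr/bin/hadoop*'
preview_delete() {
    pattern=$1
    # $pattern is left unquoted on purpose so the shell expands the glob.
    for path in $pattern; do
        # An unmatched glob stays literal, so check that the path exists.
        if [ -e "$path" ] || [ -L "$path" ]; then
            echo "would remove: $path"
        fi
    done
}
```

Running `preview_delete '/usr/bin/hadoop*'` on a healthy node lists every file the pattern would hit, which makes it easier to spot entries that should be kept.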

3. Solution

Perform the following steps on each server in the cluster.

3.1 Check that the hadoop file is available

cd to the folder `/usr/bin` and check whether the file `hadoop` is available. Its content is as follows:

    #!/bin/bash
    # Autodetect JAVA_HOME if not defined
    . /usr/lib/bigtop-utils/bigtop-detect-javahome
    export HADOOP_HOME=/usr/lib/hadoop
    export HADOOP_MAPRED_HOME=/usr/lib/hadoop
    export HADOOP_LIBEXEC_DIR=//usr/lib/hadoop/libexec
    export HADOOP_CONF_DIR=/etc/hadoop/conf
    exec /usr/lib/hadoop/bin/hadoop "$@"

We found that it loads the file `bigtop-detect-javahome` and sets HADOOP_HOME.

Unfortunately, both `/usr/bin/hadoop*` and `/usr/lib/hadoop*` had been deleted by our uninstall script, so we copied those files and folders from another cluster to ours.
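
To confirm on each node that the copied files are in place, a small check like the one below can be used. It is only a sketch: `check_cdh_files` is a hypothetical name, the three paths are the ones that appear in the wrapper script above, and the optional root prefix exists only so the function can be tried against a scratch directory.

```shell
# Report whether the files referenced by /usr/bin/hadoop exist.
# Usage: check_cdh_files [root_prefix]
# Prints OK or MISSING per path and returns 1 if anything is missing.
check_cdh_files() {
    root=${1:-}
    missing=0
    for path in \
        /usr/bin/hadoop \
        /usr/lib/hadoop/bin/hadoop \
        /usr/lib/bigtop-utils/bigtop-detect-javahome
    do
        if [ -e "$root$path" ]; then
            echo "OK      $root$path"
        else
            echo "MISSING $root$path"
            missing=1
        fi
    done
    return $missing
}
```

Calling `check_cdh_files` with no argument checks the real system paths.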

3.2 Check that the bigtop-detect-javahome file is available

cd to the folder `/usr/lib/bigtop-utils` and check whether the file `bigtop-detect-javahome` is available. Its content is as follows:

    # attempt to find java
    if [ -z "${JAVA_HOME}" ]; then
      for candidate_regex in ${JAVA_HOME_CANDIDATES[@]}; do
        for candidate in `ls -rvd ${candidate_regex}* 2>/dev/null`; do
          if [ -e ${candidate}/bin/java ]; then
            export JAVA_HOME=${candidate}
            break 2
          fi
        done
      done
    fi

This file sets the JAVA_HOME variable when it is not already defined, for example when `/etc/profile` does not export it.

In the end, I found that HDFS can be installed whether or not `/etc/profile` defines JAVA_HOME.
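
To see how the detection loop above behaves, here is a simplified stand-alone sketch of the same idea. It is not the CDH file itself: `detect_java_home` is a hypothetical function, the candidate prefixes are passed as arguments instead of coming from `JAVA_HOME_CANDIDATES`, and it uses `ls -rd` rather than `ls -rvd` because the `-v` version sort is a GNU extension.

```shell
# Print the first candidate directory that contains bin/java.
# Arguments are path prefixes, e.g. /usr/java/jdk1.7 (example value only).
detect_java_home() {
    for prefix in "$@"; do
        # Expand prefix* and walk the matches in reverse order,
        # so names that sort later are tried first.
        for candidate in $(ls -rd "$prefix"* 2>/dev/null); do
            if [ -e "$candidate/bin/java" ]; then
                echo "$candidate"
                return 0
            fi
        done
    done
    # No candidate contained bin/java.
    return 1
}
```

For example, `detect_java_home /usr/java/jdk1.7` prints the matching JDK directory if one exists and returns a non-zero status otherwise.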

3.3 Going further

The first two steps are enough to solve the problem, but more googling taught me that `/etc/default/` is the real config folder!

Reference: http://blog.sina.com.cn/s/blog_5d9aca630101pxr1.html

Add `export JAVA_HOME=path` to `/etc/default/bigtop-utils`, then run `source /etc/default/bigtop-utils`.

You only need to do this once, and all CDH components will use that variable to figure out where the JDK is.
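
Because this has to be repeated on every server, it may be convenient to make the edit idempotent. The sketch below is one way to do that under simple assumptions: `set_bigtop_java_home` is a hypothetical helper, it only looks for a line starting with `export JAVA_HOME=`, and it never rewrites an existing value.

```shell
# Append "export JAVA_HOME=<path>" to the defaults file unless such a line exists.
# Usage: set_bigtop_java_home <java_home> [file]
set_bigtop_java_home() {
    java_home=$1
    file=${2:-/etc/default/bigtop-utils}
    if grep -q '^export JAVA_HOME=' "$file" 2>/dev/null; then
        # Leave an existing setting untouched.
        echo "JAVA_HOME already set in $file"
    else
        echo "export JAVA_HOME=$java_home" >> "$file"
    fi
}
```

With the JDK path from our `/etc/profile`, the call would be `set_bigtop_java_home /usr/java/jdk1.7.0_67-cloudera`, run as a user allowed to write to `/etc/default`.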

4. Conclusion

- Always pay attention to the log file content and how it relates to the error.

- Make the best use of your time and do not hesitate to ask others for advice; otherwise, the problem will persist.
