Object detection with TensorFlow pretrained models (part 2): using the pretrained models

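1. Running the example

Official tutorial: https://github.com/tensorflow/models/blob/master/research/object_detection/object_detection_tutorial.ipynb. I kept running into problems with it and could not get it to run, so I started from code someone else had already written.

Code that works on Ubuntu: https://gitee.com/bubbleit/JianDanWuTiShiBie. Run it with Python 2; Python 3 may cause problems.

This code is derived from https://gitee.com/talengu/JianDanWuTiShiBie/tree/master, with some adjustments and changes of my own. The code is in ODtest.py, and the pretrained model is stored in /ssd_mobilenet_v1_coco_11_06_2017.

The original code is as follows: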

    # -*- coding: utf-8 -*-
    import numpy as np
    from matplotlib import pyplot as plt
    import os
    # Turn off TensorFlow C++ warnings (set before tensorflow is imported)
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
    import tensorflow as tf
    from PIL import Image
    from utils import label_map_util
    from utils import visualization_utils as vis_util
    import datetime

    detection_graph = tf.Graph()


    # Load the model ------------------------------------------------------------
    def loading():
        with detection_graph.as_default():
            od_graph_def = tf.GraphDef()
            PATH_TO_CKPT = 'ssd_mobilenet_v1_coco_11_06_2017' + '/frozen_inference_graph.pb'
            with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
                serialized_graph = fid.read()
                od_graph_def.ParseFromString(serialized_graph)
                tf.import_graph_def(od_graph_def, name='')
        return detection_graph


    # Detection -----------------------------------------------------------------
    def load_image_into_numpy_array(image):
        (im_width, im_height) = image.size
        return np.array(image.getdata()).reshape(
            (im_height, im_width, 3)).astype(np.uint8)


    # Label map used to attach the correct label to each box.
    PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')
    label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
    categories = label_map_util.convert_label_map_to_categories(
        label_map, max_num_classes=90, use_display_name=True)
    category_index = label_map_util.create_category_index(categories)


    def Detection(image_path="images/image1.jpg"):
        loading()
        with detection_graph.as_default():
            with tf.Session(graph=detection_graph) as sess:
                # for image_path in TEST_IMAGE_PATHS:
                image = Image.open(image_path)
                # The array representation is used later to draw boxes and labels.
                image_np = load_image_into_numpy_array(image)
                # The model expects images with shape [1, None, None, 3].
                image_np_expanded = np.expand_dims(image_np, axis=0)
                image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')
                # Each box is a region of the image where an object was detected.
                boxes = detection_graph.get_tensor_by_name('detection_boxes:0')
                # Each score is the confidence for one object; it is drawn on the
                # result image together with the class label.
                scores = detection_graph.get_tensor_by_name('detection_scores:0')
                classes = detection_graph.get_tensor_by_name('detection_classes:0')
                num_detections = detection_graph.get_tensor_by_name('num_detections:0')
                # Actual detection.
                (boxes, scores, classes, num_detections) = sess.run(
                    [boxes, scores, classes, num_detections],
                    feed_dict={image_tensor: image_np_expanded})
                # Draw the detection results on the image.
                vis_util.visualize_boxes_and_labels_on_image_array(
                    image_np,
                    np.squeeze(boxes),
                    np.squeeze(classes).astype(np.int32),
                    np.squeeze(scores),
                    category_index,
                    use_normalized_coordinates=True,
                    line_thickness=8)
                # Print the top 3 results ("object: <name> probability: <score>").
                for i in range(3):
                    if classes[0][i] in category_index.keys():
                        class_name = category_index[classes[0][i]]['name']
                    else:
                        class_name = 'N/A'
                    print("物体:%s 概率:%s" % (class_name, scores[0][i]))
                # Show the image with matplotlib.
                IMAGE_SIZE = (20, 12)  # size of the output image, in inches
                plt.figure(figsize=IMAGE_SIZE)
                plt.imshow(image_np)
                plt.show()


    # Run
    Detection()
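Before calling `sess.run`, the listing turns the PIL image into a `uint8` array of shape `(height, width, 3)` and then adds a batch axis, because `image_tensor:0` expects `[1, None, None, 3]`. The sketch below reproduces only that reshaping with NumPy, using a fake flat pixel list in place of `image.getdata()` so the shapes can be checked without TensorFlow or PIL; `to_batched_array` is a hypothetical helper name.

```python
import numpy as np


def to_batched_array(pixels, width, height):
    """Mimic load_image_into_numpy_array + np.expand_dims from the listing.

    `pixels` stands in for PIL's image.getdata(): a flat, row-major
    sequence of (R, G, B) tuples.
    """
    # PIL reports (width, height), but the array layout is (H, W, 3).
    image_np = np.array(pixels).reshape((height, width, 3)).astype(np.uint8)
    # Add the batch dimension expected by 'image_tensor:0': [1, H, W, 3].
    return np.expand_dims(image_np, axis=0)


if __name__ == '__main__':
    # A fake RGB image 2 pixels wide and 3 pixels high.
    fake_pixels = [(i, i, i) for i in range(6)]
    batch = to_batched_array(fake_pixels, width=2, height=3)
    print(batch.shape)  # (1, 3, 2, 3)
```

The reshape assumes exactly three channels: an RGBA or grayscale image makes it fail, and calling `image.convert('RGB')` right after `Image.open` is one way to normalize the input.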

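After cloning the repository locally with git clone, running it produced several errors.

Problem 1

Error message: UnicodeDecodeError: 'ascii' codec can't decode byte 0xe5 in position 1: ordinal not in range(128)

Solution: see https://www.cnblogs.com/QuLory/p/3615584.html

The main problem is the Unicode decoding error on the last line above. According to what I found online, Python 2 uses ASCII rather than UTF-8 as its default encoding, so non-ASCII bytes (such as Chinese text) cannot be decoded implicitly, which causes the error.

Adding the following lines to the code fixes it: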

    import sys
    reload(sys)  # Python 2 only
    sys.setdefaultencoding('utf8')
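`reload(sys)` and `sys.setdefaultencoding` only exist in Python 2; in Python 3 `reload` is no longer a builtin, `setdefaultencoding` is gone, and the default encoding is already UTF-8. A less global alternative, offered as an assumption rather than the fix used in this post, is to make string literals unicode with `from __future__ import unicode_literals`, so the Chinese format string no longer has to be implicitly decoded with ASCII. `format_detection` is a hypothetical helper:

```python
# -*- coding: utf-8 -*-
from __future__ import unicode_literals


def format_detection(class_name, score):
    # With unicode_literals this literal is unicode on Python 2 as well,
    # so formatting it does not trigger an implicit ASCII decode.
    return "物体:%s 概率:%s" % (class_name, score)
```

Whether the resulting unicode string prints cleanly under Python 2 still depends on the encoding of the terminal or pipe that stdout is attached to.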

Problem 2

Error message: _tkinter.TclError: no display name and no $DISPLAY environment variable. Details:

    Traceback (most recent call last):
      File "ODtest.py", line 103, in <module>
        Detection()
      File "ODtest.py", line 96, in Detection
        plt.figure(figsize=IMAGE_SIZE)
      File "/usr/local/lib/python2.7/dist-packages/matplotlib/pyplot.py", line 533, in figure
        **kwargs)
      File "/usr/local/lib/python2.7/dist-packages/matplotlib/backend_bases.py", line 161, in new_figure_manager
        return cls.new_figure_manager_given_figure(num, fig)
      File "/usr/local/lib/python2.7/dist-packages/matplotlib/backends/_backend_tk.py", line 1046, in new_figure_manager_given_figure
        window = Tk.Tk(className="matplotlib")
      File "/usr/lib/python2.7/lib-tk/Tkinter.py", line 1822, in __init__
        self.tk = _tkinter.create(screenName, baseName, className, interactive, wantobjects, useTk, sync, use)
    _tkinter.TclError: no display name and no $DISPLAY environment variable

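Solution: see https://blog.csdn.net/qq_22194315/article/details/77984423

A code-only solution

This is also the solution most people find on Q&A sites such as Stack Overflow: before importing pyplot or pylab, switch matplotlib's backend to "Agg". Agg is a non-interactive backend that renders off-screen, so it does not need a display. Here is the code: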

    # do this before importing pylab or pyplot
    import matplotlib
    matplotlib.use('Agg')
    import matplotlib.pyplot as plt
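Calling `matplotlib.use('Agg')` unconditionally means no window ever opens, even on a machine that has a display. A small sketch, assuming that an empty or missing `DISPLAY` environment variable is a good enough sign of a headless session and that the Tk backend seen in the traceback above is what you want otherwise; `pick_backend` is a hypothetical helper:

```python
import os


def pick_backend(environ=None):
    """Return 'TkAgg' when an X display is available, else 'Agg'."""
    if environ is None:
        environ = os.environ
    # No DISPLAY (e.g. an SSH session without X forwarding): render off-screen.
    return 'TkAgg' if environ.get('DISPLAY') else 'Agg'


# Usage, before pyplot is imported:
#   import matplotlib
#   matplotlib.use(pick_backend())
#   import matplotlib.pyplot as plt
```

matplotlib also reads the `MPLBACKEND` environment variable, so running `MPLBACKEND=Agg python ODtest.py` selects the backend without changing the code.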

The modified code:

    #!/usr/bin/python
    # -*- coding: utf-8 -*-
    import numpy as np
    import matplotlib
    # Fix for Problem 2: switch to the Agg backend before importing pyplot
    matplotlib.use('Agg')
    from matplotlib import pyplot as plt
    import os
    # Turn off TensorFlow C++ warnings (set before tensorflow is imported)
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
    import tensorflow as tf
    from PIL import Image
    from utils import label_map_util
    from utils import visualization_utils as vis_util
    import datetime
    import sys
    # Fix for Problem 1 (Python 2 only): make UTF-8 the default encoding
    reload(sys)
    sys.setdefaultencoding('utf8')

    detection_graph = tf.Graph()


    # Load the model ------------------------------------------------------------
    def loading():
        with detection_graph.as_default():
            od_graph_def = tf.GraphDef()
            PATH_TO_CKPT = 'ssd_mobilenet_v1_coco_11_06_2017' + '/frozen_inference_graph.pb'
            with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
                serialized_graph = fid.read()
                od_graph_def.ParseFromString(serialized_graph)
                tf.import_graph_def(od_graph_def, name='')
        return detection_graph


    # Detection -----------------------------------------------------------------
    def load_image_into_numpy_array(image):
        (im_width, im_height) = image.size
        return np.array(image.getdata()).reshape(
            (im_height, im_width, 3)).astype(np.uint8)


    # Label map used to attach the correct label to each box.
    PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')
    label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
    categories = label_map_util.convert_label_map_to_categories(
        label_map, max_num_classes=90, use_display_name=True)
    category_index = label_map_util.create_category_index(categories)


    def Detection(image_path="images/image1.jpg"):
        loading()
        with detection_graph.as_default():
            with tf.Session(graph=detection_graph) as sess:
                # for image_path in TEST_IMAGE_PATHS:
                image = Image.open(image_path)
                # The array representation is used later to draw boxes and labels.
                image_np = load_image_into_numpy_array(image)
                # The model expects images with shape [1, None, None, 3].
                image_np_expanded = np.expand_dims(image_np, axis=0)
                image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')
                # Boxes, confidence scores and class ids of the detected objects.
                boxes = detection_graph.get_tensor_by_name('detection_boxes:0')
                scores = detection_graph.get_tensor_by_name('detection_scores:0')
                classes = detection_graph.get_tensor_by_name('detection_classes:0')
                num_detections = detection_graph.get_tensor_by_name('num_detections:0')
                # Actual detection.
                (boxes, scores, classes, num_detections) = sess.run(
                    [boxes, scores, classes, num_detections],
                    feed_dict={image_tensor: image_np_expanded})
                # Draw the detection results on the image.
                vis_util.visualize_boxes_and_labels_on_image_array(
                    image_np,
                    np.squeeze(boxes),
                    np.squeeze(classes).astype(np.int32),
                    np.squeeze(scores),
                    category_index,
                    use_normalized_coordinates=True,
                    line_thickness=8)
                # Print the top 3 results ("gailv" is pinyin for "probability").
                for i in range(3):
                    if classes[0][i] in category_index.keys():
                        class_name = category_index[classes[0][i]]['name']
                    else:
                        class_name = 'N/A'
                    print("object:%s gailv:%s" % (class_name, scores[0][i]))
                # Show the image with matplotlib. Under Agg, plt.show() opens no
                # window; use plt.savefig(...) to write the image to a file.
                IMAGE_SIZE = (20, 12)  # size of the output image, in inches
                plt.figure(figsize=IMAGE_SIZE)
                plt.imshow(image_np)
                plt.show()


    # Run
    Detection()

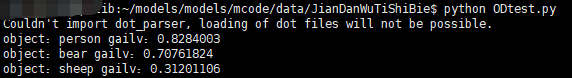

运行结果:

如无意外,加上时间统计函数,调用已下载好的预训练模型即可

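2. Using the pretrained models

Using ssd_mobilenet_v1_coco_11_06_2017

Modify the code above. First, change the PATH_TO_LABELS path: the models repository includes mscoco_label_map.pbtxt in the /models/research/object_detection/data directory. Second, add object_detection in front of utils in the imports, as in from object_detection.utils import label_map_util; this import only resolves when models/research is on PYTHONPATH.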
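The code below hard-codes `/home/yanjieliu/...` for both the frozen graph and the label map. One way to keep the two in sync when the project moves to another machine is to derive both from a single `models/research` root. The sketch assumes the directory layout used in this post (`object_detection/pretrained_models/<model>/frozen_inference_graph.pb` and `object_detection/data/mscoco_label_map.pbtxt`); the helper `model_paths` and the `OD_RESEARCH_ROOT` environment variable are hypothetical.

```python
import os

# Hypothetical default: the models/research checkout used in this post.
DEFAULT_ROOT = '/home/yanjieliu/models/models/research'


def model_paths(model_name, root=None):
    """Return (frozen_graph_path, label_map_path) below one research root.

    OD_RESEARCH_ROOT is a made-up environment variable for overriding the
    root; the directory layout is the one assumed in this post.
    """
    if root is None:
        root = os.environ.get('OD_RESEARCH_ROOT', DEFAULT_ROOT)
    od_dir = os.path.join(root, 'object_detection')
    ckpt = os.path.join(od_dir, 'pretrained_models', model_name,
                        'frozen_inference_graph.pb')
    labels = os.path.join(od_dir, 'data', 'mscoco_label_map.pbtxt')
    return ckpt, labels
```

For example, `PATH_TO_CKPT, PATH_TO_LABELS = model_paths('ssd_mobilenet_v1_coco_11_06_2017')` would replace the two hard-coded assignments.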

The modified code is at /models/models/mcode/ODtest.py:

    #!/usr/bin/python
    # -*- coding: utf-8 -*-
    import numpy as np
    import matplotlib
    matplotlib.use('Agg')  # non-interactive backend, no display needed
    from matplotlib import pyplot as plt
    import os
    # Turn off TensorFlow C++ warnings (set before tensorflow is imported)
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
    import tensorflow as tf
    from PIL import Image
    from object_detection.utils import label_map_util
    from object_detection.utils import visualization_utils as vis_util
    import datetime
    import sys
    reload(sys)  # Python 2 only
    sys.setdefaultencoding('utf8')

    detection_graph = tf.Graph()


    # Load the model ------------------------------------------------------------
    def loading():
        with detection_graph.as_default():
            od_graph_def = tf.GraphDef()
            PATH_TO_CKPT = '/home/yanjieliu/models/models/research/object_detection/pretrained_models/ssd_mobilenet_v1_coco_11_06_2017' + '/frozen_inference_graph.pb'
            with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
                serialized_graph = fid.read()
                od_graph_def.ParseFromString(serialized_graph)
                tf.import_graph_def(od_graph_def, name='')
        return detection_graph


    # Detection -----------------------------------------------------------------
    def load_image_into_numpy_array(image):
        (im_width, im_height) = image.size
        return np.array(image.getdata()).reshape(
            (im_height, im_width, 3)).astype(np.uint8)


    # Label map used to attach the correct label to each box.
    PATH_TO_LABELS = os.path.join('/home/yanjieliu/models/models/research/object_detection/data', 'mscoco_label_map.pbtxt')
    label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
    categories = label_map_util.convert_label_map_to_categories(
        label_map, max_num_classes=90, use_display_name=True)
    category_index = label_map_util.create_category_index(categories)


    def Detection(image_path="/home/yanjieliu/dataset/img_test/p1.jpg"):
        loading()
        with detection_graph.as_default():
            with tf.Session(graph=detection_graph) as sess:
                # for image_path in TEST_IMAGE_PATHS:
                image = Image.open(image_path)
                # The array representation is used later to draw boxes and labels.
                image_np = load_image_into_numpy_array(image)
                # The model expects images with shape [1, None, None, 3].
                image_np_expanded = np.expand_dims(image_np, axis=0)
                image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')
                # Boxes, confidence scores and class ids of the detected objects.
                boxes = detection_graph.get_tensor_by_name('detection_boxes:0')
                scores = detection_graph.get_tensor_by_name('detection_scores:0')
                classes = detection_graph.get_tensor_by_name('detection_classes:0')
                num_detections = detection_graph.get_tensor_by_name('num_detections:0')
                # Actual detection.
                (boxes, scores, classes, num_detections) = sess.run(
                    [boxes, scores, classes, num_detections],
                    feed_dict={image_tensor: image_np_expanded})
                # Draw the detection results on the image.
                vis_util.visualize_boxes_and_labels_on_image_array(
                    image_np,
                    np.squeeze(boxes),
                    np.squeeze(classes).astype(np.int32),
                    np.squeeze(scores),
                    category_index,
                    use_normalized_coordinates=True,
                    line_thickness=8)
                # Print the top 3 results.
                for i in range(3):
                    if classes[0][i] in category_index.keys():
                        class_name = category_index[classes[0][i]]['name']
                    else:
                        class_name = 'N/A'
                    print("object:%s gailv:%s" % (class_name, scores[0][i]))
                # Show the image with matplotlib (opens no window under Agg).
                IMAGE_SIZE = (20, 12)  # size of the output image, in inches
                plt.figure(figsize=IMAGE_SIZE)
                plt.imshow(image_np)
                plt.show()


    # Run
    Detection()

This runs correctly.
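The script prints only class names and scores. The `detection_boxes` output holds normalized `[ymin, xmin, ymax, xmax]` values in the range [0, 1], which is why the drawing call passes `use_normalized_coordinates=True`. If pixel coordinates are needed (for cropping, say), a minimal NumPy sketch follows; `boxes_to_pixels` is a hypothetical name, and `im_width`/`im_height` are meant to come from `image.size`, as in `load_image_into_numpy_array`.

```python
import numpy as np


def boxes_to_pixels(boxes, im_width, im_height):
    """Convert normalized [ymin, xmin, ymax, xmax] boxes to pixel coordinates.

    `boxes` has shape [N, 4], e.g. np.squeeze(boxes) after sess.run.
    Returns an integer array whose rows are [left, top, right, bottom].
    """
    boxes = np.asarray(boxes, dtype=np.float64)
    ymin, xmin, ymax, xmax = boxes[:, 0], boxes[:, 1], boxes[:, 2], boxes[:, 3]
    pixels = np.stack([xmin * im_width, ymin * im_height,
                       xmax * im_width, ymax * im_height], axis=1)
    return np.round(pixels).astype(np.int64)
```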

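To use the script at http://www.baiguangnan.com/2018/02/12/objectdetection2/, some changes are needed, otherwise it raises errors; this is still to do.

Next, a version that takes command-line arguments and appends the run time to a txt file. It is a partial modification of ODtest.py, saved as 1to10test.py: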

    #!/usr/bin/python
    # -*- coding: utf-8 -*-
    import numpy as np
    import matplotlib
    matplotlib.use('Agg')  # non-interactive backend, no display needed
    from matplotlib import pyplot as plt
    import os
    # Turn off TensorFlow C++ warnings (set before tensorflow is imported)
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
    import tensorflow as tf
    from PIL import Image
    from object_detection.utils import label_map_util
    from object_detection.utils import visualization_utils as vis_util
    import datetime
    import time
    import argparse
    import sys
    reload(sys)  # Python 2 only
    sys.setdefaultencoding('utf8')

    detection_graph = tf.Graph()


    # Load the model ------------------------------------------------------------
    def loading(model_name):
        with detection_graph.as_default():
            od_graph_def = tf.GraphDef()
            PATH_TO_CKPT = '/home/yanjieliu/models/models/research/object_detection/pretrained_models/' + model_name + '/frozen_inference_graph.pb'
            with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
                serialized_graph = fid.read()
                od_graph_def.ParseFromString(serialized_graph)
                tf.import_graph_def(od_graph_def, name='')
        return detection_graph


    # Detection -----------------------------------------------------------------
    def load_image_into_numpy_array(image):
        (im_width, im_height) = image.size
        return np.array(image.getdata()).reshape(
            (im_height, im_width, 3)).astype(np.uint8)


    # Label map used to attach the correct label to each box.
    PATH_TO_LABELS = os.path.join('/home/yanjieliu/models/models/research/object_detection/data', 'mscoco_label_map.pbtxt')
    label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
    categories = label_map_util.convert_label_map_to_categories(
        label_map, max_num_classes=90, use_display_name=True)
    category_index = label_map_util.create_category_index(categories)


    def Detection(args):
        image_path = args.image_path
        loading(args.model_name)
        # start = time.time()
        with detection_graph.as_default():
            with tf.Session(graph=detection_graph) as sess:
                # for image_path in TEST_IMAGE_PATHS:
                image = Image.open(image_path)
                # The array representation is used later to draw boxes and labels.
                image_np = load_image_into_numpy_array(image)
                # The model expects images with shape [1, None, None, 3].
                image_np_expanded = np.expand_dims(image_np, axis=0)
                image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')
                # Boxes, confidence scores and class ids of the detected objects.
                boxes = detection_graph.get_tensor_by_name('detection_boxes:0')
                scores = detection_graph.get_tensor_by_name('detection_scores:0')
                classes = detection_graph.get_tensor_by_name('detection_classes:0')
                num_detections = detection_graph.get_tensor_by_name('num_detections:0')
                # Actual detection.
                (boxes, scores, classes, num_detections) = sess.run(
                    [boxes, scores, classes, num_detections],
                    feed_dict={image_tensor: image_np_expanded})
                # Draw the detection results on the image.
                vis_util.visualize_boxes_and_labels_on_image_array(
                    image_np,
                    np.squeeze(boxes),
                    np.squeeze(classes).astype(np.int32),
                    np.squeeze(scores),
                    category_index,
                    use_normalized_coordinates=True,
                    line_thickness=8)
                # Print the top 3 results.
                for i in range(3):
                    if classes[0][i] in category_index.keys():
                        class_name = category_index[classes[0][i]]['name']
                    else:
                        class_name = 'N/A'
                    print("object:%s gailv:%s" % (class_name, scores[0][i]))
                # Show the image with matplotlib (opens no window under Agg).
                IMAGE_SIZE = (20, 12)  # size of the output image, in inches
                plt.figure(figsize=IMAGE_SIZE)
                plt.imshow(image_np)
                plt.show()


    def parse_args():
        '''parse args'''
        parser = argparse.ArgumentParser()
        parser.add_argument('--image_path', default='/home/yanjieliu/dataset/img_test/p4.jpg')
        parser.add_argument('--model_name',
                            default='mask_rcnn_inception_resnet_v2_atrous_coco_2018_01_28')
        return parser.parse_args()


    # Run
    start = time.time()
    Detection(parse_args())
    end = time.time()
    print('time:\n')
    print str(end - start)
    # Append the elapsed time; the ./outputs directory must already exist.
    with open('./outputs/1to10test_outputs.txt', 'a') as f:
        f.write('\n')
        f.write(str(end - start))
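Because `start` is taken before `Detection(...)` is called, the recorded time covers loading the frozen graph in `loading()`, creating the session, reading the image and drawing the boxes, not only the forward pass; the first `sess.run` in a fresh session is also typically slower than later ones. To report these costs separately, one option is a small timing context manager such as the hypothetical `timed` below, built only on the standard library; the `time.sleep` calls merely stand in for `loading(...)` and `sess.run(...)`.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results):
    """Store the wall-clock seconds spent in the with-block in results[label]."""
    start = time.time()
    try:
        yield
    finally:
        results[label] = time.time() - start


if __name__ == '__main__':
    timings = {}
    with timed('load', timings):
        time.sleep(0.05)   # stand-in for loading(args.model_name)
    with timed('inference', timings):
        time.sleep(0.01)   # stand-in for sess.run(...)
    for name in sorted(timings):
        print('%s: %.3f s' % (name, timings[name]))
```

Inside `Detection`, wrapping `loading(...)` and the `sess.run(...)` call in two such blocks and writing both values to the output file would separate model-loading time from inference time.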

Run it with python 1to10test_new.py --image_path /home/yanjieliu/dataset/img_test/p5.jpg --model_name mask_rcnn_inception_resnet_v2_atrous_coco_2018_01_28

Alternatively, write a shell script that runs this command 10 times, then compute the average run time.

For example:

    #!/bin/bash
    for i in $(seq 1 10)
    do
        python 1to10test_new.py --image_path /home/yanjieliu/dataset/img_test/p4.jpg --model_name mask_rcnn_inception_resnet_v2_atrous_coco_2018_01_28
    done
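Each run appends a newline followed by the elapsed seconds to `./outputs/1to10test_outputs.txt`; the `outputs` directory has to exist beforehand, because `open(..., 'a')` creates the file but not its parent directory. Once the loop finishes, the average can be computed with a few lines of Python. The sketch below is one way to do it; `average_time` is a hypothetical helper that skips the blank lines left by the leading `'\n'`.

```python
def average_time(path):
    """Return (mean_seconds, run_count) for the times appended by 1to10test.py."""
    times = []
    with open(path) as f:
        for line in f:
            line = line.strip()
            if line:  # the script writes '\n' before each value
                times.append(float(line))
    if not times:
        raise ValueError('no timings found in %s' % path)
    return sum(times) / len(times), len(times)
```

For example, `mean, runs = average_time('./outputs/1to10test_outputs.txt')`. Since the file is opened in append mode, results from earlier experiments accumulate in it, so clear it (or use a new file) before each batch of ten runs.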

A template for detecting a single image:

    #!/usr/bin/python
    # -*- coding: utf-8 -*-
    import numpy as np
    import matplotlib
    matplotlib.use('Agg')  # non-interactive backend, no display needed
    from matplotlib import pyplot as plt
    import os
    # Turn off TensorFlow C++ warnings (set before tensorflow is imported)
    os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
    import tensorflow as tf
    from PIL import Image
    from object_detection.utils import label_map_util
    from object_detection.utils import visualization_utils as vis_util
    import datetime
    import time
    import MySQLdb
    import argparse
    import sys
    reload(sys)  # Python 2 only
    sys.setdefaultencoding('utf8')

    detection_graph = tf.Graph()


    # Load the model ------------------------------------------------------------
    def loading(model_name):
        with detection_graph.as_default():
            od_graph_def = tf.GraphDef()
            PATH_TO_CKPT = '/home/yanjieliu/models/models/research/object_detection/pretrained_models/' + model_name + '/frozen_inference_graph.pb'
            with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:
                serialized_graph = fid.read()
                od_graph_def.ParseFromString(serialized_graph)
                tf.import_graph_def(od_graph_def, name='')
        return detection_graph


    # Detection -----------------------------------------------------------------
    def load_image_into_numpy_array(image):
        (im_width, im_height) = image.size
        return np.array(image.getdata()).reshape(
            (im_height, im_width, 3)).astype(np.uint8)


    # Label map used to attach the correct label to each box.
    PATH_TO_LABELS = os.path.join('/home/yanjieliu/models/models/research/object_detection/data', 'mscoco_label_map.pbtxt')
    label_map = label_map_util.load_labelmap(PATH_TO_LABELS)
    categories = label_map_util.convert_label_map_to_categories(
        label_map, max_num_classes=90, use_display_name=True)
    category_index = label_map_util.create_category_index(categories)


    def Detection(args):
        image_path = args.image_path
        image_name = args.image_name
        loading(args.model_name)
        # start = time.time()
        with detection_graph.as_default():
            with tf.Session(graph=detection_graph) as sess:
                # for image_path in TEST_IMAGE_PATHS:
                image = Image.open(os.path.join(image_path, image_name))
                # The array representation is used later to draw boxes and labels.
                image_np = load_image_into_numpy_array(image)
                # The model expects images with shape [1, None, None, 3].
                image_np_expanded = np.expand_dims(image_np, axis=0)
                image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')
                # Boxes, confidence scores and class ids of the detected objects.
                boxes = detection_graph.get_tensor_by_name('detection_boxes:0')
                scores = detection_graph.get_tensor_by_name('detection_scores:0')
                classes = detection_graph.get_tensor_by_name('detection_classes:0')
                num_detections = detection_graph.get_tensor_by_name('num_detections:0')
                # Actual detection.
                (boxes, scores, classes, num_detections) = sess.run(
                    [boxes, scores, classes, num_detections],
                    feed_dict={image_tensor: image_np_expanded})
                # Draw the detection results on the image.
                vis_util.visualize_boxes_and_labels_on_image_array(
                    image_np,
                    np.squeeze(boxes),
                    np.squeeze(classes).astype(np.int32),
                    np.squeeze(scores),
                    category_index,
                    use_normalized_coordinates=True,
                    line_thickness=8)
                # Print the top 5 results and keep the names scoring above 0.5.
                detected_names = []  # renamed from "list" to avoid shadowing the builtin
                for i in range(5):
                    if classes[0][i] in category_index.keys():
                        class_name = category_index[classes[0][i]]['name']
                    else:
                        class_name = 'N/A'
                    print("object:%s gailv:%s" % (class_name, scores[0][i]))
                    # print(boxes)
                    if float(scores[0][i]) > 0.5:
                        detected_names.append(class_name.encode('utf-8'))
                # Show the image with matplotlib (opens no window under Agg).
                IMAGE_SIZE = (20, 12)  # size of the output image, in inches
                plt.figure(figsize=IMAGE_SIZE)
                plt.imshow(image_np)
                plt.show()


    def parse_args():
        '''parse args'''
        parser = argparse.ArgumentParser()
        parser.add_argument('--image_path', default='/home/yanjieliu/rdshare/dataset/dog/')
        parser.add_argument('--image_name', default='dog768_576.jpg')
        parser.add_argument('--model_name',
                            default='faster_rcnn_resnet50_coco_2018_01_28')
        return parser.parse_args()


    if __name__ == '__main__':
        # Run
        args = parse_args()
        start = time.time()
        Detection(args)
        end = time.time()
        print('time:\n')
        print str(end - start)
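The template prints up to five detections and keeps the names of those whose score is above 0.5. The same filtering can be written as a standalone function; the sketch below uses the template's 0.5 threshold and top-5 limit as defaults, accepts either the `[1, N]` arrays returned by `sess.run` or plain nested lists, and returns `(name, score)` pairs. `filter_detections` is a hypothetical name, and unlike the template it looks class ids up as integers.

```python
def filter_detections(classes, scores, category_index, threshold=0.5, top_k=5):
    """Return (name, score) pairs for the first top_k detections above threshold.

    `classes` and `scores` are batched outputs shaped [1, N]; like the
    template, only the first top_k entries are examined.
    """
    results = []
    for i in range(min(top_k, len(scores[0]))):
        score = float(scores[0][i])
        if score <= threshold:
            continue
        entry = category_index.get(int(classes[0][i]))
        name = entry['name'] if entry else 'N/A'
        results.append((name, score))
    return results
```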
