Using hlist in the Linux Kernel

    struct hlist_head {
        struct hlist_node *first;
    };

    struct hlist_node {
        struct hlist_node *next, **pprev;
    };

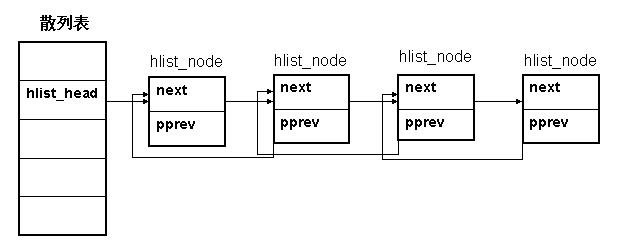

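hlist_head is the head of a hash chain; each element of the hash array is one hlist_head.

Each entry (hlist_head) in the hash table owns a linked list (an hlist), whose nodes are represented by hlist_node.

The hlist_head structure has a single field, first, which points to the first node of the hlist.

The hlist_node structure has two fields, next and pprev. next is straightforward: it points to the next hlist_node, and it is NULL when the node is the last one in the list.

pprev is a pointer to a pointer: it points to the previous node's next pointer. Why do we need such a pointer, and what does it buy us?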

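Before answering that, consider another question: why does the hash table implementation need two different data structures?

A hash table exists to make lookups fast, so it is usually a fairly large array; otherwise collisions become frequent and the table loses its point. How can we keep a large table without spending too much memory? The answer has to come from the data structure: each entry of the table stores just one pointer, half the size of a two-pointer list head, which solves the space problem.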
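To put a number on that saving, here is a minimal userspace sketch that compares a table of one-pointer heads with a table of two-pointer heads. The names `two_ptr_head`, `NBUCKETS`, `table_bytes_hlist` and `table_bytes_two_ptr` are made up for this illustration, and 1024 is just an assumed table size.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

struct hlist_node;

/* One pointer per bucket, as in the kernel definition quoted above. */
struct hlist_head {
    struct hlist_node *first;
};

/* Hypothetical two-pointer head, the layout a circular doubly linked
 * list would need for every bucket. */
struct two_ptr_head {
    struct two_ptr_head *next, *prev;
};

#define NBUCKETS 1024 /* assumed table size, for illustration only */

size_t table_bytes_hlist(void)
{
    return NBUCKETS * sizeof(struct hlist_head);
}

size_t table_bytes_two_ptr(void)
{
    return NBUCKETS * sizeof(struct two_ptr_head);
}

/* Prints both table sizes; the exact numbers depend on pointer width. */
void print_table_sizes(void)
{
    assert(sizeof(struct hlist_head) == sizeof(void *));
    printf("hlist_head table:  %zu bytes\n", table_bytes_hlist());
    printf("two-pointer table: %zu bytes\n", table_bytes_two_ptr());
}
```

Whatever the pointer width, the two-pointer table comes out twice as large, and that cost is paid for every bucket, including the empty ones.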

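That raises another problem: the head and the nodes now have different types. If hlist_node used conventional next and prev pointers, the first node's prev would have to refer to an hlist_head rather than an hlist_node, so the first node would need different handling from all the others. That is inelegant and costs some efficiency.

hlist_node solves this by making pprev hold the address of the previous node's next pointer. Because hlist_head.first and hlist_node.next have the same type (struct hlist_node *), the first node's pprev can simply point at the head's first field, and insertion and deletion work the same way for every node.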
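The uniform handling is easiest to see in code. The following is a userspace sketch that mirrors the logic of the kernel's hlist_add_head and hlist_del; it is a simplification, not the kernel source. In particular, this version resets the removed node's pointers to NULL, whereas the kernel's hlist_del poisons them. `demo_hlist` is a hypothetical helper added only to exercise the two functions.

```c
#include <assert.h>
#include <stddef.h>

struct hlist_node {
    struct hlist_node *next, **pprev;
};

struct hlist_head {
    struct hlist_node *first;
};

/* Insert n at the front of the chain. */
static void hlist_add_head(struct hlist_node *n, struct hlist_head *h)
{
    struct hlist_node *first = h->first;

    n->next = first;
    if (first)
        first->pprev = &n->next; /* old first node now hangs off n->next */
    h->first = n;
    n->pprev = &h->first;        /* first node points back at the head's slot */
}

/* Unlink n. There is no "is this the first node?" branch and no head
 * argument: *pprev is either h->first or the previous node's next. */
static void hlist_del(struct hlist_node *n)
{
    struct hlist_node *next = n->next;
    struct hlist_node **pprev = n->pprev;

    *pprev = next;
    if (next)
        next->pprev = pprev;
    n->next = NULL;              /* simplification: the kernel poisons these */
    n->pprev = NULL;
}

/* Builds c -> b -> a, then removes the first, the last and the only node.
 * Returns 1 if the chain ends up empty. */
int demo_hlist(void)
{
    struct hlist_head head = { NULL };
    struct hlist_node a, b, c;

    hlist_add_head(&a, &head);
    hlist_add_head(&b, &head);
    hlist_add_head(&c, &head);
    assert(head.first == &c && c.next == &b && b.next == &a && !a.next);

    hlist_del(&c);               /* first node: *pprev is head.first */
    assert(head.first == &b && b.pprev == &head.first);

    hlist_del(&a);               /* last node: *pprev is b.next */
    assert(b.next == NULL);

    hlist_del(&b);               /* only node left */
    return head.first == NULL;
}
```

Note that hlist_del never needs to know which bucket the node lives in. With a plain prev pointer it would have to be told the head, or test for the first node, on every removal.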

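The IP fragment reassembly code is a good example of hlist in use. The listings below come from include/net/inet_frag.h and net/ipv4/inet_fragment.c.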

    /**
     * struct inet_frag_queue - fragment queue
     *
     * @lock: spinlock protecting the queue
     * @timer: queue expiration timer
     * @list: hash bucket list
     * @refcnt: reference count of the queue
     * @fragments: received fragments head
     * @fragments_tail: received fragments tail
     * @stamp: timestamp of the last received fragment
     * @len: total length of the original datagram
     * @meat: length of received fragments so far
     * @flags: fragment queue flags
     * @max_size: maximum received fragment size
     * @net: namespace that this frag belongs to
     * @list_evictor: list of queues to forcefully evict (e.g. due to low memory)
     */
    struct inet_frag_queue {
        spinlock_t lock;
        struct timer_list timer;
        struct hlist_node list;         /* node on the hash chain */
        atomic_t refcnt;
        struct sk_buff *fragments;
        struct sk_buff *fragments_tail;
        ktime_t stamp;
        int len;
        int meat;
        __u8 flags;
        u16 max_size;
        struct netns_frags *net;
        struct hlist_node list_evictor;
    };

    #define INETFRAGS_HASHSZ 1024       /* size of the hash array */

    /* averaged:
     * max_depth = default ipfrag_high_thresh / INETFRAGS_HASHSZ /
     *             rounded up (SKB_TRUELEN(0) + sizeof(struct ipq or
     *             struct frag_queue))
     */
    #define INETFRAGS_MAXDEPTH 128

    struct inet_frag_bucket {
        struct hlist_head chain;        /* collision chain of this bucket */
        spinlock_t chain_lock;
    };

    struct inet_frags {
        struct inet_frag_bucket hash[INETFRAGS_HASHSZ]; /* the hash array */

        struct work_struct frags_work;
        unsigned int next_bucket;
        unsigned long last_rebuild_jiffies;
        bool rebuild;

        /* The first call to hashfn is responsible to initialize
         * rnd. This is best done with net_get_random_once.
         *
         * rnd_seqlock is used to let hash insertion detect
         * when it needs to re-lookup the hash chain to use.
         */
        u32 rnd;
        seqlock_t rnd_seqlock;
        int qsize;

        unsigned int (*hashfn)(const struct inet_frag_queue *);
        bool (*match)(const struct inet_frag_queue *q,
                      const void *arg);
        void (*constructor)(struct inet_frag_queue *q,
                            const void *arg);
        void (*destructor)(struct inet_frag_queue *);
        void (*skb_free)(struct sk_buff *);
        void (*frag_expire)(unsigned long data);
        struct kmem_cache *frags_cachep;
        const char *frags_cache_name;
    };

    /*
     * inet fragments management
     *
     *      This program is free software; you can redistribute it and/or
     *      modify it under the terms of the GNU General Public License
     *      as published by the Free Software Foundation; either version
     *      2 of the License, or (at your option) any later version.
     *
     *      Authors:    Pavel Emelyanov <xemul@openvz.org>
     *                  Started as consolidation of ipv4/ip_fragment.c,
     *                  ipv6/reassembly. and ipv6 nf conntrack reassembly
     */

    #include <linux/list.h>
    #include <linux/spinlock.h>
    #include <linux/module.h>
    #include <linux/timer.h>
    #include <linux/mm.h>
    #include <linux/random.h>
    #include <linux/skbuff.h>
    #include <linux/rtnetlink.h>
    #include <linux/slab.h>

    #include <net/sock.h>
    #include <net/inet_frag.h>
    #include <net/inet_ecn.h>

    #define INETFRAGS_EVICT_BUCKETS   128
    #define INETFRAGS_EVICT_MAX       512

    /* don't rebuild inetfrag table with new secret more often than this */
    #define INETFRAGS_MIN_REBUILD_INTERVAL (5 * HZ)

    /* Given the OR values of all fragments, apply RFC 3168 5.3 requirements
     * Value : 0xff if frame should be dropped.
     *         0 or INET_ECN_CE value, to be ORed in to final iph->tos field
     */
    const u8 ip_frag_ecn_table[] = {
        /* at least one fragment had CE, and others ECT_0 or ECT_1 */
        [IPFRAG_ECN_CE | IPFRAG_ECN_ECT_0] = INET_ECN_CE,
        [IPFRAG_ECN_CE | IPFRAG_ECN_ECT_1] = INET_ECN_CE,
        [IPFRAG_ECN_CE | IPFRAG_ECN_ECT_0 | IPFRAG_ECN_ECT_1] = INET_ECN_CE,

        /* invalid combinations : drop frame */
        [IPFRAG_ECN_NOT_ECT | IPFRAG_ECN_CE] = 0xff,
        [IPFRAG_ECN_NOT_ECT | IPFRAG_ECN_ECT_0] = 0xff,
        [IPFRAG_ECN_NOT_ECT | IPFRAG_ECN_ECT_1] = 0xff,
        [IPFRAG_ECN_NOT_ECT | IPFRAG_ECN_ECT_0 | IPFRAG_ECN_ECT_1] = 0xff,
        [IPFRAG_ECN_NOT_ECT | IPFRAG_ECN_CE | IPFRAG_ECN_ECT_0] = 0xff,
        [IPFRAG_ECN_NOT_ECT | IPFRAG_ECN_CE | IPFRAG_ECN_ECT_1] = 0xff,
        [IPFRAG_ECN_NOT_ECT | IPFRAG_ECN_CE | IPFRAG_ECN_ECT_0 | IPFRAG_ECN_ECT_1] = 0xff,
    };

    EXPORT_SYMBOL(ip_frag_ecn_table);

    /* hash function: map a queue to its bucket index */
    static unsigned int
    inet_frag_hashfn(const struct inet_frags *f, const struct inet_frag_queue *q)
    {
        return f->hashfn(q) & (INETFRAGS_HASHSZ - 1);
    }

    static bool inet_frag_may_rebuild(struct inet_frags *f)
    {
        return time_after(jiffies,
               f->last_rebuild_jiffies + INETFRAGS_MIN_REBUILD_INTERVAL);
    }

    static void inet_frag_secret_rebuild(struct inet_frags *f)
    {
        int i;

        write_seqlock_bh(&f->rnd_seqlock);

        if (!inet_frag_may_rebuild(f))
            goto out;

        get_random_bytes(&f->rnd, sizeof(u32));

        for (i = 0; i < INETFRAGS_HASHSZ; i++) {
            struct inet_frag_bucket *hb;
            struct inet_frag_queue *q;
            struct hlist_node *n;

            hb = &f->hash[i];
            spin_lock(&hb->chain_lock);

            /* safe traversal: entries may be unlinked while walking the chain */
            hlist_for_each_entry_safe(q, n, &hb->chain, list) {
                unsigned int hval = inet_frag_hashfn(f, q); /* recompute the hash */

                if (hval != i) {
                    struct inet_frag_bucket *hb_dest;

                    hlist_del(&q->list);

                    /* Relink to new hash chain. */
                    hb_dest = &f->hash[hval]; /* destination collision chain */

                    /* This is the only place where we take
                     * another chain_lock while already holding
                     * one.  As this will not run concurrently,
                     * we cannot deadlock on hb_dest lock below, if its
                     * already locked it will be released soon since
                     * other caller cannot be waiting for hb lock
                     * that we've taken above.
                     */
                    spin_lock_nested(&hb_dest->chain_lock,
                                     SINGLE_DEPTH_NESTING);
                    /* add node q->list to the collision chain hb_dest->chain */
                    hlist_add_head(&q->list, &hb_dest->chain);
                    spin_unlock(&hb_dest->chain_lock);
                }
            }
            spin_unlock(&hb->chain_lock);
        }

        f->rebuild = false;
        f->last_rebuild_jiffies = jiffies;
    out:
        write_sequnlock_bh(&f->rnd_seqlock);
    }

    static bool inet_fragq_should_evict(const struct inet_frag_queue *q)

    {
        return q->net->low_thresh == 0 ||
               frag_mem_limit(q->net) >= q->net->low_thresh;
    }

    static unsigned int
    inet_evict_bucket(struct inet_frags *f, struct inet_frag_bucket *hb)
    {
        struct inet_frag_queue *fq;
        struct hlist_node *n;
        unsigned int evicted = 0;
        HLIST_HEAD(expired);

        spin_lock(&hb->chain_lock);

        hlist_for_each_entry_safe(fq, n, &hb->chain, list) {
            if (!inet_fragq_should_evict(fq))
                continue;

            if (!del_timer(&fq->timer))
                continue;

            hlist_add_head(&fq->list_evictor, &expired);
            ++evicted;
        }

        spin_unlock(&hb->chain_lock);

        hlist_for_each_entry_safe(fq, n, &expired, list_evictor)
            f->frag_expire((unsigned long) fq);

        return evicted;
    }

    static void inet_frag_worker(struct work_struct *work)
    {
        unsigned int budget = INETFRAGS_EVICT_BUCKETS;
        unsigned int i, evicted = 0;
        struct inet_frags *f;

        f = container_of(work, struct inet_frags, frags_work);

        BUILD_BUG_ON(INETFRAGS_EVICT_BUCKETS >= INETFRAGS_HASHSZ);

        local_bh_disable();

        for (i = ACCESS_ONCE(f->next_bucket); budget; --budget) {
            evicted += inet_evict_bucket(f, &f->hash[i]);
            i = (i + 1) & (INETFRAGS_HASHSZ - 1);
            if (evicted > INETFRAGS_EVICT_MAX)
                break;
        }

        f->next_bucket = i;

        local_bh_enable();

        if (f->rebuild && inet_frag_may_rebuild(f))
            inet_frag_secret_rebuild(f);
    }

    static void inet_frag_schedule_worker(struct inet_frags *f)
    {
        if (unlikely(!work_pending(&f->frags_work)))
            schedule_work(&f->frags_work);
    }

    int inet_frags_init(struct inet_frags *f)
    {
        int i;

        INIT_WORK(&f->frags_work, inet_frag_worker);

        /* initialize every element of the hash array */
        for (i = 0; i < INETFRAGS_HASHSZ; i++) {
            struct inet_frag_bucket *hb = &f->hash[i];

            spin_lock_init(&hb->chain_lock);
            /* start each bucket with an empty collision chain */
            INIT_HLIST_HEAD(&hb->chain);
        }

        seqlock_init(&f->rnd_seqlock);
        f->last_rebuild_jiffies = 0;
        f->frags_cachep = kmem_cache_create(f->frags_cache_name, f->qsize, 0, 0,
                                            NULL);
        if (!f->frags_cachep)
            return -ENOMEM;

        return 0;
    }

    EXPORT_SYMBOL(inet_frags_init);

    void inet_frags_init_net(struct netns_frags *nf)
    {
        init_frag_mem_limit(nf);
    }
    EXPORT_SYMBOL(inet_frags_init_net);

    void inet_frags_fini(struct inet_frags *f)
    {
        cancel_work_sync(&f->frags_work);
        kmem_cache_destroy(f->frags_cachep);
    }
    EXPORT_SYMBOL(inet_frags_fini);

    void inet_frags_exit_net(struct netns_frags *nf, struct inet_frags *f)
    {
        unsigned int seq;
        int i;

        nf->low_thresh = 0;

    evict_again:
        local_bh_disable();
        seq = read_seqbegin(&f->rnd_seqlock);

        for (i = 0; i < INETFRAGS_HASHSZ ; i++)
            inet_evict_bucket(f, &f->hash[i]);

        local_bh_enable();
        cond_resched();

        if (read_seqretry(&f->rnd_seqlock, seq) ||
            percpu_counter_sum(&nf->mem))
            goto evict_again;

        percpu_counter_destroy(&nf->mem);
    }

    EXPORT_SYMBOL(inet_frags_exit_net);

    static struct inet_frag_bucket *
    get_frag_bucket_locked(struct inet_frag_queue *fq, struct inet_frags *f)
    __acquires(hb->chain_lock)
    {
        struct inet_frag_bucket *hb;
        unsigned int seq, hash;

    restart:
        seq = read_seqbegin(&f->rnd_seqlock);

        hash = inet_frag_hashfn(f, fq);
        hb = &f->hash[hash];

        spin_lock(&hb->chain_lock);
        if (read_seqretry(&f->rnd_seqlock, seq)) {
            spin_unlock(&hb->chain_lock);
            goto restart;
        }

        return hb;
    }

    static inline void fq_unlink(struct inet_frag_queue *fq, struct inet_frags *f)
    {
        struct inet_frag_bucket *hb;

        hb = get_frag_bucket_locked(fq, f);
        hlist_del(&fq->list);
        fq->flags |= INET_FRAG_COMPLETE;
        spin_unlock(&hb->chain_lock);
    }

    void inet_frag_kill(struct inet_frag_queue *fq, struct inet_frags *f)
    {
        if (del_timer(&fq->timer))
            atomic_dec(&fq->refcnt);

        if (!(fq->flags & INET_FRAG_COMPLETE)) {
            fq_unlink(fq, f);
            atomic_dec(&fq->refcnt);
        }
    }

    EXPORT_SYMBOL(inet_frag_kill);

    static inline void frag_kfree_skb(struct netns_frags *nf, struct inet_frags *f,
                                      struct sk_buff *skb)
    {
        if (f->skb_free)
            f->skb_free(skb);
        kfree_skb(skb);
    }

    void inet_frag_destroy(struct inet_frag_queue *q, struct inet_frags *f)
    {
        struct sk_buff *fp;
        struct netns_frags *nf;
        unsigned int sum, sum_truesize = 0;

        WARN_ON(!(q->flags & INET_FRAG_COMPLETE));
        WARN_ON(del_timer(&q->timer) != 0);

        /* Release all fragment data. */
        fp = q->fragments;
        nf = q->net;
        while (fp) {
            struct sk_buff *xp = fp->next;

            sum_truesize += fp->truesize;
            frag_kfree_skb(nf, f, fp);
            fp = xp;
        }
        sum = sum_truesize + f->qsize;

        if (f->destructor)
            f->destructor(q);
        kmem_cache_free(f->frags_cachep, q);

        sub_frag_mem_limit(nf, sum);
    }

    EXPORT_SYMBOL(inet_frag_destroy);

    static struct inet_frag_queue *inet_frag_intern(struct netns_frags *nf,
                                                    struct inet_frag_queue *qp_in,
                                                    struct inet_frags *f,
                                                    void *arg)
    {
        struct inet_frag_bucket *hb = get_frag_bucket_locked(qp_in, f);
        struct inet_frag_queue *qp;

    #ifdef CONFIG_SMP
        /* With SMP race we have to recheck hash table, because
         * such entry could have been created on other cpu before
         * we acquired hash bucket lock.
         */
        hlist_for_each_entry(qp, &hb->chain, list) {
            if (qp->net == nf && f->match(qp, arg)) {
                atomic_inc(&qp->refcnt);
                spin_unlock(&hb->chain_lock);
                qp_in->flags |= INET_FRAG_COMPLETE;
                inet_frag_put(qp_in, f);
                return qp;
            }
        }
    #endif
        qp = qp_in;
        if (!mod_timer(&qp->timer, jiffies + nf->timeout))
            atomic_inc(&qp->refcnt);

        atomic_inc(&qp->refcnt);
        hlist_add_head(&qp->list, &hb->chain);

        spin_unlock(&hb->chain_lock);

        return qp;
    }

    static struct inet_frag_queue *inet_frag_alloc(struct netns_frags *nf,
                                                   struct inet_frags *f,
                                                   void *arg)
    {
        struct inet_frag_queue *q;

        if (frag_mem_limit(nf) > nf->high_thresh) {
            inet_frag_schedule_worker(f);
            return NULL;
        }

        q = kmem_cache_zalloc(f->frags_cachep, GFP_ATOMIC);
        if (!q)
            return NULL;

        q->net = nf;
        f->constructor(q, arg);
        add_frag_mem_limit(nf, f->qsize);

        setup_timer(&q->timer, f->frag_expire, (unsigned long)q);
        spin_lock_init(&q->lock);
        atomic_set(&q->refcnt, 1);

        return q;
    }

    static struct inet_frag_queue *inet_frag_create(struct netns_frags *nf,
                                                    struct inet_frags *f,
                                                    void *arg)
    {
        struct inet_frag_queue *q;

        q = inet_frag_alloc(nf, f, arg);
        if (!q)
            return NULL;

        return inet_frag_intern(nf, q, f, arg);

    }

    struct inet_frag_queue *inet_frag_find(struct netns_frags *nf,
                                           struct inet_frags *f, void *key,
                                           unsigned int hash)
    {
        struct inet_frag_bucket *hb;
        struct inet_frag_queue *q;
        int depth = 0;

        if (frag_mem_limit(nf) > nf->low_thresh)
            inet_frag_schedule_worker(f);

        hash &= (INETFRAGS_HASHSZ - 1);
        hb = &f->hash[hash];

        spin_lock(&hb->chain_lock);
        hlist_for_each_entry(q, &hb->chain, list) {
            if (q->net == nf && f->match(q, key)) {
                atomic_inc(&q->refcnt);
                spin_unlock(&hb->chain_lock);
                return q;
            }
            depth++;
        }
        spin_unlock(&hb->chain_lock);

        if (depth <= INETFRAGS_MAXDEPTH)
            return inet_frag_create(nf, f, key);

        if (inet_frag_may_rebuild(f)) {
            if (!f->rebuild)
                f->rebuild = true;
            inet_frag_schedule_worker(f);
        }

        return ERR_PTR(-ENOBUFS);
    }

    EXPORT_SYMBOL(inet_frag_find);

    void inet_frag_maybe_warn_overflow(struct inet_frag_queue *q,
                                       const char *prefix)
    {
        static const char msg[] = "inet_frag_find: Fragment hash bucket"
            " list length grew over limit " __stringify(INETFRAGS_MAXDEPTH)
            ". Dropping fragment.\n";

        if (PTR_ERR(q) == -ENOBUFS)
            net_dbg_ratelimited("%s%s", prefix, msg);
    }
    EXPORT_SYMBOL(inet_frag_maybe_warn_overflow);
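The kernel listing cannot be run outside the kernel, so here is a small userspace sketch of the same pattern: an array of hlist_head buckets, objects that embed an hlist_node, a find-or-create lookup in the spirit of inet_frag_find, and a removal loop that saves the next pointer first, as hlist_for_each_entry_safe does. Everything named `item`, `item_find`, `bucket_flush`, `table` and `HASHSZ` is hypothetical, the list helpers are simplified re-implementations, and all locking, timers and reference counting are left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

struct hlist_node { struct hlist_node *next, **pprev; };
struct hlist_head { struct hlist_node *first; };

#define HASHSZ 8 /* power of two, so "& (HASHSZ - 1)" selects a bucket */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

/* Hypothetical stand-in for inet_frag_queue: a key plus an embedded node. */
struct item {
    unsigned int key;
    struct hlist_node list;
};

static struct hlist_head table[HASHSZ]; /* zero-initialized: empty chains */

static void hlist_add_head(struct hlist_node *n, struct hlist_head *h)
{
    n->next = h->first;
    if (h->first)
        h->first->pprev = &n->next;
    h->first = n;
    n->pprev = &h->first;
}

static void hlist_del(struct hlist_node *n)
{
    *n->pprev = n->next;
    if (n->next)
        n->next->pprev = n->pprev;
}

/* Pick the bucket, walk its chain, and add a new item at the head of the
 * chain when no match is found. Returns NULL only if allocation fails. */
struct item *item_find(unsigned int key)
{
    struct hlist_head *hb = &table[key & (HASHSZ - 1)];
    struct hlist_node *pos;
    struct item *it;

    for (pos = hb->first; pos; pos = pos->next) {
        it = container_of(pos, struct item, list);
        if (it->key == key)
            return it;
    }

    it = calloc(1, sizeof(*it));
    if (!it)
        return NULL;
    it->key = key;
    hlist_add_head(&it->list, hb);
    return it;
}

/* Free every item in one bucket and return how many were freed. */
unsigned int bucket_flush(unsigned int idx)
{
    struct hlist_node *pos, *n;
    unsigned int freed = 0;

    for (pos = table[idx & (HASHSZ - 1)].first; pos; pos = n) {
        n = pos->next; /* saved before pos is unlinked and freed */
        hlist_del(pos);
        free(container_of(pos, struct item, list));
        freed++;
    }
    return freed;
}
```

Keys 3 and 11 land in the same bucket here (both give 3 after masking), so looking them up in that order leaves a two-node chain with the item for 11 at the head.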
