Fixing errors when deserializing Kafka messages with Jackson's JSONKeyValueDeserializationSchema in Flink

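When building a wide table of payment orders, many tables have to be joined, and a payment may arrive long after its order. Flink's table joins are not a good fit for this. An alternative is to consume several topics at once, keyBy the order number, and then handle each message according to the topic it came from. For that, each received message should ideally carry its topic, and JSONKeyValueDeserializationSchema provides exactly that.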
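The keyBy-then-dispatch idea can be illustrated without Flink. The Java below is a plain-JDK simulation, not Flink code, and everything in it is hypothetical: the topic names `order_topic` and `pay_topic`, the field names, and the `WideRowSketch` class. Messages from several topics are grouped by order number (standing in for `keyBy`). Each message is then merged into that order's wide row according to its source topic. In a real job the row would live in keyed state, for example inside a `KeyedProcessFunction`.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-JDK simulation of "consume several topics, keyBy order number,
// then handle each message according to its source topic".
// Topic names and field names are hypothetical.
class WideRowSketch {

    // One consumed message: source topic, order number, and its parsed fields.
    static final class Msg {
        final String topic;
        final String orderId;
        final Map<String, String> fields;

        Msg(String topic, String orderId, Map<String, String> fields) {
            this.topic = topic;
            this.orderId = orderId;
            this.fields = fields;
        }
    }

    // Per-topic handling: decides how a topic's fields are named in the wide row.
    static String prefixFor(String topic) {
        switch (topic) {
            case "order_topic": return "order.";
            case "pay_topic":   return "pay.";   // a payment may arrive much later
            default:            return null;     // unknown topic
        }
    }

    // Groups messages by order number (the keyBy step) and merges each one
    // into that order's wide row. Messages from unknown topics are skipped.
    static Map<String, Map<String, String>> merge(List<Msg> messages) {
        Map<String, Map<String, String>> rows = new HashMap<>();
        for (Msg m : messages) {
            final String prefix = prefixFor(m.topic);
            if (prefix == null) {
                continue;
            }
            Map<String, String> row = rows.computeIfAbsent(m.orderId, id -> new LinkedHashMap<>());
            m.fields.forEach((k, v) -> row.put(prefix + k, v));
        }
        return rows;
    }

    public static void main(String[] args) {
        List<Msg> input = List.of(
                new Msg("order_topic", "A1", Map.of("amount", "100")),
                new Msg("pay_topic", "A1", Map.of("status", "PAID")),
                new Msg("order_topic", "B2", Map.of("amount", "50")));
        System.out.println(merge(input));
    }
}
```

A late payment simply fills in its part of the row whenever it arrives, because the merge depends only on the order number and the topic.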

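```scala
import java.util

import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.node.ObjectNode
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer
import org.apache.flink.streaming.util.serialization.JSONKeyValueDeserializationSchema

// getKafkaProperties(groupId, kafkaAddr) is the author's own helper (not shown)
// that builds the consumer Properties.
def getKafkaConsumer(kafkaAddr: String, topicNames: util.ArrayList[String], groupId: String): FlinkKafkaConsumer[ObjectNode] = {
  val properties = getKafkaProperties(groupId, kafkaAddr)
  // true: attach offset/topic/partition under "metadata" in every ObjectNode
  val consumer = new FlinkKafkaConsumer[ObjectNode](topicNames, new JSONKeyValueDeserializationSchema(true), properties)
  consumer.setStartFromGroupOffsets() // the default behaviour
  consumer
}
```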

Passing `true` to `new JSONKeyValueDeserializationSchema(true)` attaches the record's metadata, and `false` leaves it out. The source code is as follows:

```java
public class JSONKeyValueDeserializationSchema implements KafkaDeserializationSchema<ObjectNode> {

    private static final long serialVersionUID = 1509391548173891955L;

    private final static Logger log = LoggerFactory.getLogger(JSONKeyValueDeserializationSchema.class);

    private final boolean includeMetadata;

    private ObjectMapper mapper;

    public JSONKeyValueDeserializationSchema(boolean includeMetadata) {
        this.includeMetadata = includeMetadata;
    }

    // No try/catch: any parse failure of the key or the value propagates to the caller.
    public ObjectNode deserialize(ConsumerRecord<byte[], byte[]> record) throws Exception {
        if (this.mapper == null) {
            this.mapper = new ObjectMapper();
        }
        ObjectNode node = this.mapper.createObjectNode();
        if (record.key() != null) {
            node.set("key", this.mapper.readValue(record.key(), JsonNode.class));
        }
        if (record.value() != null) {
            node.set("value", this.mapper.readValue(record.value(), JsonNode.class));
        }
        if (this.includeMetadata) {
            node.putObject("metadata").put("offset", record.offset()).put("topic", record.topic()).put("partition", record.partition());
        }
        return node;
    }

    public boolean isEndOfStream(ObjectNode nextElement) {
        return false;
    }

    public TypeInformation<ObjectNode> getProducedType() {
        return TypeExtractor.getForClass(ObjectNode.class);
    }
}
```
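To make the effect of `includeMetadata` concrete, the plain-JDK sketch below mirrors the layout of the `ObjectNode` built by `deserialize`. It uses nested `LinkedHashMap`s instead of Jackson nodes. It is an illustration only: `NodeLayoutSketch`, `layout` and the topic name `pay_topic` are hypothetical. The key and value are passed in as already-parsed objects rather than raw bytes.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Mirrors the shape of the ObjectNode produced by JSONKeyValueDeserializationSchema:
// {"key": ..., "value": ..., "metadata": {"offset": ..., "topic": ..., "partition": ...}}
class NodeLayoutSketch {

    static Map<String, Object> layout(Object key, Object value, long offset,
                                      String topic, int partition, boolean includeMetadata) {
        Map<String, Object> node = new LinkedHashMap<>();
        if (key != null) {
            node.put("key", key);          // only present when the record has a key
        }
        if (value != null) {
            node.put("value", value);      // only present when the record has a value
        }
        if (includeMetadata) {
            Map<String, Object> metadata = new LinkedHashMap<>();
            metadata.put("offset", offset);
            metadata.put("topic", topic);  // what a multi-topic job can dispatch on
            metadata.put("partition", partition);
            node.put("metadata", metadata);
        }
        return node;
    }

    public static void main(String[] args) {
        System.out.println(layout(null, "v", 42L, "pay_topic", 0, true));
        // prints {value=v, metadata={offset=42, topic=pay_topic, partition=0}}
    }
}
```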

I thought that was the end of it, but to my surprise the job failed. Every single message raised a deserialization error.

```text
2019-11-29 19:55:15.401 flink [Source: kafkasource (1/1)] ERROR c.y.b.D.JSONKeyValueDeserializationSchema - Unrecognized token 'xxxxx': was expecting ('true', 'false' or 'null')
 at [Source: [B@2e119f0e; line: 1, column: 45]
org.apache.flink.shaded.jackson2.com.fasterxml.jackson.core.JsonParseException: Unrecognized token 'xxxxxxx': was expecting ('true', 'false' or 'null')
 at [Source: [B@2e119f0e; line: 1, column: 45]
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.core.JsonParser._constructError(JsonParser.java:1586)
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.core.base.ParserMinimalBase._reportError(ParserMinimalBase.java:521)
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.core.json.UTF8StreamJsonParser._reportInvalidToken(UTF8StreamJsonParser.java:3464)
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.core.json.UTF8StreamJsonParser._handleUnexpectedValue(UTF8StreamJsonParser.java:2628)
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.core.json.UTF8StreamJsonParser._nextTokenNotInObject(UTF8StreamJsonParser.java:854)
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.core.json.UTF8StreamJsonParser.nextToken(UTF8StreamJsonParser.java:748)
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.ObjectMapper._initForReading(ObjectMapper.java:3847)
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.ObjectMapper._readMapAndClose(ObjectMapper.java:3792)
    at org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.ObjectMapper.readValue(ObjectMapper.java:2890)
    at com.xx.xx.DeserializationSchema.JSONKeyValueDeserializationSchema.deserialize(JSONKeyValueDeserializationSchema.java:33)
    at com.xx.xx.DeserializationSchema.JSONKeyValueDeserializationSchema.deserialize(JSONKeyValueDeserializationSchema.java:15)
    at org.apache.flink.streaming.connectors.kafka.internal.KafkaFetcher.runFetchLoop(KafkaFetcher.java:140)
    at org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumerBase.run(FlinkKafkaConsumerBase.java:711)
    at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:93)
    at org.apache.flink.streaming.api.operators.StreamSource.run(StreamSource.java:57)
    at org.apache.flink.streaming.runtime.tasks.SourceStreamTask.run(SourceStreamTask.java:97)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:300)
    at org.apache.flink.runtime.taskmanager.Task.run(Task.java:711)
    at java.lang.Thread.run(Thread.java:745)
```

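Because the library code has no try/catch, the log does not show which data failed to parse. The only option was to write my own version of this class.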

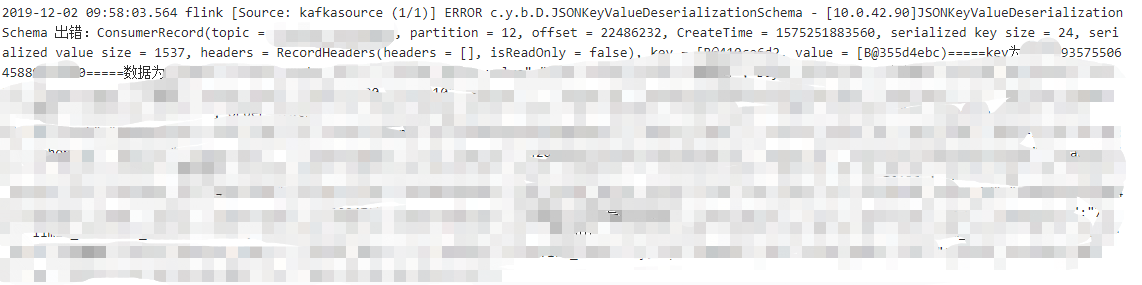

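Create a DeserializationSchema package and add a JSONKeyValueDeserializationSchema class to it. Then change getKafkaConsumer to reference our own JSONKeyValueDeserializationSchema class. The log will then show exactly which data cannot be deserialized.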

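```java
import java.nio.charset.StandardCharsets;

import org.apache.flink.annotation.PublicEvolving;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.api.java.typeutils.TypeExtractor;
import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.JsonNode;
import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.ObjectMapper;
import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.node.ObjectNode;
import org.apache.flink.streaming.connectors.kafka.KafkaDeserializationSchema;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

@PublicEvolving
public class JSONKeyValueDeserializationSchema implements KafkaDeserializationSchema<ObjectNode> {

    private static final long serialVersionUID = 1509391548173891955L;

    private final static Logger log = LoggerFactory.getLogger(JSONKeyValueDeserializationSchema.class);

    private final boolean includeMetadata;

    private ObjectMapper mapper;

    public JSONKeyValueDeserializationSchema(boolean includeMetadata) {
        this.includeMetadata = includeMetadata;
    }

    @Override
    public ObjectNode deserialize(ConsumerRecord<byte[], byte[]> record) {
        if (this.mapper == null) {
            this.mapper = new ObjectMapper();
        }
        ObjectNode node = this.mapper.createObjectNode();
        try {
            if (record.key() != null) {
                node.set("key", this.mapper.readValue(record.key(), JsonNode.class));
            }
            if (record.value() != null) {
                node.set("value", this.mapper.readValue(record.value(), JsonNode.class));
            }
            if (this.includeMetadata) {
                node.putObject("metadata").put("offset", record.offset()).put("topic", record.topic()).put("partition", record.partition());
            }
        } catch (Exception e) {
            // Log the raw record so the offending key/value becomes visible.
            // Key or value may be null, so decode them null-safely (as UTF-8).
            String key = record.key() == null ? "null" : new String(record.key(), StandardCharsets.UTF_8);
            String value = record.value() == null ? "null" : new String(record.value(), StandardCharsets.UTF_8);
            log.error(e.getMessage(), e);
            log.error("JSONKeyValueDeserializationSchema failed: " + record + " ===== key: " + key + " ===== value: " + value);
        }
        return node;
    }

    @Override
    public boolean isEndOfStream(ObjectNode nextElement) {
        return false;
    }

    @Override
    public TypeInformation<ObjectNode> getProducedType() {
        return TypeExtractor.getForClass(ObjectNode.class);
    }
}
```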
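Kafka allows both the key and the value of a record to be null. Any string built inside a `catch` block should therefore not assume either is present. Otherwise a record without a key turns the logged parse error into a `NullPointerException`. Below is a minimal plain-JDK helper for that, assuming the payloads are UTF-8 text; the class and method names are hypothetical.

```java
import java.nio.charset.StandardCharsets;

// Null-safe rendering of raw Kafka key/value bytes for error logs.
// Assumes the payloads are UTF-8; decoding with an explicit charset avoids
// depending on the JVM's platform default charset.
class RawRecordText {

    static String utf8OrNull(byte[] bytes) {
        return bytes == null ? "null" : new String(bytes, StandardCharsets.UTF_8);
    }

    static String describe(String topic, long offset, byte[] key, byte[] value) {
        return "topic=" + topic + " offset=" + offset
                + " key=" + utf8OrNull(key) + " value=" + utf8OrNull(value);
    }

    public static void main(String[] args) {
        byte[] value = "{\"orderId\":\"A1\"}".getBytes(StandardCharsets.UTF_8);
        System.out.println(describe("pay_topic", 7L, null, value));
        // prints topic=pay_topic offset=7 key=null value={"orderId":"A1"}
    }
}
```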

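The key turned out to be an order number. The topic does not carry raw canal JSON; the data has already been processed upstream. So the key was most likely set by the upstream producer.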
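This also explains the wording of the error. Jackson decides what kind of value it is reading from the first character. `{`, `[`, `"`, `-` or a digit start an object, array, string or number. Any other bare word can only be one of the literals `true`, `false` or `null`, hence "was expecting ('true', 'false' or 'null')". An unquoted order number that starts with a letter therefore fails on its first token. The plain-JDK check below mirrors only that first-character rule. It is a rough heuristic for illustration with hypothetical names, not a JSON validator, and the order number `A1` is made up.

```java
// Rough first-character check mirroring how a JSON parser classifies a value.
// Illustration only: passing this check does NOT mean the text is valid JSON.
class JsonStartCheck {

    static boolean startsLikeJsonValue(String text) {
        String s = text.trim();
        if (s.isEmpty()) {
            return false;
        }
        char c = s.charAt(0);
        if (c == '{' || c == '[' || c == '"' || c == '-' || (c >= '0' && c <= '9')) {
            return true;   // object, array, string or number
        }
        // Any other bare word must be one of the three JSON literals.
        return s.startsWith("true") || s.startsWith("false") || s.startsWith("null");
    }

    public static void main(String[] args) {
        System.out.println(startsLikeJsonValue("{\"orderId\":\"A1\"}")); // true
        System.out.println(startsLikeJsonValue("\"A1\""));              // true: quoted string
        System.out.println(startsLikeJsonValue("A1"));                  // false: bare word
    }
}
```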

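So we modify our JSONKeyValueDeserializationSchema further. The key is not needed, so the code below that parses it is simply commented out. Alternatively the target class could be set to String. However, `readValue(..., String.class)` still has to parse a JSON token first, so a bare order number that is not valid JSON would fail the same way. Decoding the key bytes directly with `new String(record.key(), StandardCharsets.UTF_8)` avoids parsing altogether.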

```java
if (record.key() != null) {
    node.set("key", this.mapper.readValue(record.key(), JsonNode.class));
}
```

We keep the try/catch and still log any data that fails to parse. The modified code is:

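```java
import java.nio.charset.StandardCharsets;

import org.apache.flink.annotation.PublicEvolving;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.api.java.typeutils.TypeExtractor;
import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.JsonNode;
import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.ObjectMapper;
import org.apache.flink.shaded.jackson2.com.fasterxml.jackson.databind.node.ObjectNode;
import org.apache.flink.streaming.connectors.kafka.KafkaDeserializationSchema;
import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

@PublicEvolving
public class JSONKeyValueDeserializationSchema implements KafkaDeserializationSchema<ObjectNode> {

    private static final long serialVersionUID = 1509391548173891955L;

    private final static Logger log = LoggerFactory.getLogger(JSONKeyValueDeserializationSchema.class);

    private final boolean includeMetadata;

    private ObjectMapper mapper;

    public JSONKeyValueDeserializationSchema(boolean includeMetadata) {
        this.includeMetadata = includeMetadata;
    }

    @Override
    public ObjectNode deserialize(ConsumerRecord<byte[], byte[]> record) {
        if (this.mapper == null) {
            this.mapper = new ObjectMapper();
        }
        ObjectNode node = this.mapper.createObjectNode();
        try {
            // The key is an order number, not JSON, and is not needed, so it is not parsed.
            // if (record.key() != null) {
            //     node.set("key", this.mapper.readValue(record.key(), JsonNode.class));
            // }
            if (record.value() != null) {
                node.set("value", this.mapper.readValue(record.value(), JsonNode.class));
            }
            if (this.includeMetadata) {
                node.putObject("metadata").put("offset", record.offset()).put("topic", record.topic()).put("partition", record.partition());
            }
        } catch (Exception e) {
            // Log the raw record; key or value may be null, so decode them null-safely (as UTF-8).
            String key = record.key() == null ? "null" : new String(record.key(), StandardCharsets.UTF_8);
            String value = record.value() == null ? "null" : new String(record.value(), StandardCharsets.UTF_8);
            log.error(e.getMessage(), e);
            log.error("JSONKeyValueDeserializationSchema failed: " + record + " ===== key: " + key + " ===== value: " + value);
        }
        return node;
    }

    @Override
    public boolean isEndOfStream(ObjectNode nextElement) {
        return false;
    }

    @Override
    public TypeInformation<ObjectNode> getProducedType() {
        return TypeExtractor.getForClass(ObjectNode.class);
    }
}
```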

With that, the problem is solved.
