Setting Up Spark with Docker Using the singularities/spark:2.2 Image

The singularities/spark:2.2 image ships with:

Hadoop version: 2.8.2

Spark version: 2.2.1

Scala version: 2.11.8

Java version: 1.8.0_151

Pull the image:

[root@localhost docker-spark-2.1.]# docker pull singularities/spark

List the local images:

[root@localhost docker-spark-2.1.]# docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.io/singularities/spark latest 84222b254621 months ago 1.39 GB

Create the docker-compose.yml file:

[root@localhost home]# mkdir singularitiesCR

[root@localhost home]# cd singularitiesCR

[root@localhost singularitiesCR]# touch docker-compose.yml

Contents:

version: ""

services:
  master:
    image: singularities/spark
    command: start-spark master
    hostname: master
    ports:
      - "6066:6066"
      - "7070:7070"
      - "8080:8080"
      - "50070:50070"
  worker:
    image: singularities/spark
    command: start-spark worker master
    environment:
      SPARK_WORKER_CORES:
      SPARK_WORKER_MEMORY: 2g
    links:
      - master
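Each entry under ports uses Compose's short "HOST:CONTAINER" form. As a minimal sketch, the Python below splits the four mappings from the file above into host and container ports. The names parse_port_mapping, PortMapping, and KNOWN_PORTS are illustrative and not part of the image or of Compose. The descriptions reflect the default roles of these ports in Spark standalone mode and Hadoop 2.x; the source does not say what 7070 is used for in this image, so it is left undescribed.

```python
from typing import NamedTuple


class PortMapping(NamedTuple):
    host: int
    container: int


# Default roles of these ports in Spark standalone mode and Hadoop 2.x.
# 7070 is omitted on purpose: the source does not describe its role.
KNOWN_PORTS = {
    6066: "Spark standalone REST submission server",
    8080: "Spark standalone master web UI",
    50070: "HDFS NameNode web UI (Hadoop 2.x)",
}


def parse_port_mapping(entry: str) -> PortMapping:
    """Split a short-form Compose port entry such as "8080:8080"."""
    host, sep, container = entry.partition(":")
    if not sep or not host.isdigit() or not container.isdigit():
        raise ValueError(f"expected HOST:CONTAINER, got {entry!r}")
    return PortMapping(int(host), int(container))


# Walk through the four mappings declared for the master service.
for entry in ["6066:6066", "7070:7070", "8080:8080", "50070:50070"]:
    mapping = parse_port_mapping(entry)
    role = KNOWN_PORTS.get(mapping.container, "not described in the source")
    print(f"host {mapping.host} -> container {mapping.container}: {role}")
```

Changing only the left-hand side of an entry (for example "18080:8080") would publish the same container port on a different host port.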

Running docker-compose up starts a Spark cluster in standalone mode with a single worker node:

[root@localhost singularitiesCR]# docker-compose up -d

Creating singularitiescr_master_1 ... done

Creating singularitiescr_worker_1 ... done

List the containers:

[root@localhost singularitiesCR]# docker-compose ps

Name                       Command                     State   Ports
--------------------------------------------------------------------------------------------------------------------------------------------------------
singularitiescr_master_1   start-spark master          Up      /tcp, /tcp, /tcp, /tcp, /tcp, /tcp,
                                                               0.0.0.0:->/tcp, /tcp, /tcp, /tcp, /tcp,
                                                               0.0.0.0:->/tcp, 0.0.0.0:->/tcp, /tcp, /tcp,
                                                               0.0.0.0:->/tcp, /tcp, /tcp
singularitiescr_worker_1   start-spark worker master   Up      /tcp, /tcp, /tcp, /tcp, /tcp, /tcp, /tcp, /tcp,
                                                               /tcp, /tcp, /tcp, /tcp, /tcp, /tcp, /tcp, /tcp,
                                                               /tcp

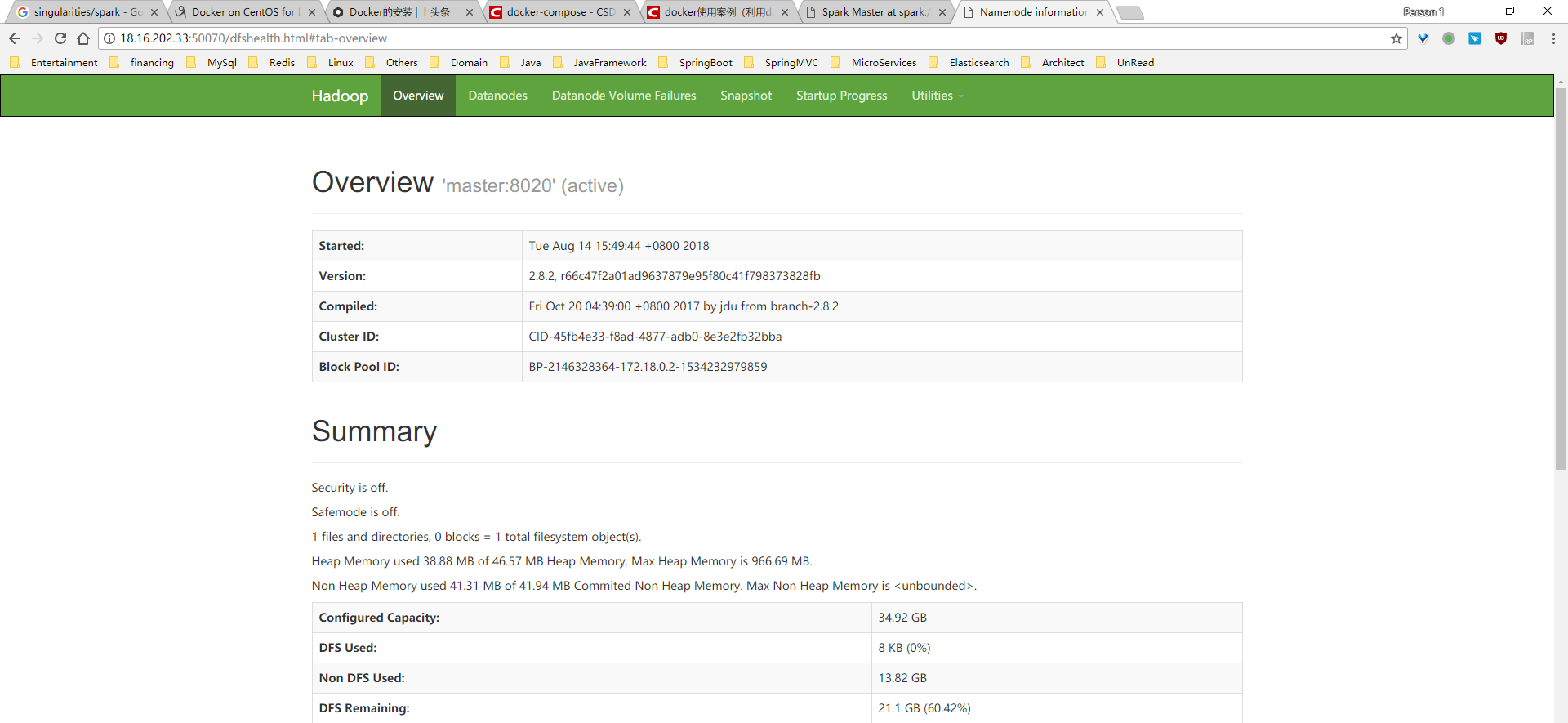

查看结果:
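Besides the HTML page, the standalone master web UI can also report its state as JSON. The sketch below counts the workers registered as alive. It assumes the UI is reachable at http://localhost:8080 (the host port published in the compose file) and that the JSON is served under /json/ with a workers list whose entries carry a state field; treat the path and field names as assumptions to verify against your Spark version. Both function names are hypothetical.

```python
import json
from urllib.request import urlopen


def count_alive_workers(payload: str) -> int:
    """Count workers whose state is ALIVE in the master's JSON status."""
    status = json.loads(payload)
    workers = status.get("workers", [])
    return sum(1 for worker in workers if worker.get("state") == "ALIVE")


def fetch_master_status(base_url: str = "http://localhost:8080") -> str:
    """Fetch the status document (assumed to be served under /json/)."""
    with urlopen(f"{base_url}/json/", timeout=5) as response:
        return response.read().decode("utf-8")


# Usage, with the cluster running:
#   print(count_alive_workers(fetch_master_status()))
```

With the single-worker cluster defined above, one alive worker would be the expected count once the worker has registered with the master.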

Stop the containers:

[root@localhost singularitiesCR]# docker-compose stop

Stopping singularitiescr_worker_1 ... done

Stopping singularitiescr_master_1 ... done

[root@localhost singularitiesCR]# docker-compose ps

Name Command State Ports

-----------------------------------------------------------------------

singularitiescr_master_1 start-spark master Exit

singularitiescr_worker_1 start-spark worker master Exit

Remove the containers:

[root@localhost singularitiesCR]# docker-compose rm

Going to remove singularitiescr_worker_1, singularitiescr_master_1

Are you sure? [yN] y

Removing singularitiescr_worker_1 ... done

Removing singularitiescr_master_1 ... done

[root@localhost singularitiesCR]# docker-compose ps

Name Command State Ports

------------------------------
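The start, list, stop, and remove steps above can be scripted. This is only a sketch: it shells out to the same docker-compose commands used in this post, and the names LIFECYCLE, compose_command, and run_step, as well as the project directory in the usage comment, are hypothetical. The -f flag on rm skips the confirmation prompt shown above.

```python
import subprocess
from typing import List

# The docker-compose invocations used in this post, keyed by step.
LIFECYCLE = {
    "start": ["docker-compose", "up", "-d"],
    "status": ["docker-compose", "ps"],
    "stop": ["docker-compose", "stop"],
    "remove": ["docker-compose", "rm", "-f"],  # -f: do not ask for confirmation
}


def compose_command(step: str) -> List[str]:
    """Return a fresh argv list for the given lifecycle step."""
    if step not in LIFECYCLE:
        raise KeyError(f"unknown step {step!r}; expected one of {sorted(LIFECYCLE)}")
    return list(LIFECYCLE[step])


def run_step(step: str, project_dir: str) -> int:
    """Run one step from the directory that holds docker-compose.yml."""
    return subprocess.run(compose_command(step), cwd=project_dir).returncode


# Usage (assumes the compose file lives in /home/singularitiesCR):
#   for step in ("start", "status", "stop", "remove"):
#       run_step(step, "/home/singularitiesCR")
```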

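Enter the master container and check the versions (docker exec expects the container name or ID, for example singularitiescr_master_1, before /bin/bash):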

[root@localhost singularitiesCR]# docker exec -it /bin/bash

root@master:/# hadoop version

Hadoop 2.8.

Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 66c47f2a01ad9637879e95f80c41f798373828fb

Compiled by jdu on --19T20:39Z

Compiled with protoc 2.5.

From source with checksum dce55e5afe30c210816b39b631a53b1d

This command was run using /usr/local/hadoop-2.8./share/hadoop/common/hadoop-common-2.8..jar

root@master:/# which hadoop

/usr/local/hadoop-2.8./bin/hadoop

root@master:/# spark-shell

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

// :: WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Spark context Web UI available at http://172.18.0.2:4040

Spark context available as 'sc' (master = local[*], app id = local-).

Spark session available as 'spark'.

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 2.2.
      /_/

Using Scala version 2.11. (OpenJDK -Bit Server VM, Java 1.8.0_151)

Type in expressions to have them evaluated.

Type :help for more information.
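To compare what a container reports against the versions listed at the top of this post, the first line of the hadoop version output can be parsed. A small sketch with a hypothetical helper name; the sample string uses the Hadoop version stated for the image (2.8.2) rather than the transcript above.

```python
import re
from typing import Optional


def parse_hadoop_version(output: str) -> Optional[str]:
    """Return the version from a line starting with 'Hadoop X.Y.Z', if any."""
    match = re.search(r"^Hadoop\s+(\d+(?:\.\d+)*)", output, flags=re.MULTILINE)
    return match.group(1) if match else None


# Sample built from the version stated for the image, not captured output.
sample = "Hadoop 2.8.2\n"
print(parse_hadoop_version(sample) == "2.8.2")
```

The same pattern could be fed the output of docker exec followed by the container name and hadoop version, captured with subprocess.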

References:

https://github.com/SingularitiesCR/spark-docker

https://blog.csdn.net/u013705066/article/details/80030732
