【cs231n笔记】assignment1之KNN

k-Nearest Neighbor (kNN) 练习

这篇博文是对cs231n课程assignment1的第一个问题KNN算法的完成,参考了一些网上的博客,不具有什么创造性,以个人学习笔记为目的发布。

参考:

http://cs231n.github.io/assignments2017/assignment1/

https://blog.csdn.net/Sean_csy/article/details/89028970

https://www.cnblogs.com/daihengchen/p/5754383.html

KNN分类中K=1时,为最邻近分类,其中的K体现在算法中就是选择与测试样本距离最近的前K个训练样本中出现最多的标签即为测试样本的标签。训练KNN分类器需要记忆所有训练样本,速度很慢,实际应用的很少,但是对于理解机器学习以及深度学习中的一些基础概念还是很有帮助的

The kNN classifier consists of two stages:

- During training, the classifier takes the training data and simply remembers it

- During testing, kNN classifies every test image by comparing to all training images and transfering the labels of the k most similar training examples

- The value of k is cross-validated

In this exercise you will implement these steps and understand the basic Image Classification pipeline, cross-validation, and gain proficiency in writing efficient, vectorized code.

下面是我对cs231n assignment1 KNN代码的完成和我的一些注释

# Run some setup code for this notebook.

from __future__ import print_function # python新旧版本的兼容性方面存在差异,处理方法是按照最新的特性来处理,即print都需要添加括号

import random

import numpy as np

from cs231n.data_utils import load_CIFAR10 # 作业代码中提供的用于加载数据等功能的程序包

import matplotlib.pyplot as plt

# This is a bit of magic to make matplotlib figures appear inline in the notebook

# rather than in a new window.

%matplotlib inline

plt.rcParams['figure.figsize'] = (10.0, 8.0) # set default size of plots

plt.rcParams['image.interpolation'] = 'nearest'

plt.rcParams['image.cmap'] = 'gray'

# Some more magic so that the notebook will reload external python modules;

# see http://stackoverflow.com/questions/1907993/autoreload-of-modules-in-ipython

%load_ext autoreload

%autoreload 2

# Load the raw CIFAR-10 data.

cifar10_dir = './cs231n/datasets/cifar-10-batches-py'

X_train, y_train, X_test, y_test = load_CIFAR10(cifar10_dir)

# As a sanity check, we print out the size of the training and test data.

print('Training data shape: ', X_train.shape)

print('Training labels shape: ', y_train.shape)

print('Test data shape: ', X_test.shape)

print('Test labels shape: ', y_test.shape)

Training data shape: (50000, 32, 32, 3)

Training labels shape: (50000,)

Test data shape: (10000, 32, 32, 3)

Test labels shape: (10000,)

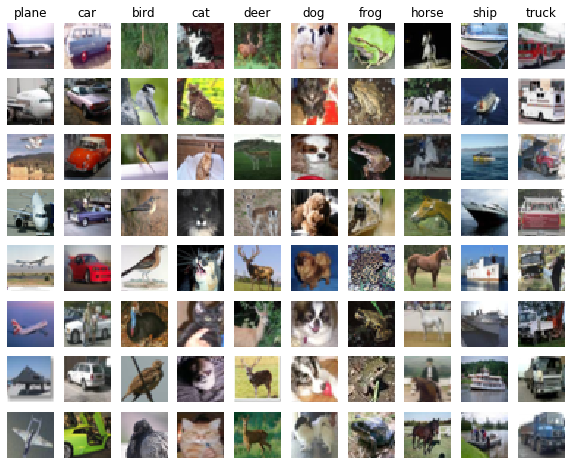

# Visualize some examples from the dataset.

# We show a few examples of training images from each class.

classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

num_classes = len(classes)

samples_per_class = 8

for y, cls in enumerate(classes):

idxs = np.flatnonzero(y_train == y)

idxs = np.random.choice(idxs, samples_per_class, replace=False)

for i, idx in enumerate(idxs):

plt_idx = i * num_classes + y + 1

plt.subplot(samples_per_class, num_classes, plt_idx)

plt.imshow(X_train[idx].astype('uint8'))

plt.axis('off')

if i == 0:

plt.title(cls)

plt.show()

# Subsample the data for more efficient code execution in this exercise

num_training = 5000

mask = list(range(num_training))

X_train = X_train[mask]

y_train = y_train[mask]

num_test = 500

mask = list(range(num_test))

X_test = X_test[mask]

y_test = y_test[mask]

# Reshape the image data into rows

X_train = np.reshape(X_train, (X_train.shape[0], -1))

X_test = np.reshape(X_test, (X_test.shape[0], -1))

print(X_train.shape, X_test.shape)

(5000, 3072) (500, 3072)

from cs231n.classifiers import KNearestNeighbor

# Create a kNN classifier instance.

# Remember that training a kNN classifier is a noop:

# the Classifier simply remembers the data and does no further processing

classifier = KNearestNeighbor()

classifier.train(X_train, y_train)

We would now like to classify the test data with the kNN classifier. Recall that we can break down this process into two steps:

- First we must compute the distances between all test examples and all train examples.

- Given these distances, for each test example we find the k nearest examples and have them vote for the label

Lets begin with computing the distance matrix between all training and test examples. For example, if there are Ntr training examples and Nte test examples, this stage should result in a Nte x Ntr matrix where each element (i,j) is the distance between the i-th test and j-th train example.

First, open cs231n/classifiers/k_nearest_neighbor.py and implement the function compute_distances_two_loops that uses a (very inefficient) double loop over all pairs of (test, train) examples and computes the distance matrix one element at a time.

# Open cs231n/classifiers/k_nearest_neighbor.py and implement

# compute_distances_two_loops.

# Test your implementation:

dists = classifier.compute_distances_two_loops(X_test)

print(dists.shape)

(500, 5000)

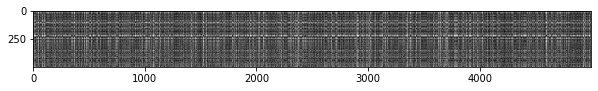

# We can visualize the distance matrix: each row is a single test example and

# its distances to training examples

plt.imshow(dists, interpolation='none')

plt.show()

Inline Question #1: Notice the structured patterns in the distance matrix, where some rows or columns are visible brighter. (Note that with the default color scheme black indicates low distances while white indicates high distances.)

- What in the data is the cause behind the distinctly bright rows?

- What causes the columns?

Your Answer:

极其明亮的行表明这一个测试样本与所有的训练样本都不相似

及其明亮的列表明这一个训练样本与所有的测试样本都不相似

# Now implement the function predict_labels and run the code below:

# We use k = 1 (which is Nearest Neighbor).

y_test_pred = classifier.predict_labels(dists, k=1)

# Compute and print the fraction of correctly predicted examples

num_correct = np.sum(y_test_pred == y_test)

accuracy = float(num_correct) / num_test

print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

Got 137 / 500 correct => accuracy: 0.274000

You should expect to see approximately 27% accuracy. Now lets try out a larger k, say k = 5:

y_test_pred = classifier.predict_labels(dists, k=5)

num_correct = np.sum(y_test_pred == y_test)

accuracy = float(num_correct) / num_test

print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

Got 145 / 500 correct => accuracy: 0.290000

You should expect to see a slightly better performance than with k = 1.

# Now lets speed up distance matrix computation by using partial vectorization

# with one loop. Implement the function compute_distances_one_loop and run the

# code below:

dists_one = classifier.compute_distances_one_loop(X_test)

# To ensure that our vectorized implementation is correct, we make sure that it

# agrees with the naive implementation. There are many ways to decide whether

# two matrices are similar; one of the simplest is the Frobenius norm. In case

# you haven't seen it before, the Frobenius norm of two matrices is the square

# root of the squared sum of differences of all elements; in other words, reshape

# the matrices into vectors and compute the Euclidean distance between them.

difference = np.linalg.norm(dists - dists_one, ord='fro')

print('Difference was: %f' % (difference, ))

if difference < 0.001:

print('Good! The distance matrices are the same')

else:

print('Uh-oh! The distance matrices are different')

Difference was: 0.000000

Good! The distance matrices are the same

# Now implement the fully vectorized version inside compute_distances_no_loops

# and run the code

dists_two = classifier.compute_distances_no_loops(X_test)

# check that the distance matrix agrees with the one we computed before:

difference = np.linalg.norm(dists - dists_two, ord='fro')

print('Difference was: %f' % (difference, ))

if difference < 0.001:

print('Good! The distance matrices are the same')

else:

print('Uh-oh! The distance matrices are different')

Difference was: 0.000000

Good! The distance matrices are the same

# Let's compare how fast the implementations are

def time_function(f, *args):

"""

Call a function f with args and return the time (in seconds) that it took to execute.

"""

import time

tic = time.time()

f(*args)

toc = time.time()

return toc - tic

two_loop_time = time_function(classifier.compute_distances_two_loops, X_test)

print('Two loop version took %f seconds' % two_loop_time)

one_loop_time = time_function(classifier.compute_distances_one_loop, X_test)

print('One loop version took %f seconds' % one_loop_time)

no_loop_time = time_function(classifier.compute_distances_no_loops, X_test)

print('No loop version took %f seconds' % no_loop_time)

# you should see significantly faster performance with the fully vectorized implementation

# 一个循环嵌套竟然比两个花费的时间多,这个地方的原因还需要讨论

Two loop version took 38.740425 seconds

One loop version took 97.031580 seconds

No loop version took 0.307179 seconds

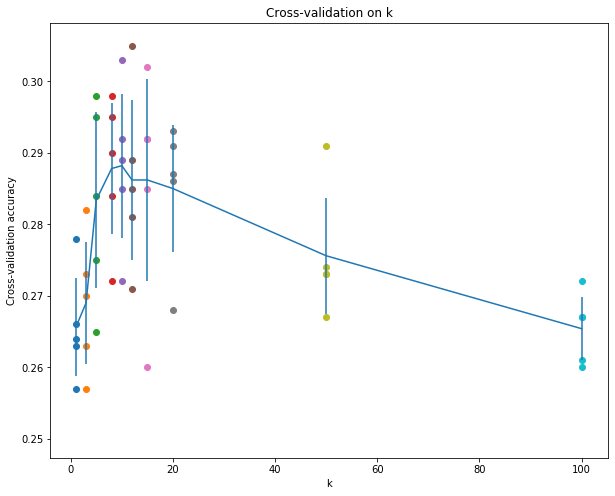

Cross-validation

We have implemented the k-Nearest Neighbor classifier but we set the value k = 5 arbitrarily. We will now determine the best value of this hyperparameter with cross-validation.

交叉验证的实现思路

num_folds = 5

k_choices = [1, 3, 5, 8, 10, 12, 15, 20, 50, 100]

X_train_folds = []

y_train_folds = []

################################################################################

# TODO: #

# Split up the training data into folds. After splitting, X_train_folds and #

# y_train_folds should each be lists of length num_folds, where #

# y_train_folds[i] is the label vector for the points in X_train_folds[i]. #

# Hint: Look up the numpy array_split function. #

################################################################################

# numpy.array_split可以不均等分割,numpy.split不均等分割会报错

X_train_folds = np.array_split(X_train,num_folds,axis=0)

y_train_folds = np.array_split(y_train,num_folds,axis=0)

pass

################################################################################

# END OF YOUR CODE #

################################################################################

# A dictionary holding the accuracies for different values of k that we find

# when running cross-validation. After running cross-validation,

# k_to_accuracies[k] should be a list of length num_folds giving the different

# accuracy values that we found when using that value of k.

k_to_accuracies = {}

################################################################################

# TODO: #

# Perform k-fold cross validation to find the best value of k. For each #

# possible value of k, run the k-nearest-neighbor algorithm num_folds times, #

# where in each case you use all but one of the folds as training data and the #

# last fold as a validation set. Store the accuracies for all fold and all #

# values of k in the k_to_accuracies dictionary. #

################################################################################

for k in k_choices:

accuracies = []

for i in range(num_folds):

X_train_cv = np.vstack(X_train_folds[0:i] + X_train_folds[i+1:])

y_train_cv = np.hstack(y_train_folds[0:i] + y_train_folds[i+1:])

X_valid_cv = X_train_folds[i]

y_valid_cv = y_train_folds[i]

classifier.train(X_train_cv, y_train_cv)

dists = classifier.compute_distances_no_loops(X_valid_cv)

y_valid_pred = classifier.predict_labels(dists, k)

num_correct = np.sum(y_valid_pred == y_valid_cv)

accuracy = float(num_correct) / y_valid_cv.shape[0]

accuracies.append(accuracy)

k_to_accuracies[k] = accuracies

pass

################################################################################

# END OF YOUR CODE #

################################################################################

# Print out the computed accuracies

for k in sorted(k_to_accuracies):

for accuracy in k_to_accuracies[k]:

print('k = %d, accuracy = %f' % (k, accuracy))

k = 1, accuracy = 0.263000

k = 1, accuracy = 0.257000

k = 1, accuracy = 0.264000

k = 1, accuracy = 0.278000

k = 1, accuracy = 0.266000

k = 3, accuracy = 0.257000

k = 3, accuracy = 0.263000

k = 3, accuracy = 0.273000

k = 3, accuracy = 0.282000

k = 3, accuracy = 0.270000

k = 5, accuracy = 0.265000

k = 5, accuracy = 0.275000

k = 5, accuracy = 0.295000

k = 5, accuracy = 0.298000

k = 5, accuracy = 0.284000

k = 8, accuracy = 0.272000

k = 8, accuracy = 0.295000

k = 8, accuracy = 0.284000

k = 8, accuracy = 0.298000

k = 8, accuracy = 0.290000

k = 10, accuracy = 0.272000

k = 10, accuracy = 0.303000

k = 10, accuracy = 0.289000

k = 10, accuracy = 0.292000

k = 10, accuracy = 0.285000

k = 12, accuracy = 0.271000

k = 12, accuracy = 0.305000

k = 12, accuracy = 0.285000

k = 12, accuracy = 0.289000

k = 12, accuracy = 0.281000

k = 15, accuracy = 0.260000

k = 15, accuracy = 0.302000

k = 15, accuracy = 0.292000

k = 15, accuracy = 0.292000

k = 15, accuracy = 0.285000

k = 20, accuracy = 0.268000

k = 20, accuracy = 0.293000

k = 20, accuracy = 0.291000

k = 20, accuracy = 0.287000

k = 20, accuracy = 0.286000

k = 50, accuracy = 0.273000

k = 50, accuracy = 0.291000

k = 50, accuracy = 0.274000

k = 50, accuracy = 0.267000

k = 50, accuracy = 0.273000

k = 100, accuracy = 0.261000

k = 100, accuracy = 0.272000

k = 100, accuracy = 0.267000

k = 100, accuracy = 0.260000

k = 100, accuracy = 0.267000

# plot the raw observations

for k in k_choices:

accuracies = k_to_accuracies[k]

plt.scatter([k] * len(accuracies), accuracies)

# plot the trend line with error bars that correspond to standard deviation

accuracies_mean = np.array([np.mean(v) for k,v in sorted(k_to_accuracies.items())])

accuracies_std = np.array([np.std(v) for k,v in sorted(k_to_accuracies.items())])

plt.errorbar(k_choices, accuracies_mean, yerr=accuracies_std)

plt.title('Cross-validation on k')

plt.xlabel('k')

plt.ylabel('Cross-validation accuracy')

plt.show()

# Based on the cross-validation results above, choose the best value for k,

# retrain the classifier using all the training data, and test it on the test

# data. You should be able to get above 28% accuracy on the test data.

best_k = 8

classifier = KNearestNeighbor()

classifier.train(X_train, y_train)

y_test_pred = classifier.predict(X_test, k=best_k)

# Compute and display the accuracy

num_correct = np.sum(y_test_pred == y_test)

accuracy = float(num_correct) / num_test

print('Got %d / %d correct => accuracy: %f' % (num_correct, num_test, accuracy))

Got 147 / 500 correct => accuracy: 0.294000

下面是KNN算法实现的代码

import numpy as np

from past.builtins import xrange

class KNearestNeighbor(object):

""" a kNN classifier with L2 distance """

def __init__(self):

pass

def train(self, X, y):

"""

Train the classifier. For k-nearest neighbors this is just

memorizing the training data.

Inputs:

- X: A numpy array of shape (num_train, D) containing the training data

consisting of num_train samples each of dimension D.

- y: A numpy array of shape (N,) containing the training labels, where

y[i] is the label for X[i].

"""

self.X_train = X

self.y_train = y

def predict(self, X, k=1, num_loops=0):

"""

Predict labels for test data using this classifier.

Inputs:

- X: A numpy array of shape (num_test, D) containing test data consisting

of num_test samples each of dimension D.

- k: The number of nearest neighbors that vote for the predicted labels.

- num_loops: Determines which implementation to use to compute distances

between training points and testing points.

Returns:

- y: A numpy array of shape (num_test,) containing predicted labels for the

test data, where y[i] is the predicted label for the test point X[i].

"""

if num_loops == 0:

dists = self.compute_distances_no_loops(X)

elif num_loops == 1:

dists = self.compute_distances_one_loop(X)

elif num_loops == 2:

dists = self.compute_distances_two_loops(X)

else:

raise ValueError('Invalid value %d for num_loops' % num_loops)

return self.predict_labels(dists, k=k)

def compute_distances_two_loops(self, X):

"""

Compute the distance between each test point in X and each training point

in self.X_train using a nested loop over both the training data and the

test data.

Inputs:

- X: A numpy array of shape (num_test, D) containing test data.

Returns:

- dists: A numpy array of shape (num_test, num_train) where dists[i, j]

is the Euclidean distance between the ith test point and the jth training

point.

"""

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

for i in xrange(num_test):

for j in xrange(num_train):

#####################################################################

# TODO: #

# Compute the l2 distance between the ith test point and the jth #

# training point, and store the result in dists[i, j]. You should #

# not use a loop over dimension. #

#####################################################################

# numpy array可以array[i]得到第i行数据,等价于array[i][:]或array[i, :];

#可以array[:][j]或array[:, j]得到第j列数据;

dists[i,j] = np.sqrt(np.sum(np.square(X[i] - self.X_train[j])))

pass

#####################################################################

# END OF YOUR CODE #

#####################################################################

return dists

def compute_distances_one_loop(self, X):

"""

Compute the distance between each test point in X and each training point

in self.X_train using a single loop over the test data.

Input / Output: Same as compute_distances_two_loops

"""

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

for i in xrange(num_test):

#######################################################################

# TODO: #

# Compute the l2 distance between the ith test point and all training #

# points, and store the result in dists[i, :]. #

#######################################################################

# 这里利用了numpy array的broadcast特性

dists[i] = np.sqrt(np.sum(np.square(X[i] - self.X_train),axis=1))

pass

#######################################################################

# END OF YOUR CODE #

#######################################################################

return dists

def compute_distances_no_loops(self, X):

"""

Compute the distance between each test point in X and each training point

in self.X_train using no explicit loops.

Input / Output: Same as compute_distances_two_loops

"""

num_test = X.shape[0]

num_train = self.X_train.shape[0]

dists = np.zeros((num_test, num_train))

#########################################################################

# TODO: #

# Compute the l2 distance between all test points and all training #

# points without using any explicit loops, and store the result in #

# dists. #

# #

# You should implement this function using only basic array operations; #

# in particular you should not use functions from scipy. #

# #

# HINT: Try to formulate the l2 distance using matrix multiplication #

# and two broadcast sums. #

#########################################################################

#HINT:(x - y)^2 = x^2 - 2*x*y + y^2

xy = np.dot(X, self.X_train.T)

x2 = np.sum(np.square(X), axis=1).reshape(-1,1)

y2 = np.sum(np.square(self.X_train.T), axis=0).reshape(1,-1)

dists = np.sqrt(-2*xy + x2 + y2) #根据broadcast机质,不同维度会自动计算

pass

#########################################################################

# END OF YOUR CODE #

#########################################################################

return dists

def predict_labels(self, dists, k=1):

"""

Given a matrix of distances between test points and training points,

predict a label for each test point.

Inputs:

- dists: A numpy array of shape (num_test, num_train) where dists[i, j]

gives the distance betwen the ith test point and the jth training point.

Returns:

- y: A numpy array of shape (num_test,) containing predicted labels for the

test data, where y[i] is the predicted label for the test point X[i].

"""

num_test = dists.shape[0]

y_pred = np.zeros(num_test)

for i in xrange(num_test):

# A list of length k storing the labels of the k nearest neighbors to

# the ith test point.

closest_y = []

#########################################################################

# TODO: #

# Use the distance matrix to find the k nearest neighbors of the ith #

# testing point, and use self.y_train to find the labels of these #

# neighbors. Store these labels in closest_y. #

# Hint: Look up the function numpy.argsort. #

#########################################################################

# numpy.argsort(array)返回array降序或升序的下标数组

closest_y = self.y_train[np.argsort(dists[i])[0:k]].tolist()

pass

#########################################################################

# TODO: #

# Now that you have found the labels of the k nearest neighbors, you #

# need to find the most common label in the list closest_y of labels. #

# Store this label in y_pred[i]. Break ties by choosing the smaller #

# label. #

#########################################################################

y_pred[i] = max(closest_y, key=closest_y.count) # 寻找List中出现次数最多的元素

pass

#########################################################################

# END OF YOUR CODE #

#########################################################################

return y_pred

【cs231n笔记】assignment1之KNN的更多相关文章

- cs231n笔记:线性分类器

cs231n线性分类器学习笔记,非完全翻译,根据自己的学习情况总结出的内容: 线性分类 本节介绍线性分类器,该方法可以自然延伸到神经网络和卷积神经网络中,这类方法主要有两部分组成,一个是评分函数(sc ...

- cs231n笔记 (一) 线性分类器

Liner classifier 线性分类器用作图像分类主要有两部分组成:一个是假设函数, 它是原始图像数据到类别的映射.另一个是损失函数,该方法可转化为一个最优化问题,在最优化过程中,将通过更新假设 ...

- cs231n笔记:最优化

本节是cs231学习笔记:最优化,并介绍了梯度下降方法,然后应用到逻辑回归中 引言 在上一节线性分类器中提到,分类方法主要有两部分组成:1.基于参数的评分函数.能够将样本映射到类别的分值.2.损失函数 ...

- cs231n笔记(二) 最优化方法

回顾上一节中,介绍了图像分类任务中的两个要点: 假设函数.该函数将原始图像像素映射为分类评分值. 损失函数.该函数根据分类评分和训练集图像数据实际分类的一致性,衡量某个具体参数集的质量好坏. 现在介绍 ...

- CS231n笔记列表

课程基础1:Numpy Tutorial 课程基础2:Scipy Matplotlib 1.1 图像分类和Nearest Neighbor分类器 1.2 k-Nearest Neighbor分类器 1 ...

- CS231n笔记 Lecture 2 Image Classification pipeline

距离度量\(L_1\) 和\(L_2\)的区别 一些感性的认识,\(L_1\)可能更适合一些结构化数据,即每个维度是有特别含义的,如雇员的年龄.工资水平等等:如果只是一个一般化的向量,\(L_2\)可 ...

- 李航-统计学习方法-笔记-3:KNN

KNN算法 基本模型:给定一个训练数据集,对新的输入实例,在训练数据集中找到与该实例最邻近的k个实例.这k个实例的多数属于某个类,就把输入实例分为这个类. KNN没有显式的学习过程. KNN使用的模型 ...

- 【笔记】初探KNN算法(3)

KNN算法(3) 测试算法的目的就是为了帮助我们选择一个更好的模型 训练数据集,测试数据集方面 一般来说,我们训练得到的模型直接在真实的环境中使用 这就导致了一些问题 如果模型很差,未经改进就应用在现 ...

- 【笔记】初探KNN算法(2)

KNN算法(2) 机器学习算法封装 scikit-learn中的机器学习算法封装 在python chame中将算法写好 import numpy as np from math import sqr ...

随机推荐

- [bzoj2756]奇怪的游戏

对棋盘黑白染色后,若n和m都是奇数(即白色和黑色点数不同),可以直接算得答案(根据白-黑不变):若n和m不都是奇数,二分答案(二分的上限要大一点,开$2^50$),最后都要用用网络流来判定.考虑判定, ...

- [cf878D]Magic Breeding

对于每一行,用一个2^12个01来表示,其中这一行就是其中所有为1的点所代表的行(i二进制中包含的行)的max的min,然后就可以支持取max和min了,查询只需要枚举答案即可 1 #include& ...

- [loj3343]超现实树

定义1:两棵树中的$x$和$y$对应当且仅当$x$到根的链与$y$到根的链同构 定义2:$x$和$y$的儿子状态相同当且仅当$x$与儿子所构成的树与$y$与儿子所构成的树同构 根据题中所给的定义,有以 ...

- python中else的三种用法

与if搭配 要么--不然-- num = input("输入一个数字") if(num % 2 == 0): print("偶数") else: print(& ...

- Codeforces 1149C - Tree Generator™(线段树+转化+标记维护)

Codeforces 题目传送门 & 洛谷题目传送门 首先考虑这个所谓的"括号树"与直径的本质是什么.考虑括号树上两点 \(x,y\),我们不妨用一个"DFS&q ...

- C++ and OO Num. Comp. Sci. Eng. - Part 5.

类 class 关键字提供了一种包含机制,将数据和操作数据的方法结合到一起,作为内置类型来使用. 类可以包含私有部分,仅其成员和 friend 类访问,公有部分可以在程序中任意位置处访问. 构造函数与 ...

- SNP 过滤(一)

通用过滤 Vcftools(http://vcftools.sourceforge.net) 对vcf文件进行过滤 第一步:过滤最低质量低于30,次等位基因深度(minor allele count) ...

- R shinydashboard ——2. 结构

目录 1.Shiny和HTML 2.结构 3. 标题Header 4. 侧边栏Siderbar 5.主体/正文Body box tabBox infoBox valueBox Layouts 1.Sh ...

- Linux-普通用户和root用户任意切换

普通用户切换为root: 1.[xnlay@bogon ~]$含义:xnlay代表当前用户,bogon指的是主机名,~表示当前用户,$表示普通用户:[root@bogon ~]#root代表是超级用户 ...

- 强化学习实战 | 表格型Q-Learning玩井字棋(一)

在 强化学习实战 | 自定义Gym环境之井子棋 中,我们构建了一个井字棋环境,并进行了测试.接下来我们可以使用各种强化学习方法训练agent出棋,其中比较简单的是Q学习,Q即Q(S, a),是状态动作 ...