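Web Scraping -- Scrapy Downloader Middleware

Downloader Middleware

The downloader middleware is a framework of hooks into Scrapy's request/response processing. It is a light, low-level system for globally altering Scrapy's requests and responses.

Activating a downloader middleware

To activate a downloader middleware component, add it to the DOWNLOADER_MIDDLEWARES setting. This setting is a dict whose keys are the middleware class paths and whose values are the middleware orders.

Here is an example: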

DOWNLOADER_MIDDLEWARES = {

'myproject.middlewares.CustomDownloaderMiddleware': 543,

}

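The DOWNLOADER_MIDDLEWARES setting is merged with the DOWNLOADER_MIDDLEWARES_BASE setting defined in Scrapy (it does not replace it). The result is then sorted by order to produce the final ordered list of enabled middlewares. The first middleware is the one closest to the engine, and the last is the one closest to the downloader. In other words, the process_request() methods are called in increasing order (100, 200, 300, ...), and the process_response() methods are called in decreasing order.

To decide which order to assign to your middleware, look at the DOWNLOADER_MIDDLEWARES_BASE setting and pick a value according to where you want to insert it. The order matters because each middleware performs a different action. Your middleware may depend on some earlier (or later) middleware having been applied.

To disable a built-in middleware (one defined in DOWNLOADER_MIDDLEWARES_BASE and enabled by default), define it in your project's DOWNLOADER_MIDDLEWARES setting and assign None as its value. For example, to turn off the user-agent middleware: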

DOWNLOADER_MIDDLEWARES = {

'myproject.middlewares.CustomDownloaderMiddleware': 543,

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,

}
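The merge-and-sort step can be sketched in plain Python. This is a simplified model, not Scrapy's own code. The helper build_middleware_order is hypothetical. Only a few of the built-in entries of DOWNLOADER_MIDDLEWARES_BASE are listed, with the order values used in Scrapy 1.5.

```python
# A subset of DOWNLOADER_MIDDLEWARES_BASE (class path -> order) as in Scrapy 1.5
DOWNLOADER_MIDDLEWARES_BASE = {
    'scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware': 100,
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 500,
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': 550,
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 750,
    'scrapy.downloadermiddlewares.stats.DownloaderStats': 850,
}

# Project setting from the example above
DOWNLOADER_MIDDLEWARES = {
    'myproject.middlewares.CustomDownloaderMiddleware': 543,
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
}


def build_middleware_order(base, custom):
    """Hypothetical helper: merge the two dicts, drop disabled entries, sort by order."""
    merged = dict(base)    # start from the built-in defaults
    merged.update(custom)  # project entries add to or override the defaults
    enabled = {path: order for path, order in merged.items() if order is not None}
    # Lowest order = closest to the engine, highest = closest to the downloader
    return sorted(enabled, key=enabled.get)


order = build_middleware_order(DOWNLOADER_MIDDLEWARES_BASE, DOWNLOADER_MIDDLEWARES)
for path in order:
    print(path)
```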

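Finally, keep in mind that some middlewares must be enabled through a particular setting. For example, HttpCacheMiddleware only takes effect when HTTPCACHE_ENABLED is set to True.

Writing your own downloader middleware

Writing a downloader middleware is easy. Each middleware component is a Python class that defines one or more of the following methods:

class scrapy.downloadermiddlewares.DownloaderMiddleware

1. process_request(request, spider)

This method is called for each request that goes through the downloader middleware.

process_request() should do one of the following: return None, return a Response object, return a Request object, or raise IgnoreRequest.

If it returns None, Scrapy continues processing this request. It executes all other middlewares until, finally, the appropriate download handler is called and the request is performed (its response is downloaded).

If it returns a Response object, Scrapy won't call any other process_request() or process_exception() methods, or the appropriate download function; it returns that response. The process_response() methods of the installed middlewares are still called on every response.

If it returns a Request object, Scrapy stops calling process_request() methods and reschedules the returned request. Once the newly returned request is performed, the appropriate middleware chain is called on the downloaded response.

If it raises an IgnoreRequest exception, the process_exception() methods of the installed downloader middlewares are called. If none of them handles the exception, the errback function of the request (Request.errback) is called. If no code handles the raised exception, it is ignored and not logged (unlike other exceptions).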

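| Parameter | Description |
|---|---|
| request (Request object) | the request being processed |
| spider (Spider object) | the spider for which this request is intended |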
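The return-value rules above can be summarised in a small pure-Python model. It is a simplification, not Scrapy's implementation. Request, Response, IgnoreRequest, run_process_request and the three example middlewares are stand-ins defined only for illustration. The model also leaves out process_response(), process_exception() and Deferreds.

```python
class Request:
    """Minimal stand-in for scrapy.Request (illustration only)."""

    def __init__(self, url):
        self.url = url
        self.meta = {}


class Response:
    """Minimal stand-in for scrapy.http.Response (illustration only)."""

    def __init__(self, url, body=b''):
        self.url = url
        self.body = body


class IgnoreRequest(Exception):
    """Stand-in for scrapy.exceptions.IgnoreRequest."""


def run_process_request(middlewares, request, download):
    """Simplified model: call process_request() in order and honour its return value."""
    for mw in middlewares:
        result = mw.process_request(request, None)  # IgnoreRequest propagates to the caller
        if result is None:
            continue  # keep going down the chain
        if isinstance(result, (Request, Response)):
            # Short-circuit: skip the remaining middlewares and the download.
            # (Real Scrapy then reschedules a Request, or runs process_response() on a Response.)
            return result
        raise TypeError('process_request() must return None, a Response or a Request')
    return download(request)  # every middleware returned None: actually download it


class SetProxy:
    def process_request(self, request, spider):
        request.meta['proxy'] = 'http://proxy.example:8080'  # hypothetical proxy URL
        return None


class BlockAdmin:
    def process_request(self, request, spider):
        if '/admin' in request.url:
            raise IgnoreRequest(request.url)
        return None


class ServeFromCache:
    def process_request(self, request, spider):
        if request.url.endswith('/cached'):
            return Response(request.url, body=b'from cache')  # no download needed
        return None


def fake_download(request):
    return Response(request.url, body=b'downloaded')


chain = [SetProxy(), BlockAdmin(), ServeFromCache()]
page_req = Request('http://example.com/page')
page = run_process_request(chain, page_req, fake_download)
cached = run_process_request(chain, Request('http://example.com/cached'), fake_download)
try:
    run_process_request(chain, Request('http://example.com/admin'), fake_download)
    ignored = False
except IgnoreRequest:
    ignored = True  # real Scrapy would now call the process_exception() methods
print(page.body, cached.body, ignored)
```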

Demo:

Run the following commands:

scrapy startproject httpbintest

cd httpbintest

scrapy genspider httpbin httpbin.org

Then open the project and change the contents of httpbin.py to:

import scrapy


class HttpbinSpider(scrapy.Spider):
    name = 'httpbin'
    allowed_domains = ['httpbin.org']
    start_urls = ['http://httpbin.org/']

    def parse(self, response):
        # Print the body of the downloaded page
        print(response.text)

Run scrapy crawl httpbin.

The output is:

2018-10-11 12:04:53 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: httpbintest)

2018-10-11 12:04:53 [scrapy.utils.log] INFO: Versions: lxml 4.1.0.0, libxml2 2.9.4, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 15 2017, 03:27:45) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 17.2.0 (OpenSSL 1.0.2p 14 Aug 2018), cryptography 2.0.3, Platform Windows-7-6.1.7601-SP1

2018-10-11 12:04:53 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'httpbintest', 'NEWSPIDER_MODULE': 'httpbintest.spiders', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['httpbintest.spiders']}

2018-10-11 12:04:54 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2018-10-11 12:04:55 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',

'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2018-10-11 12:04:55 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2018-10-11 12:04:55 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2018-10-11 12:04:55 [scrapy.core.engine] INFO: Spider opened

2018-10-11 12:04:55 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2018-10-11 12:04:55 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-10-11 12:04:56 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://httpbin.org/robots.txt> (referer: None)

2018-10-11 12:04:58 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://httpbin.org/> (referer: None)

(The HTML source of the httpbin.org home page is printed here; it is omitted for brevity.)

2018-10-11 12:04:58 [scrapy.core.engine] INFO: Closing spider (finished)

2018-10-11 12:04:58 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 430,

'downloader/request_count': 2,

'downloader/request_method_count/GET': 2,

'downloader/response_bytes': 10556,

'downloader/response_count': 2,

'downloader/response_status_count/200': 2,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2018, 10, 11, 4, 4, 58, 747159),

'log_count/DEBUG': 3,

'log_count/INFO': 7,

'response_received_count': 2,

'scheduler/dequeued': 1,

'scheduler/dequeued/memory': 1,

'scheduler/enqueued': 1,

'scheduler/enqueued/memory': 1,

'start_time': datetime.datetime(2018, 10, 11, 4, 4, 55, 342964)}

2018-10-11 12:04:58 [scrapy.core.engine] INFO: Spider closed (finished)

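The output above is the HTML of the httpbin.org home page. To see the IP address that our requests come from, change start_urls to ['http://httpbin.org/get']. This endpoint echoes the request back as JSON, and the printed result then contains the line "origin": "221.208.253.90". An online IP lookup shows that this is our local address (China Unicom, Harbin, Heilongjiang Province). Next, we want to write a proxy middleware that disguises this IP.

Now we write the code in middlewares.py:

import logging


class ProxyMiddleware(object):  # proxy middleware
    logger = logging.getLogger(__name__)  # convenient way to produce Scrapy log output

    def process_request(self, request, spider):
        self.logger.debug('Using Proxy')
        # Set the proxy for this request; Scrapy will send the request through it
        request.meta['proxy'] = 'http://211.101.136.86:49784'
        return None

Then enable the middleware in settings.py. The value 543 places it between the built-in UserAgentMiddleware (500) and RetryMiddleware (550), as the "Enabled downloader middlewares" list in the log below shows: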

DOWNLOADER_MIDDLEWARES = {

'httpbintest.middlewares.ProxyMiddleware': 543,

}

Then run scrapy crawl httpbin.

The output is:

2018-10-11 14:16:58 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: httpbintest)

2018-10-11 14:16:58 [scrapy.utils.log] INFO: Versions: lxml 4.1.0.0, libxml2 2.9.4, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 15 2017, 03:27:45) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 17.2.0 (OpenSSL 1.0.2p 14 Aug 2018), cryptography 2.0.3, Platform Windows-7-6.1.7601-SP1

2018-10-11 14:16:58 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'httpbintest', 'NEWSPIDER_MODULE': 'httpbintest.spiders', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['httpbintest.spiders']}

2018-10-11 14:16:58 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2018-10-11 14:16:59 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',

'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'httpbintest.middlewares.ProxyMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2018-10-11 14:16:59 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2018-10-11 14:16:59 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2018-10-11 14:16:59 [scrapy.core.engine] INFO: Spider opened

2018-10-11 14:16:59 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2018-10-11 14:16:59 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-10-11 14:16:59 [httpbintest.middlewares] DEBUG: Using Proxy

2018-10-11 14:16:59 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://httpbin.org/robots.txt> (referer: None)

2018-10-11 14:16:59 [httpbintest.middlewares] DEBUG: Using Proxy

2018-10-11 14:17:00 [scrapy.core.engine] DEBUG: Crawled (200) <GET http://httpbin.org/get> (referer: None)

{

"args": {},

"headers": {

"Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",

"Accept-Encoding": "gzip,deflate",

"Accept-Language": "en",

"Connection": "close",

"Host": "httpbin.org",

"User-Agent": "Scrapy/1.5.1 (+https://scrapy.org)"

},

"origin": "211.101.136.86",

"url": "http://httpbin.org/get"

}

2018-10-11 14:17:00 [scrapy.core.engine] INFO: Closing spider (finished)

2018-10-11 14:17:00 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 433,

'downloader/request_count': 2,

'downloader/request_method_count/GET': 2,

'downloader/response_bytes': 794,

'downloader/response_count': 2,

'downloader/response_status_count/200': 2,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2018, 10, 11, 6, 17, 0, 472256),

'log_count/DEBUG': 5,

'log_count/INFO': 7,

'response_received_count': 2,

'scheduler/dequeued': 1,

'scheduler/dequeued/memory': 1,

'scheduler/enqueued': 1,

'scheduler/enqueued/memory': 1,

'start_time': datetime.datetime(2018, 10, 11, 6, 16, 59, 96177)}

2018-10-11 14:17:00 [scrapy.core.engine] INFO: Spider closed (finished)

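2. process_response(request, response, spider)

process_response() should do one of the following: return a Response object, return a Request object, or raise an IgnoreRequest exception.

If it returns a Response (either the same response that was passed in or a brand-new object), that response is passed on to the process_response() methods of the other middlewares in the chain.

If it returns a Request object, the middleware chain is halted and the returned request is rescheduled for download. This works the same way as when process_request() returns a request.

If it raises an IgnoreRequest exception, the errback function of the request (Request.errback) is called. If no code handles the raised exception, it is ignored and not logged (unlike other exceptions).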

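| Parameter | Description |
|---|---|
| request (Request object) | the request that originated the response |
| response (Response object) | the response being processed |
| spider (Spider object) | the spider for which this response is intended |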

Now update middlewares.py as follows:

import logging


class ProxyMiddleware(object):  # proxy middleware
    logger = logging.getLogger(__name__)  # convenient way to produce Scrapy log output

    def process_request(self, request, spider):
        self.logger.debug('Using Proxy')
        # Set the proxy for this request; Scrapy will send the request through it
        request.meta['proxy'] = 'http://211.101.136.86:49784'
        return None

    def process_response(self, request, response, spider):
        # For demonstration only: overwrite the status code of every response
        response.status = 201
        return response

In httpbin.py, write:

import scrapy


class HttpbinSpider(scrapy.Spider):
    name = 'httpbin'
    allowed_domains = ['httpbin.org']
    start_urls = ['http://httpbin.org/get']

    def parse(self, response):
        print(response.text)
        print(response.status)

Run scrapy crawl httpbin and look at the output:


2018-10-11 14:50:40 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: httpbintest)

2018-10-11 14:50:40 [scrapy.utils.log] INFO: Versions: lxml 4.1.0.0, libxml2 2.9.4, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 15 2017, 03:27:45) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 17.2.0 (OpenSSL 1.0.2p 14 Aug 2018), cryptography 2.0.3, Platform Windows-7-6.1.7601-SP1

2018-10-11 14:50:40 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'httpbintest', 'NEWSPIDER_MODULE': 'httpbintest.spiders', 'ROBOTSTXT_OBEY': True, 'SPIDER_MODULES': ['httpbintest.spiders']}

2018-10-11 14:50:40 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2018-10-11 14:50:40 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.robotstxt.RobotsTxtMiddleware',

'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'httpbintest.middlewares.ProxyMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2018-10-11 14:50:40 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2018-10-11 14:50:40 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2018-10-11 14:50:40 [scrapy.core.engine] INFO: Spider opened

2018-10-11 14:50:40 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2018-10-11 14:50:40 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-10-11 14:50:40 [httpbintest.middlewares] DEBUG: Using Proxy

2018-10-11 14:50:41 [scrapy.core.engine] DEBUG: Crawled (201) <GET http://httpbin.org/robots.txt> (referer: None)

2018-10-11 14:50:41 [httpbintest.middlewares] DEBUG: Using Proxy

2018-10-11 14:50:41 [scrapy.core.engine] DEBUG: Crawled (201) <GET http://httpbin.org/get> (referer: None)

{

"args": {},

"headers": {

"Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",

"Accept-Encoding": "gzip,deflate",

"Accept-Language": "en",

"Connection": "close",

"Host": "httpbin.org",

"User-Agent": "Scrapy/1.5.1 (+https://scrapy.org)"

},

"origin": "211.101.136.86",

"url": "http://httpbin.org/get"

}

201

2018-10-11 14:50:41 [scrapy.core.engine] INFO: Closing spider (finished)

2018-10-11 14:50:41 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/request_bytes': 433,

'downloader/request_count': 2,

'downloader/request_method_count/GET': 2,

'downloader/response_bytes': 794,

'downloader/response_count': 2,

'downloader/response_status_count/200': 2,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2018, 10, 11, 6, 50, 41, 966878),

'log_count/DEBUG': 5,

'log_count/INFO': 7,

'response_received_count': 2,

'scheduler/dequeued': 1,

'scheduler/dequeued/memory': 1,

'scheduler/enqueued': 1,

'scheduler/enqueued/memory': 1,

'start_time': datetime.datetime(2018, 10, 11, 6, 50, 40, 436791)}

2018-10-11 14:50:41 [scrapy.core.engine] INFO: Spider closed (finished)

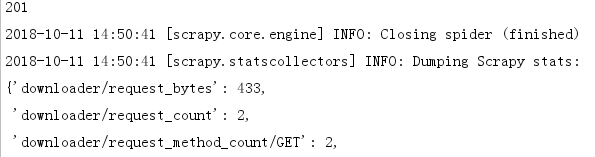

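In the output we can see that the status code 201 is printed, and the log also reports Crawled (201). After the downloader fetched the response, our middleware's process_response() rewrote its status code to 201 before passing it on. As a result, the response that reaches parse() has status 201.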
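The stats at the end of the log still report downloader/response_status_count/200, even though the spider printed 201. That follows from the ordering rule: process_response() runs from the highest order value to the lowest. So DownloaderStats (850) counts the response before ProxyMiddleware (543) rewrites it. The toy model below illustrates this. StatsMiddleware and StatusRewriteMiddleware are simplified stand-ins, not Scrapy classes.

```python
from collections import Counter


class Response:
    """Minimal stand-in for a Scrapy response (illustration only)."""

    def __init__(self, status):
        self.status = status


class StatsMiddleware:
    """Plays the role of DownloaderStats: counts response status codes."""

    def __init__(self):
        self.counts = Counter()

    def process_response(self, request, response, spider):
        self.counts[response.status] += 1
        return response


class StatusRewriteMiddleware:
    """Plays the role of the ProxyMiddleware above: forces the status to 201."""

    def process_response(self, request, response, spider):
        response.status = 201
        return response


def run_process_response(ordered, request, response):
    # `ordered` is sorted by ascending order value (engine side first), so
    # process_response() walks it backwards, from the downloader to the engine.
    for _, mw in reversed(ordered):
        response = mw.process_response(request, response, None)
    return response


stats = StatsMiddleware()
chain = [(543, StatusRewriteMiddleware()), (850, stats)]
final = run_process_response(chain, request=None, response=Response(200))
print(dict(stats.counts), final.status)  # the stats saw 200, the spider gets 201
```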

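3. process_exception(request, exception, spider)

Scrapy calls process_exception() when a download handler or a process_request() method of a downloader middleware raises an exception, including an IgnoreRequest exception.

process_exception() should return one of the following: None, a Response object, or a Request object.

If it returns None, Scrapy continues processing this exception. It calls the process_exception() methods of the other installed middlewares until no middleware is left, and then the default exception handling kicks in.

If it returns a Response object, the process_response() method chain of the installed middlewares is started, and Scrapy won't call any other middleware's process_exception() method.

If it returns a Request object, the returned request is rescheduled for download. This stops the execution of the middlewares' process_exception() methods, just as returning a response would.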

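| Parameter | Description |
|---|---|
| request (Request object) | the request that generated the exception |
| exception (Exception object) | the raised exception |
| spider (Spider object) | the spider for which this request is intended |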
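A process_exception() that returns the request unconditionally, like the one in the Google example below, retries forever when the failure is permanent. A safer pattern, sketched here under assumptions, counts attempts in request.meta and returns None once the limit is reached. The key exception_retries and MAX_ATTEMPTS are hypothetical. Returning None hands the exception to the remaining middlewares and the default handling, including the request's errback. _FakeRequest stands in for scrapy.Request. Note that Scrapy's built-in RetryMiddleware already retries common network errors up to RETRY_TIMES times.

```python
import logging


class BoundedRetryMiddleware(object):
    """Retry failed downloads from process_exception(), but only a few times (sketch)."""

    MAX_ATTEMPTS = 3  # hypothetical limit
    logger = logging.getLogger(__name__)

    def process_exception(self, request, exception, spider):
        attempts = request.meta.get('exception_retries', 0)  # hypothetical meta key
        if attempts >= self.MAX_ATTEMPTS:
            self.logger.debug('Giving up on %s after %d retries: %r',
                              request.url, attempts, exception)
            return None  # let other middlewares / the default handling deal with it
        meta = dict(request.meta, exception_retries=attempts + 1)
        # Returning a Request stops the process_exception() chain and reschedules the copy
        return request.replace(meta=meta, dont_filter=True)


# Minimal stand-in for scrapy.Request (illustration only)
class _FakeRequest:
    def __init__(self, url, meta=None, dont_filter=False):
        self.url = url
        self.meta = meta or {}
        self.dont_filter = dont_filter

    def replace(self, **kwargs):
        fields = {'url': self.url, 'meta': self.meta, 'dont_filter': self.dont_filter}
        fields.update(kwargs)
        return _FakeRequest(**fields)


mw = BoundedRetryMiddleware()
current = _FakeRequest('http://www.google.com/')
results = []
for _ in range(10):  # simulate a download that keeps failing; cap the demo at 10 rounds
    result = mw.process_exception(current, ConnectionRefusedError(), spider=None)
    results.append(result)
    if result is None:
        break
    current = result
print(len(results), results[-1])
```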

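Next, let's crawl Google through a proxy. First run scrapy genspider google www.google.com, which creates google.py in the spiders directory. To avoid unnecessary trouble, set ROBOTSTXT_OBEY to False in settings.py.

Now put the following code in google.py:

# -*- coding: utf-8 -*-
import scrapy


class GoogleSpider(scrapy.Spider):
    name = 'google'
    allowed_domains = ['www.google.com']
    start_urls = ['http://www.google.com/']

    # make_requests_from_url() is deprecated (see the warning in the log below);
    # newer code should override start_requests() instead
    def make_requests_from_url(self, url):
        # download_timeout in meta sets a 10-second download timeout for this request
        return scrapy.Request(url=url, meta={'download_timeout': 10}, callback=self.parse)

    def parse(self, response):
        print(response.text)

And in middlewares.py, write:

import logging


class ProxyMiddleware(object):  # proxy middleware
    logger = logging.getLogger(__name__)  # convenient way to produce Scrapy log output

    def process_exception(self, request, exception, spider):
        # Reschedule the failed request. Nothing limits the number of attempts,
        # so a request that keeps failing is retried over and over (see the log below).
        return request

Run scrapy crawl google and check the output: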

2018-10-11 16:21:07 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: httpbintest)

2018-10-11 16:21:07 [scrapy.utils.log] INFO: Versions: lxml 4.1.0.0, libxml2 2.9.4, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 15 2017, 03:27:45) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 17.2.0 (OpenSSL 1.0.2p 14 Aug 2018), cryptography 2.0.3, Platform Windows-7-6.1.7601-SP1

2018-10-11 16:21:07 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'httpbintest', 'NEWSPIDER_MODULE': 'httpbintest.spiders', 'SPIDER_MODULES': ['httpbintest.spiders']}

2018-10-11 16:21:07 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2018-10-11 16:21:08 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'httpbintest.middlewares.ProxyMiddleware',

'scrapy.downloadermiddlewares.retry.RetryMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2018-10-11 16:21:08 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2018-10-11 16:21:08 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2018-10-11 16:21:08 [scrapy.core.engine] INFO: Spider opened

2018-10-11 16:21:08 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2018-10-11 16:21:08 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-10-11 16:21:08 [py.warnings] WARNING: G:\Anaconda3-5.0.1\install\lib\site-packages\scrapy\spiders\__init__.py:76: UserWarning: Spider.make_requests_from_url method is deprecated; it won't be called in future Scrapy releases. Please override Spider.start_requests method instead (see httpbintest.spiders.google.GoogleSpider).

  cls.__module__, cls.__name__

2018-10-11 16:21:09 [scrapy.downloadermiddlewares.retry] DEBUG: Retrying <GET http://www.google.com/> (failed 1 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:10 [scrapy.downloadermiddlewares.retry] DEBUG: Retrying <GET http://www.google.com/> (failed 2 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:11 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:12 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:13 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:14 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:15 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:16 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:17 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:18 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:19 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:20 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:21 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:22 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:23 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:24 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:25 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:26 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other side: 10061: No connection could be made because the target machine actively refused it..

2018-10-11 16:21:27 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other

side: 10061: 由于目标计算机积极拒绝,无法连接。.

2018-10-11 16:21:28 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other

side: 10061: 由于目标计算机积极拒绝,无法连接。.

2018-10-11 16:21:29 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other

side: 10061: 由于目标计算机积极拒绝,无法连接。.

2018-10-11 16:21:30 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other

side: 10061: 由于目标计算机积极拒绝,无法连接。.

2018-10-11 16:21:31 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other

side: 10061: 由于目标计算机积极拒绝,无法连接。.

2018-10-11 16:21:31 [scrapy.crawler] INFO: Received SIGINT, shutting down gracefully. Send again to force

2018-10-11 16:21:31 [scrapy.core.engine] INFO: Closing spider (shutdown)

2018-10-11 16:21:32 [scrapy.downloadermiddlewares.retry] DEBUG: Gave up retrying <GET http://www.google.com/> (failed 3 times): Connection was refused by other

side: 10061: 由于目标计算机积极拒绝,无法连接。.

2018-10-11 16:21:32 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/exception_count': 24,

'downloader/exception_type_count/twisted.internet.error.ConnectionRefusedError': 24,

'downloader/request_bytes': 5112,

'downloader/request_count': 24,

'downloader/request_method_count/GET': 24,

'finish_reason': 'shutdown',

'finish_time': datetime.datetime(2018, 10, 11, 8, 21, 32, 343622),

'log_count/DEBUG': 25,

'log_count/INFO': 8,

'log_count/WARNING': 1,

'retry/count': 2,

'retry/max_reached': 22,

'retry/reason_count/twisted.internet.error.ConnectionRefusedError': 2,

'scheduler/dequeued': 24,

'scheduler/dequeued/memory': 24,

'scheduler/enqueued': 25,

'scheduler/enqueued/memory': 25,

'start_time': datetime.datetime(2018, 10, 11, 8, 21, 8, 197241)}

2018-10-11 16:21:32 [scrapy.core.engine] INFO: Spider closed (shutdown)

To stop it from endlessly repeating these error messages, disable the built-in retry middleware. Add the following to settings.py:

DOWNLOADER_MIDDLEWARES = {
    'httpbintest.middlewares.ProxyMiddleware': 543,
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': None,
}
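Why does a value of None remove RetryMiddleware? And why does ProxyMiddleware end up between UserAgentMiddleware and MetaRefreshMiddleware in the "Enabled downloader middlewares" list that the next run prints?

The sketch below is a simplified model, not Scrapy's actual implementation. It does three things:

- merges the project's DOWNLOADER_MIDDLEWARES into a copy of the built-in DOWNLOADER_MIDDLEWARES_BASE;
- drops every entry whose value is None;
- sorts the remaining entries by their order value.

The order values are the ones I believe Scrapy 1.x ships in DOWNLOADER_MIDDLEWARES_BASE, restricted to the middlewares that appear in this article's logs. Treat them as an assumption and check the defaults of your installed version.

```python
# Simplified model (an assumption, not Scrapy's real code) of how the
# project's DOWNLOADER_MIDDLEWARES is combined with DOWNLOADER_MIDDLEWARES_BASE.

# Built-in order values (Scrapy 1.x defaults, as far as I know),
# limited to the middlewares that show up in this article's logs.
BASE = {
    'scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware': 300,
    'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware': 350,
    'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware': 400,
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': 500,
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': 550,
    'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware': 580,
    'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware': 590,
    'scrapy.downloadermiddlewares.redirect.RedirectMiddleware': 600,
    'scrapy.downloadermiddlewares.cookies.CookiesMiddleware': 700,
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 750,
    'scrapy.downloadermiddlewares.stats.DownloaderStats': 850,
}

# The project setting from settings.py above.
CUSTOM = {
    'httpbintest.middlewares.ProxyMiddleware': 543,
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': None,
}


def enabled_middlewares(base, custom):
    """Return middleware paths ordered from the engine side to the downloader side."""
    merged = dict(base)
    merged.update(custom)  # project values override the built-in ones
    # A value of None disables the middleware entirely.
    active = {path: order for path, order in merged.items() if order is not None}
    # Lower order = closer to the engine; higher order = closer to the downloader.
    return sorted(active, key=active.get)


for path in enabled_middlewares(BASE, CUSTOM):
    print(path)
```

Running it prints the same eleven middlewares, in the same order, as the "Enabled downloader middlewares" list in the log that follows. Scrapy also has a RETRY_ENABLED setting. Putting RETRY_ENABLED = False in settings.py switches off retrying without editing DOWNLOADER_MIDDLEWARES.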

Run the code again and you will see that the repetition is gone:

2018-10-11 16:40:39 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: httpbintest)

2018-10-11 16:40:39 [scrapy.utils.log] INFO: Versions: lxml 4.1.0.0, libxml2 2.9.4, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 15 2017, 03:27:45) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 17.2.0 (OpenSSL 1.0.2p 14 Aug 2018), cryptography 2.0.3, Platform Windows-7-6.1.7601-SP1

2018-10-11 16:40:39 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'httpbintest', 'NEWSPIDER_MODULE': 'httpbintest.spiders', 'SPIDER_MODULES': ['httpbintest.spiders']}

2018-10-11 16:40:39 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2018-10-11 16:40:39 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'httpbintest.middlewares.ProxyMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2018-10-11 16:40:39 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2018-10-11 16:40:39 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2018-10-11 16:40:39 [scrapy.core.engine] INFO: Spider opened

2018-10-11 16:40:39 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2018-10-11 16:40:39 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-10-11 16:40:39 [py.warnings] WARNING: G:\Anaconda3-5.0.1\install\lib\site-packages\scrapy\spiders\__init__.py:76: UserWarning: Spider.make_requests_from_url method is deprecated; it won't be called in future Scrapy releases. Please override Spider.start_requests method instead (see httpbintest.spiders.google.GoogleSpider).

cls.__module__, cls.__name__

2018-10-11 16:40:40 [scrapy.dupefilters] DEBUG: Filtered duplicate request: <GET http://www.google.com/> - no more duplicates will be shown (see DUPEFILTER_DEBUG to show all duplicates)

2018-10-11 16:40:40 [scrapy.core.engine] INFO: Closing spider (finished)

2018-10-11 16:40:40 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/exception_count': 1,

'downloader/exception_type_count/twisted.internet.error.ConnectionRefusedError': 1,

'downloader/request_bytes': 213,

'downloader/request_count': 1,

'downloader/request_method_count/GET': 1,

'dupefilter/filtered': 1,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2018, 10, 11, 8, 40, 40, 872314),

'log_count/DEBUG': 2,

'log_count/INFO': 7,

'log_count/WARNING': 1,

'scheduler/dequeued': 1,

'scheduler/dequeued/memory': 1,

'scheduler/enqueued': 1,

'scheduler/enqueued/memory': 1,

'start_time': datetime.datetime(2018, 10, 11, 8, 40, 39, 842255)}

2018-10-11 16:40:40 [scrapy.core.engine] INFO: Spider closed (finished)

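Next, modify process_exception. The first request to Google (www.google.com) cannot get through, so the error will be caught by process_exception. Add self.logger.debug('Get Exception') there so the log shows that an error occurred: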

import logging


class ProxyMiddleware(object):  # proxy middleware
    logger = logging.getLogger(__name__)  # makes it easy to write into Scrapy's log output

    def process_exception(self, request, exception, spider):
        self.logger.debug('Get Exception')
        return request
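What happens to the value that process_exception returns? According to the Scrapy documentation:

- Returning None lets the exception continue to the process_exception methods of the remaining middlewares.
- Returning a Response starts the process_response chain.
- Returning a Request stops the chain and reschedules that request.

Scrapy also calls process_exception in reverse order. It starts from the middleware closest to the downloader, which is the one with the highest order value.

The toy model below is not Scrapy code and only illustrates this control flow. All of its classes are hypothetical. GiveUpMiddleware stands in for RetryMiddleware after it has run out of retries.

```python
# Toy model (not Scrapy code) of how process_exception return values
# are handled. All classes here are hypothetical stand-ins.


class Request:
    def __init__(self, url):
        self.url = url
        self.meta = {}


class GiveUpMiddleware:
    """Stand-in for RetryMiddleware once it has run out of retries."""

    def process_exception(self, request, exception, spider):
        return None  # None: pass the exception on to the next middleware


class ProxyMiddleware:
    """Same behaviour as the article's ProxyMiddleware."""

    def process_exception(self, request, exception, spider):
        return request  # a Request: stop here and reschedule it


def run_exception_chain(middlewares, request, exception, spider=None):
    """middlewares is ordered from the engine side to the downloader side."""
    # process_exception is called in reverse order: downloader side first.
    for mw in reversed(middlewares):
        result = mw.process_exception(request, exception, spider)
        if result is not None:
            # A Request (or Response) ends the process_exception chain.
            return type(mw).__name__, result
    # Nobody handled it: Scrapy would pass the failure on (e.g. to an errback).
    return None, exception


# Orders 543 (ProxyMiddleware) and 550 (RetryMiddleware), engine side first.
chain = [ProxyMiddleware(), GiveUpMiddleware()]
handler, result = run_exception_chain(
    chain, Request('http://www.google.com/'), ConnectionRefusedError())
print(handler, result.url)  # ProxyMiddleware http://www.google.com/
```

This is also why the earlier run kept logging "Gave up retrying". RetryMiddleware (550) gave up and returned None. The exception then reached ProxyMiddleware (543), which returned the request and put it back into the queue.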

Run it again and you will see:

2018-10-11 16:45:36 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: httpbintest)

2018-10-11 16:45:36 [scrapy.utils.log] INFO: Versions: lxml 4.1.0.0, libxml2 2.9.4, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 15 2017, 03:27:45) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 17.2.0 (OpenSSL 1.0.2p 14 Aug 2018), cryptography 2.0.3, Platform Windows-7-6.1.7601-SP1

2018-10-11 16:45:36 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'httpbintest', 'NEWSPIDER_MODULE': 'httpbintest.spiders', 'SPIDER_MODULES': ['httpbintest.spiders']}

2018-10-11 16:45:36 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2018-10-11 16:45:36 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'httpbintest.middlewares.ProxyMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2018-10-11 16:45:36 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2018-10-11 16:45:36 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2018-10-11 16:45:36 [scrapy.core.engine] INFO: Spider opened

2018-10-11 16:45:36 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2018-10-11 16:45:36 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-10-11 16:45:36 [py.warnings] WARNING: G:\Anaconda3-5.0.1\install\lib\site-packages\scrapy\spiders\__init__.py:76: UserWarning: Spider.make_requests_from_url method is deprecated; it won't be called in future Scrapy releases. Please override Spider.start_requests method instead (see httpbintest.spiders.google.GoogleSpider).

cls.__module__, cls.__name__

2018-10-11 16:45:37 [httpbintest.middlewares] DEBUG: Get Exception

2018-10-11 16:45:37 [scrapy.dupefilters] DEBUG: Filtered duplicate request: <GET http://www.google.com/> - no more duplicates will be shown (see DUPEFILTER_DEBUG to show all duplicates)

2018-10-11 16:45:37 [scrapy.core.engine] INFO: Closing spider (finished)

2018-10-11 16:45:37 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/exception_count': 1,

'downloader/exception_type_count/twisted.internet.error.ConnectionRefusedError': 1,

'downloader/request_bytes': 213,

'downloader/request_count': 1,

'downloader/request_method_count/GET': 1,

'dupefilter/filtered': 1,

'finish_reason': 'finished',

'finish_time': datetime.datetime(2018, 10, 11, 8, 45, 37, 546283),

'log_count/DEBUG': 3,

'log_count/INFO': 7,

'log_count/WARNING': 1,

'scheduler/dequeued': 1,

'scheduler/dequeued/memory': 1,

'scheduler/enqueued': 1,

'scheduler/enqueued/memory': 1,

'start_time': datetime.datetime(2018, 10, 11, 8, 45, 36, 500223)}

2018-10-11 16:45:37 [scrapy.core.engine] INFO: Spider closed (finished)

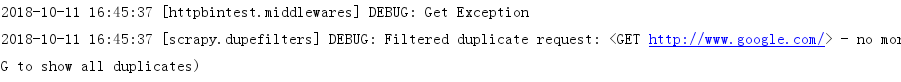

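Look closely: the Get Exception line logged by httpbintest.middlewares shows that process_exception caught the error.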
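The "Filtered duplicate request" line in the run above comes from the scheduler's duplicate filter. The request returned from process_exception has the same URL as the one already scheduled, so it is dropped unless its dont_filter flag is True.

The toy scheduler below models this. It is an illustration, not Scrapy's scheduler. It fingerprints requests by URL only, whereas Scrapy's fingerprint also takes the HTTP method and body into account.

```python
# Toy scheduler (not Scrapy's) showing why a rescheduled request can be
# dropped as a duplicate. Fingerprint = URL only, a simplification.


class Request:
    def __init__(self, url, dont_filter=False):
        self.url = url
        self.dont_filter = dont_filter


class ToyScheduler:
    def __init__(self):
        self.seen = set()  # fingerprints of requests already scheduled
        self.queue = []

    def enqueue(self, request):
        # Requests with dont_filter=True skip the duplicate check entirely.
        if not request.dont_filter:
            if request.url in self.seen:
                return False  # "Filtered duplicate request"
            self.seen.add(request.url)
        self.queue.append(request)
        return True


sched = ToyScheduler()
print(sched.enqueue(Request('http://www.google.com/')))  # True: first request
print(sched.enqueue(Request('http://www.google.com/')))  # False: filtered as duplicate
print(sched.enqueue(Request('http://www.google.com/', dont_filter=True)))  # True
```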

Now let's set a proxy in middlewares.py and see whether the request can succeed:

import logging


class ProxyMiddleware(object):  # proxy middleware
    logger = logging.getLogger(__name__)  # makes it easy to write into Scrapy's log output

    def process_exception(self, request, exception, spider):
        self.logger.debug('Get Exception')
        # Set a proxy on the request; when the request is sent again it goes through this proxy
        request.meta['proxy'] = 'http://211.101.136.86:49784'
        return request

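With this in place, return request puts the request back into the scheduler's queue, so it is sent again. Its response is then passed to the callback (parse), which prints the page source.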

Now run the code. The output is:

2018-10-11 17:06:05 [scrapy.utils.log] INFO: Scrapy 1.5.1 started (bot: httpbintest)

2018-10-11 17:06:05 [scrapy.utils.log] INFO: Versions: lxml 4.1.0.0, libxml2 2.9.4, cssselect 1.0.3, parsel 1.5.0, w3lib 1.19.0, Twisted 18.7.0, Python 3.6.3 |Anaconda custom (64-bit)| (default, Oct 15 2017, 03:27:45) [MSC v.1900 64 bit (AMD64)], pyOpenSSL 17.2.0 (OpenSSL 1.0.2p 14 Aug 2018), cryptography 2.0.3, Platform Windows-7-6.1.7601-SP1

2018-10-11 17:06:05 [scrapy.crawler] INFO: Overridden settings: {'BOT_NAME': 'httpbintest', 'NEWSPIDER_MODULE': 'httpbintest.spiders', 'SPIDER_MODULES': ['httpbintest.spiders']}

2018-10-11 17:06:06 [scrapy.middleware] INFO: Enabled extensions:

['scrapy.extensions.corestats.CoreStats',

'scrapy.extensions.telnet.TelnetConsole',

'scrapy.extensions.logstats.LogStats']

2018-10-11 17:06:06 [scrapy.middleware] INFO: Enabled downloader middlewares:

['scrapy.downloadermiddlewares.httpauth.HttpAuthMiddleware',

'scrapy.downloadermiddlewares.downloadtimeout.DownloadTimeoutMiddleware',

'scrapy.downloadermiddlewares.defaultheaders.DefaultHeadersMiddleware',

'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware',

'httpbintest.middlewares.ProxyMiddleware',

'scrapy.downloadermiddlewares.redirect.MetaRefreshMiddleware',

'scrapy.downloadermiddlewares.httpcompression.HttpCompressionMiddleware',

'scrapy.downloadermiddlewares.redirect.RedirectMiddleware',

'scrapy.downloadermiddlewares.cookies.CookiesMiddleware',

'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware',

'scrapy.downloadermiddlewares.stats.DownloaderStats']

2018-10-11 17:06:06 [scrapy.middleware] INFO: Enabled spider middlewares:

['scrapy.spidermiddlewares.httperror.HttpErrorMiddleware',

'scrapy.spidermiddlewares.offsite.OffsiteMiddleware',

'scrapy.spidermiddlewares.referer.RefererMiddleware',

'scrapy.spidermiddlewares.urllength.UrlLengthMiddleware',

'scrapy.spidermiddlewares.depth.DepthMiddleware']

2018-10-11 17:06:06 [scrapy.middleware] INFO: Enabled item pipelines:

[]

2018-10-11 17:06:06 [scrapy.core.engine] INFO: Spider opened

2018-10-11 17:06:06 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)

2018-10-11 17:06:06 [scrapy.extensions.telnet] DEBUG: Telnet console listening on 127.0.0.1:6023

2018-10-11 17:06:06 [py.warnings] WARNING: G:\Anaconda3-5.0.1\install\lib\site-packages\scrapy\spiders\__init__.py:76: UserWarning: Spider.make_requests_from_url method is deprecated; it won't be called in future Scrapy releases. Please override Spider.start_requests method instead (see httpbintest.spiders.google.GoogleSpider).

cls.__module__, cls.__name__

2018-10-11 17:06:07 [httpbintest.middlewares] DEBUG: Get Exception

2018-10-11 17:06:17 [httpbintest.middlewares] DEBUG: Get Exception

2018-10-11 17:06:21 [scrapy.crawler] INFO: Received SIGINT, shutting down gracefully. Send again to force

2018-10-11 17:06:21 [scrapy.core.engine] INFO: Closing spider (shutdown)

2018-10-11 17:06:27 [httpbintest.middlewares] DEBUG: Get Exception

2018-10-11 17:06:27 [scrapy.statscollectors] INFO: Dumping Scrapy stats:

{'downloader/exception_count': 3,

'downloader/exception_type_count/twisted.internet.error.ConnectionRefusedError': 1,

'downloader/exception_type_count/twisted.internet.error.TimeoutError': 2,

'downloader/request_bytes': 639,

'downloader/request_count': 3,

'downloader/request_method_count/GET': 3,

'finish_reason': 'shutdown',

'finish_time': datetime.datetime(2018, 10, 11, 9, 6, 27, 317766),

'log_count/DEBUG': 4,

'log_count/INFO': 8,

'log_count/WARNING': 1,

'scheduler/dequeued': 3,

'scheduler/dequeued/memory': 3,

'scheduler/enqueued': 4,

'scheduler/enqueued/memory': 4,

'start_time': datetime.datetime(2018, 10, 11, 9, 6, 6, 268562)}

2018-10-11 17:06:27 [scrapy.core.engine] INFO: Spider closed (shutdown)
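In the run above the proxy failed as well, with TimeoutError in the stats. ProxyMiddleware returns the request unconditionally, so every failure logs Get Exception and reschedules the request again, until the crawl is interrupted with SIGINT.

One way to bound this is to route a request through the proxy only once and return None if it has already been proxied. This is an assumption of mine, not the author's method. OneShotProxyMiddleware and FALLBACK_PROXY are hypothetical names, and the proxy address is a documentation-range placeholder.

The sketch relies on two Scrapy features:

- request.replace() builds a new Request with the given attributes changed.
- dont_filter=True keeps the duplicate filter from dropping the re-sent request.

```python
import logging

# Hypothetical placeholder (TEST-NET address); replace with a proxy you control.
FALLBACK_PROXY = 'http://203.0.113.10:8080'


class OneShotProxyMiddleware(object):
    """Re-send a failed request through a proxy, but only once (hypothetical)."""

    logger = logging.getLogger(__name__)

    def process_exception(self, request, exception, spider):
        if request.meta.get('proxy'):
            # Already went through the proxy and still failed: give up and let
            # the remaining middlewares / the request's errback deal with it.
            self.logger.debug('Proxy retry failed for %s', request.url)
            return None
        self.logger.debug('Get Exception, retrying %s via proxy', request.url)
        # New request with the proxy set; dont_filter=True so the scheduler's
        # duplicate filter does not drop it.
        return request.replace(
            meta=dict(request.meta, proxy=FALLBACK_PROXY),
            dont_filter=True,
        )
```

To try it, register it in DOWNLOADER_MIDDLEWARES in place of ProxyMiddleware, for example 'httpbintest.middlewares.OneShotProxyMiddleware': 543 (again a hypothetical path).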

Let's modify the code once more:

# httpbintest/spiders/google.py
import scrapy


class GoogleSpider(scrapy.Spider):
    name = 'google'
    allowed_domains = ['www.google.com']
    start_urls = ['http://www.google.com/']

    def make_requests_from_url(self, url):
        self.logger.debug('Try First Time')
        # download_timeout=10: give up on the download after 10 seconds;
        # dont_filter=True: keep the duplicate filter from dropping the request when it is re-sent
        return scrapy.Request(url=url, meta={'download_timeout': 10}, callback=self.parse, dont_filter=True)

    def parse(self, response):
        print(response.text)

# httpbintest/middlewares.py
import logging


class ProxyMiddleware(object):  # proxy middleware
    logger = logging.getLogger(__name__)  # makes it easy to write into Scrapy's log output

    def process_exception(self, request, exception, spider):
        self.logger.debug('Get Exception')
        self.logger.debug('Try Second Time')
        # Set a proxy on the request; when the request is sent again it goes through this proxy
        request.meta['proxy'] = 'http://60.208.32.201:80'
        return request

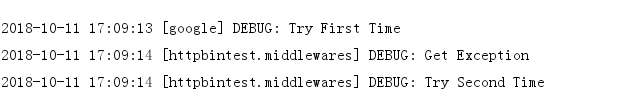

The printed result is:

OK, bye-bye!
