Flume Study Notes: Installation and Usage

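Flume is a distributed, reliable, and highly available system for aggregating massive volumes of log data. It supports custom data senders of many kinds for collecting data.

Flume can also apply simple processing to the data and write it to a variety of customizable data receivers.

Flume is designed specifically to push data from a large number of sources into the storage systems of the Hadoop ecosystem, such as HDFS and HBase.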

Guide: http://flume.apache.org/FlumeUserGuide.html

Architecture

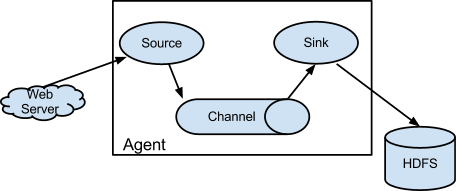

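Events run through the entire Flume data flow. An event is Flume's basic unit of data: it carries the log data as a byte array, plus header information. Events are created by a Source from data that arrives from outside the Agent. When a Source captures an event, it applies source-specific formatting and then pushes the event into one or more Channels. You can think of a Channel as a buffer that holds an event until a Sink has finished processing it. A Sink is responsible for persisting the log data or for forwarding the event to another Source.

The Flume Agent is the smallest unit that runs independently. Each Agent is one JVM. A single Agent is made up of three main components: Source, Sink, and Channel. A Flume Agent can connect to one or more other Flume Agents, and it can also receive data from one or more Flume Agents.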
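
The Source, Channel, Sink flow can be sketched as a toy model. This is not Flume's API: `Event`, `source_put`, and `sink_drain` are hypothetical names used only for illustration, and a `queue.Queue` stands in for a Channel.

```python
from dataclasses import dataclass, field
from queue import Queue, Empty


@dataclass
class Event:
    """Toy stand-in for a Flume event: a byte-array body plus string headers."""
    body: bytes
    headers: dict = field(default_factory=dict)


def source_put(channels, raw_line):
    """Source side: wrap raw data in an Event and push it to every channel."""
    event = Event(body=raw_line.encode("utf-8"), headers={"origin": "demo"})
    for channel in channels:
        channel.put(event)


def sink_drain(channel):
    """Sink side: take events until the channel is empty, return their bodies."""
    delivered = []
    while True:
        try:
            event = channel.get_nowait()
        except Empty:
            return delivered
        delivered.append(event.body.decode("utf-8"))


channel = Queue(maxsize=100)  # the buffer between source and sink
for line in ["log line 1", "log line 2"]:
    source_put([channel], line)
result = sink_drain(channel)
```

The channel decouples the two sides: the source only needs room in the buffer, and the sink only needs something to take.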

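Note: if unexpected errors or timeouts occur in a Flume pipeline and operations are retried, Flume can end up writing duplicate data, and this redundant data has to be handled downstream.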
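
One possible way to handle such duplicates downstream is sketched below. It assumes every event carries a unique identifier in a header; the header name `event_id` is hypothetical, and Flume does not add such a header unless you configure something to do so.

```python
def deduplicate(events, id_header="event_id"):
    """Keep the first occurrence of each id; later redeliveries are dropped."""
    seen = set()
    unique = []
    for event in events:
        key = event["headers"][id_header]
        if key in seen:
            continue
        seen.add(key)
        unique.append(event)
    return unique


received = [
    {"headers": {"event_id": "a1"}, "body": "login"},
    {"headers": {"event_id": "a2"}, "body": "click"},
    {"headers": {"event_id": "a1"}, "body": "login"},  # redelivered after a retry
]
unique_events = deduplicate(received)
```

For large streams the `seen` set would need to be bounded, for example by time window; that is left out of this sketch.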

For details, see the article: Flume Tutorial (1): Getting Started with Flume

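Components

Source: a Source is the active component that receives data from other applications that produce it. A Source can listen on one or more network ports to receive data, or it can read data from the local file system. Every Source must be connected to at least one Channel. Based on certain criteria, a Source can write to several Channels, replicating events to all of them or only to some.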

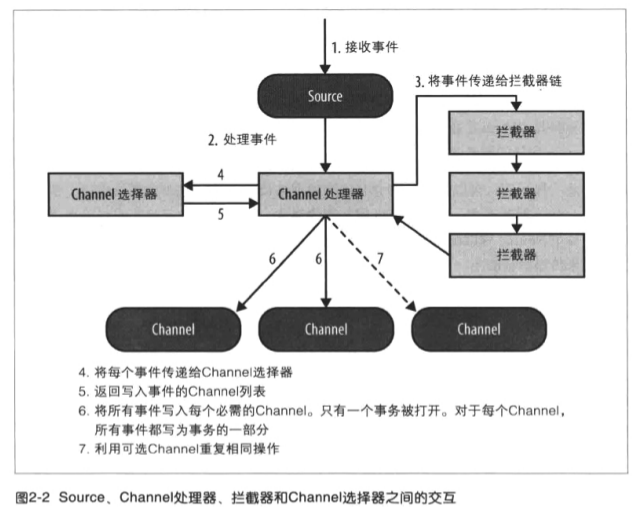

A Source writes to multiple Channels by routing events through the channel processor, then the interceptors, then the channel selector.
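
Flume ships a replicating selector and a multiplexing selector. The sketch below only imitates their behavior; the function names and the `level` header are assumptions made for illustration, not Flume's API.

```python
def replicating_selector(event, channels):
    """Copy the event to every configured channel."""
    return list(channels)


def multiplexing_selector(event, header, mapping, default):
    """Pick channels from the value of one header, falling back to a default."""
    return mapping.get(event["headers"].get(header), default)


error_event = {"headers": {"level": "error"}, "body": b"disk full"}
info_event = {"headers": {"level": "info"}, "body": b"ok"}

all_channels = replicating_selector(error_event, ["c1", "c2"])
error_channels = multiplexing_selector(
    error_event, header="level", mapping={"error": ["c2"]}, default=["c1"]
)
info_channels = multiplexing_selector(
    info_event, header="level", mapping={"error": ["c2"]}, default=["c1"]
)
```

Replication suits fan-out to independent destinations, while multiplexing suits routing by event type.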

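Channel: a Channel behaves like a queue. Sources write to it and Sinks read from it. Multiple Sources can safely write to the same Channel, and multiple Sinks can read from the same Channel.

However, a Sink can read from only one Channel. If multiple Sinks read from the same Channel, it is guaranteed that any given event is read by only one of them.

Among the Channels that ship with Flume are the Memory Channel and the File Channel. Data in a Memory Channel is lost when the JVM or the machine restarts; data in a File Channel is not.

Sink: a Sink continuously polls its Channel to read and remove events.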
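
In Flume the removal happens inside a channel transaction: events leave the channel for good only after the sink commits. The toy model below illustrates that idea; `ToyChannel` and `sink_process` are hypothetical names, not Flume classes.

```python
from collections import deque


class ToyChannel:
    """Queue with a minimal take/commit/rollback cycle (not Flume's real API)."""

    def __init__(self, events):
        self._queue = deque(events)
        self._taken = []

    def take(self):
        event = self._queue.popleft() if self._queue else None
        if event is not None:
            self._taken.append(event)
        return event

    def commit(self):
        self._taken.clear()  # taken events are now permanently removed

    def rollback(self):
        # Put taken events back at the head, preserving their original order.
        self._queue.extendleft(reversed(self._taken))
        self._taken.clear()

    def __len__(self):
        return len(self._queue)


def sink_process(channel, write, batch_size=2):
    """Take up to batch_size events, write them, commit only on success."""
    batch = []
    for _ in range(batch_size):
        event = channel.take()
        if event is None:
            break
        batch.append(event)
    try:
        write(batch)
        channel.commit()
        return True
    except OSError:
        channel.rollback()
        return False


def failing_write(batch):
    raise OSError("destination unavailable")


written = []
channel = ToyChannel(["e1", "e2", "e3"])
first = sink_process(channel, failing_write)    # rolled back, nothing is lost
second = sink_process(channel, written.extend)  # succeeds with e1 and e2
```

The rollback path is also why retries can produce duplicates: if a write partly succeeded before failing, the same events are delivered again.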

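Interceptors: every time a Source writes data to a Channel, it delegates the task to its channel processor, which then passes the events to the one or more interceptors configured for that Source.

An interceptor is a piece of code that, based on some criterion such as a regular expression, can drop events, add new headers to events, or remove existing headers. Each Source can be configured with multiple interceptors, which are called in the order defined in the configuration, each passing its result to the next unit in the chain. Once the interceptors have processed the events, the list of events returned by the interceptor chain is passed to the list of Channels, that is, the Channels chosen for each event by the channel selector.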
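
The chain behavior can be sketched as follows. Real Flume interceptors are Java classes; the Python functions here (`regex_filter`, `add_header`, `run_chain`) are hypothetical and only mirror the order-of-calls idea.

```python
import re


def regex_filter(pattern):
    """Build an interceptor that drops events whose body matches the pattern."""
    compiled = re.compile(pattern)

    def intercept(events):
        return [e for e in events if not compiled.search(e["body"])]

    return intercept


def add_header(name, value):
    """Build an interceptor that adds one header to every event."""

    def intercept(events):
        return [{**e, "headers": {**e["headers"], name: value}} for e in events]

    return intercept


def run_chain(interceptors, events):
    """Call interceptors in configured order, feeding each result to the next."""
    for intercept in interceptors:
        events = intercept(events)
    return events


chain = [regex_filter(r"DEBUG"), add_header("env", "test")]
batch = [
    {"headers": {}, "body": "DEBUG heartbeat"},
    {"headers": {}, "body": "ERROR disk full"},
]
processed = run_chain(chain, batch)
```

Order matters: placing the filter first means later interceptors never see the dropped events.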

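| Component | Function |
| --- | --- |
| Agent | Runs Flume in a JVM. Each machine runs one agent, but a single agent can contain multiple sources and sinks. |
| Client | Produces data; runs in a separate thread. |
| Source | Collects data from the Client and passes it to the Channel. |
| Sink | Collects data from the Channel; runs in a separate thread. |
| Channel | Connects sources and sinks; it works somewhat like a queue. |
| Events | Can be log records, Avro objects, and so on. |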

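Configuration files

A Flume Agent is configured with a plain-text configuration file. Flume uses the Java properties file format: a plain-text file of key-value pairs separated by newlines, such as key1 = value1. When a key takes multiple values, they are separated by spaces: agent.sources = r1 r2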
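
To make the format concrete, here is a minimal parsing sketch. It handles only `key = value` lines and `#` comments; the full Java properties syntax also allows other separators and line continuations, which are ignored here. `parse_properties` and `component_names` are hypothetical helper names.

```python
def parse_properties(text):
    """Parse 'key = value' lines, skipping blank lines and '#' comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def component_names(props, agent, kind):
    """Return the space-separated component list, e.g. kind='sources'."""
    return props.get(f"{agent}.{kind}", "").split()


sample = """
# Name the components on this agent
agent.sources = r1 r2
agent.channels = c1
agent.sinks = s1
"""
props = parse_properties(sample)
sources = component_names(props, "agent", "sources")
```

Note that a `#` is only a comment at the start of a line; text after a value on the same line becomes part of the value.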

Reference: Introduction to Flume configuration

1. Configuration from a file source to a file sink

# ========= Name the components on this agent =========

agent.sources = r1

agent.channels = c1

agent.sinks = s1

agent.sources.r1.interceptors = i1

agent.sources.r1.interceptors.i1.type = Inteceptor.DemoInterceptor$Builder

# ========= Describe the source =============

agent.sources.r1.type = spooldir

agent.sources.r1.spoolDir = /home/lintong/桌面/data/input

# ========= Describe the channel =============

# Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 100000

agent.channels.c1.transactionCapacity = 1000

# ========= Describe the sink =============

agent.sinks.s1.type = file_roll

agent.sinks.s1.sink.directory = /home/lintong/桌面/data/output

agent.sinks.s1.sink.rollInterval = 0

# ========= Bind the source and sink to the channel =============

agent.sources.r1.channels = c1

agent.sinks.s1.channel = c1

2. Configuration from a Kafka source to a file sink. This example connects to Kafka through ZooKeeper, but connecting through bootstrap servers is recommended instead.

# ========= Name the components on this agent =========

agent.sources = r1

agent.channels = c1

agent.sinks = s1

agent.sources.r1.interceptors = i1

agent.sources.r1.interceptors.i1.type = Inteceptor.DemoInterceptor$Builder

# ========= Describe the source =============

agent.sources.r1.type=org.apache.flume.source.kafka.KafkaSource

agent.sources.r1.zookeeperConnect=127.0.0.1:2181

# The key is topic; it must not be written as topics

agent.sources.r1.topic=test

#agent.sources.r1.groupId=flume

agent.sources.r1.kafka.consumer.timeout.ms=100

# ========= Describe the channel =============

# Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 100000

agent.channels.c1.transactionCapacity = 1000

# ========= Describe the sink =============

agent.sinks.s1.type = file_roll

agent.sinks.s1.sink.directory = /home/lintong/桌面/data/output

agent.sinks.s1.sink.rollInterval = 0

# ========= Bind the source and sink to the channel =============

agent.sources.r1.channels = c1

agent.sinks.s1.channel = c1

3. Configuration from a Kafka source to a Kafka sink

# ========= Name the components on this agent =========

agent.sources = r1

agent.channels = c1

agent.sinks = s1 s2

agent.sources.r1.interceptors = i1

agent.sources.r1.interceptors.i1.type = com.XXX.interceptor.XXXInterceptor$Builder

# ========= Describe the source =============

agent.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

agent.sources.r1.channels = c1

agent.sources.r1.zookeeperConnect = localhost:2181

agent.sources.r1.topic = input

# ========= Describe the channel =============

# Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 100000

agent.channels.c1.transactionCapacity = 1000

# ========= Describe the sink =============

agent.sinks.s1.type = org.apache.flume.sink.kafka.KafkaSink

agent.sinks.s1.topic = test

agent.sinks.s1.brokerList = localhost:9092

# Avoid an infinite loop: do not let the topic header set by the Kafka source override the sink topic

agent.sinks.s1.allowTopicOverride = false

agent.sinks.s2.type = file_roll

agent.sinks.s2.sink.directory = /home/lintong/桌面/data/output

agent.sinks.s2.sink.rollInterval = 0

# ========= Bind the source and sink to the channel =============

agent.sources.r1.channels = c1

agent.sinks.s1.channel = c1

#agent.sinks.s2.channel = c1
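
The configuration above declares two sinks, s1 and s2, but leaves the channel binding for s2 commented out, so s2 has nothing to read from. A small check like the following can flag that kind of gap before starting the agent. `unbound_components` is a hypothetical helper, and the dictionary is a hand-copied subset of the keys above.

```python
def unbound_components(props, agent):
    """List declared sources and sinks that have no channel binding."""
    missing = []
    for source in props.get(f"{agent}.sources", "").split():
        if not props.get(f"{agent}.sources.{source}.channels"):
            missing.append(source)
    for sink in props.get(f"{agent}.sinks", "").split():
        if not props.get(f"{agent}.sinks.{sink}.channel"):
            missing.append(sink)
    return missing


props = {
    "agent.sources": "r1",
    "agent.channels": "c1",
    "agent.sinks": "s1 s2",
    "agent.sources.r1.channels": "c1",
    "agent.sinks.s1.channel": "c1",
    # agent.sinks.s2.channel is commented out in the configuration
}
missing = unbound_components(props, "agent")
```

Either bind s2 to a channel or drop it from the `agent.sinks` list.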

4. Configuration from a file source to an HBase sink

Read messages from a file in real time and store them in HBase without any processing.

# ========= Name the components on this agent =========

agent.sources = r1

agent.channels = c1

agent.sinks = s1

# ========= Describe the source =============

agent.sources.r1.type = exec

agent.sources.r1.command = tail -f /home/lintong/桌面/test.log

agent.sources.r1.checkperiodic = 50

# ========= Describe the channel =============

agent.channels.c1.type = memory

agent.channels.c1.capacity = 100000

agent.channels.c1.transactionCapacity = 1000

# agent.channels.file-channel.type = file

# agent.channels.file-channel.checkpointDir = /data/flume-hbase-test/checkpoint

# agent.channels.file-channel.dataDirs = /data/flume-hbase-test/data

# ========= Describe the sink =============

agent.sinks.s1.type = org.apache.flume.sink.hbase.HBaseSink

agent.sinks.s1.zookeeperQuorum=master:2183

# HBase table name

agent.sinks.s1.table=mikeal-hbase-table

# HBase column family name

agent.sinks.s1.columnFamily=familyclom1

agent.sinks.s1.serializer = org.apache.flume.sink.hbase.SimpleHbaseEventSerializer

# Name of a column within the column family

agent.sinks.s1.serializer.payloadColumn=cloumn-1

# ========= Bind the source and sink to the channel =============

agent.sources.r1.channels = c1

agent.sinks.s1.channel=c1

5. Configuration from an HTTP source to a Kafka sink

# ========= Name the components on this agent =========

agent.sources = r1

agent.channels = c1

agent.sinks = s1 s2

# ========= Describe the source =============

agent.sources.r1.type=http

agent.sources.r1.bind=localhost

agent.sources.r1.port=50000

agent.sources.r1.channels=c1

# ========= Describe the channel =============

# Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 100000

agent.channels.c1.transactionCapacity = 1000

# ========= Describe the sink =============

agent.sinks.s1.type = org.apache.flume.sink.kafka.KafkaSink

agent.sinks.s1.topic = test_topic

agent.sinks.s1.brokerList = master:9092

# Avoid an infinite loop

agent.sinks.s1.allowTopicOverride = false

agent.sinks.s2.type = file_roll

agent.sinks.s2.sink.directory = /home/lintong/桌面/data/output

agent.sinks.s2.sink.rollInterval = 0

# ========= Bind the source and sink to the channel =============

agent.sources.r1.channels = c1

agent.sinks.s1.channel = c1

#agent.sinks.s2.channel = c1
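
To try the HTTP source, a client can POST events as a JSON array of objects with `headers` and `body` fields, which is the format the HTTP source's default JSON handler accepts. The sketch below only builds the request; `build_request` is a hypothetical helper, and the URL assumes the bind address and port from the configuration above.

```python
import json
import urllib.request


def build_request(url, bodies, headers=None):
    """Build a POST request carrying a JSON array of events."""
    payload = [{"headers": headers or {}, "body": body} for body in bodies]
    data = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_request(
    "http://localhost:50000", ["hello flume"], headers={"source": "demo"}
)
decoded = json.loads(request.data.decode("utf-8"))
# To send it to a running agent: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes the payload easy to inspect without a running agent.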

If you run into the following error when starting Flume:

java.lang.NoClassDefFoundError: org/apache/hadoop/hbase/***

The fix is to add the following line to ~/software/apache/hadoop-2.9.1/etc/hadoop/hadoop-env.sh:

HADOOP_CLASSPATH=/home/lintong/software/apache/hbase-1.2.6/lib/*

6. Configuration from a Kafka source to an HDFS sink

# Licensed to the Apache Software Foundation (ASF) under one

# or more contributor license agreements. See the NOTICE file

# distributed with this work for additional information

# regarding copyright ownership. The ASF licenses this file

# to you under the Apache License, Version 2.0 (the

# "License"); you may not use this file except in compliance

# with the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing,

# software distributed under the License is distributed on an

# "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY

# KIND, either express or implied. See the License for the

# specific language governing permissions and limitations

# under the License.

# The configuration file needs to define the sources,

# the channels and the sinks.

# Sources, channels and sinks are defined per agent,

# in this case called 'agent'

# ========= Name the components on this agent =========

agent.sources = r1

agent.channels = c1

agent.sinks = s1

agent.sources.r1.interceptors = i1

agent.sources.r1.interceptors.i1.type = Util.HdfsInterceptor$Builder

# ========= Describe the source =============

agent.sources.r1.type = org.apache.flume.source.kafka.KafkaSource

agent.sources.r1.channels = c1

agent.sources.r1.zookeeperConnect = localhost:2181

agent.sources.r1.topic = topicB

#agent.sources.r1.kafka.consumer.max.partition.fetch.bytes = 409600000

# ========= Describe the channel =============

# Use a channel which buffers events in memory

agent.channels.c1.type = memory

agent.channels.c1.capacity = 100000

agent.channels.c1.transactionCapacity = 1000

#agent.channels.c1.keep-alive = 60

# ========= Describe the sink =============

agent.sinks.s1.type = hdfs

agent.sinks.s1.hdfs.path = /user/lintong/logs/nsh/json/%{filepath}/ds=%{ds}

agent.sinks.s1.hdfs.filePrefix = test

agent.sinks.s1.hdfs.fileSuffix = .log

agent.sinks.s1.hdfs.fileType = DataStream

agent.sinks.s1.hdfs.useLocalTimeStamp = true

agent.sinks.s1.hdfs.writeFormat = Text

agent.sinks.s1.hdfs.rollCount = 0

agent.sinks.s1.hdfs.rollSize = 10240

agent.sinks.s1.hdfs.rollInterval = 600

agent.sinks.s1.hdfs.batchSize = 500

agent.sinks.s1.hdfs.threadsPoolSize = 10

agent.sinks.s1.hdfs.idleTimeout = 0

agent.sinks.s1.hdfs.minBlockReplicas = 1

# agent.sinks.s1.channel = fileChannel

# ========= Bind the source and sink to the channel =============

agent.sources.r1.channels = c1

agent.sinks.s1.channel = c1
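
The `%{filepath}` and `%{ds}` parts of `hdfs.path` are replaced with the values of the event headers of the same name, so each event lands in a directory derived from its headers. The sketch below imitates that substitution. The header values are made up: presumably the custom `Util.HdfsInterceptor` sets them, but its code is not shown here.

```python
import re


def expand_path(template, headers):
    """Replace each %{name} with the value of the event header 'name'.

    Raises KeyError if a referenced header is missing from the event.
    """
    return re.sub(r"%\{([^}]+)\}", lambda m: headers[m.group(1)], template)


template = "/user/lintong/logs/nsh/json/%{filepath}/ds=%{ds}"
path = expand_path(template, {"filepath": "login", "ds": "2018-08-01"})
```

With these assumed headers, events are written under a `ds=` directory, which matches the partition layout Hive expects.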

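For the HDFS sink configuration parameters, see: HDFS Sink configuration parameters in Flume

Because writes to HDFS are slow, the following problem appears when the data volume is large: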

org.apache.flume.ChannelException: Take list for MemoryTransaction, capacity 1000 full, consider committing more frequently, increasing capacity, or increasing thread count
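
The "capacity 1000" in this message is the memory channel's transactionCapacity: a single transaction tried to take more events than it allows. Apart from switching channels, it may be worth checking that the sink's batch size fits inside transactionCapacity, and that transactionCapacity fits inside capacity. A minimal sketch of that check, with a hypothetical helper name:

```python
def check_batch_sizes(capacity, transaction_capacity, sink_batch_size):
    """Return a list of problems; an empty list means the sizes are consistent."""
    problems = []
    if transaction_capacity > capacity:
        problems.append("transactionCapacity exceeds capacity")
    if sink_batch_size > transaction_capacity:
        problems.append("sink batch size exceeds transactionCapacity")
    return problems


ok = check_batch_sizes(100000, 1000, 500)
too_big = check_batch_sizes(100000, 1000, 5000)
```

The first call uses the values from the configuration above (hdfs.batchSize = 500); the second shows a batch size that would not fit.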

You can switch the memory channel to a file channel or a Kafka channel.

After switching to a Kafka channel, problems still occur when the data volume is large:

16:07:48.615 ERROR org.apache.kafka.clients.consumer.internals.ConsumerCoordinator:550 - Error ILLEGAL_GENERATION occurred while committing offsets for group flume

16:07:48.617 ERROR org.apache.flume.source.kafka.KafkaSource:317 - KafkaSource EXCEPTION, {}

org.apache.kafka.clients.consumer.CommitFailedException: Commit cannot be completed due to group rebalance

at org.apache.kafka.clients.consumer.internals.ConsumerCoordinator$OffsetCommitResponseHandler.handle(ConsumerCoordinator.java:552)

or

ERROR org.apache.kafka.clients.consumer.internals.ConsumerCoordinator:550 - Error UNKNOWN_MEMBER_ID occurred while committing offsets for group flume

Reference: Using KafkaSource in Flume 1.7 to collect large volumes of data

Increase the following two parameters:

agent.sources.r1.kafka.consumer.max.partition.fetch.bytes = 409600000

agent.sources.r1.kafka.consumer.timeout.ms = 100

When the Kafka channel is overwhelmed:

ERROR org.apache.kafka.clients.consumer.internals.ConsumerCoordinator:550 - Error UNKNOWN_MEMBER_ID occurred while committing offsets for group flume

Add these parameters:

agent.channels.c1.kafka.consumer.session.timeout.ms=100000

agent.channels.c1.kafka.consumer.request.timeout.ms=110000

agent.channels.c1.kafka.consumer.fetch.max.wait.ms=1000

Commands

Start the agent:

bin/flume-ng agent -c conf -f conf/flume-conf.properties -n agent -Dflume.root.logger=INFO,console
